oba-live-tool

抖音直播带货工具,支持抖音小店和巨量百应登录,能自动弹窗,自动发言,AI助力回复

Stars: 65

The oba live tool is a small tool for Douyin small shops and Kuaishou Baiying live broadcasts. It features multiple account management, intelligent message assistant, automatic product explanation, AI automatic reply, and AI intelligent assistant. The tool requires Windows 10 or above, Chrome or Edge browser, and a valid account for Douyin small shops or Kuaishou Baiying. Users can download the tool from the Releases page, connect to the control panel, set API keys for AI functions, and configure auto-reply prompts. The tool is licensed under the MIT license.

README:

🍟 多账号管理:支持多组账号配置,针对不同直播间使用不同的配置

🎯 智能消息助手:自动发送消息,告别重复机械喊话

📦 商品自动讲解:自动商品弹窗,随心所欲弹讲解

💃 AI 自动回复:实时监听直播互动评论、自动生成回复内容

🤖 AI 智能助理:接入 DeepSeek,支持官方、OpenRouter、硅基流动、火山引擎等多种支持 openai 接口的提供商

- 操作系统:Windows 10 及以上

- 浏览器:电脑上需要安装 Chrome 或 Edge 浏览器

- 抖音小店/巨量百应:账号需要能正常进入中控台

访问 Releases 页面下载最新版本安装包

git clone https://github.com/qiutongxue/oba-live-tool.git

cd oba-live-tool

pnpm install

pnpm buildpnpm 10 及以上版本请在 package.json 中添加以下配置后再执行

pnpm install:{ "pnpm": { "onlyBuiltDependencies": ["electron"] } }

自动发言、自动弹窗、自动回复功能都需要先连接到中控台才能使用。

- 点击功能列表的「打开中控台」进入直播控制台页面,点击「连接直播控制台」按钮

如果软件显示找不到 Chrome 浏览器,或者想要自己指定浏览器位置,请前往 应用设置 页面的 浏览器设置 中进行相关设置。

- 如果是第一次连接,请在弹出的页面中登录抖音小店的账号

- 等待控制台状态显示绿色圆点和「已连接」,即连接成功

基本功能就不多说了,有一个需要注意的:

目前暂时还没提供运行时更新设置的功能,所以如果需要让新的任务配置生效,需要重启任务。

想要使用 AI 功能,需要先设置 API KEY。

软件提供了四种 DeepSeek 模型的预设:

除此之外,「自定义」还支持几乎任何兼容 openai 对话模型接口的服务。

在 「AI 助手」或「自动回复」的页面,点击「配置 API Key」按钮,就能选择自己需要的提供商和模型了。

注意: 有的(大多数)模型是收费的,使用 AI 功能前请一定要先了解清楚,使用收费模型时请确保自己在提供商的账户有能够消耗的额度。

火山引擎的设置方式和其它提供商有些微区别,除了需要 API KEY 之外,还需要 创建接入点。创建成功后,将接入点的 id 复制到原先选择模型的位置中即可使用。

在使用自动回复功能前,请先

- 设置好你的 API KEY 及模型,确保可用。

- 在「提示词配置」中设置好相关的提示词。

提示词决定了 AI 会扮演什么样的角色,以及 AI 会如何回答用户的问题,会计入 token 消耗。

程序会将「开始任务」之后的新的用户评论交给 AI 处理,用户评论会以 JSON 格式原封不动地作为对话的内容交给 AI:

{

"nickname": "用户昵称",

"content": "用户评论内容",

"commentTag": ["评论标签1", "评论标签2", "..."]

}所以可以把 nickname、commentTag 等插入到提示词中,你的提示词可以是:

你是一个直播间的助手,负责回复观众的评论。请参考下面的要求、产品介绍和直播商品,用简短友好的语气回复,一定一定不要超过45个字。

## 要求

- 回复格式为:@<nickname第一个字符>*** <你的回复> (注意!:三个星号是必须的)

...在使用 AI 助手功能前,请先设置好你的 API KEY 及模型,确保可用。

你可以选择更新源,但是目前最稳定的还是 Github。

亲测:Github 绝对可用。gh-proxy.com 偶尔可用。其余的github代理基本都不可用。

启用开发者模式后,可以使用鼠标右键菜单,在菜单中可打开开发者工具。

启用开发者模式后,连接到中控台时会关闭浏览器的无头模式。

本项目遵循 MIT 许可证

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for oba-live-tool

Similar Open Source Tools

oba-live-tool

The oba live tool is a small tool for Douyin small shops and Kuaishou Baiying live broadcasts. It features multiple account management, intelligent message assistant, automatic product explanation, AI automatic reply, and AI intelligent assistant. The tool requires Windows 10 or above, Chrome or Edge browser, and a valid account for Douyin small shops or Kuaishou Baiying. Users can download the tool from the Releases page, connect to the control panel, set API keys for AI functions, and configure auto-reply prompts. The tool is licensed under the MIT license.

AI-Translation-Assistant-Pro

AI Translation Assistant Pro is a powerful AI-driven platform for multilingual translation and content processing. It offers features such as text translation, image recognition, PDF processing, speech recognition, and video processing. The platform includes a subscription system with different membership levels, user management functionalities, quota management, and real-time usage statistics. It utilizes technologies like Next.js, React, TypeScript for the frontend, Node.js, PostgreSQL for the backend, NextAuth.js for authentication, Stripe for payments, and integrates with cloud services like Aliyun OSS and Tencent Cloud for AI services.

prose-polish

prose-polish is a tool for AI interaction through drag-and-drop cards, focusing on editing copy and manuscripts. It can recognize Markdown-formatted documents, automatically breaking them into paragraph cards. Users can create prefabricated prompt cards and quickly connect them to the manuscript for editing. The modified manuscript is still presented in card form, allowing users to drag it out as a new paragraph. To use it smoothly, users just need to remember one rule: 'Plug the plug into the socket!'

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, Open AI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

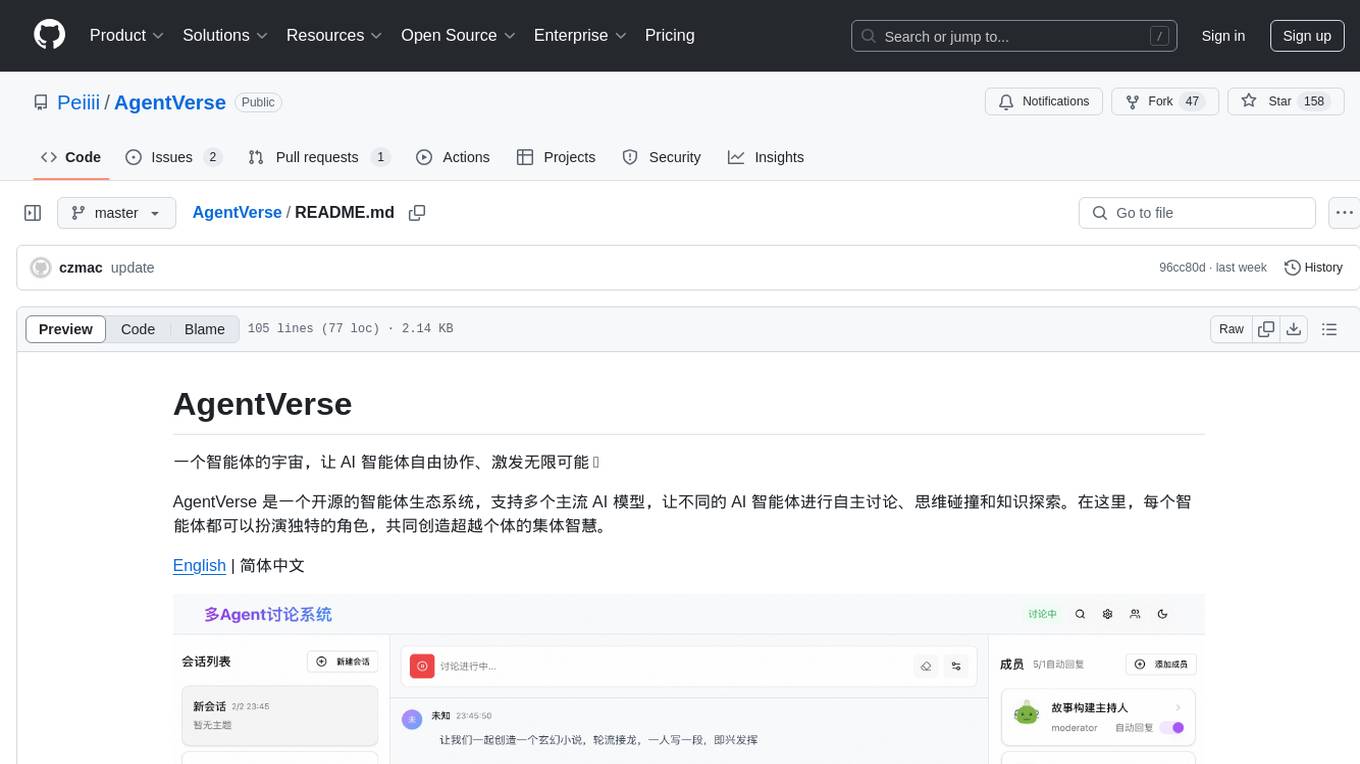

AgentVerse

AgentVerse is an open-source ecosystem for intelligent agents, supporting multiple mainstream AI models to facilitate autonomous discussions, thought collisions, and knowledge exploration. Each intelligent agent can play a unique role here, collectively creating wisdom beyond individuals.

emohaa-free-api

Emohaa AI Free API is a free API that allows you to access the Emohaa AI chatbot. Emohaa AI is a powerful chatbot that can understand and respond to a wide range of natural language queries. It can be used for a variety of purposes, such as customer service, information retrieval, and language translation. The Emohaa AI Free API is easy to use and can be integrated into any application. It is a great way to add AI capabilities to your projects without having to build your own chatbot from scratch.

Code-Interpreter-Api

Code Interpreter API is a project that combines a scheduling center with a sandbox environment, dedicated to creating the world's best code interpreter. It aims to provide a secure, reliable API interface for remotely running code and obtaining execution results, accelerating the development of various AI agents, and being a boon to many AI enthusiasts. The project innovatively combines Docker container technology to achieve secure isolation and execution of Python code. Additionally, the project supports storing generated image data in a PostgreSQL database and accessing it through API endpoints, providing rich data processing and storage capabilities.

meet-libai

The 'meet-libai' project aims to promote and popularize the cultural heritage of the Chinese poet Li Bai by constructing a knowledge graph of Li Bai and training a professional AI intelligent body using large models. The project includes features such as data preprocessing, knowledge graph construction, question-answering system development, and visualization exploration of the graph structure. It also provides code implementations for large models and RAG retrieval enhancement.

XYBotV2

XYBot V2 is a feature-rich WeChat robot framework that supports various interactive functions and gameplays. It provides AI chat, daily news updates, song requests, weather queries, and gaming functionalities like Gomoku and Warthunder player lookup. The tool is open-source and intended for learning and research purposes only, not for commercial or illegal activities. Users must comply with relevant laws and respect WeChat's copyrights and privacy. The tool's functionalities can be extended through a plugin system, allowing for dynamic loading/unloading of plugins.

GitHubSentinel

GitHub Sentinel is an intelligent information retrieval and high-value content mining AI Agent designed for the era of large models (LLMs). It is aimed at users who need frequent and large-scale information retrieval, especially open source enthusiasts, individual developers, and investors. The main features include subscription management, update retrieval, notification system, report generation, multi-model support, scheduled tasks, graphical interface, containerization, continuous integration, and the ability to track and analyze the latest dynamics of GitHub open source projects and expand to other information channels like Hacker News for comprehensive information mining and analysis capabilities.

MoneyPrinterTurbo

MoneyPrinterTurbo is a tool that can automatically generate video content based on a provided theme or keyword. It can create video scripts, materials, subtitles, and background music, and then compile them into a high-definition short video. The tool features a web interface and an API interface, supporting AI-generated video scripts, customizable scripts, multiple HD video sizes, batch video generation, customizable video segment duration, multilingual video scripts, multiple voice synthesis options, subtitle generation with font customization, background music selection, access to high-definition and copyright-free video materials, and integration with various AI models like OpenAI, moonshot, Azure, and more. The tool aims to simplify the video creation process and offers future plans to enhance voice synthesis, add video transition effects, provide more video material sources, offer video length options, include free network proxies, enable real-time voice and music previews, support additional voice synthesis services, and facilitate automatic uploads to YouTube platform.

z-ai-sdk-python

Z.ai Open Platform Python SDK is the official Python SDK for Z.ai's large model open interface, providing developers with easy access to Z.ai's open APIs. The SDK offers core features like chat completions, embeddings, video generation, audio processing, assistant API, and advanced tools. It supports various functionalities such as speech transcription, text-to-video generation, image understanding, and structured conversation handling. Developers can customize client behavior, configure API keys, and handle errors efficiently. The SDK is designed to simplify AI interactions and enhance AI capabilities for developers.

deepseek-free-api

DeepSeek Free API is a high-speed streaming output tool that supports multi-turn conversations and zero-configuration deployment. It is compatible with the ChatGPT interface and offers multiple token support. The tool provides eight free APIs for various AI interfaces. Users can access the tool online, prepare for integration, deploy using Docker, Docker-compose, Render, Vercel, or native deployment methods. It also offers client recommendations for faster integration and supports dialogue completion and userToken live checks. The tool comes with important considerations for Nginx reverse proxy optimization and token statistics.

chatgpt-webui

ChatGPT WebUI is a user-friendly web graphical interface for various LLMs like ChatGPT, providing simplified features such as core ChatGPT conversation and document retrieval dialogues. It has been optimized for better RAG retrieval accuracy and supports various search engines. Users can deploy local language models easily and interact with different LLMs like GPT-4, Azure OpenAI, and more. The tool offers powerful functionalities like GPT4 API configuration, system prompt setup for role-playing, and basic conversation features. It also provides a history of conversations, customization options, and a seamless user experience with themes, dark mode, and PWA installation support.

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

For similar tasks

oba-live-tool

The oba live tool is a small tool for Douyin small shops and Kuaishou Baiying live broadcasts. It features multiple account management, intelligent message assistant, automatic product explanation, AI automatic reply, and AI intelligent assistant. The tool requires Windows 10 or above, Chrome or Edge browser, and a valid account for Douyin small shops or Kuaishou Baiying. Users can download the tool from the Releases page, connect to the control panel, set API keys for AI functions, and configure auto-reply prompts. The tool is licensed under the MIT license.

THE-SANDBOX-AutoClicker

The Sandbox AutoClicker is a bot designed for the crypto game The Sandbox, allowing users to automate various processes within the game. The tool offers features such as auto tuning, multi-account auto clicker, multi-threading, a convenient menu, and free proxies. It provides full optimization through a simple menu and is guaranteed to be safe for Windows systems, supporting versions 7/8/8.1/10/11 (x32/64).

kirara-ai

Kirara AI is a chatbot that supports mainstream large language models and chat platforms. It provides features such as image sending, keyword-triggered replies, multi-account support, personality settings, and support for various chat platforms like QQ, Telegram, Discord, and WeChat. The tool also supports HTTP server for Web API, popular large models like OpenAI and DeepSeek, plugin mechanism, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, custom workflows, web management interface, and built-in Frpc intranet penetration.

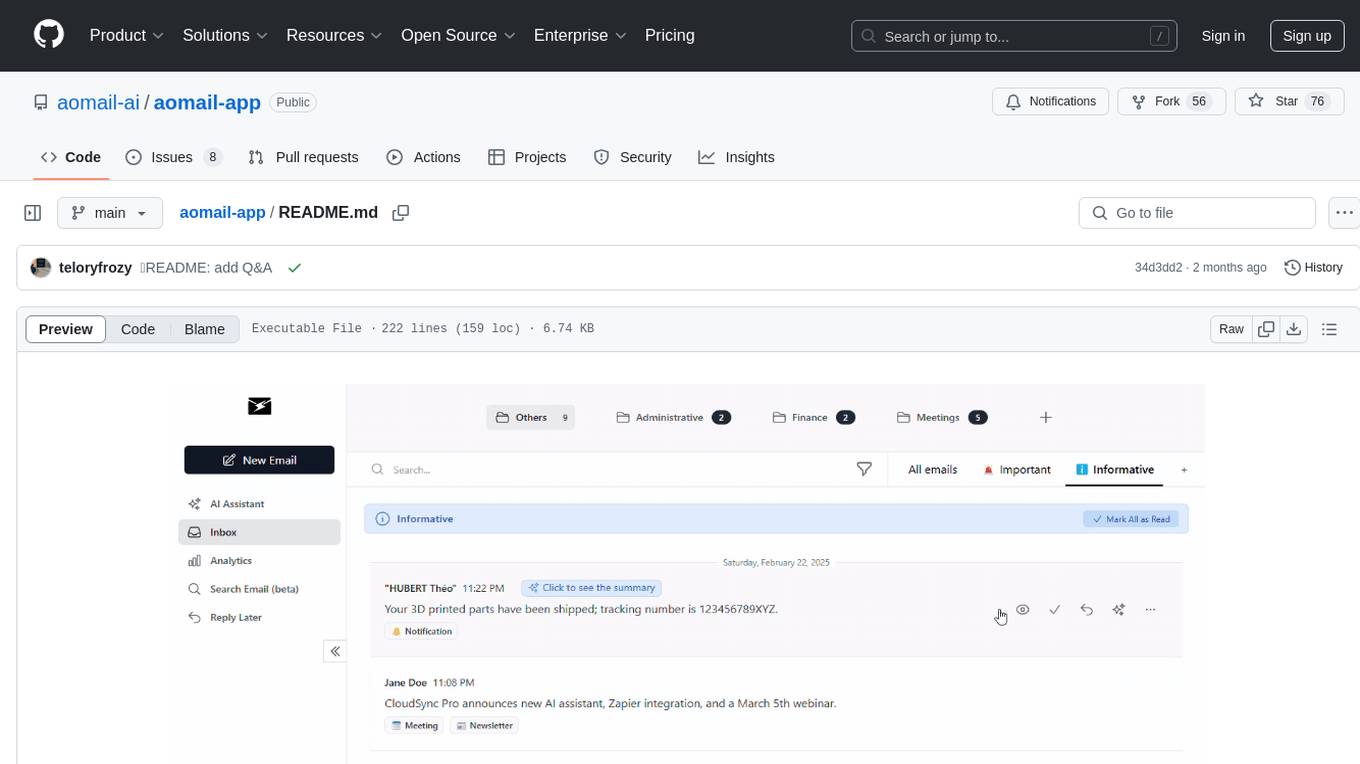

aomail-app

Aomail is an intelligent, open-source email management platform with AI capabilities. It offers email provider integration, AI-powered tools for smart categorization and assistance, analytics and management features. Users can self-host for complete control. Coming soon features include AI custom rules, platform integration with Discord & Slack, and support for various AI providers. The tool is designed to revolutionize email management by providing advanced AI features and analytics.

twitter-automation-ai

Advanced Twitter Automation AI is a modular Python-based framework for automating Twitter at scale. It supports multiple accounts, robust Selenium automation with optional undetected Chrome + stealth, per-account proxies and rotation, structured LLM generation/analysis, community posting, and per-account metrics/logs. The tool allows seamless management and automation of multiple Twitter accounts, content scraping, publishing, LLM integration for generating and analyzing tweet content, engagement automation, configurable automation, browser automation using Selenium, modular design for easy extension, comprehensive logging, community posting, stealth mode for reduced fingerprinting, per-account proxies, LLM structured prompts, and per-account JSON summaries and event logs for observability.

claude-craft

Claude Craft is a comprehensive framework for AI-assisted development with Claude Code, providing standardized rules, agents, and commands across multiple technology stacks. It includes autonomous sprint capabilities, documentation accuracy improvements, CI hardening, and test coverage enhancements. With support for 10 technology stacks, 5 languages, 40 AI agents, 157 slash commands, and various project management features like BMAD v6 framework, Ralph Wiggum loop execution, skills, templates, checklists, and hooks system, Claude Craft offers a robust solution for project development and management. The tool also supports workflow methodology, development tracks, document generation, BMAD v6 project management, quality gates, batch processing, backlog migration, and Claude Code hooks integration.

For similar jobs

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

azure-search-vector-samples

This repository provides code samples in Python, C#, REST, and JavaScript for vector support in Azure AI Search. It includes demos for various languages showcasing vectorization of data, creating indexes, and querying vector data. Additionally, it offers tools like Azure AI Search Lab for experimenting with AI-enabled search scenarios in Azure and templates for deploying custom chat-with-your-data solutions. The repository also features documentation on vector search, hybrid search, creating and querying vector indexes, and REST API references for Azure AI Search and Azure OpenAI Service.

geti-sdk

The Intel® Geti™ SDK is a python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

booster

Booster is a powerful inference accelerator designed for scaling large language models within production environments or for experimental purposes. It is built with performance and scaling in mind, supporting various CPUs and GPUs, including Nvidia CUDA, Apple Metal, and OpenCL cards. The tool can split large models across multiple GPUs, offering fast inference on machines with beefy GPUs. It supports both regular FP16/FP32 models and quantised versions, along with popular LLM architectures. Additionally, Booster features proprietary Janus Sampling for code generation and non-English languages.

xFasterTransformer

xFasterTransformer is an optimized solution for Large Language Models (LLMs) on the X86 platform, providing high performance and scalability for inference on mainstream LLM models. It offers C++ and Python APIs for easy integration, along with example codes and benchmark scripts. Users can prepare models in a different format, convert them, and use the APIs for tasks like encoding input prompts, generating token ids, and serving inference requests. The tool supports various data types and models, and can run in single or multi-rank modes using MPI. A web demo based on Gradio is available for popular LLM models like ChatGLM and Llama2. Benchmark scripts help evaluate model inference performance quickly, and MLServer enables serving with REST and gRPC interfaces.

amazon-transcribe-live-call-analytics

The Amazon Transcribe Live Call Analytics (LCA) with Agent Assist Sample Solution is designed to help contact centers assess and optimize caller experiences in real time. It leverages Amazon machine learning services like Amazon Transcribe, Amazon Comprehend, and Amazon SageMaker to transcribe and extract insights from contact center audio. The solution provides real-time supervisor and agent assist features, integrates with existing contact centers, and offers a scalable, cost-effective approach to improve customer interactions. The end-to-end architecture includes features like live call transcription, call summarization, AI-powered agent assistance, and real-time analytics. The solution is event-driven, ensuring low latency and seamless processing flow from ingested speech to live webpage updates.

ai-lab-recipes

This repository contains recipes for building and running containerized AI and LLM applications with Podman. It provides model servers that serve machine-learning models via an API, allowing developers to quickly prototype new AI applications locally. The recipes include components like model servers and AI applications for tasks such as chat, summarization, object detection, etc. Images for sample applications and models are available in `quay.io`, and bootable containers for AI training on Linux OS are enabled.

XLearning

XLearning is a scheduling platform for big data and artificial intelligence, supporting various machine learning and deep learning frameworks. It runs on Hadoop Yarn and integrates frameworks like TensorFlow, MXNet, Caffe, Theano, PyTorch, Keras, XGBoost. XLearning offers scalability, compatibility, multiple deep learning framework support, unified data management based on HDFS, visualization display, and compatibility with code at native frameworks. It provides functions for data input/output strategies, container management, TensorBoard service, and resource usage metrics display. XLearning requires JDK >= 1.7 and Maven >= 3.3 for compilation, and deployment on CentOS 7.2 with Java >= 1.7 and Hadoop 2.6, 2.7, 2.8.