XYBotV2

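🤖 A feature-rich WeChat bot framework | AI chat, Dify integration, points system, interactive games, daily news, weather queries | Neither Hook-based nor Web-based | Supports Windows✅ Linux✅ MacOS✅ | A new architecture that fixes the pain points of the first-generation XYBot!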

Stars: 367

XYBot V2 is a feature-rich WeChat bot framework that supports a range of interactive features and games. It provides AI chat, daily news updates, song requests, weather queries, and game features such as Gomoku and War Thunder player lookup. The tool is open source and intended for learning and research only, not for commercial or illegal use. Users must comply with applicable laws and respect WeChat's copyright and privacy. Its functionality is implemented and extended through a plugin system that supports dynamic loading and unloading of plugins.

README:

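XYBot V2 is a feature-rich WeChat bot framework that supports a variety of interactive features and games.

- This project is free and open source; there is no charge of any kind.

- This tool is for learning and technical research only and must not be used for any commercial or illegal purpose.

- The author of this tool makes no express or implied warranty as to its security, completeness, reliability, validity, correctness, or fitness for any purpose, and accepts no liability for any direct or indirect loss, liability, claim, demand, or legal action arising from its use or misuse.

- The author reserves the right to modify, update, remove, or discontinue this tool at any time, without prior notice or any obligation.

- Users of this tool must comply with applicable laws and regulations, respect WeChat's copyright and privacy, must not infringe the legitimate rights of WeChat or any third party, and must not engage in any illegal or unethical conduct.

- By downloading, installing, running, or using this tool, users indicate that they have read and agreed to this disclaimer. If you disagree, stop using this tool immediately and delete all related files.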

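- 🤖 AI chat - multimodal interaction with text, images, and voice

- 📰 Daily news - automatically pushes daily news

- 🎵 Song requests - supports online song requests

- 🌤️ Weather queries - look up the weather anywhere in China

- 🎮 Games - Gomoku, War Thunder player lookup, and more

- 📝 Daily sign-in - rewards for consecutive sign-ins

- 🎲 Lucky draw - several draw modes

- 🧧 Red packets - send points red packets in group chats

- 💰 Points trading - transfer points between users

- 📊 Points leaderboard - view the points ranking

- ⚙️ Plugin management - dynamically load/unload plugins

- 👥 Whitelist management - control who may use the bot

- 📊 Points management - administrators can adjust user points

- 🔄 Sign-in reset - reset the sign-in status of all users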

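XYBot V2 uses a plugin-based design: every feature is implemented as a plugin. The main plugins are:

- 👨‍💼 AdminPoint - points management

- 🔄 AdminSignInReset - sign-in reset

- 🛡️ AdminWhitelist - whitelist management

- 🤖 Ai - AI chat

- 📊 BotStatus - bot status

- 📱 GetContact - fetch the contact list

- 🌤️ GetWeather - weather queries

- 🎮 Gomoku - Gomoku game

- 🌅 GoodMorning - good-morning greeting

- 📈 Leaderboard - points leaderboard

- 🎲 LuckyDraw - lucky draw

- 📋 Menu - menu system

- 🎵 Music - song requests

- 📰 News - news push

- 💱 PointTrade - points trading

- 💰 QueryPoint - points lookup

- 🎯 RandomMember - random group member

- 🖼️ RandomPicture - random picture

- 🧧 RedPacket - red packet system

- ✍️ SignIn - daily sign-in

- ✈️ Warthunder - War Thunder lookup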
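To illustrate what dynamic loading and unloading of plugins can look like, here is a minimal sketch of a plugin registry. It is not XYBot V2's actual plugin API: the `PluginManager` class, its method names, and the stand-in `SignIn` class are all hypothetical and exist only for this example.

```python
from typing import Callable, Dict, List


class PluginManager:
    """Hypothetical registry: maps plugin names to factories and tracks loaded instances."""

    def __init__(self) -> None:
        self._factories: Dict[str, Callable[[], object]] = {}
        self._loaded: Dict[str, object] = {}

    def register(self, name: str, factory: Callable[[], object]) -> None:
        # Make a plugin known to the manager without instantiating it.
        self._factories[name] = factory

    def load(self, name: str) -> bool:
        # Refuse unknown plugins and plugins that are already loaded.
        if name in self._loaded or name not in self._factories:
            return False
        self._loaded[name] = self._factories[name]()
        return True

    def unload(self, name: str) -> bool:
        # Drop the instance; the factory stays registered so it can be reloaded.
        if name not in self._loaded:
            return False
        del self._loaded[name]
        return True

    def loaded(self) -> List[str]:
        return sorted(self._loaded)


class SignIn:
    """Stand-in for a real plugin class (hypothetical)."""


manager = PluginManager()
manager.register("SignIn", SignIn)
manager.load("SignIn")
print(manager.loaded())
manager.unload("SignIn")
print(manager.loaded())
```

Keeping the factory registered after an unload is one possible design choice: it lets an administrator switch a plugin off and on again without restarting the bot.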

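- Install Python 3.11: https://www.python.org/downloads/release/python-3119/

- Install ffmpeg: download it from the official ffmpeg website and add it to your PATH environment variable

- Install Redis: download Redis and start the service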

Windows:

git clone https://github.com/HenryXiaoYang/XYBotV2.git

cd XYBotV2

python -m venv venv

.\venv\Scripts\activate

pip install -r requirements.txt

start redis-server

python app.py

Linux:

sudo apt update

sudo apt install python3.11 python3.11-venv redis-server ffmpeg

sudo systemctl start redis

sudo systemctl enable redis

git clone https://github.com/HenryXiaoYang/XYBotV2.git

cd XYBotV2

python3.11 -m venv venv

source venv/bin/activate

pip install -r requirements.txt

python app.py

If you do not need the WebUI, you can run bot.py directly:

python bot.py

- Main configuration: main_config.toml

- Plugin configuration: plugins/all_in_one_config.toml

These plugins require an API key to be configured:

- 🤖 Ai

- 🌤️ GetWeather

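- Network-related errors

  - Check the network connection

  - Turn off proxy software

  - Restart XYBot and Redis

- Errors saying an instance is already running

  - End the process that is occupying port 9000

- Cannot access the web interface

  - Make sure port 9999 is open

  - Configure the firewall to allow port 9999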
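The two port checks above can be done from Python before digging further. This is a generic sketch using only the standard `socket` module; the helper name `port_in_use` is made up for this example, and it only tells you whether something accepts TCP connections on the port, not which process owns it.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    # 9000 and 9999 are the ports mentioned in the troubleshooting list.
    for port in (9000, 9999):
        state = "in use" if port_in_use(port) else "free"
        print(f"port {port}: {state}")
```

If port 9000 shows as in use while the bot is not running, another process is holding it; if port 9999 shows as free while the bot is running, the web interface has not started.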

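When committing code, use a message of the form feat: something. The supported prefixes are:

- feat: new feature

- fix: bug fix

- docs: documentation

- style: formatting (changes that do not affect how the code runs)

- ref: refactoring (code changes that neither add a feature nor fix a bug)

- perf: performance optimization

- test: adding tests

- chore: changes to the build process or auxiliary tools

- revert: revert a previous change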
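The commit convention above is easy to check mechanically, for example in a local hook. The following is a small sketch rather than anything shipped with the project: the function name is hypothetical, and requiring exactly "prefix, colon, space, description" on the first line is an assumption based on the feat: something example.

```python
import re

# Prefixes listed in the contribution guidelines above.
ALLOWED_TYPES = ("feat", "fix", "docs", "style", "ref", "perf", "test", "chore", "revert")

# Assumed shape: "<type>: <non-empty description>" on the first line.
_PATTERN = re.compile(r"^(%s): \S.*$" % "|".join(ALLOWED_TYPES))


def is_valid_commit_message(message: str) -> bool:
    """Check only the first line of the commit message."""
    first_line = message.splitlines()[0] if message else ""
    return _PATTERN.match(first_line) is not None


for msg in ("feat: add lottery plugin", "feature: add lottery plugin", "fix:typo"):
    print(f"{msg!r}: {is_valid_commit_message(msg)}")
```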


Alternative AI tools for XYBotV2

Similar Open Source Tools


prose-polish

prose-polish is a tool for AI interaction through drag-and-drop cards, focusing on editing copy and manuscripts. It can recognize Markdown-formatted documents, automatically breaking them into paragraph cards. Users can create prefabricated prompt cards and quickly connect them to the manuscript for editing. The modified manuscript is still presented in card form, allowing users to drag it out as a new paragraph. To use it smoothly, users just need to remember one rule: 'Plug the plug into the socket!'

wechat-robot-client

The Wechat Robot Client is an intelligent robot management system that provides rich interactive experiences. It includes features such as AI chat, drawing, voice, group chat functionalities, song requests, daily summaries, friend circle viewing, friend adding, group chat management, file messaging, multiple login methods support, and more. The system also supports features like sending files, various login methods, and integration with other apps like '王者荣耀' and '吃鸡'. It offers a comprehensive solution for managing Wechat interactions and automating various tasks.

ZcChat

ZcChat is an AI desktop pet suitable for Galgame characters, featuring long-term memory, expressive actions, control over the computer, and voice functions. It utilizes Letta for AI long-term memory, Galgame-style character illustrations for more actions and expressions, and voice interaction with support for various voice synthesis tools like Vits. Users can configure characters, install Letta, set up voice synthesis and input, and control the pet to interact with the computer. The tool enhances visual and auditory experiences for users interested in AI desktop pets.

ai-paint-today-BE

AI Paint Today is an API server repository that allows users to record their emotions and daily experiences, and based on that, AI generates a beautiful picture diary of their day. The project includes features such as generating picture diaries from written entries, utilizing DALL-E 2 model for image generation, and deploying on AWS and Cloudflare. The project also follows specific conventions and collaboration strategies for development.

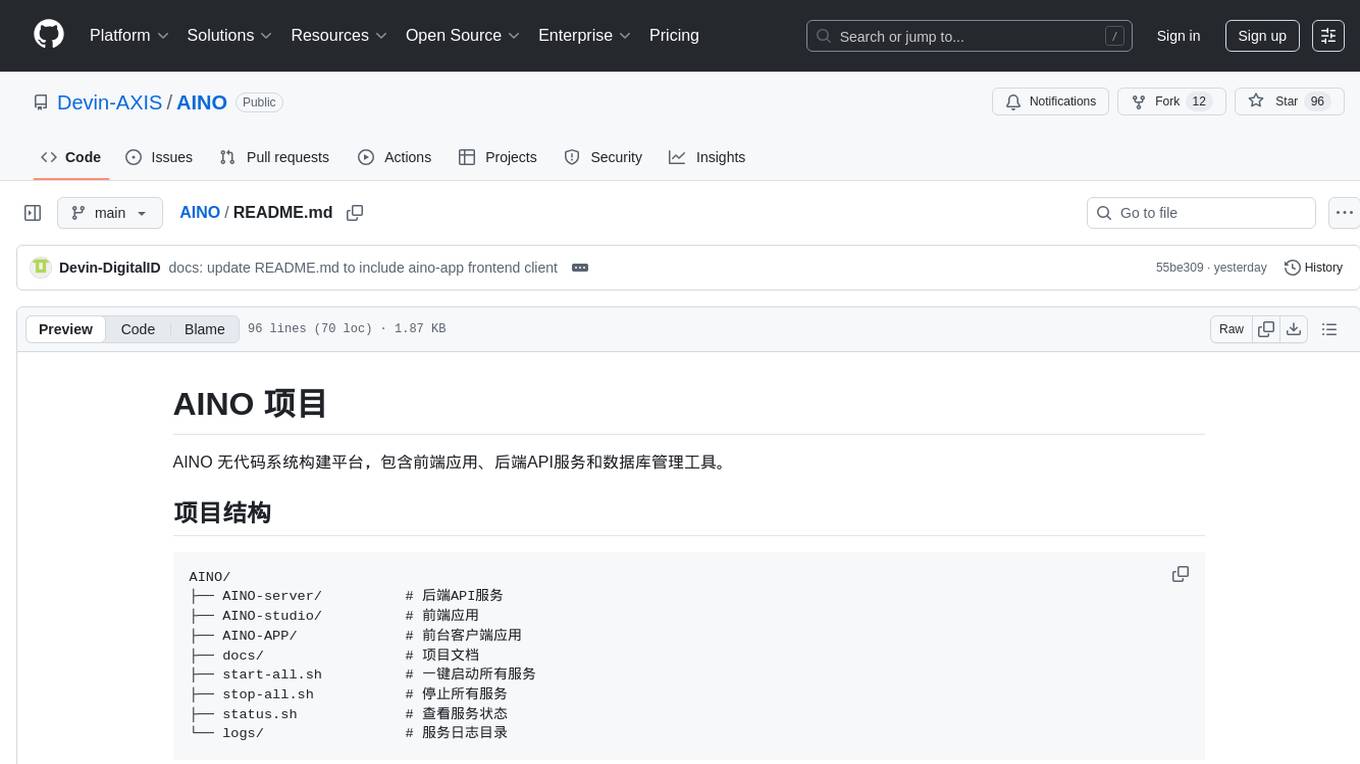

AINO

AINO is a no-code system construction platform that includes front-end applications, back-end API services, and database management tools. The project structure consists of AINO-server for back-end API services, AINO-studio for front-end applications, AINO-APP for front-end client applications, docs for project documentation, start-all.sh for one-click starting of all services, stop-all.sh for stopping all services, status.sh for checking service status, and logs for service logs. AINO utilizes Hono + TypeScript + Drizzle ORM for the back-end, Next.js + React + TypeScript for the front-end, PostgreSQL for the database, and pnpm as the package manager.

oba-live-tool

The oba live tool is a small tool for Douyin small shops and Kuaishou Baiying live broadcasts. It features multiple account management, intelligent message assistant, automatic product explanation, AI automatic reply, and AI intelligent assistant. The tool requires Windows 10 or above, Chrome or Edge browser, and a valid account for Douyin small shops or Kuaishou Baiying. Users can download the tool from the Releases page, connect to the control panel, set API keys for AI functions, and configure auto-reply prompts. The tool is licensed under the MIT license.

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

AIMedia

AIMedia is a fully automated AI media software that automatically fetches hot news, generates news, and publishes on various platforms. It supports hot news fetching from platforms like Douyin, NetEase News, Weibo, The Paper, China Daily, and Sohu News. Additionally, it enables AI-generated images for text-only news to enhance originality and reading experience. The tool is currently commercialized with plans to support video auto-generation for platform publishing in the future. It requires a minimum CPU of 4 cores or above, 8GB RAM, and supports Windows 10 or above. Users can deploy the tool by cloning the repository, modifying the configuration file, creating a virtual environment using Conda, and starting the web interface. Feedback and suggestions can be submitted through issues or pull requests.

MoneyPrinterTurbo

MoneyPrinterTurbo is a tool that can automatically generate video content based on a provided theme or keyword. It can create video scripts, materials, subtitles, and background music, and then compile them into a high-definition short video. The tool features a web interface and an API interface, supporting AI-generated video scripts, customizable scripts, multiple HD video sizes, batch video generation, customizable video segment duration, multilingual video scripts, multiple voice synthesis options, subtitle generation with font customization, background music selection, access to high-definition and copyright-free video materials, and integration with various AI models like OpenAI, moonshot, Azure, and more. The tool aims to simplify the video creation process and offers future plans to enhance voice synthesis, add video transition effects, provide more video material sources, offer video length options, include free network proxies, enable real-time voice and music previews, support additional voice synthesis services, and facilitate automatic uploads to YouTube platform.

AivisSpeech

AivisSpeech is a Japanese text-to-speech software based on the VOICEVOX editor UI. It incorporates the AivisSpeech Engine for generating emotionally rich voices easily. It supports AIVMX format voice synthesis model files and specific model architectures like Style-Bert-VITS2. Users can download AivisSpeech and AivisSpeech Engine for Windows and macOS PCs, with minimum memory requirements specified. The development follows the latest version of VOICEVOX, focusing on minimal modifications, rebranding only where necessary, and avoiding refactoring. The project does not update documentation, maintain test code, or refactor unused features to prevent conflicts with VOICEVOX.

astrbot_plugin_qq_group_daily_analysis

AstrBot Plugin QQ Group Daily Analysis is an intelligent chat analysis plugin based on AstrBot. It provides comprehensive statistics on group chat activity and participation, extracts hot topics and discussion points, analyzes user behavior to assign personalized titles, and identifies notable messages in the chat. The plugin generates visually appealing daily chat analysis reports in various formats including images and PDFs. Users can customize analysis parameters, manage specific groups, and schedule automatic daily analysis. The plugin requires configuration of an LLM provider for intelligent analysis and adaptation to the QQ platform adapter.

Long-Novel-GPT

Long-Novel-GPT is a long novel generator based on large language models like GPT. It utilizes a hierarchical outline/chapter/text structure to maintain the coherence of long novels. It optimizes API calls cost through context management and continuously improves based on self or user feedback until reaching the set goal. The tool aims to continuously refine and build novel content based on user-provided initial ideas, ultimately generating long novels at the level of human writers.

awesome-chatgpt-zh

The Awesome ChatGPT Chinese Guide project aims to help Chinese users understand and use ChatGPT. It collects various free and paid ChatGPT resources, as well as methods to communicate more effectively with ChatGPT in Chinese. The repository contains a rich collection of ChatGPT tools, applications, and examples.

claude-init

Claude Code Chinese development suite is a localized version based on the Claude Code Development Kit, offering a seamless Chinese AI programming experience. It features complete Chinese AI commands, documentation system, error messages, and installation experience. The suite includes intelligent context management with a three-tier document structure, automatic context injection, smart document routing, and cross-session state management. It integrates development tools like Hook system, MCP server support, security scans, and notification system. Additionally, it provides a comprehensive template library with project templates, document templates, and configuration examples.

AstrBot

AstrBot is an open-source one-stop Agentic chatbot platform and development framework. It supports large model conversations, multiple messaging platforms, Agent capabilities, plugin extensions, and WebUI for visual configuration and management of the chatbot.

For similar tasks

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM, with AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

react-native-vercel-ai

Run the Vercel AI package on React Native, Expo, Web, and Universal apps. The React Native fetch API currently does not support streaming, which Vercel AI uses by default. This package lets you use the AI library on React Native, but it works best in Expo universal native apps. On mobile you get responses without streaming through the same `useChat` and `useCompletion` API, and on web it falls back to `ai/react`.

LLamaSharp

LLamaSharp is a cross-platform library to run 🦙LLaMA/LLaVA model (and others) on your local device. Based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU. With the higher-level APIs and RAG support, it's convenient to deploy LLM (Large Language Model) in your application with LLamaSharp.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

twinny

Twinny is a free and open-source AI code completion plugin for Visual Studio Code and compatible editors. It integrates with various tools and frameworks, including Ollama, llama.cpp, oobabooga/text-generation-webui, LM Studio, LiteLLM, and Open WebUI. Twinny offers features such as fill-in-the-middle code completion, chat with AI about your code, customizable API endpoints, and support for single or multiline fill-in-middle completions. It is easy to install via the Visual Studio Code extensions marketplace and provides a range of customization options. Twinny supports both online and offline operation and conforms to the OpenAI API standard.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.