mcp-prompts

Model Context Protocol server for managing, storing, and providing prompts and prompt templates for LLM interactions.

Stars: 89

mcp-prompts is a TypeScript (Node.js) server that stores, versions, and serves prompts and prompt templates for LLM applications over the Model Context Protocol (MCP). It supports in-memory, file, PostgreSQL, and AWS storage backends and exposes both a REST API and MCP tools.

README:

A robust, extensible server for managing, versioning, and serving prompts and templates for LLM applications, built on the Model Context Protocol (MCP) with multi-backend support including AWS, PostgreSQL, and file storage.

- 🔄 Multiple Storage Backends: DynamoDB, PostgreSQL, and local file storage

- 📦 Model Context Protocol: Full MCP compliance for seamless AI integration

- 🏗️ Hexagonal Architecture: Clean separation of concerns with adapter pattern

- ⚡ Serverless Ready: AWS Lambda deployment with API Gateway

- 🔍 Advanced Querying: Filter by category, tags, and metadata

- 📊 Version Control: Complete prompt versioning and history

- 🔐 Enterprise Security: IAM, VPC, and encryption support

- 📈 Monitoring: CloudWatch metrics and comprehensive logging

- 🧪 Testing: Full test suite with local development support

- Node.js 18+ and PNPM

- AWS CLI (for AWS deployments)

- PostgreSQL (for database deployments)

- Docker (for containerized deployments)

# Install dependencies

pnpm install

# Start with memory storage (fastest)

STORAGE_TYPE=memory pnpm run dev

# Or use file storage

STORAGE_TYPE=file PROMPTS_DIR=./data/prompts pnpm run dev

# Deploy with PostgreSQL

./scripts/deploy-postgres.sh

# Or run locally

STORAGE_TYPE=postgres pnpm run dev

# One-command AWS deployment

./scripts/deploy-aws-enhanced.sh

- Architecture

- Storage Types

- Installation

- Configuration

- API Reference

- MCP Integration

- AWS Deployment

- Monitoring

- Testing

- Troubleshooting

- Contributing

This project follows hexagonal architecture (ports & adapters) with clean separation between:

- Core Business Logic: Prompt management, versioning, and validation

- Ports: Interfaces for storage, events, and external services

- Adapters: Concrete implementations (DynamoDB, PostgreSQL, S3, etc.)

- Infrastructure: AWS services, HTTP servers, and CLI tools

┌─────────────────────────────────────────┐
│           Presentation Layer            │
│ ┌───────────────┐ ┌───────────────────┐ │
│ │   CLI Tool    │ │    HTTP Server    │ │
│ └───────────────┘ └───────────────────┘ │
└─────────────────────────────────────────┘
                     │
┌─────────────────────────────────────────┐
│            Application Layer            │
│ ┌─────────────────────────────────────┐ │
│ │     Prompt Service & Use Cases      │ │
│ └─────────────────────────────────────┘ │
└─────────────────────────────────────────┘
                     │
┌─────────────────────────────────────────┐
│              Domain Layer               │
│ ┌─────────────────────────────────────┐ │
│ │     Prompt Entities & Business      │ │
│ │                Logic                │ │
│ └─────────────────────────────────────┘ │
└─────────────────────────────────────────┘
                     │
┌─────────────────────────────────────────┐
│          Infrastructure Layer           │
│ ┌───────┐ ┌───────┐ ┌───────┐ ┌───────┐ │
│ │  DDB  │ │  PG   │ │  S3   │ │  SQS  │ │
│ │Adapter│ │Adapter│ │Adapter│ │Adapter│ │
│ └───────┘ └───────┘ └───────┘ └───────┘ │
└─────────────────────────────────────────┘
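As a rough illustration of the ports-and-adapters split described above, the sketch below defines a storage port, one memory-backed adapter, and an application service that depends only on the port. The names `PromptRepository`, `InMemoryPromptRepository`, and `PromptService` are hypothetical and are not taken from the mcp-prompts source. The ID scheme is an assumption, and the interface is synchronous for brevity, whereas adapters for real databases would be asynchronous.

```typescript
// Hypothetical domain entity; field names follow the create-prompt example in this README.
interface Prompt {
  id: string;
  name: string;
  template: string;
  category: string;
  tags: string[];
}

// Port: the only thing the application layer knows about storage.
interface PromptRepository {
  save(prompt: Prompt): void;
  findById(id: string): Prompt | undefined;
  list(filter?: { category?: string; tag?: string }): Prompt[];
}

// Adapter: one concrete, memory-backed implementation of the port.
class InMemoryPromptRepository implements PromptRepository {
  private items: { [id: string]: Prompt } = {};

  save(prompt: Prompt): void {
    this.items[prompt.id] = prompt;
  }

  findById(id: string): Prompt | undefined {
    return this.items[id];
  }

  list(filter?: { category?: string; tag?: string }): Prompt[] {
    const all = Object.keys(this.items).map((id) => this.items[id]);
    return all.filter((p) => {
      if (filter && filter.category !== undefined && p.category !== filter.category) return false;
      if (filter && filter.tag !== undefined && p.tags.indexOf(filter.tag) === -1) return false;
      return true;
    });
  }
}

// Application service: depends on the port, never on a specific adapter.
class PromptService {
  constructor(private readonly repo: PromptRepository) {}

  create(name: string, template: string, category: string, tags: string[]): Prompt {
    // Assumed ID scheme: lower-cased name, non-alphanumeric runs collapsed to "_".
    const id = name.toLowerCase().replace(/[^a-z0-9]+/g, "_").replace(/^_+|_+$/g, "");
    const prompt: Prompt = { id, name, template, category, tags };
    this.repo.save(prompt);
    return prompt;
  }

  byCategory(category: string): Prompt[] {
    return this.repo.list({ category });
  }
}

// Swapping storage means passing a different adapter here; the service is unchanged.
const service = new PromptService(new InMemoryPromptRepository());
const created = service.create(
  "Code Review Assistant",
  "Please review this {{language}} code",
  "development",
  ["code-review"]
);
```

Because `PromptService` only sees the `PromptRepository` interface, a PostgreSQL or DynamoDB adapter could be substituted without touching the service code, which is the point of the layering shown in the diagram.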

Best for (file storage): Development, testing, offline usage

Features:

- Simple JSON file-based storage

- No external dependencies

- Easy backup and migration

- Perfect for local development

Configuration:

STORAGE_TYPE=file

PROMPTS_DIR=./data/prompts

Usage:

STORAGE_TYPE=file pnpm run dev

Best for (PostgreSQL storage): Production applications, complex queries, ACID compliance

Features:

- Full ACID compliance

- Complex queries and indexing

- Session management

- Backup and restore capabilities

- Concurrent access support

Configuration:

STORAGE_TYPE=postgres

DB_HOST=localhost

DB_PORT=5432

DB_NAME=mcp_prompts

DB_USER=mcp_user

DB_PASSWORD=secure_password

Deployment:

./scripts/deploy-postgres.sh

Best for (AWS storage): Scalable production, cloud-native deployments

Features:

- DynamoDB for metadata storage

- S3 for catalog and assets

- SQS for event processing

- API Gateway for HTTP endpoints

- CloudFront for global CDN

- CloudWatch for monitoring

Configuration:

STORAGE_TYPE=aws

AWS_REGION=us-east-1

PROMPTS_TABLE=mcp-prompts

PROMPTS_BUCKET=mcp-prompts-catalog

PROCESSING_QUEUE=mcp-prompts-processing

Deployment:

./scripts/deploy-aws-enhanced.sh

Best for (memory storage): Unit testing, CI/CD pipelines, temporary deployments

Features:

- In-memory storage

- No persistence

- Fastest performance

- Sample data included

Configuration:

STORAGE_TYPE=memory

# Install globally

npm install -g @sparesparrow/mcp-prompts

# Or use with npx

npx @sparesparrow/mcp-prompts --help

# Clone repository

git clone https://github.com/sparesparrow/mcp-prompts.git

cd mcp-prompts

# Install dependencies

pnpm install

# Build project

pnpm run build

# Run locally

STORAGE_TYPE=memory pnpm run dev

# Build image

docker build -t mcp-prompts .

# Run with different storage types

docker run -p 3000:3000 -e STORAGE_TYPE=memory mcp-prompts

docker run -p 3000:3000 -e STORAGE_TYPE=file -v $(pwd)/data:/app/data mcp-prompts

| Variable | Description | Default | Required |
|---|---|---|---|
| STORAGE_TYPE | Storage backend (memory/file/postgres/aws) | memory | Yes |
| NODE_ENV | Environment (development/production) | development | No |
| LOG_LEVEL | Logging level (debug/info/warn/error) | info | No |
| PORT | HTTP server port | 3000 | No |

| Variable | Description | Default | Required |
|---|---|---|---|
| PROMPTS_DIR | Directory for prompt files | ./data/prompts | No |

| Variable | Description | Default | Required |
|---|---|---|---|
| DB_HOST | Database host | localhost | Yes |
| DB_PORT | Database port | 5432 | No |
| DB_NAME | Database name | mcp_prompts | No |
| DB_USER | Database user | - | Yes |
| DB_PASSWORD | Database password | - | Yes |
| DB_SSL | Enable SSL connection | false | No |

| Variable | Description | Default | Required |
|---|---|---|---|
| AWS_REGION | AWS region | us-east-1 | Yes (for AWS storage) |
| AWS_PROFILE | AWS profile name | default | No |
| PROMPTS_TABLE | DynamoDB table name | mcp-prompts | No |
| SESSIONS_TABLE | Sessions table name | mcp-sessions | No |
| PROMPTS_BUCKET | S3 bucket name | mcp-prompts-catalog-{account}-{region} | No |
| PROCESSING_QUEUE | SQS queue URL | - | No |
| CATALOG_SYNC_QUEUE | Catalog sync queue URL | - | No |
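The configuration tables can also be read as a small validation routine. The sketch below is one possible way to parse these variables in TypeScript; `loadConfig`, `requireVar`, and the `Config` shape are hypothetical and do not describe how mcp-prompts itself reads its environment. Only the variable names and defaults come from the tables, and where a variable is listed as required but also has a default, the sketch falls back to the default.

```typescript
type StorageType = "memory" | "file" | "postgres" | "aws";
type Env = { [key: string]: string | undefined };

interface Config {
  storageType: StorageType;
  port: number;
  logLevel: string;
  promptsDir?: string;
  db?: { host: string; port: number; name: string; user: string; password: string; ssl: boolean };
  aws?: { region: string; promptsTable: string; sessionsTable: string };
}

// Returns the variable's value, or throws when it is unset or empty.
function requireVar(env: Env, key: string): string {
  const value = env[key];
  if (value === undefined || value === "") {
    throw new Error("Missing required environment variable: " + key);
  }
  return value;
}

// Builds a typed config from an environment map, applying the documented defaults.
function loadConfig(env: Env): Config {
  const raw = env["STORAGE_TYPE"] || "memory";
  const allowed: string[] = ["memory", "file", "postgres", "aws"];
  if (allowed.indexOf(raw) === -1) {
    throw new Error("Unsupported STORAGE_TYPE: " + raw);
  }
  const storageType = raw as StorageType;

  const config: Config = {
    storageType,
    port: parseInt(env["PORT"] || "3000", 10),
    logLevel: env["LOG_LEVEL"] || "info",
  };

  if (storageType === "file") {
    config.promptsDir = env["PROMPTS_DIR"] || "./data/prompts";
  } else if (storageType === "postgres") {
    config.db = {
      host: env["DB_HOST"] || "localhost",
      port: parseInt(env["DB_PORT"] || "5432", 10),
      name: env["DB_NAME"] || "mcp_prompts",
      user: requireVar(env, "DB_USER"), // no default in the table
      password: requireVar(env, "DB_PASSWORD"), // no default in the table
      ssl: (env["DB_SSL"] || "false") === "true",
    };
  } else if (storageType === "aws") {
    config.aws = {
      region: env["AWS_REGION"] || "us-east-1",
      promptsTable: env["PROMPTS_TABLE"] || "mcp-prompts",
      sessionsTable: env["SESSIONS_TABLE"] || "mcp-sessions",
    };
  }
  return config;
}

const pgConfig = loadConfig({
  STORAGE_TYPE: "postgres",
  DB_USER: "mcp_user",
  DB_PASSWORD: "secure_password",
});
```

In a Node.js process the same function could be called with `process.env`; taking the map as a parameter keeps the parsing logic easy to test.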

- GET /health - Service health check with component status

- GET /mcp - MCP server capabilities and supported features

- GET /v1/prompts - List prompts with optional filtering

  - Query parameters: ?category=, ?limit=, ?offset=, ?tags=

- POST /v1/prompts - Create new prompt

- GET /v1/prompts/{id} - Get specific prompt by ID

- PUT /v1/prompts/{id} - Update existing prompt

- DELETE /v1/prompts/{id} - Delete prompt

- POST /v1/prompts/{id}/apply - Apply template variables

- GET /mcp/tools - List available MCP tools

- POST /mcp/tools - Execute MCP tool with parameters
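To make the list endpoint's query parameters concrete, here is a minimal client-side sketch that assembles a `GET /v1/prompts` URL. The `buildListUrl` helper and the `ListOptions` type are hypothetical. The sketch also assumes that several tags are sent as one comma-separated `tags` value, which the README does not specify.

```typescript
// Filter options mirroring the documented query parameters.
interface ListOptions {
  category?: string;
  tags?: string[];
  limit?: number;
  offset?: number;
}

// Builds the request URL for GET /v1/prompts.
// Assumption: multiple tags travel as a single comma-separated `tags` value.
function buildListUrl(baseUrl: string, options: ListOptions): string {
  const parts: string[] = [];
  if (options.category !== undefined) {
    parts.push("category=" + encodeURIComponent(options.category));
  }
  if (options.tags !== undefined && options.tags.length > 0) {
    parts.push("tags=" + encodeURIComponent(options.tags.join(",")));
  }
  if (options.limit !== undefined) {
    parts.push("limit=" + options.limit);
  }
  if (options.offset !== undefined) {
    parts.push("offset=" + options.offset);
  }
  // Drop trailing slashes so the path is not doubled up.
  const path = baseUrl.replace(/\/+$/, "") + "/v1/prompts";
  return parts.length > 0 ? path + "?" + parts.join("&") : path;
}

// Third page of ten "development" prompts tagged security or performance.
const listUrl = buildListUrl("http://localhost:3000", {
  category: "development",
  tags: ["security", "performance"],
  limit: 10,
  offset: 20,
});
```

The resulting string can be passed to any HTTP client; `limit` and `offset` together give simple page-style navigation through the result set.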

curl -X POST http://localhost:3000/v1/prompts \
  -H "Content-Type: application/json" \
  -d '{
    "name": "Code Review Assistant",
    "description": "Advanced code review helper",
    "template": "Please review this {{language}} code:\n\n```{{language}}\n{{code}}\n```\n\nFocus on:\n- Security issues\n- Performance\n- Best practices",
    "category": "development",
    "tags": ["code-review", "security", "performance"],
    "variables": [
      {"name": "language", "description": "Programming language", "type": "string", "required": true},
      {"name": "code", "description": "Code to review", "type": "string", "required": true}
    ]
  }'

curl -X POST http://localhost:3000/v1/prompts/code_review_assistant/apply \
  -H "Content-Type: application/json" \
  -d '{
    "language": "typescript",
    "code": "function hello() { console.log(\"Hello World\"); }"
  }'

MCP-Prompts implements the full Model Context Protocol specification, making it compatible with any MCP-compatible AI assistant or IDE.

- HTTP Mode (Default): REST API server

- MCP Mode: Native MCP protocol server

- CLI Mode: Command-line interface

Claude Desktop:

{
  "mcpServers": {
    "mcp-prompts": {
      "command": "npx",
      "args": ["@sparesparrow/mcp-prompts"],
      "env": {
        "STORAGE_TYPE": "aws",
        "AWS_REGION": "us-east-1"
      }
    }
  }
}

VS Code Extension:

{
  "mcp": {
    "servers": {
      "prompts": {
        "command": "npx",
        "args": ["@sparesparrow/mcp-prompts"],
        "options": {
          "env": {
            "STORAGE_TYPE": "aws",
            "AWS_REGION": "us-east-1"
          }
        }
      }
    }
  }
}

- list_prompts: Browse available prompts by category

- get_prompt: Retrieve specific prompt with full details

- create_prompt: Create new prompts programmatically

- update_prompt: Modify existing prompts

- delete_prompt: Remove prompts

- apply_prompt: Apply template variables to generate final prompts
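The apply step, whether reached through `apply_prompt` or `POST /v1/prompts/{id}/apply`, fills `{{variable}}` placeholders in a stored template. The sketch below shows one way such substitution could work; `applyTemplate` is a hypothetical helper, and its handling of missing values (throw for required variables, leave optional placeholders untouched) is an assumption rather than the server's documented behaviour.

```typescript
// Variable declaration, reduced to the fields this sketch needs.
interface TemplateVariable {
  name: string;
  required: boolean;
}

// Replaces every {{variable}} placeholder with its supplied value.
// Throws if a required variable has no value; unknown or optional
// placeholders without a value are left in place.
function applyTemplate(
  template: string,
  variables: TemplateVariable[],
  values: { [key: string]: string }
): string {
  for (const v of variables) {
    if (v.required && values[v.name] === undefined) {
      throw new Error("Missing required variable: " + v.name);
    }
  }
  // A replacer function is used so "$" sequences in values are inserted literally.
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match: string, key: string) => {
    const value = values[key];
    return value !== undefined ? value : match;
  });
}

const rendered = applyTemplate(
  "Please review this {{language}} code:\n{{code}}",
  [
    { name: "language", required: true },
    { name: "code", required: true },
  ],
  { language: "typescript", code: "function hello() {}" }
);
// rendered is "Please review this typescript code:\nfunction hello() {}"
```

Validating required variables before substituting means a caller gets a clear error instead of a prompt with a stray `{{code}}` left in it.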

The AWS deployment creates a production-ready serverless architecture:

- API Gateway - RESTful API endpoints with CORS

- Lambda Functions - Serverless compute (MCP server, processing, catalog sync)

- DynamoDB - NoSQL storage with global secondary indexes

- S3 - Object storage for catalog and assets

- SQS - Message queuing for async processing

- CloudFront - Global CDN for performance

- CloudWatch - Monitoring, logging, and metrics

# Prerequisites: AWS CLI configured

aws configure

# One-command deployment

./scripts/deploy-aws-enhanced.sh

# Install dependencies

pnpm install

# Build project

pnpm run build

# Deploy CDK infrastructure

cd cdk

pnpm install

cdk bootstrap aws://$AWS_ACCOUNT/$AWS_REGION

cdk deploy --all --require-approval never

The deployment creates minimal IAM roles following least-privilege:

| Service | Permissions | Purpose |

|---|---|---|

| Lambda | CloudWatch Logs, VPC access | Function execution and logging |

| DynamoDB | Read/write access to tables | Prompt and session storage |

| S3 | Read/write access to bucket | Catalog storage and assets |

| SQS | Send/receive/delete messages | Async processing queues |

| CloudWatch | PutMetricData, CreateLogGroups | Monitoring and metrics |

Free Tier Usage:

- Lambda: 1M requests/month

- DynamoDB: 25GB storage + 25 RCU/WCU

- S3: 5GB storage + 20K GET + 2K PUT requests

- API Gateway: 1M requests/month

Estimated Monthly Costs (beyond free tier):

- $25-35/month for moderate usage

- Scales with usage, no fixed costs

- Encryption at rest (DynamoDB, S3)

- Encryption in transit (HTTPS/TLS)

- IAM roles with minimal permissions

- CloudTrail audit logging

- VPC support (optional)

Automatic custom metrics:

- PromptAccessCount - Usage tracking by prompt/category

- OperationLatency - API response times

- ApiSuccess/ApiError - Success/error rates

- ProcessingLatency - Background processing times

- PromptCreated/Updated/Deleted - CRUD operations

# Check all services

curl https://your-api-gateway-url/health

# Expected response

{
  "status": "healthy",
  "services": {
    "dynamodb": {"status": "healthy"},
    "s3": {"status": "healthy"},
    "sqs": {"status": "healthy"}
  }
}

Recommended alarms:

- API error rate > 5%

- Average latency > 1000ms

- DynamoDB throttling events

- Lambda error rate > 1%

- SQS dead letter messages > 0
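To make the thresholds above concrete, the following sketch evaluates them against a snapshot of metric values. In a real deployment these checks would normally be defined as CloudWatch alarms rather than application code; the `MetricSnapshot` shape and the `triggeredAlarms` function are hypothetical and exist only to spell out the comparisons.

```typescript
// Hypothetical snapshot of the values the recommended alarms would watch.
interface MetricSnapshot {
  apiRequests: number;
  apiErrors: number;
  averageLatencyMs: number;
  dynamoThrottleEvents: number;
  lambdaInvocations: number;
  lambdaErrors: number;
  deadLetterMessages: number;
}

// Error ratio that treats "no traffic" as zero rather than dividing by zero.
function errorRate(errors: number, total: number): number {
  return total === 0 ? 0 : errors / total;
}

// Returns the recommended alarms whose thresholds are exceeded.
function triggeredAlarms(m: MetricSnapshot): string[] {
  const alarms: string[] = [];
  if (errorRate(m.apiErrors, m.apiRequests) > 0.05) alarms.push("API error rate > 5%");
  if (m.averageLatencyMs > 1000) alarms.push("Average latency > 1000ms");
  if (m.dynamoThrottleEvents > 0) alarms.push("DynamoDB throttling events");
  if (errorRate(m.lambdaErrors, m.lambdaInvocations) > 0.01) alarms.push("Lambda error rate > 1%");
  if (m.deadLetterMessages > 0) alarms.push("SQS dead letter messages > 0");
  return alarms;
}

// 6% API errors and two dead-letter messages; everything else is within limits.
const sampleAlarms = triggeredAlarms({
  apiRequests: 1000,
  apiErrors: 60,
  averageLatencyMs: 250,
  dynamoThrottleEvents: 0,
  lambdaInvocations: 1000,
  lambdaErrors: 5,
  deadLetterMessages: 2,
});
// sampleAlarms is ["API error rate > 5%", "SQS dead letter messages > 0"]
```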

- VPC Endpoints for private AWS service access

- Security Groups restricting Lambda network access

- WAF protection for API Gateway (optional)

- Encryption at rest for DynamoDB and S3

- Encryption in transit via HTTPS/TLS

- IAM roles following least-privilege principle

- API Gateway authentication via Cognito (optional)

- AWS Systems Manager Parameter Store for configuration

- AWS Secrets Manager for sensitive data

- Environment variables for Lambda configuration

# Run unit tests

pnpm test

# Test with local DynamoDB

docker run -p 8000:8000 amazon/dynamodb-local

export DYNAMODB_ENDPOINT=http://localhost:8000

STORAGE_TYPE=memory pnpm run dev

# Test health endpoint

curl http://localhost:3000/health

# Test prompts API

curl http://localhost:3000/v1/prompts

# Test prompt creation

curl -X POST http://localhost:3000/v1/prompts \
  -H "Content-Type: application/json" \
  -d '{"name": "test", "template": "Hello {{name}}", "category": "greeting"}'

# Test deployed API

curl https://your-api-gateway-url/health

# Test with AWS credentials

AWS_REGION=us-east-1 npx @sparesparrow/mcp-prompts list

- 403 Access Denied

  - Check IAM permissions for your AWS user/role

  - Verify resource policies on DynamoDB/S3

  - Check VPC configuration if using private subnets

- CDK Bootstrap Required

  cdk bootstrap aws://ACCOUNT/REGION

- Lambda Timeout

  - Increase timeout in CDK stack (default: 30s)

  - Optimize cold start performance

  - Check DynamoDB/S3 connection latency

- DynamoDB Throttling

  - Switch to On-Demand billing mode

  - Optimize partition key distribution

  - Implement exponential backoff in clients

- PostgreSQL Connection Failed

  - Verify database credentials

  - Check network connectivity

  - Ensure database is running and accessible

- File Storage Permissions

  chmod -R 755 data/

- MCP Server Not Connecting

  - Verify environment variables are set

  - Check AWS credentials for cloud storage

  - Ensure correct MCP server mode
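For the "exponential backoff in clients" advice under DynamoDB Throttling, a minimal sketch of the delay calculation is shown below. It uses the common "full jitter" variant, where each wait is a random value between zero and an exponentially growing, capped ceiling. The function name `backoffSchedule` and the parameter values are illustrative assumptions, not settings taken from mcp-prompts.

```typescript
// Computes the wait (in ms) before each retry using capped exponential
// backoff with full jitter: delay = floor(random() * min(cap, base * 2^attempt)).
// `random` is injectable so the schedule can be made deterministic.
function backoffSchedule(
  maxRetries: number,
  baseMs: number,
  capMs: number,
  random: () => number
): number[] {
  const delays: number[] = [];
  for (let attempt = 0; attempt < maxRetries; attempt++) {
    const ceiling = Math.min(capMs, baseMs * Math.pow(2, attempt));
    delays.push(Math.floor(random() * ceiling));
  }
  return delays;
}

// With the random factor pinned to 1, the schedule shows the ceilings themselves.
const ceilings = backoffSchedule(5, 100, 1000, () => 1);
// ceilings is [100, 200, 400, 800, 1000]

// In normal use, pass Math.random so that clients do not retry in lockstep.
const jittered = backoffSchedule(5, 100, 1000, Math.random);
```

A client would wait for each delay in turn before retrying a throttled request and give up once the schedule is exhausted. The jitter spreads retries from many clients over time instead of sending them all at the same instant.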

# Check Lambda logs

aws logs describe-log-groups --log-group-name-prefix "/aws/lambda/McpPromptsStack"

# Check DynamoDB table

aws dynamodb describe-table --table-name mcp-prompts

# Check S3 bucket

aws s3 ls s3://your-bucket-name --recursive

# Check SQS queue

aws sqs get-queue-attributes --queue-url your-queue-url --attribute-names All

# Check application logs

tail -f logs/mcp-prompts.log

# Test database connectivity

STORAGE_TYPE=postgres pg_isready -h localhost -p 5432

# Check Node.js processes

ps aux | grep mcp-prompts

- Increase memory allocation (more CPU)

- Use provisioned concurrency for latency-sensitive functions

- Optimize package size and dependencies

- Use appropriate indexes

- Implement connection pooling

- Monitor query performance

The repository includes several CI/CD workflows:

- CI/CD Pipeline (.github/workflows/ci-cd.yml)

  - Tests on multiple storage types

  - Builds and pushes Docker images

  - Deploys to AWS

  - Publishes to NPM

- Multi-Platform Docker Build (.github/workflows/docker-multi-platform.yml)

  - Builds for multiple architectures

  - Pushes to GitHub Container Registry

- AWS Deployment (.github/workflows/aws-deploy.yml)

  - Automated AWS deployment

  - Health checks and smoke tests

Configure these secrets in your GitHub repository:

# AWS Deployment

AWS_ACCESS_KEY_ID

AWS_SECRET_ACCESS_KEY

AWS_REGION

# NPM Publishing

NPM_TOKEN

# Security Scanning

SNYK_TOKEN

# Install dependencies

pnpm install

# Build project

pnpm run build

# Run tests

pnpm test

# Deploy to AWS

./scripts/deploy-aws-enhanced.sh

# Or deploy to Docker

./scripts/deploy-docker.sh

- Fork the repository

- Clone your fork

  git clone https://github.com/your-username/mcp-prompts.git
  cd mcp-prompts

- Install dependencies

  pnpm install

- Create feature branch

  git checkout -b feature/your-feature-name

- Make changes and test

  pnpm test
  pnpm run build

- Submit pull request

- Follow existing code style and patterns

- Add tests for new functionality

- Update documentation for API changes

- Use conventional commit messages

- Test across all storage backends

- Hexagonal Architecture: Ports & Adapters pattern

- Storage Adapters: Pluggable storage implementations

- MCP Compliance: Full protocol implementation

- TypeScript: Strict typing throughout

MIT License - see LICENSE file for details.

Copyright (c) 2024 Sparre Sparrow

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Similar Open Source Tools

hub

Hub is an open-source, high-performance LLM gateway written in Rust. It serves as a smart proxy for LLM applications, centralizing control and tracing of all LLM calls and traces. Built for efficiency, it provides a single API to connect to any LLM provider. The tool is designed to be fast, efficient, and completely open-source under the Apache 2.0 license.

aegra

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

Shannon

Shannon is a battle-tested infrastructure for AI agents that solves problems at scale, such as runaway costs, non-deterministic failures, and security concerns. It offers features like intelligent caching, deterministic replay of workflows, time-travel debugging, WASI sandboxing, and hot-swapping between LLM providers. Shannon allows users to ship faster with zero configuration multi-agent setup, multiple AI patterns, time-travel debugging, and hot configuration changes. It is production-ready with features like WASI sandbox, token budget control, policy engine (OPA), and multi-tenancy. Shannon helps scale without breaking by reducing costs, being provider agnostic, observable by default, and designed for horizontal scaling with Temporal workflow orchestration.

solo-server

Solo Server is a lightweight server designed for managing hardware-aware inference. It provides seamless setup through a simple CLI and HTTP servers, an open model registry for pulling models from platforms like Ollama and Hugging Face, cross-platform compatibility for effortless deployment of AI models on hardware, and a configurable framework that auto-detects hardware components (CPU, GPU, RAM) and sets optimal configurations.

google_workspace_mcp

The Google Workspace MCP Server is a production-ready server that integrates major Google Workspace services with AI assistants. It supports single-user and multi-user authentication via OAuth 2.1, making it a powerful backend for custom applications. Built with FastMCP for optimal performance, it features advanced authentication handling, service caching, and streamlined development patterns. The server provides full natural language control over Google Calendar, Drive, Gmail, Docs, Sheets, Slides, Forms, Tasks, and Chat through all MCP clients, AI assistants, and developer tools. It supports free Google accounts and Google Workspace plans with expanded app options like Chat & Spaces. The server also offers private cloud instance options.

ailab

The 'ailab' project is an experimental ground for code generation combining AI (especially coding agents) and Deno. It aims to manage configuration files defining coding rules and modes in Deno projects, enhancing the quality and efficiency of code generation by AI. The project focuses on defining clear rules and modes for AI coding agents, establishing best practices in Deno projects, providing mechanisms for type-safe code generation and validation, applying test-driven development (TDD) workflow to AI coding, and offering implementation examples utilizing design patterns like adapter pattern.

mcp-memory-service

The MCP Memory Service is a universal memory service designed for AI assistants, providing semantic memory search and persistent storage. It works with various AI applications and offers fast local search using SQLite-vec and global distribution through Cloudflare. The service supports intelligent memory management, universal compatibility with AI tools, flexible storage options, and is production-ready with cross-platform support and secure connections. Users can store and recall memories, search by tags, check system health, and configure the service for Claude Desktop integration and environment variables.

shimmy

Shimmy is a 5.1MB single-binary local inference server providing OpenAI-compatible endpoints for GGUF models. It offers fast, reliable AI inference with sub-second responses, zero configuration, and automatic port management. Perfect for developers seeking privacy, cost-effectiveness, speed, and easy integration with popular tools like VSCode and Cursor. Shimmy is designed to be invisible infrastructure that simplifies local AI development and deployment.

claude-flow

Claude-Flow is a workflow automation tool designed to streamline and optimize business processes. It provides a user-friendly interface for creating and managing workflows, allowing users to automate repetitive tasks and improve efficiency. With features such as drag-and-drop workflow builder, customizable templates, and integration with popular business tools, Claude-Flow empowers users to automate their workflows without the need for extensive coding knowledge. Whether you are a small business owner looking to streamline your operations or a project manager seeking to automate task assignments, Claude-Flow offers a flexible and scalable solution to meet your workflow automation needs.

AivisSpeech

AivisSpeech is a Japanese text-to-speech software based on the VOICEVOX editor UI. It incorporates the AivisSpeech Engine for generating emotionally rich voices easily. It supports AIVMX format voice synthesis model files and specific model architectures like Style-Bert-VITS2. Users can download AivisSpeech and AivisSpeech Engine for Windows and macOS PCs, with minimum memory requirements specified. The development follows the latest version of VOICEVOX, focusing on minimal modifications, rebranding only where necessary, and avoiding refactoring. The project does not update documentation, maintain test code, or refactor unused features to prevent conflicts with VOICEVOX.

Rankify

Rankify is a Python toolkit designed for unified retrieval, re-ranking, and retrieval-augmented generation (RAG) research. It integrates 40 pre-retrieved benchmark datasets and supports 7 retrieval techniques, 24 state-of-the-art re-ranking models, and multiple RAG methods. Rankify provides a modular and extensible framework, enabling seamless experimentation and benchmarking across retrieval pipelines. It offers comprehensive documentation, open-source implementation, and pre-built evaluation tools, making it a powerful resource for researchers and practitioners in the field.

chatgpt-webui

ChatGPT WebUI is a user-friendly web graphical interface for various LLMs like ChatGPT, providing simplified features such as core ChatGPT conversation and document retrieval dialogues. It has been optimized for better RAG retrieval accuracy and supports various search engines. Users can deploy local language models easily and interact with different LLMs like GPT-4, Azure OpenAI, and more. The tool offers powerful functionalities like GPT4 API configuration, system prompt setup for role-playing, and basic conversation features. It also provides a history of conversations, customization options, and a seamless user experience with themes, dark mode, and PWA installation support.

AgentNeo

AgentNeo is an advanced, open-source Agentic AI Application Observability, Monitoring, and Evaluation Framework designed to provide deep insights into AI agents, Large Language Model (LLM) calls, and tool interactions. It offers robust logging, visualization, and evaluation capabilities to help debug and optimize AI applications with ease. With features like tracing LLM calls, monitoring agents and tools, tracking interactions, detailed metrics collection, flexible data storage, simple instrumentation, interactive dashboard, project management, execution graph visualization, and evaluation tools, AgentNeo empowers users to build efficient, cost-effective, and high-quality AI-driven solutions.

ChordMiniApp

ChordMini is an advanced music analysis platform with AI-powered chord recognition, beat detection, and synchronized lyrics. It features a clean and intuitive interface for YouTube search, chord progression visualization, interactive guitar diagrams with accurate fingering patterns, lead sheet with AI assistant for synchronized lyrics transcription, and various add-on features like Roman Numeral Analysis, Key Modulation Signals, Simplified Chord Notation, and Enhanced Chord Correction. The tool requires Node.js, Python 3.9+, and a Firebase account for setup. It offers a hybrid backend architecture for local development and production deployments, with features like beat detection, chord recognition, lyrics processing, rate limiting, and audio processing supporting MP3, WAV, and FLAC formats. ChordMini provides a comprehensive music analysis workflow from user input to visualization, including dual input support, environment-aware processing, intelligent caching, advanced ML pipeline, and rich visualization options.

wealth-tracker

Wealth Tracker is a personal finance management tool designed to help users track their income, expenses, and investments in one place. With intuitive features and customizable categories, users can easily monitor their financial health and make informed decisions. The tool provides detailed reports and visualizations to analyze spending patterns and set financial goals. Whether you are budgeting, saving for a big purchase, or planning for retirement, Wealth Tracker offers a comprehensive solution to manage your money effectively.

For similar tasks

AI_NovelGenerator

AI_NovelGenerator is a versatile novel generation tool based on large language models. It features a novel setting workshop for world-building, character development, and plot blueprinting, intelligent chapter generation for coherent storytelling, a status tracking system for character arcs and foreshadowing management, a semantic retrieval engine for maintaining long-range context consistency, integration with knowledge bases for local document references, an automatic proofreading mechanism for detecting plot contradictions and logic conflicts, and a visual workspace for GUI operations encompassing configuration, generation, and proofreading. The tool aims to assist users in efficiently creating logically rigorous and thematically consistent long-form stories.

Jailbreaks

Jailbreaks is a repository dedicated to organizing and curating models suitable for NSFW writing. It serves as a collection of resources for writers looking to explore adult content in a structured manner.

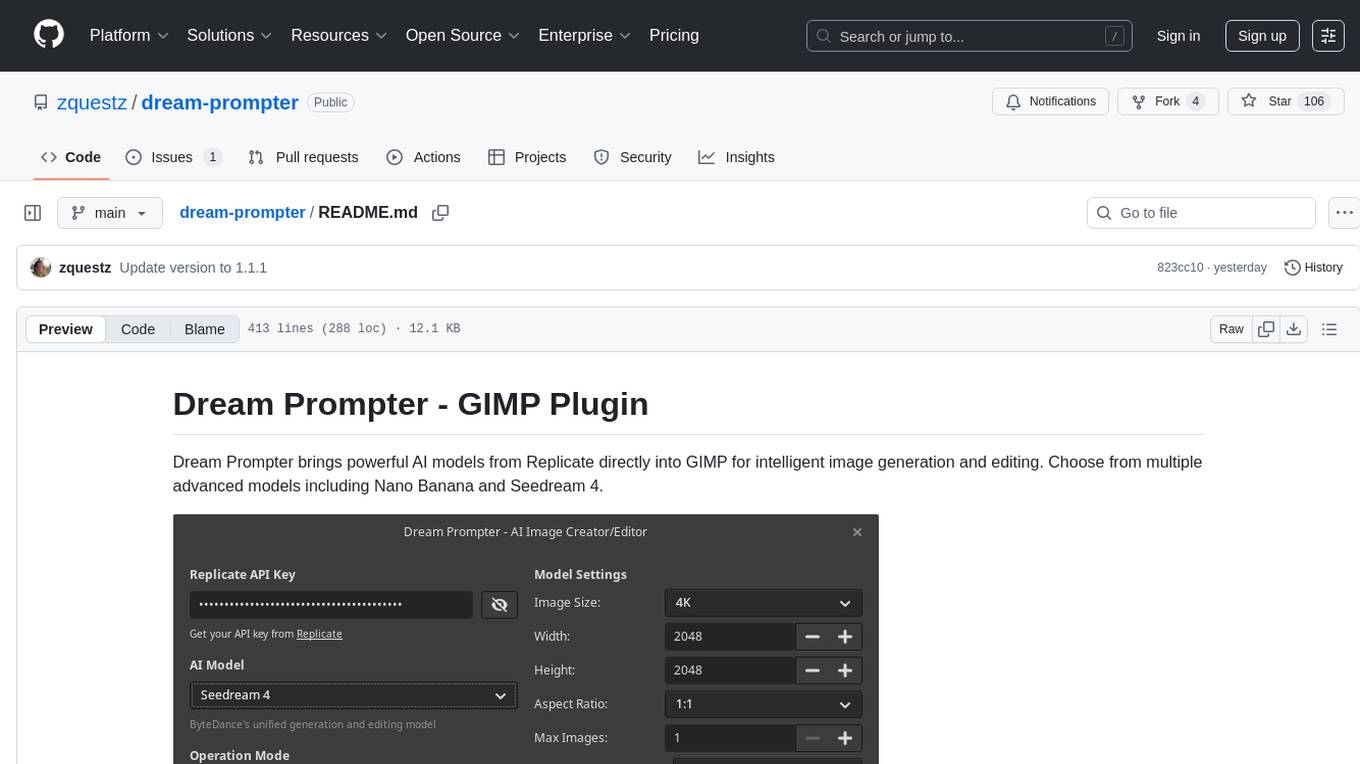

dream-prompter

Dream Prompter is a tool designed to help users generate creative writing prompts for their stories, essays, or any other creative projects. It uses a database of various elements such as characters, settings, and plot twists to randomly generate unique prompts that can inspire writers and spark their creativity. With Dream Prompter, users can easily overcome writer's block and find new ideas to develop their writing skills and produce engaging content.

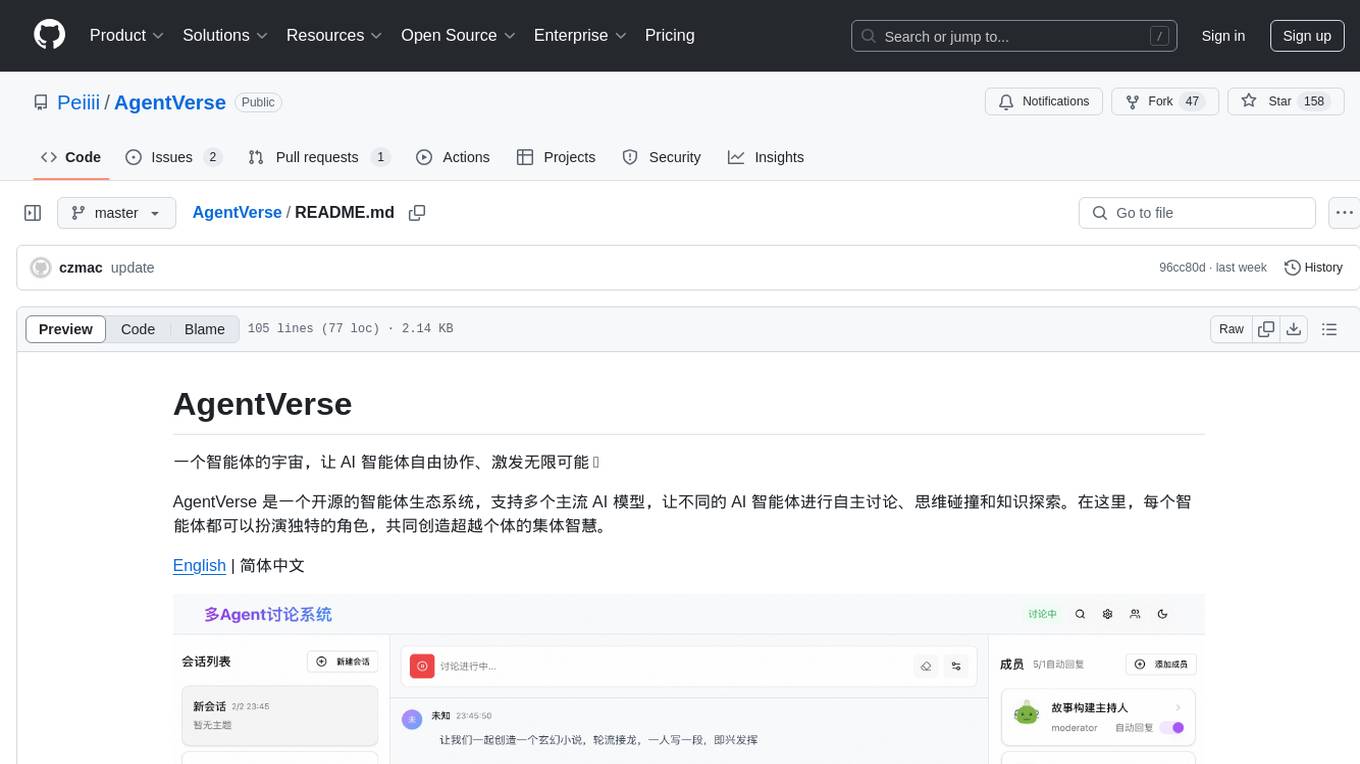

AgentVerse

AgentVerse is an open-source ecosystem for intelligent agents, supporting multiple mainstream AI models to facilitate autonomous discussions, thought collisions, and knowledge exploration. Each intelligent agent can play a unique role here, collectively creating wisdom beyond individuals.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save it to JSON and Excel files, and perform initial data analysis and image captions. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.