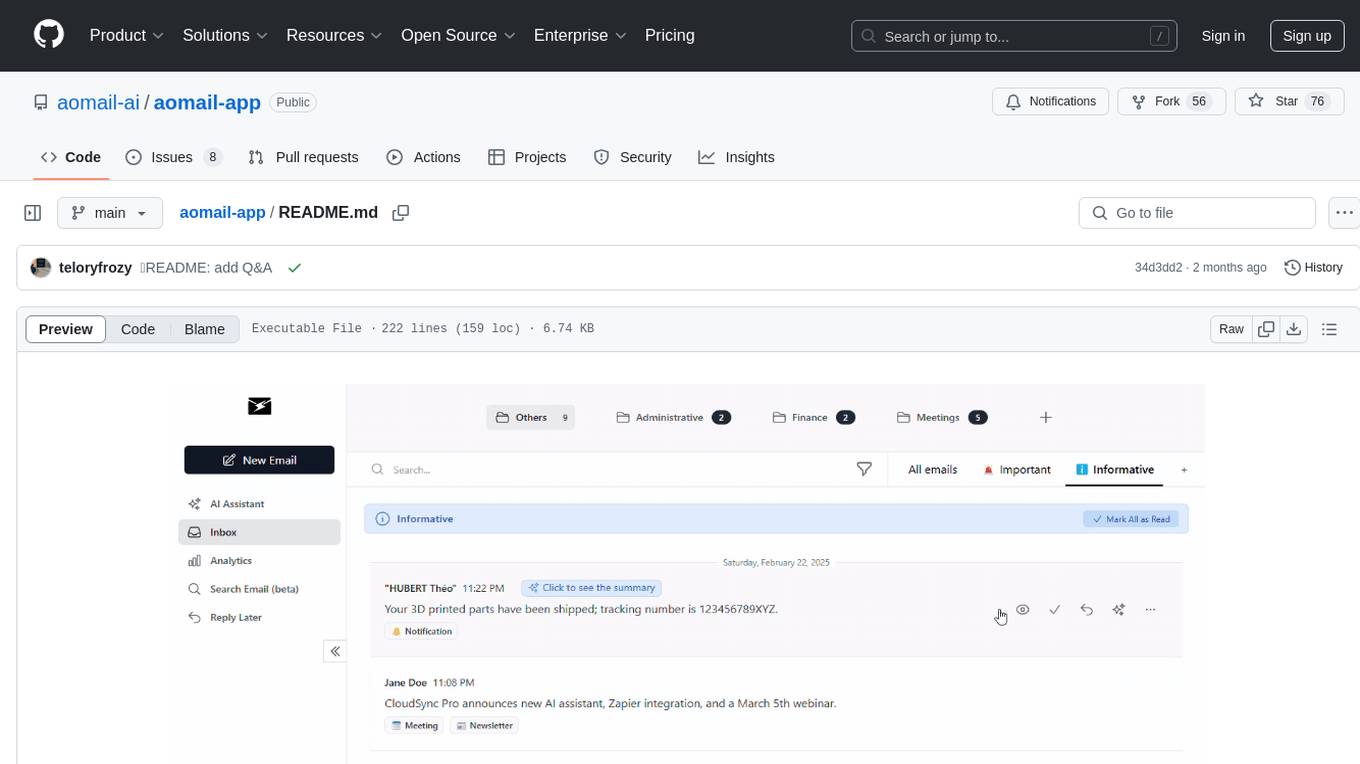

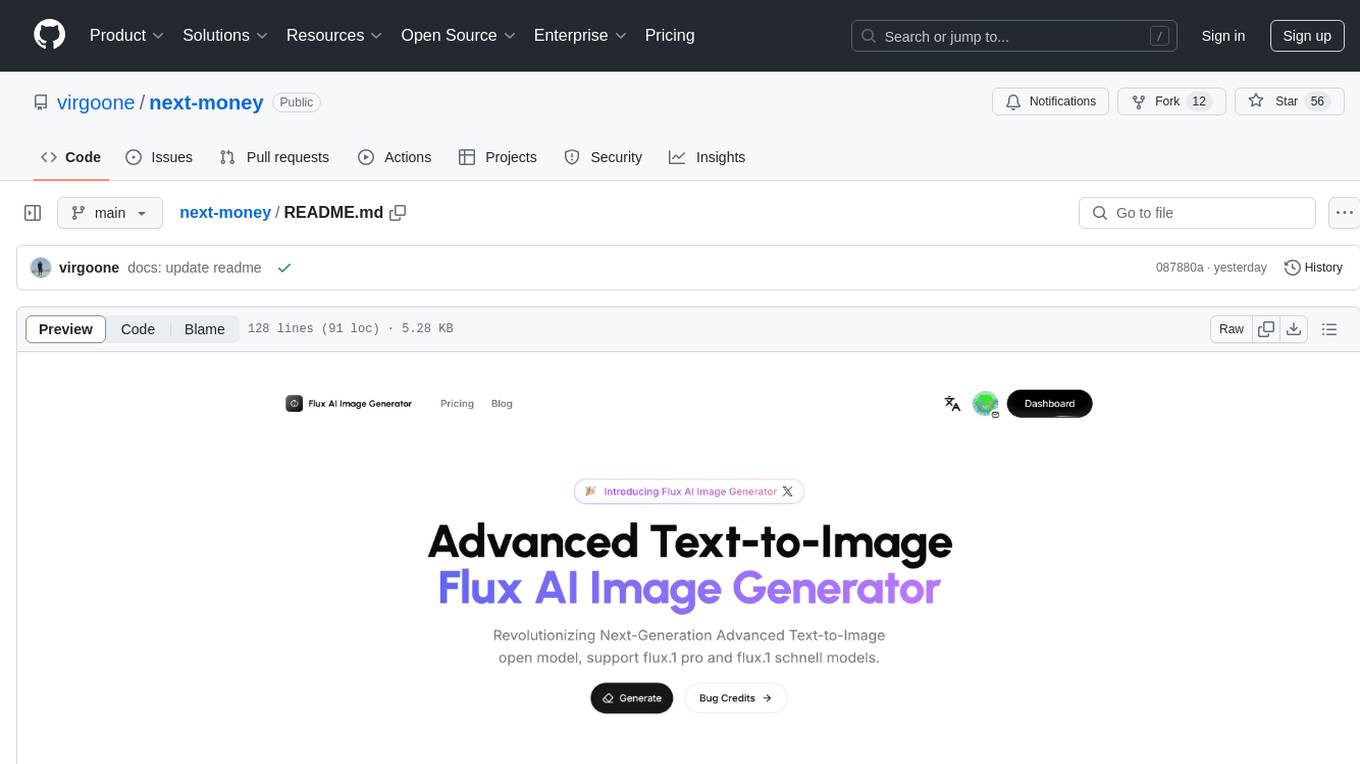

aomail-app

Aomail is an AI interface that connects to Gmail, Outlook, or any IMAP service. It leverages LLMs to categorize, summarize, prioritize, and help write & reply to emails faster. Google-verified, TAC Security–assessed

Stars: 63

Aomail is an intelligent, open-source email management platform with AI capabilities. It offers email provider integration, AI-powered tools for smart categorization and assistance, analytics and management features. Users can self-host for complete control. Coming soon features include AI custom rules, platform integration with Discord & Slack, and support for various AI providers. The tool is designed to revolutionize email management by providing advanced AI features and analytics.

README:

An intelligent, open-source email management platform with AI capabilities. Use our hosted version or self-host for complete control.

🌐 Website: https://aomail.ai

📧 Support: [email protected]

-

📧 Email Provider Integration

- Labels are replicated on Gmail & Outlook

- Link multiple accounts (premium plan)

-

🤖 AI-Powered Tools

- Smart categorization with custom rules

- AI chat assistant for composition and replies

- Customizable AI agents

- Smart email categorization with summaries

- Search emails or ask AI questions (beta)

-

📊 Analytics & Management

- Usage analytics and insights

- Multi-account dashboard

- AI Custom Rules: Automatic forwarding and smart replies

- Platform Integration: Discord & Slack connectivity with smart summaries

- LLM Choice: Support for OpenAI, Anthropic, Llama, Mistral

Try Aomail for free today and experience the future of email management.

No credit card required.

Required Services:

- Gemini API

- Google OAuth

Optional Services:

- Google PubSub

- Microsoft Azure

- Stripe

- Clone and Install:

git clone https://github.com/aomail-ai/aomail-app

cd aomail-app

cd frontend && npm install

cd .. && cp backend/.env.example backend/.env-

Google Project Setup:

1 Generate a Gemini API key here. You also need to enable Gemini API here 2 Create a Google Cloud Console Project 3 Configure OAuth screen with scopes from

/backend/aomail/constants.py-

Set authorized origins:

http://localhost -

Set redirect URIs:

http://localhost/signup-linkhttp://localhost/settings

-

PubSub Setup (Optional):

-

For Local Development:

- Install and run Google Cloud PubSub emulator

- Configure local webhook endpoint

-

For Production:

- Create a new PubSub topic in Google Cloud Console (Create topic)

- Configure webhook URL:

https://your-domain/google/receive_mail_notifications/ - Set up push subscription with your webhook

-

-

-

Configure Environment: Required variables in

.env:

# LLM API KEYS

GEMINI_API_KEY=""

# ENCRYPTION KEYS

SOCIAL_API_REFRESH_TOKEN_KEY=""

EMAIL_ONE_LINE_SUMMARY_KEY=""

EMAIL_SHORT_SUMMARY_KEY=""

EMAIL_HTML_CONTENT_KEY=""

# DJANGO CREDENTIALS

DJANGO_SECRET_KEY=""

# Google Configuration (if using Gmail)

GOOGLE_PROJECT_ID=""

GOOGLE_CLIENT_ID=""

GOOGLE_CLIENT_SECRET=""

# Microsoft Configuration (if using Outlook)

MICROSOFT_CLIENT_ID=""

MICROSOFT_CLIENT_SECRET=""

MICROSOFT_TENANT_ID=""

MICROSOFT_CLIENT_STATE=""

- Launch Application:

chmod +x start.sh

./start.shAccess at http://localhost:8090/

If you encounter database migration problems, run these commands:

sudo rm -fr backend/aomail/migrations

docker exec -it aomail_project-backend_dev-1 python manage.py makemigrations --empty aomail

./start.shCommon port conflict issues:

- Check for any running containers using the same ports

- Look for conflicts between production/development containers

- Try updating ports in

start.shif needed

Follow these steps in order:

- Configure your DNS record

- Update the reverse proxy settings

- Open required port:

sudo ufw allow PORT_NUMBER - Add the subdomain to

ALLOWED_HOSTSin start.sh

Q: How do you ensure email security?

A: We take security seriously:

- All emails are encrypted and stored in a secure database

- Our code is open source and publicly auditable

- We've received a 9.7/10 security rating from TAC Security

- No AI training is performed on your data

Download Security Assessment Report

Q: How do you handle AI and data privacy?

A: We prioritize your privacy:

- No training is performed on your emails

- We use stateless API calls to LLM providers

- You can choose your preferred LLM provider:

Q: How long is the free trial?

A: We offer a 14-day free trial.

Q: Do I need to provide credit card information?

A: No, you can start your free trial without entering any payment information.

Q: Which email providers are supported?

A: Currently, we support:

- Gmail

- Outlook (beta)

Q: How does mailbox linking work?

A: We use industry-standard OAuth 2.0 for secure mailbox integration. Learn more about OAuth 2.0

Q: How do I get unlimited access to Aomail?

A: You'll need to set up the admin dashboard to give yourself unlimited access. Check out our admin dashboard repository for setup instructions.

- Set up development environment (recommended):

python3 -m venv py_env

source py_env/bin/activate

pip install -r requirements.txt- Fork repository

- Create feature branch

- Submit pull request

-

Feature Requests: Use our feature request template with

enhancement+backend/frontendlabels -

Bug Reports: Use our bug report template with

bug+backend/frontendlabels

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aomail-app

Similar Open Source Tools

aomail-app

Aomail is an intelligent, open-source email management platform with AI capabilities. It offers email provider integration, AI-powered tools for smart categorization and assistance, analytics and management features. Users can self-host for complete control. Coming soon features include AI custom rules, platform integration with Discord & Slack, and support for various AI providers. The tool is designed to revolutionize email management by providing advanced AI features and analytics.

bytebot

Bytebot is an open-source AI desktop agent that provides a virtual employee with its own computer to complete tasks for users. It can use various applications, download and organize files, log into websites, process documents, and perform complex multi-step workflows. By giving AI access to a complete desktop environment, Bytebot unlocks capabilities not possible with browser-only agents or API integrations, enabling complete task autonomy, document processing, and usage of real applications.

better-chatbot

Better Chatbot is an open-source AI chatbot designed for individuals and teams, inspired by various AI models. It integrates major LLMs, offers powerful tools like MCP protocol and data visualization, supports automation with custom agents and visual workflows, enables collaboration by sharing configurations, provides a voice assistant feature, and ensures an intuitive user experience. The platform is built with Vercel AI SDK and Next.js, combining leading AI services into one platform for enhanced chatbot capabilities.

VibeSurf

VibeSurf is an open-source AI agentic browser that combines workflow automation with intelligent AI agents, offering faster, cheaper, and smarter browser automation. It allows users to create revolutionary browser workflows, run multiple AI agents in parallel, perform intelligent AI automation tasks, maintain privacy with local LLM support, and seamlessly integrate as a Chrome extension. Users can save on token costs, achieve efficiency gains, and enjoy deterministic workflows for consistent and accurate results. VibeSurf also provides a Docker image for easy deployment and offers pre-built workflow templates for common tasks.

gemini-cli

Gemini CLI is an open-source AI agent that provides lightweight access to Gemini, offering powerful capabilities like code understanding, generation, automation, integration, and advanced features. It is designed for developers who prefer working in the command line and offers extensibility through MCP support. The tool integrates directly into GitHub workflows and offers various authentication options for individual developers, enterprise teams, and production workloads. With features like code querying, editing, app generation, debugging, and GitHub integration, Gemini CLI aims to streamline development workflows and enhance productivity.

browser-use

Browser Use is a tool designed to make websites accessible for AI agents. It provides an easy way to connect AI agents with the browser, enabling users to perform tasks such as extracting vision and HTML elements, managing multiple tabs, and executing custom actions. The tool supports various language models and allows users to parallelize multiple agents for efficient processing. With features like self-correction and the ability to register custom actions, Browser Use offers a versatile solution for interacting with web content using AI technology.

anilist-mcp

AniList MCP Server is a Model Context Protocol server that interfaces with the AniList API, allowing LLM clients to access and interact with anime, manga, character, staff, and user data from AniList. It supports searching for anime, manga, characters, staff, and studios, detailed information retrieval, user profiles and lists access, advanced filtering options, genres and media tags retrieval, dual transport support (HTTP and STDIO), and cloud deployment readiness.

g4f.dev

G4f.dev is the official documentation hub for GPT4Free, a free and convenient AI tool with endpoints that can be integrated directly into apps, scripts, and web browsers. The documentation provides clear overviews, quick examples, and deeper insights into the major features of GPT4Free, including text and image generation. Users can choose between Python and JavaScript for installation and setup, and can access various API endpoints, providers, models, and client options for different tasks.

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

eliza

Eliza is a versatile AI agent operating system designed to support various models and connectors, enabling users to create chatbots, autonomous agents, handle business processes, create video game NPCs, and engage in trading. It offers multi-agent and room support, document ingestion and interaction, retrievable memory and document store, and extensibility to create custom actions and clients. Eliza is easy to use and provides a comprehensive solution for AI agent development.

farfalle

Farfalle is an open-source AI-powered search engine that allows users to run their own local LLM or utilize the cloud. It provides a tech stack including Next.js for frontend, FastAPI for backend, Tavily for search API, Logfire for logging, and Redis for rate limiting. Users can get started by setting up prerequisites like Docker and Ollama, and obtaining API keys for Tavily, OpenAI, and Groq. The tool supports models like llama3, mistral, and gemma. Users can clone the repository, set environment variables, run containers using Docker Compose, and deploy the backend and frontend using services like Render and Vercel.

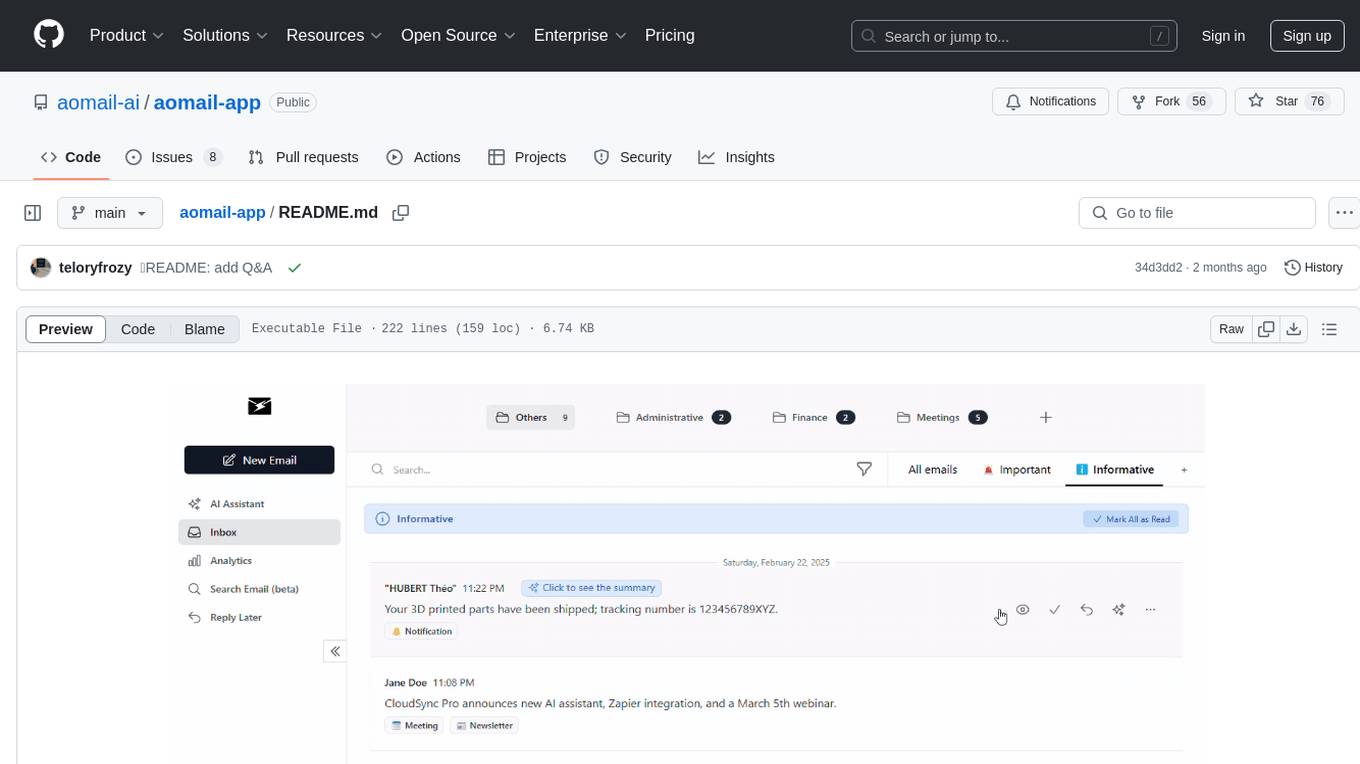

next-money

Next Money Stripe Starter is a SaaS Starter project that empowers your next project with a stack of Next.js, Prisma, Supabase, Clerk Auth, Resend, React Email, Shadcn/ui, and Stripe. It seamlessly integrates these technologies to accelerate your development and SaaS journey. The project includes frameworks, platforms, UI components, hooks and utilities, code quality tools, and miscellaneous features to enhance the development experience. Created by @koyaguo in 2023 and released under the MIT license.

gateway

CentralMind Gateway is an AI-first data gateway that securely connects any data source and automatically generates secure, LLM-optimized APIs. It filters out sensitive data, adds traceability, and optimizes for AI workloads. Suitable for companies deploying AI agents for customer support and analytics.

orbit

ORBIT (Open Retrieval-Based Inference Toolkit) is a middleware platform that provides a unified API for AI inference. It acts as a central gateway, allowing you to connect various local and remote AI models with your private data sources like SQL databases, vector stores, and local files. ORBIT uses a flexible adapter architecture to connect your data to AI models, creating specialized 'agents' for specific tasks. It supports scenarios like Knowledge Base Q&A and Chat with Your SQL Database, enabling users to interact with AI models seamlessly. The tool offers a RESTful API for programmatic access and includes features like authentication, API key management, system prompts, health monitoring, and file management. ORBIT is designed to streamline AI inference tasks and facilitate interactions between users and AI models.

mattermost-plugin-agents

The Mattermost Agents Plugin integrates AI capabilities directly into your Mattermost workspace, allowing users to run local LLMs on their infrastructure or connect to cloud providers. It offers multiple AI assistants with specialized personalities, thread and channel summarization, action item extraction, meeting transcription, semantic search, smart reactions, direct conversations with AI assistants, and flexible LLM support. The plugin comes with comprehensive documentation, installation instructions, system requirements, and development guidelines for users to interact with AI features and configure LLM providers.

sim

Sim is a platform that allows users to build and deploy AI agent workflows quickly and easily. It provides cloud-hosted and self-hosted options, along with support for local AI models. Users can set up the application using Docker Compose, Dev Containers, or manual setup with PostgreSQL and pgvector extension. The platform utilizes technologies like Next.js, Bun, PostgreSQL with Drizzle ORM, Better Auth for authentication, Shadcn and Tailwind CSS for UI, Zustand for state management, ReactFlow for flow editor, Fumadocs for documentation, Turborepo for monorepo management, Socket.io for real-time communication, and Trigger.dev for background jobs.

For similar tasks

aomail-app

Aomail is an intelligent, open-source email management platform with AI capabilities. It offers email provider integration, AI-powered tools for smart categorization and assistance, analytics and management features. Users can self-host for complete control. Coming soon features include AI custom rules, platform integration with Discord & Slack, and support for various AI providers. The tool is designed to revolutionize email management by providing advanced AI features and analytics.

THE-SANDBOX-AutoClicker

The Sandbox AutoClicker is a bot designed for the crypto game The Sandbox, allowing users to automate various processes within the game. The tool offers features such as auto tuning, multi-account auto clicker, multi-threading, a convenient menu, and free proxies. It provides full optimization through a simple menu and is guaranteed to be safe for Windows systems, supporting versions 7/8/8.1/10/11 (x32/64).

kirara-ai

Kirara AI is a chatbot that supports mainstream large language models and chat platforms. It provides features such as image sending, keyword-triggered replies, multi-account support, personality settings, and support for various chat platforms like QQ, Telegram, Discord, and WeChat. The tool also supports HTTP server for Web API, popular large models like OpenAI and DeepSeek, plugin mechanism, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, custom workflows, web management interface, and built-in Frpc intranet penetration.

oba-live-tool

The oba live tool is a small tool for Douyin small shops and Kuaishou Baiying live broadcasts. It features multiple account management, intelligent message assistant, automatic product explanation, AI automatic reply, and AI intelligent assistant. The tool requires Windows 10 or above, Chrome or Edge browser, and a valid account for Douyin small shops or Kuaishou Baiying. Users can download the tool from the Releases page, connect to the control panel, set API keys for AI functions, and configure auto-reply prompts. The tool is licensed under the MIT license.

twitter-automation-ai

Advanced Twitter Automation AI is a modular Python-based framework for automating Twitter at scale. It supports multiple accounts, robust Selenium automation with optional undetected Chrome + stealth, per-account proxies and rotation, structured LLM generation/analysis, community posting, and per-account metrics/logs. The tool allows seamless management and automation of multiple Twitter accounts, content scraping, publishing, LLM integration for generating and analyzing tweet content, engagement automation, configurable automation, browser automation using Selenium, modular design for easy extension, comprehensive logging, community posting, stealth mode for reduced fingerprinting, per-account proxies, LLM structured prompts, and per-account JSON summaries and event logs for observability.

claude-craft

Claude Craft is a comprehensive framework for AI-assisted development with Claude Code, providing standardized rules, agents, and commands across multiple technology stacks. It includes autonomous sprint capabilities, documentation accuracy improvements, CI hardening, and test coverage enhancements. With support for 10 technology stacks, 5 languages, 40 AI agents, 157 slash commands, and various project management features like BMAD v6 framework, Ralph Wiggum loop execution, skills, templates, checklists, and hooks system, Claude Craft offers a robust solution for project development and management. The tool also supports workflow methodology, development tracks, document generation, BMAD v6 project management, quality gates, batch processing, backlog migration, and Claude Code hooks integration.

dwata

dwata is an open source desktop app designed to manage all your private data on your laptop, providing offline access, fast search capabilities, and organization features for emails, files, contacts, events, and tasks. It aims to reduce cognitive overhead in daily digital life by offering a centralized platform for personal data management. The tool prioritizes user privacy, with no data being sent outside the user's computer without explicit permission. dwata is still in early development stages and offers integration with AI providers for advanced functionalities.

auth0-assistant0

Assistant0 is an AI personal assistant that consolidates digital life by accessing multiple tools to help users stay organized and efficient. It integrates with Gmail for email summaries, manages calendars, retrieves user information, enables online shopping with human-in-the-loop authorizations, uploads and retrieves documents, lists GitHub repositories and events, and soon provides Slack notifications and Google Drive access. With tool-calling capabilities, it acts as a digital personal secretary, enhancing efficiency and ushering in intelligent automation. Security challenges are addressed by using Auth0 for secure tool calling with scoped access tokens, ensuring user data protection.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.