workbench-example-hybrid-rag

An NVIDIA AI Workbench example project for Retrieval Augmented Generation (RAG)

Stars: 106

This NVIDIA AI Workbench project is designed for developing a Retrieval Augmented Generation application with a customizable Gradio Chat app. It allows users to embed documents into a locally running vector database and run inference locally on a Hugging Face TGI server, in the cloud using NVIDIA inference endpoints, or using microservices via NVIDIA Inference Microservices (NIMs). The project supports various models with different quantization options and provides tutorials for using different inference modes. Users can troubleshoot issues, customize the Gradio app, and access advanced tutorials for specific tasks.

README:

This is an NVIDIA AI Workbench project for developing a Retrieval Augmented Generation application with a customizable Gradio Chat app. It lets you:

- Embed your documents into a locally running vector database.

- Run inference locally on a Hugging Face TGI server, in the cloud using NVIDIA inference endpoints, or using microservices via NVIDIA Inference Microservices (NIMs):

  - 4-bit, 8-bit, and no quantization options are supported for locally running models served by TGI.

  - Other models may be specified to run locally using their Hugging Face tag.

  - The locally running microservice option is supported for Docker users only.

| Model | Local (TGI) | Cloud (NVIDIA API Catalog) | Microservices (NVIDIA NIMs) |
|---|---|---|---|
| Llama3-ChatQA-1.5-8B | Y | Y | * |
| Llama3-ChatQA-1.5-70B | Y | * | |
| Nemotron-4-340B-Instruct | Y | * | |
| Mistral-NeMo 12B Instruct | Y | * | |
| Mistral-7B-Instruct-v0.1 | Y (gated) | * | |
| Mistral-7B-Instruct-v0.2 | Y (gated) | Y | * |
| Mistral-7B-Instruct-v0.3 | Y | Y | |
| Mistral-Large | Y | * | |
| Mixtral-8x7B-Instruct-v0.1 | Y | Y | |
| Mixtral-8x22B-Instruct-v0.1 | Y | Y | |
| Mamba Codestral 7B v0.1 | Y | * | |
| Codestral-22B-Instruct-v0.1 | Y | * | |
| Llama-2-7B-Chat | Y (gated) | * | |
| Llama-2-13B-Chat | * | | |
| Llama-2-70B-Chat | Y | * | |
| Llama-3-8B-Instruct | Y (gated) | Y | Y (default) * |
| Llama-3-70B-Instruct | Y | Y | |
| Llama-3.1-8B-Instruct | Y | * | |
| Llama-3.1-70B-Instruct | Y | * | |
| Llama-3.1-405B-Instruct | Y | * | |
| Gemma-2B | Y | * | |
| Gemma-7B | Y | * | |
| CodeGemma-7B | Y | * | |
| Phi-3-Mini-4k-Instruct | Y | * | |
| Phi-3-Mini-128k-Instruct | Y | Y | * |
| Phi-3-Small-8k-Instruct | Y | * | |
| Phi-3-Small-128k-Instruct | Y | * | |
| Phi-3-Medium-4k-Instruct | Y | * | |
| Arctic | Y | * | |
| Granite-8B-Code-Instruct | Y | * | |
| Granite-34B-Code-Instruct | Y | * | |
| Solar-10.7B-Instruct | Y | * | |

*NIM containers for LLMs are starting to roll out under General Availability (GA). If you set up any accessible language model NIM running on another system, it is supported under Remote NIM inference inside this project. For Local NIM inference, this project provides a flow for setting up the default meta/llama3-8b-instruct NIM locally as an example. Advanced users may choose to swap this NIM Container Image out with other NIMs as they are released.

This section demonstrates how to use this project to run RAG via NVIDIA Inference Endpoints hosted on the NVIDIA API Catalog. For other inference options, including local inference, see the Advanced Tutorials section for setup instructions.

- An NGC account is required to generate an NVCF run key.

- A valid NVCF key is required to access NVIDIA API endpoints. Generate a key from any model card in the NVIDIA API Catalog by clicking "Get API Key".

- Install and configure AI Workbench locally and open AI Workbench. Select a location of your choice.

- Fork this repo into your own GitHub account.

- Inside AI Workbench:

  - Click Clone Project and enter the repo URL of your newly forked repo.

  - AI Workbench will automatically clone the repo and build out the project environment, which can take several minutes to complete.

  - Upon Build Complete, navigate to Environment > Secrets > NVCF_RUN_KEY > Configure and paste in your NVCF run key as a project secret.

  - Select Open Chat on the top right of the AI Workbench window, and the Gradio app will open in a browser.

- In the Gradio Chat app:

  - Click Set up RAG Backend. This triggers a one-time backend build which can take a few moments to initialize.

  - Select the Cloud option, select a model family and model name, and submit a query.

  - To perform RAG, select Upload Documents Here from the right-hand panel of the chat UI.

    - You may see a warning that the vector database is not ready yet. If so, wait a moment and try again.

  - When the database starts, select Click to Upload and choose the text files to upload.

  - Once the files upload, the Toggle to Use Vector Database next to the text input box will turn on.

  - Now query your documents! What are they telling you?

  - To change the endpoint, choose a different model from the dropdown on the right-hand settings panel and continue querying.
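To make the document-query step above more concrete, here is a minimal, self-contained sketch of what retrieval augmentation does conceptually. It is not the project's implementation: the bag-of-words "embedding", the function names, and the prompt layout are all assumptions chosen for illustration, whereas a real setup would use an embedding model and a vector database.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a term-count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, chunks: list[str], use_vector_db: bool = True) -> str:
    """Prepend retrieved context to the query when retrieval is toggled on (hypothetical layout)."""
    if not use_vector_db:
        return query
    context = "\n".join(retrieve(query, chunks))
    return f"Context:\n{context}\n\nQuestion: {query}"
```

With retrieval switched off, the query is passed through unchanged. With it switched on, the most similar uploaded text is placed ahead of the question before it is sent to the model.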

Next Steps:

- If you get stuck, check out the "Troubleshooting" section.

- For tutorials on other supported inference modes, check out the "Advanced Tutorials" section below. Note: All subsequent tutorials will assume NVCF_RUN_KEY is already configured with your credentials.

Note: NVIDIA AI Workbench is the easiest way to get this RAG app running.

- NVIDIA AI Workbench is a free client application that you can install on your own machines.

- It provides portable and reproducible dev environments by handling Git repos and containers for you.

- Installing on a local system? Check out our guides for Windows, local Ubuntu 22.04, and macOS 12 or higher.

- Installing on a remote system? Check out our guide for remote Ubuntu 22.04.

Need help? Submit any questions, bugs, feature requests, and feedback at the Developer Forum for AI Workbench. A dedicated thread for this Hybrid RAG example project is available there.

- Make sure you installed AI Workbench. There should be a desktop icon on your system. Double-click it to start AI Workbench.

- Make sure you have opened AI Workbench.

- Click on the Local location (or whatever location you want to clone into).

- If this is your first project, click the green Clone Existing Project button.

  - Otherwise, click Clone Project in the top right.

- Drop in the repo URL, leave the default path, and click Clone.

- The container is likely building and can take several minutes.

- Look at the very bottom of the Workbench window; you will see a Build Status widget.

- Click it to expand the build output.

- When the container is built, the widget will say Build Ready.

- Now you can begin.

- Check that the container finished building.

- When it finishes, click the green Open Chat button at the top right.

- Look at the bottom left of the AI Workbench window; you will see an Output widget.

- Click it to expand the output.

- Expand the dropdown and navigate to Applications > Chat.

- You can now view all debug messages in the Output window in real time.

- Check that the container is built.

- Then click the green dropdown next to the Open Chat button at the top right.

- Select JupyterLab to start editing the code. Alternatively, you may configure VSCode support.

This section shows you how to use different inference modes with this RAG project. For these tutorials, a GPU with at least 12 GB of vRAM is recommended. If you don't have one, go back to the Quickstart Tutorial, which shows how to use Cloud Endpoints.

This tutorial assumes you already cloned this Hybrid RAG project to your AI Workbench. If not, please follow the beginning of the Quickstart Tutorial.

The following models are ungated. These can be accessed, downloaded, and run locally inside the project with no additional configurations required:

Some additional configurations in AI Workbench are required to run certain listed models. Unlike the previous tutorials, these configs are not added to the project by default, so follow the instructions below closely to ensure a proper setup. Namely, a Hugging Face API token is required for running gated models locally. See the Hugging Face documentation on creating a token.

The following models are gated. Verify that "You have been granted access to this model" appears on the model cards for any models you are interested in running locally:

Then, complete the following steps:

- If the project is already running, shut down the project environment under Environment > Stop Environment. This ensures that restarting the environment picks up all of the configurations below.

- In AI Workbench, add the following entries under Environment > Secrets.

  - Your Hugging Face Token: This is used to clone the model weights locally from Hugging Face.

    - Name: HUGGING_FACE_HUB_TOKEN

    - Value: (Your HF API Key)

    - Description: HF Token for cloning model weights locally

- Rebuild the project if needed to incorporate changes.

Note: All subsequent tutorials will assume both NVCF_RUN_KEY and HUGGING_FACE_HUB_TOKEN are already configured with your credentials.
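As a quick sanity check before moving on, something like the following sketch can confirm that both secrets are visible. It assumes the secrets are exposed to the project container as environment variables under the names used above. The helper name `missing_secrets` is hypothetical and not part of the project.

```python
import os

# Secret names taken from the tutorials above.
REQUIRED_SECRETS = ("NVCF_RUN_KEY", "HUGGING_FACE_HUB_TOKEN")


def missing_secrets(env=None, required=REQUIRED_SECRETS):
    """Return the names of required secrets that are unset or empty.

    `env` defaults to the process environment; pass a dict to test other values.
    """
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]
```

Calling `missing_secrets()` from a JupyterLab terminal or notebook inside the project should return an empty list once both secrets are configured and the environment has been restarted.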

- Select the green Open Chat button on the top right of the AI Workbench project window.

- Once the UI opens, click Set up RAG Backend. This triggers a one-time backend build which can take a few moments to initialize.

- Select the Local System inference mode under Inference Settings > Inference Mode.

- Select a model from the dropdown on the right-hand settings panel. You can filter by gated vs ungated models for convenience.

  - Ensure you have proper access permissions for the model; follow the gated-model setup steps above.

  - You can also input a custom model from Hugging Face, following the same format. Be careful, as not all models and quantization levels may be supported in the current TGI version!

- Select a quantization level. The recommended precision for your system will be pre-selected for you, but full, 8-bit, and 4-bit bitsandbytes precision levels are currently supported.

| vRAM | System RAM | Disk Storage | Model Size & Quantization |
|---|---|---|---|
| >=12 GB | 32 GB | 40 GB | 7B & int4 |
| >=24 GB | 64 GB | 40 GB | 7B & int8 |
| >=40 GB | 64 GB | 40 GB | 7B & none |

- Select Load Model to pre-fetch the model. The initial download of the model to the project cache can take several minutes. Subsequent loads will detect this cached model.

- Select Start Server to start the inference server with your current local GPU. This may take a moment to warm up.

- Now, start chatting! Queries will be made to the model running on your local system whenever this inference mode is selected.

- In the right-hand panel of the Chat UI, select Upload Documents Here. Click to upload or drag and drop the desired text files.

  - You may see a warning that the vector database is not ready yet. If so, wait a moment and try again.

- Once the files upload, the Toggle to Use Vector Database next to the text input box will turn on by default.

- Now query your documents! To use a different model, stop the server, make your selections, and restart the inference server.
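The hardware table above can be related to a rough back-of-the-envelope estimate of the memory needed for model weights alone. The sketch below assumes that "none" means 16-bit weights (2 bytes per parameter) and that 1 GB is 10^9 bytes. It ignores the KV cache, activations, and framework overhead, which is why the recommended vRAM figures are considerably larger than these numbers. The function name is hypothetical.

```python
# Approximate bytes per parameter for each quantization level.
# Assumption: "none" corresponds to 16-bit weights.
BYTES_PER_PARAM = {"none": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, quantization: str) -> float:
    """Estimate memory for the weights only, in GB (10^9 bytes).

    One billion parameters at one byte each is one GB, so the estimate is
    simply parameters (in billions) times bytes per parameter.
    """
    return params_billions * BYTES_PER_PARAM[quantization]


for level in ("int4", "int8", "none"):
    print(f"7B & {level}: ~{weight_memory_gb(7, level)} GB for weights")
```

For a 7B model this gives roughly 3.5 GB, 7 GB, and 14 GB respectively, which is consistent with the ordering of the tiers in the table.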

This tutorial assumes you already cloned this Hybrid RAG project to your AI Workbench. If not, please follow the beginning of the Quickstart Tutorial.

- Set up your NVIDIA Inference Microservice (NIM) to run self-hosted on another system of your choice. A playbook is available to help you get started. Remember the model name (if not the meta/llama3-8b-instruct default) and the IP address of this running microservice. Ports for NIMs are generally set to 8000 by default.

- Alternatively, you may set up any other third-party service that supports the OpenAI API Specification. One example is Ollama. Remember the model name, port, and IP address when you set this up.

- Select the green Open Chat button on the top right of the AI Workbench project window.

- Once the UI opens, click Set up RAG Backend. This triggers a one-time backend build which can take a few moments to initialize.

- Select the Self-hosted Microservice inference mode under Inference Settings > Inference Mode.

- Select the Remote tab in the right-hand settings panel. Input the IP address of the accessible system running the microservice, the port if different from the 8000 default for NIMs, and the model name to run if different from the meta/llama3-8b-instruct default.

- Now start chatting! Queries will be made to the microservice running on a remote system whenever this inference mode is selected.

- In the right-hand panel of the Chat UI, select Upload Documents Here. Click to upload or drag and drop the desired text files.

  - You may see a warning that the vector database is not ready yet. If so, wait a moment and try again.

- Once uploaded successfully, the Toggle to Use Vector Database should turn on by default next to your text input box.

- Now you may query your documents!
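Because the remote microservice follows the OpenAI API Specification, it can be useful to confirm it is reachable outside the Chat UI first. The sketch below only builds the HTTP request. It assumes the standard `/v1/chat/completions` path, plain HTTP, and no authentication header, and the helper name and `max_tokens` value are illustrative choices rather than anything the project prescribes.

```python
import json
import urllib.request


def build_chat_request(ip, prompt, port=8000, model="meta/llama3-8b-instruct"):
    """Build an OpenAI-style chat completion request for a self-hosted endpoint.

    Defaults mirror the NIM defaults mentioned above (port 8000, llama3-8b-instruct).
    """
    url = f"http://{ip}:{port}/v1/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,  # illustrative limit
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` sends it. In an OpenAI-style response, the reply text is found under `choices[0].message.content`. For another OpenAI-compatible server, supply its own port and model name.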

This tutorial assumes you already cloned this Hybrid RAG project to your AI Workbench. If not, please follow the beginning of the Quickstart Tutorial.

Here are some important PREREQUISITES:

- Your AI Workbench must be running with a DOCKER container runtime. Podman is unsupported.

- You must have access to the NeMo Inference Microservice (NIMs) General Availability Program.

- Shut down any other processes running locally on the GPU as these may result in memory issues when running the microservice locally.

Some additional configurations in AI Workbench are required to run this tutorial. Unlike the previous tutorials, these configs are not added to the project by default, so follow the instructions below closely to ensure a proper setup.

- If running, shut down the project environment under Environment > Stop Environment. This will ensure restarting the environment will incorporate all the below configurations.

- In AI Workbench, add the following entries under Environment > Secrets:

  - Your NGC API Key: This is used to authenticate when pulling the NIM container from NGC. Remember, you must be in the General Availability Program to access this container.

    - Name: NGC_CLI_API_KEY

    - Value: (Your NGC API Key)

    - Description: NGC API Key for NIM authentication

- Add and/or modify the following under Environment > Variables:

  - DOCKER_HOST: location of your docker socket, e.g. unix:///var/host-run/docker.sock

  - LOCAL_NIM_HOME: location of where your NIM files will be stored, for example /mnt/c/Users/<my-user> for Windows or /home/<my-user> for Linux

- Add the following under Environment > Mounts:

  - A Docker Socket Mount: This is a mount for the docker socket for the container to properly interact with the host Docker Engine.

    - Type: Host Mount

    - Target: /var/host-run

    - Source: /var/run

    - Description: Docker socket Host Mount

  - A Filesystem Mount: This is a mount to properly run and manage your LOCAL_NIM_HOME on the host from inside the project container for generating the model repo.

    - Type: Host Mount

    - Target: /mnt/host-home

    - Source: (Your LOCAL_NIM_HOME location), for example /mnt/c/Users/<my-user> for Windows or /home/<my-user> for Linux

    - Description: Host mount for LOCAL_NIM_HOME

- Rebuild the project if needed.

- Select the green Open Chat button on the top right of the AI Workbench project window.

- Once the UI opens, click Set up RAG Backend. This triggers a one-time backend build which can take a few moments to initialize.

- Select the Self-hosted Microservice inference mode under Inference Settings > Inference Mode.

- Select the Local sub-tab in the right-hand settings panel.

- Bring your NIM Container Image (the placeholder can be used for the default flow), and select Prefetch NIM. This one-time process can take a few moments to pull down the NIM container.

- Select Start Microservice. This may take a few moments to complete.

- Now, you can start chatting! Queries will be made to your microservice running on the local system whenever this inference mode is selected.

- In the right-hand panel of the Chat UI, select Upload Documents Here. Click to upload or drag and drop the desired text files.

  - You may see a warning that the vector database is not ready yet. If so, wait a moment and try again.

- Once uploaded successfully, the Toggle to Use Vector Database should turn on by default next to your text input box.

- Now you may query your documents!
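If the local microservice fails to start, one thing worth checking is that the DOCKER_HOST variable points inside the Docker socket mount target configured earlier. The following sketch is only an illustration of that consistency check. The helper names are hypothetical, and it only handles `unix://` style values like the example given above.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse


def socket_path_from_docker_host(docker_host: str) -> PurePosixPath:
    """Extract the socket file path from a unix:// style DOCKER_HOST value."""
    parsed = urlparse(docker_host)
    if parsed.scheme != "unix":
        raise ValueError(f"expected a unix:// DOCKER_HOST, got {docker_host!r}")
    return PurePosixPath(parsed.path)


def is_under_mount(docker_host: str, mount_target: str = "/var/host-run") -> bool:
    """Check that the socket path lives somewhere below the mount target."""
    path = socket_path_from_docker_host(docker_host)
    return PurePosixPath(mount_target) in path.parents
```

With the example values from this tutorial, `is_under_mount("unix:///var/host-run/docker.sock")` is true, whereas a value pointing at `/var/run/docker.sock` would not match the `/var/host-run` mount target inside the container.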

By default, you can customize the Gradio app using the JupyterLab container application. Alternatively, you may configure VSCode support.

- In AI Workbench, select the green dropdown from the top right and select Open JupyterLab.

- Go into the code/chatui/ folder and start editing the files.

- Save the files.

- To see your changes, stop the Chat UI and restart it.

- To version your changes, commit them in the Workbench project window and push to your GitHub repo.

In addition to modifying the Gradio frontend, you can also use JupyterLab or another IDE to customize other aspects of the project, e.g. custom chains, backend server, scripts, configs, etc.

This NVIDIA AI Workbench example project is under the Apache 2.0 License.

This project may download and install additional third-party open source software projects. Review the license terms of these open source projects before use. Third party components used as part of this project are subject to their separate legal notices or terms that accompany the components. You are responsible for confirming compliance with third-party component license terms and requirements.

Alternative AI tools for workbench-example-hybrid-rag

Similar Open Source Tools

QuestCameraKit

QuestCameraKit is a collection of template and reference projects demonstrating how to use Meta Quest’s new Passthrough Camera API (PCA) for advanced AR/VR vision, tracking, and shader effects. It includes samples like Color Picker, Object Detection with Unity Sentis, QR Code Tracking with ZXing, Frosted Glass Shader, OpenAI vision model, and WebRTC video streaming. The repository provides detailed instructions on how to run each sample and troubleshoot known issues. Users can explore various functionalities such as converting 3D points to 2D image pixels, detecting objects, tracking QR codes, applying custom shader effects, interacting with OpenAI's vision model, and streaming camera feed over WebRTC.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

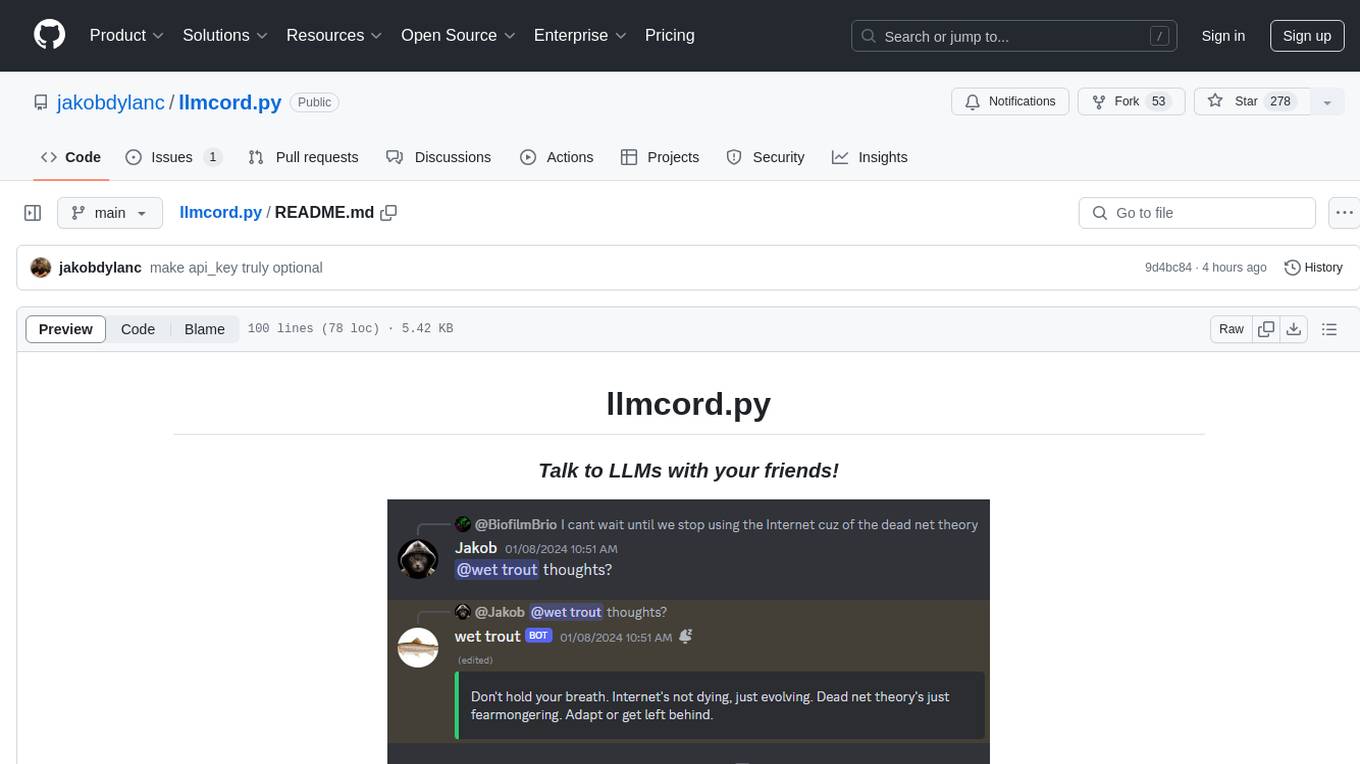

llmcord.py

llmcord.py is a tool that allows users to chat with Language Model Models (LLMs) directly in Discord. It supports various LLM providers, both remote and locally hosted, and offers features like reply-based chat system, choosing any LLM, support for image and text file attachments, customizable system prompt, private access via DM, user identity awareness, streamed responses, warning messages, efficient message data caching, and asynchronous operation. The tool is designed to facilitate seamless conversations with LLMs and enhance user experience on Discord.

llmcord

llmcord is a Discord bot that transforms Discord into a collaborative LLM frontend, allowing users to interact with various LLM models. It features a reply-based chat system that enables branching conversations, supports remote and local LLM models, allows image and text file attachments, offers customizable personality settings, and provides streamed responses. The bot is fully asynchronous, efficient in managing message data, and offers hot reloading config. With just one Python file and around 200 lines of code, llmcord provides a seamless experience for engaging with LLMs on Discord.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

upscayl

Upscayl is a free and open-source AI image upscaler that uses advanced AI algorithms to enlarge and enhance low-resolution images without losing quality. It is a cross-platform application built with the Linux-first philosophy, available on all major desktop operating systems. Upscayl utilizes Real-ESRGAN and Vulkan architecture for image enhancement, and its backend is fully open-source under the AGPLv3 license. It is important to note that a Vulkan compatible GPU is required for Upscayl to function effectively.

AIWritingCompanion

AIWritingCompanion is a lightweight and versatile browser extension designed to translate text within input fields. It offers universal compatibility, multiple activation methods, and support for various translation providers like Gemini, OpenAI, and WebAI to API. Users can install it via CRX file or Git, set API key, and use it for automatic translation or via shortcut. The tool is suitable for writers, translators, students, researchers, and bloggers. AI keywords include writing assistant, translation tool, browser extension, language translation, and text translator. Users can use it for tasks like translate text, assist in writing, simplify content, check language accuracy, and enhance communication.

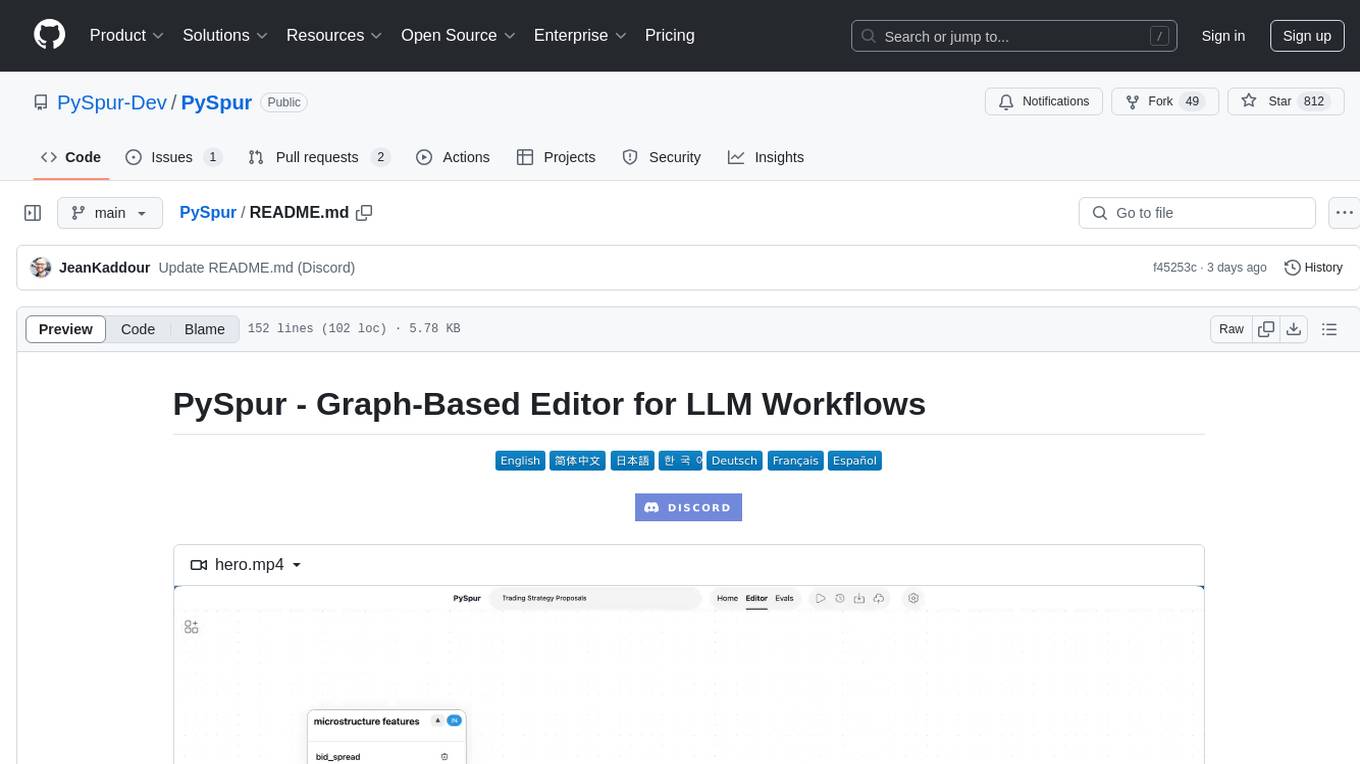

PySpur

PySpur is a graph-based editor designed for LLM workflows, offering modular building blocks for easy workflow creation and debugging at node level. It allows users to evaluate final performance and promises self-improvement features in the future. PySpur is easy-to-hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies, making it a versatile tool for workflow management in the field of AI and machine learning.

discord-llm-chatbot

llmcord.py enables collaborative LLM prompting in your Discord server. It works with practically any LLM, remote or locally hosted.

Features:

- Reply-based chat system: Just @ the bot to start a conversation and reply to continue. Build conversations with reply chains! You can build conversations together with your friends, "rewind" a conversation simply by replying to an older message, and @ the bot while replying to any message in your server to ask a question about it.

- Back-to-back messages from the same user are automatically chained together. Just reply to the latest one and the bot will see all of them.

- You can seamlessly move any conversation into a thread. Just create a thread from any message and @ the bot inside to continue.

- Choose any LLM: Supports remote models from OpenAI API, Mistral API, Anthropic API and many more thanks to LiteLLM. Or run a local model with ollama, oobabooga, Jan, LM Studio or any other OpenAI compatible API server.

- Supports image attachments when using a vision model.

- Customizable system prompt.

- DM for private access (no @ required).

- User identity aware (OpenAI API only).

- Streamed responses (turns green when complete, automatically splits into separate messages when too long, throttled to prevent Discord ratelimiting).

- Displays helpful user warnings when appropriate (like "Only using last 20 messages", "Max 5 images per message", etc.).

- Caches message data in a size-managed (no memory leaks) and per-message mutex-protected (no race conditions) global dictionary to maximize efficiency and minimize Discord API calls.

- Fully asynchronous.

- 1 Python file, ~200 lines of code.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

SurfSense

SurfSense is a tool designed to help users save and organize content from the internet into a personal Knowledge Graph. It allows users to capture web browsing sessions and webpage content using a Chrome extension, enabling easy retrieval and recall of saved information. SurfSense offers features like powerful search capabilities, natural language interaction with saved content, self-hosting options, and integration with GraphRAG for meaningful content relations. The tool eliminates the need for web scraping by directly reading data from the DOM, making it a convenient solution for managing online information.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

Stable-Diffusion-Android

Stable Diffusion AI is an easy-to-use app for generating images from text or other images. It allows communication with servers powered by various AI technologies like AI Horde, Hugging Face Inference API, OpenAI, StabilityAI, and LocalDiffusion. The app supports Txt2Img and Img2Img modes, positive and negative prompts, dynamic size and sampling methods, unique seed input, and batch image generation. Users can also inpaint images, select faces from gallery or camera, and export images. The app offers settings for server URL, SD Model selection, auto-saving images, and clearing cache.

PentestGPT

PentestGPT is a penetration testing tool empowered by ChatGPT, designed to automate the penetration testing process. It operates interactively to guide penetration testers in overall progress and specific operations. The tool supports solving easy to medium HackTheBox machines and other CTF challenges. Users can use PentestGPT to perform tasks like testing connections, using different reasoning models, discussing with the tool, searching on Google, and generating reports. It also supports local LLMs with custom parsers for advanced users.

AmigaGPT

AmigaGPT is a versatile ChatGPT client for AmigaOS 3.x, 4.1, and MorphOS. It brings the capabilities of OpenAI’s GPT to Amiga systems, enabling text generation, question answering, and creative exploration. AmigaGPT can generate images using DALL-E, supports speech output, and seamlessly integrates with AmigaOS. Users can customize the UI, choose fonts and colors, and enjoy a native user experience. The tool requires specific system requirements and offers features like state-of-the-art language models, AI image generation, speech capability, and UI customization.

For similar tasks

airflow

Apache Airflow (or simply Airflow) is a platform to programmatically author, schedule, and monitor workflows. When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative. Use Airflow to author workflows as directed acyclic graphs (DAGs) of tasks. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

Forza-Mods-AIO

Forza Mods AIO is a free and open-source tool that enhances the gaming experience in Forza Horizon 4 and 5. It offers a range of time-saving and quality-of-life features, making gameplay more enjoyable and efficient. The tool is designed to streamline various aspects of the game, improving user satisfaction and overall enjoyment.

openssa

OpenSSA is an open-source framework for creating efficient, domain-specific AI agents. It enables the development of Small Specialist Agents (SSAs) that solve complex problems in specific domains. SSAs tackle multi-step problems that require planning and reasoning beyond traditional language models. They apply OODA for deliberative reasoning (OODAR) and iterative, hierarchical task planning (HTP). This "System-2 Intelligence" breaks down complex tasks into manageable steps. SSAs make informed decisions based on domain-specific knowledge. With OpenSSA, users can create agents that process, generate, and reason about information, making them more effective and efficient in solving real-world challenges.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

llm_qlora

LLM_QLoRA is a repository for fine-tuning Large Language Models (LLMs) using QLoRA methodology. It provides scripts for training LLMs on custom datasets, pushing models to HuggingFace Hub, and performing inference. Additionally, it includes models trained on HuggingFace Hub, a blog post detailing the QLoRA fine-tuning process, and instructions for converting and quantizing models. The repository also addresses troubleshooting issues related to Python versions and dependencies.

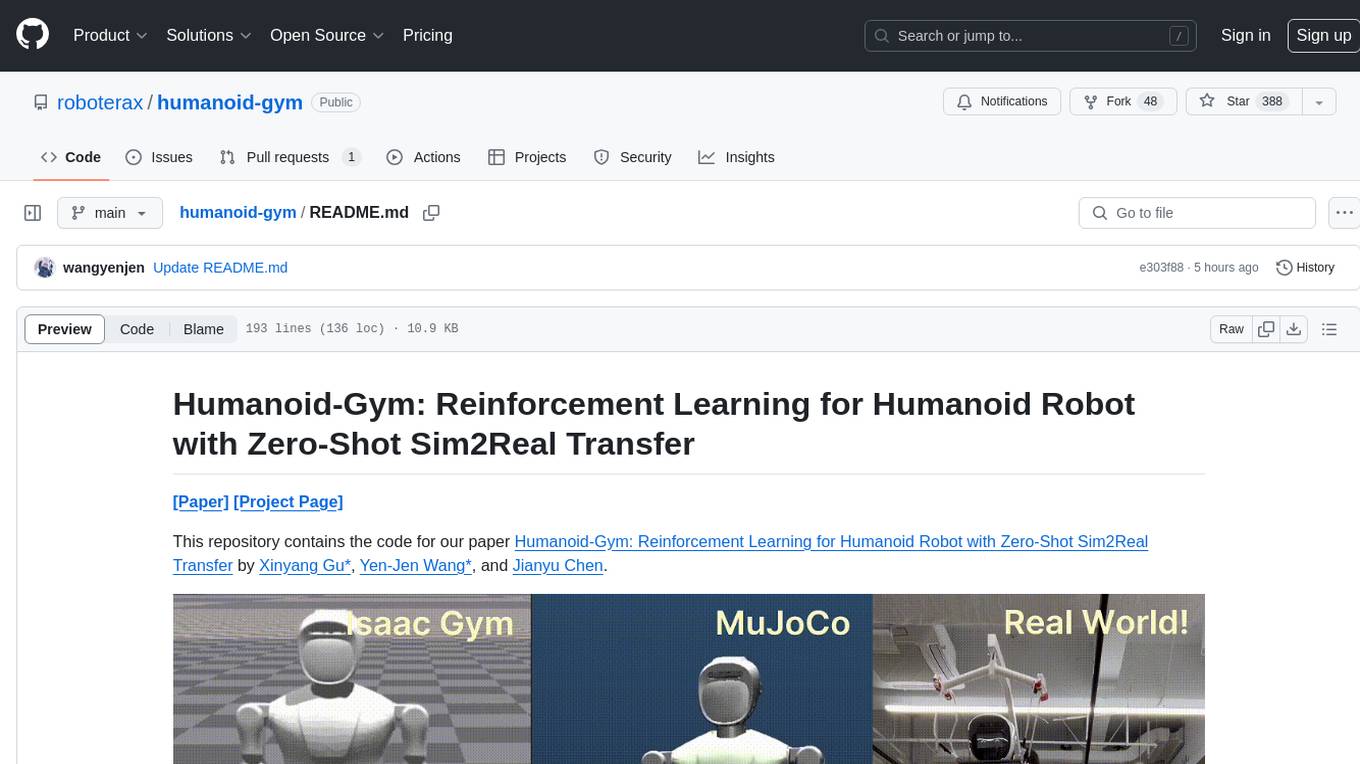

humanoid-gym

Humanoid-Gym is a reinforcement learning framework for training locomotion skills for humanoid robots, focusing on zero-shot transfer from simulation to real-world environments. It integrates a sim-to-sim framework from Isaac Gym to MuJoCo for verifying trained policies in different physical simulations. The codebase is verified with RobotEra's XBot-S and XBot-L humanoid robots. It offers comprehensive training guidelines, step-by-step configuration instructions, and execution scripts for easy deployment. The sim2sim support allows transferring trained policies to accurate simulated environments. Upcoming features include Denoising World Model Learning and Dexterous Hand Manipulation. Installation and usage guides are provided along with examples for training PPO policies and sim-to-sim transformations. The code structure includes environment and configuration files, with instructions on adding new environments. Troubleshooting tips are provided for common issues, along with a citation and acknowledgment section.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks so that operators can focus on more complicated and time-consuming tasks, and it can also identify security harms such as misuse (e.g., malware generation, jailbreaking) and privacy harms (e.g., identity theft). The goal is to give researchers a baseline of how well their model and entire inference pipeline are doing against different harm categories, and to let them compare that baseline to future iterations of their model. This gives them empirical data on how well their model is doing today and lets them detect any degradation of performance in future iterations.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.