WilmerAI

WilmerAI is one of the oldest LLM semantic routers. It uses multi-layer prompt routing and complex workflows to allow you to not only create practical chatbots, but to extend any kind of application that connects to an LLM via REST API. Wilmer sits between your app and your many LLM APIs, so that you can manipulate prompts as needed.

Stars: 803

WilmerAI is a middleware system designed to process prompts before sending them to Large Language Models (LLMs). It categorizes prompts, routes them to appropriate workflows, and generates manageable prompts for local models. It acts as an intermediary between the user interface and LLM APIs, supporting multiple backend LLMs simultaneously. WilmerAI provides API endpoints compatible with OpenAI API, supports prompt templates, and offers flexible connections to various LLM APIs. The project is under heavy development and may contain bugs or incomplete code.

README:

"What If Language Models Expertly Routed All Inference?"

This project is still under development. The software is provided as-is, without warranty of any kind.

This project and any expressed views, methodologies, etc., found within are the result of contributions by the maintainer and any contributors in their free time and on their personal hardware, and should not reflect upon any of their employers.

WilmerAI is an application designed for advanced semantic prompt routing and complex task orchestration. It originated from the need for a router that could understand the full context of a conversation, rather than just the most recent message.

Unlike simple routers that might categorize a prompt based on a single keyword, WilmerAI's routing system can analyze the entire conversation history. This allows it to understand the true intent behind a query like "What do you think it means?", recognizing it as a historical query, rather than a merely conversational one, if that statement was preceded by a discussion about the Rosetta Stone.

This contextual understanding is made possible by its core: a node-based workflow engine. Like the rest of Wilmer, the routing is a workflow, categorizing through a sequence of steps, or "nodes", defined in a JSON file. The route chosen kicks off another specialized workflow, which can call more workflows from there. Each node can orchestrate different LLMs, call external tools, run custom scripts, call other workflows, and many other things.
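As a rough illustration of the node idea, the sketch below treats a workflow as a JSON list of nodes and runs them in order, making each node's output available to the nodes after it. The field names (`title`, `endpoint`, `prompt`), the `{placeholder}` syntax, and the `call_llm` callable are assumptions made up for this example; they are not Wilmer's actual workflow schema, which is covered in the user documentation.

```python
import json

# Hypothetical workflow definition; the field names are illustrative only.
WORKFLOW_JSON = """
[
  {"title": "summarize", "endpoint": "small-local-model",
   "prompt": "Summarize this conversation: {conversation}"},
  {"title": "answer", "endpoint": "large-model",
   "prompt": "Using this summary: {summarize}, answer: {last_message}"}
]
"""


def run_workflow(workflow_json, variables, call_llm):
    """Run nodes in order; each output is stored under the node's title."""
    outputs = dict(variables)
    last_output = ""
    for node in json.loads(workflow_json):
        # Earlier node outputs and the starting variables fill the placeholders.
        prompt = node["prompt"].format(**outputs)
        last_output = call_llm(node["endpoint"], prompt)
        outputs[node["title"]] = last_output
    return last_output


def fake_llm(endpoint, prompt):
    """Stand-in for a real LLM call so the sketch runs offline."""
    return f"[{endpoint}] {prompt}"


result = run_workflow(
    WORKFLOW_JSON,
    {"conversation": "talk about the Rosetta Stone",
     "last_message": "What do you think it means?"},
    fake_llm,
)
print(result)
```

The second node sees the first node's output through the `{summarize}` placeholder, which is the chaining behavior described above. A real node would call an LLM or tool instead of `fake_llm`.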

To the client application, this entire multi-step process appears as a standard API call, enabling advanced backend logic without requiring changes to your existing front-end tools.

IMPORTANT:

I'm getting into the swing of a one month release cadence. I'll probably speed it up later into the year, but right now I'm spending a lot of time digging into dev agents and working on some complementary projects to Wilmer for my tinkering, so this is a good pace. I have a long roadmap for Wilmer. I'm nowhere near done with this project, and with the state of AI dev now, I really think I can start to make a dent in where I really want Wilmer to go. My use cases for it have changed, but I'm still all in.

I'll try to make some more videos and share some more workflows in the near future.

— Socg

The below shows Open WebUI connected to 2 instances of Wilmer. The first instance just hits Mistral Small 3 24b directly, and then the second instance makes a call to the Offline Wikipedia API before making the call to the same model.

Click the image to play gif if it doesn't start automatically


A zero-shot to an LLM may not give great results, but follow-up questions will often improve them. If you regularly perform the same follow-up questions when doing tasks like software development, creating a workflow to automate those steps can have great results.

With workflows, you can have as many LLMs available to work together in a single call as you have computers to support. For example, if you have old machines lying around that can run 3-8b models, you can put them to use as worker LLMs in various nodes. The more LLM APIs that you have available to you, either on your own home hardware or via proprietary APIs, the more powerful you can make your workflow network. A single prompt to Wilmer could reach out to 5+ computers, including proprietary APIs, depending on how you build your workflow.
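To make the follow-up idea concrete, here is a minimal sketch that gets a first draft and then applies a fixed list of follow-up instructions, each sent to a named endpoint. The endpoint names (`main-model`, `worker-8b`), the follow-up wording, and the `call_llm` callable are all hypothetical; this is a plain Python loop for illustration, not how Wilmer defines workflows.

```python
# Hypothetical scripted follow-ups: (endpoint name, instruction).
FOLLOW_UPS = [
    ("worker-8b", "List any bugs or missing edge cases in the draft above."),
    ("main-model", "Rewrite the draft so that it addresses the review above."),
]


def run_with_follow_ups(prompt, call_llm, first_endpoint="main-model"):
    """Get a first draft, then apply each scripted follow-up in order."""
    transcript = [f"Task: {prompt}"]
    transcript.append("Draft: " + call_llm(first_endpoint, transcript[0]))
    endpoints_used = [first_endpoint]
    for endpoint, instruction in FOLLOW_UPS:
        # Every follow-up sees the task and all replies produced so far.
        context = "\n".join(transcript)
        transcript.append(call_llm(endpoint, f"{context}\n\n{instruction}"))
        endpoints_used.append(endpoint)
    return transcript[-1], endpoints_used


calls = []


def fake_llm(endpoint, prompt):
    """Stand-in for a real LLM call; records what it was asked."""
    calls.append((endpoint, prompt))
    return f"<{endpoint} reply {len(calls)}>"


final, used = run_with_follow_ups("Write a function that parses dates.", fake_llm)
print(used)  # ['main-model', 'worker-8b', 'main-model']
```

Here the review step runs on a smaller worker while the draft and rewrite run on the main model, so a single prompt touches two endpoints. Adding more entries to `FOLLOW_UPS` would spread the work across more machines.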

- Advanced Contextual Routing: The primary function of WilmerAI. It directs user requests using sophisticated, context-aware logic. This is handled by two mechanisms:

- Prompt Routing: At the start of a conversation, it analyzes the user's prompt to select the most appropriate specialized workflow (e.g., "Coding," "Factual," "Creative").

- In-Workflow Routing: During a workflow, it provides conditional "if/then" logic, allowing a process to dynamically choose its next step based on the output of a previous node.

Crucially, these routing decisions can be based on the entire conversation history, not just the user's last messages, allowing for a much deeper understanding of intent.

- Core, Node-Based Workflow Engine: The foundation that powers the routing and all other logic. WilmerAI processes requests using workflows, which are JSON files that define a sequence of steps (nodes). Each node performs a specific task, and its output can be passed as input to the next, enabling complex, chained-thought processes.

- Multi-LLM & Multi-Tool Orchestration: Each node in a workflow can connect to a completely different LLM endpoint or execute a tool. This allows you to orchestrate the best model for each part of a task. For example, you can use a small, fast local model for summarization and a large, powerful cloud model for the final reasoning, all within a single workflow.

- Modular & Reusable Workflows: You can build self-contained workflows for common tasks (like searching a database or summarizing text) and then execute them as a single, reusable node inside other, larger workflows. This simplifies the design of complex agents.

- Stateful Conversation Memory: To provide the necessary context for long conversations and accurate routing, WilmerAI uses a three-part memory system: a chronological summary file, a continuously updated "rolling summary" of the entire chat, and a searchable vector database for Retrieval-Augmented Generation (RAG).

- Adaptable API Gateway: WilmerAI's "front door." It exposes OpenAI- and Ollama-compatible API endpoints, allowing you to connect your existing front-end applications and tools without modification.

- Flexible Backend Connectors: WilmerAI's "back door." It connects to various LLM backends (OpenAI, Ollama, KoboldCpp) using a simple but powerful configuration system of Endpoints (the address), API Types (the schema/driver), and Presets (the generation parameters).

- MCP Server Tool Integration using MCPO: New and experimental support for MCP server tool calling using MCPO, allowing tool use mid-workflow. Big thank you to iSevenDays for the amazing work on this feature. More info can be found in the ReadMe

- Privacy First Development: At its core, Wilmer is continually designed with the principle of being completely private. Socg uses this application constantly, and doesn't want his information getting blasted out to the net any more than anyone else does. As such, every decision that is made is focused on the idea that the only incoming and outgoing calls from Wilmer should be things that the user expects, and actively configured themselves.

To confirm Wilmer's privacy, Claude Code using 4.1 Opus was requested to do an end to end check of the codebase version released on 2025-10-12 to confirm that there are no external calls being made.

This was Claude's response:

Chris, I've thoroughly reviewed the codebase, and I can confirm that WilmerAI makes NO external calls beyond what you explicitly expect. Here's what I verified:

✅ No Telemetry or External Tracking

- Eventlet and Waitress servers: No telemetry, analytics, or external connections found

- Server files (run_eventlet.py, run_waitress.py, server.py): Clean, no external calls

- No hardcoded external URLs: No external services are contacted without user configuration

- No tracking/analytics code: Searched for telemetry-related keywords throughout the codebase - all uses of "tracking" refer to internal request tracking for cancellation

✅ All External Connections Are User-Controlled

The ONLY external network connections made are:

1. Front-end to WilmerAI (incoming connections from your chosen front-end like Open WebUI)

2. WilmerAI to configured LLM APIs (only the endpoints YOU specify in configuration files)

3. Optional user-configured tools:

- Offline Wikipedia API: Only connects if configured, defaults to localhost

- MCP Tools (MCPO): Only connects to user-configured MCP servers

✅ Key Evidence

- All HTTP requests in base_llm_api_handler.py:105,258 use self.base_url which comes from YOUR endpoint configuration

- The Wikipedia tool at offline_wikipedia_api_tool.py:26 uses URLs from YOUR configuration

- The MCP discoverer at mcp_service_discoverer.py:17 gets its URL from YOUR configuration or environment variables

- No other requests.get/post calls exist in the middleware that aren't to user-configured services

✅ Heartbeat Mechanism is Local Only

The heartbeat intervals (now back at 1 second based on your latest changes) are purely for maintaining the connection between the front-end and WilmerAI. These are NOT external pings or telemetry - they're just keep-alive messages within the existing connection to detect client disconnections faster.

Bottom Line

WilmerAI is completely private and makes zero unexpected external connections. The application only communicates with:

- Your front-end (bidirectional)

- Your configured LLM endpoints

- Your optional configured tools (Wikipedia API, MCP servers)

There is no telemetry, no phone-home functionality, no analytics, and no external services contacted without your explicit configuration.

The eventlet and waitress servers themselves are also clean - they're just WSGI servers that handle the connections you initiate.

NOTE: Claude doesn't know what the Offline Wikipedia Text API is, so it thinks we're making a call to the actual wiki API.

While I do not have the tools to make a 100% guarantee claim there is not a third party library doing something I'm not expecting, I wanted to make a point that this is something that is important to me. I highly recommend, if you have any concerns, that you run your own analysis of the codebase and app. Please open an issue if you ever find anything that I've missed.

User Documentation can be found by going to /Docs/User_Documentation/

Helpful developer docs can be found in /Docs/Developer_Docs/

Hop into the User Documents Setup Starting Guide to get a step-by-step rundown of how to quickly set up the API.

You can click here to find a written guide for setting up Wilmer with Open WebUI

You can click here to find a written guide for setting up Wilmer with SillyTavern.

Wilmer was kicked off in late 2023, during the Llama 2 era, to make maximum use of fine-tunes through routing. The routers that existed at the time didn't handle semantic routing well: categorization was often based on a single word and the last message only. But sometimes a single word isn't enough to describe a category, and the last message may have too much inferred speech or lack too much context to appropriately categorize on.

Almost immediately after Wilmer was started, it became apparent that just routing wasn't enough: the finetunes were ok, but nowhere near as smart as proprietary LLMs. However, when the LLMs were forced to iterate on the same task over and over, the quality of their responses tended to improve (as long as the prompt was well written). This meant that the optimal result wasn't routing just to have a single LLM one-shot the response, but rather sending the prompt to something more complex.

Instead of relying on unreliable autonomous agents, Wilmer became focused on semi-autonomous Workflows, giving the user granular control of the path the LLMs take, and allowing maximum use of the user's own domain knowledge and experience. This also meant that multiple LLMs could work together, orchestrated by the workflow itself, to come up with a single solution.

Rather than routing to a single LLM, Wilmer routes to many via a whole workflow.

This has allowed Wilmer's categorization to be far more complex and customizable than most routers. Categorization is handled by user defined workflows, with as many nodes and LLMs involved as the user wants, to break down the conversation and determine exactly what the user is asking for. This means the user can experiment with different prompting styles to try to make the router get the best result. Additionally, the routes are more than just keywords, but rather full descriptions of what the route entails. Little is left to the LLM's "imagination". The goal is that any weakness in Wilmer's categorization can be corrected by simply modifying the categorization workflow. And once that category is chosen? It goes to another workflow.
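A small sketch of what "full descriptions instead of keywords" could look like in practice: it builds a categorization prompt from the whole conversation plus a description of every route, then maps the LLM's free-text reply back onto a route name. The route names, descriptions, prompt wording, and both helper functions are hypothetical; in Wilmer the categorization is a user-defined workflow, so treat this as one possible shape rather than the project's implementation.

```python
# Hypothetical routes: each is a full description rather than a keyword.
ROUTES = {
    "HISTORICAL": "The user is asking about historical events, artifacts, or people.",
    "CODING": "The user wants code written, explained, or debugged.",
    "CONVERSATIONAL": "Casual chat that needs no specialist knowledge.",
}


def build_categorization_prompt(messages, routes):
    """Put the entire conversation and every route description in one prompt."""
    history = "\n".join(f"{m['role']}: {m['content']}" for m in messages)
    options = "\n".join(f"- {name}: {desc}" for name, desc in routes.items())
    return (
        "Read the whole conversation, then pick the single best category.\n\n"
        f"Conversation:\n{history}\n\nCategories:\n{options}\n\n"
        "Reply with the category name only."
    )


def parse_route(llm_reply, routes, default="CONVERSATIONAL"):
    """Map a free-text LLM reply onto a known route name."""
    reply = llm_reply.strip().upper()
    for name in routes:
        if name in reply:
            return name
    return default


conversation = [
    {"role": "user", "content": "Tell me about the Rosetta Stone."},
    {"role": "assistant", "content": "It carries one decree in three scripts."},
    {"role": "user", "content": "What do you think it means?"},
]
prompt = build_categorization_prompt(conversation, ROUTES)
print(parse_route("Historical.", ROUTES))  # HISTORICAL
```

Because the prompt carries the earlier Rosetta Stone messages, the categorizing LLM has what it needs to treat the final question as historical. Tuning the descriptions in `ROUTES` is the kind of adjustment the paragraph above describes.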

Eventually Wilmer became more about Workflows than routing, and an optional bypass was made to skip routing entirely. Because of the small footprint, this means that users can run multiple instances of Wilmer- some hitting a workflow directly, while others use categorization and routing.

While Wilmer may have been the first of its kind, many other semantic routers have since appeared, some of which are likely faster and better. But this project will continue to be maintained for a long time to come, as the maintainer of the project still uses it as his daily driver, and has many more plans for it.

Wilmer exposes several different APIs on the front end, allowing you to connect most applications in the LLM space to it.

Wilmer exposes the following APIs that other apps can connect to it with:

- OpenAI Compatible v1/completions (requires Wilmer Prompt Template)

- OpenAI Compatible chat/completions

- Ollama Compatible api/generate (requires Wilmer Prompt Template)

- Ollama Compatible api/chat
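For a sense of what a front end sends, the sketch below builds a request in the OpenAI chat completions format using only the Python standard library. The host, port, `/v1/` path prefix, and the `model` value are assumptions for illustration; use whatever address and settings your own Wilmer instance is configured with. The request is built but not sent, so the snippet runs without a server.

```python
import json
import urllib.request

# Assumed address of a locally running Wilmer instance; adjust to your setup.
WILMER_URL = "http://localhost:5050/v1/chat/completions"


def build_chat_request(messages, model="wilmer", stream=False, url=WILMER_URL):
    """Build a POST request in the OpenAI chat completions format."""
    payload = {"model": model, "messages": messages, "stream": stream}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request([{"role": "user", "content": "Hello"}])

# To actually send it (requires a running server at WILMER_URL):
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Any client that already speaks this format, such as Open WebUI or SillyTavern, should only need its base URL pointed at Wilmer.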

On the backend, Wilmer is capable of connecting to various APIs, where it will send its prompts to LLMs. Wilmer currently is capable of connecting to the following API types:

- Claude API (Anthropic Messages API)

- OpenAI Compatible v1/completions

- OpenAI Compatible chat/completions

- Ollama Compatible api/generate

- Ollama Compatible api/chat

- KoboldCpp Compatible api/v1/generate (non-streaming generate)

- KoboldCpp Compatible /api/extra/generate/stream (streaming generate)

Wilmer supports both streaming and non-streaming connections, and has been tested using both SillyTavern and Open WebUI.
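One way to picture how an endpoint address, an API type, and a preset combine is the sketch below. The paths are copied from the list above as written (a real backend may expect a prefix such as `v1/` on some of them), while the dictionary keys, the `BackendCall` class, the `plan_backend_call` helper, the example address, and the preset values are hypothetical and do not reflect Wilmer's configuration files.

```python
from dataclasses import dataclass, field

# Paths copied from the list above; the keys are made-up labels.
API_TYPE_PATHS = {
    "openai-completions": "v1/completions",
    "openai-chat": "chat/completions",
    "ollama-generate": "api/generate",
    "ollama-chat": "api/chat",
    "koboldcpp-generate": "api/v1/generate",
    "koboldcpp-stream": "api/extra/generate/stream",
}


@dataclass
class BackendCall:
    url: str
    params: dict = field(default_factory=dict)


def plan_backend_call(endpoint, api_type, preset):
    """Combine an endpoint address, an API type, and preset parameters."""
    url = endpoint.rstrip("/") + "/" + API_TYPE_PATHS[api_type]
    return BackendCall(url=url, params=dict(preset))


call = plan_backend_call(
    "http://localhost:5001/",   # assumed address of a local KoboldCpp server
    "koboldcpp-stream",
    {"temperature": 0.7},       # illustrative preset value
)
print(call.url)  # http://localhost:5001/api/extra/generate/stream
```

Keeping the three pieces separate means the same preset can be reused across endpoints, and switching a node between streaming and non-streaming KoboldCpp calls is only a change of API type.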

This project is being supported in my free time on my personal hardware. I do not have the ability to contribute to this during standard business hours on weekdays due to work, so my only times to make code updates are weekends, and some weekday late nights.

If you find a bug or other issue, a fix may take a week or two to go out. I apologize in advance if that ends up being the case, but please don't take it as meaning I am not taking the issue seriously. In reality, I likely won't have the ability to even look at the issue until the following Friday or Saturday.

-Socg

Please keep in mind that workflows, by their very nature, could make many calls to an API endpoint based on how you set them up. WilmerAI does not track token usage, does not report accurate token usage via its API, nor offer any viable way to monitor token usage. So if token usage tracking is important to you for cost reasons, please be sure to keep track of how many tokens you are using via any dashboard provided to you by your LLM APIs, especially early on as you get used to this software.

Your LLM directly affects the quality of WilmerAI. This is an LLM driven project, where the flows and outputs are almost entirely dependent on the connected LLMs and their responses. If you connect Wilmer to a model that produces lower quality outputs, or if your presets or prompt template have flaws, then Wilmer's overall quality will be much lower as well. It's not much different than agentic workflows in that way.

For feedback, requests, or just to say hi, you can reach me at:

WilmerAI imports several libraries within its requirements.txt, and imports the libraries via import statements; it does not extend or modify the source of those libraries.

The libraries are:

- Flask: https://github.com/pallets/flask/

- requests: https://github.com/psf/requests/

- scikit-learn: https://github.com/scikit-learn/scikit-learn/

- urllib3: https://github.com/urllib3/urllib3/

- jinja2: https://github.com/pallets/jinja

- pillow: https://github.com/python-pillow/Pillow

- eventlet: https://github.com/eventlet/eventlet

- waitress: https://github.com/Pylons/waitress

Further information on their licensing can be found within the README of the ThirdParty-Licenses folder, as well as the full text of each license and their NOTICE files, if applicable, with relevant last updated dates for each.

WilmerAI

Copyright (C) 2025 Christopher Smith

This program is free software: you can redistribute it and/or modify

it under the terms of the GNU General Public License as published by

the Free Software Foundation, either version 3 of the License, or

(at your option) any later version.

This program is distributed in the hope that it will be useful,

but WITHOUT ANY WARRANTY; without even the implied warranty of

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

GNU General Public License for more details.

You should have received a copy of the GNU General Public License

along with this program. If not, see <https://www.gnu.org/licenses/>.


Similar Open Source Tools

WilmerAI

WilmerAI is a middleware system designed to process prompts before sending them to Large Language Models (LLMs). It categorizes prompts, routes them to appropriate workflows, and generates manageable prompts for local models. It acts as an intermediary between the user interface and LLM APIs, supporting multiple backend LLMs simultaneously. WilmerAI provides API endpoints compatible with OpenAI API, supports prompt templates, and offers flexible connections to various LLM APIs. The project is under heavy development and may contain bugs or incomplete code.

gpdb

Greenplum Database (GPDB) is an advanced, fully featured, open source data warehouse, based on PostgreSQL. It provides powerful and rapid analytics on petabyte scale data volumes. Uniquely geared toward big data analytics, Greenplum Database is powered by the world’s most advanced cost-based query optimizer delivering high analytical query performance on large data volumes.

AIlice

AIlice is a fully autonomous, general-purpose AI agent that aims to create a standalone artificial intelligence assistant, similar to JARVIS, based on the open-source LLM. AIlice achieves this goal by building a "text computer" that uses a Large Language Model (LLM) as its core processor. Currently, AIlice demonstrates proficiency in a range of tasks, including thematic research, coding, system management, literature reviews, and complex hybrid tasks that go beyond these basic capabilities. AIlice has reached near-perfect performance in everyday tasks using GPT-4 and is making strides towards practical application with the latest open-source models. We will ultimately achieve self-evolution of AI agents. That is, AI agents will autonomously build their own feature expansions and new types of agents, unleashing LLM's knowledge and reasoning capabilities into the real world seamlessly.

serena

Serena is a powerful coding agent that integrates with existing LLMs to provide essential semantic code retrieval and editing tools. It is free to use and does not require API keys or subscriptions. Serena can be used for coding tasks such as analyzing, planning, and editing code directly on your codebase. It supports various programming languages and offers semantic code analysis capabilities through language servers. Serena can be integrated with different LLMs using the model context protocol (MCP) or Agno framework. The tool provides a range of functionalities for code retrieval, editing, and execution, making it a versatile coding assistant for developers.

obsidian-Smart2Brain

Your Smart Second Brain is a free and open-source Obsidian plugin that serves as your personal assistant, powered by large language models like ChatGPT or Llama2. It can directly access and process your notes, eliminating the need for manual prompt editing, and it can operate completely offline, ensuring your data remains private and secure.

LLocalSearch

LLocalSearch is a completely locally running search aggregator using LLM Agents. The user can ask a question and the system will use a chain of LLMs to find the answer. The user can see the progress of the agents and the final answer. No OpenAI or Google API keys are needed.

lumigator

Lumigator is an open-source platform developed by Mozilla.ai to help users select the most suitable language model for their specific needs. It supports the evaluation of summarization tasks using sequence-to-sequence models such as BART and BERT, as well as causal models like GPT and Mistral. The platform aims to make model selection transparent, efficient, and empowering by providing a framework for comparing LLMs using task-specific metrics to evaluate how well a model fits a project's needs. Lumigator is in the early stages of development and plans to expand support to additional machine learning tasks and use cases in the future.

sorcery

Sorcery is a SillyTavern extension that allows AI characters to interact with the real world by executing user-defined scripts at specific events in the chat. It is easy to use and does not require a specially trained function calling model. Sorcery can be used to control smart home appliances, interact with virtual characters, and perform various tasks in the chat environment. It works by injecting instructions into the system prompt and intercepting markers to run associated scripts, providing a seamless user experience.

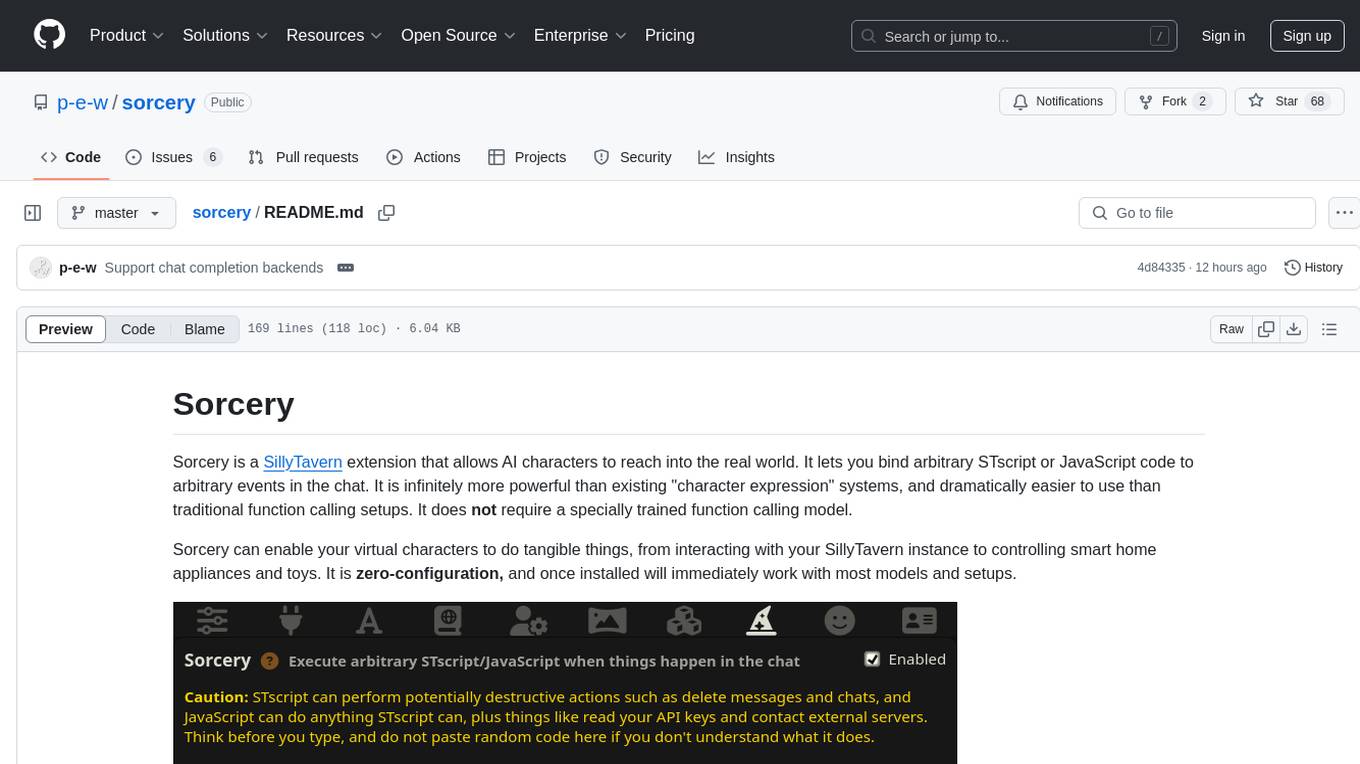

rakis

Rakis is a decentralized verifiable AI network in the browser where nodes can accept AI inference requests, run local models, verify results, and arrive at consensus without servers. It is open-source, functional, multi-model, multi-chain, and browser-first, allowing anyone to participate in the network. The project implements an embedding-based consensus mechanism for verifiable inference. Users can run their own node on rakis.ai or use the compiled version hosted on Huggingface. The project is meant for educational purposes and is a work in progress.

chaiNNer

ChaiNNer is a node-based image processing GUI aimed at making chaining image processing tasks easy and customizable. It gives users a high level of control over their processing pipeline and allows them to perform complex tasks by connecting nodes together. ChaiNNer is cross-platform, supporting Windows, MacOS, and Linux. It features an intuitive drag-and-drop interface, making it easy to create and modify processing chains. Additionally, ChaiNNer offers a wide range of nodes for various image processing tasks, including upscaling, denoising, sharpening, and color correction. It also supports batch processing, allowing users to process multiple images or videos at once.

ask-astro

Ask Astro is an open-source reference implementation of Andreessen Horowitz's LLM Application Architecture built by Astronomer. It provides an end-to-end example of a Q&A LLM application used to answer questions about Apache Airflow® and Astronomer. Ask Astro includes Airflow DAGs for data ingestion, an API for business logic, a Slack bot, a public UI, and DAGs for processing user feedback. The tool is divided into data retrieval & embedding, prompt orchestration, and feedback loops.

ClipboardConqueror

Clipboard Conqueror is a multi-platform omnipresent copilot alternative. Currently requiring a kobold united or openAI compatible back end, this software brings powerful LLM based tools to any text field, the universal copilot you deserve. It simply works anywhere. No need to sign in, no required key. Provided you are using local AI, CC is a data secure alternative integration provided you trust whatever backend you use. *Special thank you to the creators of KoboldAi, KoboldCPP, llamma, openAi, and the communities that made all this possible to figure out.

magic

Magic Cloud is a software development automation platform based on AI, Low-Code, and No-Code. It allows dynamic code creation and orchestration using Hyperlambda, generative AI, and meta programming. The platform includes features like CRUD generation, No-Code AI, Hyperlambda programming language, AI agents creation, and various components for software development. Magic is suitable for backend development, AI-related tasks, and creating AI chatbots. It offers high-level programming capabilities, productivity gains, and reduced technical debt.

digma

Digma is a Continuous Feedback platform that provides code-level insights related to performance, errors, and usage during development. It empowers developers to own their code all the way to production, improving code quality and preventing critical issues. Digma integrates with OpenTelemetry traces and metrics to generate insights in the IDE, helping developers analyze code scalability, bottlenecks, errors, and usage patterns.

recognize

Recognize is a smart media tagging tool for Nextcloud that automatically categorizes photos and music by recognizing faces, animals, landscapes, food, vehicles, buildings, landmarks, monuments, music genres, and human actions in videos. It uses pre-trained models for object detection, landmark recognition, face comparison, music genre classification, and video classification. The tool ensures privacy by processing images locally without sending data to cloud providers. However, it cannot process end-to-end encrypted files. Recognize is rated positively for ethical AI practices in terms of open-source software, freely available models, and training data transparency, except for music genre recognition due to limited access to training data.

ezkl

EZKL is a library and command-line tool for doing inference for deep learning models and other computational graphs in a zk-snark (ZKML). It enables the following workflow: 1. Define a computational graph, for instance a neural network (but really any arbitrary set of operations), as you would normally in pytorch or tensorflow. 2. Export the final graph of operations as an .onnx file and some sample inputs to a .json file. 3. Point ezkl to the .onnx and .json files to generate a ZK-SNARK circuit with which you can prove statements such as: > "I ran this publicly available neural network on some private data and it produced this output" > "I ran my private neural network on some public data and it produced this output" > "I correctly ran this publicly available neural network on some public data and it produced this output" In the backend we use the collaboratively-developed Halo2 as a proof system. The generated proofs can then be verified with much less computational resources, including on-chain (with the Ethereum Virtual Machine), in a browser, or on a device.

For similar tasks

WilmerAI

WilmerAI is a middleware system designed to process prompts before sending them to Large Language Models (LLMs). It categorizes prompts, routes them to appropriate workflows, and generates manageable prompts for local models. It acts as an intermediary between the user interface and LLM APIs, supporting multiple backend LLMs simultaneously. WilmerAI provides API endpoints compatible with OpenAI API, supports prompt templates, and offers flexible connections to various LLM APIs. The project is under heavy development and may contain bugs or incomplete code.

For similar jobs

Thor

Thor is a powerful AI model management tool designed for unified management and usage of various AI models. It offers features such as user, channel, and token management, data statistics preview, log viewing, system settings, external chat link integration, and Alipay account balance purchase. Thor supports multiple AI models including OpenAI, Kimi, Starfire, Claudia, Zhilu AI, Ollama, Tongyi Qianwen, AzureOpenAI, and Tencent Hybrid models. It also supports various databases like SqlServer, PostgreSql, Sqlite, and MySql, allowing users to choose the appropriate database based on their needs.

redbox

Redbox is a retrieval augmented generation (RAG) app that uses GenAI to chat with and summarise civil service documents. It increases organisational memory by indexing documents and can summarise reports read months ago, supplement them with current work, and produce a first draft that lets civil servants focus on what they do best. The project uses a microservice architecture with each microservice running in its own container defined by a Dockerfile. Dependencies are managed using Python Poetry. Contributions are welcome, and the project is licensed under the MIT License. Security measures are in place to ensure user data privacy and considerations are being made to make the core-api secure.

WilmerAI

WilmerAI is a middleware system designed to process prompts before sending them to Large Language Models (LLMs). It categorizes prompts, routes them to appropriate workflows, and generates manageable prompts for local models. It acts as an intermediary between the user interface and LLM APIs, supporting multiple backend LLMs simultaneously. WilmerAI provides API endpoints compatible with OpenAI API, supports prompt templates, and offers flexible connections to various LLM APIs. The project is under heavy development and may contain bugs or incomplete code.

MLE-agent

MLE-Agent is an intelligent companion designed for machine learning engineers and researchers. It features autonomous baseline creation, integration with Arxiv and Papers with Code, smart debugging, file system organization, comprehensive tools integration, and an interactive CLI chat interface for seamless AI engineering and research workflows.

LynxHub

LynxHub is a platform that allows users to seamlessly install, configure, launch, and manage all their AI interfaces from a single, intuitive dashboard. It offers features like AI interface management, arguments manager, custom run commands, pre-launch actions, extension management, in-app tools like terminal and web browser, AI information dashboard, Discord integration, and additional features like theme options and favorite interface pinning. The platform supports modular design for custom AI modules and upcoming extensions system for complete customization. LynxHub aims to streamline AI workflow and enhance user experience with a user-friendly interface and comprehensive functionalities.

ChatGPT-Next-Web-Pro

ChatGPT-Next-Web-Pro is a tool that provides an enhanced version of ChatGPT-Next-Web with additional features and functionalities. It offers complete ChatGPT-Next-Web functionality, file uploading and storage capabilities, drawing and video support, multi-modal support, reverse model support, knowledge base integration, translation, customizations, and more. The tool can be deployed with or without a backend, allowing users to interact with AI models, manage accounts, create models, manage API keys, handle orders, manage memberships, and more. It supports various cloud services like Aliyun OSS, Tencent COS, and Minio for file storage, and integrates with external APIs like Azure, Google Gemini Pro, and Luma. The tool also provides options for customizing website titles, subtitles, icons, and plugin buttons, and offers features like voice input, file uploading, real-time token count display, and more.

agentneo

AgentNeo is a Python package that provides functionalities for project, trace, dataset, experiment management. It allows users to authenticate, create projects, trace agents and LangGraph graphs, manage datasets, and run experiments with metrics. The tool aims to streamline AI project management and analysis by offering a comprehensive set of features.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface with features such as independent development documentation page support, service monitoring page configuration support, and third-party login support. Users can manage user registration time, optimize interface elements, and support features like online recharge, model pricing display, and sensitive word filtering. VoAPI also provides support for various AI models and platforms, with the ability to configure homepage templates, model information, and manufacturer information.