PythonAI

None

Stars: 69

PythonAI is an open-source AI Assistant designed for the Raspberry Pi by Kevin McAleer. The project aims to enhance the capabilities of the Raspberry Pi by providing features such as conversation history, a conversation API, a web interface, a skills framework using plugin technology, and an event framework for adding functionality via plugins. The tool utilizes the Vosk offline library for speech-to-text conversion and offers a simple skills framework for easy implementation of new skills. Users can create new skills by adding Python files to the 'skills' folder and updating the 'skills.json' file. PythonAI is designed to be easy to read, maintain, and extend, making it a valuable tool for Raspberry Pi enthusiasts looking to build AI applications.

README:

An opensource AI Assistant for the Raspberry Pi By Kevin McAleer

I'm returning to this project and will be doing a couple of new videos to improve the capabilities and to tidy up our code as its grown a bit unwieldly!

Here are a couple of things I'll be looking next:

- [x] a conversation history

- [X] a conversation API

- [X] a web interface (finally!)

- [X] a 'proper' skills framework, using plugin technology

- [X] an event framework to be able to add functionality via plugins

Google have stopped supporting the APi we previously used to convert speech audio to text, so I've not moved to an offline library called Vosk. Its very easy to setup - just type:

pip install voskand you'll install the main library for Python.

You'll also need to download a model from https://alphacephei.com/vosk/models. I went with the vosk-model-en-us-0.22 model, which although large is ery accurate. To install the model, just unzip the vosk-model-en-us-0.22.zip file and rename the unzipped folder to model and put that in the root of the git repository.

Since I started this project I've learned a lot more about Python, and Python itself has undergone many minor releases. I've refactored almost all the code from the original project to make it easier to read, maintain and extend. Each skill now as a separate skill file that contains everything associated with that skill, and there is a new skill framework for importing the skills at runtime. This means we can add new skills without having to touch the main program.

The new skills framework mean that adding a new conversation history was very simple - I was even able to quickly add an API on top of the conversation history so we can read that in and dynamically update it using some javascript (and jQuery to pull in the convesation history data from the API).

The new skills framework is very simple to implement:

- Create a new python file in the

skillsfolder - add a new class such as:

@dataclass

class Insults_skill:

name = 'insults'

def commands(self, command:str):

return ['insult me', 'tell me an insult', 'give me an insult', 'roast me']

def handle_command(self, command:str, ai:AI):

ai.say('you are a worm')

def initialize():

factory.register('insult_skill', Insult_skill)- Update the

skills.jsonfile to include the new skill:

{

"plugins": ["skills.goodday", "skills.weather", "skills.facts", "skills.jokes", "skills.calendar", "skills.insult"],

"skills": [

{

"name": "weather_skill"

},

{

"name": "facts_skill"

},

{

"name": "jokes_skill"

},

{

"name": "goodday_skill"

},

{

"name": "calendar_skill"

},

{

"name": "insult_skill"

}

]

}- Run the

alf.pyPython program - The skills factory will load the

skills.jsonfile and create a new list of skills, including this new Insults skill. Thecommandsfunction within the skill returns all the words or phrases that the AI will listen for and then handle those requests by running thehandle_commandfunction.

Create an API key (its free) at <home.openweathermap.org>

Make sure you have pyaudio and espeak installed:

sudo apt-get install espeak

sudo apt-get install python-audio Using the respeaker hat from Seeed studios:

git clone https://github.com/respeaker/seeed-voicecard

cd seeed-voicecard

sudo ./install.sh

sudo rebootIf this doesn't work and you get ASLA error messages, try:

It may probably happen that the driver won't compile with the latest kernel when raspbian rolls out new patches to the kernel. If so, please try sudo ./install.sh --compat-kernel which uses an older kernel but ensures that the driver can work.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for PythonAI

Similar Open Source Tools

PythonAI

PythonAI is an open-source AI Assistant designed for the Raspberry Pi by Kevin McAleer. The project aims to enhance the capabilities of the Raspberry Pi by providing features such as conversation history, a conversation API, a web interface, a skills framework using plugin technology, and an event framework for adding functionality via plugins. The tool utilizes the Vosk offline library for speech-to-text conversion and offers a simple skills framework for easy implementation of new skills. Users can create new skills by adding Python files to the 'skills' folder and updating the 'skills.json' file. PythonAI is designed to be easy to read, maintain, and extend, making it a valuable tool for Raspberry Pi enthusiasts looking to build AI applications.

openai-cf-workers-ai

OpenAI for Workers AI is a simple, quick, and dirty implementation of OpenAI's API on Cloudflare's new Workers AI platform. It allows developers to use the OpenAI SDKs with the new LLMs without having to rewrite all of their code. The API currently supports completions, chat completions, audio transcription, embeddings, audio translation, and image generation. It is not production ready but will be semi-regularly updated with new features as they roll out to Workers AI.

smartcat

Smartcat is a CLI interface that brings language models into the Unix ecosystem, allowing power users to leverage the capabilities of LLMs in their daily workflows. It features a minimalist design, seamless integration with terminal and editor workflows, and customizable prompts for specific tasks. Smartcat currently supports OpenAI, Mistral AI, and Anthropic APIs, providing access to a range of language models. With its ability to manipulate file and text streams, integrate with editors, and offer configurable settings, Smartcat empowers users to automate tasks, enhance code quality, and explore creative possibilities.

llamafile

llamafile is a tool that enables users to distribute and run Large Language Models (LLMs) with a single file. It combines llama.cpp with Cosmopolitan Libc to create a framework that simplifies the complexity of LLMs into a single-file executable called a 'llamafile'. Users can run these executable files locally on most computers without the need for installation, making open LLMs more accessible to developers and end users. llamafile also provides example llamafiles for various LLM models, allowing users to try out different LLMs locally. The tool supports multiple CPU microarchitectures, CPU architectures, and operating systems, making it versatile and easy to use.

claude.vim

Claude.vim is a Vim plugin that integrates Claude, an AI pair programmer, into your Vim workflow. It allows you to chat with Claude about what to build or how to debug problems, and Claude offers opinions, proposes modifications, or even writes code. The plugin provides a chat/instruction-centric interface optimized for human collaboration, with killer features like access to chat history and vimdiff interface. It can refactor code, modify or extend selected pieces of code, execute complex tasks by reading documentation, cloning git repositories, and more. Note that it is early alpha software and expected to rapidly evolve.

ell

ell is a command-line interface for Language Model Models (LLMs) written in Bash. It allows users to interact with LLMs from the terminal, supports piping, context bringing, and chatting with LLMs. Users can also call functions and use templates. The tool requires bash, jq for JSON parsing, curl for HTTPS requests, and perl for PCRE. Configuration involves setting variables for different LLM models and APIs. Usage examples include asking questions, specifying models, recording input/output, running in interactive mode, and using templates. The tool is lightweight, easy to install, and pipe-friendly, making it suitable for interacting with LLMs in a terminal environment.

ray-llm

RayLLM (formerly known as Aviary) is an LLM serving solution that makes it easy to deploy and manage a variety of open source LLMs, built on Ray Serve. It provides an extensive suite of pre-configured open source LLMs, with defaults that work out of the box. RayLLM supports Transformer models hosted on Hugging Face Hub or present on local disk. It simplifies the deployment of multiple LLMs, the addition of new LLMs, and offers unique autoscaling support, including scale-to-zero. RayLLM fully supports multi-GPU & multi-node model deployments and offers high performance features like continuous batching, quantization and streaming. It provides a REST API that is similar to OpenAI's to make it easy to migrate and cross test them. RayLLM supports multiple LLM backends out of the box, including vLLM and TensorRT-LLM.

AlwaysReddy

AlwaysReddy is a simple LLM assistant with no UI that you interact with entirely using hotkeys. It can easily read from or write to your clipboard, and voice chat with you via TTS and STT. Here are some of the things you can use AlwaysReddy for: - Explain a new concept to AlwaysReddy and have it save the concept (in roughly your words) into a note. - Ask AlwaysReddy "What is X called?" when you know how to roughly describe something but can't remember what it is called. - Have AlwaysReddy proofread the text in your clipboard before you send it. - Ask AlwaysReddy "From the comments in my clipboard, what do the r/LocalLLaMA users think of X?" - Quickly list what you have done today and get AlwaysReddy to write a journal entry to your clipboard before you shutdown the computer for the day.

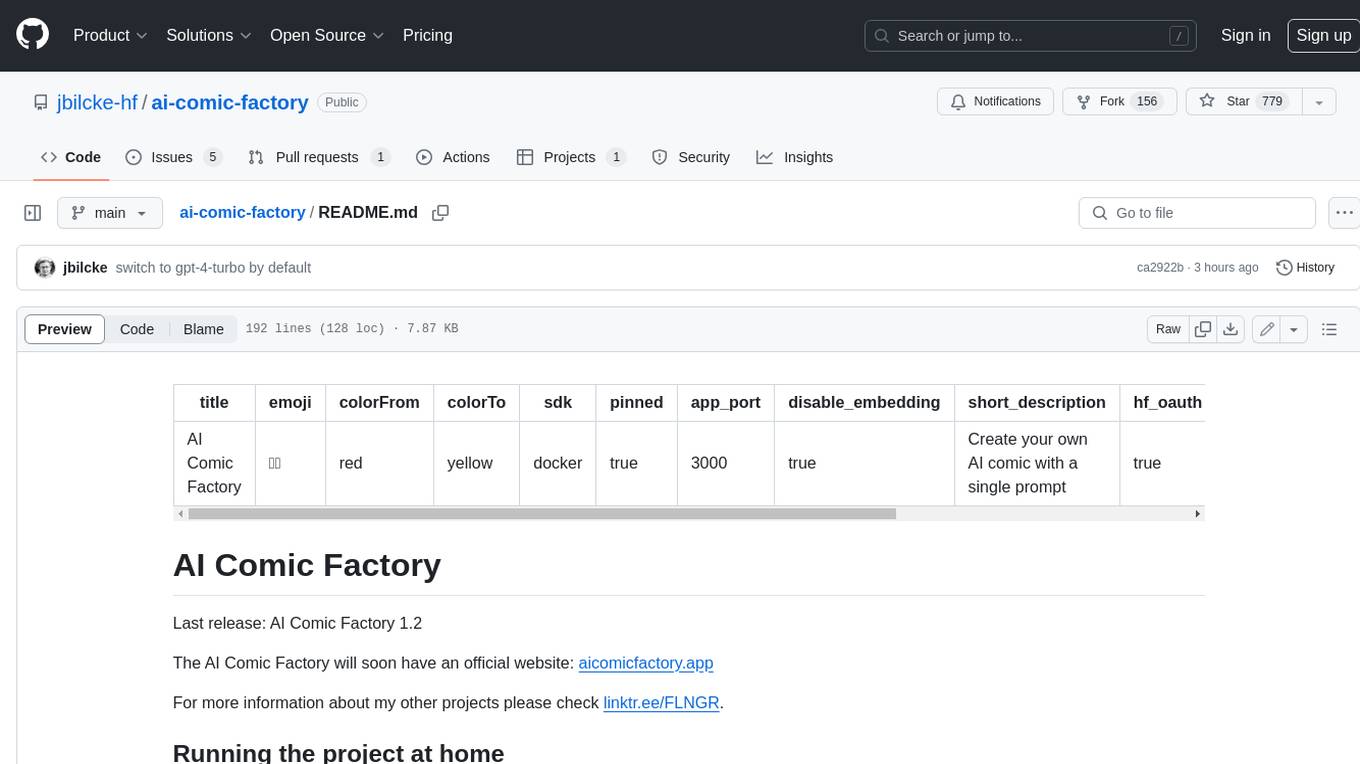

ai-comic-factory

The AI Comic Factory is a tool that allows you to create your own AI comics with a single prompt. It uses a large language model (LLM) to generate the story and dialogue, and a rendering API to generate the panel images. The AI Comic Factory is open-source and can be run on your own website or computer. It is a great tool for anyone who wants to create their own comics, or for anyone who is interested in the potential of AI for storytelling.

Open-LLM-VTuber

Open-LLM-VTuber is a project in early stages of development that allows users to interact with Large Language Models (LLM) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on macOS.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

call-gpt

Call GPT is a voice application that utilizes Deepgram for Speech to Text, elevenlabs for Text to Speech, and OpenAI for GPT prompt completion. It allows users to chat with ChatGPT on the phone, providing better transcription, understanding, and speaking capabilities than traditional IVR systems. The app returns responses with low latency, allows user interruptions, maintains chat history, and enables GPT to call external tools. It coordinates data flow between Deepgram, OpenAI, ElevenLabs, and Twilio Media Streams, enhancing voice interactions.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

actions

Sema4.ai Action Server is a tool that allows users to build semantic actions in Python to connect AI agents with real-world applications. It enables users to create custom actions, skills, loaders, and plugins that securely connect any AI Assistant platform to data and applications. The tool automatically creates and exposes an API based on function declaration, type hints, and docstrings by adding '@action' to Python scripts. It provides an end-to-end stack supporting various connections between AI and user's apps and data, offering ease of use, security, and scalability.

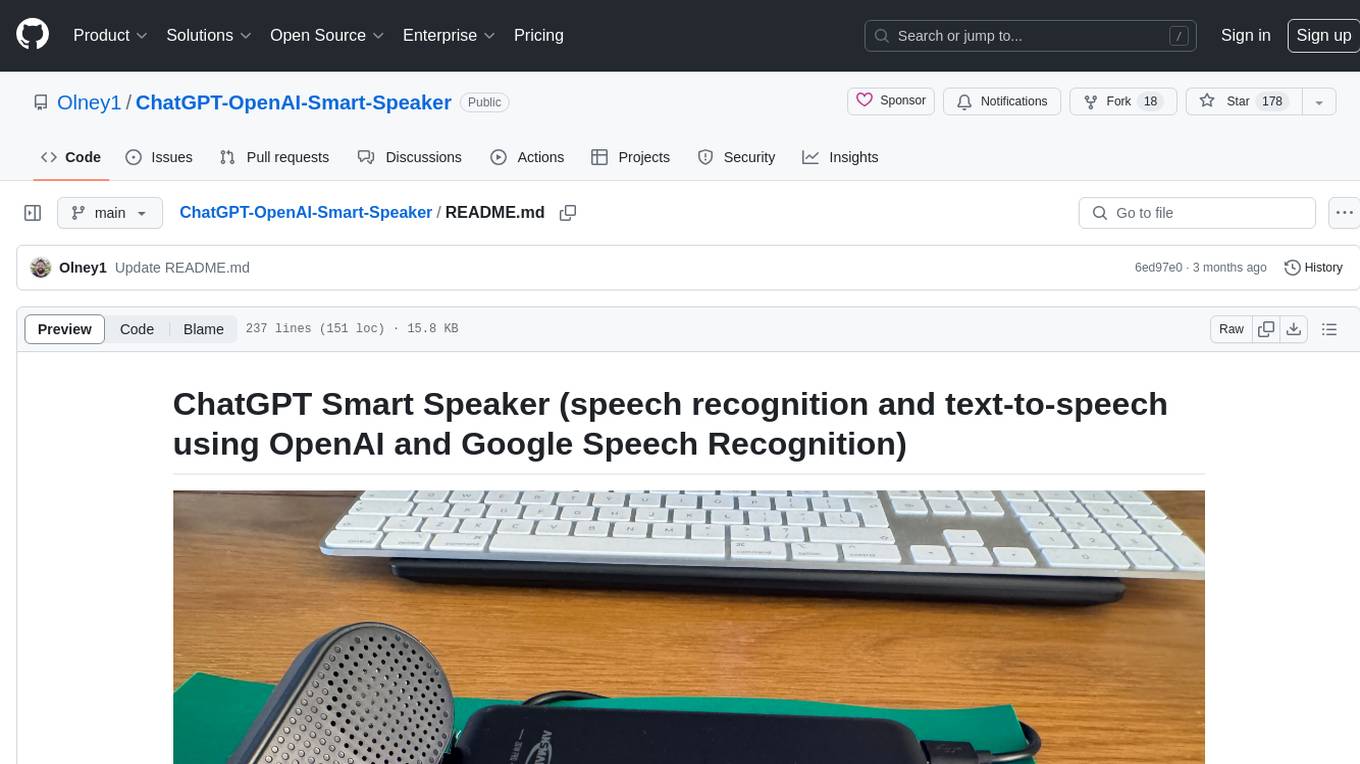

ChatGPT-OpenAI-Smart-Speaker

ChatGPT Smart Speaker is a project that enables speech recognition and text-to-speech functionalities using OpenAI and Google Speech Recognition. It provides scripts for running on PC/Mac and Raspberry Pi, allowing users to interact with a smart speaker setup. The project includes detailed instructions for setting up the required hardware and software dependencies, along with customization options for the OpenAI model engine, language settings, and response randomness control. The Raspberry Pi setup involves utilizing the ReSpeaker hardware for voice feedback and light shows. The project aims to offer an advanced smart speaker experience with features like wake word detection and response generation using AI models.

LLM_AppDev-HandsOn

This repository showcases how to build a simple LLM-based chatbot for answering questions based on documents using retrieval augmented generation (RAG) technique. It also provides guidance on deploying the chatbot using Podman or on the OpenShift Container Platform. The workshop associated with this repository introduces participants to LLMs & RAG concepts and demonstrates how to customize the chatbot for specific purposes. The software stack relies on open-source tools like streamlit, LlamaIndex, and local open LLMs via Ollama, making it accessible for GPU-constrained environments.

For similar tasks

PythonAI

PythonAI is an open-source AI Assistant designed for the Raspberry Pi by Kevin McAleer. The project aims to enhance the capabilities of the Raspberry Pi by providing features such as conversation history, a conversation API, a web interface, a skills framework using plugin technology, and an event framework for adding functionality via plugins. The tool utilizes the Vosk offline library for speech-to-text conversion and offers a simple skills framework for easy implementation of new skills. Users can create new skills by adding Python files to the 'skills' folder and updating the 'skills.json' file. PythonAI is designed to be easy to read, maintain, and extend, making it a valuable tool for Raspberry Pi enthusiasts looking to build AI applications.

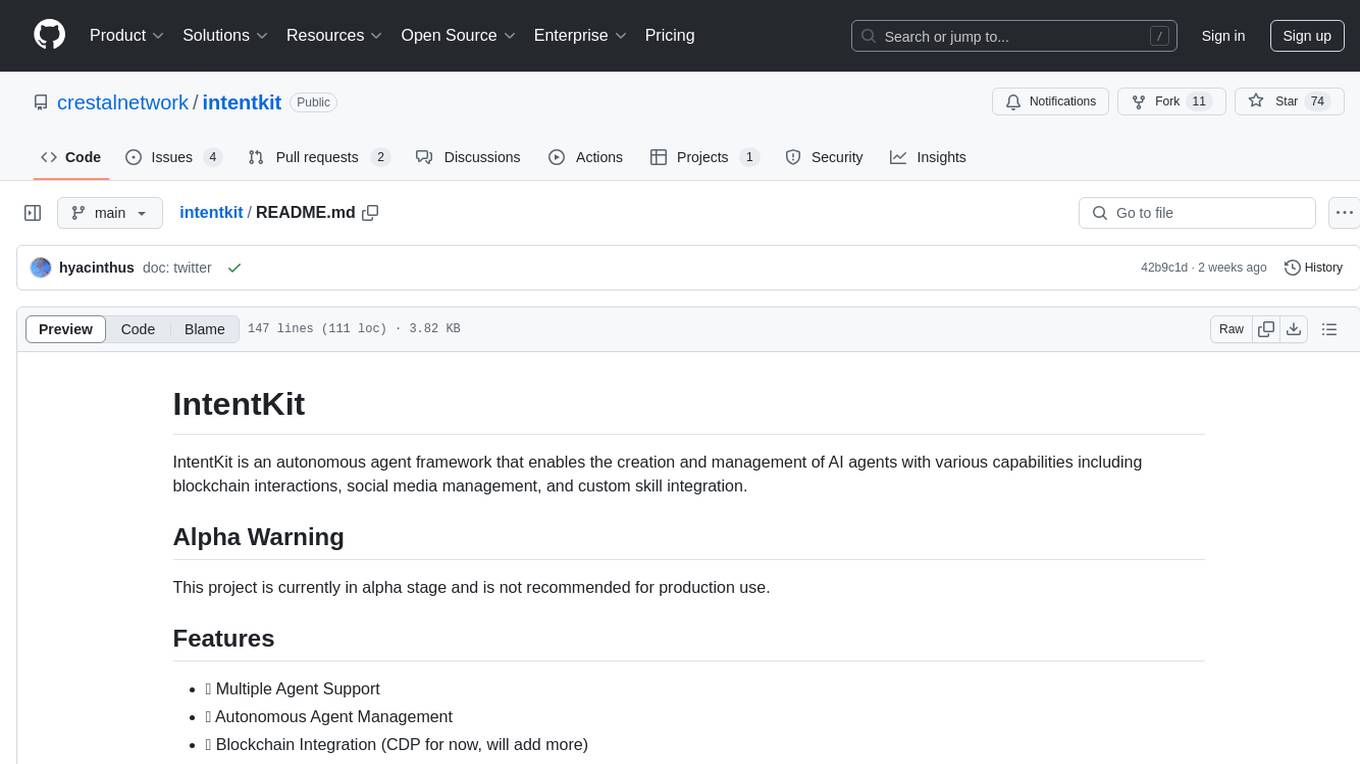

intentkit

IntentKit is an autonomous agent framework that enables the creation and management of AI agents with capabilities including blockchain interactions, social media management, and custom skill integration. It supports multiple agents, autonomous agent management, blockchain integration, social media integration, extensible skill system, and plugin system. The project is in alpha stage and not recommended for production use. It provides quick start guides for Docker and local development, integrations with Twitter and Coinbase, configuration options using environment variables or AWS Secrets Manager, project structure with core application code, entry points, configuration management, database models, skills, skill sets, and utility functions. Developers can add new skills by creating, implementing, and registering them in the skill directory.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.