Cradle

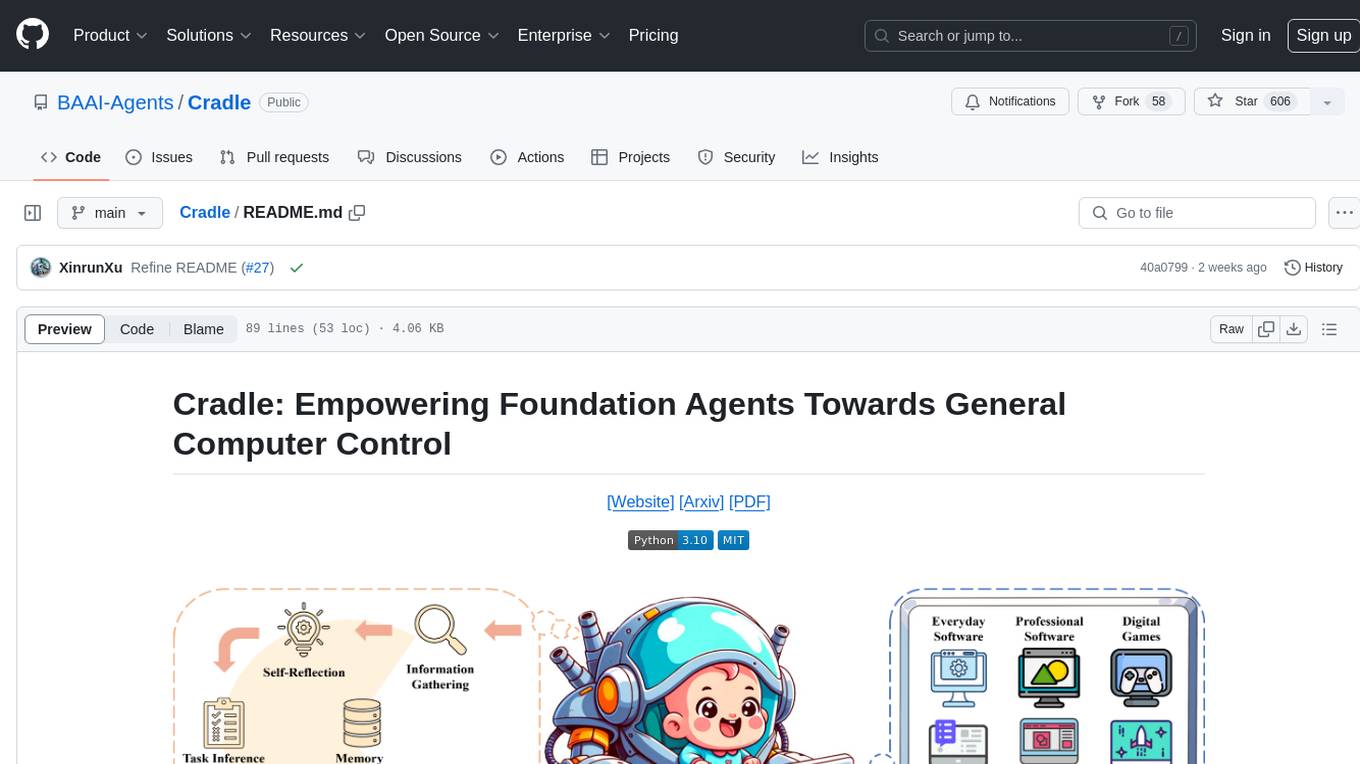

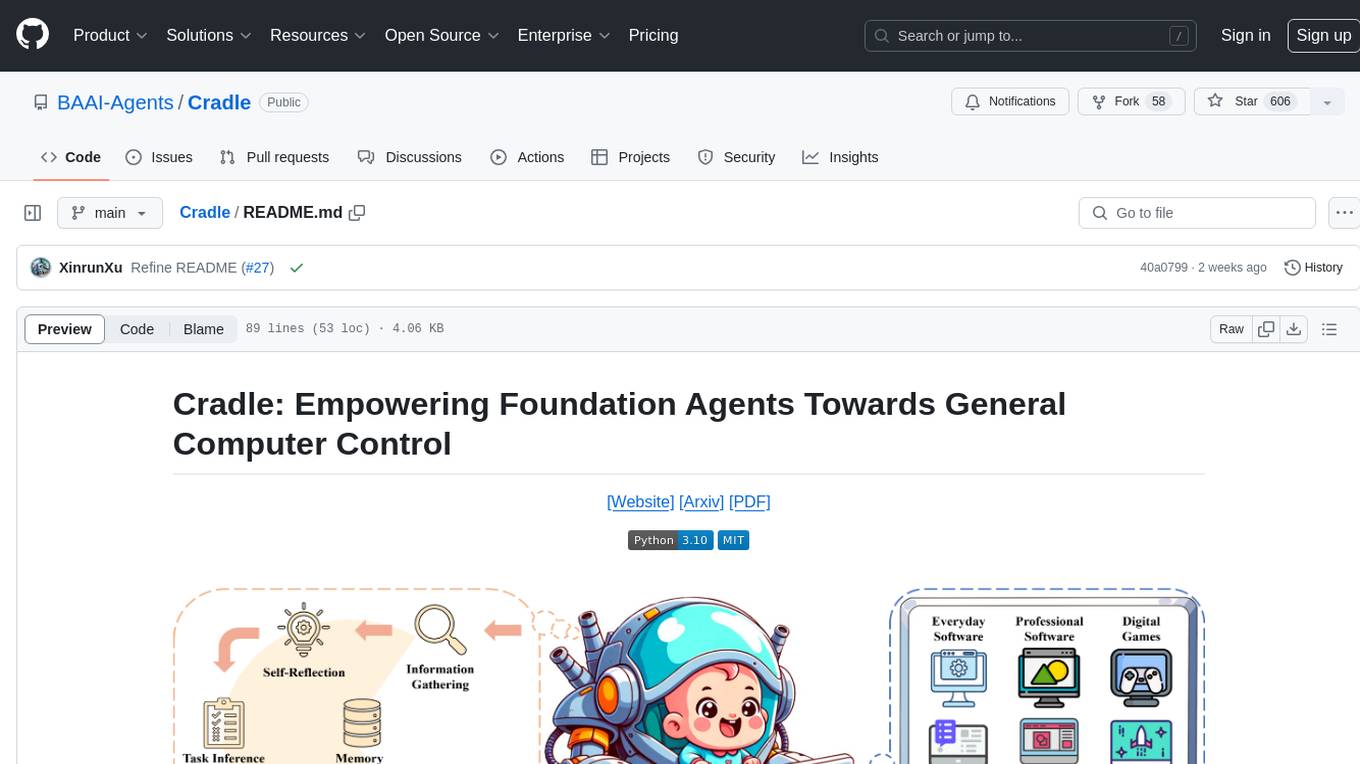

The Cradle framework is a first attempt at General Computer Control (GCC). Cradle aims to let agents master any computer task by enabling strong reasoning abilities, self-improvement, and skill curation, in a standardized general environment with minimal requirements.

Stars: 1721

The Cradle project is a framework designed for General Computer Control (GCC), empowering foundation agents to excel in various computer tasks through strong reasoning abilities, self-improvement, and skill curation. It provides a standardized environment with minimal requirements, constantly evolving to support more games and software. The repository includes released versions, publications, and relevant assets.

README:

The Cradle framework empowers nascent foundation models to perform complex computer tasks via the same unified interface humans use, i.e., screenshots as input and keyboard & mouse operations as output.
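The unified interface described above can be pictured as a small observe, decide, act loop. The following is a minimal sketch, not Cradle's actual API: the names `KeyPress`, `MouseClick`, `Policy`, and `run_steps` are hypothetical, and screen capture and input execution are passed in as plain callables so the example stays self-contained.

```python
from dataclasses import dataclass
from typing import Callable, List, Union


@dataclass(frozen=True)
class KeyPress:
    """Hypothetical keyboard action: hold `key` for `duration_s` seconds."""
    key: str
    duration_s: float = 0.1


@dataclass(frozen=True)
class MouseClick:
    """Hypothetical mouse action: click `button` at screen position (x, y)."""
    x: int
    y: int
    button: str = "left"


Action = Union[KeyPress, MouseClick]

# A policy maps raw screenshot bytes to a list of low-level actions.
Policy = Callable[[bytes], List[Action]]


def run_steps(capture: Callable[[], bytes],
              policy: Policy,
              execute: Callable[[Action], None],
              max_steps: int) -> int:
    """Run the observe -> decide -> act loop; return how many actions were executed."""
    executed = 0
    for _ in range(max_steps):
        screenshot = capture()            # input: what a human would see
        for action in policy(screenshot):
            execute(action)               # output: what a human would press or click
            executed += 1
    return executed
```

In a real setup, `capture` would grab the screen, `policy` would call the foundation model, and `execute` would drive the keyboard and mouse; here they are left abstract on purpose.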

- 2024-06-27: A major update! Cradle has been extended to four games (RDR2, Stardew Valley, Cities: Skylines, and Dealer's Life 2) and to various software, including but not limited to Chrome, Outlook, Capcut, Meitu, and Feishu. We have also released our latest paper. Check it out!

Click on either of the video thumbnails above to watch them on YouTube.

We currently support the OpenAI and Claude APIs. Please create a .env file in the root of the repository to store the keys (one of them is enough).

Sample .env file containing private information:

OA_OPENAI_KEY = "abc123abc123abc123abc123abc123ab"

RF_CLAUDE_AK = "abc123abc123abc123abc123abc123ab" # Access Key for Claude

RF_CLAUDE_SK = "123abc123abc123abc123abc123abc12" # Secret Access Key for Claude

AZ_OPENAI_KEY = "123abc123abc123abc123abc123abc12"

AZ_BASE_URL = "https://abc123.openai.azure.com/"

IDE_NAME = "Code"

OA_OPENAI_KEY is the OpenAI API key. You can get it from OpenAI.

AZ_OPENAI_KEY is the Azure OpenAI API key. You can get it from the Azure Portal.

OA_CLAUDE_KEY is the Anthropic Claude API key. You can get it from Anthropic.

RF_CLAUDE_AK and RF_CLAUDE_SK are the AWS RESTful API access key and secret access key for the Claude API.

IDE_NAME refers to the IDE environment in which the repository's code runs, such as PyCharm or Code (VSCode). It is primarily used to enable automatic switching between the IDE and the target environment.
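To make the "one of them is enough" rule concrete, here is a minimal sketch that parses a .env file in the format shown above and reports which provider has a complete set of keys. It is an illustration only, not Cradle's own loader: the functions `parse_env` and `pick_provider`, the provider labels, and the assumption that Azure needs both AZ_OPENAI_KEY and AZ_BASE_URL are hypothetical.

```python
import re
from typing import Dict, Optional

# Matches lines such as: NAME = "value"  # optional trailing comment
_LINE = re.compile(r'^\s*([A-Za-z_][A-Za-z0-9_]*)\s*=\s*"([^"]*)"\s*(?:#.*)?$')


def parse_env(text: str) -> Dict[str, str]:
    """Parse NAME = "value" lines; blank, comment-only, or malformed lines are skipped."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        match = _LINE.match(line)
        if match:
            values[match.group(1)] = match.group(2)
    return values


def pick_provider(env: Dict[str, str]) -> Optional[str]:
    """Return the first provider whose required variables are all non-empty, else None."""
    # Provider labels and groupings are assumptions made for this sketch.
    requirements = [
        ("openai", ["OA_OPENAI_KEY"]),
        ("azure_openai", ["AZ_OPENAI_KEY", "AZ_BASE_URL"]),
        ("claude", ["OA_CLAUDE_KEY"]),
        ("restful_claude", ["RF_CLAUDE_AK", "RF_CLAUDE_SK"]),
    ]
    for name, keys in requirements:
        if all(env.get(key) for key in keys):
            return name
    return None
```

A check like this can run before the agent starts, so a missing or half-filled key pair is reported up front rather than on the first model call.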

Please set up your Python environment and install the required dependencies as follows:

# Clone the repository

git clone https://github.com/BAAI-Agents/Cradle.git

cd Cradle

# Create a new conda environment

conda create --name cradle-dev python=3.10

conda activate cradle-dev

pip install -r requirements.txt

1. Option 1

# Download best-matching version of specific model for your spaCy installation

python -m spacy download en_core_web_lg

or

# pip install .tar.gz archive or .whl from path or URL

pip install https://github.com/explosion/spacy-models/releases/download/en_core_web_lg-3.7.1/en_core_web_lg-3.7.1.tar.gz

2. Option 2

# Copy this url https://github.com/explosion/spacy-models/releases/download/en_core_web_lg-3.7.1/en_core_web_lg-3.7.1.tar.gz

# Paste it in the browser and download the file to res/spacy/data

cd res/spacy/data

pip install en_core_web_lg-3.7.1.tar.gz
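After either option, it can be useful to confirm that the model package is visible to the active environment. The sketch below uses only the standard library and relies on spaCy models being installed as regular Python packages; the helper name `is_installed` is hypothetical.

```python
import importlib.util


def is_installed(package: str) -> bool:
    """Return True if a top-level package can be located (without importing it)."""
    return importlib.util.find_spec(package) is not None


if __name__ == "__main__":
    # Check spaCy itself and the model package installed in the steps above.
    for name in ("spacy", "en_core_web_lg"):
        status = "found" if is_installed(name) else "MISSING"
        print(f"{name}: {status}")
```

Run it inside the cradle-dev conda environment so that it inspects the same interpreter pip installed into.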

Due to the vast differences between each game and software, we have provided the specific settings for each of them below.

Since some users may want to apply our framework to new games, this section showcases the core directories and organizational structure of Cradle. Modules relevant to migrating to new games are marked with "⭐⭐⭐" and are explained in detail later.

Cradle

├── cache # Cache the GroundingDino model and the bert-base-uncased model

├── conf # ⭐⭐⭐ The configuration files for the environment and the llm model

│ ├── env_config_dealers.json

│ ├── env_config_rdr2_main_storyline.json

│ ├── env_config_rdr2_open_ended_mission.json

│ ├── env_config_skylines.json

│ ├── env_config_stardew_cultivation.json

│ ├── env_config_stardew_farm_clearup.json

│ ├── env_config_stardew_shopping.json

│ ├── openai_config.json

│ ├── claude_config.json

│ ├── restful_claude_config.json

│ └── ...

├── deps # The dependencies for the Cradle framework, ignore this folder

├── docs # The documentation for the Cradle framework, ignore this folder

├── res # The resources for the Cradle framework

│ ├── models # Ignore this folder

│ ├── tool # Subfinder for RDR2

│ ├── [game or software] # ⭐⭐⭐ The resources for each game or software, for example: rdr2, dealers, skylines, stardew, outlook, chrome, capcut, meitu, feishu

│ │ ├── prompts # The prompts for the game

│ │ │ └── templates

│ │ │ ├── action_planning.prompt

│ │ │ ├── information_gathering.prompt

│ │ │ ├── self_reflection.prompt

│ │ │ └── task_inference.prompt

│ │ ├── skills # The skills json for the game, it will be generated automatically

│ │ ├── icons # Icons in the game that GPT-4 has difficulty recognizing; the icon replacer can substitute text for them to improve recognition

│ │ └── saves # Save files in the game

│ └── ...

├── requirements.txt # The requirements for the Cradle framework

├── runner.py # The main entry for the Cradle framework

├── cradle # Cradle's core modules

│ ├── config # The configuration for the Cradle framework

│ ├── environment # The environment for the Cradle framework

│ │ ├── [game or software] # ⭐⭐⭐ The environment for each game or software, for example: rdr2, dealers, skylines, stardew, outlook, chrome, capcut, meitu, feishu

│ │ │ ├── __init__.py # The initialization file for the environment

│ │ │ ├── atomic_skills # Atomic skills in the game. Users should customise them to suit the needs of the game or software, e.g. character movement

│ │ │ ├── composite_skills # Combination skills for atomic skills in games or software

│ │ │ ├── skill_registry.py # The skill registry for the game. Will register all atomic skills and composite skills into the registry.

│ │ │ └── ui_control.py # The UI control for the game. Define functions to pause the game and switch to the game window

│ │ └── ...

│ ├── gameio # Interfaces that directly wrap the skill registry and ui control in the environment

│ ├── log # The log for the Cradle framework

│ ├── memory # The memory for the Cradle framework

│ ├── module # Currently contains only the skill execution module. Action planning, self-reflection and other modules will be migrated here from planner and provider later

│ ├── planner # The planner for the Cradle framework. Unified interface for action planning, self-reflection and other modules. It will be removed later, and its contents moved to cradle/module.

│ ├── runner # ⭐⭐⭐ The logical flow of execution for each game and software. All game and software processes will then be unified into a single runner

│ ├── utils # Defines some helper functions such as save json and load json

│ └── provider # The provider for the Cradle framework. We have semantically decomposed most of the execution flow in the runner into providers

│ ├── augment # Methods for image augmentation

│ ├── llm # Call for the LLM model, e.g. OpenAI's GPT-4o, Claude, etc.

│ ├── module # ⭐⭐⭐ The module for the Cradle framework. e.g., action planning, self-reflection and other modules. It will be migrated to the cradle/module later.

│ ├── object_detect # Methods for object detection

│ ├── process # ⭐⭐⭐ Methods for pre-processing and post-processing for action planning, self-reflection and other modules

│ ├── video # Methods for video processing

│ ├── others # Methods for other operations, e.g., save and load coordinates for skylines

│ ├── circle_detector.py # The circle detector for the rdr2

│ ├── icon_replacer.py # Methods for replacing icons with text

│ ├── sam_provider.py # Segment anything for software

│ └── ...

└── ...
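As a rough aid when adding a new game, the per-game locations from the tree above can be collected in one place and checked for existence. This is a sketch under assumptions: the helpers `game_specific_paths` and `missing_paths` are hypothetical, they cover only the `res/[game or software]` and `cradle/environment/[game or software]` entries shown in the tree, and they leave out the `conf` files because their exact names vary per task.

```python
from pathlib import Path
from typing import List


def game_specific_paths(repo_root: Path, game: str) -> List[Path]:
    """List the per-game files and folders from the directory tree for `game`."""
    res_dir = repo_root / "res" / game
    env_dir = repo_root / "cradle" / "environment" / game
    return [
        res_dir / "prompts" / "templates",
        res_dir / "skills",
        res_dir / "icons",
        res_dir / "saves",
        env_dir / "__init__.py",
        env_dir / "atomic_skills",
        env_dir / "composite_skills",
        env_dir / "skill_registry.py",
        env_dir / "ui_control.py",
    ]


def missing_paths(repo_root: Path, game: str) -> List[Path]:
    """Return the per-game paths that do not exist yet under `repo_root`."""
    return [path for path in game_specific_paths(repo_root, game) if not path.exists()]


if __name__ == "__main__":
    # "newgame" is a placeholder name; run from the repository root.
    for path in missing_paths(Path("."), "newgame"):
        print(f"missing: {path}")
```

The output is only a checklist of what still has to be created; it says nothing about whether the contents of existing files are correct.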

Because each game has its own settings and supported operating systems, migrating Cradle to a new game takes more than replacing the game name, and we suggest treating each game individually. For example, RDR2, an independent AAA game, requires real-time combat, so the game must be paused while waiting for GPT-4o's response and then unpaused to execute the actions. Stardew has the same issue. Other games, such as Dealer's Life 2 and Cities: Skylines, have no real-time requirements and do not need to be paused. If the new game is similar to the latter, we recommend copying the Cities: Skylines implementation and following its structure to create the corresponding modules. Although games may differ significantly, the Cradle framework can still be adapted to each one in a unified way. Assuming the new game's name is newgame, the specific migration pipeline can be found in the Migrate to New Game Guide.
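The difference between real-time and non-real-time games can be sketched as a single step function that pauses only when needed. This is a simplified illustration, not the logic in Cradle's runners: `plan_and_act` and its callable parameters are hypothetical stand-ins for the game's UI control, the LLM call, and skill execution.

```python
from typing import Callable, List


def plan_and_act(realtime: bool,
                 pause: Callable[[], None],
                 unpause: Callable[[], None],
                 plan: Callable[[], List[str]],
                 execute: Callable[[str], None]) -> List[str]:
    """Plan while the game is paused (real-time games only), then execute the actions."""
    if realtime:
        pause()              # freeze the game so nothing happens during the slow model call
    actions = plan()         # e.g. a request to the LLM that returns skill names
    if realtime:
        unpause()            # resume so the planned actions take effect in the game
    for action in actions:
        execute(action)
    return actions
```

For a game like Cities: Skylines, the same function would be called with `realtime=False`, and the pause and unpause callables would never be invoked.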

If you find our work useful, please consider citing us!

@article{tan2024cradle,

title={Cradle: Empowering Foundation Agents towards General Computer Control},

author={Weihao Tan and Wentao Zhang and Xinrun Xu and Haochong Xia and Ziluo Ding and Boyu Li and Bohan Zhou and Junpeng Yue and Jiechuan Jiang and Yewen Li and Ruyi An and Molei Qin and Chuqiao Zong and Longtao Zheng and Yujie Wu and Xiaoqiang Chai and Yifei Bi and Tianbao Xie and Pengjie Gu and Xiyun Li and Ceyao Zhang and Long Tian and Chaojie Wang and Xinrun Wang and Börje F. Karlsson and Bo An and Shuicheng Yan and Zongqing Lu},

journal={arXiv preprint arXiv:2403.03186},

year={2024}

}

Alternative AI tools for Cradle

Similar Open Source Tools

FinAnGPT-Pro

FinAnGPT-Pro is a financial data downloader and AI query system that downloads quarterly and annual financial data for stocks from EOD Historical Data, storing it in MongoDB and Google BigQuery. It includes an AI-powered natural language interface for querying financial data. Users can set up the tool by following the prerequisites and setup instructions provided in the README. The tool allows users to download financial data for all stocks in a watchlist or for a single stock, query financial data using a natural language interface, and receive responses in a structured format. Important considerations include error handling, rate limiting, data validation, BigQuery costs, MongoDB connection, and security measures for API keys and credentials.

RooFlow

RooFlow is a VS Code extension that enhances AI-assisted development by providing persistent project context and optimized mode interactions. It reduces token consumption and streamlines workflow by integrating Architect, Code, Test, Debug, and Ask modes. The tool simplifies setup, offers real-time updates, and provides clearer instructions through YAML-based rule files. It includes components like Memory Bank, System Prompts, VS Code Integration, and Real-time Updates. Users can install RooFlow by downloading specific files, placing them in the project structure, and running an insert-variables script. They can then start a chat, select a mode, interact with Roo, and use the 'Update Memory Bank' command for synchronization. The Memory Bank structure includes files for active context, decision log, product context, progress tracking, and system patterns. RooFlow features persistent context, real-time updates, mode collaboration, and reduced token consumption.

ai-hedge-fund

AI Hedge Fund is a proof of concept for an AI-powered hedge fund that explores the use of AI to make trading decisions. The project is for educational purposes only and simulates trading decisions without actual trading. It employs agents like Market Data Analyst, Valuation Agent, Sentiment Agent, Fundamentals Agent, Technical Analyst, Risk Manager, and Portfolio Manager to gather and analyze data, calculate risk metrics, and make trading decisions.

Local-File-Organizer

The Local File Organizer is an AI-powered tool designed to help users organize their digital files efficiently and securely on their local device. By leveraging advanced AI models for text and visual content analysis, the tool automatically scans and categorizes files, generates relevant descriptions and filenames, and organizes them into a new directory structure. All AI processing occurs locally using the Nexa SDK, ensuring privacy and security. With support for multiple file types and customizable prompts, this tool aims to simplify file management and bring order to users' digital lives.

nori-skillsets

The Nori Skillsets Client is a CLI tool for installing and managing Skillsets from noriskillsets.dev. Skillsets are unified configurations defining agent behaviors, including step-by-step instructions, custom workflows, specialized agents, and quick actions. Users can install, switch, and manage Skillsets, maintaining multiple configurations without losing any settings. The tool requires Node.js, Claude Code CLI, and runs on Mac or Linux. Teams can create custom Skillsets, set up private registries, and benefit from access control, package sharing, and optional Skills Review service.

TaskWeaver

TaskWeaver is a code-first agent framework designed for planning and executing data analytics tasks. It interprets user requests through code snippets, coordinates various plugins to execute tasks in a stateful manner, and preserves both chat history and code execution history. It supports rich data structures, customized algorithms, domain-specific knowledge incorporation, stateful execution, code verification, easy debugging, security considerations, and easy extension. TaskWeaver is easy to use with CLI and WebUI support, and it can be integrated as a library. It offers detailed documentation, demo examples, and citation guidelines.

swark

Swark is a VS Code extension that automatically generates architecture diagrams from code using large language models (LLMs). It is directly integrated with GitHub Copilot, requires no authentication or API key, and supports all languages. Swark helps users learn new codebases, review AI-generated code, improve documentation, understand legacy code, spot design flaws, and gain test coverage insights. It saves output in a 'swark-output' folder with diagram and log files. Source code is only shared with GitHub Copilot for privacy. The extension settings allow customization for file reading, file extensions, exclusion patterns, and language model selection. Swark is open source under the GNU Affero General Public License v3.0.

atropos

Atropos is a robust and scalable framework for Reinforcement Learning Environments with Large Language Models (LLMs). It provides a flexible platform to accelerate LLM-based RL research across diverse interactive settings. Atropos supports multi-turn and asynchronous RL interactions, integrates with various inference APIs, offers a standardized training interface for experimenting with different RL algorithms, and allows for easy scalability by launching more environment instances. The framework manages diverse environment types concurrently for heterogeneous, multi-modal training.

KrillinAI

KrillinAI is a video subtitle translation and dubbing tool based on AI large models, featuring speech recognition, intelligent sentence segmentation, professional translation, and one-click deployment of the entire process. It provides a one-stop workflow from video downloading to the final product, empowering cross-language cultural communication with AI. The tool supports multiple languages for input and translation, integrates features like automatic dependency installation, video downloading from platforms like YouTube and Bilibili, high-speed subtitle recognition, intelligent subtitle segmentation and alignment, custom vocabulary replacement, professional-level translation engine, and diverse external service selection for speech and large model services.

KlicStudio

Klic Studio is a versatile audio and video localization and enhancement solution developed by Krillin AI. This minimalist yet powerful tool integrates video translation, dubbing, and voice cloning, supporting both landscape and portrait formats. With an end-to-end workflow, users can transform raw materials into polished, ready-to-use cross-platform content with just a few clicks. The tool offers features like video acquisition, accurate speech recognition, intelligent segmentation, terminology replacement, professional translation, voice cloning, video composition, and cross-platform support. It also supports various speech recognition services, large language models, and text-to-speech (TTS) services. Users can easily deploy the tool using Docker and configure it for different tasks like subtitle translation, large model translation, and optional voice services.

langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

morph

Morph is a Python-centric full-stack framework for building and deploying data apps. It is fast to start, deploy and operate, requires no HTML/CSS knowledge, and is customizable with Python and SQL for advanced data workflows. With Markdown-based syntax and pre-made components, users can create visually appealing designs without writing HTML or CSS.

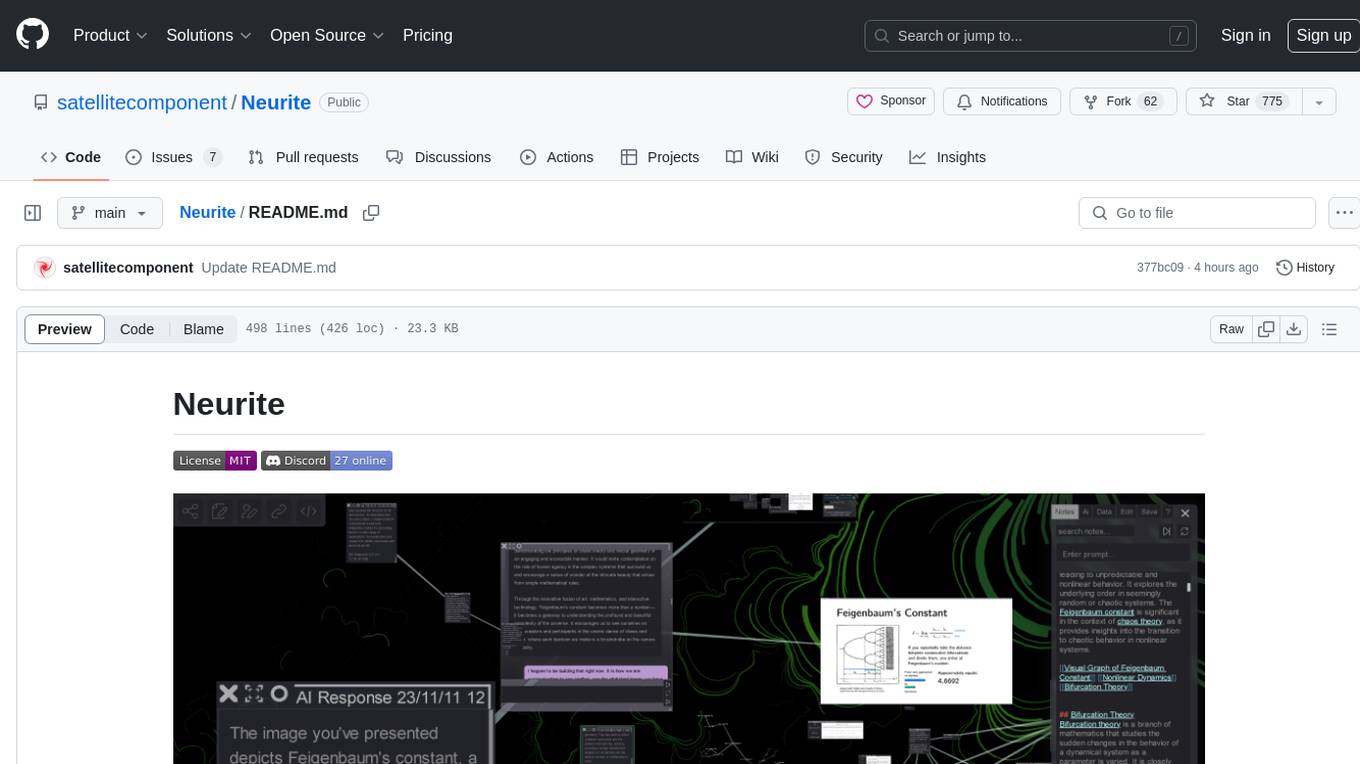

Neurite

Neurite is an innovative project that combines chaos theory and graph theory to create a digital interface that explores hidden patterns and connections for creative thinking. It offers a unique workspace blending fractals with mind mapping techniques, allowing users to navigate the Mandelbrot set in real-time. Nodes in Neurite represent various content types like text, images, videos, code, and AI agents, enabling users to create personalized microcosms of thoughts and inspirations. The tool supports synchronized knowledge management through bi-directional synchronization between mind-mapping and text-based hyperlinking. Neurite also features FractalGPT for modular conversation with AI, local AI capabilities for multi-agent chat networks, and a Neural API for executing code and sequencing animations. The project is actively developed with plans for deeper fractal zoom, advanced control over node placement, and experimental features.

UFO

UFO is a UI-focused dual-agent framework to fulfill user requests on Windows OS by seamlessly navigating and operating within individual or spanning multiple applications.

RepoAgent

RepoAgent is an LLM-powered framework designed for repository-level code documentation generation. It automates the process of detecting changes in Git repositories, analyzing code structure through AST, identifying inter-object relationships, replacing Markdown content, and executing multi-threaded operations. The tool aims to assist developers in understanding and maintaining codebases by providing comprehensive documentation, ultimately improving efficiency and saving time.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions
- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl
- The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.