craftium

A framework for creating rich, 3D, Minecraft-like single and multi-agent environments for AI research based on Minetest

Stars: 64

Craftium is an open-source platform based on the Minetest voxel game engine and the Gymnasium and PettingZoo APIs, designed for creating fast, rich, and diverse single and multi-agent environments. It allows for connecting to Craftium's Python process, executing actions as keyboard and mouse controls, extending the Lua API for creating RL environments and tasks, and supporting client/server synchronization for slow agents. Craftium is fully extensible, extensively documented, modern RL API compatible, fully open source, and eliminates the need for Java. It offers a variety of environments for research and development in reinforcement learning.

README:

✨ Imagine the richness of Minecraft: open worlds, procedural generation, fully destructible voxel environments... but open source, without Java, easily extensible in Lua, and with the modern Gymnasium and PettingZoo APIs for single- and multi-agent AI research... This is Craftium! ✨

[Docs] [GitHub] [Paper (ArXiv)]

Craftium is a fully open-source platform for creating fast, rich, and diverse single and multi-agent environments. Craftium is based on the amazing Luanti voxel game engine and the popular Gymnasium and PettingZoo APIs. Craftium forks Luanti to support:

- Connecting to Craftium's Python process (sending environment's data and receiving agent's action commands)

- Executing Craftium actions as keyboard and mouse controls.

- Extensions to the Lua API for creating RL environments.

- Enabling soft resets of the environments without restarting the engine.

- Luanti client(s)/server synchronization for slow agents (e.g., LLMs or VLMs).

📚 Craftium's documentation can be found here.

📑 If you use craftium in your projects or research please consider citing the project, and don't hesitate to let us know what you have created using the library! 🤗

- Super extensible 🧩: Luanti is built with modding in mind! The game engine is completely extensible using the Lua programming language. Easily create mods to implement the environment that suits the needs of your research! See environments for a showcase of what is possible with craftium.

- Blazingly fast ⚡: Craftium achieves 2K+ more steps per second than Minecraft-based alternatives! Environment richness, 3D, and computational efficiency all meet in craftium!

- Extensive documentation 📚: Craftium extensively documents how to use existing environments and create new ones. The documentation also includes a low-level reference of the exported Python classes and gymnasium Wrappers, extensions to the Lua API, and code examples. Moreover, Craftium greatly benefits from existing Luanti documentation like the wiki, reference, and modding book.

- Modern API 🤖: Craftium implements the well-known Gymnasium and PettingZoo APIs, opening the door to a huge number of existing tools and libraries compatible with these APIs, such as stable-baselines3 or CleanRL (check the examples section!).

- Fully open source 🤠: Both craftium and Luanti are fully open-source projects. This allowed us to modify Luanti's source code to tightly integrate it with our library, reducing the number of ugly hacks.

- No more Java 👹: The Minecraft game is written in Java, not a very friendly language for clusters and high-performance applications. In contrast, Luanti is written in C++, which is much friendlier to clusters and highly performant!
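To make the multi-agent side of the "Modern API" point more concrete, here is a minimal sketch of an interaction loop in the style of PettingZoo's Parallel API. It does not import craftium or PettingZoo: `TwoAgentStubEnv`, `DiscreteStub`, and `run_parallel_episode` are hypothetical names introduced only for illustration. PettingZoo defines two interaction styles (AEC and Parallel), so check craftium's documentation for which one its multi-agent environments expose before adapting this.

```python
import random


class DiscreteStub:
    """Hypothetical minimal action space exposing only sample()."""

    def __init__(self, n):
        self.n = n

    def sample(self):
        return random.randrange(self.n)


class TwoAgentStubEnv:
    """Hypothetical stand-in shaped like a PettingZoo Parallel env (not a craftium class)."""

    def __init__(self, episode_length=3):
        self.possible_agents = ["agent_0", "agent_1"]
        self.agents = []
        self.episode_length = episode_length
        self._t = 0

    def action_space(self, agent):
        return DiscreteStub(4)

    def reset(self, seed=None, options=None):
        self.agents = list(self.possible_agents)
        self._t = 0
        observations = {agent: 0 for agent in self.agents}
        infos = {agent: {} for agent in self.agents}
        return observations, infos

    def step(self, actions):
        self._t += 1
        done = self._t >= self.episode_length
        live = list(self.agents)
        observations = {agent: self._t for agent in live}
        rewards = {agent: 1.0 for agent in live}
        terminations = {agent: done for agent in live}
        truncations = {agent: False for agent in live}
        infos = {agent: {} for agent in live}
        if done:
            self.agents = []  # an empty agent list signals the end of the episode
        return observations, rewards, terminations, truncations, infos


def run_parallel_episode(env):
    """Play one episode with random actions and return each agent's total reward."""
    observations, infos = env.reset()
    totals = {agent: 0.0 for agent in env.agents}
    while env.agents:
        # One action per live agent, submitted together in a single step.
        actions = {agent: env.action_space(agent).sample() for agent in env.agents}
        observations, rewards, terminations, truncations, infos = env.step(actions)
        for agent, reward in rewards.items():
            totals[agent] += reward
    return totals


if __name__ == "__main__":
    print(run_parallel_episode(TwoAgentStubEnv(episode_length=3)))
```

The loop only relies on `reset`, `step`, `agents`, and `action_space(agent)`, so it should carry over to any environment that follows the Parallel API conventions.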

First, clone craftium using the --recurse-submodules flag (important: the flag fetches submodules needed by the library) and cd into the project's main directory:

    git clone --recurse-submodules https://github.com/mikelma/craftium.git # if you prefer ssh: git@github.com:mikelma/craftium.git
    cd craftium

craftium depends on Luanti, which in turn depends on a series of libraries that we need to install. Luanti's repo contains instructions on how to set up the build environment for many Linux distros (and macOS). In Ubuntu/Debian the following command installs all the required libraries:

    sudo apt install g++ make libc6-dev cmake libpng-dev libjpeg-dev libgl1-mesa-dev libsqlite3-dev libogg-dev libvorbis-dev libopenal-dev libcurl4-gnutls-dev libfreetype6-dev zlib1g-dev libgmp-dev libjsoncpp-dev libzstd-dev libluajit-5.1-dev gettext libsdl2-dev

Additionally, craftium requires Python's header files to build a dedicated Python module implemented in C (mt_server):

    sudo apt install libpython3-dev

Then, check that setuptools is updated and run the installation command in the craftium repo's directory:

    pip install -U setuptools

and,

    pip install .

This last command should compile Luanti, install Python dependencies, and, finally, craftium. If the installation process fails, please consider submitting an issue here. Note that this command only installs the minimum dependencies to run craftium; execute pip install ".[examples]" to install optional dependencies (e.g., for running examples).
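After the installation finishes, a quick sanity check is to confirm that the interpreter can locate the packages. The sketch below uses only the standard library; `is_importable` is a hypothetical helper name, and it assumes the import names are `craftium` and `gymnasium`, as used in the examples in this README.

```python
import importlib.util


def is_importable(module_name: str) -> bool:
    """Return True if `module_name` can be located by the current interpreter."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        # find_spec raises for malformed names or missing parent packages.
        return False


if __name__ == "__main__":
    for name in ("gymnasium", "craftium"):
        status = "found" if is_importable(name) else "NOT found"
        print(f"{name}: {status}")
```

This only checks that the modules can be found, not that the compiled Luanti binary works; running one of the examples is the more thorough test.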

As craftium implements the Gymnasium and PettingZoo APIs, using craftium environments is as simple as using any Gymnasium environment. The example below shows the implementation of a random agent in one of the craftium's default environments:

    import gymnasium as gym
    import craftium  # imported so that the Craftium/* environments are available to gym.make

    env = gym.make("Craftium/ChopTree-v0")

    observation, info = env.reset()

    for t in range(100):
        action = env.action_space.sample()  # get a random action
        observation, reward, terminated, truncated, _info = env.step(action)

        # start a new episode once the current one ends
        if terminated or truncated:
            observation, info = env.reset()

    env.close()

Training a CNN-based agent using PPO is as simple as:

    from stable_baselines3 import PPO
    from stable_baselines3.common import logger
    import gymnasium as gym
    import craftium

    env = gym.make("Craftium/ChopTree-v0")

    model = PPO("CnnPolicy", env, verbose=1)

    new_logger = logger.configure("logs-ppo-agent", ["stdout", "csv"])
    model.set_logger(new_logger)

    model.learn(total_timesteps=1_000_000)

    env.close()

This example trains a CNN-based agent for 1M timesteps in the Craftium/ChopTree-v0 environment using PPO. Additionally, we set up a custom logger that records training statistics to a CSV file inside the logs-ppo-agent/ directory.
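The CSV file written by the logger configured above can be inspected with the standard library alone. The sketch below is illustrative: `read_metric` is a hypothetical helper, and the file name `progress.csv` and the column `rollout/ep_rew_mean` are assumptions based on stable-baselines3's usual logger output, so verify both against what your run actually produced.

```python
import csv
from pathlib import Path


def read_metric(csv_path, column):
    """Return the values of `column` as floats, skipping empty or missing cells."""
    values = []
    with Path(csv_path).open(newline="") as f:
        for row in csv.DictReader(f):
            cell = row.get(column)
            if cell:  # skips None (missing column) and "" (not logged in this row)
                values.append(float(cell))
    return values


if __name__ == "__main__":
    # Assumed location and column name; adjust to match your own run.
    log_file = Path("logs-ppo-agent") / "progress.csv"
    if log_file.exists():
        rewards = read_metric(log_file, "rollout/ep_rew_mean")
        print(f"{len(rewards)} logged values; last: {rewards[-1] if rewards else 'n/a'}")
    else:
        print(f"{log_file} not found; run the training example first")
```

Skipping empty cells matters because not every metric is necessarily written on every logging step.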

Craftium is not limited to "typical" RL scenarios: its versatility makes it an ideal platform for meta-RL, open-ended learning, continual and lifelong RL, or unsupervised environment design (and more!). As a showcase, Craftium provides open-world and procedurally generated tasks (see environments for more info). The following code snippet initializes a procedurally generated dungeon environment with 5 rooms and a maximum of 5 monsters per room:

    import craftium

    env, map_str = craftium.make_dungeon_env(
        mapgen_kwargs=dict(
            n_rooms=5,
            max_monsters_per_room=5,
        ),
        return_map_str=True,
    )

    # print the second "-"-separated section of the returned map string
    print(map_str.split("-")[1])

By implementing Gymnasium's API, craftium supports many existing tools and libraries. Check these scripts for some examples:

- Stable baselines3: sb3_train.py

- Ray rllib: sb3_train.py

- CleanRL: cleanrl_ppo_train.py
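Because all of these tools rely on the same Gymnasium step and reset conventions, small utilities can be written once and reused across them. As a sketch of that idea, the helper below computes the mean episodic return of any policy on any object exposing `reset()` and `step()` in the Gymnasium style. `evaluate` and `CountdownEnv` are hypothetical names introduced for illustration; `CountdownEnv` is a toy stand-in so the snippet runs without craftium installed.

```python
import random


def evaluate(env, policy, n_episodes=5, max_steps=1000):
    """Return the mean undiscounted return of `policy` over `n_episodes` episodes."""
    returns = []
    for _ in range(n_episodes):
        observation, _info = env.reset()
        total = 0.0
        for _ in range(max_steps):
            action = policy(observation)
            observation, reward, terminated, truncated, _info = env.step(action)
            total += float(reward)
            if terminated or truncated:
                break
        returns.append(total)
    return sum(returns) / len(returns)


class CountdownEnv:
    """Hypothetical toy env (not part of craftium): +1 reward per step, ends after `length` steps."""

    def __init__(self, length=10):
        self.length = length
        self.t = 0

    def reset(self, seed=None, options=None):
        self.t = 0
        return 0, {}

    def step(self, action):
        self.t += 1
        terminated = self.t >= self.length
        return self.t, 1.0, terminated, False, {}


if __name__ == "__main__":
    mean_return = evaluate(CountdownEnv(length=10), policy=lambda obs: random.randrange(2))
    print(mean_return)
```

With a real environment, `policy` could wrap `env.action_space.sample()` for a random baseline or a trained model's prediction function, keeping the evaluation loop itself unchanged.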

    @article{malagon2024craftium,
      title={Craftium: An Extensible Framework for Creating Reinforcement Learning Environments},
      author={Mikel Malag{\'o}n and Josu Ceberio and Jose A. Lozano},
      journal={arXiv preprint arXiv:2407.03969},
      year={2024}
    }

We appreciate contributions to craftium! craftium is in early development, so many possible improvements and bugs are expected. If you have found a bug or have a suggestion, please consider opening an issue if it isn't already covered. In case you want to submit a fix or an improvement to the library, pull requests are also very welcome!

Craftium forks Luanti and its source code is distributed with this library. Craftium maintains the same licenses as Luanti: MIT for code and CC-BY-SA 3.0 for content.

We thank the Luanti and Farama developers and community for maintaining such an excellent open-source project. We also thank minerl, and projects integrating Luanti with RL (here and here) for serving as inspiration for this project.

We are especially grateful to:

- @vadel for helping with obscure bugs 🐛.

- @abarrainkua for reading preliminary versions of the paper.

- Pascu for the technical support in HPC.

Alternative AI tools for craftium

Similar Open Source Tools

draive

draive is an open-source Python library designed to simplify and accelerate the development of LLM-based applications. It offers abstract building blocks for connecting functionalities with large language models, flexible integration with various AI solutions, and a user-friendly framework for building scalable data processing pipelines. The library follows a function-oriented design, allowing users to represent complex programs as simple functions. It also provides tools for measuring and debugging functionalities, ensuring type safety and efficient asynchronous operations for modern Python apps.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

poml

POML (Prompt Orchestration Markup Language) is a novel markup language designed to bring structure, maintainability, and versatility to advanced prompt engineering for Large Language Models (LLMs). It addresses common challenges in prompt development, such as lack of structure, complex data integration, format sensitivity, and inadequate tooling. POML provides a systematic way to organize prompt components, integrate diverse data types seamlessly, and manage presentation variations, empowering developers to create more sophisticated and reliable LLM applications.

langchain

LangChain is a framework for developing Elixir applications powered by language models. It enables applications to connect language models to other data sources and interact with the environment. The library provides components for working with language models and off-the-shelf chains for specific tasks. It aims to assist in building applications that combine large language models with other sources of computation or knowledge. LangChain is written in Elixir and is not aimed for parity with the JavaScript and Python versions due to differences in programming paradigms and design choices. The library is designed to make it easy to integrate language models into applications and expose features, data, and functionality to the models.

lmql

LMQL is a programming language designed for large language models (LLMs) that offers a unique way of integrating traditional programming with LLM interaction. It allows users to write programs that combine algorithmic logic with LLM calls, enabling model reasoning capabilities within the context of the program. LMQL provides features such as Python syntax integration, rich control-flow options, advanced decoding techniques, powerful constraints via logit masking, runtime optimization, sync and async API support, multi-model compatibility, and extensive applications like JSON decoding and interactive chat interfaces. The tool also offers library integration, flexible tooling, and output streaming options for easy model output handling.

crawlee-python

Crawlee-python is a web scraping and browser automation library that covers crawling and scraping end-to-end, helping users build reliable scrapers fast. It allows users to crawl the web for links, scrape data, and store it in machine-readable formats without worrying about technical details. With rich configuration options, users can customize almost any aspect of Crawlee to suit their project's needs.

OM1

OpenMind's OM1 is a modular AI runtime empowering developers to create and deploy multimodal AI agents across digital environments and physical robots. OM1 agents process diverse inputs like web data, social media, camera feeds, and LIDAR, enabling actions including motion, autonomous navigation, and natural conversations. The goal is to create highly capable human-focused robots that are easy to upgrade and reconfigure for different physical form factors. OM1 features a modular architecture, supports new hardware via plugins, offers web-based debugging display, and pre-configured endpoints for various services.

aici

The Artificial Intelligence Controller Interface (AICI) lets you build Controllers that constrain and direct output of a Large Language Model (LLM) in real time. Controllers are flexible programs capable of implementing constrained decoding, dynamic editing of prompts and generated text, and coordinating execution across multiple, parallel generations. Controllers incorporate custom logic during the token-by-token decoding and maintain state during an LLM request. This allows diverse Controller strategies, from programmatic or query-based decoding to multi-agent conversations to execute efficiently in tight integration with the LLM itself.

verifAI

VerifAI is a document-based question-answering system that addresses hallucinations in generative large language models and search engines. It retrieves relevant documents, generates answers with references, and verifies answers for accuracy. The engine uses generative search technology and a verification model to ensure no misinformation. VerifAI supports various document formats and offers user registration with a React.js interface. It is open-source and designed to be user-friendly, making it accessible for anyone to use.

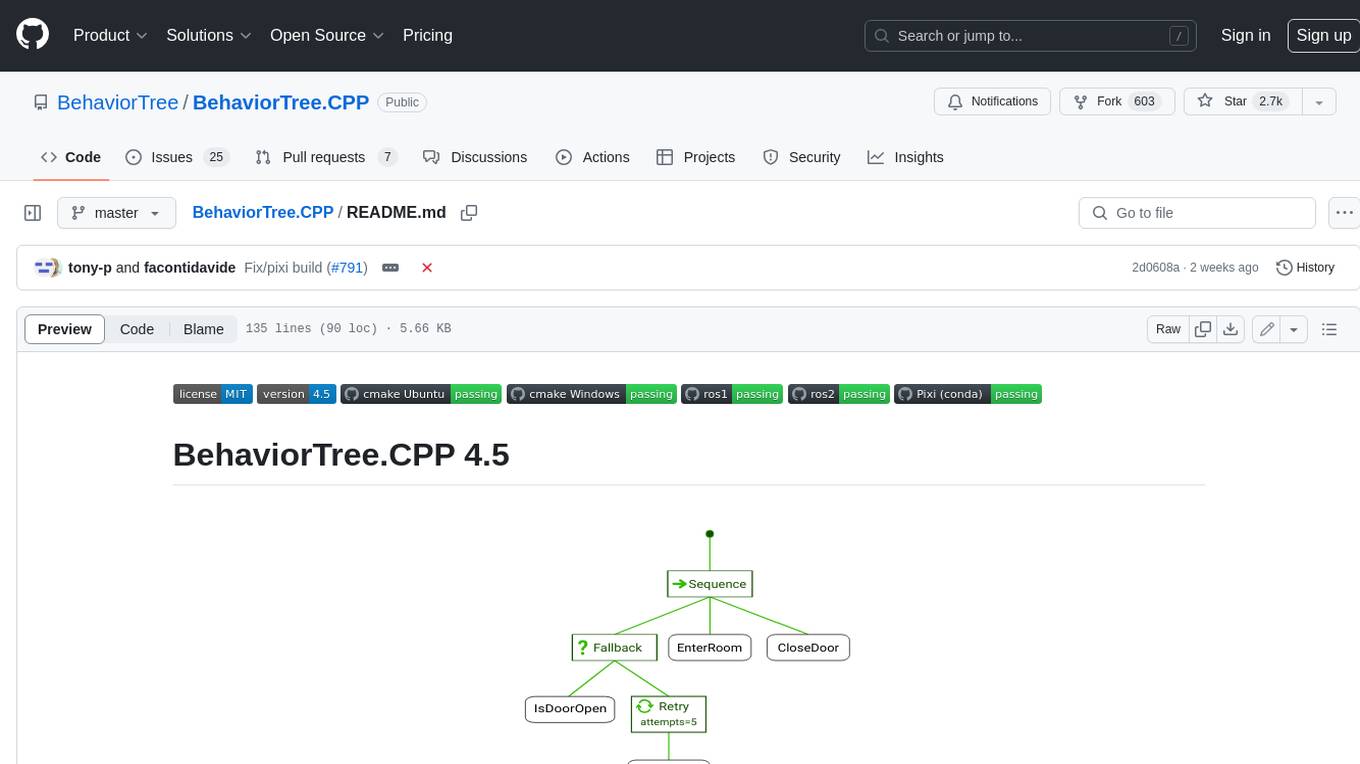

BehaviorTree.CPP

BehaviorTree.CPP is a C++17 library that provides a framework to create BehaviorTrees. It was designed to be flexible, easy to use, reactive, and fast. Even if our main use case is robotics, you can use this library to build AI for games, or to replace Finite State Machines. There are a few features that make BehaviorTree.CPP unique when compared to other implementations. It makes asynchronous (i.e., non-blocking) Actions a first-class citizen. You can build reactive behaviors that execute multiple Actions concurrently (orthogonality). Trees are defined using a domain-specific scripting language (based on XML) and can be loaded at run-time; in other words, even if written in C++, the morphology of the Trees is not hard-coded. You can statically link your custom TreeNodes or convert them into plugins and load them at run-time. It provides a type-safe and flexible mechanism for dataflow between Nodes of the Tree. It includes a logging/profiling infrastructure that allows the user to visualize, record, replay, and analyze state transitions.

RepoAgent

RepoAgent is an LLM-powered framework designed for repository-level code documentation generation. It automates the process of detecting changes in Git repositories, analyzing code structure through AST, identifying inter-object relationships, replacing Markdown content, and executing multi-threaded operations. The tool aims to assist developers in understanding and maintaining codebases by providing comprehensive documentation, ultimately improving efficiency and saving time.

rig

Rig is a Rust library designed for building scalable, modular, and user-friendly applications powered by large language models (LLMs). It provides full support for LLM completion and embedding workflows, offers simple yet powerful abstractions for LLM providers like OpenAI and Cohere, as well as vector stores such as MongoDB and in-memory storage. With Rig, users can easily integrate LLMs into their applications with minimal boilerplate code.

Revornix

Revornix is an information management tool designed for the AI era. It allows users to conveniently integrate all visible information and generates comprehensive reports at specific times. The tool offers cross-platform availability, all-in-one content aggregation, document transformation & vectorized storage, native multi-tenancy, localization & open-source features, smart assistant & built-in MCP, seamless LLM integration, and multilingual & responsive experience for users.

lmstudio-js

LM Studio Client SDK: lmstudio-ts is LM Studio's official JavaScript/TypeScript client SDK. It allows you to use LLMs to respond in chats or predict text completions; define functions as tools and turn LLMs into autonomous agents that run completely locally; load, configure, and unload models from memory; generate embeddings for text; and more. It supports both browser and Node-compatible environments. Why use `lmstudio-js` over the `openai` SDK? OpenAI's SDK is designed for use with OpenAI's proprietary models. As such, it is missing many features that are essential for using LLMs in a local environment, such as managing the loading and unloading of models from memory, configuring load parameters (context length, GPU offload settings, etc.), speculative decoding, getting information about a model (such as context length, model size, etc.), and more. In addition, while the `openai` SDK is automatically generated, `lmstudio-js` is designed from the ground up to be clean and easy to use for TypeScript/JavaScript developers.

For similar tasks

Open-Prompt-Injection

OpenPromptInjection is an open-source toolkit for attacks and defenses in LLM-integrated applications, enabling easy implementation, evaluation, and extension of attacks, defenses, and LLMs. It supports various attack and defense strategies, including prompt injection, paraphrasing, retokenization, data prompt isolation, instructional prevention, sandwich prevention, perplexity-based detection, LLM-based detection, response-based detection, and known-answer detection. Users can create models, tasks, and apps to evaluate different scenarios. The toolkit currently supports PaLM2 and provides a demo for querying models with prompts. Users can also evaluate ASV for different scenarios by injecting tasks and querying models with attacked data prompts.

LLM-LieDetector

This repository contains code for reproducing experiments on lie detection in black-box LLMs by asking unrelated questions. It includes Q/A datasets, prompts, and fine-tuning datasets for generating lies with language models. The lie detectors rely on asking binary 'elicitation questions' to diagnose whether the model has lied. The code covers generating lies from language models, training and testing lie detectors, and generalization experiments. It requires access to GPUs and OpenAI API calls for running experiments with open-source models. Results are stored in the repository for reproducibility.

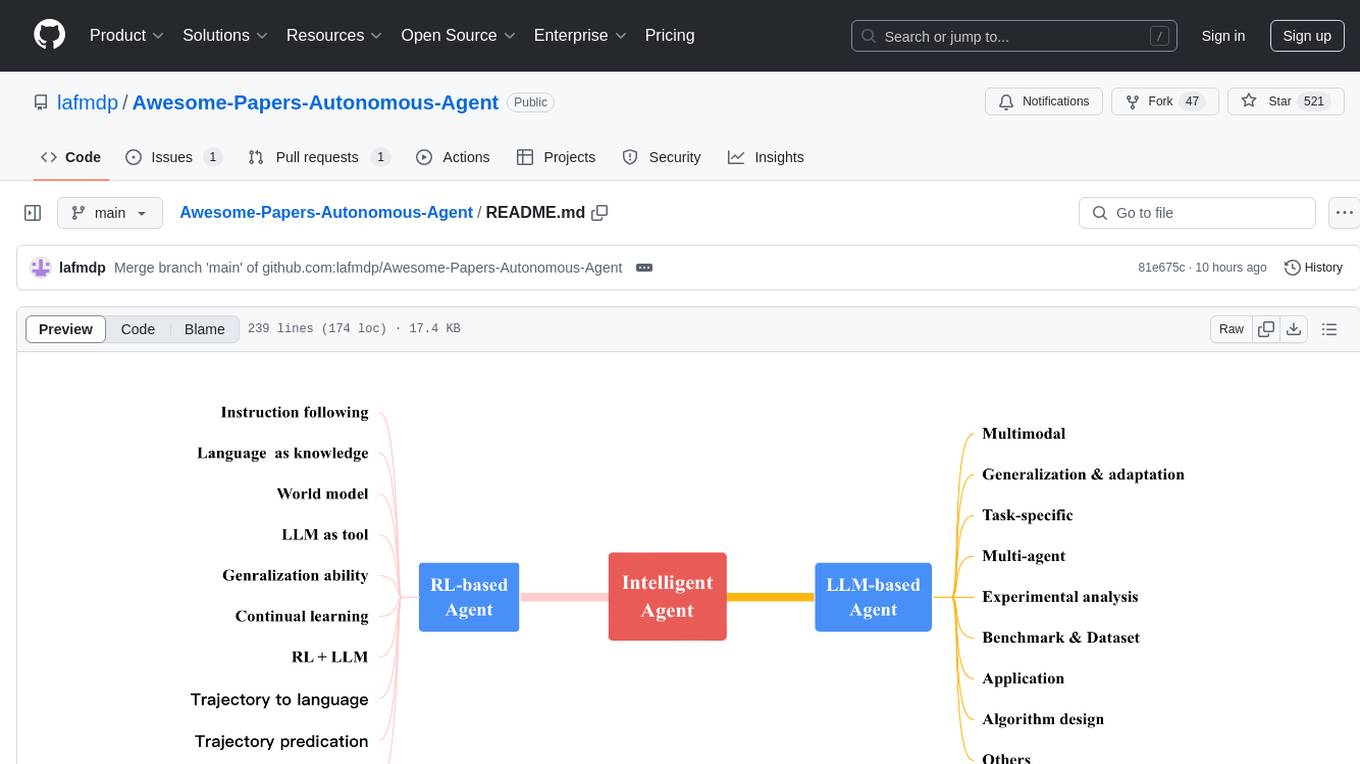

Awesome-Papers-Autonomous-Agent

Awesome-Papers-Autonomous-Agent is a curated collection of recent papers focusing on autonomous agents, specifically interested in RL-based agents and LLM-based agents. The repository aims to provide a comprehensive resource for researchers and practitioners interested in intelligent agents that can achieve goals, acquire knowledge, and continually improve. The collection includes papers on various topics such as instruction following, building agents based on world models, using language as knowledge, leveraging LLMs as a tool, generalization across tasks, continual learning, combining RL and LLM, transformer-based policies, trajectory to language, trajectory prediction, multimodal agents, training LLMs for generalization and adaptation, task-specific designing, multi-agent systems, experimental analysis, benchmarking, applications, algorithm design, and combining with RL.

SwiftSage

SwiftSage is a tool designed for conducting experiments in the field of machine learning and artificial intelligence. It provides a platform for researchers and developers to implement and test various algorithms and models. The tool is particularly useful for exploring new ideas and conducting experiments in a controlled environment. SwiftSage aims to streamline the process of developing and testing machine learning models, making it easier for users to iterate on their ideas and achieve better results. With its user-friendly interface and powerful features, SwiftSage is a valuable tool for anyone working in the field of AI and ML.

MemoryLLM

MemoryLLM is a large language model designed for self-updating capabilities. It offers pretrained models with different memory capacities and features, such as chat models. The repository provides training code, evaluation scripts, and datasets for custom experiments. MemoryLLM aims to enhance knowledge retention and performance on various natural language processing tasks.

ppl.llm.kernel.cuda

A primitive CUDA kernel library for ppl.nn.llm, part of the PPL.LLM system. It has been tested on Ampere and Hopper and requires Linux on x86_64 or arm64 CPUs, GCC >= 9.4.0, CMake >= 3.18, Git >= 2.7.0, and CUDA Toolkit >= 11.4 (11.6 recommended). It provides CUDA kernel functionality for deep learning tasks.

LLMsKnow

LLMs Know More Than They Show is a repository containing code to reproduce the results in the paper. It includes scripts to generate model answers, extract exact answers, probe all layers and tokens, probe specific layers and tokens, conduct generalization experiments, perform resampling for error type probing and answer selection experiments, and run other baselines like logprob detection and p_true detection. The repository supports various datasets such as TriviaQA, Movies, HotpotQA, Winobias, Winogrande, NLI, IMDB, Math, and Natural questions. It also provides supported models like Mistral-7B-Instruct-v0.2, Mistral-7B-v0.3, Meta-Llama-3-8B, and Meta-Llama-3-8B-Instruct.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: it is self-contained, with no need for a DBMS or cloud service; it has an OpenAPI interface that is easy to integrate with existing infrastructure (e.g., a Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.