1Panel

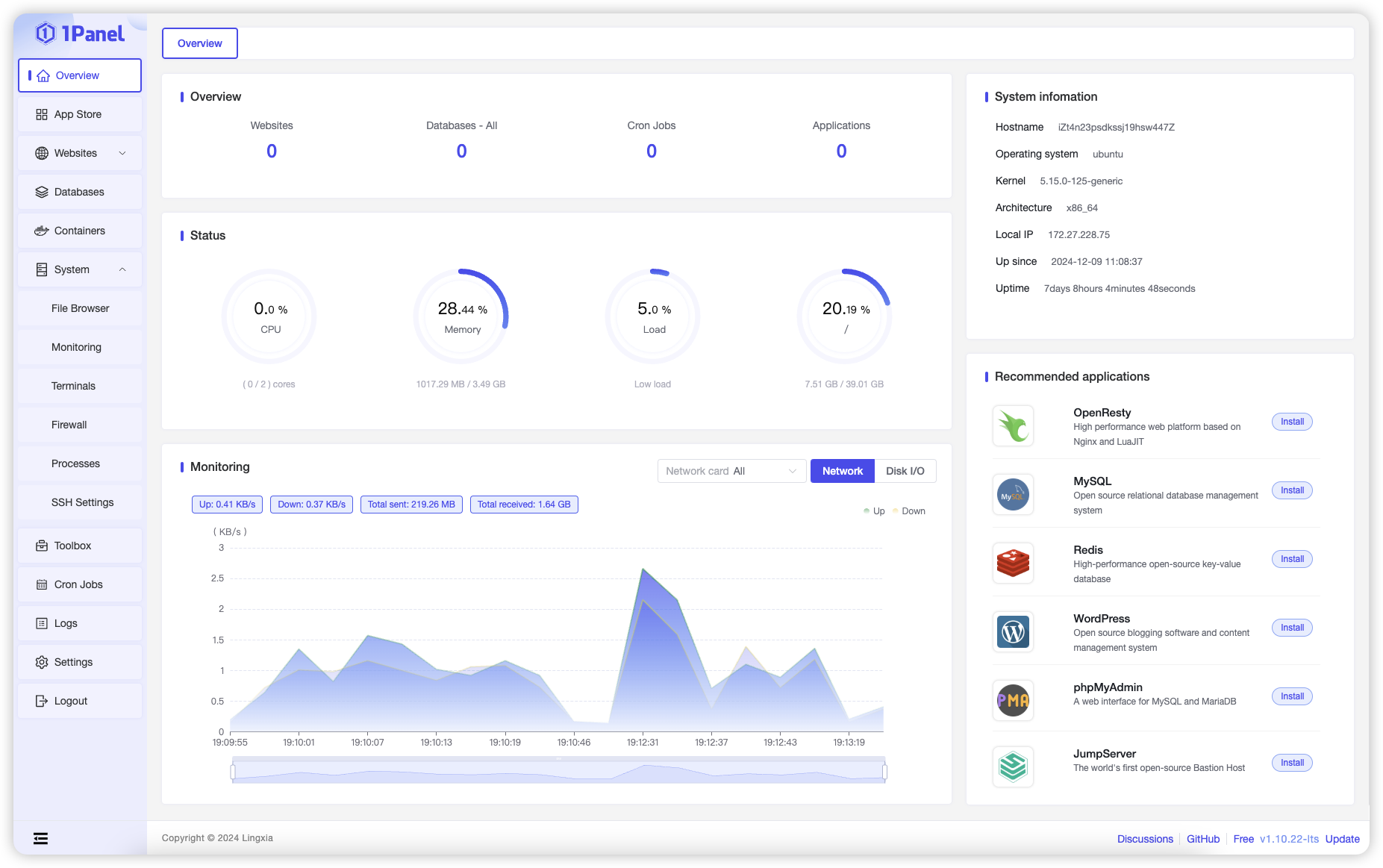

🔥 1Panel offers an intuitive web interface for a Linux server / VPS, making it easy to manage OpenClaw agents, local LLMs, websites, databases, containers, files, and scheduled tasks.

Stars: 33336

1Panel is an open-source, modern web-based control panel for Linux server management. It provides efficient management through a user-friendly web graphical interface, enabling users to effortlessly manage their Linux servers. Key features include host monitoring, file management, database administration, container management, rapid website deployment with WordPress integration, an application store for easy installation and updates, security and reliability through containerization and secure application deployment practices, integrated firewall management, log auditing capabilities, and one-click backup & restore functionality supporting various cloud storage solutions.

README:

1Panel is a modern, open-source Linux server management panel that streamlines administration through an intuitive, clean web interface.

🔹 AI Integration

Seamlessly deploy OpenClaw agents, host local LLMs via Ollama, and monitor GPU utilization—all within a unified workspace engineered for efficient AI development and inference.

🔹 One-Click Website Deployment

Launch production-ready websites instantly with automatic domain binding, SSL certificate provisioning, and configuration—zero manual setup required.

🔹 Curated App Marketplace

Discover, install, and update trusted open-source applications (WordPress, MySQL, Redis, and more) with a single click.

🔹 Centralized Server Management

Monitor system resources and manage files, databases, containers, and services effortlessly through a unified, intuitive dashboard.

🔹 Enterprise-Grade Security

Strengthen defenses with container isolation, firewall controls, audit logging, and secure deployment practices—ensuring compliance and peace of mind.

🔹 Intelligent Backup & Restore

Schedule automated backups or perform instant restores to local storage or leading cloud platforms (AWS S3, Cloudflare R2, and more), with flexible retention and destination options.

Execute the script below and follow the prompts to install 1Panel:

bash -c "$(curl -sSL https://resource.fit2cloud.com/1panel/package/v2/quick_start.sh)"

Compared to the OSS Edition, 1Panel Pro Edition adds enhanced features and technical support services. The enhanced features include WAF enhancement, website tamper protection, website monitoring, GPU monitoring, a custom logo and theme color, and more. Click to view the detailed introduction of the Pro Edition.
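The quick-start command above downloads the installer and runs it in one step. Readers who prefer to review the script first could save it to a file and run it afterwards. The following is a minimal sketch of that variation, not an official installation method: the URL is the one from the README, while the function name `fetch_installer` and the local file name `quick_start.sh` are hypothetical, and it assumes the installer behaves the same when run from a saved file.

```shell
#!/usr/bin/env bash
# Sketch: save the installer to a file so it can be reviewed before it runs.
# INSTALLER_URL comes from the README; fetch_installer is a hypothetical helper.
INSTALLER_URL="https://resource.fit2cloud.com/1panel/package/v2/quick_start.sh"

fetch_installer() {
  local url="$1" dest="$2"
  # -f: fail on HTTP errors instead of saving an error page
  # -sS: no progress bar, but still print errors; -L: follow redirects
  curl -fsSL "$url" -o "$dest"
}

# Usage (left commented out so that sourcing this file has no side effects):
#   fetch_installer "$INSTALLER_URL" quick_start.sh
#   less quick_start.sh     # read what the script will do
#   bash quick_start.sh     # then run it and follow the prompts
```

Separating download from execution costs one extra step but leaves a copy of exactly what was run, which can help when troubleshooting an installation later.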

If you discover any security issues, please refer to SECURITY.md.

Licensed under The GNU General Public License version 3 (GPLv3) (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

https://www.gnu.org/licenses/gpl-3.0.html

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

Alternative AI tools for 1Panel

Similar Open Source Tools

toolhive-studio

ToolHive Studio is an experimental project under active development and testing, providing an easy way to discover, deploy, and manage Model Context Protocol (MCP) servers securely. Users can launch any MCP server in a locked-down container with just a few clicks, eliminating manual setup, security concerns, and runtime issues. The tool ensures instant deployment, default security measures, cross-platform compatibility, and seamless integration with popular clients like GitHub Copilot, Cursor, and Claude Code.

ToolJet

ToolJet is an open-source platform for building and deploying internal tools, workflows, and AI agents. It offers a visual builder with drag-and-drop UI, integrations with databases, APIs, SaaS apps, and object storage. The community edition includes features like a visual app builder, ToolJet database, multi-page apps, collaboration tools, extensibility with plugins, code execution, and security measures. ToolJet AI, the enterprise version, adds AI capabilities for app generation, query building, debugging, agent creation, security compliance, user management, environment management, GitSync, branding, access control, embedded apps, and enterprise support.

sealos

Sealos is a cloud operating system distribution based on the Kubernetes kernel, designed for a seamless development lifecycle. It allows users to spin up full-stack environments in seconds, push releases effortlessly, and scale production seamlessly. With core features such as easy application management, quick database creation, and portability across clouds, Sealos offers efficient, economical, and easy-to-use cloud management. The platform also emphasizes agility and security through its multi-tenancy sharing model. Sealos is supported by a community offering full documentation, Discord support, and an active development roadmap.

genai-os

Kuwa GenAI OS is an open, free, secure, and privacy-focused Generative-AI Operating System. It provides a multi-lingual turnkey solution for GenAI development and deployment on Linux and Windows. Users can enjoy features such as concurrent multi-chat, quoting, full prompt-list import/export/share, and flexible orchestration of prompts, RAGs, bots, models, and hardware/GPUs. The system supports various environments from virtual hosts to cloud, and it is open source, allowing developers to contribute and customize according to their needs.

dreamfactory

DreamFactory is a self-hosted platform that provides governed API access to any data source for enterprise apps and local LLMs. It is a secure enterprise data access platform built on top of the Laravel framework, offering role-based access control, identity passthrough, and customization of API behavior using PHP, Python, and NodeJS scripting languages. DreamFactory allows users to generate powerful APIs for SQL and NoSQL databases, files, email, and push notifications in seconds, while ensuring security with features like user management, SSO authentication, OAuth, and Active Directory integration.

kubesphere

KubeSphere is a distributed operating system for cloud-native application management, using Kubernetes as its kernel. It provides a plug-and-play architecture, allowing third-party applications to be seamlessly integrated into its ecosystem. KubeSphere is also a multi-tenant container platform with full-stack automated IT operations and streamlined DevOps workflows. It provides a developer-friendly, wizard-style web UI that helps enterprises build a more robust and feature-rich platform, including the most common functionality needed for an enterprise Kubernetes strategy.

taipy

Taipy is an open-source Python library for easy, end-to-end application development, featuring what-if analyses, smart pipeline execution, built-in scheduling, and deployment tools.

MaixCDK

MaixCDK (Maix C/CPP Development Kit) is a C/C++ development kit that integrates practical functions such as AI, machine vision, and IoT. It provides easy-to-use encapsulation for quickly building projects in vision, artificial intelligence, IoT, robotics, industrial cameras, and more. It supports hardware-accelerated execution of AI models, common vision algorithms, OpenCV, and interfaces for peripheral operations. MaixCDK offers cross-platform support, easy-to-use API, simple environment setup, online debugging, and a complete ecosystem including MaixPy and MaixVision. Supported devices include Sipeed MaixCAM, Sipeed MaixCAM-Pro, and partial support for Common Linux.

ClicShopping_V3

ClicShoppingAI is a powerful open-source e-commerce solution that supports B2B, B2C, and B2B-B2C. Integrated with generative artificial intelligence systems such as GPT and Ollama, it is free to use and aims to help merchants increase turnover and competitiveness. With AI capabilities, it optimizes inventory, offers personalized recommendations, and supports customer service. The solution is modular, lightweight, and user-friendly, with a responsive design for all devices. Installation is easy, and ongoing development is supported by the community. Features include GPT API integration, generative AI functionality, real-time safety stock prediction, WYSIWYG product description creation, image editor management, full SEO optimization, payment and shipping modules, an extension system, GDPR compliance, multi-language support, and more.

agents-towards-production

Agents Towards Production is an open-source playbook for building production-ready GenAI agents that scale from prototype to enterprise. Tutorials cover stateful workflows, vector memory, real-time web search APIs, Docker deployment, FastAPI endpoints, security guardrails, GPU scaling, browser automation, fine-tuning, multi-agent coordination, observability, evaluation, and UI development.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include:

1. Workflow: Build and test powerful AI workflows on a visual canvas.
2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions.
3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features.
4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval.
5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools.
6. LLMOps: Monitor and analyze application logs and performance over time.
7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

ClaudeSync

ClaudeSync is a tool for synchronizing local files with Claude.ai projects. It bridges the gap between a local development environment and Claude.ai's knowledge base, offering real-time synchronization, a CLI for easy management, support for multiple organizations and projects, intelligent file filtering, a configurable sync interval, two-way synchronization, and more. It emphasizes data privacy and open-source transparency, and it is provided for use at your own risk. Users can start syncing by installing the tool, logging in, selecting an organization and project, and running a sync. Advanced features include API, organization, project, file, and chat management, configuration, synchronization modes, scheduled sync, providers, a custom ignore file, and troubleshooting. Contributions are welcome, and communication channels include GitHub Issues and Discord. Licensed under the MIT License.

devopness

Devopness is a tool that simplifies the management of cloud applications and multi-cloud infrastructure for both AI agents and humans. It provides role-based access control, permission management, cost control, and visibility into DevOps and CI/CD workflows. The tool allows provisioning and deployment to major cloud providers like AWS, Azure, DigitalOcean, and GCP. Devopness aims to make software deployment and cloud infrastructure management accessible and affordable to all involved in software projects.

ai-laravel

The Aimeos Laravel adapter integrates Aimeos web shop components into the Laravel framework, using native Laravel components for content caching, message logging, session handling, URL generation, and access to the user table. Laravel users can quickly set up a web shop with the Aimeos Laravel package, saving time and effort on installation and configuration. The adapter is licensed under LGPLv3 and integrates with Laravel infrastructure for functions such as URL building, caching, logging, session management, email sending, and translations.

xpander.ai

xpander.ai is a Backend-as-a-Service for autonomous agents that abstracts the ops layer, allowing AI engineers to focus on behavior and outcomes. It provides managed agent hosting with version control and CI/CD, a fully managed PostgreSQL memory layer, and a library of 2,000+ functions. The platform features an AI native triggering system that processes inputs from various sources and delivers unified messages to agents. With support for any agent framework or SDK, including Agno and OpenAI, xpander.ai enables users to build intelligent, production-ready AI agents without dealing with infrastructure complexity.

For similar tasks

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

niledatabase

Nile is a serverless Postgres database designed for modern SaaS applications. It virtualizes tenants/customers/organizations into Postgres to enable native tenant data isolation, performance isolation, per-tenant backups, and tenant placement on shared or dedicated compute globally. With Nile, you can manage multiple tenants effortlessly, without complex permissions or buggy scripts. Additionally, it offers opt-in user management capabilities, customer-specific vector embeddings, and instant tenant admin dashboards. Built for the cloud, Nile provides a true serverless experience with effortless scaling.

job-hunting

Job Hunting is a browser extension designed to enhance the job searching experience on popular recruitment platforms in China. It aims to improve job listing visibility, provide personalized job search capabilities, analyze job data, facilitate job discussions, and offer company insights. The extension offers features such as job card display, company reputation checks, quick company information lookup, job and company data storage, job and company tagging, data analysis, data sharing, personal job preferences, automation tasks, discussion forums, data backup and recovery, and data sharing plans. It supports platforms such as BOSS Zhipin (BOSS 直聘), 51job (前程无忧), Zhaopin (智联招聘), Lagou (拉钩网), and Liepin (猎聘网), and provides visualizations for job posting trends and company data.

Con-Nav-Item

Con-Nav-Item is a modern personal navigation system designed for digital workers. It is not just a link bookmark but also an all-in-one workspace integrated with AI smart generation, multi-device synchronization, card-based management, and deep browser integration.

ai-toolbox

AI Toolbox is a cross-platform desktop application designed to efficiently manage various AI programming assistant configurations. It supports Windows, macOS, and Linux. The tool provides visual management of OpenCode, Oh-My-OpenCode, Slim plugin configurations, Claude Code API supplier configurations, Codex CLI configurations, MCP server management, Skills management, WSL synchronization, AI supplier management, system tray for quick configuration switching, data backup, theme switching, multilingual support, and automatic update checks.

LunaBox

LunaBox is a lightweight, fast, and feature-rich tool for managing and tracking visual novels, with the ability to customize game categories, automatically track playtime, generate personalized reports through AI analysis, import data from other platforms, backup data locally or on cloud services, and ensure privacy and security by storing sensitive data locally. The tool supports multi-dimensional statistics, offers a variety of customization options, and provides a user-friendly interface for easy navigation and usage.

chromadb-chart

Chromadb-chart is a Kubernetes Chart for deploying a single-node Chroma AI application database on a Kubernetes cluster using the Helm package manager. It provides the ability to secure Chroma API with TLS, backup and restore index data, and monitor the cluster using Prometheus and Grafana. The chart configuration values allow customization of ChromaDB version, data persistence, telemetry, authentication, logging, image settings, and more. Users can verify installation, build Docker images, set up a Kubernetes cluster, and configure Chroma authentication using token or basic auth. The chart can also be used as a dependency in other charts, and extra config options are available for Rust server configurations.

For similar jobs

AirGo

AirGo is a simple, easy-to-use proxy service management system with a decoupled front end and back end, multi-user support, and multi-protocol support. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

* **Highly performant**: web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O
* **Ease of use**: user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing
* **Dynamic batching**: aggregate requests from different users for batched inference and distribute results back
* **Pipelined stages**: spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads
* **Cloud friendly**: designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems
* **Do one thing well**: focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains the shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services such as Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.
