agents

Trade autonomously on Polymarket using AI Agents

Stars: 60

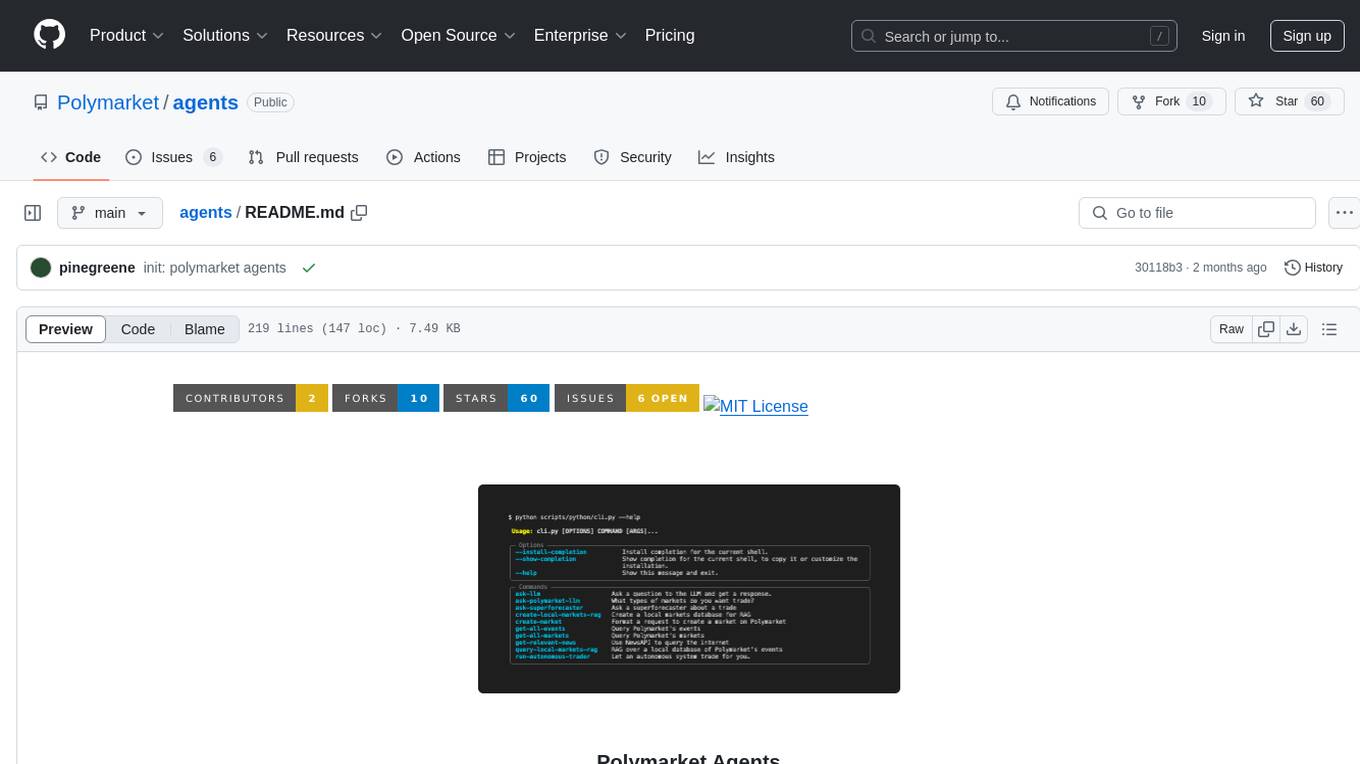

Polymarket Agents is a developer framework and set of utilities for building AI agents to trade autonomously on Polymarket. It integrates with Polymarket API, provides AI agent utilities for prediction markets, supports local and remote RAG, sources data from various services, and offers comprehensive LLM tools for prompt engineering. The architecture features modular components like APIs and scripts for managing local environments, server set-up, and CLI for end-user commands.

README:

Trade autonomously on Polymarket using AI Agents

Explore the docs »

View Demo

·

Report Bug

·

Request Feature

Polymarket Agents is a developer framework and set of utilities for building AI agents for Polymarket.

This code is free and publicly available under MIT License open source license (terms of service)!

- Integration with Polymarket API

- AI agent utilities for prediction markets

- Local and remote RAG (Retrieval-Augmented Generation) support

- Data sourcing from betting services, news providers, and web search

- Comphrehensive LLM tools for prompt engineering

This repo is inteded for use with Python 3.9

-

Clone the repository

git clone https://github.com/{username}/polymarket-agents.git cd polymarket-agents -

Create the virtual environment

virtualenv --python=python3.9 .venv -

Activate the virtual environment

- On Windows:

.venv\Scripts\activate- On macOS and Linux:

source .venv/bin/activate -

Install the required dependencies:

pip install -r requirements.txt -

Set up your environment variables:

- Create a

.envfile in the project root directory

cp .env.example .env- Add the following environment variables:

POLYGON_WALLET_PRIVATE_KEY="" OPENAI_API_KEY="" - Create a

-

Load your wallet with USDC.

-

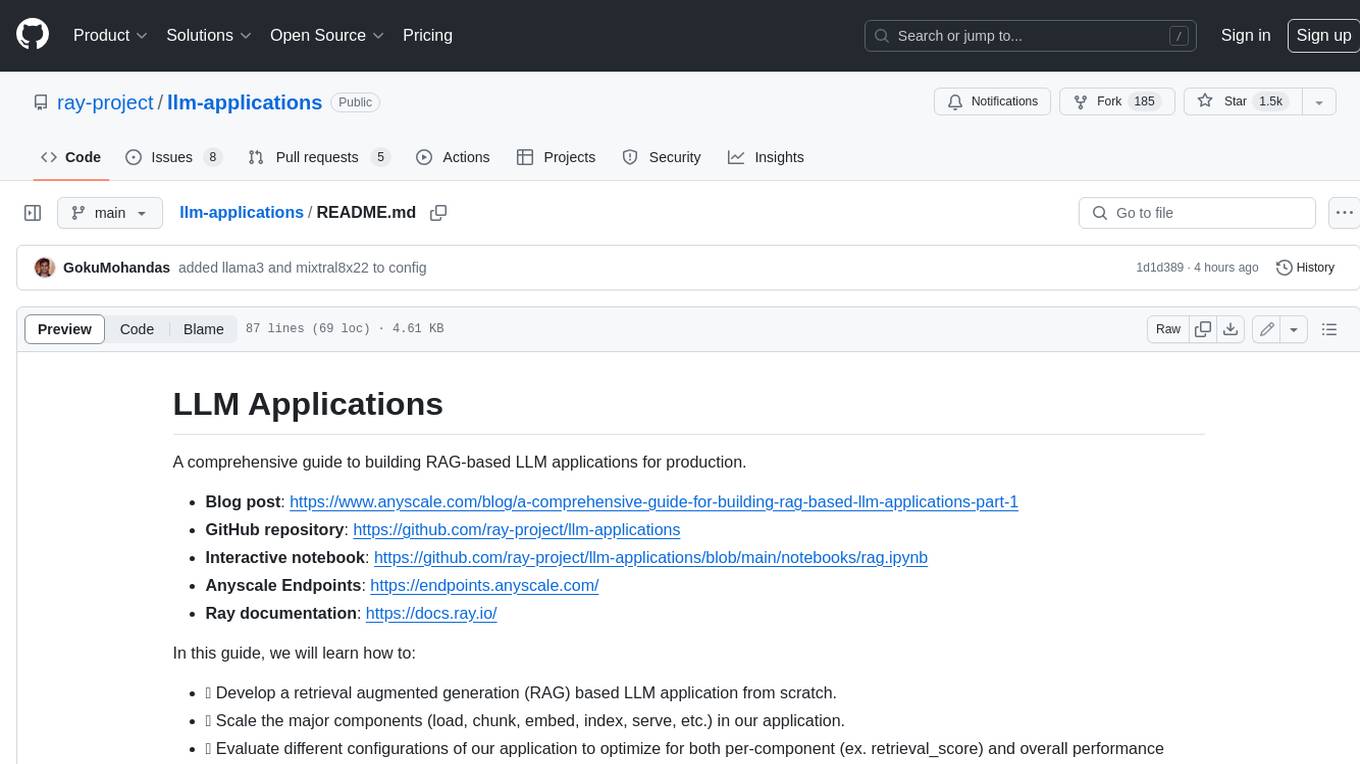

Try the command line interface...

python scripts/python/cli.pyOr just go trade!

python agents/application/trade.py -

Note: If running the command outside of docker, please set the following env var:

export PYTHONPATH="."If running with docker is preferred, we provide the following scripts:

./scripts/bash/build-docker.sh ./scripts/bash/run-docker-dev.sh

The Polymarket Agents architecture features modular components that can be maintained and extended by individual community members.

Polymarket Agents connectors standardize data sources and order types.

-

Chroma.py: chroma DB for vectorizing news sources and other API data. Developers are able to add their own vector database implementations. -

Gamma.py: definesGammaMarketClientclass, which interfaces with the Polymarket Gamma API to fetch and parse market and event metadata. Methods to retrieve current and tradable markets, as well as defined information on specific markets and events. -

Polymarket.py: defines a Polymarket class that interacts with the Polymarket API to retrieve and manage market and event data, and to execute orders on the Polymarket DEX. It includes methods for API key initialization, market and event data retrieval, and trade execution. The file also provides utility functions for building and signing orders, as well as examples for testing API interactions. -

Objects.py: data models using Pydantic; representations for trades, markets, events, and related entities.

Files for managing your local environment, server set-up to run the application remotely, and cli for end-user commands.

cli.py is the primary user interface for the repo. Users can run various commands to interact with the Polymarket API, retrieve relevant news articles, query local data, send data/prompts to LLMs, and execute trades in Polymarkets.

Commands should follow this format:

python scripts/python/cli.py command_name [attribute value] [attribute value]

Example:

get_all_markets

Retrieve and display a list of markets from Polymarket, sorted by volume.

python scripts/python/cli.py get_all_markets --limit <LIMIT> --sort-by <SORT_BY>

- limit: The number of markets to retrieve (default: 5).

- sort_by: The sorting criterion, either volume (default) or another valid attribute.

If you would like to contribute to this project, please follow these steps:

- Fork the repository.

- Create a new branch.

- Make your changes.

- Submit a pull request.

Please run pre-commit hooks before making contributions. To initialize them:

pre-commit install

- py-clob-client: Python client for the Polymarket CLOB

- python-order-utils: Python utilities to generate and sign orders from Polymarket's CLOB

- Polymarket CLOB client: Typescript client for Polymarket CLOB

- Langchain: Utility for building context-aware reasoning applications

- Chroma: Chroma is an AI-native open-source vector database

- Prediction Markets: Bottlenecks and the Next Major Unlocks, Mikey 0x: https://mirror.xyz/1kx.eth/jnQhA56Kx9p3RODKiGzqzHGGEODpbskivUUNdd7hwh0

- The promise and challenges of crypto + AI applications, Vitalik Buterin: https://vitalik.eth.limo/general/2024/01/30/cryptoai.html

- Superforecasting: How to Upgrade Your Company's Judgement, Schoemaker and Tetlock: https://hbr.org/2016/05/superforecasting-how-to-upgrade-your-companys-judgment

This project is licensed under the MIT License. See the LICENSE file for details.

For any questions or inquiries, please contact [email protected] or reach out at www.greenestreet.xyz

Enjoy using the CLI application! If you encounter any issues, feel free to open an issue on the repository.

Terms of Service prohibit US persons and persons from certain other jurisdictions from trading on Polymarket (via UI & API and including agents developed by persons in restricted jurisdictions), although data and information is viewable globally.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for agents

Similar Open Source Tools

agents

Polymarket Agents is a developer framework and set of utilities for building AI agents to trade autonomously on Polymarket. It integrates with Polymarket API, provides AI agent utilities for prediction markets, supports local and remote RAG, sources data from various services, and offers comprehensive LLM tools for prompt engineering. The architecture features modular components like APIs and scripts for managing local environments, server set-up, and CLI for end-user commands.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

RepoAgent

RepoAgent is an LLM-powered framework designed for repository-level code documentation generation. It automates the process of detecting changes in Git repositories, analyzing code structure through AST, identifying inter-object relationships, replacing Markdown content, and executing multi-threaded operations. The tool aims to assist developers in understanding and maintaining codebases by providing comprehensive documentation, ultimately improving efficiency and saving time.

langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

agent-service-toolkit

The AI Agent Service Toolkit is a comprehensive toolkit designed for running an AI agent service using LangGraph, FastAPI, and Streamlit. It includes a LangGraph agent, a FastAPI service, a client for interacting with the service, and a Streamlit app for providing a chat interface. The project offers a template for building and running agents with the LangGraph framework, showcasing a complete setup from agent definition to user interface. Key features include LangGraph Agent with latest features, FastAPI Service, Advanced Streaming support, Streamlit Interface, Multiple Agent Support, Asynchronous Design, Content Moderation, RAG Agent implementation, Feedback Mechanism, Docker Support, and Testing. The repository structure includes directories for defining agents, protocol schema, core modules, service, client, Streamlit app, and tests.

Revornix

Revornix is an information management tool designed for the AI era. It allows users to conveniently integrate all visible information and generates comprehensive reports at specific times. The tool offers cross-platform availability, all-in-one content aggregation, document transformation & vectorized storage, native multi-tenancy, localization & open-source features, smart assistant & built-in MCP, seamless LLM integration, and multilingual & responsive experience for users.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

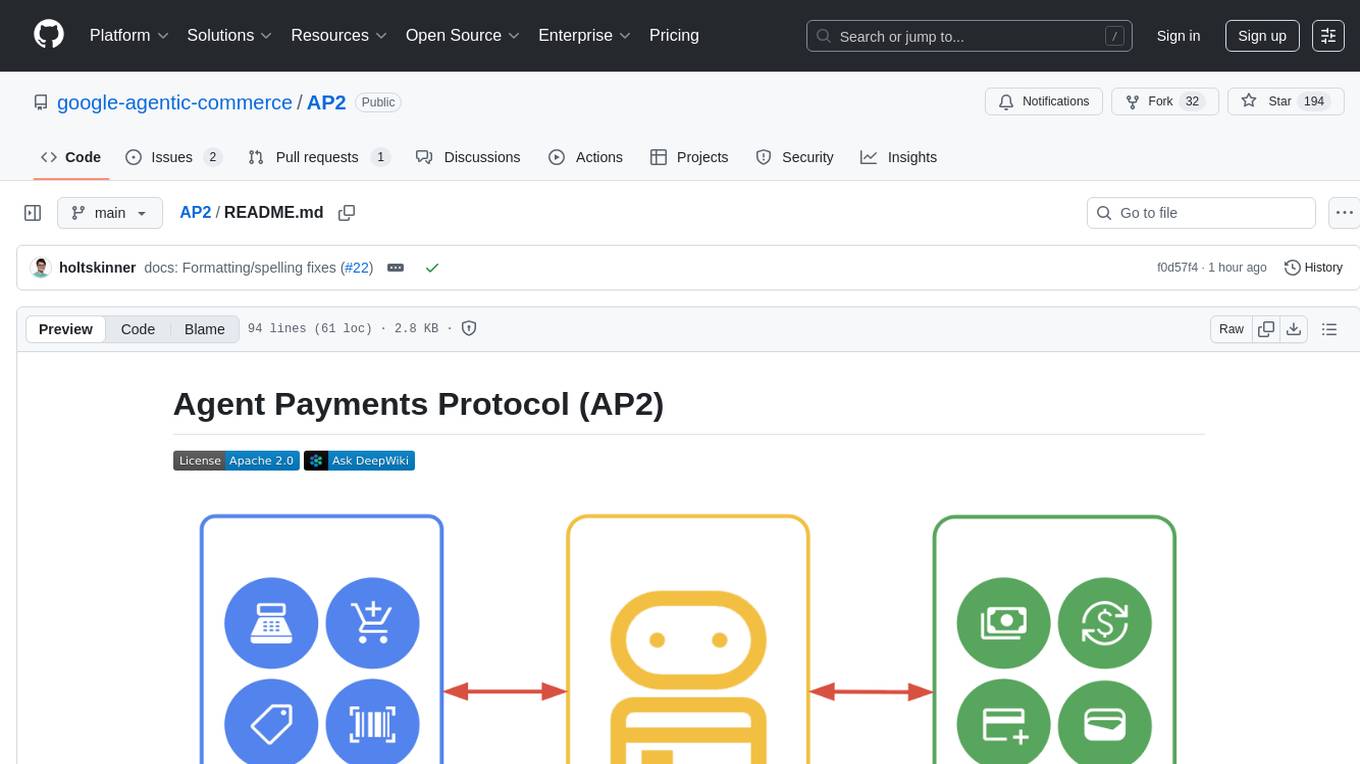

AP2

The Agent Payments Protocol (AP2) repository contains code samples and demos showcasing the protocol. It includes curated scenarios demonstrating key components, utilizing the Agent Development Kit (ADK) and Gemini 2.5 Flash. Users are free to use any tools to build agents. The repository features various agents and servers, with source code located in specific directories. Users can run scenarios by following README instructions and using run scripts. Additionally, the repository provides guidance on setting up prerequisites, obtaining a Google API key, and installing the AP2 types package.

DevDocs

DevDocs is a platform designed to simplify the process of digesting technical documentation for software engineers and developers. It automates the extraction and conversion of web content into markdown format, making it easier for users to access and understand the information. By crawling through child pages of a given URL, DevDocs provides a streamlined approach to gathering relevant data and integrating it into various tools for software development. The tool aims to save time and effort by eliminating the need for manual research and content extraction, ultimately enhancing productivity and efficiency in the development process.

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

GraphRAG-Local-UI

GraphRAG Local with Interactive UI is an adaptation of Microsoft's GraphRAG, tailored to support local models and featuring a comprehensive interactive user interface. It allows users to leverage local models for LLM and embeddings, visualize knowledge graphs in 2D or 3D, manage files, settings, and queries, and explore indexing outputs. The tool aims to be cost-effective by eliminating dependency on costly cloud-based models and offers flexible querying options for global, local, and direct chat queries.

codebox-api

CodeBox is a cloud infrastructure tool designed for running Python code in an isolated environment. It also offers simple file input/output capabilities and will soon support vector database operations. Users can install CodeBox using pip and utilize it by setting up an API key. The tool allows users to execute Python code snippets and interact with the isolated environment. CodeBox is currently in early development stages and requires manual handling for certain operations like refunds and cancellations. The tool is open for contributions through issue reporting and pull requests. It is licensed under MIT and can be contacted via email at [email protected].

TaxHacker

TaxHacker is a self-hosted accountant app designed for freelancers and small businesses to automate expense and income tracking using the power of GenAI. It can analyze uploaded photos, receipts, or PDFs to extract important data like name, total amount, date, merchant, and VAT, saving them as structured transactions. The tool supports automatic currency conversion, filters, multiple projects, import-export functionalities, custom categories, and allows users to create custom fields for extraction. TaxHacker simplifies reporting and tax filing by organizing and storing data efficiently.

helix-db

HelixDB is a database designed specifically for AI applications, providing a single platform to manage all components needed for AI applications. It supports graph + vector data model and also KV, documents, and relational data. Key features include built-in tools for MCP, embeddings, knowledge graphs, RAG, security, logical isolation, and ultra-low latency. Users can interact with HelixDB using the Helix CLI tool and SDKs in TypeScript and Python. The roadmap includes features like organizational auth, server code improvements, 3rd party integrations, educational content, and binary quantisation for better performance. Long term projects involve developing in-house tools for knowledge graph ingestion, graph-vector storage engine, and network protocol & serdes libraries.

BotServer

General Bot is a chat bot server that accelerates bot development by providing code base, resources, deployment to the cloud, and templates for creating new bots. It allows modification of bot packages without code through a database and service backend. Users can develop bot packages using custom code in editors like Visual Studio Code, Atom, or Brackets. The tool supports creating bots by copying and pasting files and using favorite tools from Office or Photoshop. It also enables building custom dialogs with BASIC for extending bots.

llm-applications

A comprehensive guide to building Retrieval Augmented Generation (RAG)-based LLM applications for production. This guide covers developing a RAG-based LLM application from scratch, scaling the major components, evaluating different configurations, implementing LLM hybrid routing, serving the application in a highly scalable and available manner, and sharing the impacts LLM applications have had on products.

For similar tasks

agents

Polymarket Agents is a developer framework and set of utilities for building AI agents to trade autonomously on Polymarket. It integrates with Polymarket API, provides AI agent utilities for prediction markets, supports local and remote RAG, sources data from various services, and offers comprehensive LLM tools for prompt engineering. The architecture features modular components like APIs and scripts for managing local environments, server set-up, and CLI for end-user commands.

tradecat

TradeCat is a comprehensive data analysis and trading platform designed for cryptocurrency, stock, and macroeconomic data. It offers a wide range of features including multi-market data collection, technical indicator modules, AI analysis, signal detection engine, Telegram bot integration, and more. The platform utilizes technologies like Python, TimescaleDB, TA-Lib, Pandas, NumPy, and various APIs to provide users with valuable insights and tools for trading decisions. With a modular architecture and detailed documentation, TradeCat aims to empower users in making informed trading decisions across different markets.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.