Best AI tools for< Serve Models >

20 - AI tool Sites

vLLM

vLLM is a fast and easy-to-use library for LLM inference and serving. It offers state-of-the-art serving throughput, efficient management of attention key and value memory, continuous batching of incoming requests, fast model execution with CUDA/HIP graph, and various decoding algorithms. The tool is flexible with seamless integration with popular HuggingFace models, high-throughput serving, tensor parallelism support, and streaming outputs. It supports NVIDIA GPUs and AMD GPUs, Prefix caching, and Multi-lora. vLLM is designed to provide fast and efficient LLM serving for everyone.

Backend.AI

Backend.AI is an enterprise-scale cluster backend for AI frameworks that offers scalability, GPU virtualization, HPC optimization, and DGX-Ready software products. It provides a fast and efficient way to build, train, and serve AI models of any type and size, with flexible infrastructure options. Backend.AI aims to optimize backend resources, reduce costs, and simplify deployment for AI developers and researchers. The platform integrates seamlessly with existing tools and offers fractional GPU usage and pay-as-you-play model to maximize resource utilization.

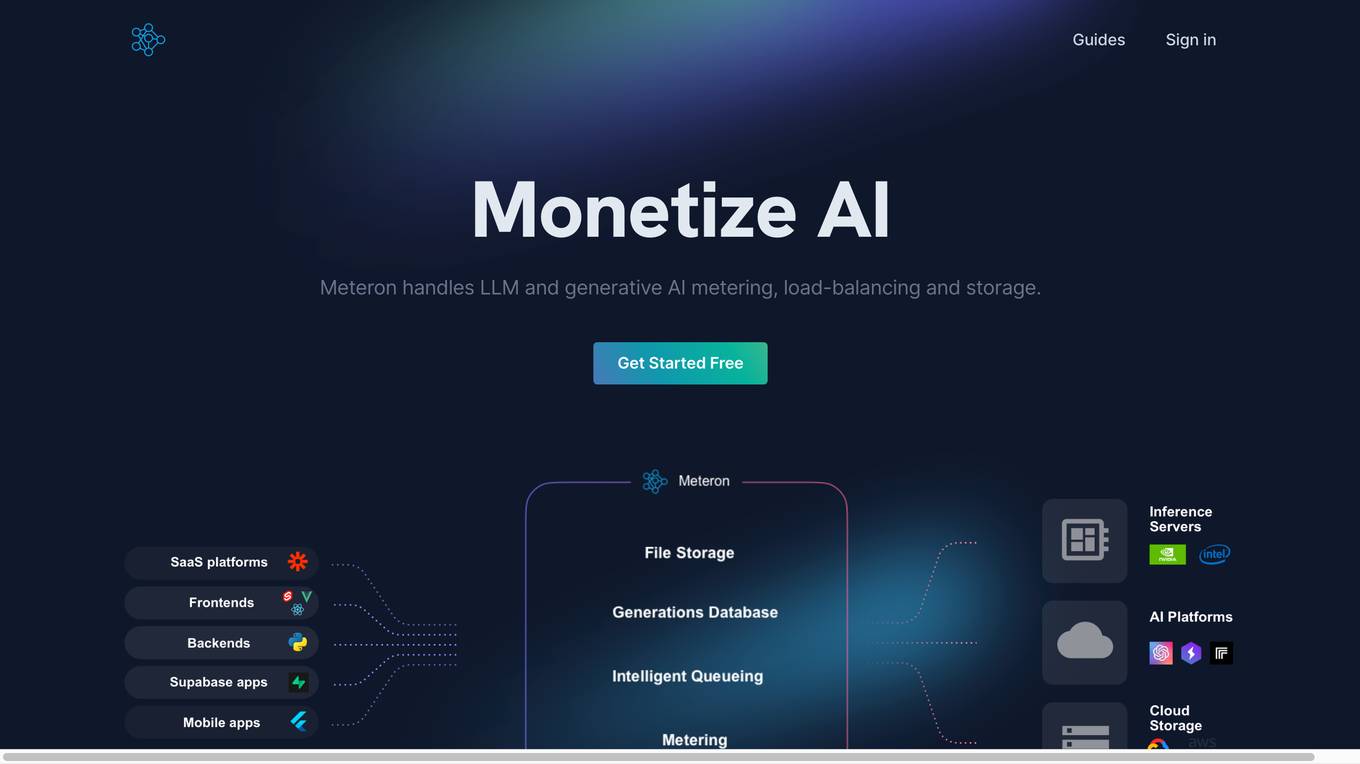

Meteron AI

Meteron AI is an all-in-one AI toolset that helps developers build AI-powered products faster and easier. It provides a simple, yet powerful metering mechanism, elastic scaling, unlimited storage, and works with any model. With Meteron, developers can focus on building AI products instead of worrying about the underlying infrastructure.

BentoML

BentoML is a platform for software engineers to build, ship, and scale AI products. It provides a unified AI application framework that makes it easy to manage and version models, create service APIs, and build and run AI applications anywhere. BentoML is used by over 1000 organizations and has a global community of over 3000 members.

Baseten

Baseten is a machine learning infrastructure that provides a unified platform for data scientists and engineers to build, train, and deploy machine learning models. It offers a range of features to simplify the ML lifecycle, including data preparation, model training, and deployment. Baseten also provides a marketplace of pre-built models and components that can be used to accelerate the development of ML applications.

Anyscale

Anyscale is a company that provides a scalable compute platform for AI and Python applications. Their platform includes a serverless API for serving and fine-tuning open LLMs, a private cloud solution for data privacy and governance, and an open source framework for training, batch, and real-time workloads. Anyscale's platform is used by companies such as OpenAI, Uber, and Spotify to power their AI workloads.

Seldon

Seldon is an MLOps platform that helps enterprises deploy, monitor, and manage machine learning models at scale. It provides a range of features to help organizations accelerate model deployment, optimize infrastructure resource allocation, and manage models and risk. Seldon is trusted by the world's leading MLOps teams and has been used to install and manage over 10 million ML models. With Seldon, organizations can reduce deployment time from months to minutes, increase efficiency, and reduce infrastructure and cloud costs.

Tecton

Tecton is an AI data platform that helps build smarter AI applications by simplifying feature engineering, generating training data, serving real-time data, and enhancing AI models with context-rich prompts. It automates data pipelines, improves model accuracy, and lowers production costs, enabling faster deployment of AI models. Tecton abstracts away data complexity, provides a developer-friendly experience, and allows users to create features from any source. Trusted by top engineering teams, Tecton streamlines ML delivery processes, improves customer interactions, and automates release processes through CI/CD pipelines.

Predibase

Predibase is a platform for fine-tuning and serving Large Language Models (LLMs). It provides a cost-effective and efficient way to train and deploy LLMs for a variety of tasks, including classification, information extraction, customer sentiment analysis, customer support, code generation, and named entity recognition. Predibase is built on proven open-source technology, including LoRAX, Ludwig, and Horovod.

AiPlus

AiPlus is an AI tool designed to serve as a cost-efficient model gateway. It offers users a platform to access and utilize various AI models for their projects and tasks. With AiPlus, users can easily integrate AI capabilities into their applications without the need for extensive development or resources. The tool aims to streamline the process of leveraging AI technology, making it accessible to a wider audience.

Radicalbit

Radicalbit is an MLOps and AI Observability platform that helps businesses deploy, serve, observe, and explain their AI models. It provides a range of features to help data teams maintain full control over the entire data lifecycle, including real-time data exploration, outlier and drift detection, and model monitoring in production. Radicalbit can be seamlessly integrated into any ML stack, whether SaaS or on-prem, and can be used to run AI applications in minutes.

Humley

Humley is a Conversational AI platform that allows users to build and launch AI assistants in under an hour. The platform provides a no-code environment for creating self-serve experiences and managing AI outputs. Humley aims to revolutionize customer experiences and boost efficiencies by making Conversational AI accessible and safe for all users. With features like Knowledge Search, Build Flows, Integrate with Systems, Capture Feedback, and Multi-Channel Support, Humley Studio offers a comprehensive toolkit for creating engaging conversational experiences. The platform empowers businesses to deliver exceptional customer service, streamline access to AI models, and improve operational efficiencies.

Modal

Modal is a high-performance cloud platform designed for developers, AI data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

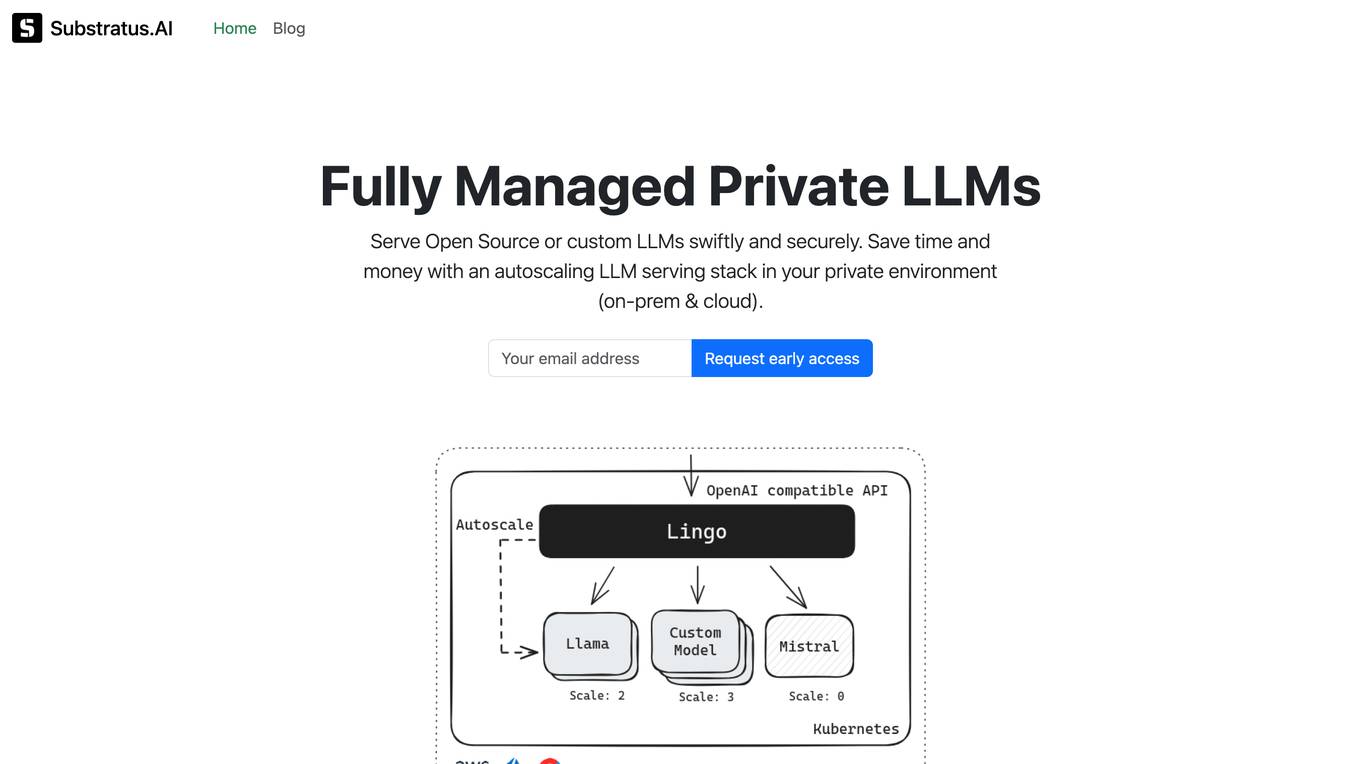

Substratus.AI

Substratus.AI is a fully managed private LLMs platform that allows users to serve LLMs (Llama and Mistral) in their own cloud account. It enables users to keep control of their data while reducing OpenAI costs by up to 10x. With Substratus.AI, users can utilize LLMs in production in hours instead of weeks, making it a convenient and efficient solution for AI model deployment.

Intuz

Intuz is an AI, IoT, Mobile & Web Development Services Company that empowers businesses with AI-enabled solutions. They have delivered over 1700 successful projects to enterprise, SMEs, and Fortune 500 clients globally. Intuz offers services such as AI development, IoT solutions, mobile app development, web applications, and enterprise app development. They serve various industries with use-case specific AI and IoT services, including Consumer Electronics, EV & Green Energy, Smart Manufacturing, Fleet Management & Supply Chain, and Smart Factory & Plants.

Empower

Empower is a serverless fine-tuned LLM hosting platform that offers a developer platform for fine-tuned LLMs. It provides prebuilt task-specific base models with GPT4 level response quality, enabling users to save up to 80% on LLM bills with just 5 lines of code change. Empower allows users to own their models, offers cost-effective serving with no compromise on performance, and charges on a per-token basis. The platform is designed to be user-friendly, efficient, and cost-effective for deploying and serving fine-tuned LLMs.

Dappier

Dappier is a platform that enables publishers and AI developers to monetize their content by creating branded AI agents and syndicating trusted, rights-cleared data. Users can easily connect their data sources, transform content for AI interaction, and launch custom AI agents for natural search and content recommendations. The platform offers a self-serve fine-tuning platform and a marketplace for syndicating content to AI developers, enabling users to generate new revenue streams. Dappier prioritizes data security and privacy, ensuring that training data is never shared with external parties.

Amazon Q in QuickSight

Amazon Q in QuickSight is a generative BI assistant that makes it easy to build and consume insights. With Amazon Q, BI users can build, discover, and share actionable insights and narratives in seconds using intuitive natural language experiences. Analysts can quickly build visuals and calculations and refine visuals using natural language. Business users can self-serve data and insights using natural language. Amazon Q is built with security and privacy in mind. It can understand and respect your existing governance identities, roles, and permissions and use this information to personalize its interactions. If a user doesn't have permission to access certain data without Amazon Q, they can't access it using Amazon Q either. Amazon Q in QuickSight is designed to meet the most stringent enterprise requirements from day one—none of your data or Amazon Q inputs and outputs are used to improve underlying models of Amazon Q for anyone but you.

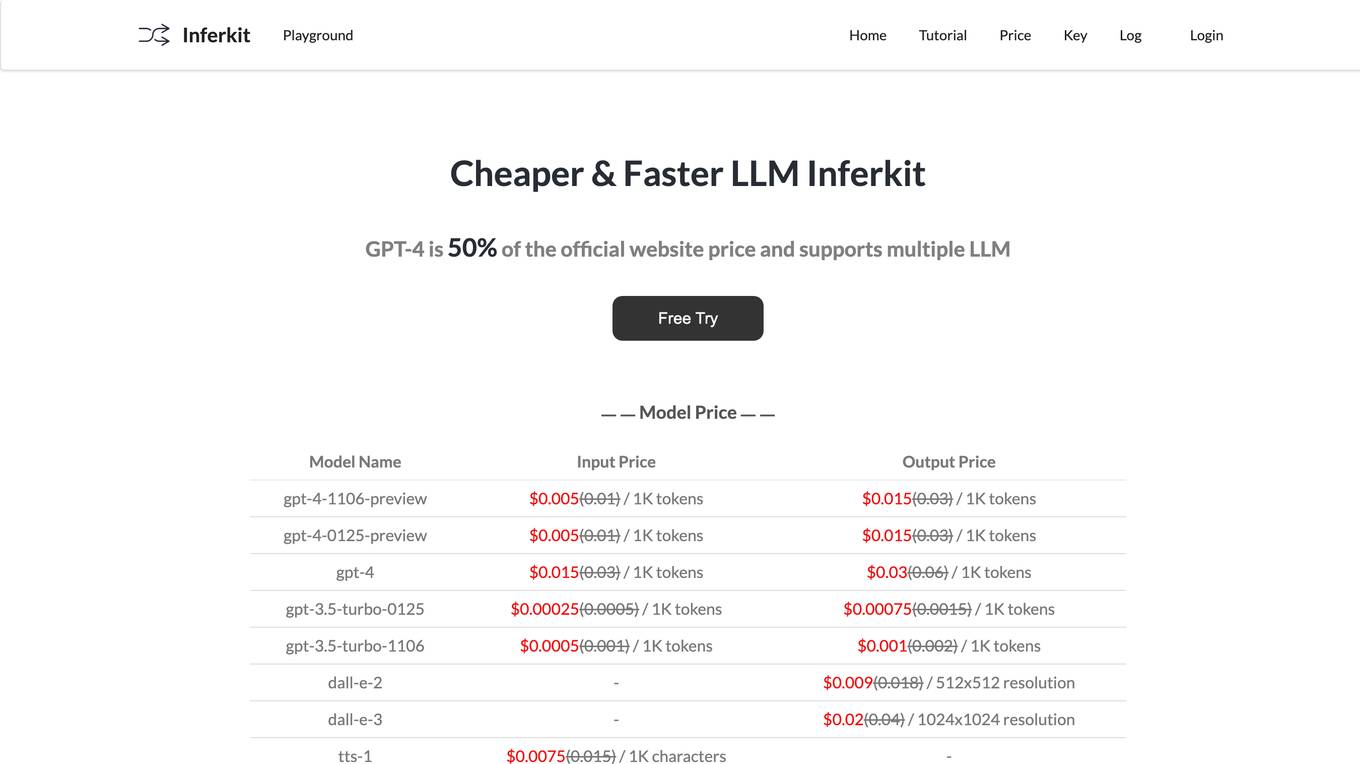

Inferkit AI

Inferkit AI is an AI tool that offers a cheaper and faster LLM router. It provides users with the ability to generate text content efficiently and cost-effectively. The tool is designed to assist users in creating various types of written content, such as articles, stories, and more, by leveraging advanced language models. Inferkit AI aims to streamline the content creation process and enhance productivity for individuals and businesses alike.

EnterpriseAI

EnterpriseAI is an advanced computing platform that focuses on the intersection of high-performance computing (HPC) and artificial intelligence (AI). The platform provides in-depth coverage of the latest developments, trends, and innovations in the AI-enabled computing landscape. EnterpriseAI offers insights into various sectors such as financial services, government, healthcare, life sciences, energy, manufacturing, retail, and academia. The platform covers a wide range of topics including AI applications, security, data storage, networking, and edge/IoT technologies.

15 - Open Source AI Tools

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

instill-core

Instill Core is an open-source orchestrator comprising a collection of source-available projects designed to streamline every aspect of building versatile AI features with unstructured data. It includes Instill VDP (Versatile Data Pipeline) for unstructured data, AI, and pipeline orchestration, Instill Model for scalable MLOps and LLMOps for open-source or custom AI models, and Instill Artifact for unified unstructured data management. Instill Core can be used for tasks such as building, testing, and sharing pipelines, importing, serving, fine-tuning, and monitoring ML models, and transforming documents, images, audio, and video into a unified AI-ready format.

truss-examples

Truss is the simplest way to serve AI/ML models in production. This repository provides dozens of example models, each ready to deploy as-is or adapt to your needs. To get started, clone the repository, install Truss, and pick a model to deploy by passing a path to that model. Truss will prompt you for an API Key, which can be obtained from the Baseten API keys page. Invocation depends on the model's input and output specifications. Refer to individual model READMEs for invocation details. Contributions of new models and improvements to existing models are welcome. See CONTRIBUTING.md for details.

inference

Xorbits Inference (Xinference) is a powerful and versatile library designed to serve language, speech recognition, and multimodal models. With Xorbits Inference, you can effortlessly deploy and serve your or state-of-the-art built-in models using just a single command. Whether you are a researcher, developer, or data scientist, Xorbits Inference empowers you to unleash the full potential of cutting-edge AI models.

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

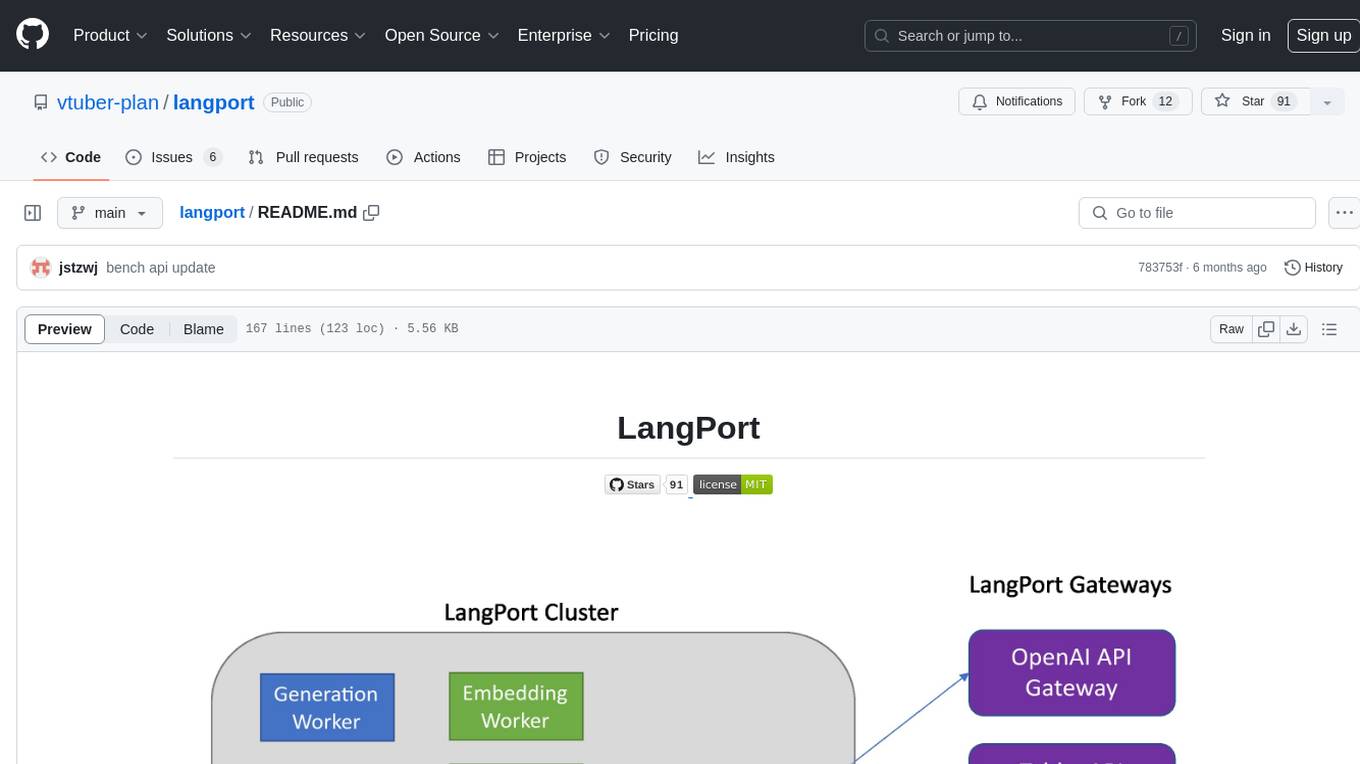

langport

LangPort is an open-source platform for serving large language models. It aims to provide a super fast LLM inference service with core features including Huggingface transformers support, distributed serving system, streaming generation, batch inference, and support for various model architectures. It offers compatibility with OpenAI, FauxPilot, HuggingFace, and Tabby APIs. The project supports model architectures like LLaMa, GLM, GPT2, and GPT Neo, and has been tested with models such as NingYu, Vicuna, ChatGLM, and WizardLM. LangPort also provides features like dynamic batch inference, int4 quantization, and generation logprobs parameter.

EasyLM

EasyLM is a one-stop solution for pre-training, fine-tuning, evaluating, and serving large language models in JAX/Flax. It simplifies the process by leveraging JAX's pjit functionality to scale up training to multiple TPU/GPU accelerators. Built on top of Huggingface's transformers and datasets, EasyLM offers an easy-to-use and customizable codebase for training large language models without the complexity found in other frameworks. It supports sharding model weights and training data across multiple accelerators, enabling multi-TPU/GPU training on a single host or across multiple hosts on Google Cloud TPU Pods. EasyLM currently supports models like LLaMA, LLaMA 2, and LLaMA 3.

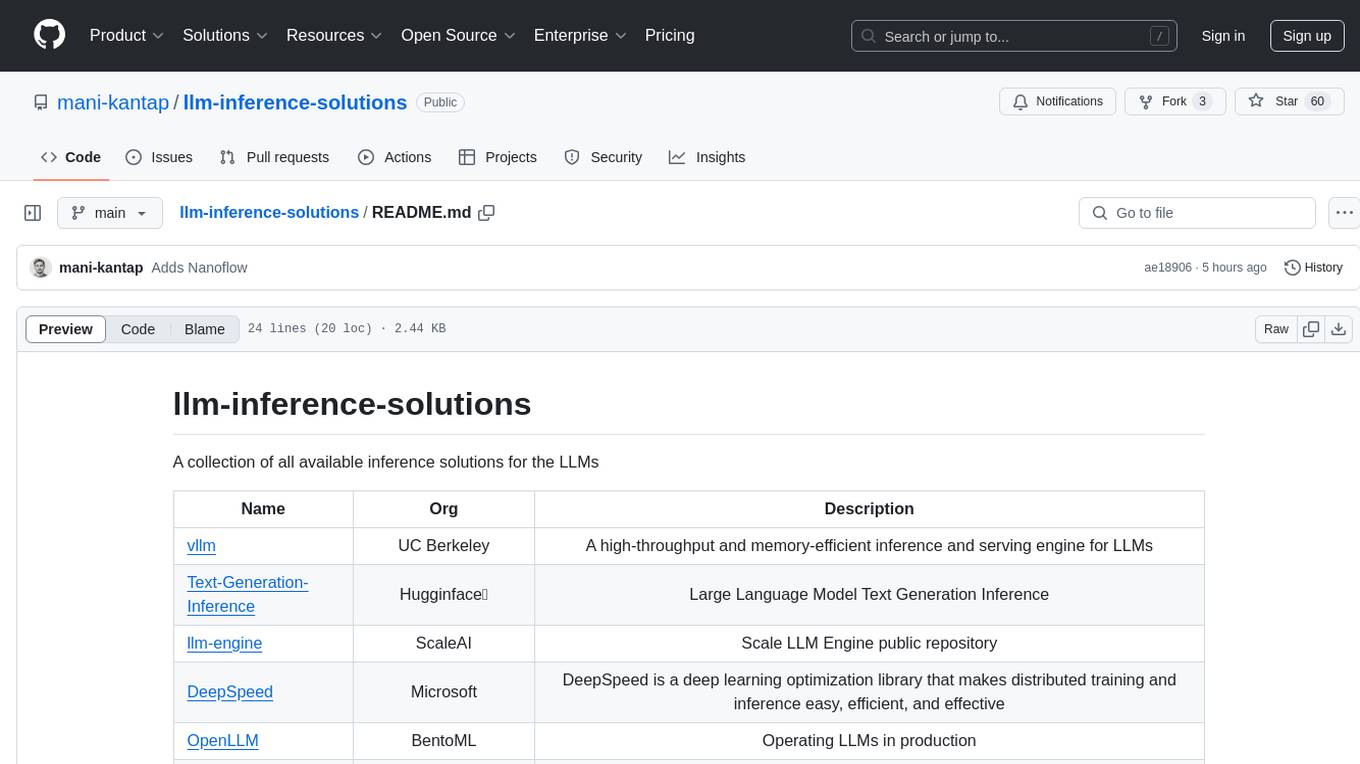

llm-inference-solutions

A collection of available inference solutions for Large Language Models (LLMs) including high-throughput engines, optimization libraries, deployment toolkits, and deep learning frameworks for production environments.

aphrodite-engine

Aphrodite is the official backend engine for PygmalionAI, serving as the inference endpoint for the website. It allows serving Hugging Face-compatible models with fast speeds. Features include continuous batching, efficient K/V management, optimized CUDA kernels, quantization support, distributed inference, and 8-bit KV Cache. The engine requires Linux OS and Python 3.8 to 3.12, with CUDA >= 11 for build requirements. It supports various GPUs, CPUs, TPUs, and Inferentia. Users can limit GPU memory utilization and access full commands via CLI.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

clearml

ClearML is an auto-magical suite of tools designed to streamline AI workflows. It includes modules for experiment management, MLOps/LLMOps, data management, model serving, and more. ClearML offers features like experiment tracking, model serving, orchestration, and automation. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm for remote debugging. ClearML aims to simplify collaboration, automate processes, and enhance visibility in AI projects.

ipex-llm

The `ipex-llm` repository is an LLM acceleration library designed for Intel GPU, NPU, and CPU. It provides seamless integration with various models and tools like llama.cpp, Ollama, HuggingFace transformers, LangChain, LlamaIndex, vLLM, Text-Generation-WebUI, DeepSpeed-AutoTP, FastChat, Axolotl, and more. The library offers optimizations for over 70 models, XPU acceleration, and support for low-bit (FP8/FP6/FP4/INT4) operations. Users can run different models on Intel GPUs, NPU, and CPUs with support for various features like finetuning, inference, serving, and benchmarking.

ai-platform-engineering

The AI Platform Engineering repository provides a collection of tools and resources for building and deploying AI models. It includes libraries for data preprocessing, model training, and model serving. The repository also contains example code and tutorials to help users get started with AI development. Whether you are a beginner or an experienced AI engineer, this repository offers valuable insights and best practices to streamline your AI projects.

ai-accelerator

The AI Accelerator project source code is designed to initialize an OpenShift cluster with a recommended set of operators and components for training, deploying, serving, and monitoring Machine Learning models. It provides core OpenShift features for Data Science environments and can be customized for specific scenarios. The project automates IT infrastructure using GitOps practices, including Git, code review, and CI/CD. ArgoCD Application objects are used to manage the installation of operators on the cluster.

amd-shark-ai

The amdshark-ai repository contains the amdshark Modeling and Serving Libraries, which include sub-projects like shortfin for high performance inference, amdsharktank for model recipes and conversion tools, and amdsharktuner for tuning program performance. Developers can find API documentation, programming guides, and support matrix for various models within the repository.

20 - OpenAI Gpts

Create A Business Model Canvas For Your Business

Let's get started by telling me about your business: What do you offer? Who do you serve? ------------------------------------------------------- Need help Prompt Engineering? Reach out on LinkedIn: StephenHnilica

Il King del Fantacalcio - Esperto di Serie A

Analisi dettagliate e statistiche per il fantacalcio. Strategie, formazioni vincenti, e suggerimenti di mercato per la Serie A. Perfetto per chi cerca il podio nel proprio campionato. Aggiornamenti continui sui giocatori, performance e infortuni. Tutto quello che serve per la tua squadra ideale

Buildwell AI - UK Construction Regs Assistant

Provides Construction Support relating to Planning Permission, Building Regulations, Party Wall Act and Fire Safety in the UK. Obtain instant Guidance for your Construction Project.

World Animals Flight Attendant Uniform

Enjoy the world of anthropomorphic animals and enjoy a banquet in flight attendant uniforms

SQL Server assistant

Expert in SQL Server for database management, optimization, and troubleshooting.

Baci's AI Server

An AI waiter for Baci Bistro & Bar, knowledgeable about the menu and ready to assist.

Software expert

Server admin expert in cPanel, Softaculous, WHM, WordPress, and Elementor Pro.

アダチさん13号(SQLServer篇)

安達孝一さんがSE時代に蓄積してきた、SQL Serverのナレッジやノウハウ等 (SQL Server 2000/2005/2008/2012) について、ご質問頂けます。また、対話内容を基に、ChatGPT(GPT-4)向けの、汎用的な質問文例も作成できます。

CraftGPT

Your expert Minecraft server Java plugin assistant. Whether you're learning the ropes or are an experienced developer, I'm here to help you with Java concepts, coding examples, and any queries you have about Minecraft plugin development.

Gourmet GPT

As a high-class server, I describe dishes with luxury and elegance. Just upload your picture!

Bun Nook Kit App Builder

Expert in BNK server setup, typesafe routes, htmlody, and creating SQLite schemas with BNK.