buster

Buster is an open-source platform for deploying AI data analysts

Stars: 449

Buster is a modern analytics platform designed with AI in mind, focusing on self-serve experiences powered by Large Language Models. It addresses pain points in existing tools by advocating for AI-centric app development, cost-effective data warehousing, improved CI/CD processes, and empowering data teams to create powerful, user-friendly data experiences. The platform aims to revolutionize AI analytics by enabling data teams to build deep integrations and own their entire analytics stack.

README:

A modern data analytics platform that enables teams to interact with their data through AI-powered conversations, beautiful dashboards, and automated insights.

- Node.js 20+

- pnpm 9+

- PostgreSQL (via Supabase local or cloud)

- Clone the repository

git clone https://github.com/buster-so/buster.git

cd buster- Install dependencies

pnpm install- Set up environment variables

cp .env.example .env

# Edit .env with your configuration- Start development servers

turbo devThe application will be available at:

- Web app: http://localhost:3000

- API server: http://localhost:8080

Buster follows a modular monorepo architecture where:

- Packages are standalone building blocks containing reusable logic and types

- Apps assemble packages to create user-facing applications

| App | Description | Stack |

|---|---|---|

@buster-app/web |

Main web application | TanStack Start, React |

@buster-app/server |

API server | Node.js, Hono |

@buster-app/trigger |

Background job processor | Trigger.dev v3 |

@buster-app/cli |

Command-line interface | TypeScript, Bun |

@buster-app/electric-server |

Real-time sync server | Electric SQL |

@buster-app/api |

Legacy API (being migrated) | Rust (deprecated) |

| Package | Purpose |

|---|---|

@buster/database |

Database schema, migrations, and ALL query logic (Drizzle ORM) |

@buster/server-shared |

API contracts, request/response types |

@buster/data-source |

Customer database connectors (PostgreSQL, MySQL, BigQuery, Snowflake) |

@buster/ai |

AI agents and workflows (AI SDK v5) |

@buster/access-controls |

Permission and security logic |

All development commands use Turbo for optimal caching and parallelization:

# Building

turbo build # Build entire monorepo

turbo build --filter=@buster/ai # Build specific package

# Linting

turbo lint # Lint entire monorepo

turbo lint --filter=@buster-app/web # Lint specific app

# Testing

turbo test:unit # Run all unit tests

turbo test:unit --filter=@buster/database # Test specific package

# Development

turbo dev # Start all dev servers

turbo dev --filter=@buster-app/web # Start specific app- Write tests first - Follow test-driven development

-

Check types - Run

turbo buildto ensure type safety -

Lint code - Run

turbo lintto maintain code quality -

Run tests - Run

turbo test:unitbefore committing

Tests are colocated with source files:

-

file.test.ts- Unit tests -

file.int.test.ts- Integration tests

turbo test:unit # Fast, isolated unit tests

turbo test:integration # Tests with external dependenciesFor local development with Supabase:

# Start Supabase locally

npx supabase start

# Run migrations

turbo db:migrate

# Access database

psql "postgresql://postgres:[email protected]:54322/postgres"We follow functional programming principles:

- Pure functions over classes

- Immutable data structures

- Type-safe with Zod schemas

- Modular, composable code

- Create a feature branch

- Write tests for new functionality

- Ensure

turbo build lint test:unitpasses - Submit PR with clear description

- Address review feedback

- Each package/app has its own README.md

- CLAUDE.md files provide AI assistant guidance

- Keep documentation close to code

buster/

├── apps/ # User-facing applications

│ ├── web/ # Main web application

│ ├── server/ # API server

│ ├── trigger/ # Background jobs

│ └── cli/ # Command-line tools

├── packages/ # Reusable modules

│ ├── database/ # Database layer

│ ├── server-shared/ # API contracts

│ ├── data-source/ # Data connectors

│ └── ai/ # AI capabilities

├── turbo.json # Turbo configuration

├── package.json # Root package.json

└── .env.example # Environment template

- Frontend: React, TanStack Start, TailwindCSS

- Backend: Node.js, Hono, TypeScript

- Database: PostgreSQL (Supabase), Drizzle ORM

- AI: OpenAI, Anthropic, AI SDK v5

- Background Jobs: Trigger.dev v3

- Testing: Vitest

- Build: Turborepo, pnpm

Note: The Rust API (apps/api) is legacy code being migrated to TypeScript. All new development should use the TypeScript stack in apps/server.

[License information]

[Support channels and documentation links]

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for buster

Similar Open Source Tools

buster

Buster is a modern analytics platform designed with AI in mind, focusing on self-serve experiences powered by Large Language Models. It addresses pain points in existing tools by advocating for AI-centric app development, cost-effective data warehousing, improved CI/CD processes, and empowering data teams to create powerful, user-friendly data experiences. The platform aims to revolutionize AI analytics by enabling data teams to build deep integrations and own their entire analytics stack.

conduit

Conduit is an open-source, cross-platform mobile application for Open-WebUI, providing a native mobile experience for interacting with your self-hosted AI infrastructure. It supports real-time chat, model selection, conversation management, markdown rendering, theme support, voice input, file uploads, multi-modal support, secure storage, folder management, and tools invocation. Conduit offers multiple authentication flows and follows a clean architecture pattern with Riverpod for state management, Dio for HTTP networking, WebSocket for real-time streaming, and Flutter Secure Storage for credential management.

inspector

A developer tool for testing and debugging Model Context Protocol (MCP) servers. It allows users to test the compliance of their MCP servers with the latest MCP specs, supports various transports like STDIO, SSE, and Streamable HTTP, features an LLM Playground for testing server behavior against different models, provides comprehensive logging and error reporting for MCP server development, and offers a modern developer experience with multiple server connections and saved configurations. The tool is built using Next.js and integrates MCP capabilities, AI SDKs from OpenAI, Anthropic, and Ollama, and various technologies like Node.js, TypeScript, and Next.js.

zcf

ZCF (Zero-Config Claude-Code Flow) is a tool that provides zero-configuration, one-click setup for Claude Code with bilingual support, intelligent agent system, and personalized AI assistant. It offers an interactive menu for easy operations and direct commands for quick execution. The tool supports bilingual operation with automatic language switching and customizable AI output styles. ZCF also includes features like BMad Workflow for enterprise-grade workflow system, Spec Workflow for structured feature development, CCR (Claude Code Router) support for proxy routing, and CCometixLine for real-time usage tracking. It provides smart installation, complete configuration management, and core features like professional agents, command system, and smart configuration. ZCF is cross-platform compatible, supports Windows and Termux environments, and includes security features like dangerous operation confirmation mechanism.

ai-real-estate-assistant

AI Real Estate Assistant is a modern platform that uses AI to assist real estate agencies in helping buyers and renters find their ideal properties. It features multiple AI model providers, intelligent query processing, advanced search and retrieval capabilities, and enhanced user experience. The tool is built with a FastAPI backend and Next.js frontend, offering semantic search, hybrid agent routing, and real-time analytics.

aigne-doc-smith

AIGNE DocSmith is a powerful AI-driven documentation generation tool that automates the creation of detailed, structured, and multi-language documentation directly from source code. It intelligently analyzes codebase to generate a comprehensive document structure, populates content with high-quality AI-powered generation, supports seamless translation into 12+ languages, integrates with AIGNE Hub for large language models, offers Discuss Kit publishing, automatically updates documentation with source code changes, and allows for individual document optimization.

claude-code-settings

A repository collecting best practices for Claude Code settings and customization. It provides configuration files for customizing Claude Code's behavior and building an efficient development environment. The repository includes custom agents and skills for specific domains, interactive development workflow features, efficient development rules, and team workflow with Codex MCP. Users can leverage the provided configuration files and tools to enhance their development process and improve code quality.

ClaudeBar

ClaudeBar is a macOS menu bar application that monitors AI coding assistant usage quotas. It allows users to keep track of their usage of Claude, Codex, Gemini, GitHub Copilot, Antigravity, and Z.ai at a glance. The application offers multi-provider support, real-time quota tracking, multiple themes, visual status indicators, system notifications, auto-refresh feature, and keyboard shortcuts for quick access. Users can customize monitoring by toggling individual providers on/off and receive alerts when quota status changes. The tool requires macOS 15+, Swift 6.2+, and CLI tools installed for the providers to be monitored.

VASA-1-hack

VASA-1-hack is a repository containing the VASA implementation separated from EMOPortraits, with all components properly configured for standalone training. It provides detailed setup instructions, prerequisites, project structure, configuration details, running training modes, troubleshooting tips, monitoring training progress, development information, and acknowledgments. The repository aims to facilitate training volumetric avatar models with configurable parameters and logging levels for efficient debugging and testing.

qwen-code

Qwen Code is an open-source AI agent optimized for Qwen3-Coder, designed to help users understand large codebases, automate tedious work, and expedite the shipping process. It offers an agentic workflow with rich built-in tools, a terminal-first approach with optional IDE integration, and supports both OpenAI-compatible API and Qwen OAuth authentication methods. Users can interact with Qwen Code in interactive mode, headless mode, IDE integration, and through a TypeScript SDK. The tool can be configured via settings.json, environment variables, and CLI flags, and offers benchmark results for performance evaluation. Qwen Code is part of an ecosystem that includes AionUi and Gemini CLI Desktop for graphical interfaces, and troubleshooting guides are available for issue resolution.

quantcoder

QuantCoder is a local-first CLI tool that generates QuantConnect trading algorithms from academic research papers. It uses local LLMs for code generation, refinement, and error fixing. The tool does not require cloud API keys and offers models for reasoning, summarization, and chat. Users can interact with QuantCoder through interactive, CLI, programmatic, and autonomous modes. It also features an evolution mode inspired by AlphaEvolve, backtesting with detailed metrics, library building, and integration with QuantConnect for backtesting and deployment. The tool's architecture includes components for CLI, configuration management, NLP, multi-agent system, evolution engine, self-improving pipeline, library builder, and integration with QuantConnect MCP.

sim

Sim is a platform that allows users to build and deploy AI agent workflows quickly and easily. It provides cloud-hosted and self-hosted options, along with support for local AI models. Users can set up the application using Docker Compose, Dev Containers, or manual setup with PostgreSQL and pgvector extension. The platform utilizes technologies like Next.js, Bun, PostgreSQL with Drizzle ORM, Better Auth for authentication, Shadcn and Tailwind CSS for UI, Zustand for state management, ReactFlow for flow editor, Fumadocs for documentation, Turborepo for monorepo management, Socket.io for real-time communication, and Trigger.dev for background jobs.

MassGen

MassGen is a cutting-edge multi-agent system that leverages the power of collaborative AI to solve complex tasks. It assigns a task to multiple AI agents who work in parallel, observe each other's progress, and refine their approaches to converge on the best solution to deliver a comprehensive and high-quality result. The system operates through an architecture designed for seamless multi-agent collaboration, with key features including cross-model/agent synergy, parallel processing, intelligence sharing, consensus building, and live visualization. Users can install the system, configure API settings, and run MassGen for various tasks such as question answering, creative writing, research, development & coding tasks, and web automation & browser tasks. The roadmap includes plans for advanced agent collaboration, expanded model, tool & agent integration, improved performance & scalability, enhanced developer experience, and a web interface.

auto-engineer

Auto Engineer is a tool designed to automate the Software Development Life Cycle (SDLC) by building production-grade applications with a combination of human and AI agents. It offers a plugin-based architecture that allows users to install only the necessary functionality for their projects. The tool guides users through key stages including Flow Modeling, IA Generation, Deterministic Scaffolding, AI Coding & Testing Loop, and Comprehensive Quality Checks. Auto Engineer follows a command/event-driven architecture and provides a modular plugin system for specific functionalities. It supports TypeScript with strict typing throughout and includes a built-in message bus server with a web dashboard for monitoring commands and events.

airstore

Airstore is a filesystem for AI agents that adds any source of data into a virtual filesystem, allowing users to connect services like Gmail, GitHub, Linear, and more, and describe data needs in plain English. Results are presented as files that can be read by Claude Code. Features include smart folders for natural language queries, integrations with various services, executable MCP servers, team workspaces, and local mode operation on user infrastructure. Users can sign up, connect integrations, create smart folders, install the CLI, mount the filesystem, and use with Claude Code to perform tasks like summarizing invoices, identifying unpaid invoices, and extracting data into CSV format.

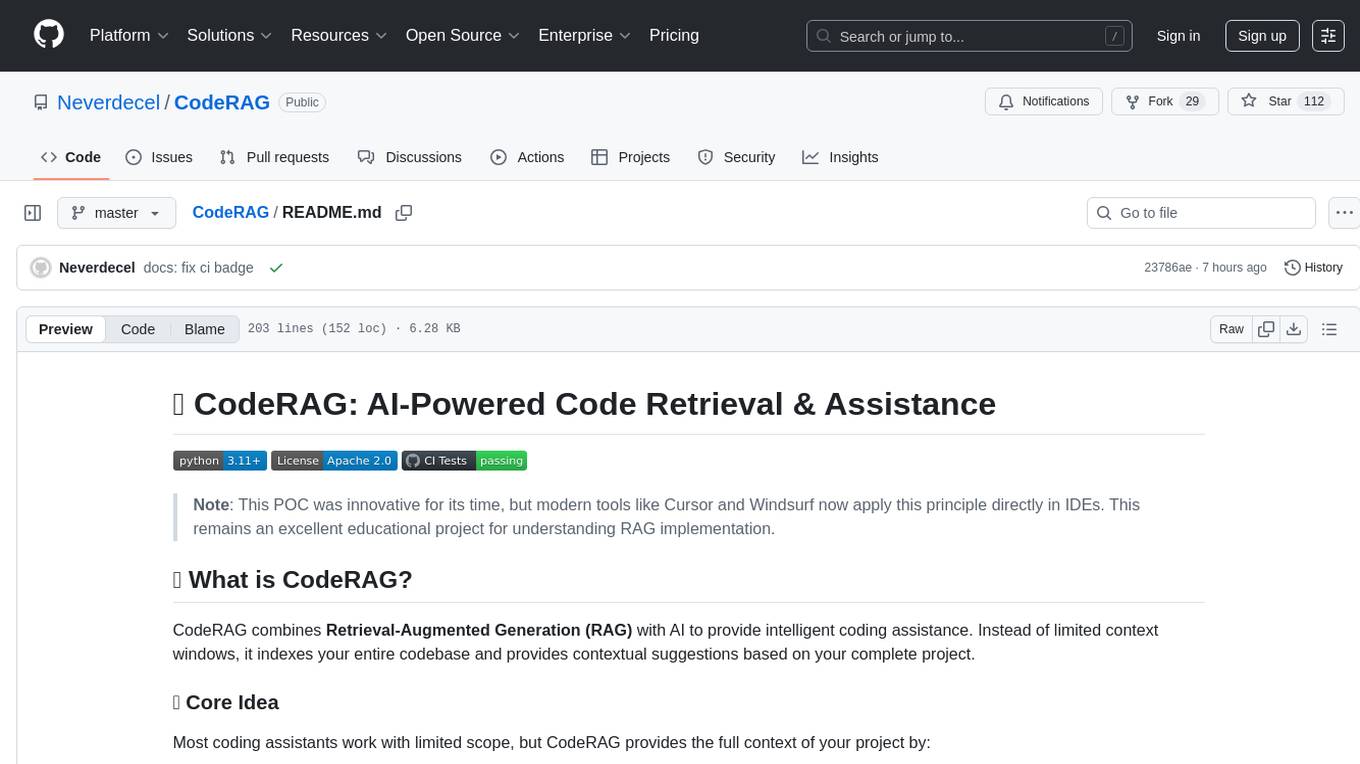

CodeRAG

CodeRAG is an AI-powered code retrieval and assistance tool that combines Retrieval-Augmented Generation (RAG) with AI to provide intelligent coding assistance. It indexes your entire codebase for contextual suggestions based on your complete project, offering real-time indexing, semantic code search, and contextual AI responses. The tool monitors your code directory, generates embeddings for Python files, stores them in a FAISS vector database, matches user queries against the code database, and sends retrieved code context to GPT models for intelligent responses. CodeRAG also features a Streamlit web interface with a chat-like experience for easy usage.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.