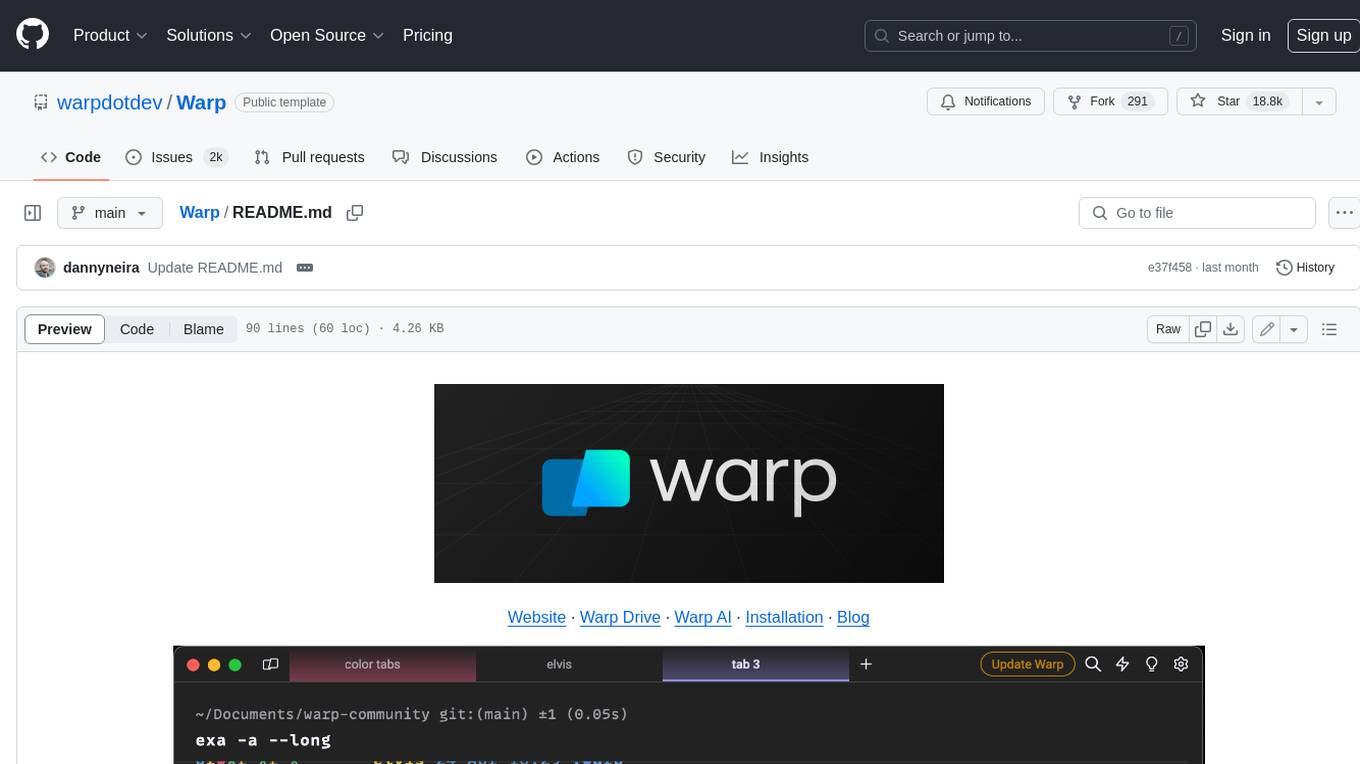

Warp

Warp is a modern, Rust-based terminal with AI built in so you and your team can build great software, faster.

Stars: 21219

Warp is a blazingly-fast modern Rust based GPU-accelerated terminal built to make you and your team more productive. It is available for macOS and Linux users, with plans to support Windows and the Web (WASM) in the future. Warp has a community search page where you can find solutions to common issues, and you can file issue requests in the repo if you can't find a solution. Warp is open-source, and the team is planning to first open-source their Rust UI framework, and then parts and potentially all of their client codebase.

README:

Website · Docs · Install Guide · How Warp Works

This is an issues-only repo for Warp, a modern terminal with AI and your dev team’s knowledge built-in.

Warp is:

- Built with Rust

- GPU-accelerated

- Compatible with zsh, bash, fish, and PowerShell

- Ready to use on MacOS and Linux (Windows waitlist)

You can download Warp and read our docs for platform-specific instructions.

We try to release an update weekly, typically on Thursdays. Read our changelog (release notes).

Please search through our existing issues for your bug (including workarounds) or feature request.

If you can't find a solution above, please file issue requests in this repo! We kindly ask that you please use our issue templates to make the issues easier to track for our team.

We are planning to first open-source our Rust UI framework, and then parts and potentially all of our client codebase. The server portion of Warp will remain closed-source for now.

You can see how we’re thinking about open source here: https://github.com/warpdotdev/Warp/discussions/400

As a side note, we are open-sourcing our extension points as we go. The community has already been contributing new themes. And we’ve just opened our Workflows repository for the community to contribute common useful commands.

Interested in joining the team? See our open roles and feel free to email us: hello at warpdotdev

- See our docs for a walk-through of the features within our app.

- Join our Discord to chat with other users and get immediate help from members of the Warp team.

For anything else, please don't hesitate to reach out via email at hello at warpdotdev

At a high level, we ask everyone to be respectful and empathetic. We follow the GitHub Community Guidelines:

- Be welcoming and open-minded

- Respect each other

- Communicate with empathy

- Be clear and stay on topic

We'd like to call out a few of the open source dependencies that have helped Warp to get off the ground:

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Warp

Similar Open Source Tools

Warp

Warp is a blazingly-fast modern Rust based GPU-accelerated terminal built to make you and your team more productive. It is available for macOS and Linux users, with plans to support Windows and the Web (WASM) in the future. Warp has a community search page where you can find solutions to common issues, and you can file issue requests in the repo if you can't find a solution. Warp is open-source, and the team is planning to first open-source their Rust UI framework, and then parts and potentially all of their client codebase.

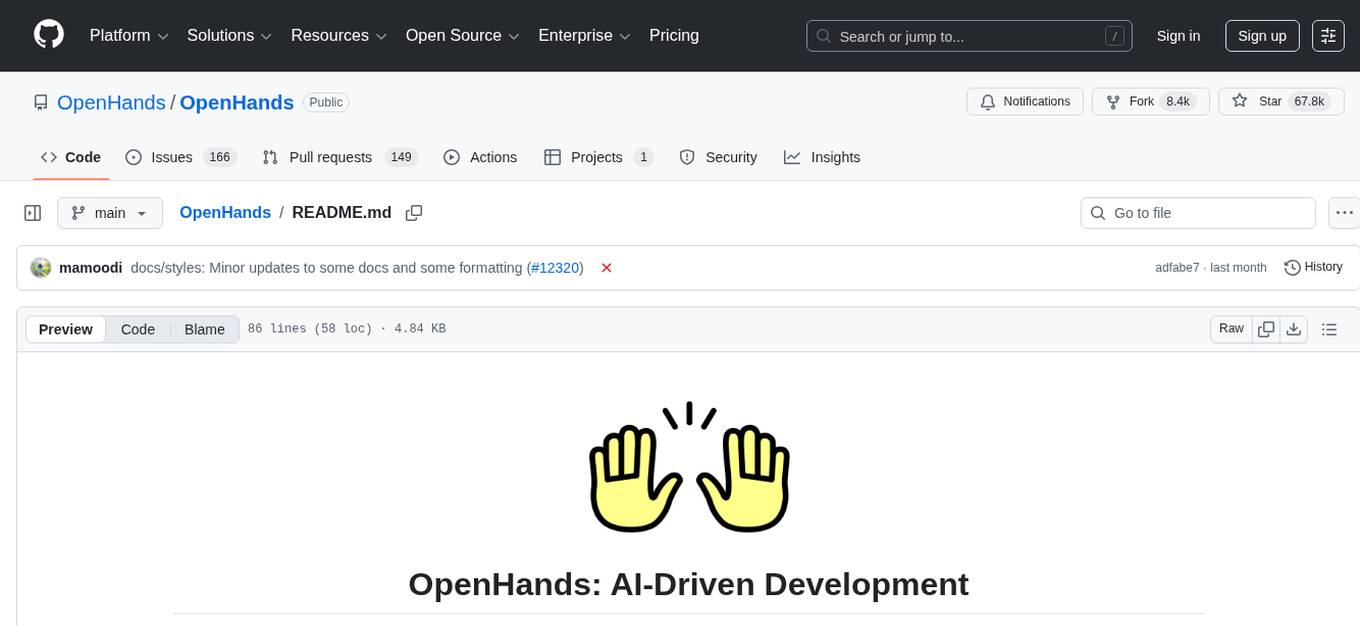

pearai-master

PearAI is an inventory that curates cutting-edge AI tools in one place, offering a unified interface for seamless tool integration. The repository serves as the conglomeration of all PearAI project repositories, including VSCode fork, AI chat functionalities, landing page, documentation, and server. Contributions are welcome through quests and issue tackling, with the project stack including TypeScript/Electron.js, Next.js/React, Python FastAPI, and Axiom for logging/telemetry.

OpenHands

OpenHands is a community focused on AI-driven development, offering a Software Agent SDK, CLI, Local GUI, Cloud deployment, and Enterprise solutions. The SDK is a Python library for defining and running agents, the CLI provides an easy way to start using OpenHands, the Local GUI allows running agents on a laptop with REST API, the Cloud deployment offers hosted infrastructure with integrations, and the Enterprise solution enables self-hosting via Kubernetes with extended support and access to the research team. OpenHands is available under the MIT license.

ainneve

Ainneve is an example game for Evennia, created by the Evennia community as a base for learning and building off of. It is currently in early development stages and undergoing major refactoring. The game provides a starting point for users to explore game systems and world settings, with extensive documentation available. Installation is straightforward, with pre-configured settings and clear instructions for setting up and starting the server. The project welcomes contributions and offers opportunities for users to get involved by checking open issues and joining the community Discord channel. Ainneve is licensed under the BSD license.

open-saas

Open SaaS is a free and open-source React and Node.js template for building SaaS applications. It comes with a variety of features out of the box, including authentication, payments, analytics, and more. Open SaaS is built on top of the Wasp framework, which provides a number of features to make it easy to build SaaS applications, such as full-stack authentication, end-to-end type safety, jobs, and one-command deploy.

mastra

Mastra is an opinionated Typescript framework designed to help users quickly build AI applications and features. It provides primitives such as workflows, agents, RAG, integrations, syncs, and evals. Users can run Mastra locally or deploy it to a serverless cloud. The framework supports various LLM providers, offers tools for building language models, workflows, and accessing knowledge bases. It includes features like durable graph-based state machines, retrieval-augmented generation, integrations, syncs, and automated tests for evaluating LLM outputs.

pearai-app

PearAI is an AI-powered code editor designed to enhance development by reducing the amount of coding required. It is a fork of VSCode and the main functionality lies within the 'extension/pearai' submodule. Users can contribute to the project by fixing issues, submitting bugs and feature requests, reviewing source code changes, and improving documentation. The tool aims to streamline the coding process and provide an efficient environment for developers to work in.

digma

Digma is a Continuous Feedback platform that provides code-level insights related to performance, errors, and usage during development. It empowers developers to own their code all the way to production, improving code quality and preventing critical issues. Digma integrates with OpenTelemetry traces and metrics to generate insights in the IDE, helping developers analyze code scalability, bottlenecks, errors, and usage patterns.

dialog

Dialog is an API-focused tool designed to simplify the deployment of Large Language Models (LLMs) for programmers interested in AI. It allows users to deploy any LLM based on the structure provided by dialog-lib, enabling them to spend less time coding and more time training their models. The tool aims to humanize Retrieval-Augmented Generative Models (RAGs) and offers features for better RAG deployment and maintenance. Dialog requires a knowledge base in CSV format and a prompt configuration in TOML format to function effectively. It provides functionalities for loading data into the database, processing conversations, and connecting to the LLM, with options to customize prompts and parameters. The tool also requires specific environment variables for setup and configuration.

commanddash

Dash AI is an open-source coding assistant for Flutter developers. It is designed to not only write code but also run and debug it, allowing it to assist beyond code completion and automate routine tasks. Dash AI is powered by Gemini, integrated with the Dart Analyzer, and specifically tailored for Flutter engineers. The vision for Dash AI is to create a single-command assistant that can automate tedious development tasks, enabling developers to focus on creativity and innovation. It aims to assist with the entire process of engineering a feature for an app, from breaking down the task into steps to generating exploratory tests and iterating on the code until the feature is complete. To achieve this vision, Dash AI is working on providing LLMs with the same access and information that human developers have, including full contextual knowledge, the latest syntax and dependencies data, and the ability to write, run, and debug code. Dash AI welcomes contributions from the community, including feature requests, issue fixes, and participation in discussions. The project is committed to building a coding assistant that empowers all Flutter developers.

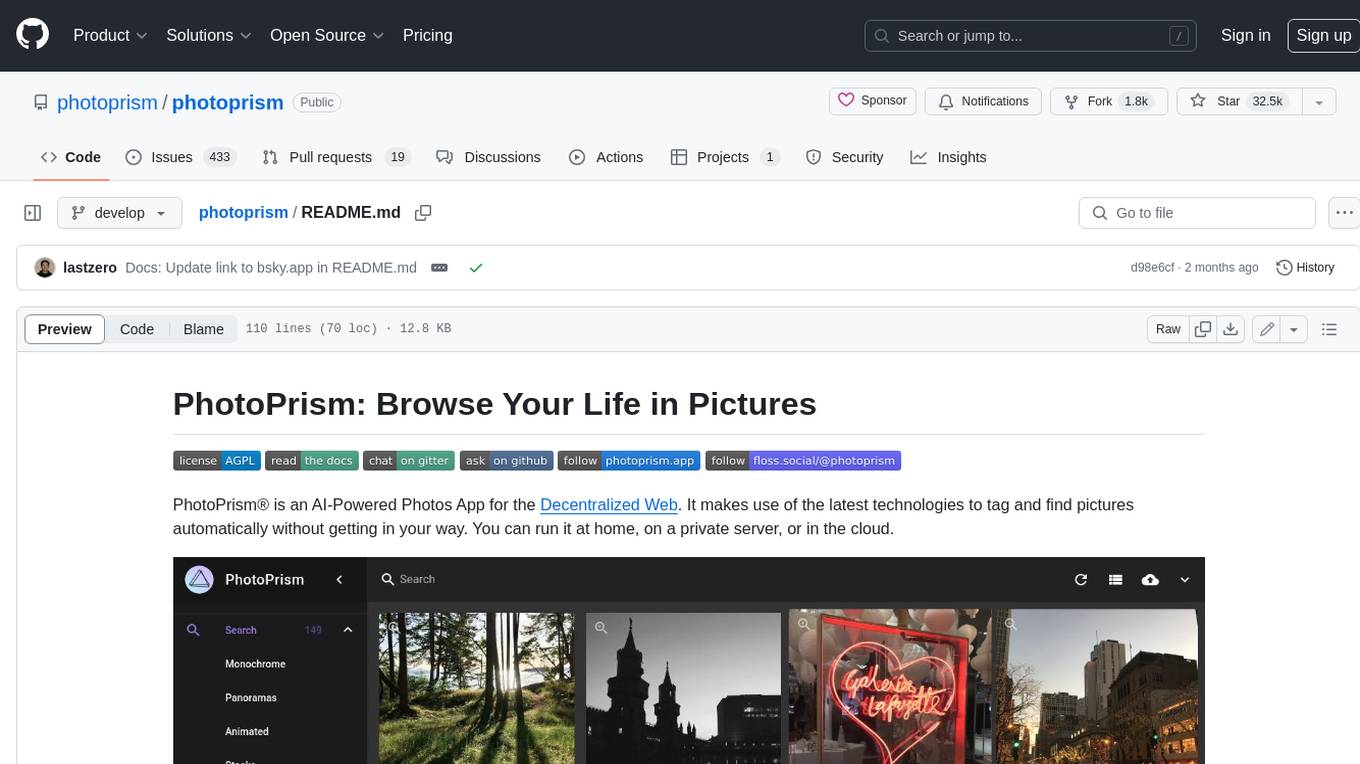

photoprism

PhotoPrism is an AI-powered photos app for the decentralized web. It uses the latest technologies to tag and find pictures automatically without getting in your way. You can run it at home, on a private server, or in the cloud.

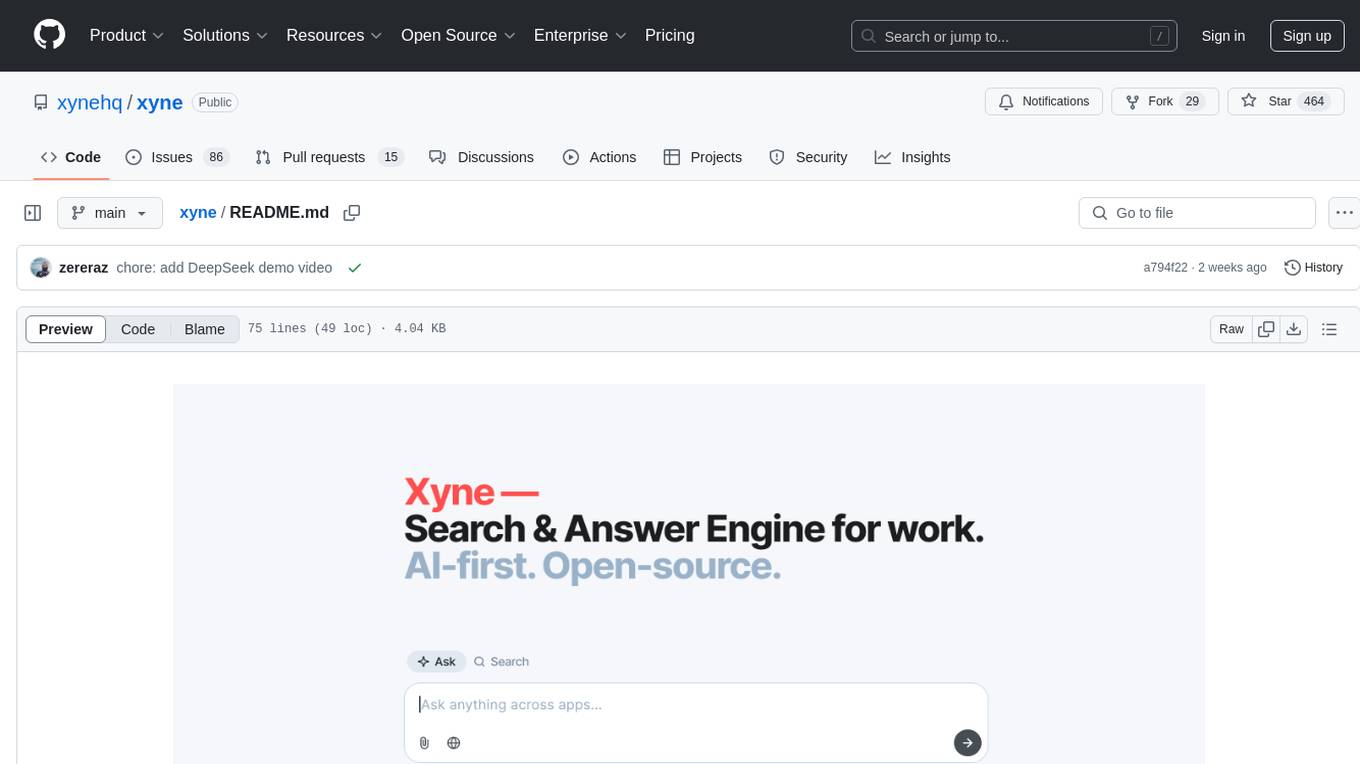

xyne

Xyne is an AI-first Search & Answer Engine for work, serving as an OSS alternative to Glean, Gemini, and MS Copilot. It securely indexes data from various applications like Google Workspace, Atlassian suite, Slack, and Github, providing a Google + ChatGPT-like experience to find information and get up-to-date answers. Users can easily locate files, triage issues, inquire about customers/deals/features/tickets, and discover relevant contacts. Xyne enhances AI models by providing contextual information in a secure, private, and responsible manner, making it the most secure and future-proof solution for integrating AI into work environments.

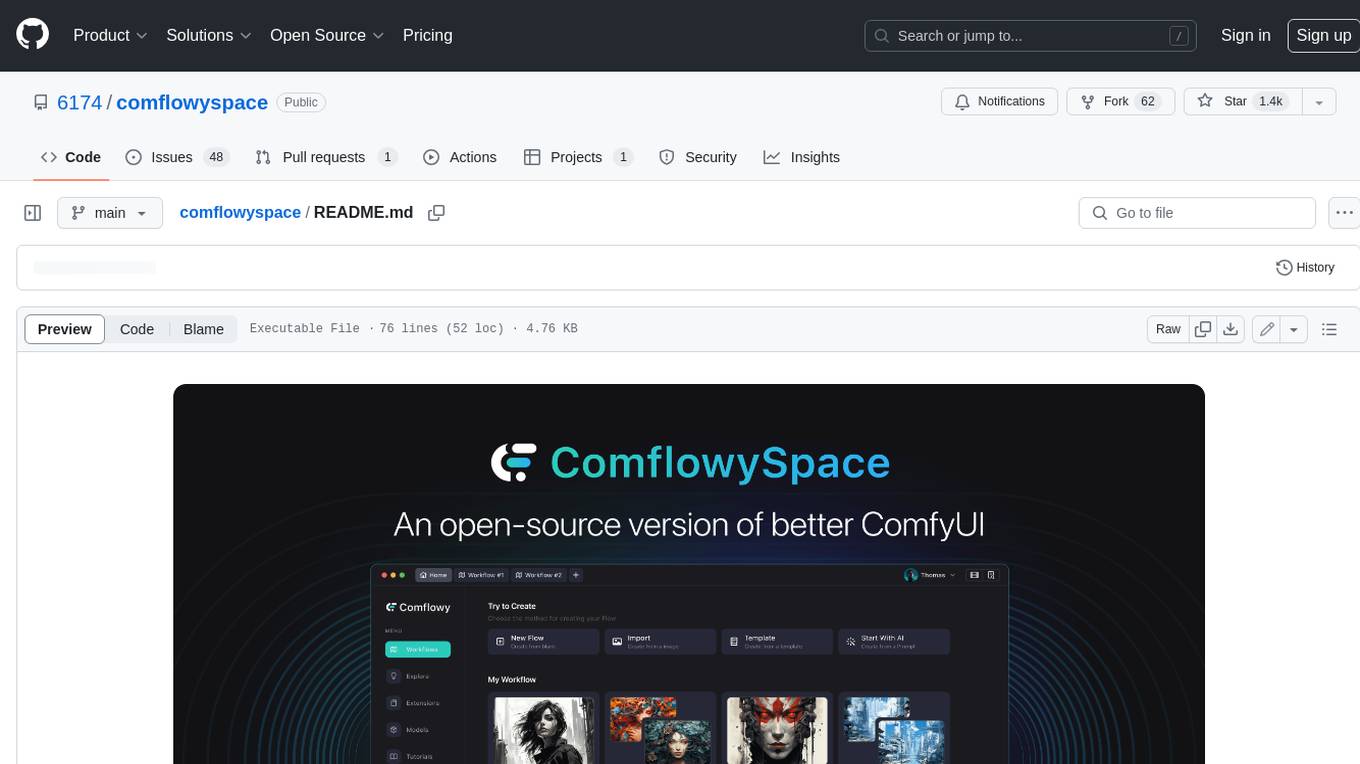

comflowyspace

Comflowyspace is an open-source AI image and video generation tool that aims to provide a more user-friendly and accessible experience than existing tools like SDWebUI and ComfyUI. It simplifies the installation, usage, and workflow management of AI image and video generation, making it easier for users to create and explore AI-generated content. Comflowyspace offers features such as one-click installation, workflow management, multi-tab functionality, workflow templates, and an improved user interface. It also provides tutorials and documentation to lower the learning curve for users. The tool is designed to make AI image and video generation more accessible and enjoyable for a wider range of users.

max

The Modular Accelerated Xecution (MAX) platform is an integrated suite of AI libraries, tools, and technologies that unifies commonly fragmented AI deployment workflows. MAX accelerates time to market for the latest innovations by giving AI developers a single toolchain that unlocks full programmability, unparalleled performance, and seamless hardware portability.

rakis

Rakis is a decentralized verifiable AI network in the browser where nodes can accept AI inference requests, run local models, verify results, and arrive at consensus without servers. It is open-source, functional, multi-model, multi-chain, and browser-first, allowing anyone to participate in the network. The project implements an embedding-based consensus mechanism for verifiable inference. Users can run their own node on rakis.ai or use the compiled version hosted on Huggingface. The project is meant for educational purposes and is a work in progress.

AppFlowy

AppFlowy.IO is an open-source alternative to Notion, providing users with control over their data and customizations. It aims to offer functionality, data security, and cross-platform native experience to individuals, as well as building blocks and collaboration infra services to enterprises and hackers. The tool is built with Flutter and Rust, supporting multiple platforms and emphasizing long-term maintainability. AppFlowy prioritizes data privacy, reliable native experience, and community-driven extensibility, aiming to democratize the creation of complex workplace management tools.

For similar tasks

lexido

Lexido is an innovative assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 and utilizing the free API, Lexido offers smart suggestions for commands based on your prompts and importantly your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

Mindolph

Mindolph is an open source personal knowledge management software for all desktop platforms. It allows users to create and manage their own files in separate workspaces with saving in their local storage, organize their files as a tree in their workspaces, and have multiple tabs for opening files instead of a single file window. Mindolph supports Mind Map, Markdown, PlantUML, CSV sheet, and plain text file formats. It also has features such as quickly navigating to files and searching text in files under a specific folder, editing mind maps easily and quickly with key shortcuts, supporting themes and providing some pre-defined themes, importing from other mind map formats, and exporting to other file formats.

Warp

Warp is a blazingly-fast modern Rust based GPU-accelerated terminal built to make you and your team more productive. It is available for macOS and Linux users, with plans to support Windows and the Web (WASM) in the future. Warp has a community search page where you can find solutions to common issues, and you can file issue requests in the repo if you can't find a solution. Warp is open-source, and the team is planning to first open-source their Rust UI framework, and then parts and potentially all of their client codebase.

transcriptionstream

Transcription Stream is a self-hosted diarization service that works offline, allowing users to easily transcribe and summarize audio files. It includes a web interface for file management, Ollama for complex operations on transcriptions, and Meilisearch for fast full-text search. Users can upload files via SSH or web interface, with output stored in named folders. The tool requires a NVIDIA GPU and provides various scripts for installation and running. Ports for SSH, HTTP, Ollama, and Meilisearch are specified, along with access details for SSH server and web interface. Customization options and troubleshooting tips are provided in the documentation.

gptscript

GPTScript is a framework that enables Large Language Models (LLMs) to interact with various systems, including local executables, applications with OpenAPI schemas, SDK libraries, or RAG-based solutions. It simplifies the integration of systems with LLMs using minimal prompts. Sample use cases include chatting with a local CLI, OpenAPI compliant endpoint, local files/directories, and running automated workflows.

llm2sh

llm2sh is a command-line utility that leverages Large Language Models (LLMs) to translate plain-language requests into shell commands. It provides a convenient way to interact with your system using natural language. The tool supports multiple LLMs for command generation, offers a customizable configuration file, YOLO mode for running commands without confirmation, and is easily extensible with new LLMs and system prompts. Users can set up API keys for OpenAI, Claude, Groq, and Cerebras to use the tool effectively. llm2sh does not store user data or command history, and it does not record or send telemetry by itself, but the LLM APIs may collect and store requests and responses for their purposes.

dwata

dwata is an open source desktop app designed to manage all your private data on your laptop, providing offline access, fast search capabilities, and organization features for emails, files, contacts, events, and tasks. It aims to reduce cognitive overhead in daily digital life by offering a centralized platform for personal data management. The tool prioritizes user privacy, with no data being sent outside the user's computer without explicit permission. dwata is still in early development stages and offers integration with AI providers for advanced functionalities.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.