airflow-client-python

Apache Airflow - OpenApi Client for Python

Stars: 346

The Apache Airflow Python Client provides a range of REST API endpoints for managing Airflow metadata objects. It supports CRUD operations for resources, with endpoints accepting and returning JSON. Users can create, read, update, and delete resources. The API design follows conventions with consistent naming and field formats. Update mask is available for patch endpoints to specify fields for update. API versioning is not synchronized with Airflow releases, and changes go through a deprecation phase. The tool supports various authentication methods and error responses follow RFC 7807 format.

README:

To facilitate management, Apache Airflow supports a range of REST API endpoints across its objects. This section provides an overview of the API design, methods, and supported use cases.

Most of the endpoints accept JSON as input and return JSON responses.

This means that you must usually add the following headers to your request:

Content-type: application/json

Accept: application/json

The term resource refers to a single type of object in the Airflow metadata. An API is broken up by its

endpoint's corresponding resource.

The name of a resource is typically plural and expressed in camelCase. Example: dagRuns.

Resource names are used as part of endpoint URLs, as well as in API parameters and responses.

The platform supports Create, Read, Update, and Delete operations on most resources. You can review the standards for these operations and their standard parameters below.

Some endpoints have special behavior as exceptions.

To create a resource, you typically submit an HTTP POST request with the resource's required metadata

in the request body.

The response returns a 201 Created response code upon success with the resource's metadata, including

its internal id, in the response body.

The HTTP GET request can be used to read a resource or to list a number of resources.

A resource's id can be submitted in the request parameters to read a specific resource.

The response usually returns a 200 OK response code upon success, with the resource's metadata in

the response body.

If a GET request does not include a specific resource id, it is treated as a list request.

The response usually returns a 200 OK response code upon success, with an object containing a list

of resources' metadata in the response body.

When reading resources, some common query parameters are usually available. e.g.:

v1/connections?limit=25&offset=25

| Query Parameter | Type | Description |

|---|---|---|

| limit | integer | Maximum number of objects to fetch. Usually 25 by default |

| offset | integer | Offset after which to start returning objects. For use with limit query parameter. |

Updating a resource requires the resource id, and is typically done using an HTTP PATCH request,

with the fields to modify in the request body.

The response usually returns a 200 OK response code upon success, with information about the modified

resource in the response body.

Deleting a resource requires the resource id and is typically executing via an HTTP DELETE request.

The response usually returns a 204 No Content response code upon success.

-

Resource names are plural and expressed in camelCase.

-

Names are consistent between URL parameter name and field name.

-

Field names are in snake_case.

{

\"name\": \"string\",

\"slots\": 0,

\"occupied_slots\": 0,

\"used_slots\": 0,

\"queued_slots\": 0,

\"open_slots\": 0

}Update mask is available as a query parameter in patch endpoints. It is used to notify the

API which fields you want to update. Using update_mask makes it easier to update objects

by helping the server know which fields to update in an object instead of updating all fields.

The update request ignores any fields that aren't specified in the field mask, leaving them with

their current values.

Example:

import requests

resource = requests.get("/resource/my-id").json()

resource["my_field"] = "new-value"

requests.patch("/resource/my-id?update_mask=my_field", data=json.dumps(resource))- API versioning is not synchronized to specific releases of the Apache Airflow.

- APIs are designed to be backward compatible.

- Any changes to the API will first go through a deprecation phase.

You can use a third party client, such as curl, HTTPie, Postman or the Insomnia rest client to test the Apache Airflow API.

Note that you will need to pass credentials data.

For e.g., here is how to pause a DAG with curl, when basic authorization is used:

curl -X PATCH 'https://example.com/api/v1/dags/{dag_id}?update_mask=is_paused' \\

-H 'Content-Type: application/json' \\

--user \"username:password\" \\

-d '{

\"is_paused\": true

}'Using a graphical tool such as Postman or Insomnia, it is possible to import the API specifications directly:

- Download the API specification by clicking the Download button at top of this document.

- Import the JSON specification in the graphical tool of your choice.

- In Postman, you can click the import button at the top

- With Insomnia, you can just drag-and-drop the file on the UI

Note that with Postman, you can also generate code snippets by selecting a request and clicking on the Code button.

Cross-origin resource sharing (CORS) is a browser security feature that restricts HTTP requests that are initiated from scripts running in the browser.

For details on enabling/configuring CORS, see Enabling CORS.

To be able to meet the requirements of many organizations, Airflow supports many authentication methods, and it is even possible to add your own method.

If you want to check which auth backend is currently set, you can use

airflow config get-value api auth_backends command as in the example below.

$ airflow config get-value api auth_backends

airflow.api.auth.backend.basic_authThe default is to deny all requests.

For details on configuring the authentication, see API Authorization.

We follow the error response format proposed in RFC 7807 also known as Problem Details for HTTP APIs. As with our normal API responses, your client must be prepared to gracefully handle additional members of the response.

This indicates that the request has not been applied because it lacks valid authentication credentials for the target resource. Please check that you have valid credentials.

This response means that the server understood the request but refuses to authorize it because it lacks sufficient rights to the resource. It happens when you do not have the necessary permission to execute the action you performed. You need to get the appropriate permissions in other to resolve this error.

This response means that the server cannot or will not process the request due to something that is perceived to be a client error (e.g., malformed request syntax, invalid request message framing, or deceptive request routing). To resolve this, please ensure that your syntax is correct.

This client error response indicates that the server cannot find the requested resource.

Indicates that the request method is known by the server but is not supported by the target resource.

The target resource does not have a current representation that would be acceptable to the user agent, according to the proactive negotiation header fields received in the request, and the server is unwilling to supply a default representation.

The request could not be completed due to a conflict with the current state of the target resource, e.g. the resource it tries to create already exists.

This means that the server encountered an unexpected condition that prevented it from fulfilling the request.

This Python package is automatically generated by the OpenAPI Generator project:

- API version: 2.9.0

- Package version: 2.9.0

- Build package: org.openapitools.codegen.languages.PythonClientCodegen

For more information, please visit https://airflow.apache.org

Python >=3.8

You can install the client using standard Python installation tools. It is hosted

in PyPI with apache-airflow-client package id so the easiest way to get the latest

version is to run:

pip install apache-airflow-clientIf the python package is hosted on a repository, you can install directly using:

pip install git+https://github.com/apache/airflow-client-python.gitThen import the package:

import airflow_client.clientPlease follow the installation procedure and then run the following:

import time

from airflow_client import client

from pprint import pprint

from airflow_client.client.api import config_api

from airflow_client.client.model.config import Config

from airflow_client.client.model.error import Error

# Defining the host is optional and defaults to /api/v1

# See configuration.py for a list of all supported configuration parameters.

configuration = client.Configuration(host="/api/v1")

# The client must configure the authentication and authorization parameters

# in accordance with the API server security policy.

# Examples for each auth method are provided below, use the example that

# satisfies your auth use case.

# Configure HTTP basic authorization: Basic

configuration = client.Configuration(username="YOUR_USERNAME", password="YOUR_PASSWORD")

# Enter a context with an instance of the API client

with client.ApiClient(configuration) as api_client:

# Create an instance of the API class

api_instance = config_api.ConfigApi(api_client)

try:

# Get current configuration

api_response = api_instance.get_config()

pprint(api_response)

except client.ApiException as e:

print("Exception when calling ConfigApi->get_config: %s\n" % e)All URIs are relative to /api/v1

| Class | Method | HTTP request | Description |

|---|---|---|---|

| ConfigApi | get_config | GET /config | Get current configuration |

| ConnectionApi | delete_connection | DELETE /connections/{connection_id} | Delete a connection |

| ConnectionApi | get_connection | GET /connections/{connection_id} | Get a connection |

| ConnectionApi | get_connections | GET /connections | List connections |

| ConnectionApi | patch_connection | PATCH /connections/{connection_id} | Update a connection |

| ConnectionApi | post_connection | POST /connections | Create a connection |

| ConnectionApi | test_connection | POST /connections/test | Test a connection |

| DAGApi | delete_dag | DELETE /dags/{dag_id} | Delete a DAG |

| DAGApi | get_dag | GET /dags/{dag_id} | Get basic information about a DAG |

| DAGApi | get_dag_details | GET /dags/{dag_id}/details | Get a simplified representation of DAG |

| DAGApi | get_dag_source | GET /dagSources/{file_token} | Get a source code |

| DAGApi | get_dags | GET /dags | List DAGs |

| DAGApi | get_task | GET /dags/{dag_id}/tasks/{task_id} | Get simplified representation of a task |

| DAGApi | get_tasks | GET /dags/{dag_id}/tasks | Get tasks for DAG |

| DAGApi | patch_dag | PATCH /dags/{dag_id} | Update a DAG |

| DAGApi | patch_dags | PATCH /dags | Update DAGs |

| DAGApi | post_clear_task_instances | POST /dags/{dag_id}/clearTaskInstances | Clear a set of task instances |

| DAGApi | post_set_task_instances_state | POST /dags/{dag_id}/updateTaskInstancesState | Set a state of task instances |

| DAGRunApi | clear_dag_run | POST /dags/{dag_id}/dagRuns/{dag_run_id}/clear | Clear a DAG run |

| DAGRunApi | delete_dag_run | DELETE /dags/{dag_id}/dagRuns/{dag_run_id} | Delete a DAG run |

| DAGRunApi | get_dag_run | GET /dags/{dag_id}/dagRuns/{dag_run_id} | Get a DAG run |

| DAGRunApi | get_dag_runs | GET /dags/{dag_id}/dagRuns | List DAG runs |

| DAGRunApi | get_dag_runs_batch | POST /dags/~/dagRuns/list | List DAG runs (batch) |

| DAGRunApi | get_upstream_dataset_events | GET /dags/{dag_id}/dagRuns/{dag_run_id}/upstreamDatasetEvents | Get dataset events for a DAG run |

| DAGRunApi | post_dag_run | POST /dags/{dag_id}/dagRuns | Trigger a new DAG run |

| DAGRunApi | set_dag_run_note | PATCH /dags/{dag_id}/dagRuns/{dag_run_id}/setNote | Update the DagRun note. |

| DAGRunApi | update_dag_run_state | PATCH /dags/{dag_id}/dagRuns/{dag_run_id} | Modify a DAG run |

| DagWarningApi | get_dag_warnings | GET /dagWarnings | List dag warnings |

| DatasetApi | get_dataset | GET /datasets/{uri} | Get a dataset |

| DatasetApi | get_dataset_events | GET /datasets/events | Get dataset events |

| DatasetApi | get_datasets | GET /datasets | List datasets |

| DatasetApi | get_upstream_dataset_events | GET /dags/{dag_id}/dagRuns/{dag_run_id}/upstreamDatasetEvents | Get dataset events for a DAG run |

| EventLogApi | get_event_log | GET /eventLogs/{event_log_id} | Get a log entry |

| EventLogApi | get_event_logs | GET /eventLogs | List log entries |

| ImportErrorApi | get_import_error | GET /importErrors/{import_error_id} | Get an import error |

| ImportErrorApi | get_import_errors | GET /importErrors | List import errors |

| MonitoringApi | get_health | GET /health | Get instance status |

| MonitoringApi | get_version | GET /version | Get version information |

| PermissionApi | get_permissions | GET /permissions | List permissions |

| PluginApi | get_plugins | GET /plugins | Get a list of loaded plugins |

| PoolApi | delete_pool | DELETE /pools/{pool_name} | Delete a pool |

| PoolApi | get_pool | GET /pools/{pool_name} | Get a pool |

| PoolApi | get_pools | GET /pools | List pools |

| PoolApi | patch_pool | PATCH /pools/{pool_name} | Update a pool |

| PoolApi | post_pool | POST /pools | Create a pool |

| ProviderApi | get_providers | GET /providers | List providers |

| RoleApi | delete_role | DELETE /roles/{role_name} | Delete a role |

| RoleApi | get_role | GET /roles/{role_name} | Get a role |

| RoleApi | get_roles | GET /roles | List roles |

| RoleApi | patch_role | PATCH /roles/{role_name} | Update a role |

| RoleApi | post_role | POST /roles | Create a role |

| TaskInstanceApi | get_extra_links | GET /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id}/links | List extra links |

| TaskInstanceApi | get_log | GET /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id}/logs/{task_try_number} | Get logs |

| TaskInstanceApi | get_mapped_task_instance | GET /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id}/{map_index} | Get a mapped task instance |

| TaskInstanceApi | get_mapped_task_instances | GET /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id}/listMapped | List mapped task instances |

| TaskInstanceApi | get_task_instance | GET /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id} | Get a task instance |

| TaskInstanceApi | get_task_instances | GET /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances | List task instances |

| TaskInstanceApi | get_task_instances_batch |

POST /dags/ |

List task instances (batch) |

| TaskInstanceApi | patch_mapped_task_instance | PATCH /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id}/{map_index} | Updates the state of a mapped task instance |

| TaskInstanceApi | patch_task_instance | PATCH /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id} | Updates the state of a task instance |

| TaskInstanceApi | set_mapped_task_instance_note | PATCH /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id}/{map_index}/setNote | Update the TaskInstance note. |

| TaskInstanceApi | set_task_instance_note | PATCH /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id}/setNote | Update the TaskInstance note. |

| UserApi | delete_user | DELETE /users/{username} | Delete a user |

| UserApi | get_user | GET /users/{username} | Get a user |

| UserApi | get_users | GET /users | List users |

| UserApi | patch_user | PATCH /users/{username} | Update a user |

| UserApi | post_user | POST /users | Create a user |

| VariableApi | delete_variable | DELETE /variables/{variable_key} | Delete a variable |

| VariableApi | get_variable | GET /variables/{variable_key} | Get a variable |

| VariableApi | get_variables | GET /variables | List variables |

| VariableApi | patch_variable | PATCH /variables/{variable_key} | Update a variable |

| VariableApi | post_variables | POST /variables | Create a variable |

| XComApi | get_xcom_entries | GET /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id}/xcomEntries | List XCom entries |

| XComApi | get_xcom_entry | GET /dags/{dag_id}/dagRuns/{dag_run_id}/taskInstances/{task_id}/xcomEntries/{xcom_key} | Get an XCom entry |

- Action

- ActionCollection

- ActionCollectionAllOf

- ActionResource

- BasicDAGRun

- ClassReference

- ClearDagRun

- ClearTaskInstances

- CollectionInfo

- Color

- Config

- ConfigOption

- ConfigSection

- Connection

- ConnectionAllOf

- ConnectionCollection

- ConnectionCollectionAllOf

- ConnectionCollectionItem

- ConnectionTest

- CronExpression

- DAG

- DAGCollection

- DAGCollectionAllOf

- DAGDetail

- DAGDetailAllOf

- DAGRun

- DAGRunCollection

- DAGRunCollectionAllOf

- DagScheduleDatasetReference

- DagState

- DagWarning

- DagWarningCollection

- DagWarningCollectionAllOf

- Dataset

- DatasetCollection

- DatasetCollectionAllOf

- DatasetEvent

- DatasetEventCollection

- DatasetEventCollectionAllOf

- Error

- EventLog

- EventLogCollection

- EventLogCollectionAllOf

- ExtraLink

- ExtraLinkCollection

- HealthInfo

- HealthStatus

- ImportError

- ImportErrorCollection

- ImportErrorCollectionAllOf

- InlineResponse200

- InlineResponse2001

- Job

- ListDagRunsForm

- ListTaskInstanceForm

- MetadatabaseStatus

- PluginCollection

- PluginCollectionAllOf

- PluginCollectionItem

- Pool

- PoolCollection

- PoolCollectionAllOf

- Provider

- ProviderCollection

- RelativeDelta

- Resource

- Role

- RoleCollection

- RoleCollectionAllOf

- SLAMiss

- ScheduleInterval

- SchedulerStatus

- SetDagRunNote

- SetTaskInstanceNote

- Tag

- Task

- TaskCollection

- TaskExtraLinks

- TaskInstance

- TaskInstanceCollection

- TaskInstanceCollectionAllOf

- TaskInstanceReference

- TaskInstanceReferenceCollection

- TaskOutletDatasetReference

- TaskState

- TimeDelta

- Trigger

- TriggerRule

- UpdateDagRunState

- UpdateTaskInstance

- UpdateTaskInstancesState

- User

- UserAllOf

- UserCollection

- UserCollectionAllOf

- UserCollectionItem

- UserCollectionItemRoles

- Variable

- VariableAllOf

- VariableCollection

- VariableCollectionAllOf

- VariableCollectionItem

- VersionInfo

- WeightRule

- XCom

- XComAllOf

- XComCollection

- XComCollectionAllOf

- XComCollectionItem

By default the generated client supports the three authentication schemes:

- Basic

- GoogleOpenID

- Kerberos

However, you can generate client and documentation with your own schemes by adding your own schemes in

the security section of the OpenAPI specification. You can do it with Breeze CLI by adding the

--security-schemes option to the breeze release-management prepare-python-client command.

You can run basic smoke tests to check if the client is working properly - we have a simple test script that uses the API to run the tests. To do that, you need to:

- install the

apache-airflow-clientpackage as described above - install

richPython package - download the test_python_client.py file

- make sure you have test airflow installation running. Do not experiment with your production deployment

- configure your airflow webserver to enable basic authentication

In the

[api]section of yourairflow.cfgset:

[api]

auth_backend = airflow.api.auth.backend.session,airflow.api.auth.backend.basic_authYou can also set it by env variable:

export AIRFLOW__API__AUTH_BACKENDS=airflow.api.auth.backend.session,airflow.api.auth.backend.basic_auth

- configure your airflow webserver to load example dags

In the

[core]section of yourairflow.cfgset:

[core]

load_examples = TrueYou can also set it by env variable: export AIRFLOW__CORE__LOAD_EXAMPLES=True

- optionally expose configuration (NOTE! that this is dangerous setting). The script will happily run with

the default setting, but if you want to see the configuration, you need to expose it.

In the

[webserver]section of yourairflow.cfgset:

[webserver]

expose_config = TrueYou can also set it by env variable: export AIRFLOW__WEBSERVER__EXPOSE_CONFIG=True

- Configure your host/ip/user/password in the

test_python_client.pyfile

import airflow_client

# Configure HTTP basic authorization: Basic

configuration = airflow_client.client.Configuration(

host="http://localhost:8080/api/v1", username="admin", password="admin"

)-

Run scheduler (or dag file processor you have setup with standalone dag file processor) for few parsing loops (you can pass --num-runs parameter to it or keep it running in the background). The script relies on example DAGs being serialized to the DB and this only happens when scheduler runs with

core/load_examplesset to True. -

Run webserver - reachable at the host/port for the test script you want to run. Make sure it had enough time to initialize.

Run python test_python_client.py and you should see colored output showing attempts to connect and status.

If the OpenAPI document is large, imports in client.apis and client.models may fail with a RecursionError indicating the maximum recursion limit has been exceeded. In that case, there are a couple of solutions:

Solution 1: Use specific imports for apis and models like:

from airflow_client.client.api.default_api import DefaultApifrom airflow_client.client.model.pet import Pet

Solution 2: Before importing the package, adjust the maximum recursion limit as shown below:

import sys

sys.setrecursionlimit(1500)

import airflow_client.client

from airflow_client.client.apis import *

from airflow_client.client.models import *For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for airflow-client-python

Similar Open Source Tools

airflow-client-python

The Apache Airflow Python Client provides a range of REST API endpoints for managing Airflow metadata objects. It supports CRUD operations for resources, with endpoints accepting and returning JSON. Users can create, read, update, and delete resources. The API design follows conventions with consistent naming and field formats. Update mask is available for patch endpoints to specify fields for update. API versioning is not synchronized with Airflow releases, and changes go through a deprecation phase. The tool supports various authentication methods and error responses follow RFC 7807 format.

handit.ai

Handit.ai is an autonomous engineer tool designed to fix AI failures 24/7. It catches failures, writes fixes, tests them, and ships PRs automatically. It monitors AI applications, detects issues, generates fixes, tests them against real data, and ships them as pull requests—all automatically. Users can write JavaScript, TypeScript, Python, and more, and the tool automates what used to require manual debugging and firefighting.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents to make decisions beyond chatbots. It offers features like scaling infrastructure, routing & discovery, async execution, durable state, observability, trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

OrChat

OrChat is a powerful CLI tool for chatting with AI models through OpenRouter. It offers features like universal model access, interactive chat with real-time streaming responses, rich markdown rendering, agentic shell access, security gating, performance analytics, command auto-completion, pricing display, auto-update system, multi-line input support, conversation management, auto-summarization, session persistence, web scraping, file and media support, smart thinking mode, conversation export, customizable themes, interactive input features, and more.

caddy-defender

The Caddy Defender plugin is a middleware for Caddy that allows you to block or manipulate requests based on the client's IP address. It provides features such as IP range filtering, predefined IP ranges for popular AI services, custom IP ranges configuration, and multiple responder backends for different actions like blocking, custom responses, dropping connections, returning garbage data, redirecting, and tarpitting to stall bots. The plugin can be easily installed using Docker or built with `xcaddy`. Configuration is done through the Caddyfile syntax with various options for responders, IP ranges, custom messages, and URLs.

figma-console-mcp

Figma Console MCP is a Model Context Protocol server that bridges design and development, giving AI assistants complete access to Figma for extraction, creation, and debugging. It connects AI assistants like Claude to Figma, enabling plugin debugging, visual debugging, design system extraction, design creation, variable management, real-time monitoring, and three installation methods. The server offers 53+ tools for NPX and Local Git setups, while Remote SSE provides read-only access with 16 tools. Users can create and modify designs with AI, contribute to projects, or explore design data. The server supports authentication via personal access tokens and OAuth, and offers tools for navigation, console debugging, visual debugging, design system extraction, design creation, design-code parity, variable management, and AI-assisted design creation.

AIClient-2-API

AIClient-2-API is a versatile and lightweight API proxy designed for developers, providing ample free API request quotas and comprehensive support for various mainstream large models like Gemini, Qwen Code, Claude, etc. It converts multiple backend APIs into standard OpenAI format interfaces through a Node.js HTTP server. The project adopts a modern modular architecture, supports strategy and adapter patterns, comes with complete test coverage and health check mechanisms, and is ready to use after 'npm install'. By easily switching model service providers in the configuration file, any OpenAI-compatible client or application can seamlessly access different large model capabilities through the same API address, eliminating the hassle of maintaining multiple sets of configurations for different services and dealing with incompatible interfaces.

legacy-use

Legacy-use is a tool that transforms legacy applications into modern REST APIs using AI. It allows users to dynamically generate and customize API endpoints for legacy or desktop applications, access systems running legacy software, track and resolve issues with built-in observability tools, ensure secure and compliant automation, choose model providers independently, and deploy with enterprise-grade security and compliance. The tool provides a quick setup process, automatic API key generation, and supports Windows VM automation. It offers a user-friendly interface for adding targets, running jobs, and writing effective prompts. Legacy-use also supports various connectivity technologies like OpenVPN, Tailscale, WireGuard, VNC, RDP, and TeamViewer. Telemetry data is collected anonymously to improve the product, and users can opt-out of tracking. Optional configurations include enabling OpenVPN target creation and displaying backend endpoints documentation. Contributions to the project are welcome.

rkllama

RKLLama is a server and client tool designed for running and interacting with LLM models optimized for Rockchip RK3588(S) and RK3576 platforms. It allows models to run on the NPU, with features such as running models on NPU, partial Ollama API compatibility, pulling models from Huggingface, API REST with documentation, dynamic loading/unloading of models, inference requests with streaming modes, simplified model naming, CPU model auto-detection, and optional debug mode. The tool supports Python 3.8 to 3.12 and has been tested on Orange Pi 5 Pro and Orange Pi 5 Plus with specific OS versions.

indexify

Indexify is an open-source engine for building fast data pipelines for unstructured data (video, audio, images, and documents) using reusable extractors for embedding, transformation, and feature extraction. LLM Applications can query transformed content friendly to LLMs by semantic search and SQL queries. Indexify keeps vector databases and structured databases (PostgreSQL) updated by automatically invoking the pipelines as new data is ingested into the system from external data sources. **Why use Indexify** * Makes Unstructured Data **Queryable** with **SQL** and **Semantic Search** * **Real-Time** Extraction Engine to keep indexes **automatically** updated as new data is ingested. * Create **Extraction Graph** to describe **data transformation** and extraction of **embedding** and **structured extraction**. * **Incremental Extraction** and **Selective Deletion** when content is deleted or updated. * **Extractor SDK** allows adding new extraction capabilities, and many readily available extractors for **PDF**, **Image**, and **Video** indexing and extraction. * Works with **any LLM Framework** including **Langchain**, **DSPy**, etc. * Runs on your laptop during **prototyping** and also scales to **1000s of machines** on the cloud. * Works with many **Blob Stores**, **Vector Stores**, and **Structured Databases** * We have even **Open Sourced Automation** to deploy to Kubernetes in production.

unity-mcp

MCP for Unity is a tool that acts as a bridge, enabling AI assistants to interact with the Unity Editor via a local MCP Client. Users can instruct their LLM to manage assets, scenes, scripts, and automate tasks within Unity. The tool offers natural language control, powerful tools for asset management, scene manipulation, and automation of workflows. It is extensible and designed to work with various MCP Clients, providing a range of functions for precise text edits, script management, GameObject operations, and more.

automem

AutoMem is a production-grade long-term memory system for AI assistants, achieving 90.53% accuracy on the LoCoMo benchmark. It combines FalkorDB (Graph) and Qdrant (Vectors) storage systems to store, recall, connect, learn, and perform with memories. AutoMem enables AI assistants to remember, connect, and evolve their understanding over time, similar to human long-term memory. It implements techniques from peer-reviewed memory research and offers features like multi-hop bridge discovery, knowledge graphs that evolve, 9-component hybrid scoring, memory consolidation cycles, background intelligence, 11 relationship types, and more. AutoMem is benchmark-proven, research-validated, and production-ready, with features like sub-100ms recall, concurrent writes, automatic retries, health monitoring, dual storage redundancy, and automated backups.

chat-ollama

ChatOllama is an open-source chatbot based on LLMs (Large Language Models). It supports a wide range of language models, including Ollama served models, OpenAI, Azure OpenAI, and Anthropic. ChatOllama supports multiple types of chat, including free chat with LLMs and chat with LLMs based on a knowledge base. Key features of ChatOllama include Ollama models management, knowledge bases management, chat, and commercial LLMs API keys management.

RealtimeSTT_LLM_TTS

RealtimeSTT is an easy-to-use, low-latency speech-to-text library for realtime applications. It listens to the microphone and transcribes voice into text, making it ideal for voice assistants and applications requiring fast and precise speech-to-text conversion. The library utilizes Voice Activity Detection, Realtime Transcription, and Wake Word Activation features. It supports GPU-accelerated transcription using PyTorch with CUDA support. RealtimeSTT offers various customization options for different parameters to enhance user experience and performance. The library is designed to provide a seamless experience for developers integrating speech-to-text functionality into their applications.

pocketpaw

PocketPaw is a lightweight and user-friendly tool designed for managing and organizing your digital assets. It provides a simple interface for users to easily categorize, tag, and search for files across different platforms. With PocketPaw, you can efficiently organize your photos, documents, and other files in a centralized location, making it easier to access and share them. Whether you are a student looking to organize your study materials, a professional managing project files, or a casual user wanting to declutter your digital space, PocketPaw is the perfect solution for all your file management needs.

For similar tasks

airflow-client-python

The Apache Airflow Python Client provides a range of REST API endpoints for managing Airflow metadata objects. It supports CRUD operations for resources, with endpoints accepting and returning JSON. Users can create, read, update, and delete resources. The API design follows conventions with consistent naming and field formats. Update mask is available for patch endpoints to specify fields for update. API versioning is not synchronized with Airflow releases, and changes go through a deprecation phase. The tool supports various authentication methods and error responses follow RFC 7807 format.

HuggingFists

HuggingFists is a low-code data flow tool that enables convenient use of LLM and HuggingFace models. It provides functionalities similar to Langchain, allowing users to design, debug, and manage data processing workflows, create and schedule workflow jobs, manage resources environment, and handle various data artifact resources. The tool also offers account management for users, allowing centralized management of data source accounts and API accounts. Users can access Hugging Face models through the Inference API or locally deployed models, as well as datasets on Hugging Face. HuggingFists supports breakpoint debugging, branch selection, function calls, workflow variables, and more to assist users in developing complex data processing workflows.

backend.ai-webui

Backend.AI Web UI is a user-friendly web and app interface designed to make AI accessible for end-users, DevOps, and SysAdmins. It provides features for session management, inference service management, pipeline management, storage management, node management, statistics, configurations, license checking, plugins, help & manuals, kernel management, user management, keypair management, manager settings, proxy mode support, service information, and integration with the Backend.AI Web Server. The tool supports various devices, offers a built-in websocket proxy feature, and allows for versatile usage across different platforms. Users can easily manage resources, run environment-supported apps, access a web-based terminal, use Visual Studio Code editor, manage experiments, set up autoscaling, manage pipelines, handle storage, monitor nodes, view statistics, configure settings, and more.

modal-client

The Modal Python library provides convenient, on-demand access to serverless cloud compute from Python scripts on your local computer. It allows users to easily integrate serverless cloud computing into their Python scripts, providing a seamless experience for accessing cloud resources. The library simplifies the process of interacting with cloud services, enabling developers to focus on their applications' logic rather than infrastructure management. With detailed documentation and support available through the Modal Slack channel, users can quickly get started and leverage the power of serverless computing in their projects.

MEGREZ

MEGREZ is a modern and elegant open-source high-performance computing platform that efficiently manages GPU resources. It allows for easy container instance creation, supports multiple nodes/multiple GPUs, modern UI environment isolation, customizable performance configurations, and user data isolation. The platform also comes with pre-installed deep learning environments, supports multiple users, features a VSCode web version, resource performance monitoring dashboard, and Jupyter Notebook support.

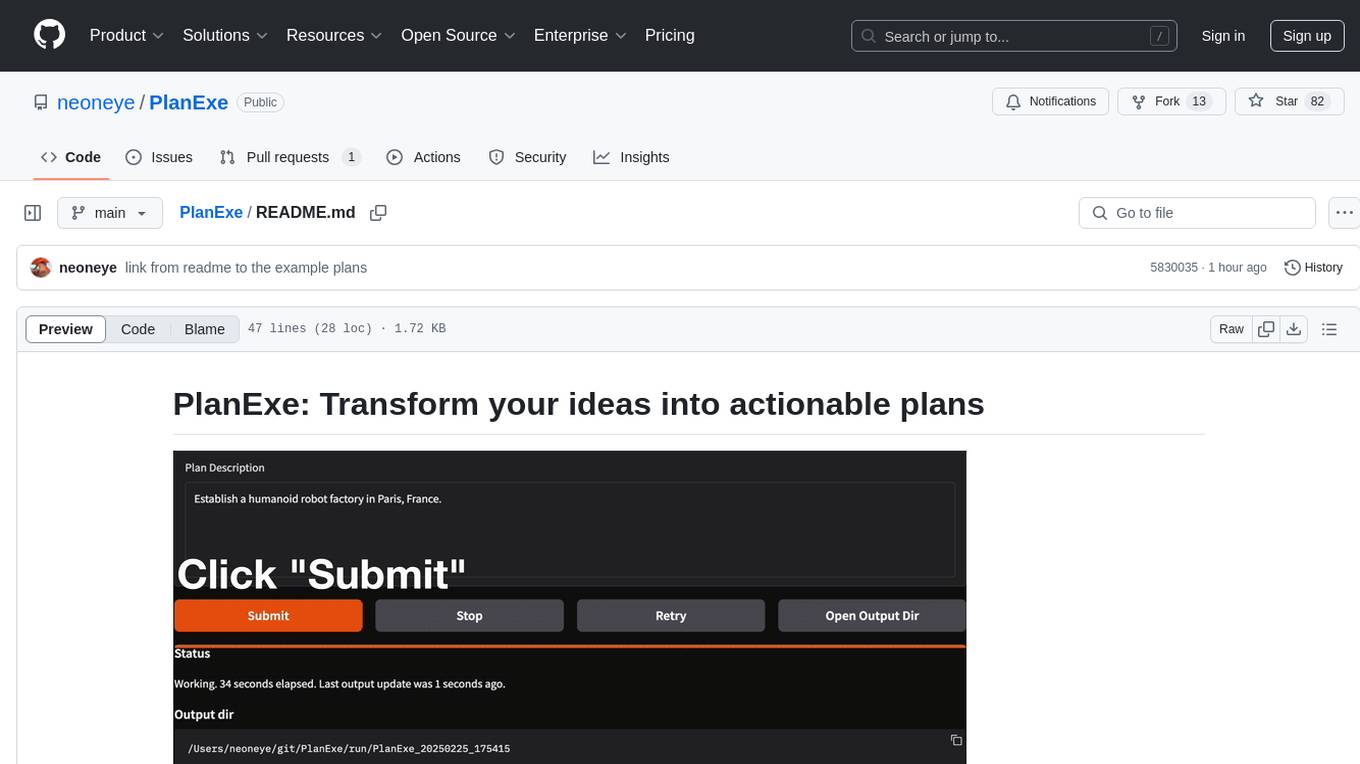

PlanExe

PlanExe is a planning AI tool that helps users generate detailed plans based on vague descriptions. It offers a Gradio-based web interface for easy input and output. Users can choose between running models in the cloud or locally on a high-end computer. The tool aims to provide a straightforward path to planning various tasks efficiently.

cortex.cpp

Cortex.cpp is an open-source platform designed as the brain for robots, offering functionalities such as vision, speech, language, tabular data processing, and action. It provides an AI platform for running AI models with multi-engine support, hardware optimization with automatic GPU detection, and an OpenAI-compatible API. Users can download models from the Hugging Face model hub, run models, manage resources, and access advanced features like multiple quantizations and engine management. The tool is under active development, promising rapid improvements for users.

sre

SmythOS is an operating system designed for building, deploying, and managing intelligent AI agents at scale. It provides a unified SDK and resource abstraction layer for various AI services, making it easy to scale and flexible. With an agent-first design, developer-friendly SDK, modular architecture, and enterprise security features, SmythOS offers a robust foundation for AI workloads. The system is built with a philosophy inspired by traditional operating system kernels, ensuring autonomy, control, and security for AI agents. SmythOS aims to make shipping production-ready AI agents accessible and open for everyone in the coming Internet of Agents era.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently** , resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript** , **directly defining and utilizing the cloud resources necessary for the application within their code base** , such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.