HuggingFists

A low-code data flow tool that allows for convenient use of LLM and HuggingFace models, with some features considered as a low-code version of Langchain.

Stars: 154

HuggingFists is a low-code data flow tool that enables convenient use of LLM and HuggingFace models. It provides functionalities similar to Langchain, allowing users to design, debug, and manage data processing workflows, create and schedule workflow jobs, manage resources environment, and handle various data artifact resources. The tool also offers account management for users, allowing centralized management of data source accounts and API accounts. Users can access Hugging Face models through the Inference API or locally deployed models, as well as datasets on Hugging Face. HuggingFists supports breakpoint debugging, branch selection, function calls, workflow variables, and more to assist users in developing complex data processing workflows.

README:

HuggingFists is a low-code data flow tool that enables convenient use of LLM and HuggingFace models. Some of its functions can be considered as a low-code version of Langchain. Currently, it does not support model training scenarios, but this will be developed and supplemented in the future.

**Linux:** Linux system 3.10.0-957.21.3.el7.x86_64 with at least 4 cores and 8 GB of RAM. The system uses Containerd containers, and containers and images are stored in the /data directory.

**Windows:** Windows 11 is recommended.

**Linux:** Clone the project with `git clone https://github.com/Datayoo/HuggingFists.git`, or download it directly as a zip file. Note that cloning the project on a Windows operating system may replace the '\n' line endings in the Linux script files with '\r\n'. If the project is then copied to a Linux system, the scripts will not execute because of the changed line endings. Developers using IDEA can refer to "configuring Git to handle line endings" to resolve this issue.

Navigate to `sengee.community.linux` and run the installation script with `bash install.sh`. After the script completes, you can test whether the system is installed correctly with `curl http://localhost:38172`. Once the installation is finished, you can access the tool's interface at http://serverIP:38172. If you cannot access the page externally, try restarting the server once; the operator platform starts automatically on boot.

Users in China who experience slow access to GitHub can download the files from https://share.weiyun.com/mmmowpEX.

**Windows:** Windows users can download the Windows version from https://share.weiyun.com/2eDVeN8Q. See the Windows installation instructions.

Used to provide a macroscopic display of various resources and operational results within the system.

Used to manage various data sources that may be involved in read/write operations within the system. Types include databases, file systems, and applications, among others. Users can select specific data source types through the interface to create data source instances. Data source instances allow basic browsing and management functions of the data sources. Additionally, input/output operators can reference data source instances as the target for reading and writing.

Used for designing, debugging, and managing various data processing workflows oriented towards business operations. Users can drag operators, connect ports, and build data processing workflows. A data processing workflow can be understood as a segment of executable code. Unlike many popular data processing and analysis tools, the operators in the HuggingFists system have clear input/output ports for accessing and writing datasets. Each input port can connect to multiple preceding ports, and each output port can write to multiple subsequent ports simultaneously. Clicking on a port provides insight into the input/output structure of the data, similar to understanding the input and return structures of a function call. Additionally, HuggingFists is one of the few low-code tools that provides breakpoint debugging functionality. When defining a workflow, users can set breakpoints by clicking on operator ports. Breakpoint debugging greatly assists users in writing correct data processing workflows. Furthermore, HuggingFists offers concepts such as branch selection, function calls, workflow variables, context variables, parameter variables, and more to help users complete complex data processing workflow development.

Used to create and schedule workflow jobs and to set their execution cycle to meet the production needs of business systems. Jobs can only be created for formally published workflows; unpublished workflows are treated as drafts and cannot be scheduled for execution. Immediate jobs run as soon as they are created, while scheduled jobs follow their specified scheduling plans. Each time a job is scheduled, a task is generated, and the system retains information about the task's execution, such as logs, running status, and output results.
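The relationship between workflows, jobs, and tasks described above can be pictured with a small data model. This is a hypothetical sketch for illustration only; the class and field names are assumptions and do not reflect how HuggingFists is implemented.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Workflow:
    name: str
    published: bool = False  # unpublished workflows are drafts


@dataclass
class Task:
    started_at: datetime
    status: str = "pending"
    logs: list = field(default_factory=list)


@dataclass
class Job:
    workflow: Workflow
    tasks: list = field(default_factory=list)

    def __post_init__(self):
        # Drafts cannot be scheduled; only published workflows get jobs.
        if not self.workflow.published:
            raise ValueError(f"workflow {self.workflow.name!r} is not published")

    def trigger(self, now: datetime) -> Task:
        """Record one scheduled run of the job as a new task."""
        task = Task(started_at=now)
        self.tasks.append(task)
        return task
```

In this picture an immediate job would call `trigger` once right after creation, while a scheduled job would call it each time its plan fires.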

Used to manage the resource environment the system needs to run, such as work nodes and service settings. Work node management oversees the resources used during job execution: workflows are dispatched to work nodes for interpretation and execution, and the system supports horizontal scaling to multiple work nodes when computational resources are insufficient. Service settings management provides management of HTTP proxies.

Utilized for managing various data artifact resources in the system, such as connector management and operator management. Users are allowed to expand connectors and operators based on their requirements. Relevant development standards are currently being organized. Connectors are used to establish connections with data sources, with each type of data source having one or more connector implementations. In some cases, different versions of data sources may require different connector implementations. Operators are used to implement various functionalities, similar to a function in a programming language. Users connect operators to form data processing logic and complete data processing tasks.

Used to manage various accounts for users, such as data source accounts and API accounts. Account management provided by HuggingFists can be accessed in the top right corner of the system under "User" -> "Personal Settings" -> "Account Resources". Here, users can centrally manage their various accounts. When creating a data source, users need to fill in the account information, which should be created in advance here. When the account is required, users can select the appropriate account. Initially, this account management model may seem cumbersome, but since many accounts are often reused in multiple data source instances or operator instances, this centralized management approach allows for updating account passwords at minimal cost when necessary for security reasons.

To access Hugging Face models through the Inference API, you first need to register an account on the Hugging Face website. You can register an account by following this link: https://huggingface.co/join. Once registered successfully, apply for a dedicated access token by navigating to your profile -> Settings -> Access Tokens in the top right corner of the interface.

Next, in the HuggingFists system (not the Hugging Face website), go to your profile -> Personal Settings -> Resource Accounts and add a Hugging Face access account. After entering the resource account interface, select to add a resource account, which will bring up the following screen:

Choose the Hugging Face type, and fill in the access token you obtained into the "Access token" input box.

After filling it in, submit the form to create the account successfully. Sometimes, if you are in an intranet environment and cannot directly access the Hugging Face website, you can configure an HTTP proxy to bypass the local network restrictions.

In the HuggingFists system, navigate to "Environment Management" -> "Service Configuration" module. Click on "Create Service Configuration," which will bring up the following interface:

Select the "Network HTTP Proxy Service" type, fill in the proxy-related information, and submit to save the proxy configuration.
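For orientation, the account and proxy configured above correspond roughly to the following at the HTTP level. This is a minimal sketch under stated assumptions, not HuggingFists code: the endpoint pattern is the one historically used by the hosted Inference API and may have changed, and the function names, token, model ID, and proxy address are placeholders.

```python
import json
import urllib.request
from typing import Optional

# Assumed endpoint pattern for the hosted Inference API; check the current
# Hugging Face documentation before relying on it.
API_ROOT = "https://api-inference.huggingface.co/models/"


def build_inference_request(model_id: str, token: str, text: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST request carrying the access token."""
    body = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        API_ROOT + model_id,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def make_opener(proxy_url: Optional[str] = None) -> urllib.request.OpenerDirector:
    """Return an opener that routes traffic through an HTTP proxy if one is given."""
    if proxy_url is None:
        return urllib.request.build_opener()
    proxies = {"http": proxy_url, "https": proxy_url}
    return urllib.request.build_opener(urllib.request.ProxyHandler(proxies))


# Example with placeholder values (this would perform a network call):
# request = build_inference_request("facebook/bart-large-cnn", "hf_xxx", "Long news text ...")
# with make_opener("http://proxy.example:8080").open(request) as response:
#     print(json.load(response))
```

Keeping the token and proxy as separate inputs mirrors the way HuggingFists stores them as separate, reusable resources.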

With the preparation complete, we can now try using Hugging Face operators to access models and meet business requirements. Below are two related examples, one using natural language models and the other using image-related models. Let's start with the natural language example.

The diagram above illustrates a process example that reads internet news, extracts text content from the news, and then performs three tasks: text summarization, text sentiment classification, and named entity recognition. The red box-selected section in the diagram represents the operator tree that can be used in the process definition; the blue section is the process definition panel; and the green section is the operator's attribute configuration and help area.

As shown in the diagram, when selecting a Hugging Face summarization extraction operator, the green box on the right displays its configurable attributes and documentation. The first two boxes in the attribute section input the previously prepared HTTP proxy and Hugging Face account. The subsequent parameter boxes can be set based on the operator's help documentation.

Once all processes are dragged and defined in this way, you can click the debug or execute process button at the top of the blue area. Now, let's look at an example using an image-related model.

This example is also covered in detail in the video mentioned above. It demonstrates three tasks performed on a single image, each with a different model: object recognition, image segmentation, and image classification. The examples above show several models, but which one works best in practice? Users will need to research and compare models to find the most effective one for their specific needs.

Compared to accessing Hugging Face models through the Inference API, deploying models locally requires a longer preparation time but offers more control over costs and security. Below, we briefly introduce how to access locally deployed Hugging Face models using HuggingFists. Firstly, select a model you wish to deploy locally, and then navigate to the model's "Files and versions" page, as shown below:

From the image, we can see that there are many files related to the model, typically required for model loading and execution, aside from documentation. Therefore, it's necessary to download all relevant files in advance and store them in the same folder. As Hugging Face does not currently provide a bulk download feature for files, manual downloading of each file is required (this is the most cumbersome aspect of local model deployment).

Once the model is downloaded, create the application flow for the model. The process of creating the flow and building it is similar to using Inference API operators, with the only difference being that when selecting operators, you need to choose those with "Pte" in their names. These operators support local model invocation. The main differences between the two types of operators are as follows:

In the case of using locally deployed models, there is no longer a need for an HTTP proxy or a Hugging Face account. Instead, you select a local folder path. The downloaded model resides within this folder. Generally, the operator does not pull additional files during the call, but our team found that in some cases, additional model files needed to be downloaded at runtime, which can slow down the operator's startup speed. Additionally, two attributes worth noting are the Python script snippet and the computing device. Due to the vast number of models on the Hugging Face website, some models may have subtle differences when called. If there are issues with starting the model, adjusting the Python script snippet to ensure the model loads and executes properly may be necessary. The computing device attribute specifies on which computing unit (CPU or GPU) the model runs on the local machine. This parameter can be set based on the local machine's configuration. Other relevant operator attributes can be configured following the operator's instructions. Once configured, the flow can be driven to use the model locally.

The Hugging Face website provides a platform where practitioners worldwide share various datasets for convenient use in model training or testing. HuggingFists offers a connector specifically for the Hugging Face website, allowing users to select suitable datasets and store them in a designated storage system for easy application of this data. To access datasets on Hugging Face, follow these steps:

In the data source functional module, select the Application Tab page and create a Hugging Face data source by selecting the HuggingFace connector and configuring access accounts and access proxies as needed. See the image below:

After clicking the "Submit" button, a Hugging Face data source will be created. You can browse the data source by clicking the "View Data" button and select the appropriate datasets.

Once you have selected the desired dataset, you can define a data processing flow to read the data from the dataset and store it in a database or file.

By following these steps, users can easily access datasets on Hugging Face and incorporate them into their data processing workflows for various applications.

| Video Link | Content Description |
|---|---|
| https://www.bilibili.com/video/BV1Ku4y1r72H/ | How to use various models from HuggingFace with low-code. Provides examples of using a natural language model and a computer vision model. The examples involve the process of creating accounts and HTTP proxies. |
| https://www.bilibili.com/video/BV1G84y1m79m/ | How to use various datasets from HuggingFace with low-code. |
| https://www.bilibili.com/video/BV1oy4y1A7Bd/ | How to desaturate, rotate, and crop images using low-code. |
| https://www.bilibili.com/video/BV1SP411W7kv/ | How to extract text information from HTML using low-code. |
| https://www.bilibili.com/video/BV138411Q7ia/ | How to read data from MySQL using low-code. |
| https://www.bilibili.com/video/BV1qN4y1R7Pt/ | How to read data from MySQL using flow variables with low-code. |
| https://www.bilibili.com/video/BV1ok4y1A7ZM/ | How to read data from MySQL using context variables with low-code. |
| https://www.bilibili.com/video/BV19F411X7D1/ | How to extract text from Visio files using low-code. |
| https://www.bilibili.com/video/BV1RN41127vt/ | How to extract relationships from Visio files using low-code. |
| https://www.bilibili.com/video/BV1qV41157HH/ | How to extract text and images from Word documents using low-code. |
| https://www.bilibili.com/video/BV1X94y1C7Bh/ | How to extract tables from Word documents using low-code. |
| https://www.bilibili.com/video/BV1Tg4y1J7WV/ | How to extract text from PDFs using low-code. |
| https://www.bilibili.com/video/BV1jk4y1V7nK/ | How to read data from Excel using low-code. |
| https://www.bilibili.com/video/BV1Ks4y1c7bz/ | How to write data to a MySQL table using low-code. |
| https://www.bilibili.com/video/BV16u411b79b/ | How to clear MySQL tables and perform multi-table writes simultaneously using low-code. |
| https://www.bilibili.com/video/BV1Ps4y117pS/ | How to read and write Avro files using low-code. |
| https://www.bilibili.com/video/BV14u411Y7Z3/ | How to read XML-formatted data. |
| https://www.bilibili.com/video/BV1D8411o7x6/ | How to read JSON-formatted data. |
| https://www.bilibili.com/video/BV1yV4y1Q7uT/ | How to debug processes using breakpoints on the platform. |
| https://www.bilibili.com/video/BV17k4y1h79M/ | How to extract entity names using low-code. |
| https://www.bilibili.com/video/BV1vw411U7ZV/ | How to extract time information from text using low-code. |
| https://www.bilibili.com/video/BV1Vj411y7j4/ | How to extract phone numbers from text using low-code. |

Alternative AI tools for HuggingFists

Similar Open Source Tools

airbyte-platform

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's low-code Connector Development Kit (CDK). Airbyte is used by data engineers and analysts at companies of all sizes to move data for a variety of purposes, including data warehousing, data analysis, and machine learning.

PulsarRPA

PulsarRPA is a high-performance, distributed, open-source Robotic Process Automation (RPA) framework designed to handle large-scale RPA tasks with ease. It provides a comprehensive solution for browser automation, web content understanding, and data extraction. PulsarRPA addresses challenges of browser automation and accurate web data extraction from complex and evolving websites. It incorporates innovative technologies like browser rendering, RPA, intelligent scraping, advanced DOM parsing, and distributed architecture to ensure efficient, accurate, and scalable web data extraction. The tool is open-source, customizable, and supports cutting-edge information extraction technology, making it a preferred solution for large-scale web data extraction.

kdbai-samples

KDB.AI is a time-based vector database that allows developers to build scalable, reliable, and real-time applications by providing advanced search, recommendation, and personalization for Generative AI applications. It supports multiple index types, distance metrics, top-N and metadata filtered retrieval, as well as Python and REST interfaces. The repository contains samples demonstrating various use-cases such as temporal similarity search, document search, image search, recommendation systems, sentiment analysis, and more. KDB.AI integrates with platforms like ChatGPT, Langchain, and LlamaIndex. The setup steps require Unix terminal, Python 3.8+, and pip installed. Users can install necessary Python packages and run Jupyter notebooks to interact with the samples.

chat-with-your-data-solution-accelerator

Chat with your data using OpenAI and AI Search. This solution accelerator uses an Azure OpenAI GPT model and an Azure AI Search index generated from your data, which is integrated into a web application to provide a natural language interface, including speech-to-text functionality, for search queries. Users can drag and drop files, point to storage, and take care of technical setup to transform documents. There is a web app that users can create in their own subscription with security and authentication.

llmops-promptflow-template

LLMOps with Prompt flow is a template and guidance for building LLM-infused apps using Prompt flow. It provides centralized code hosting, lifecycle management, variant and hyperparameter experimentation, A/B deployment, many-to-many dataset/flow relationships, multiple deployment targets, comprehensive reporting, BYOF capabilities, configuration-based development, local prompt experimentation and evaluation, endpoint testing, and optional Human-in-loop validation. The tool is customizable to suit various application needs.

LLMonFHIR

LLMonFHIR is an iOS application that utilizes large language models (LLMs) to interpret and provide context around patient data in the Fast Healthcare Interoperability Resources (FHIR) format. It connects to the OpenAI GPT API to analyze FHIR resources, supports multiple languages, and allows users to interact with their health data stored in the Apple Health app. The app aims to simplify complex health records, provide insights, and facilitate deeper understanding through a conversational interface. However, it is an experimental app for informational purposes only and should not be used as a substitute for professional medical advice. Users are advised to verify information provided by AI models and consult healthcare professionals for personalized advice.

dataherald

Dataherald is a natural language-to-SQL engine built for enterprise-level question answering over structured data. It allows you to set up an API from your database that can answer questions in plain English. You can use Dataherald to: * Allow business users to get insights from the data warehouse without going through a data analyst * Enable Q+A from your production DBs inside your SaaS application * Create a ChatGPT plug-in from your proprietary data

intelligence-toolkit

The Intelligence Toolkit is a suite of interactive workflows designed to help domain experts make sense of real-world data by identifying patterns, themes, relationships, and risks within complex datasets. It utilizes generative AI (GPT models) to create reports on findings of interest. The toolkit supports analysis of case, entity, and text data, providing various interactive workflows for different intelligence tasks. Users are expected to evaluate the quality of data insights and AI interpretations before taking action. The system is designed for moderate-sized datasets and responsible use of personal case data. It uses the GPT-4 model from OpenAI or Azure OpenAI APIs for generating reports and insights.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

morphik-core

Morphik is an AI-native toolset designed to help developers integrate context into their AI applications by providing tools to store, represent, and search unstructured data. It offers features such as multimodal search, fast metadata extraction, and integrations with existing tools. Morphik aims to address the challenges of traditional AI approaches that struggle with visually rich documents and provide a more comprehensive solution for understanding and processing complex data.

cymbal-air-toolbox-demo

Cymbal Air Toolbox Demo is a project that provides a production-quality reference implementation for building Agentic applications using Agents and Retrieval Augmented Generation (RAG) to query and interact with data stored in Google Cloud Databases. The demo showcases a customer service assistant for a fictional airline, Cymbal Air, assisting travelers with flight management and providing information about San Francisco International Airport (SFO). It utilizes techniques like Retrieval Augmented Generation (RAG) and Agent-based Orchestration to enhance responses and handle a wide variety of queries. The architecture includes components like the user-facing application, MCP Toolbox middleware server, and a database, offering advantages such as better security, scalability, and recall for agents.

csghub-server

CSGHub Server is a part of the open source and reliable large model assets management platform - CSGHub. It focuses on management of models, datasets, and other LLM assets through REST API. Key features include creation and management of users and organizations, auto-tagging of model and dataset labels, search functionality, online preview of dataset files, content moderation for text and image, download of individual files, tracking of model and dataset activity data. The tool is extensible and customizable, supporting different git servers, flexible LFS storage system configuration, and content moderation options. The roadmap includes support for more Git servers, Git LFS, dataset online viewer, model/dataset auto-tag, S3 protocol support, model format conversion, and model one-click deploy. The project is licensed under Apache 2.0 and welcomes contributions.

ai2apps

AI2Apps is a visual IDE for building LLM-based AI agent applications, enabling developers to efficiently create AI agents through drag-and-drop, with features like design-to-development for rapid prototyping, direct packaging of agents into apps, powerful debugging capabilities, enhanced user interaction, efficient team collaboration, flexible deployment, multilingual support, simplified product maintenance, and extensibility through plugins.

dstoolkit-text2sql-and-imageprocessing

This repository provides sample code for improving RAG applications with rich data sources including SQL Warehouses and documents analysed with Azure Document Intelligence. It includes components for Text2SQL generation and querying, linking Azure Document Intelligence with AI Search for processing complex documents, and deploying AI search indexes. The plugins and skills aim to enhance response quality in RAG applications by accessing and pulling data from SQL tables, drawing insights from complex charts and images, and intelligently grouping similar sentences.

NaLLM

The NaLLM project repository explores the synergies between Neo4j and Large Language Models (LLMs) through three primary use cases: Natural Language Interface to a Knowledge Graph, Creating a Knowledge Graph from Unstructured Data, and Generating a Report using static and LLM data. The repository contains backend and frontend code organized for easy navigation. It includes blog posts, a demo database, instructions for running demos, and guidelines for contributing. The project aims to showcase the potential of Neo4j and LLMs in various applications.

For similar tasks

Mercury

Mercury is a code efficiency benchmark designed for code synthesis tasks. It includes 1,889 programming tasks of varying difficulty levels and provides test case generators for comprehensive evaluation. The benchmark aims to assess the efficiency of large language models in generating code solutions.

ruoyi-vue-pro

The ruoyi-vue-pro repository is an open-source project that provides a comprehensive development platform with various functionalities such as system features, infrastructure, member center, data reports, workflow, payment system, mall system, ERP system, CRM system, and AI big model. It is built using Java backend with Spring Boot framework and Vue frontend with different versions like Vue3 with element-plus, Vue3 with vben(ant-design-vue), and Vue2 with element-ui. The project aims to offer a fast development platform for developers and enterprises, supporting features like dynamic menu loading, button-level access control, SaaS multi-tenancy, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and cloud services, and more.

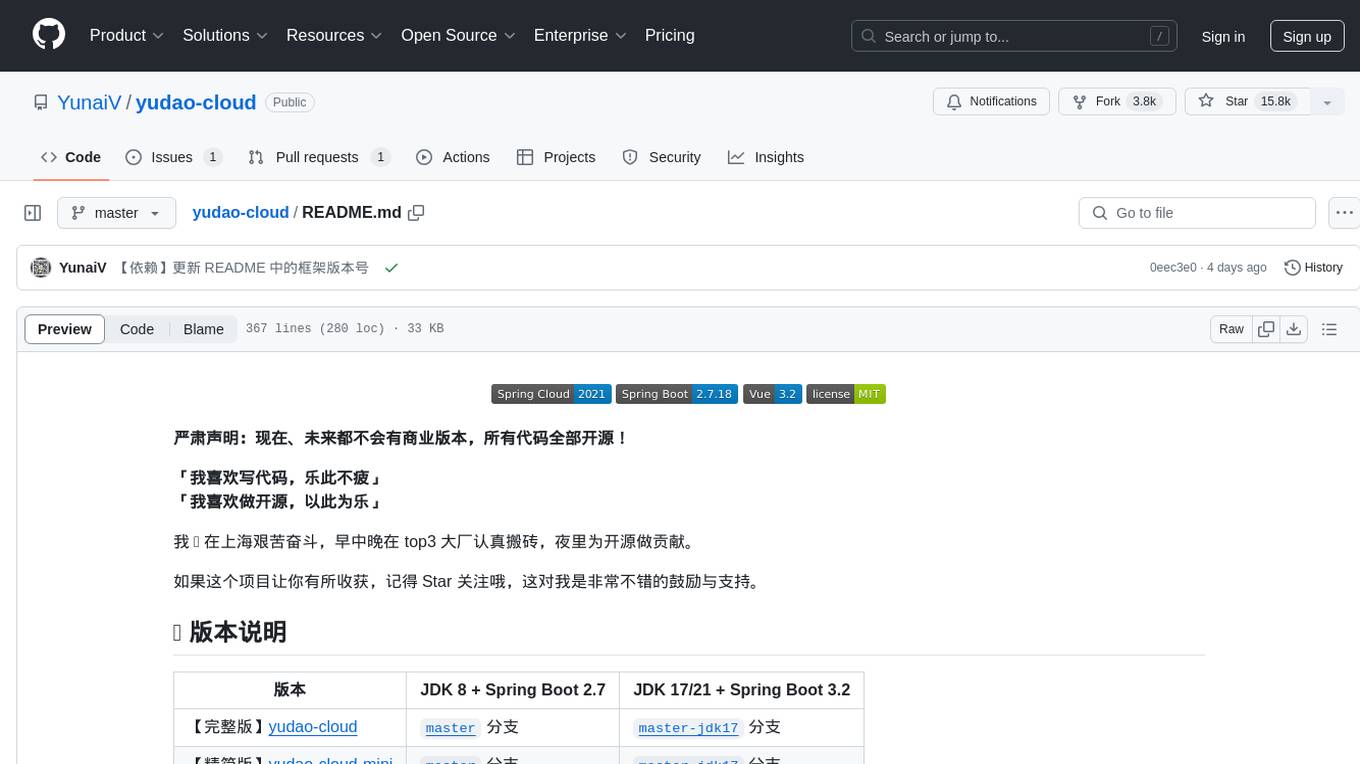

yudao-cloud

Yudao-cloud is an open-source project designed to provide a fast development platform for developers in China. It includes various system functions, infrastructure, member center, data reports, workflow, mall system, WeChat public account, CRM, ERP, etc. The project is based on Java backend with Spring Boot and Spring Cloud Alibaba microservices architecture. It supports multiple databases, message queues, authentication systems, dynamic menu loading, SaaS multi-tenant system, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and more. The project is well-documented and follows the Alibaba Java development guidelines, ensuring clean code and architecture.

yudao-boot-mini

yudao-boot-mini is an open-source project focused on developing a rapid development platform for developers in China. It includes features like system functions, infrastructure, member center, data reports, workflow, mall system, WeChat official account, CRM, ERP, etc. The project is based on Spring Boot with Java backend and Vue for frontend. It offers various functionalities such as user management, role management, menu management, department management, workflow management, payment system, code generation, API documentation, database documentation, file service, WebSocket integration, message queue, Java monitoring, and more. The project is licensed under the MIT License, allowing both individuals and enterprises to use it freely without restrictions.

psychic

Finic is an open source python-based integration platform designed to simplify integration workflows for both business users and developers. It offers a drag-and-drop UI, a dedicated Python environment for each workflow, and generative AI features to streamline transformation tasks. With a focus on decoupling integration from product code, Finic aims to provide faster and more flexible integrations by supporting custom connectors. The tool is open source and allows deployment to users' own cloud environments with minimal legal friction.

finic

Finic is an open source python-based integration platform designed for business users to create v1 integrations with minimal code, while also being flexible for developers to build complex integrations directly in python. It offers a low-code web UI, a dedicated Python environment for each workflow, and generative AI features. Finic decouples integration from product code, supports custom connectors, and is open source. It is not an ETL tool but focuses on integrating functionality between applications via APIs or SFTP, and it is not a workflow automation tool optimized for complex use cases.

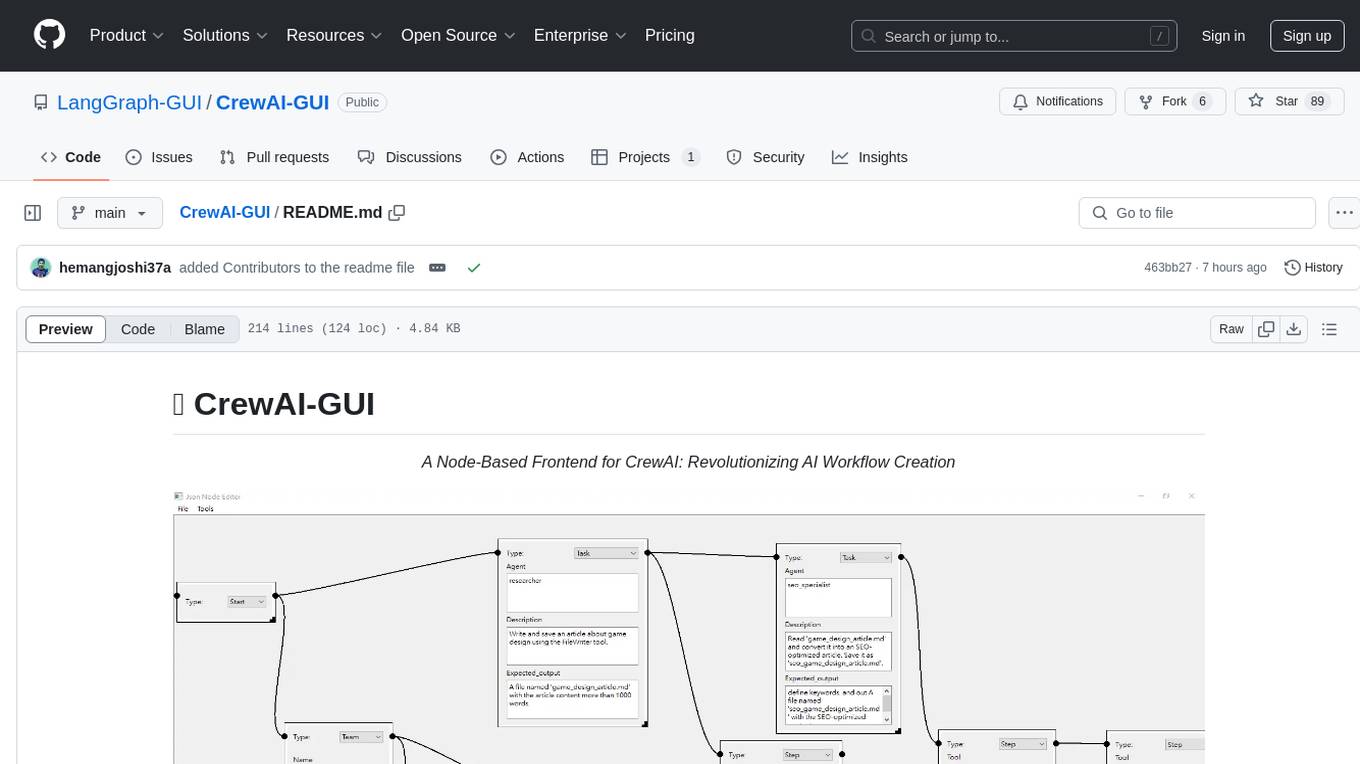

CrewAI-GUI

CrewAI-GUI is a Node-Based Frontend tool designed to revolutionize AI workflow creation. It empowers users to design complex AI agent interactions through an intuitive drag-and-drop interface, export designs to JSON for modularity and reusability, and supports both GPT-4 API and Ollama for flexible AI backend. The tool ensures cross-platform compatibility, allowing users to create AI workflows on Windows, Linux, or macOS efficiently.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.