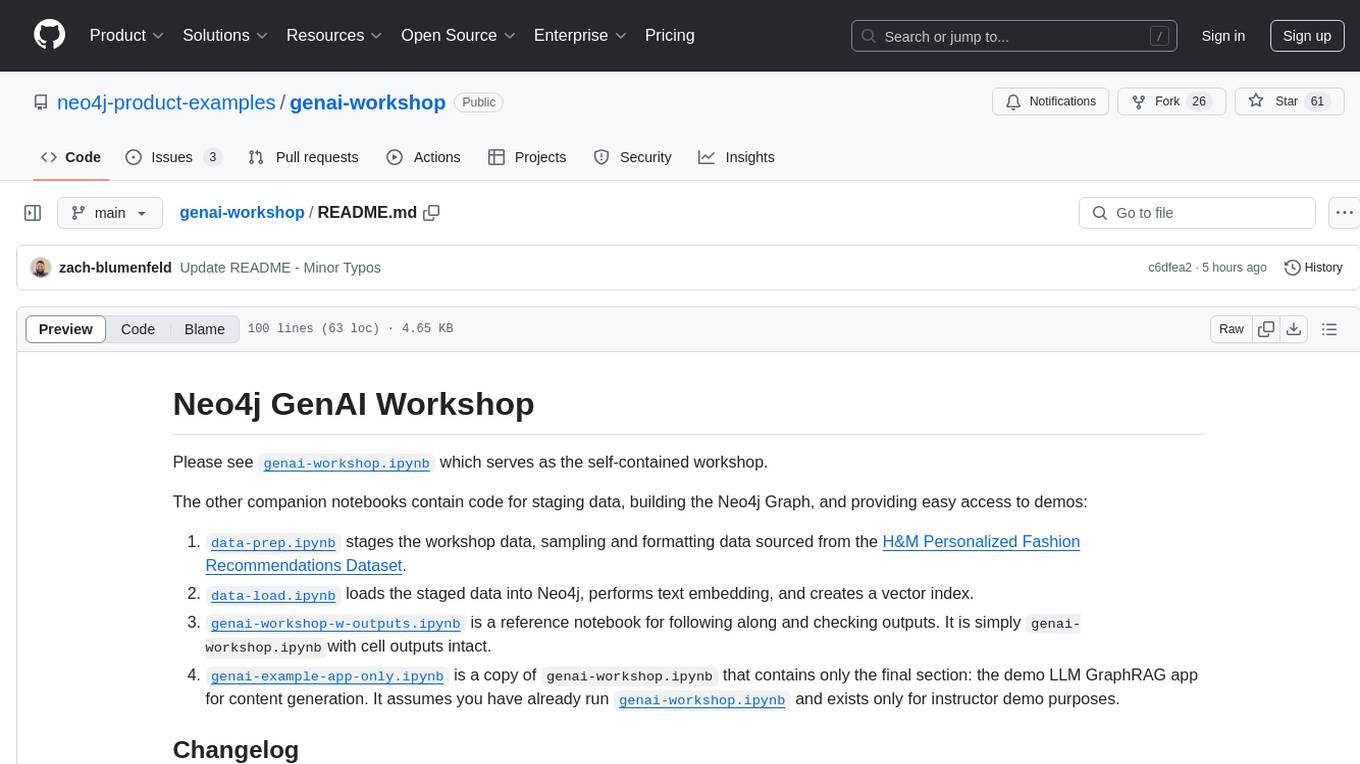

genai-workshop

Generative AI Deep Dive Workshops

Stars: 61

The Neo4j GenAI Workshop repository contains notebooks for a workshop focusing on building a Neo4j Graph, text embedding, and providing demos for content generation. The workshop includes data staging, loading, and exploration using Cypher queries. It also covers improvements in LLM response quality, GPT-4 usage, and vector search speed. The repository has undergone multiple updates to enhance course quality, simplify content, and provide better explainers and examples.

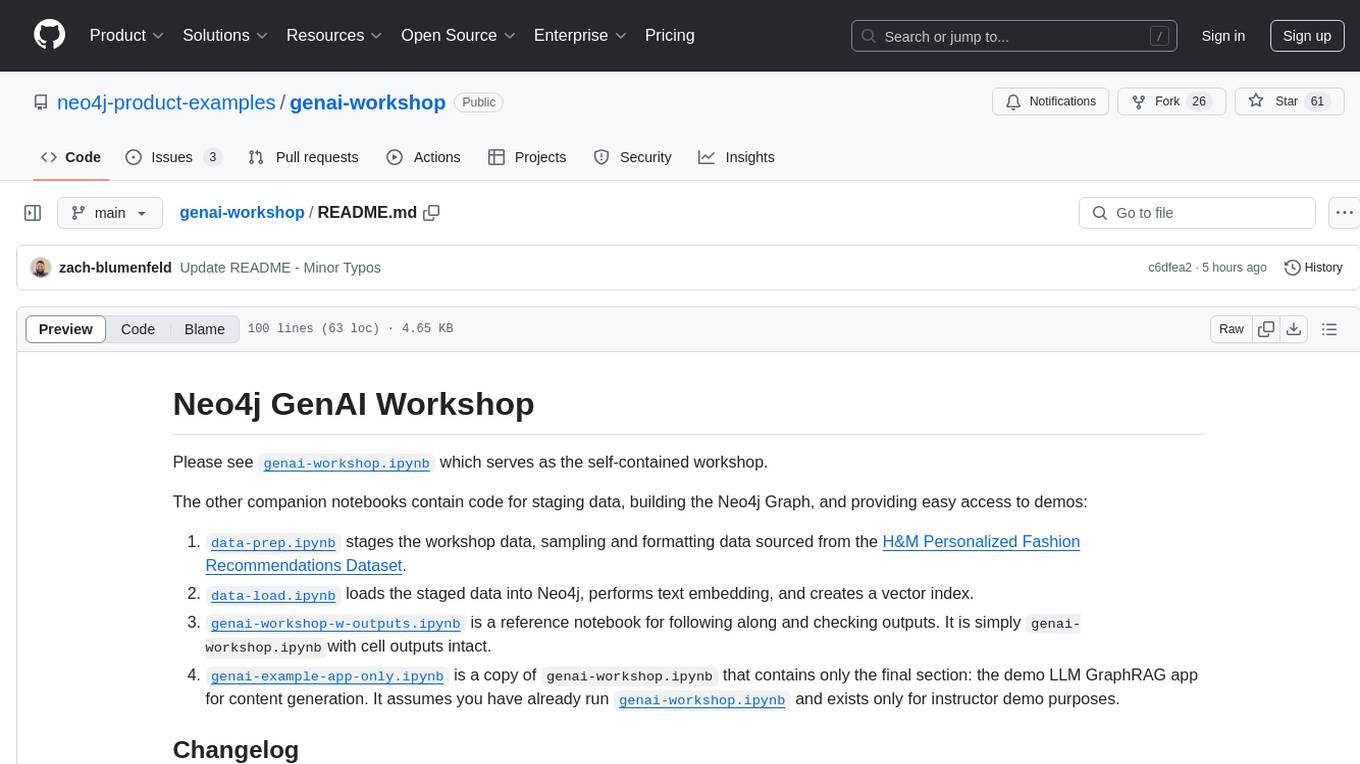

README:

Please see genai-workshop.ipynb which serves as the self-contained workshop.

The other companion notebooks contain code for staging data, building the Neo4j Graph, and providing easy access to demos:

-

data-prep.ipynbstages the workshop data, sampling and formatting data sourced from the H&M Personalized Fashion Recommendations Dataset. -

data-load.ipynbloads the staged data into Neo4j, performs text embedding, and creates a vector index. -

genai-workshop-w-outputs.ipynbis a reference notebook for following along and checking outputs. It is simplygenai-workshop.ipynbwith cell outputs intact. -

genai-example-app-only.ipynbis a copy ofgenai-workshop.ipynbthat contains only the final section: the demo LLM GraphRAG app for content generation. It assumes you have already rungenai-workshop.ipynband exists only for instructor demo purposes.

-

Transition from using Neo4j Sandbox to AuraDS

-

Split out data loading

- Split out data load into a separate notebook

- Live workshops now begin with the dataset pre-loaded to cut down on time and spend more of the course walking through GraphRAG. the data-load.ipynb is kept for reference and replication.

- Remove

neo4j_toolsPython package. the functions/utilities are now included in data-load.ipynb

-

Updated Workshop Slides

-

Added more query exploration & improved explainer queries

- Add Browser-based graph exploration in beginning of workshop

- Include database tips & more Cypher queries in multiple steps

- Update explainer markdown and code cells for graph patterns and GDS for clarity

- Various other minor adjustments to markdown and code to improve course quality

-

Added

genai-workshop-with-outputs.ipynband GitHub Actions Workflow-

genai-workshp.ipynbis now maintained with cleared outputs for better workshop experience and easier PR review - A GitHub actions workflow automatically tests data loading, workshop, and example-only notebooks and auto-commits

genai-workshop-with-outputs.ipynbfile for each PR.

-

-

improve LLM response quality and cleaned up code for LLM chains and vector stores

- parameterizing customer id so don't need to recreate chains & stores for each customer

- updated prompts to better account for seasonality and use all retrieved data

- update to use gpt-4o

-

Improve text embedding speed and reduce code by transitioning to native

genai.vectorCypher functions -

Updated slides

-

Various other minor adjustments to markdown and code to improve course quality

-

(fix) Add

langchain_communityto the libraries that are pip installed in the notebooks -

Simplify and Shorten Course

- Shortened GDS section to just three cells to run

- Condensed Vector Search Section

- Condensed Loading to Single Notebook Cell

- Switched Recommendation Retriever to a Simple KG Query

- Adding

neo4j_toolsPackage to hold convenience functions for loading data and reduce code footprint in main workshop notebook - Updated to GPT-4 throughout

- General Notebook Cleaning - Removed duplicate load statements, updating to newest llm packages, etc.

-

Provide Better Explainers & Examples

- Add A Chain for Printing Final Prompt to LLM with retrieval data to better explain process.

- Added Differentiated Names for Customer Examples in Demo App.

-

Added Additional Resources

- Added workshop slides

- Added "demo only" notebook

- Initial 5-part course with

- Building the knowledge graph

- Vector search & text embedding

- Graph patterns to improve semantic search

- knowledge graph inference & ML

- Building the LLM chain and demo app for generating content

Contributions are welcome! To contribute please:

- Make a PR with a descriptive name

- If you are updating

genai-workshop.ipynbplease ensure to clear all outputs before committing. - Do not alter the

genai-workshop-w-outputs.ipynbfile. This file is autogenerated upon creating/updating PRs.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for genai-workshop

Similar Open Source Tools

genai-workshop

The Neo4j GenAI Workshop repository contains notebooks for a workshop focusing on building a Neo4j Graph, text embedding, and providing demos for content generation. The workshop includes data staging, loading, and exploration using Cypher queries. It also covers improvements in LLM response quality, GPT-4 usage, and vector search speed. The repository has undergone multiple updates to enhance course quality, simplify content, and provide better explainers and examples.

graphrag-local-ollama

GraphRAG Local Ollama is a repository that offers an adaptation of Microsoft's GraphRAG, customized to support local models downloaded using Ollama. It enables users to leverage local models with Ollama for large language models (LLMs) and embeddings, eliminating the need for costly OpenAPI models. The repository provides a simple setup process and allows users to perform question answering over private text corpora by building a graph-based text index and generating community summaries for closely-related entities. GraphRAG Local Ollama aims to improve the comprehensiveness and diversity of generated answers for global sensemaking questions over datasets.

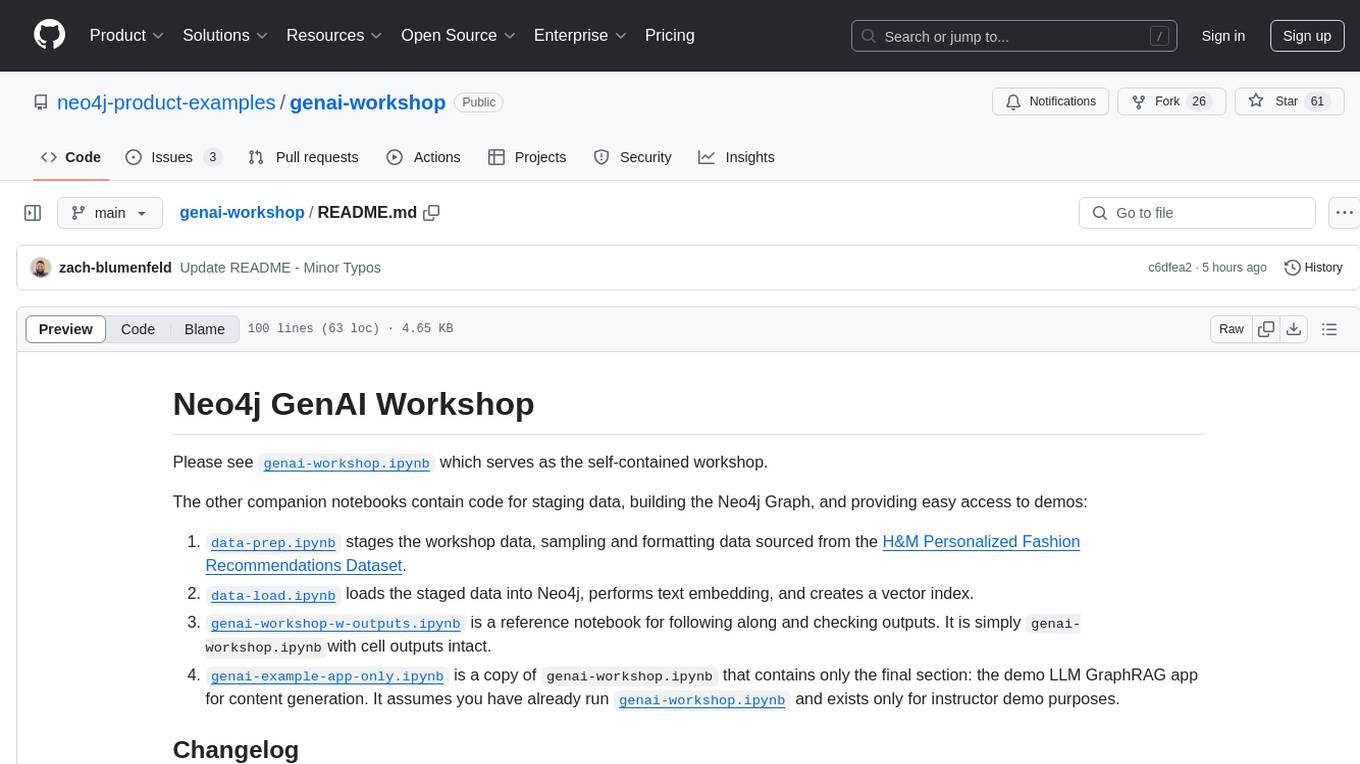

GraphRAG-Local-UI

GraphRAG Local with Interactive UI is an adaptation of Microsoft's GraphRAG, tailored to support local models and featuring a comprehensive interactive user interface. It allows users to leverage local models for LLM and embeddings, visualize knowledge graphs in 2D or 3D, manage files, settings, and queries, and explore indexing outputs. The tool aims to be cost-effective by eliminating dependency on costly cloud-based models and offers flexible querying options for global, local, and direct chat queries.

cognita

Cognita is an open-source framework to organize your RAG codebase along with a frontend to play around with different RAG customizations. It provides a simple way to organize your codebase so that it becomes easy to test it locally while also being able to deploy it in a production ready environment. The key issues that arise while productionizing RAG system from a Jupyter Notebook are: 1. **Chunking and Embedding Job** : The chunking and embedding code usually needs to be abstracted out and deployed as a job. Sometimes the job will need to run on a schedule or be trigerred via an event to keep the data updated. 2. **Query Service** : The code that generates the answer from the query needs to be wrapped up in a api server like FastAPI and should be deployed as a service. This service should be able to handle multiple queries at the same time and also autoscale with higher traffic. 3. **LLM / Embedding Model Deployment** : Often times, if we are using open-source models, we load the model in the Jupyter notebook. This will need to be hosted as a separate service in production and model will need to be called as an API. 4. **Vector DB deployment** : Most testing happens on vector DBs in memory or on disk. However, in production, the DBs need to be deployed in a more scalable and reliable way. Cognita makes it really easy to customize and experiment everything about a RAG system and still be able to deploy it in a good way. It also ships with a UI that makes it easier to try out different RAG configurations and see the results in real time. You can use it locally or with/without using any Truefoundry components. However, using Truefoundry components makes it easier to test different models and deploy the system in a scalable way. Cognita allows you to host multiple RAG systems using one app. ### Advantages of using Cognita are: 1. A central reusable repository of parsers, loaders, embedders and retrievers. 2. Ability for non-technical users to play with UI - Upload documents and perform QnA using modules built by the development team. 3. Fully API driven - which allows integration with other systems. > If you use Cognita with Truefoundry AI Gateway, you can get logging, metrics and feedback mechanism for your user queries. ### Features: 1. Support for multiple document retrievers that use `Similarity Search`, `Query Decompostion`, `Document Reranking`, etc 2. Support for SOTA OpenSource embeddings and reranking from `mixedbread-ai` 3. Support for using LLMs using `Ollama` 4. Support for incremental indexing that ingests entire documents in batches (reduces compute burden), keeps track of already indexed documents and prevents re-indexing of those docs.

reductstore

ReductStore is a high-performance time series database designed for storing and managing large amounts of unstructured blob data. It offers features such as real-time querying, batching data, and HTTP(S) API for edge computing, computer vision, and IoT applications. The database ensures data integrity, implements retention policies, and provides efficient data access, making it a cost-effective solution for applications requiring unstructured data storage and access at specific time intervals.

merlin

Merlin is a groundbreaking model capable of generating natural language responses intricately linked with object trajectories of multiple images. It excels in predicting and reasoning about future events based on initial observations, showcasing unprecedented capability in future prediction and reasoning. Merlin achieves state-of-the-art performance on the Future Reasoning Benchmark and multiple existing multimodal language models benchmarks, demonstrating powerful multi-modal general ability and foresight minds.

poml

POML (Prompt Orchestration Markup Language) is a novel markup language designed to bring structure, maintainability, and versatility to advanced prompt engineering for Large Language Models (LLMs). It addresses common challenges in prompt development, such as lack of structure, complex data integration, format sensitivity, and inadequate tooling. POML provides a systematic way to organize prompt components, integrate diverse data types seamlessly, and manage presentation variations, empowering developers to create more sophisticated and reliable LLM applications.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

radicalbit-ai-monitoring

The Radicalbit AI Monitoring Platform provides a comprehensive solution for monitoring Machine Learning and Large Language models in production. It helps proactively identify and address potential performance issues by analyzing data quality, model quality, and model drift. The repository contains files and projects for running the platform, including UI, API, SDK, and Spark components. Installation using Docker compose is provided, allowing deployment with a K3s cluster and interaction with a k9s container. The platform documentation includes a step-by-step guide for installation and creating dashboards. Community engagement is encouraged through a Discord server. The roadmap includes adding functionalities for batch and real-time workloads, covering various model types and tasks.

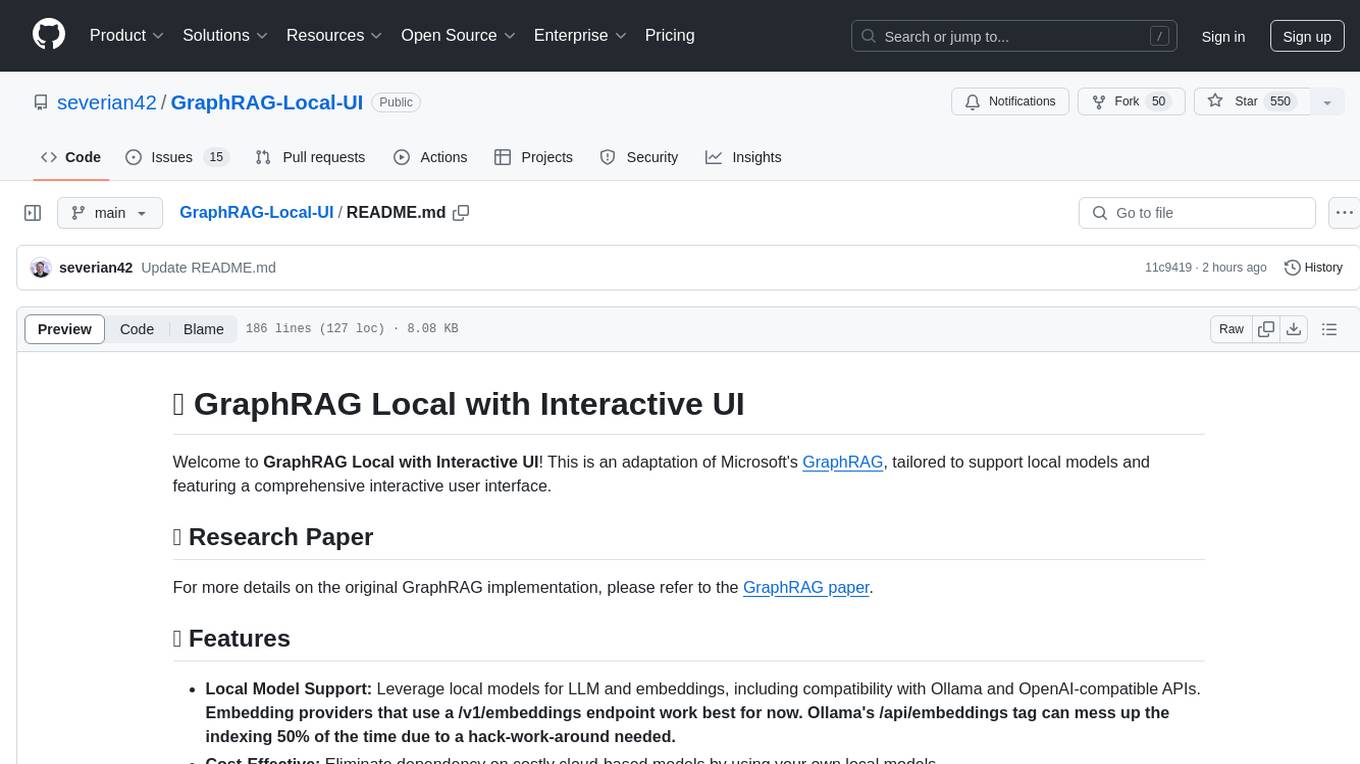

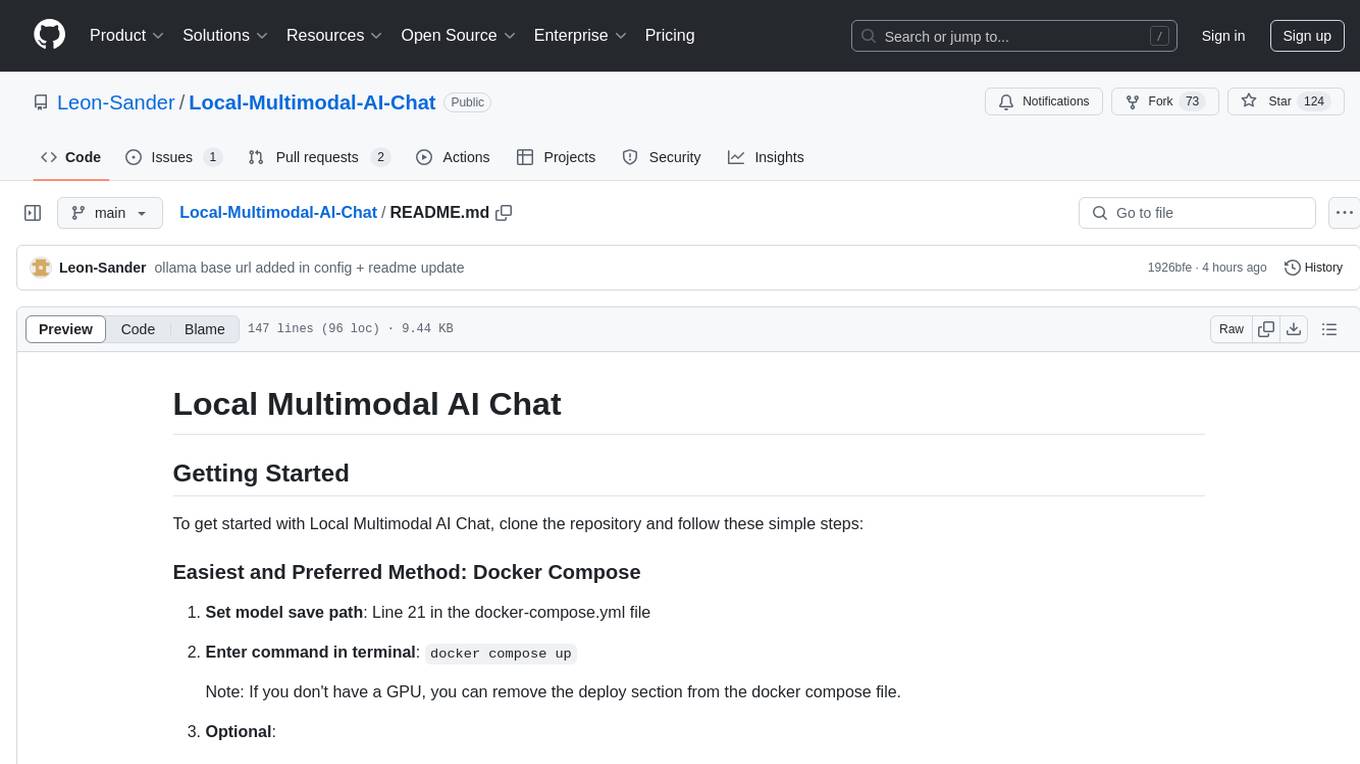

Local-Multimodal-AI-Chat

Local Multimodal AI Chat is a multimodal chat application that integrates various AI models to manage audio, images, and PDFs seamlessly within a single interface. It offers local model processing with Ollama for data privacy, integration with OpenAI API for broader AI capabilities, audio chatting with Whisper AI for accurate voice interpretation, and PDF chatting with Chroma DB for efficient PDF interactions. The application is designed for AI enthusiasts and developers seeking a comprehensive solution for multimodal AI technologies.

warc-gpt

WARC-GPT is an experimental retrieval augmented generation pipeline for web archive collections. It allows users to interact with WARC files, extract text, generate text embeddings, visualize embeddings, and interact with a web UI and API. The tool is highly customizable, supporting various LLMs, providers, and embedding models. Users can configure the application using environment variables, ingest WARC files, start the server, and interact with the web UI and API to search for content and generate text completions. WARC-GPT is designed for exploration and experimentation in exploring web archives using AI.

multimodal-chat

Yet Another Chatbot is a sophisticated multimodal chat interface powered by advanced AI models and equipped with a variety of tools. This chatbot can search and browse the web in real-time, query Wikipedia for information, perform news and map searches, execute Python code, compose long-form articles mixing text and images, generate, search, and compare images, analyze documents and images, search and download arXiv papers, save conversations as text and audio files, manage checklists, and track personal improvements. It offers tools for web interaction, Wikipedia search, Python scripting, content management, image handling, arXiv integration, conversation generation, file management, personal improvement, and checklist management.

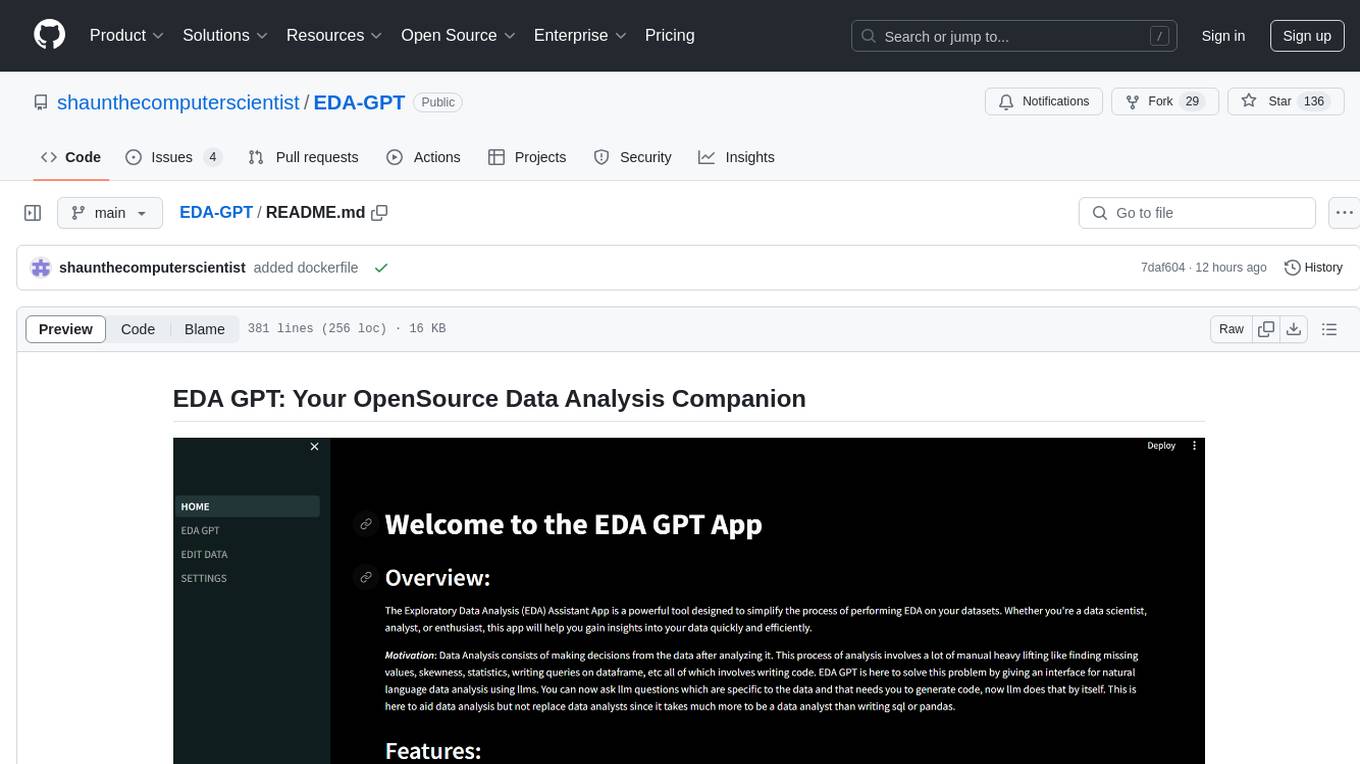

EDA-GPT

EDA GPT is an open-source data analysis companion that offers a comprehensive solution for structured and unstructured data analysis. It streamlines the data analysis process, empowering users to explore, visualize, and gain insights from their data. EDA GPT supports analyzing structured data in various formats like CSV, XLSX, and SQLite, generating graphs, and conducting in-depth analysis of unstructured data such as PDFs and images. It provides a user-friendly interface, powerful features, and capabilities like comparing performance with other tools, analyzing large language models, multimodal search, data cleaning, and editing. The tool is optimized for maximal parallel processing, searching internet and documents, and creating analysis reports from structured and unstructured data.

ComfyUI-Tara-LLM-Integration

Tara is a powerful node for ComfyUI that integrates Large Language Models (LLMs) to enhance and automate workflow processes. With Tara, you can create complex, intelligent workflows that refine and generate content, manage API keys, and seamlessly integrate various LLMs into your projects. It comprises nodes for handling OpenAI-compatible APIs, saving and loading API keys, composing multiple texts, and using predefined templates for OpenAI and Groq. Tara supports OpenAI and Grok models with plans to expand support to together.ai and Replicate. Users can install Tara via Git URL or ComfyUI Manager and utilize it for tasks like input guidance, saving and loading API keys, and generating text suitable for chaining in workflows.

PulsarRPA

PulsarRPA is a high-performance, distributed, open-source Robotic Process Automation (RPA) framework designed to handle large-scale RPA tasks with ease. It provides a comprehensive solution for browser automation, web content understanding, and data extraction. PulsarRPA addresses challenges of browser automation and accurate web data extraction from complex and evolving websites. It incorporates innovative technologies like browser rendering, RPA, intelligent scraping, advanced DOM parsing, and distributed architecture to ensure efficient, accurate, and scalable web data extraction. The tool is open-source, customizable, and supports cutting-edge information extraction technology, making it a preferred solution for large-scale web data extraction.

nucliadb

NucliaDB is a robust database that allows storing and searching on unstructured data. It is an out of the box hybrid search database, utilizing vector, full text and graph indexes. NucliaDB is written in Rust and Python. We designed it to index large datasets and provide multi-teanant support. When utilizing NucliaDB with Nuclia cloud, you are able to the power of an NLP database without the hassle of data extraction, enrichment and inference. We do all the hard work for you.

For similar tasks

octopus-v4

The Octopus-v4 project aims to build the world's largest graph of language models, integrating specialized models and training Octopus models to connect nodes efficiently. The project focuses on identifying, training, and connecting specialized models. The repository includes scripts for running the Octopus v4 model, methods for managing the graph, training code for specialized models, and inference code. Environment setup instructions are provided for Linux with NVIDIA GPU. The Octopus v4 model helps users find suitable models for tasks and reformats queries for effective processing. The project leverages Language Large Models for various domains and provides benchmark results. Users are encouraged to train and add specialized models following recommended procedures.

genai-workshop

The Neo4j GenAI Workshop repository contains notebooks for a workshop focusing on building a Neo4j Graph, text embedding, and providing demos for content generation. The workshop includes data staging, loading, and exploration using Cypher queries. It also covers improvements in LLM response quality, GPT-4 usage, and vector search speed. The repository has undergone multiple updates to enhance course quality, simplify content, and provide better explainers and examples.

nexus

Nexus is a virtual filesystem server designed for AI agents, offering a unified path-based API that integrates files, databases, APIs, and SaaS tools. It provides built-in permissions, memory management, semantic search, and skills lifecycle management. The Nexus Control Panel allows humans to curate files, memories, permissions, and integrations for agents, ensuring oversight and control while agents focus on execution. The repository includes the open-source server, SDK, CLI, and examples, catering to the needs of AI-native filesystems for cognitive agents.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

discourse-ai

Discourse AI is a plugin for the Discourse forum software that uses artificial intelligence to improve the user experience. It can automatically generate content, moderate posts, and answer questions. This can free up moderators and administrators to focus on other tasks, and it can help to create a more engaging and informative community.

Gemini-API

Gemini-API is a reverse-engineered asynchronous Python wrapper for Google Gemini web app (formerly Bard). It provides features like persistent cookies, ImageFx support, extension support, classified outputs, official flavor, and asynchronous operation. The tool allows users to generate contents from text or images, have conversations across multiple turns, retrieve images in response, generate images with ImageFx, save images to local files, use Gemini extensions, check and switch reply candidates, and control log level.

genai-for-marketing

This repository provides a deployment guide for utilizing Google Cloud's Generative AI tools in marketing scenarios. It includes step-by-step instructions, examples of crafting marketing materials, and supplementary Jupyter notebooks. The demos cover marketing insights, audience analysis, trendspotting, content search, content generation, and workspace integration. Users can access and visualize marketing data, analyze trends, improve search experience, and generate compelling content. The repository structure includes backend APIs, frontend code, sample notebooks, templates, and installation scripts.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.