pup

A Go-based command-line wrapper for easy interaction with Datadog APIs. Well suited for use by AI agents.

Stars: 191

Pup is a Go-based command-line wrapper designed for easy interaction with Datadog APIs. It provides a fast, cross-platform binary that supports both OAuth2 and traditional API key authentication. It offers simple commands for common Datadog operations, structured JSON output for parsing and automation, and dynamic client registration that gives each installation unique OAuth credentials. Pup currently implements 38 of 85+ available Datadog APIs, spanning core observability, monitoring & alerting, security & compliance, infrastructure & cloud, incident & operations, CI/CD & development, organization & access, and platform & configuration. Pup can be installed via Homebrew, Go install, or manual download, and authenticates with either OAuth2 or API keys. Its commands cover tasks such as testing the connection, managing monitors, querying metrics, and working with dashboards, SLOs, and incidents.

README:

NOTICE: Pup is in Preview. We are fine-tuning interactions and fixing bugs as they arise. Please file issues or submit PRs. Thank you for your early interest!

A Go-based command-line wrapper for easy interaction with Datadog APIs.

- Native Go Implementation: Fast, cross-platform binary

- OAuth2 Authentication: Secure browser-based login with PKCE protection

- API Key Support: Traditional API key authentication still available

- Simple Commands: Intuitive CLI for common Datadog operations

- JSON Output: Structured output for easy parsing and automation

- Dynamic Client Registration: Each installation gets unique OAuth credentials
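Because output is structured JSON, it can be consumed from other programs. The Go sketch below decodes a monitor listing and picks out alerting monitors. It assumes that `pup monitors list` prints a JSON array of objects with `id`, `name`, and `overall_state` fields, mirroring the Datadog Monitors API; pup's actual output shape is not documented here, so check real output before relying on these field names.

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
)

// Monitor holds the subset of fields this sketch reads. The field names
// mirror the Datadog Monitors API; pup's exact output shape is an assumption.
type Monitor struct {
	ID           int64  `json:"id"`
	Name         string `json:"name"`
	OverallState string `json:"overall_state"`
}

// parseMonitors decodes a JSON array of monitor objects.
func parseMonitors(data []byte) ([]Monitor, error) {
	var monitors []Monitor
	if err := json.Unmarshal(data, &monitors); err != nil {
		return nil, fmt.Errorf("decode monitors: %w", err)
	}
	return monitors, nil
}

// alerting returns the names of monitors whose overall state is "Alert".
func alerting(monitors []Monitor) []string {
	var names []string
	for _, m := range monitors {
		if m.OverallState == "Alert" {
			names = append(names, m.Name)
		}
	}
	return names
}

func main() {
	// Hypothetical sample; in practice this would be the output of `pup monitors list`.
	sample := []byte(`[
		{"id": 1, "name": "CPU high", "overall_state": "Alert"},
		{"id": 2, "name": "Disk full", "overall_state": "OK"}
	]`)
	monitors, err := parseMonitors(sample)
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(alerting(monitors)) // prints [CPU high]
}
```

To run it against live data, replace the sample with bytes read from `os.Stdin` and pipe `pup monitors list` into the program.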

Pup implements 38 of 85+ available Datadog APIs (44.7% coverage).

Summary:

- ✅ 35 Working - Fully implemented and functional

- ⏳ 3 Planned - Skeleton implementation, endpoints pending

- ❌ 48+ Not Implemented - Available in Datadog but not yet in pup

See docs/COMMANDS.md for the detailed command reference.

💡 Tip: Use Ctrl/Cmd+F to search for specific APIs. Request features via GitHub Issues.

📊 Core Observability (6/9 implemented)

| API Domain | Status | Pup Commands | Notes |
|---|---|---|---|
| Metrics | ✅ | `metrics search`, `metrics query`, `metrics list`, `metrics get` | V1 and V2 APIs supported |
| Logs | ✅ | `logs search`, `logs list`, `logs aggregate` | V1 and V2 APIs supported |
| Traces | ✅ | `traces search`, `traces list`, `traces aggregate` | APM trace support |
| Events | ✅ | `events list`, `events search`, `events get` | Infrastructure event management |
| RUM | ✅ | `rum apps`, `rum sessions`, `rum metrics list/get`, `rum retention-filters list/get` | Apps, sessions, metrics, retention filters (create/update pending) |
| APM Services | ✅ | `apm services`, `apm entities`, `apm dependencies`, `apm flow-map` | Service stats, operations, and resources; entity queries; dependencies; flow visualization |
| Profiling | ❌ | - | Not yet implemented |
| Session Replay | ❌ | - | Not yet implemented |
| Spans Metrics | ❌ | - | Not yet implemented |

🔔 Monitoring & Alerting (6/9 implemented)

| API Domain | Status | Pup Commands | Notes |
|---|---|---|---|
| Monitors | ✅ | `monitors list`, `monitors get`, `monitors delete`, `monitors search` | Full CRUD support with advanced search |
| Dashboards | ✅ | `dashboards list`, `dashboards get`, `dashboards delete`, `dashboards url` | Full management capabilities |
| SLOs | ✅ | `slos list`, `slos get`, `slos create`, `slos update`, `slos delete`, `slos corrections` | Full CRUD plus corrections |
| Synthetics | ✅ | `synthetics tests list`, `synthetics locations list` | Test management support |
| Downtimes | ✅ | `downtime list`, `downtime get`, `downtime cancel` | Full downtime management |
| Notebooks | ✅ | `notebooks list`, `notebooks get`, `notebooks delete` | Investigation notebooks supported |
| Dashboard Lists | ❌ | - | Not yet implemented |
| Powerpacks | ❌ | - | Not yet implemented |
| Workflow Automation | ❌ | - | Not yet implemented |

🔒 Security & Compliance (6/9 implemented)

| API Domain | Status | Pup Commands | Notes |
|---|---|---|---|
| Security Monitoring | ✅ | `security rules list`, `security signals list`, `security findings search` | Rules, signals, findings with advanced search |
| Static Analysis | ✅ | `static-analysis ast`, `static-analysis custom-rulesets`, `static-analysis sca`, `static-analysis coverage` | Code security analysis |
| Audit Logs | ✅ | `audit-logs list`, `audit-logs search` | Full audit log search and listing |
| Data Governance | ✅ | `data-governance scanner-rules list` | Sensitive data scanner rules |
| Application Security | ❌ | - | Not yet implemented |
| CSM Threats | ❌ | - | Not yet implemented |
| Cloud Security (CSPM) | ❌ | - | Not yet implemented |
| Sensitive Data Scanner | ❌ | - | Not yet implemented |

☁️ Infrastructure & Cloud (6/8 implemented)

| API Domain | Status | Pup Commands | Notes |
|---|---|---|---|
| Infrastructure | ✅ | `infrastructure hosts list`, `infrastructure hosts get` | Host inventory management |
| Tags | ✅ | `tags list`, `tags get`, `tags add`, `tags update`, `tags delete` | Host tag operations |
| Network | ⏳ | `network flows list`, `network devices list` | Placeholder - API endpoints pending |
| Cloud (AWS) | ✅ | `cloud aws list` | AWS integration management |
| Cloud (GCP) | ✅ | `cloud gcp list` | GCP integration management |
| Cloud (Azure) | ✅ | `cloud azure list` | Azure integration management |
| Containers | ❌ | - | Not yet implemented |
| Processes | ❌ | - | Not yet implemented |

🚨 Incident & Operations (6/7 implemented)

| API Domain | Status | Pup Commands | Notes |
|---|---|---|---|
| Incidents | ✅ | `incidents list`, `incidents get`, `incidents attachments` | Incident management with attachment support |
| On-Call (Teams) | ✅ | `on-call teams` (CRUD, memberships with roles) | Full team management system with admin/member roles |
| Case Management | ✅ | `cases` (create, search, assign, archive, projects) | Complete case management with priorities P1-P5 |
| Error Tracking | ✅ | `error-tracking issues search`, `error-tracking issues get` | Error issue search and details |
| Service Catalog | ✅ | `service-catalog list`, `service-catalog get` | Service registry management |
| Scorecards | ✅ | `scorecards list`, `scorecards get` | Service quality scores |
| Incident Services/Teams | ❌ | - | Not yet implemented |

🔧 CI/CD & Development (1/3 implemented)

| API Domain | Status | Pup Commands | Notes |
|---|---|---|---|
| CI Visibility | ✅ | `cicd pipelines list`, `cicd events list` | CI/CD pipeline visibility and events |
| Test Optimization | ❌ | - | Not yet implemented |
| DORA Metrics | ❌ | - | Not yet implemented |

👥 Organization & Access (5/6 implemented)

| API Domain | Status | Pup Commands | Notes |
|---|---|---|---|
| Users | ✅ | `users list`, `users get`, `users roles` | User and role management |
| Organizations | ✅ | `organizations get`, `organizations list` | Organization settings management |
| API Keys | ✅ | `api-keys list`, `api-keys get`, `api-keys create`, `api-keys delete` | Full API key CRUD |
| App Keys | ✅ | `app-keys list`, `app-keys get`, `app-keys register`, `app-keys unregister` | App key registration for Action Connections |
| Service Accounts | ✅ | - | Managed via `users` commands |
| Roles | ❌ | - | Listing only, via `users` commands |

⚙️ Platform & Configuration (7/9 implemented)

| API Domain | Status | Pup Commands | Notes |
|---|---|---|---|
| Usage Metering | ✅ | `usage summary`, `usage hourly` | Usage and billing metrics |
| Cost Management | ✅ | `cost projected`, `cost attribution`, `cost by-org` | Cost attribution by tags and organizations |
| Product Analytics | ✅ | `product-analytics events send` | Server-side product analytics events |
| Integrations | ✅ | `integrations slack`, `integrations pagerduty`, `integrations webhooks` | Third-party integrations support |
| Observability Pipelines | ⏳ | `obs-pipelines list`, `obs-pipelines get` | Placeholder - API endpoints pending |
| Miscellaneous | ✅ | `misc ip-ranges`, `misc status` | IP ranges and status |
| Key Management | ❌ | - | Not yet implemented |
| IP Allowlist | ❌ | - | Not yet implemented |

Install with Homebrew:

```bash
brew tap datadog/pack
brew install datadog/pack/pup
```

Or with Go install:

```bash
go install github.com/DataDog/pup@latest
```

Or download pre-built binaries from the latest release.

Pup supports two authentication methods. OAuth2 is preferred and will be used automatically if you've logged in.

OAuth2 provides secure, browser-based authentication with automatic token refresh.

```bash
# Set your Datadog site (optional)
export DD_SITE="datadoghq.com" # Defaults to datadoghq.com

# Login via browser
pup auth login

# Use any command - OAuth tokens are used automatically
pup monitors list

# Check status
pup auth status

# Logout
pup auth logout
```

Token Storage: Tokens are stored securely in your system's keychain (macOS Keychain, Windows Credential Manager, Linux Secret Service). Set `DD_TOKEN_STORAGE=file` to use file-based storage instead.
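The storage choice can be pictured as a small resolution step. This Go sketch is hypothetical: it honors an explicit `DD_TOKEN_STORAGE` value of `keychain` or `file` and, when the variable is unset, picks the keychain if a caller-supplied probe reports one is available. Falling back to file storage when no keychain exists is an assumption about what "auto-detect" means, not documented pup behavior.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// Backend names accepted by DD_TOKEN_STORAGE, per the README.
const (
	BackendKeychain = "keychain"
	BackendFile     = "file"
)

// chooseBackend resolves the token storage backend. An explicit
// DD_TOKEN_STORAGE value wins; otherwise the keychain is used when the
// caller reports one is available, with file storage as the fallback.
// keychainAvailable is a hypothetical probe, not pup's actual detection logic.
func chooseBackend(envValue string, keychainAvailable bool) (string, error) {
	switch strings.ToLower(strings.TrimSpace(envValue)) {
	case BackendKeychain:
		return BackendKeychain, nil
	case BackendFile:
		return BackendFile, nil
	case "":
		if keychainAvailable {
			return BackendKeychain, nil
		}
		return BackendFile, nil
	default:
		return "", fmt.Errorf("unsupported DD_TOKEN_STORAGE value %q", envValue)
	}
}

func main() {
	backend, err := chooseBackend(os.Getenv("DD_TOKEN_STORAGE"), true)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println("token storage:", backend)
}
```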

Note: OAuth2 requires Dynamic Client Registration (DCR) to be enabled on your Datadog site. If DCR is not available yet, use API key authentication.

See docs/OAUTH2.md for detailed OAuth2 documentation.
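The PKCE protection listed among the features follows RFC 7636. As an illustration of that standard (not pup's own code), this Go sketch generates a random code verifier and derives its S256 code challenge. A client sends the challenge with the authorization request and then presents the verifier during the token exchange, so an intercepted authorization code is useless on its own.

```go
package main

import (
	"crypto/rand"
	"crypto/sha256"
	"encoding/base64"
	"fmt"
)

// newCodeVerifier returns a high-entropy PKCE code verifier: 32 random
// bytes encoded as unpadded base64url, giving 43 characters (RFC 7636
// allows 43 to 128).
func newCodeVerifier() (string, error) {
	buf := make([]byte, 32)
	if _, err := rand.Read(buf); err != nil {
		return "", err
	}
	return base64.RawURLEncoding.EncodeToString(buf), nil
}

// codeChallengeS256 derives the S256 challenge sent with the authorization
// request: BASE64URL(SHA256(ASCII(verifier))) without padding.
func codeChallengeS256(verifier string) string {
	sum := sha256.Sum256([]byte(verifier))
	return base64.RawURLEncoding.EncodeToString(sum[:])
}

func main() {
	verifier, err := newCodeVerifier()
	if err != nil {
		panic(err)
	}
	fmt.Println("code_verifier: ", verifier)
	fmt.Println("code_challenge:", codeChallengeS256(verifier))
}
```

The example verifier from RFC 7636 Appendix B, `dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk`, should yield the challenge `E9Melhoa2OwvFrEMTJguCHaoeK1t8URWbuGJSstw-cM`, which makes a handy self-check.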

If OAuth2 tokens are not available, Pup automatically falls back to API key authentication.

```bash
export DD_API_KEY="your-datadog-api-key"
export DD_APP_KEY="your-datadog-application-key"
export DD_SITE="datadoghq.com" # Optional, defaults to datadoghq.com

# Use any command - API keys are used automatically
pup monitors list
```

Pup checks for authentication in this order:

- OAuth2 tokens (from `pup auth login`): used if valid tokens exist
- API keys (from `DD_API_KEY` and `DD_APP_KEY`): used if OAuth tokens are not available

Authentication commands:

```bash
# OAuth2 login (recommended)
pup auth login

# Check authentication status
pup auth status

# Refresh access token
pup auth refresh

# Logout
pup auth logout
```

Test the connection:

```bash
pup test
```

Monitors:

```bash
# List all monitors
pup monitors list

# Get specific monitor
pup monitors get 12345678

# Delete monitor
pup monitors delete 12345678 --yes
```

Metrics:

```bash
# Search metrics using classic query syntax (v1 API)
pup metrics search --query="avg:system.cpu.user{*}" --from="1h"

# Query time-series data (v2 API)
pup metrics query --query="avg:system.cpu.user{*}" --from="1h"

# List available metrics
pup metrics list --filter="system.*"
```

Dashboards:

```bash
# List all dashboards
pup dashboards list

# Get dashboard details
pup dashboards get abc-123-def

# Delete dashboard
pup dashboards delete abc-123-def --yes
```

SLOs:

```bash
# List all SLOs
pup slos list

# Get SLO details
pup slos get abc-123

# Delete SLO
pup slos delete abc-123 --yes
```

Incidents:

```bash
# List all incidents
pup incidents list

# Get incident details
pup incidents get abc-123-def
```

Global flags:

- `-o, --output`: Output format (`json`, `table`, `yaml`); default: `json`
- `-y, --yes`: Skip confirmation prompts for destructive operations

Environment variables:

- `DD_API_KEY`: Datadog API key (optional if using OAuth2)
- `DD_APP_KEY`: Datadog application key (optional if using OAuth2)
- `DD_SITE`: Datadog site (default: `datadoghq.com`)
- `DD_AUTO_APPROVE`: Auto-approve destructive operations (`true`/`false`)
- `DD_TOKEN_STORAGE`: Token storage backend (`keychain` or `file`; default: auto-detect)
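Datadog serves its API from `api.` followed by the site name (for example `api.datadoghq.eu` for the EU site). This Go sketch resolves `DD_SITE` to a base URL using the documented default; `apiBaseURL` is a hypothetical helper, not pup's implementation, and rejecting values that contain a scheme or path is a design choice of the sketch.

```go
package main

import (
	"fmt"
	"net/url"
	"os"
	"strings"
)

// defaultSite is the documented default for DD_SITE.
const defaultSite = "datadoghq.com"

// apiBaseURL maps a Datadog site such as "datadoghq.eu" to its API host,
// following the "api.<site>" convention. An empty site falls back to
// defaultSite.
func apiBaseURL(site string) (string, error) {
	site = strings.ToLower(strings.TrimSpace(site))
	if site == "" {
		site = defaultSite
	}
	// Expect a bare site name; reject values like "https://app.datadoghq.com".
	if strings.ContainsAny(site, "/:") {
		return "", fmt.Errorf("DD_SITE should be a bare site name, got %q", site)
	}
	u := url.URL{Scheme: "https", Host: "api." + site}
	return u.String(), nil
}

func main() {
	base, err := apiBaseURL(os.Getenv("DD_SITE"))
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println(base)
}
```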

```bash
# Run tests
go test ./...

# Build
go build -o pup .

# Run without building
go run main.go monitors list
```

Apache License 2.0 - see LICENSE for details.

For detailed documentation, see CLAUDE.md.


Alternative AI tools for pup

Similar Open Source Tools


spiceai

Spice is a portable runtime written in Rust that offers developers a unified SQL interface to materialize, accelerate, and query data from any database, data warehouse, or data lake. It connects, fuses, and delivers data to applications, machine-learning models, and AI backends, functioning as an application-specific, tier-optimized Database CDN. It is built with industry-leading technologies such as Apache DataFusion, Apache Arrow, Apache Arrow Flight, SQLite, and DuckDB. Spice makes it fast and easy to query data from one or more sources using SQL by co-locating a managed dataset with applications or machine-learning models and accelerating it with Arrow in-memory, SQLite/DuckDB, or attached PostgreSQL for fast, high-concurrency, low-latency queries.

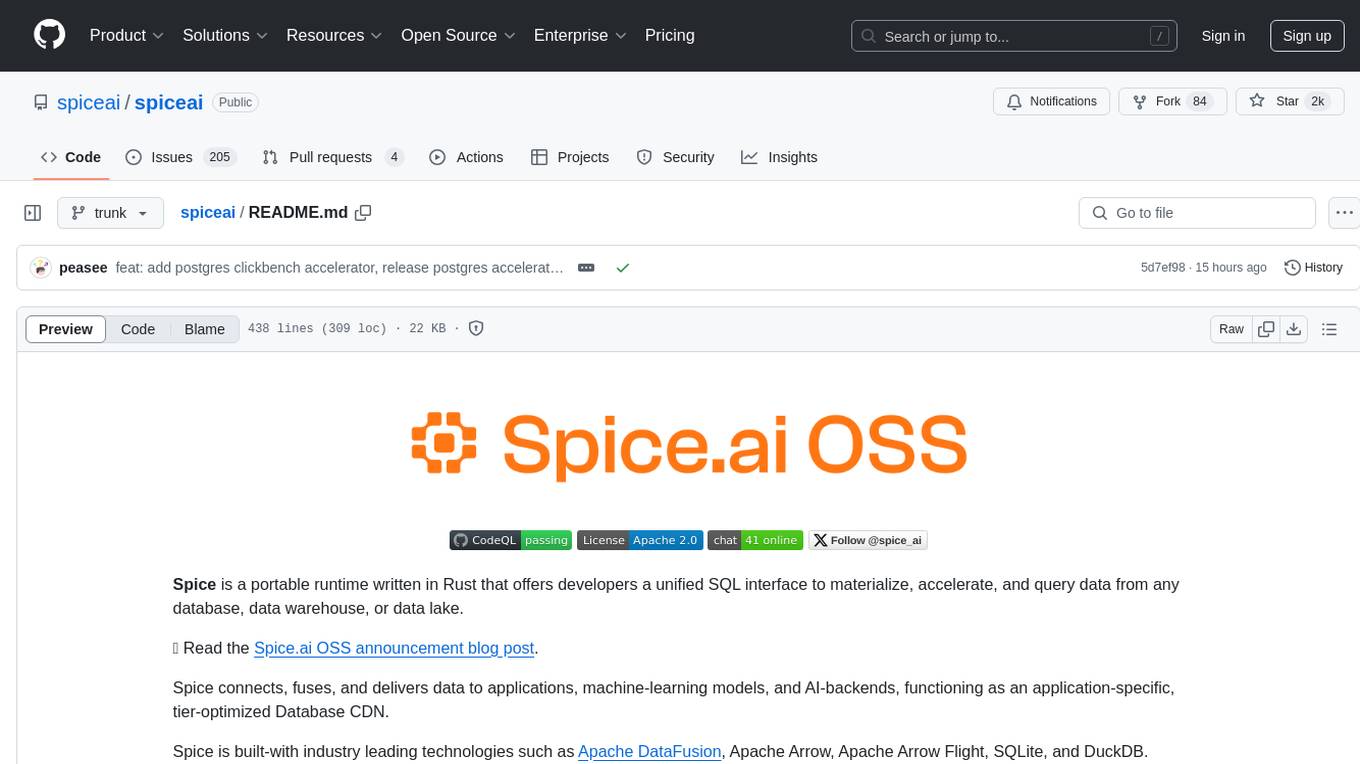

Backlog.md

Backlog.md is a Markdown-native Task Manager & Kanban visualizer for any Git repository. It turns any folder with a Git repo into a self-contained project board powered by plain Markdown files and a zero-config CLI. Features include managing tasks as plain .md files, private & offline usage, instant terminal Kanban visualization, board export, modern web interface, AI-ready CLI, rich query commands, cross-platform support, and MIT-licensed open-source. Users can create tasks, view board, assign tasks to AI, manage documentation, make decisions, and configure settings easily.

tunacode

TunaCode CLI is an AI-powered coding assistant that provides a command-line interface for developers to enhance their coding experience. It offers features like model selection, parallel execution for faster file operations, and various commands for code management. The tool aims to improve coding efficiency and provide a seamless coding environment for developers.

commands

Production-ready slash commands for Claude Code that accelerate development through intelligent automation and multi-agent orchestration. Contains 52 commands organized into workflows and tools categories. Workflows orchestrate complex tasks with multiple agents, while tools provide focused functionality for specific development tasks. Commands can be used with prefixes for organization or flattened for convenience. Best practices include using workflows for complex tasks and tools for specific scopes, chaining commands strategically, and providing detailed context for effective usage.

agentic

Agentic is a standard AI functions/tools library optimized for TypeScript and LLM-based apps, compatible with major AI SDKs. It offers a set of thoroughly tested AI functions that can be used with your favorite AI SDKs without writing glue code. The library includes various clients for services like Bing web search, calculator, Clearbit data resolution, Dexa podcast questions, and more. It also provides compound tools like SearchAndCrawl and supports multiple AI SDKs such as OpenAI, Vercel AI SDK, LangChain, LlamaIndex, Firebase Genkit, and Dexa Dexter. The goal is to create minimal clients with strongly-typed TypeScript DX, composable AIFunctions via AIFunctionSet, and compatibility with major TS AI SDKs.

squirrelscan

Squirrelscan is a website audit tool designed for SEO, performance, and security audits. It offers 230+ rules across 21 categories, AI-native design for Claude Code and AI workflows, smart incremental crawling, and multiple output formats. It provides E-E-A-T auditing, crawl history tracking, and is developer-friendly with a CLI. Users can run audits in the terminal, integrate with AI coding agents, or pipe output to AI assistants. The tool is available for macOS, Linux, Windows, npm, and npx installations, and is suitable for autonomous AI workflows.

optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

terminator

Terminator is an AI-powered desktop automation tool that is open source, MIT-licensed, and cross-platform. It works across all apps and browsers, inspired by GitHub Actions & Playwright. It is 100x faster than generic AI agents, with a success rate above 95% and no vendor lock-in. Users can create automations that work across any desktop app or browser, achieve high success rates without costly consultant armies, and pre-train workflows as deterministic code.

agents

Cloudflare Agents is a framework for building intelligent, stateful agents that persist, think, and evolve at the edge of the network. It allows for maintaining persistent state and memory, real-time communication, processing and learning from interactions, autonomous operation at global scale, and hibernating when idle. The project is actively evolving with focus on core agent framework, WebSocket communication, HTTP endpoints, React integration, and basic AI chat capabilities. Future developments include advanced memory systems, WebRTC for audio/video, email integration, an evaluation framework, enhanced observability, and a self-hosting guide.

vscode-unify-chat-provider

The 'vscode-unify-chat-provider' repository is a tool that integrates multiple LLM API providers into VS Code's GitHub Copilot Chat using the Language Model API. It offers free tier access to mainstream models, perfect compatibility with major LLM API formats, deep adaptation to API features, best performance with built-in parameters, out-of-the-box configuration, import/export support, great UX, and one-click use of various models. The tool simplifies model setup, migration, and configuration for users, providing a seamless experience within VS Code for utilizing different language models.

aikit

AIKit is a comprehensive platform for hosting, deploying, building, and fine-tuning large language models (LLMs). It offers inference using LocalAI, extensible fine-tuning interface, and OCI packaging for distributing models. AIKit supports various models, multi-modal model and image generation, Kubernetes deployment, and supply chain security. It can run on AMD64 and ARM64 CPUs, NVIDIA GPUs, and Apple Silicon (experimental). Users can quickly get started with AIKit without a GPU and access pre-made models. The platform is OpenAI API compatible and provides easy-to-use configuration for inference and fine-tuning.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

aikit

AIKit is a one-stop shop for quickly getting started hosting, deploying, building, and fine-tuning large language models (LLMs). AIKit offers two main capabilities. Inference: AIKit uses LocalAI, which supports a wide range of inference capabilities and formats. LocalAI provides a drop-in replacement REST API that is OpenAI API compatible, so you can use any OpenAI API compatible client, such as Kubectl AI, Chatbot-UI and many more, to send requests to open-source LLMs! Fine Tuning: AIKit offers an extensible fine-tuning interface. It supports Unsloth for a fast, memory-efficient, and easy fine-tuning experience.

rag-web-ui

RAG Web UI is an intelligent dialogue system based on RAG (Retrieval-Augmented Generation) technology. It helps enterprises and individuals build intelligent Q&A systems based on their own knowledge bases. By combining document retrieval and large language models, it delivers accurate and reliable knowledge-based question-answering services. The system is designed with features like intelligent document management, advanced dialogue engine, and a robust architecture. It supports multiple document formats, async document processing, multi-turn contextual dialogue, and reference citations in conversations. The architecture includes a backend stack with Python FastAPI, MySQL + ChromaDB, MinIO, Langchain, JWT + OAuth2 for authentication, and a frontend stack with Next.js, TypeScript, Tailwind CSS, Shadcn/UI, and Vercel AI SDK for AI integration. Performance optimization includes incremental document processing, streaming responses, vector database performance tuning, and distributed task processing. The project is licensed under the Apache-2.0 License and is intended for learning and sharing RAG knowledge only, not for commercial purposes.

portkey-python-sdk

The Portkey Python SDK is a control panel for AI apps that allows seamless integration of Portkey's advanced features with OpenAI methods. It provides features such as AI gateway for unified API signature, interoperability, automated fallbacks & retries, load balancing, semantic caching, virtual keys, request timeouts, observability with logging, requests tracing, custom metadata, feedback collection, and analytics. Users can make requests to OpenAI using Portkey SDK and also use async functionality. The SDK is compatible with OpenAI SDK methods and offers Portkey-specific methods like feedback and prompts. It supports various providers and encourages contributions through Github issues or direct contact via email or Discord.

For similar tasks

free-for-life

A massive list of products and services that are completely free. Categories include APIs, Data & ML; Artificial Intelligence; BaaS; Code Editors; Code Generation; DNS; Databases; Design & UI; Domains; Email; Font; For Students; Forms; Linux Distributions; Messaging & Streaming; PaaS; Payments & Billing; and SSL.

radicalbit-ai-monitoring

The Radicalbit AI Monitoring Platform provides a comprehensive solution for monitoring Machine Learning and Large Language models in production. It helps proactively identify and address potential performance issues by analyzing data quality, model quality, and model drift. The repository contains files and projects for running the platform, including UI, API, SDK, and Spark components. Installation using Docker compose is provided, allowing deployment with a K3s cluster and interaction with a k9s container. The platform documentation includes a step-by-step guide for installation and creating dashboards. Community engagement is encouraged through a Discord server. The roadmap includes adding functionalities for batch and real-time workloads, covering various model types and tasks.

niledatabase

Nile is a serverless Postgres database designed for modern SaaS applications. It virtualizes tenants/customers/organizations into Postgres to enable native tenant data isolation, performance isolation, per-tenant backups, and tenant placement on shared or dedicated compute globally. With Nile, you can manage multiple tenants effortlessly, without complex permissions or buggy scripts. Additionally, it offers opt-in user management capabilities, customer-specific vector embeddings, and instant tenant admin dashboards. Built for the cloud, Nile provides a true serverless experience with effortless scaling.

cube

Cube is a semantic layer for building data applications, helping data engineers and application developers access data from modern data stores, organize it into consistent definitions, and deliver it to every application. It works with SQL-enabled data sources, providing sub-second latency and high concurrency for API requests. Cube addresses SQL code organization, performance, and access control issues in data applications, enabling efficient data modeling, access control, and performance optimizations for various tools like embedded analytics, dashboarding, reporting, and data notebooks.

bagofwords

Bag of words is an open-source AI platform that helps data teams deploy and manage chat-with-your-data agents in a controlled, reliable, and self-learning environment. It enables users to create charts, tables, and dashboards by chatting with their data, capture AI decisions and user feedback, automatically improve AI quality, integrate with various data sources and APIs, and ensure governance and integrations. The platform supports self-hosting in VPC via VMs, Docker/Compose, or Kubernetes, and offers additional integrations for AI Analyst in Slack, Excel, Google Sheets, and more. Users can start in minutes and scale to org-wide analytics.

rill

Rill delivers rapid, self-service dashboards for data engineers and analysts directly on raw data lakes, ensuring reliable, fast-loading dashboards with accurate, real-time metrics. It comes with an embedded in-memory database for lightning-fast performance and supports bringing your own OLAP engine. Rill implements BI-as-code through SQL-based definitions, YAML configuration, Git integration, and CLI tools. Its metrics layer provides a unified way to define, compute, and serve business metrics, while AI agents can access fresh metrics instantly for precise decision-making and intelligent automation.

ai-dev-kit

The AI Dev Kit is a comprehensive toolkit designed to enhance AI-driven development on Databricks. It provides trusted sources for AI coding assistants like Claude Code and Cursor to build faster and smarter on Databricks. The kit includes features such as Spark Declarative Pipelines, Databricks Jobs, AI/BI Dashboards, Unity Catalog, Genie Spaces, Knowledge Assistants, MLflow Experiments, Model Serving, Databricks Apps, and more. Users can choose from different adventures like installing the kit, using the visual builder app, teaching AI assistants Databricks patterns, executing Databricks actions, or building custom integrations with the core library. The kit also includes components like databricks-tools-core, databricks-mcp-server, databricks-skills, databricks-builder-app, and ai-dev-project.


For similar jobs

flux-aio

Flux All-In-One is a lightweight distribution optimized for running the GitOps Toolkit controllers as a single deployable unit on Kubernetes clusters. It is designed for bare clusters, edge clusters, clusters with restricted communication, clusters with egress via proxies, and serverless clusters. The distribution follows semver versioning and provides documentation for specifications, installation, upgrade, OCI sync configuration, Git sync configuration, and multi-tenancy configuration. Users can deploy Flux using Timoni CLI and a Timoni Bundle file, fine-tune installation options, sync from public Git repositories, bootstrap repositories, and uninstall Flux without affecting reconciled workloads.

paddler

Paddler is an open-source load balancer and reverse proxy designed specifically for optimizing servers running llama.cpp. It overcomes typical load balancing challenges by maintaining a stateful load balancer that is aware of each server's available slots, ensuring efficient request distribution. Paddler also supports dynamic addition or removal of servers, enabling integration with autoscaling tools.

DaoCloud-docs

DaoCloud Enterprise 5.0 Documentation provides detailed information on using DaoCloud, a Certified Kubernetes Service Provider. The documentation covers current and legacy versions, workflow control using GitOps, and instructions for opening a PR and previewing changes locally. It also includes naming conventions, writing tips, references, and acknowledgments to contributors. Users can find guidelines on writing, contributing, and translating pages, along with using tools like MkDocs, Docker, and Poetry for managing the documentation.

ztncui-aio

This repository contains a Docker image with ZeroTier One and ztncui to set up a standalone ZeroTier network controller with a web user interface. It provides features like Golang auto-mkworld for generating a planet file, supports local persistent storage configuration, and includes a public file server. Users can build the Docker image, set up the container with specific environment variables, and manage the ZeroTier network controller through the web interface.

devops-gpt

DevOpsGPT is a revolutionary tool designed to streamline your workflow and empower you to build systems and automate tasks with ease. Tired of spending hours on repetitive DevOps tasks? DevOpsGPT is here to help! Whether you're setting up infrastructure, speeding up deployments, or tackling any other DevOps challenge, our app can make your life easier and more productive. With DevOpsGPT, you can expect faster task completion, simplified workflows, and increased efficiency. Ready to experience the DevOpsGPT difference? Visit our website, sign in or create an account, start exploring the features, and share your feedback to help us improve. DevOpsGPT will become an essential tool in your DevOps toolkit.

ChatOpsLLM

ChatOpsLLM is a project designed to empower chatbots with effortless DevOps capabilities. It provides an intuitive interface and streamlined workflows for managing and scaling language models. The project incorporates robust MLOps practices, including CI/CD pipelines with Jenkins and Ansible, monitoring with Prometheus and Grafana, and centralized logging with the ELK stack. Developers can find detailed documentation and instructions on the project's website.

aiops-modules

AIOps Modules is a collection of reusable Infrastructure as Code (IAC) modules that work with SeedFarmer CLI. The modules are decoupled and can be aggregated using GitOps principles to achieve desired use cases, removing heavy lifting for end users. They must be generic for reuse in Machine Learning and Foundation Model Operations domain, adhering to SeedFarmer Guide structure. The repository includes deployment steps, project manifests, and various modules for SageMaker, Mlflow, FMOps/LLMOps, MWAA, Step Functions, EKS, and example use cases. It also supports Industry Data Framework (IDF) and Autonomous Driving Data Framework (ADDF) Modules.

3FS

The Fire-Flyer File System (3FS) is a high-performance distributed file system designed for AI training and inference workloads. It leverages modern SSDs and RDMA networks to provide a shared storage layer that simplifies development of distributed applications. Key features include performance, disaggregated architecture, strong consistency, file interfaces, data preparation, dataloaders, checkpointing, and KVCache for inference. The system is well-documented with design notes, setup guide, USRBIO API reference, and P specifications. Performance metrics include peak throughput, GraySort benchmark results, and KVCache optimization. The source code is available on GitHub for cloning and installation of dependencies. Users can build 3FS and run test clusters following the provided instructions. Issues can be reported on the GitHub repository.