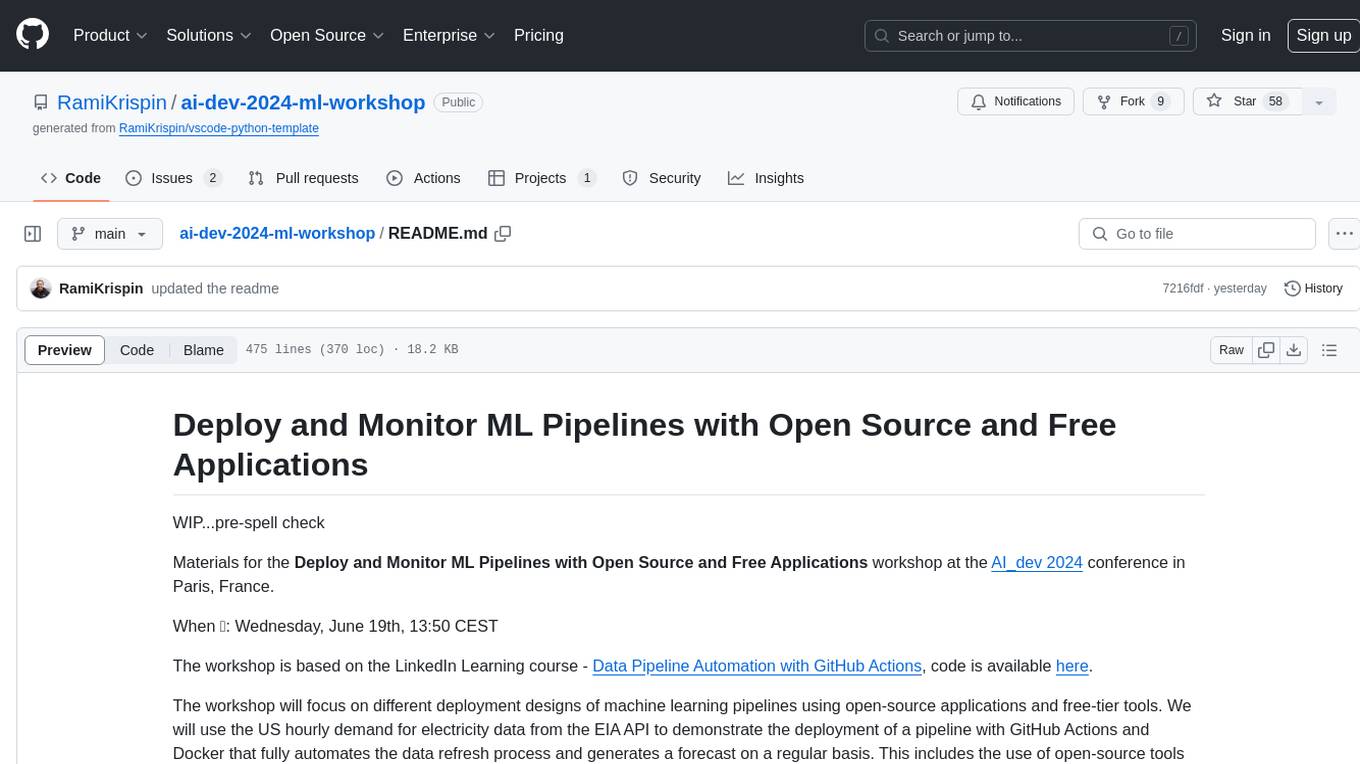

ai-dev-2024-ml-workshop

Materials for the AI Dev 2024 conference workshop "Deploy and Monitor ML Pipelines with Python, Open Source, and Free Applications"


The 'ai-dev-2024-ml-workshop' repository contains materials for the Deploy and Monitor ML Pipelines workshop at the AI_dev 2024 conference in Paris, focusing on deployment designs of machine learning pipelines using open-source applications and free-tier tools. It demonstrates automating data refresh and forecasting using GitHub Actions and Docker, monitoring with MLflow and YData Profiling, and setting up a monitoring dashboard with Quarto doc on GitHub Pages.


Materials for the Deploy and Monitor ML Pipelines with Open Source and Free Applications workshop at the AI_dev 2024 conference in Paris, France.

When 📆: Wednesday, June 19th, 13:50 CEST

The workshop is based on the LinkedIn Learning course Data Pipeline Automation with GitHub Actions; its code is available here.

The workshop will focus on different deployment designs of machine learning pipelines using open-source applications and free-tier tools. We will use the US hourly demand for electricity data from the EIA API to demonstrate the deployment of a pipeline with GitHub Actions and Docker that fully automates the data refresh process and generates a forecast on a regular basis. This includes the use of open-source tools such as MLflow and YData Profiling to monitor the health of the data and the model's performance. Last but not least, we will use Quarto to set up the monitoring dashboard and deploy it on GitHub Pages.

The Seine River, Paris (created with Midjourney)

- Milestones

- Scope

- Set a Development Environment

- Data Pipeline

- Forecasting Models

- Metadata

- Dashboard

- Deployment

- Resources

- License

To organize and track the project requirements, we will set up a GitHub Project, create general milestones, and use issues to define sub-milestones. To set up the data/ML pipeline, we will define the following milestones:

- Define scope and requirements:
  - Pipeline scope
  - Forecasting scope
  - General tools and requirements
- Set a development environment:
  - Set a Docker image
  - Update the Dev Containers settings
- Data pipeline prototype:
  - Create pipeline schema/draft
  - Build a prototype
  - Test deployment on GitHub Actions
- Set forecasting models:
  - Create an MLflow experiment
  - Set backtesting function
  - Define forecasting models
  - Test and evaluate the models' performance
  - Select the best model for deployment
- Set a Quarto dashboard:
  - Create a Quarto dashboard
  - Track the data and forecast
  - Monitor performance
- Productionize the pipeline:
  - Clean the code
  - Define unit tests
- Deploy the pipeline and dashboard to GitHub Actions and GitHub Pages:
  - Create a GitHub Actions workflow
  - Refresh the data and forecast
  - Update the dashboard

The milestones are available in the repository issues section, and you can track them on the project tracker.

The project tracker

Goal: Forecast the hourly demand for electricity in the subregions of the California Independent System Operator (CISO).

This includes the following four providers:

- Pacific Gas and Electric (PGAE)

- Southern California Edison (SCE)

- San Diego Gas and Electric (SDGE)

- Valley Electric Association (VEA)

Forecast horizon: 24 hours

Refresh: every 24 hours
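With a 24-hour horizon, each forecast covers the 24 hourly timestamps that follow the last observed data point. A minimal sketch of that index, assuming hourly UTC timestamps; `forecast_index` is a hypothetical helper, not a function from the workshop code:

```python
from datetime import datetime, timedelta, timezone


def forecast_index(last_obs: datetime, horizon: int = 24) -> list[datetime]:
    """Return the hourly timestamps a forecast of length `horizon` covers.

    Hypothetical helper: the first point is one hour after the last
    observation and the last point is `horizon` hours after it.
    """
    step = timedelta(hours=1)
    return [last_obs + step * (i + 1) for i in range(horizon)]


last = datetime(2024, 6, 18, 23, tzinfo=timezone.utc)
index = forecast_index(last)
print(index[0], index[-1])
```

A forecast generated after the 23:00 observation therefore spans 00:00 to 23:00 of the following day.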

The data is available through the EIA API, and the API dashboard provides the GET request settings to pull the above series.

The GET request details from the EIA API dashboard

The pipeline has the following general requirements:

- The following functions:
  - Data backfill function
  - Data refresh function
  - Forecast function
  - Metadata function
- Docker image
- EIA API key

To make the deployment to GitHub Actions seamless, we will use Docker. In addition, we will set up a development environment using VS Code and the Dev Containers extension.

Docker enables us to ship our code to GitHub Actions using the same environment we used to develop and test it. We will use the Dockerfile below to set up the environment:

```dockerfile
# Stage 1 (builder): create the Python virtual environment
FROM python:3.10-slim AS builder

ARG QUARTO_VER="1.5.45"
ARG VENV_NAME="ai_dev_workshop"

ENV QUARTO_VER=$QUARTO_VER
ENV VENV_NAME=$VENV_NAME

RUN mkdir requirements
COPY install_requirements.sh requirements/
COPY requirements.txt requirements/
RUN bash ./requirements/install_requirements.sh $VENV_NAME

# Stage 2 (final): start from a clean slim image and copy only the virtual environment
FROM python:3.10-slim

ARG QUARTO_VER="1.5.45"
ARG VENV_NAME="ai_dev_workshop"

ENV QUARTO_VER=$QUARTO_VER
ENV VENV_NAME=$VENV_NAME

COPY --from=builder /opt/$VENV_NAME /opt/$VENV_NAME
COPY install_requirements.sh install_quarto.sh install_dependencies.sh requirements/

# Run the dependency and Quarto installation scripts
RUN bash ./requirements/install_dependencies.sh
RUN bash ./requirements/install_quarto.sh $QUARTO_VER

# Activate the virtual environment in interactive shells
RUN echo "source /opt/$VENV_NAME/bin/activate" >> ~/.bashrc
```

We use the Python slim image as our baseline, along with a multi-stage build, to keep the image size as small as possible.


More details about the Multi-Stage build are available in the Docker documentation and this tutorial.

We will use the Bash script below (build_image.sh) to build the image and push it to Docker Hub:

```bash
#!/bin/bash

echo "Build the docker"

# Identify the CPU type (M1 vs Intel)
if [[ $(uname -m) == "aarch64" ]] ; then
    CPU="arm64"
elif [[ $(uname -m) == "arm64" ]] ; then
    CPU="arm64"
else
    CPU="amd64"
fi

label="ai-dev"
tag="$CPU.0.0.1"
image="rkrispin/$label:$tag"

docker build . -f Dockerfile \
    --progress=plain \
    --build-arg QUARTO_VER="1.5.45" \
    --build-arg VENV_NAME="ai_dev_workshop" \
    -t $image

if [[ $? = 0 ]] ; then
    echo "Pushing docker..."
    docker push $image
else
    echo "Docker build failed"
fi
```

The Dockerfile and its supporting files are under the docker folder.

Note: By default, GitHub Actions runners use AMD64 (x86-64, e.g., Intel) processors rather than ARM64. Therefore, if you are using Apple Silicon (M1/M2/M3) or any other ARM64-based machine, you will need to use Docker Buildx (or a similar tool) to build the image for the AMD64 architecture.
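The CPU detection in build_image.sh boils down to mapping the machine name reported by the OS to the architecture label used in the image tag. The same logic can be sketched in Python with `platform.machine()`; `docker_arch` and `image_name` are hypothetical helpers that mirror the script's `rkrispin/ai-dev:<cpu>.0.0.1` naming:

```python
import platform


def docker_arch(machine: str) -> str:
    """Map an OS machine name to the architecture label used in the tag."""
    # Linux reports "aarch64" and macOS reports "arm64" for ARM64 CPUs
    if machine in ("aarch64", "arm64"):
        return "arm64"
    return "amd64"


def image_name(machine: str, version: str = "0.0.1",
               repo: str = "rkrispin/ai-dev") -> str:
    """Build the image name the same way build_image.sh does."""
    return f"{repo}:{docker_arch(machine)}.{version}"


print(image_name(platform.machine()))
```

On an Apple Silicon laptop this yields an `arm64` tag, a reminder that the image pulled by GitHub Actions has to be built separately for AMD64, for example with `docker buildx build --platform linux/amd64`.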

We will use the following devcontainer.json file to set the development environment:

```jsonc
{
    "name": "AI-Dev Workshop",
    "image": "docker.io/rkrispin/ai-dev:amd64.0.0.1",
    "customizations": {
        "vscode": {
            // VS Code reads settings from customizations.vscode.settings
            "settings": {
                "python.defaultInterpreterPath": "/opt/ai_dev_workshop/bin/python3",
                "python.selectInterpreter": "/opt/ai_dev_workshop/bin/python3"
            },
            "extensions": [
                // Documentation Extensions
                "quarto.quarto",
                "purocean.drawio-preview",
                "redhat.vscode-yaml",
                "yzhang.markdown-all-in-one",
                // Docker Supporting Extensions
                "ms-azuretools.vscode-docker",
                "ms-vscode-remote.remote-containers",
                // Python Extensions
                "ms-python.python",
                "ms-toolsai.jupyter",
                // GitHub Actions
                "github.vscode-github-actions"
            ]
        }
    },
    // Forward the EIA API key from the local machine into the container
    "remoteEnv": {
        "EIA_API_KEY": "${localEnv:EIA_API_KEY}"
    }
}
```

If you want to learn more about setting up a dockerized development environment with the Dev Containers extension, please check the Python and R tutorials.

Once we have a clear scope, we can start designing the pipeline. The pipeline has the following two main components:

- Data refresh

- Forecasting model

I typically start by drafting the process with paper and pencil (or the electronic version, using an iPad and Apple Pencil 😎). This helps me better understand which functions I need to build:

The data pipeline draft

Drawing the pipeline components and sub-components helps us plan the required functions that we need to build. Once the pipeline is ready, I usually create a design blueprint to document the process:

The pipeline final design

The pipeline will have the following two components:

- Data refresh function to keep the data up-to-date

- Forecast refresh to keep the forecast up-to-date

In addition, we will use the following two functions locally to prepare the data and models:

- Backfill function to initiate (or reset) the data pipeline

- Backtesting function to train, test, and evaluate time series models
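The data refresh function listed above only needs to request the hours that are not yet stored locally. A minimal sketch of that incremental window, assuming hourly UTC data and that the newest stored timestamp is known; `refresh_window` is a hypothetical name, not the workshop's function:

```python
from datetime import datetime, timedelta, timezone
from typing import Optional, Tuple


def refresh_window(last_stored: datetime,
                   now: datetime) -> Optional[Tuple[datetime, datetime]]:
    """Return (start, end) of the hours to request, or None if up to date.

    start: the hour right after the newest stored observation
    end:   the current time truncated to the full hour
    """
    start = last_stored + timedelta(hours=1)
    end = now.replace(minute=0, second=0, microsecond=0)
    if start > end:
        return None  # nothing new to pull yet
    return start, end


last = datetime(2024, 6, 18, 20, tzinfo=timezone.utc)
print(refresh_window(last, datetime(2024, 6, 19, 1, 35, tzinfo=timezone.utc)))
print(refresh_window(last, datetime(2024, 6, 18, 20, 50, tzinfo=timezone.utc)))
```

Because the API may return fewer hours than the window covers, the refresh log should record the range that was actually received rather than the range that was requested.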

We will set the pipeline to render and deploy a dashboard on GitHub Pages whenever we refresh the data or the forecast.

We will use a JSON file to define the pipeline settings. This will enable us to seamlessly modify or update the pipeline without changing the functions. For example, we will define the required series from the EIA API under the series section:

```json
"series": [
    {
        "parent_id": "CISO",
        "parent_name": "California Independent System Operator",
        "subba_id": "PGAE",
        "subba_name": "Pacific Gas and Electric"
    },
    {
        "parent_id": "CISO",
        "parent_name": "California Independent System Operator",
        "subba_id": "SCE",
        "subba_name": "Southern California Edison"
    },
    {
        "parent_id": "CISO",
        "parent_name": "California Independent System Operator",
        "subba_id": "SDGE",
        "subba_name": "San Diego Gas and Electric"
    },
    {
        "parent_id": "CISO",
        "parent_name": "California Independent System Operator",
        "subba_id": "VEA",
        "subba_name": "Valley Electric Association"
    }
]
```

Last but not least, we will create two CSV files to store the data and the metadata.
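Because the series are defined in the settings rather than in code, the pipeline can loop over them without hard-coding any provider. A minimal sketch, assuming the `series` section shown above is read with Python's `json` module (the inline string is a trimmed stand-in for the settings file, and `series_facets` is a hypothetical helper):

```python
import json

# Trimmed copy of the "series" section; the real settings file has more keys
SETTINGS = """
{
  "series": [
    {"parent_id": "CISO", "subba_id": "PGAE"},
    {"parent_id": "CISO", "subba_id": "SCE"},
    {"parent_id": "CISO", "subba_id": "SDGE"},
    {"parent_id": "CISO", "subba_id": "VEA"}
  ]
}
"""


def series_facets(settings: dict) -> list[tuple[str, str]]:
    """Return (parent_id, subba_id) pairs for every configured series."""
    return [(s["parent_id"], s["subba_id"]) for s in settings["series"]]


facets = series_facets(json.loads(SETTINGS))
print(facets)
```

Adding another subregion then only requires a new entry in the settings file.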

To pull the data from the EIA API, we will use Python libraries such as requests, datetime, and pandas to send GET requests to the API and process the data.

All the supporting functions to call the API and process the data are under the eia_api.py and eia_data.py files.
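For reference, a GET request for one series can be assembled from the API route and its query parameters. The sketch below only builds the URL with the standard library; the route `electricity/rto/region-sub-ba-data` and the `parent`/`subba` facet names are assumptions based on the EIA API v2, so check them against the GET settings copied from the API dashboard. `eia_url` is a hypothetical helper, and in the pipeline the resulting URL would be sent with `requests.get`:

```python
from urllib.parse import urlencode

# Assumed EIA API v2 route for hourly demand by balancing-authority subregion
BASE_URL = "https://api.eia.gov/v2/electricity/rto/region-sub-ba-data/data/"


def eia_url(api_key: str, parent: str, subba: str, start: str, end: str) -> str:
    """Build a GET URL for the hourly values of a single subregion series."""
    params = [
        ("frequency", "hourly"),
        ("data[0]", "value"),
        ("facets[parent][]", parent),
        ("facets[subba][]", subba),
        ("start", start),  # e.g. "2024-06-18T21"
        ("end", end),
        ("api_key", api_key),
    ]
    # urlencode percent-encodes the square brackets as %5B and %5D
    return BASE_URL + "?" + urlencode(params)


url = eia_url("YOUR_KEY", "CISO", "PGAE", "2024-06-18T21", "2024-06-19T01")
```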

The second component of the pipeline is setting up the forecasting models. This includes:

- Create a backtesting framework to test and evaluate the models' performance
- Set an experiment with MLflow. This includes the following steps:
  - Define models
  - Define backtesting settings
  - Run the models and log their performance
  - Log the best model for each series
- For the demonstration, we will use the following two models from the Darts library:
  - Linear regression (LinearRegressionModel)
  - XGBoost (XGBModel)

We will use different flavors of those models, create a "horse race" between them, and select the one that performs best.

Note: We will run the backtesting process locally to avoid unnecessary compute time.
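The backtesting framework evaluates each model on several consecutive test partitions, training on a fixed-length window before each one. A minimal sketch of how such rolling partitions can be laid out over observation indices; `backtest_windows` and its parameters are hypothetical and are not the workshop's backtesting settings:

```python
def backtest_windows(n_obs: int, train: int, horizon: int = 24,
                     partitions: int = 3) -> list[dict[str, range]]:
    """Split indices 0..n_obs-1 into rolling train/test partitions.

    Each partition trains on `train` consecutive observations and tests on
    the next `horizon` observations; the last test window ends at the most
    recent observation.
    """
    windows = []
    for k in range(partitions, 0, -1):
        test_start = n_obs - k * horizon
        train_start = test_start - train
        if train_start < 0:
            raise ValueError("not enough history for this training length")
        windows.append({
            "train": range(train_start, test_start),
            "test": range(test_start, test_start + horizon),
        })
    return windows


for w in backtest_windows(n_obs=100, train=48, horizon=24, partitions=2):
    print(w["train"], w["test"])
```

With `"train": 17520` (two years of hourly data) each training window spans 17,520 observations; the sketch ignores the extra history that lag features such as -8760 consume.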

We will use the settings.json file to store the models' settings and backtesting parameters:

```json
"models": {
    "model1": {
        "model": "LinearRegressionModel",
        "model_label": "model1",
        "comments": "LM model with lags, training with 2 years of history",
        "num_samples": 100,
        "lags": [-24, -168, -8760],
        "likelihood": "quantile",
        "train": 17520
    },
    "model2": {
        "model": "LinearRegressionModel",
        "model_label": "model2",
        "comments": "LM model with lags, training with 3 years of history",
        "num_samples": 100,
        "lags": [-24, -168, -8760],
        "likelihood": "quantile",
        "train": 26280
    },
    "model3": {
        "model": "LinearRegressionModel",
        "model_label": "model3",
        "comments": "Model 2 with lag 1",
        "num_samples": 100,
        "lags": [-1, -24, -168, -8760],
        "likelihood": "quantile",
        "train": 26280
    },
    ...
    "model6": {
        "model": "XGBModel",
        "model_label": "model6",
        "comments": "XGBoost with lags",
        "num_samples": 100,
        "lags": [-1, -2, -3, -24, -48, -168, -336, -8760],
        "likelihood": "quantile",
        "train": 17520
    },
    "model7": {
        "model": "XGBModel",
        "model_label": "model7",
        "comments": "XGBoost with lags",
        "num_samples": 100,
        "lags": [-1, -2, -3, -24, -48, -168],
        "likelihood": "quantile",
        "train": 17520
    }
```

We will use MLflow to track the backtesting results and compare the models:

The pipeline final design

By default, the backtesting process logs the best model for each series based on the MAPE error metric. We will use this log for model selection during deployment.
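MAPE (mean absolute percentage error) averages |actual - forecast| / |actual| over the test points and reports it as a percentage, so the model with the lowest value wins. A minimal sketch of the metric and of picking the best model per series, assuming the backtesting scores are available as plain Python numbers (the workshop logs them to MLflow instead; the dictionary layout and the scores below are made up for illustration):

```python
def mape(actual: list[float], forecast: list[float]) -> float:
    """Mean absolute percentage error, in percent (undefined if an actual is 0)."""
    if len(actual) != len(forecast):
        raise ValueError("actual and forecast must have the same length")
    errors = [abs((a - f) / a) for a, f in zip(actual, forecast)]
    return 100 * sum(errors) / len(errors)


def best_models(scores: dict[str, dict[str, float]]) -> dict[str, str]:
    """Return the model label with the lowest MAPE for each series."""
    return {series: min(by_model, key=by_model.get)
            for series, by_model in scores.items()}


print(mape([100.0, 200.0], [110.0, 180.0]))

# Made-up scores, for illustration only
scores = {"PGAE": {"model1": 4.2, "model6": 3.1},
          "SCE": {"model1": 2.9, "model6": 3.5}}
print(best_models(scores))
```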

Setting logs and metadata collection enables us to monitor the health of the pipeline and identify problems when they occur. Here are some of the metrics we will collect:

- Data refresh log: Track the data refresh process and log critical metrics such as the time of the refresh, the time range of the data points, unit test results, etc.

- Forecasting models: Define the selected model per series based on the backtesting evaluation results

- Forecast refresh log: Track the forecasting models refresh. This includes the time of refresh, forecast label, and performance metrics
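These logs can be stored as rows appended to the metadata CSV file. A minimal sketch with the standard `csv` module; the column names are hypothetical examples of the metrics listed above, not the workshop's actual schema, and `append_log` is a hypothetical helper:

```python
import csv
import io
from datetime import datetime, timezone

# Hypothetical columns for the data refresh log
FIELDS = ["refresh_time", "series", "start", "end", "n_obs", "success"]


def append_log(handle, record: dict, write_header: bool = False) -> None:
    """Append one refresh record to an open CSV file handle."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    if write_header:
        writer.writeheader()
    writer.writerow(record)


# StringIO stands in for a file opened with open(path, "a", newline="")
buffer = io.StringIO()
append_log(buffer, {
    "refresh_time": datetime(2024, 6, 19, 2, tzinfo=timezone.utc).isoformat(),
    "series": "PGAE",
    "start": "2024-06-18T21",
    "end": "2024-06-19T01",
    "n_obs": 5,
    "success": True,
}, write_header=True)
print(buffer.getvalue())
```

Appending one row per refresh keeps the history of every run, which is what the dashboard needs to show the pipeline's health over time.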

After we set the pipeline's data and forecast refresh functions, the last step is to set up a dashboard that presents the outputs (e.g., data, forecast, metadata, etc.). For this task, we will use Quarto to build a simple dashboard that presents the most recent forecast and the pipeline metadata:

The pipeline Quarto dashboard

The dashboard is static, so we can deploy it on GitHub Pages as a static website. We will set the pipeline to re-render the dashboard and deploy it on GitHub Pages every time new data is available. The dashboard code and website are available here and here, respectively.

The last step of this pipeline setting process is to deploy it to GitHub Actions. We will use the following workflow to deploy the pipeline:

data_refresh.yml

```yaml
name: Data Refresh

on:
  schedule:
    - cron: "0 */1 * * *"

jobs:
  refresh-the-dashboard:
    runs-on: ubuntu-22.04
    container:
      image: docker.io/rkrispin/ai-dev:amd64.0.0.2
    steps:
      - name: checkout_repo
        uses: actions/checkout@v3
        with:
          ref: "main"
      - name: Data Refresh
        run: bash ./functions/data_refresh_py.sh
        env:
          EIA_API_KEY: ${{ secrets.EIA_API_KEY }}
          USER_EMAIL: ${{ secrets.USER_EMAIL }}
          USER_NAME: ${{ secrets.USER_NAME }}
```

This simple workflow runs every hour. It uses the project image, docker.io/rkrispin/ai-dev:amd64.0.0.2, as its environment. The built-in actions/checkout@v3 action checks out the repository so the job can access it, commit changes, and push them back to the repository.

Last but not least, we will execute the following bash script:

data_refresh_py.sh

```bash
#!/usr/bin/env bash

source /opt/$VENV_NAME/bin/activate

# Render the report that runs the data and forecast refresh functions
rm -rf ./functions/data_refresh_py_files
rm -f ./functions/data_refresh_py.html
quarto render ./functions/data_refresh_py.qmd --to html

# Copy the rendered report to the docs folder
rm -rf docs/data_refresh/
mkdir -p docs/data_refresh
cp ./functions/data_refresh_py.html ./docs/data_refresh/
cp -R ./functions/data_refresh_py_files ./docs/data_refresh/

echo "Finish"

p=$(pwd)
git config --global --add safe.directory "$p"

# If the refresh changed any files, render the Quarto dashboard and push the changes
if [[ "$(git status --porcelain)" != "" ]]; then
    quarto render functions/index.qmd
    cp functions/index.html docs/index.html
    rm -rf docs/index_files
    cp -R functions/index_files/ docs/
    rm functions/index.html
    rm -rf functions/index_files
    # Quote the variables so names with spaces are set correctly
    git config --global user.name "$USER_NAME"
    git config --global user.email "$USER_EMAIL"
    git add data/*
    git add docs/*
    git commit -m "Auto update of the data"
    git push origin main
else
    echo "Nothing to commit..."
fi
```

This bash script renders the Quarto document that runs the data and forecast refresh functions. It then checks whether the working tree has changed (i.e., new data points are available), and if so, renders the dashboard and commits the changes (e.g., the new rows appended to the CSV files).
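The commit step hinges on `git status --porcelain`, which prints one line per modified or untracked file and nothing when the working tree is clean. A minimal Python sketch of the same check; `changed_paths` and `repo_has_changes` are hypothetical helpers, and the parser handles only simple `XY path` lines (quoted paths and rename entries are not special-cased):

```python
import subprocess


def changed_paths(porcelain: str) -> list[str]:
    """Extract file paths from `git status --porcelain` (v1) output.

    Each line is two status characters, a space, then the path.
    """
    return [line[3:] for line in porcelain.splitlines() if line.strip()]


def repo_has_changes(repo: str = ".") -> bool:
    """Return True when the working tree has changes to commit."""
    out = subprocess.run(["git", "status", "--porcelain"], cwd=repo,
                         capture_output=True, text=True, check=True).stdout
    return bool(changed_paths(out))


print(changed_paths(" M data/data.csv\n?? docs/index.html\n"))
```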

Note that you will need to set the following three secrets:

- EIA_API_KEY: the EIA API key
- USER_EMAIL: the email address associated with the GitHub account
- USER_NAME: the GitHub account user name
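Inside the workflow, these secrets reach the script as environment variables through the `env` block of the Data Refresh step. A minimal sketch of failing early when one of them is missing; `missing_vars` and `check_env` are hypothetical helpers, not part of the workshop code:

```python
import os

REQUIRED = ("EIA_API_KEY", "USER_EMAIL", "USER_NAME")


def missing_vars(env=os.environ, names=REQUIRED) -> list[str]:
    """Return the required variable names that are unset or empty."""
    return [name for name in names if not env.get(name)]


def check_env(env=os.environ) -> None:
    """Raise a clear error before any API call or git push is attempted."""
    missing = missing_vars(env)
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
```

Running such a check first turns a missing secret into a single readable error instead of a failed API request or a rejected push later in the job.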

- Docker documentation: https://docs.docker.com/

- Dev Containers Extension: https://marketplace.visualstudio.com/items?itemName=ms-vscode-remote.remote-containers

- GitHub Actions documentation: https://docs.github.com/en/actions

This tutorial is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.


Alternative AI tools for ai-dev-2024-ml-workshop

Similar Open Source Tools


empower-functions

Empower Functions is a family of large language models (LLMs) that provide GPT-4 level capabilities for real-world 'tool using' use cases. These models offer compatibility support to be used as drop-in replacements, enabling interactions with external APIs by recognizing when a function needs to be called and generating JSON containing necessary arguments based on user inputs. This capability is crucial for building conversational agents and applications that convert natural language into API calls, facilitating tasks such as weather inquiries, data extraction, and interactions with knowledge bases. The models can handle multi-turn conversations, choose between tools or standard dialogue, ask for clarification on missing parameters, integrate responses with tool outputs in a streaming fashion, and efficiently execute multiple functions either in parallel or sequentially with dependencies.

otto-m8

otto-m8 is a flowchart based automation platform designed to run deep learning workloads with minimal to no code. It provides a user-friendly interface to spin up a wide range of AI models, including traditional deep learning models and large language models. The tool deploys Docker containers of workflows as APIs for integration with existing workflows, building AI chatbots, or standalone applications. Otto-m8 operates on an Input, Process, Output paradigm, simplifying the process of running AI models into a flowchart-like UI.

CoPilot

TigerGraph CoPilot is an AI assistant that combines graph databases and generative AI to enhance productivity across various business functions. It includes three core component services: InquiryAI for natural language assistance, SupportAI for knowledge Q&A, and QueryAI for GSQL code generation. Users can interact with CoPilot through a chat interface on TigerGraph Cloud and APIs. CoPilot requires LLM services for beta but will support TigerGraph's LLM in future releases. It aims to improve contextual relevance and accuracy of answers to natural-language questions by building knowledge graphs and using RAG. CoPilot is extensible and can be configured with different LLM providers, graph schemas, and LangChain tools.

promptwright

Promptwright is a Python library designed for generating large synthetic datasets using local LLM and various LLM service providers. It offers flexible interfaces for generating prompt-led synthetic datasets. The library supports multiple providers, configurable instructions and prompts, YAML configuration, command line interface, push to Hugging Face Hub, and system message control. Users can define generation tasks using YAML configuration files or programmatically using Python code. Promptwright integrates with LiteLLM for LLM providers and supports automatic dataset upload to Hugging Face Hub. The library is not responsible for the content generated by models and advises users to review the data before using it in production environments.


npcsh

`npcsh` is a python-based command-line tool designed to integrate Large Language Models (LLMs) and Agents into one's daily workflow by making them available and easily configurable through the command line shell. It leverages the power of LLMs to understand natural language commands and questions, execute tasks, answer queries, and provide relevant information from local files and the web. Users can also build their own tools and call them like macros from the shell. `npcsh` allows users to take advantage of agents (i.e. NPCs) through a managed system, tailoring NPCs to specific tasks and workflows. The tool is extensible with Python, providing useful functions for interacting with LLMs, including explicit coverage for popular providers like ollama, anthropic, openai, gemini, deepseek, and openai-like providers. Users can set up a flask server to expose their NPC team for use as a backend service, run SQL models defined in their project, execute assembly lines, and verify the integrity of their NPC team's interrelations. Users can execute bash commands directly, use favorite command-line tools like VIM, Emacs, ipython, sqlite3, git, pipe the output of these commands to LLMs, or pass LLM results to bash commands.

palimpzest

Palimpzest (PZ) is a tool for managing and optimizing workloads, particularly for data processing tasks. It provides a CLI tool and Python demos for users to register datasets, run workloads, and access results. Users can easily initialize their system, register datasets, and manage configurations using the CLI commands provided. Palimpzest also supports caching intermediate results and configuring for parallel execution with remote services like OpenAI and together.ai. The tool aims to streamline the workflow of working with datasets and optimizing performance for data extraction tasks.

VMind

VMind is an open-source solution for intelligent visualization, providing an intelligent chart component based on LLM by VisActor. It allows users to create chart narrative works with natural language interaction, edit charts through dialogue, and export narratives as videos or GIFs. The tool is easy to use, scalable, supports various chart types, and offers one-click export functionality. Users can customize chart styles, specify themes, and aggregate data using LLM models. VMind aims to enhance efficiency in creating data visualization works through dialogue-based editing and natural language interaction.

AutoNode

AutoNode is a self-operating computer system designed to automate web interactions and data extraction processes. It leverages advanced technologies like OCR (Optical Character Recognition), YOLO (You Only Look Once) models for object detection, and a custom site-graph to navigate and interact with web pages programmatically. Users can define objectives, create site-graphs, and utilize AutoNode via API to automate tasks on websites. The tool also supports training custom YOLO models for object detection and OCR for text recognition on web pages. AutoNode can be used for tasks such as extracting product details, automating web interactions, and more.

airflow-ai-sdk

This repository contains an SDK for working with LLMs from Apache Airflow, based on Pydantic AI. It allows users to call LLMs and orchestrate agent calls directly within their Airflow pipelines using decorator-based tasks. The SDK leverages the familiar Airflow `@task` syntax with extensions like `@task.llm`, `@task.llm_branch`, and `@task.agent`. Users can define tasks that call language models, orchestrate multi-step AI reasoning, change the control flow of a DAG based on LLM output, and support various models in the Pydantic AI library. The SDK is designed to integrate LLM workflows into Airflow pipelines, from simple LLM calls to complex agentic workflows.

llm-strategy

The 'llm-strategy' repository implements the Strategy Pattern using Large Language Models (LLMs) like OpenAI’s GPT-3. It provides a decorator 'llm_strategy' that connects to an LLM to implement abstract methods in interface classes. The package uses doc strings, type annotations, and method/function names as prompts for the LLM and can convert the responses back to Python data. It aims to automate the parsing of structured data by using LLMs, potentially reducing the need for manual Python code in the future.

chatmemory

ChatMemory is a simple yet powerful long-term memory manager that facilitates communication between AI and users. It organizes conversation data into history, summary, and knowledge entities, enabling quick retrieval of context and generation of clear, concise answers. The tool leverages vector search on summaries/knowledge and detailed history to provide accurate responses. It balances speed and accuracy by using lightweight retrieval and fallback detailed search mechanisms, ensuring efficient memory management and response generation beyond mere data retrieval.

ai2-scholarqa-lib

Ai2 Scholar QA is a system for answering scientific queries and literature review by gathering evidence from multiple documents across a corpus and synthesizing an organized report with evidence for each claim. It consists of a retrieval component and a three-step generator pipeline. The retrieval component fetches relevant evidence passages using the Semantic Scholar public API and reranks them. The generator pipeline includes quote extraction, planning and clustering, and summary generation. The system is powered by the ScholarQA class, which includes components like PaperFinder and MultiStepQAPipeline. It requires environment variables for Semantic Scholar API and LLMs, and can be run as local docker containers or embedded into another application as a Python package.

marqo

Marqo is more than a vector database, it's an end-to-end vector search engine for both text and images. Vector generation, storage and retrieval are handled out of the box through a single API. No need to bring your own embeddings.

xFinder

xFinder is a model specifically designed for key answer extraction from large language models (LLMs). It addresses the challenges of unreliable evaluation methods by optimizing the key answer extraction module. The model achieves high accuracy and robustness compared to existing frameworks, enhancing the reliability of LLM evaluation. It includes a specialized dataset, the Key Answer Finder (KAF) dataset, for effective training and evaluation. xFinder is suitable for researchers and developers working with LLMs to improve answer extraction accuracy.


For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.