MCP-Bridge

A middleware to provide an OpenAI-compatible endpoint that can call MCP tools

Stars: 308

MCP-Bridge is a middleware tool that provides an OpenAI-compatible endpoint for calling MCP tools. It acts as a bridge between the OpenAI API and MCP tools, allowing developers to use MCP tools through the OpenAI API interface. It supports non-streaming and streaming chat completions with MCP, as well as non-streaming completions without MCP. It is designed to work with inference engines that support tool calling, such as vLLM and Ollama. It can be installed with Docker or manually, and once running it is used through the OpenAI API. New MCP servers are added by editing the config.json file. Contributions are welcome, and the project is released under the MIT License.

README:

MCP-Bridge acts as a bridge between the OpenAI API and Model Context Protocol (MCP) tools, allowing developers to use MCP tools through the OpenAI API interface.

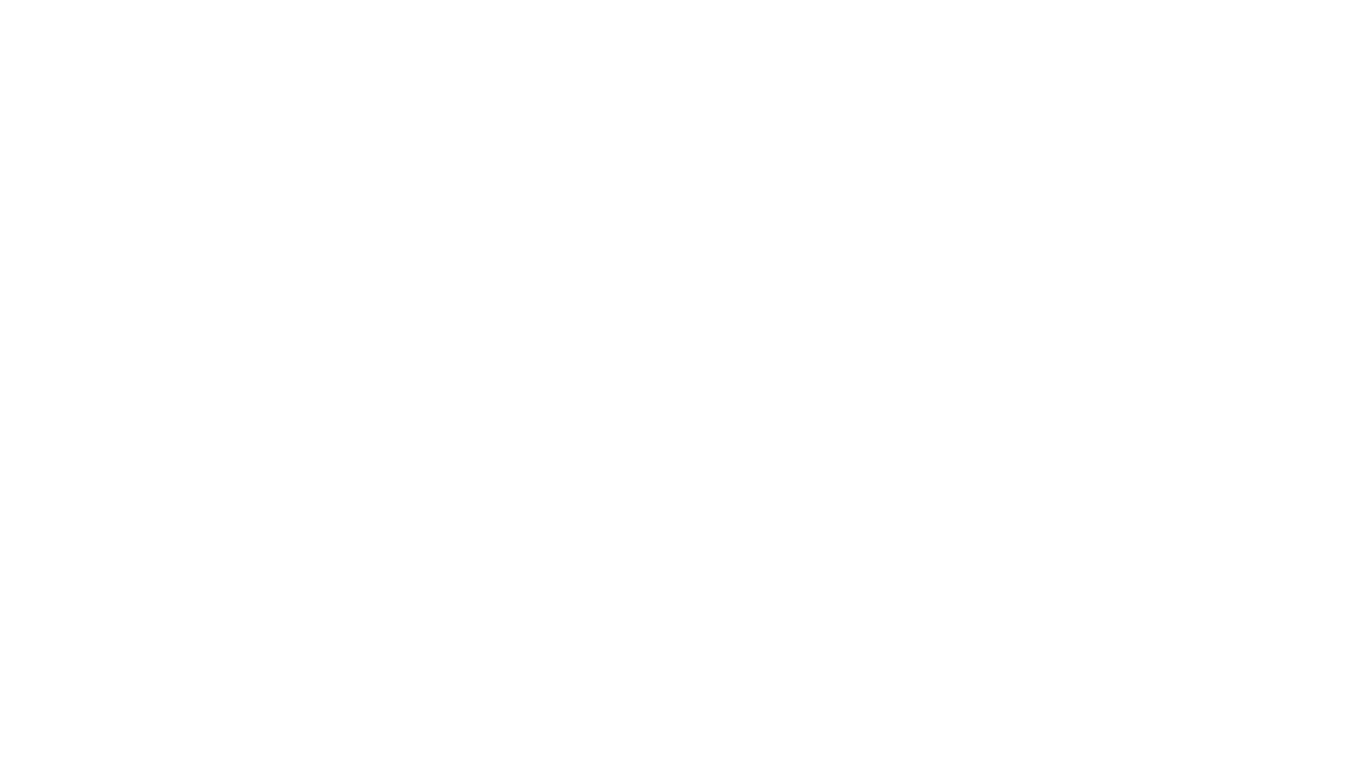

MCP-Bridge is designed to facilitate the integration of MCP tools with the OpenAI API. It provides a set of endpoints for interacting with MCP tools in a way that is compatible with the OpenAI API. This lets you use any OpenAI-compatible client with any MCP tool, even if the client has no explicit MCP support. For example, Open Web UI can be used with the official MCP fetch tool this way.
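The key step that lets a plain OpenAI client use MCP tools is a format translation: an MCP server lists each tool with a `name`, an optional `description`, and a JSON Schema under `inputSchema`, while the OpenAI Chat Completions API expects the same schema under `function.parameters`. The Python sketch below shows one way to do that conversion and append the tools to a request. It is an illustration, not MCP-Bridge's actual code; `mcp_tool_to_openai`, `inject_tools`, the model name, and the fetch tool's description are hypothetical.

```python
from typing import Any


def mcp_tool_to_openai(tool: dict[str, Any]) -> dict[str, Any]:
    """Convert one MCP tool listing entry into an OpenAI "function" tool definition."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # Fall back to an empty object schema if the server omits one.
            "parameters": tool.get("inputSchema", {"type": "object", "properties": {}}),
        },
    }


def inject_tools(request: dict[str, Any], mcp_tools: list[dict[str, Any]]) -> dict[str, Any]:
    """Return a copy of a chat completion request with the MCP tools appended."""
    converted = [mcp_tool_to_openai(t) for t in mcp_tools]
    # Build a new dict so the caller's request is left untouched.
    return {**request, "tools": list(request.get("tools", [])) + converted}


# Hypothetical listing entry, shaped like what an MCP fetch server might return.
fetch_tool = {
    "name": "fetch",
    "description": "Fetches a URL from the internet.",
    "inputSchema": {
        "type": "object",
        "properties": {"url": {"type": "string"}},
        "required": ["url"],
    },
}
request = {"model": "my-model", "messages": [{"role": "user", "content": "Summarise example.com"}]}
print(inject_tools(request, [fetch_tool])["tools"][0]["function"]["name"])  # fetch
```

Copying the request rather than mutating it keeps the client's original payload intact, which makes it easy to forward unchanged fields to the inference engine.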

Working features:

- non-streaming chat completions with MCP
- streaming chat completions with MCP
- non-streaming completions without MCP
- MCP tools
- MCP sampling
- SSE bridge for external clients

Planned features:

- streaming completions (not implemented yet)
- support for MCP resources

The recommended way to install MCP-Bridge is with Docker. See the example compose.yml file for how to set it up.

Note that this requires an inference engine with tool call support. I have tested this successfully with vLLM, though Ollama should also be compatible.

- Clone the repository
- Edit the compose.yml file

You will need to add a reference to the config.json file in the compose.yml file. Pick one of the following options:

- add the config.json file to the same directory as the compose.yml file and use a volume mount (you will need to add the volume manually)
- add an HTTP URL to the environment variables to download the config.json file from a URL
- add the config JSON directly as an environment variable

See below for an example of each option:

```yaml
environment:
  - MCP_BRIDGE__CONFIG__FILE=config.json # mount the config file for this to work
  - MCP_BRIDGE__CONFIG__HTTP_URL=http://10.88.100.170:8888/config.json
  - MCP_BRIDGE__CONFIG__JSON={"inference_server":{"base_url":"http://example.com/v1","api_key":"None"},"mcp_servers":{"fetch":{"command":"uvx","args":["mcp-server-fetch"]}}}
```

The mount point for using the config file would look like:

```yaml
volumes:
  - ./config.json:/mcp_bridge/config.json
```

- Run the service:

```bash
docker-compose up --build -d
```
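The three configuration options correspond to three environment variables, and in practice you would set only one of them. The Python sketch below shows how a loader could resolve whichever one is present. The precedence order (inline JSON, then file, then HTTP URL) and the `load_bridge_config` helper are assumptions for illustration, not MCP-Bridge's actual loader.

```python
import json
import os
import urllib.request
from typing import Any, Mapping, Optional


def load_bridge_config(env: Optional[Mapping[str, str]] = None) -> dict[str, Any]:
    """Resolve the bridge config from one of the MCP_BRIDGE__CONFIG__* variables.

    Hypothetical precedence: inline JSON, then a file path, then an HTTP URL.
    """
    env = os.environ if env is None else env
    if "MCP_BRIDGE__CONFIG__JSON" in env:
        # Option 3: the whole config is inlined as a JSON string.
        return json.loads(env["MCP_BRIDGE__CONFIG__JSON"])
    if "MCP_BRIDGE__CONFIG__FILE" in env:
        # Option 1: a path to a mounted config.json.
        with open(env["MCP_BRIDGE__CONFIG__FILE"], encoding="utf-8") as fh:
            return json.load(fh)
    if "MCP_BRIDGE__CONFIG__HTTP_URL" in env:
        # Option 2: download config.json over HTTP.
        with urllib.request.urlopen(env["MCP_BRIDGE__CONFIG__HTTP_URL"], timeout=10) as resp:
            return json.loads(resp.read().decode("utf-8"))
    raise RuntimeError("no MCP_BRIDGE__CONFIG__* variable set")


cfg = load_bridge_config({
    "MCP_BRIDGE__CONFIG__JSON": '{"inference_server":{"base_url":"http://example.com/v1","api_key":"None"},'
                                '"mcp_servers":{"fetch":{"command":"uvx","args":["mcp-server-fetch"]}}}'
})
print(cfg["mcp_servers"]["fetch"]["command"])  # uvx
```

Passing the environment as a parameter (defaulting to `os.environ`) keeps the function easy to test without touching the real process environment.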

If you want to run the application without docker, you will need to install the requirements and run the application manually.

- Clone the repository
- Set up the dependencies:

```bash
uv sync
```

- Create a config.json file in the root directory

Here is an example config.json file:

```json
{
  "inference_server": {
    "base_url": "http://example.com/v1",
    "api_key": "None"
  },
  "mcp_servers": {
    "fetch": {
      "command": "uvx",
      "args": ["mcp-server-fetch"]
    }
  }
}
```

- Run the application:

```bash
uv run mcp_bridge/main.py
```

Once the application is running, you can interact with it using the OpenAI API.
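Because the endpoint is OpenAI-compatible, a client only needs to send a standard chat completion request to the bridge. The standard-library Python sketch below builds such a request. It assumes the bridge serves the conventional `/v1/chat/completions` path on port 8000 (the port used for the `/docs` URL); `build_chat_request`, `send`, and the model name are hypothetical, and only non-streaming responses are handled.

```python
import json
import urllib.request
from typing import Any


def build_chat_request(base_url: str, model: str, prompt: str, stream: bool = False) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style /chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request) -> dict[str, Any]:
    """Send the request and decode the JSON reply (non-streaming only)."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Assumed bridge address; replace with your own server.
req = build_chat_request("http://yourserver:8000/v1", "my-model", "Fetch https://example.com and summarise it")
print(req.full_url)  # http://yourserver:8000/v1/chat/completions
# With a running bridge:
# reply = send(req)
# print(reply["choices"][0]["message"]["content"])
```

With the official `openai` Python package, pointing the `OpenAI` client's `base_url` at the bridge should have the same effect; the client itself needs no MCP awareness.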

View the documentation at http://yourserver:8000/docs. There is an endpoint to list all the MCP tools available on the server, which you can use to test the application configuration.

MCP-Bridge exposes many REST API endpoints for interacting with all of the native MCP primitives. This lets you outsource the complexity of dealing with MCP servers to MCP-Bridge without compromising on functionality. See the OpenAPI docs for examples of how to use this functionality.

MCP-Bridge also provides an SSE bridge for external clients. This lets external chat apps with explicit MCP support use MCP-Bridge as an MCP server. Point your client at the SSE endpoint (http://yourserver:8000/mcp-server/sse) and you should be able to see all the MCP tools available on the server.

This also makes it easy to test whether your configuration is working correctly. You can use wong2/mcp-cli to test your configuration:

```bash
npx @wong2/mcp-cli --sse http://localhost:8000/mcp-server/sse
```
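The SSE endpoint uses the standard `text/event-stream` format. In the MCP HTTP+SSE transport, the server's first event is an `endpoint` event whose data tells the client where to POST its JSON-RPC messages. The minimal Python parser below sketches how a client could read such a stream; `parse_sse` and `SseEvent` are hypothetical helpers, the session URL in the sample is made up, and the `id` and `retry` fields are ignored.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class SseEvent:
    event: str
    data: str


def parse_sse(lines: Iterable[str]) -> Iterator[SseEvent]:
    """Yield events from SSE lines (trailing newlines already stripped).

    "field: value" lines accumulate until a blank line dispatches the event;
    lines starting with ":" are comments; the event type defaults to "message".
    """
    event_type, data_lines = "message", []
    for line in lines:
        if line == "":
            if data_lines:  # events with no data are not dispatched
                yield SseEvent(event_type, "\n".join(data_lines))
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue  # comment or keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # drop the single optional space after the colon
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)


stream = [
    "event: endpoint",
    "data: /messages?session_id=abc123",  # hypothetical session URL
    "",
    ": keep-alive",
    "event: message",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {}}',
    "",
]
for ev in parse_sse(stream):
    print(ev.event, ev.data)
```

An event that is not terminated by a blank line is never yielded, which matches how the event-stream format treats an incomplete event at the end of a stream.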

If you want to use the tools inside Claude Desktop or other STDIO-only MCP clients, you can do this with a tool such as lightconetech/mcp-gateway.

To add new MCP servers, edit the config.json file.

An example config.json file with most of the options explicitly set:

```json
{
  "inference_server": {
    "base_url": "http://localhost:8000/v1",
    "api_key": "None"
  },
  "sampling": {
    "timeout": 10,
    "models": [
      {
        "model": "gpt-4o",
        "intelligence": 0.8,
        "cost": 0.9,
        "speed": 0.3
      },
      {
        "model": "gpt-4o-mini",
        "intelligence": 0.4,
        "cost": 0.1,
        "speed": 0.7
      }
    ]
  },
  "mcp_servers": {
    "fetch": {
      "command": "uvx",
      "args": [
        "mcp-server-fetch"
      ]
    }
  },
  "network": {
    "host": "0.0.0.0",
    "port": 9090
  },
  "logging": {
    "log_level": "DEBUG"
  }
}
```

| Section | Description |
|---|---|
| inference_server | The inference server configuration |
| sampling | The MCP sampling configuration (timeout and available models) |
| mcp_servers | The MCP servers configuration |
| network | uvicorn network configuration |
| logging | The logging configuration |
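The MCP sampling specification lets a server express model preferences as `costPriority`, `speedPriority`, and `intelligencePriority`, each between 0 and 1. The per-model `intelligence`, `cost`, and `speed` values in the `sampling` section look like ratings a bridge could match against those priorities. How MCP-Bridge actually uses them is not described here, so the Python sketch below assumes a simple weighted sum and treats `cost` as how expensive a model is; `pick_model` is hypothetical.

```python
from typing import Any


def pick_model(
    models: list[dict[str, Any]],
    intelligence_priority: float = 0.0,
    cost_priority: float = 0.0,
    speed_priority: float = 0.0,
) -> str:
    """Return the configured model that best matches the requested priorities.

    Assumed scoring: weighted sum of the ratings, where a lower "cost" rating
    (a cheaper model) scores higher when cost matters.
    """
    def score(m: dict[str, Any]) -> float:
        return (
            intelligence_priority * m["intelligence"]
            + cost_priority * (1.0 - m["cost"])
            + speed_priority * m["speed"]
        )

    # max() returns the first model on ties, i.e. config order breaks ties.
    return max(models, key=score)["model"]


models = [
    {"model": "gpt-4o", "intelligence": 0.8, "cost": 0.9, "speed": 0.3},
    {"model": "gpt-4o-mini", "intelligence": 0.4, "cost": 0.1, "speed": 0.7},
]
print(pick_model(models, intelligence_priority=1.0))  # gpt-4o
print(pick_model(models, cost_priority=1.0))          # gpt-4o-mini
```

A weighted sum keeps the selection transparent: each priority simply scales how much one rating contributes, so a server asking mostly for speed or low cost ends up on the smaller model.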

If you encounter any issues, please open an issue or join the Discord.

Additional documentation is also available.

The application sits between the client, which speaks the OpenAI API, and the inference engine. An incoming request is modified to include tool definitions for all MCP tools available on the MCP servers. The request is then forwarded to the inference engine, which uses the tool definitions to create tool calls. MCP-Bridge then manages the calls to the tools. The request is then modified to include the tool call results and sent back to the inference engine so the LLM can create a response. Finally, the response is returned to the client.
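The request flow described above is essentially a loop: ask the model, run any tool calls it requests, feed the results back, and repeat until it answers without tools. The Python sketch below assumes OpenAI-style `tool_calls` and `role: "tool"` messages; `run_with_tools`, `complete`, and `call_tool` are hypothetical stand-ins (for the inference engine and for an MCP tool call), not MCP-Bridge functions, and the `max_rounds` cap is an added safeguard.

```python
import json
from typing import Any, Callable

Message = dict[str, Any]


def run_with_tools(
    messages: list[Message],
    complete: Callable[[list[Message]], Message],
    call_tool: Callable[[str, dict[str, Any]], str],
    max_rounds: int = 5,
) -> Message:
    """Alternate between the inference engine and the MCP tools until a final answer."""
    history = list(messages)
    for _ in range(max_rounds):
        reply = complete(history)  # stand-in for the inference engine
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return reply  # final answer returned to the client
        history.append(reply)
        for call in tool_calls:
            fn = call["function"]
            result = call_tool(fn["name"], json.loads(fn["arguments"] or "{}"))
            # Tool results go back to the model as "tool" messages.
            history.append({"role": "tool", "tool_call_id": call["id"], "content": result})
    raise RuntimeError("model kept requesting tools; giving up")


def fake_engine(history: list[Message]) -> Message:
    """Hypothetical engine: request a fetch first, then answer from the tool output."""
    if history[-1]["role"] == "user":
        return {"role": "assistant", "content": None, "tool_calls": [
            {"id": "call_1", "type": "function",
             "function": {"name": "fetch", "arguments": '{"url": "https://example.com"}'}}]}
    return {"role": "assistant", "content": f"Page says: {history[-1]['content']}"}


def fake_tool(name: str, args: dict[str, Any]) -> str:
    return f"<{name} {args['url']}>"


answer = run_with_tools([{"role": "user", "content": "summarise example.com"}], fake_engine, fake_tool)
print(answer["content"])  # Page says: <fetch https://example.com>
```

Injecting `complete` and `call_tool` as plain callables keeps the loop independent of any particular HTTP client or MCP SDK, so it can be exercised with fakes as shown.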

```mermaid
sequenceDiagram
    participant OpenWebUI as Open Web UI
    participant MCPProxy as MCP Proxy
    participant MCPserver as MCP Server
    participant InferenceEngine as Inference Engine

    OpenWebUI ->> MCPProxy: Request
    MCPProxy ->> MCPserver: list tools
    MCPserver ->> MCPProxy: list of tools
    MCPProxy ->> InferenceEngine: Forward Request
    InferenceEngine ->> MCPProxy: Response
    MCPProxy ->> MCPserver: call tool
    MCPserver ->> MCPProxy: tool response
    MCPProxy ->> InferenceEngine: llm uses tool response
    InferenceEngine ->> MCPProxy: Response
    MCPProxy ->> OpenWebUI: Return Response
```

Contributions to MCP-Bridge are welcome! To contribute, please follow these steps:

- Fork the repository.

- Create a new branch for your feature or bug fix.

- Make your changes and commit them.

- Push your changes to your fork.

- Create a pull request to the main repository.

MCP-Bridge is licensed under the MIT License. See the LICENSE file for more information.


Alternative AI tools for MCP-Bridge

Similar Open Source Tools


chat-mcp

A Cross-Platform Interface for Large Language Models (LLMs) utilizing the Model Context Protocol (MCP) to connect and interact with various LLMs. The desktop app, built on Electron, ensures compatibility across Linux, macOS, and Windows. It simplifies understanding MCP principles, facilitates testing of multiple servers and LLMs, and supports dynamic LLM configuration and multi-client management. The UI can be extracted for web use, ensuring consistency across web and desktop versions.

tuui

TUUI is a desktop MCP client designed for accelerating AI adoption through the Model Context Protocol (MCP) and enabling cross-vendor LLM API orchestration. It is an LLM chat desktop application based on MCP, created using AI-generated components with strict syntax checks and naming conventions. The tool integrates AI tools via MCP, orchestrates LLM APIs, supports automated application testing, TypeScript, multilingual, layout management, global state management, and offers quick support through the GitHub community and official documentation.

mcp-server-qdrant

The mcp-server-qdrant repository is an official Model Context Protocol (MCP) server designed for keeping and retrieving memories in the Qdrant vector search engine. It acts as a semantic memory layer on top of the Qdrant database. The server provides tools like 'qdrant-store' for storing information in the database and 'qdrant-find' for retrieving relevant information. Configuration is done using environment variables, and the server supports different transport protocols. It can be installed using 'uvx' or Docker, and can also be installed via Smithery for Claude Desktop. The server can be used with Cursor/Windsurf as a code search tool by customizing tool descriptions. It can store code snippets and help developers find specific implementations or usage patterns. The repository is licensed under the Apache License 2.0.

MCPJungle

MCPJungle is a self-hosted MCP Gateway for private AI agents, serving as a registry for Model Context Protocol Servers. Developers use it to manage servers and tools centrally, while clients discover and consume tools from a single 'Gateway' MCP Server. Suitable for developers using MCP Clients like Claude & Cursor, building production-grade AI Agents, and organizations managing client-server interactions. The tool allows quick start, installation, usage, server and client setup, connection to Claude and Cursor, enabling/disabling tools, managing tool groups, authentication, enterprise features like access control and OpenTelemetry metrics. Limitations include lack of long-running connections to servers and no support for OAuth flow. Contributions are welcome.

mcp

Enable AI agents to operate reliably within real workflows. This MCP is monday.com's open framework for connecting agents into your work OS - giving them secure access to structured data, tools to take action, and the context needed to make smart decisions. The repository provides a comprehensive set of tools for AI agent developers who want to integrate with monday.com, including a plug-and-play server implementation for the Model Context Protocol (MCP) and a powerful set of tools for building AI agents that interact with the monday.com API. Users can choose between a hosted MCP service for fast and reliable connection or run the MCP locally for customization and offline development. The repository also offers advanced tools like Dynamic API Tools for full access to the monday.com GraphQL API, enabling complex reports, batch operations, and deep integration with monday.com's features.

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

mcp-server

The UI5 Model Context Protocol server offers tools to improve the developer experience when working with agentic AI tools. It helps with creating new UI5 projects, detecting and fixing UI5-specific errors, and providing additional UI5-specific information for agentic AI tools. The server supports various tools such as scaffolding new UI5 applications, fetching UI5 API documentation, providing UI5 development best practices, extracting metadata and configuration from UI5 projects, retrieving version information for the UI5 framework, analyzing and reporting issues in UI5 code, offering guidelines for converting UI5 applications to TypeScript, providing UI Integration Cards development best practices, scaffolding new UI Integration Cards, and validating the manifest against the UI5 Manifest schema. The server requires Node.js and npm versions specified, along with an MCP client like VS Code or Cline. Configuration options are available for customizing the server's behavior, and specific setup instructions are provided for MCP clients like VS Code and Cline.

mcp

The Snowflake Cortex AI Model Context Protocol (MCP) Server provides tooling for Snowflake Cortex AI, object management, and SQL orchestration. It supports capabilities such as Cortex Search, Cortex Analyst, Cortex Agent, Object Management, SQL Execution, and Semantic View Querying. Users can connect to Snowflake using various authentication methods like username/password, key pair, OAuth, SSO, and MFA. The server is client-agnostic and works with MCP Clients like Claude Desktop, Cursor, fast-agent, Microsoft Visual Studio Code + GitHub Copilot, and Codex. It includes tools for Object Management (creating, dropping, describing, listing objects), SQL Execution (executing SQL statements), and Semantic View Querying (discovering, querying Semantic Views). Troubleshooting can be done using the MCP Inspector tool.

computer-use-mcp

The computer-use-mcp repository is a model context protocol server that allows Claude to control your computer. It is similar to computer use but is easy to set up and use locally. Users should be cautious as the server gives the model complete control of the computer, similar to giving a hyperactive toddler access. The tool communicates with the computer using nut.js and follows Anthropic's official computer use guide with a focus on keyboard shortcuts.

lexido

Lexido is an innovative assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 and utilizing the free API, Lexido offers smart suggestions for commands based on your prompts and importantly your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

apify-mcp-server

The Apify MCP Server enables AI agents to extract data from various websites using ready-made scrapers and automation tools. It supports OAuth for easy connection from clients like Claude.ai or Visual Studio Code. The server also supports Skyfire agentic payments for AI agents to pay for Actor runs without an API token. Compatible with various clients adhering to the Model Context Protocol, it allows dynamic tool discovery and interaction with Apify Actors. The server provides tools for interacting with Apify Actors, dynamic tool discovery, and telemetry data collection. It offers a set of example prompts and resources for users to explore and interact with Apify through MCP.

mcp-server

The Strands Agents MCP Server is a model-driven approach to building AI agents in just a few lines of code. It provides curated documentation access to GenAI tools via llms.txt files, enabling AI coding assistants to search and retrieve relevant documentation with intelligent ranking. Features include smart document search, curated content indexing, on-demand fetching, snippet generation, and real URL support. The server can be used with various applications that support MCP servers, such as Amazon Q Developer CLI, Anthropic Claude Code, Cline, and Cursor. Users can quickly test the MCP server using the MCP Inspector and follow the provided steps to configure their MCP client and start using the documentation tools. The project welcomes contributions and is licensed under the Apache License 2.0.

steel-browser

Steel is an open-source browser API designed for AI agents and applications, simplifying the process of building live web agents and browser automation tools. It serves as a core building block for a production-ready, containerized browser sandbox with features like stealth capabilities, text-to-markdown session management, UI for session viewing/debugging, and full browser control through popular automation frameworks. Steel allows users to control, run, and manage a production-ready browser environment via a REST API, offering features such as full browser control, session management, proxy support, extension support, debugging tools, anti-detection mechanisms, resource management, and various browser tools. It aims to streamline complex browsing tasks programmatically, enabling users to focus on their AI applications while Steel handles the underlying complexity.

joinly

joinly.ai is a connector middleware designed to enable AI agents to actively participate in video calls, providing essential meeting tools for AI agents to perform tasks and interact in real time. It supports live interaction, conversational flow, cross-platform compatibility, bring-your-own-LLM, and choose-your-preferred-TTS/STT services. The tool is 100% open-source, self-hosted, and privacy-first, aiming to make meetings accessible to AI agents by joining and participating in video calls.

Figma-Context-MCP

Figma-Context-MCP is a Model Context Protocol server that gives AI-powered coding tools such as Cursor access to Figma design data, so they can implement designs more accurately from a link to a Figma file or frame.

For similar tasks


simple-openai

Simple-OpenAI is a Java library that provides a simple way to interact with the OpenAI API. It offers consistent interfaces for various OpenAI services like Audio, Chat Completion, Image Generation, and more. The library uses CleverClient for HTTP communication, Jackson for JSON parsing, and Lombok to reduce boilerplate code. It supports asynchronous requests and provides methods for synchronous calls as well. Users can easily create objects to communicate with the OpenAI API and perform tasks like text-to-speech, transcription, image generation, and chat completions.

chat-ai

A Seamless Slurm-Native Solution for HPC-Based Services. This repository contains the stand-alone web interface of Chat AI, which can be set up independently to act as a wrapper for an OpenAI-compatible API endpoint. It consists of two Docker containers, 'front' and 'back', providing a ReactJS app served by ViteJS and a wrapper for message requests to prevent CORS errors. Configuration files allow setting port numbers, backend paths, models, user data, default conversation settings, and more. The 'back' service interacts with an OpenAI-compatible API endpoint using configurable attributes in 'back.json'. Customization options include creating a path for available models and setting the 'modelsPath' in 'front.json'. Acknowledgements to contributors and the Chat AI community are included.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.