torch-rechub

A lightweight PyTorch framework for recommendation models, easy to use and easy to extend. https://datawhalechina.github.io/torch-rechub/

Stars: 769

Torch-RecHub is a lightweight, efficient, and user-friendly PyTorch recommendation system framework. It provides easy-to-use solutions for industrial-level recommendation systems, with features such as generative recommendation models, modular design for adding new models and datasets, PyTorch-based implementation for GPU acceleration, a rich library of 30+ classic and cutting-edge recommendation algorithms, standardized data loading, training, and evaluation processes, easy configuration through files or command-line parameters, reproducibility of experimental results, ONNX model export for production deployment, cross-engine data processing with PySpark support, and experiment visualization and tracking with integrated tools like WandB, SwanLab, and TensorBoardX.

README:


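Online documentation: https://datawhalechina.github.io/torch-rechub/zh/

Torch-RecHub: build an industrial-grade recommendation system in 10 lines of code. 30+ mainstream models work out of the box, with one-step ONNX deployment, so you can focus on the business problem rather than the engineering.

- Generative recommendation models: reproduces a selection of generative recommendation models from the LLM era.

- Modular design: easy to add new models, datasets, and evaluation metrics.

- Built on PyTorch: takes advantage of PyTorch's dynamic graphs and GPU acceleration.

- Rich model library: covers 30+ classic and cutting-edge recommendation algorithms (matching, ranking, multi-task, generative recommendation, and more).

- Standardized pipeline: provides a unified data loading, training, and evaluation workflow.

- Easy configuration: adjust experiment settings through configuration files or command-line parameters.

- Reproducibility: designed to keep experimental results reproducible.

- ONNX export: trained models can be exported to ONNX format for deployment to production.

- Cross-engine data processing: PySpark-based data processing and transformation is now supported, which makes it easier to fit into big-data pipelines.

- Experiment visualization and tracking: unified built-in integration of three visualization/tracking tools, WandB, SwanLab, and TensorBoardX.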

- Python 3.9+

- PyTorch 1.7+ (a CUDA-enabled build is recommended for GPU acceleration)

- NumPy

- Pandas

- SciPy

- Scikit-learn

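Stable release (recommended for most users):

pip install torch-rechub

Latest version:

# Install uv first (if not already installed)

pip install uv

# Clone and install

git clone https://github.com/datawhalechina/torch-rechub.git

cd torch-rechub

uv sync

The following simple example shows how to train a model (for example, DSSM) on the MovieLens dataset: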

# Clone the repository (if using the latest version)

git clone https://github.com/datawhalechina/torch-rechub.git

cd torch-rechub

uv sync

# Run the example

python examples/matching/run_ml_dssm.py

# Or with custom parameters:

python examples/matching/run_ml_dssm.py --model_name dssm --device 'cuda:0' --learning_rate 0.001 --epoch 50 --batch_size 4096 --weight_decay 0.0001 --save_dir 'saved/dssm_ml-100k'

After training completes, the model files are saved in the saved/dssm_ml-100k directory (or whichever directory you configured).
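To show how flags like the ones in the custom-parameter command can be wired up, here is a minimal `argparse` sketch. It is not the code of `run_ml_dssm.py`: the function name `build_parser` and all default values are assumptions chosen to mirror the command above.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the flags shown in the command above."""
    parser = argparse.ArgumentParser(description="Train a matching model (sketch)")
    # Defaults below are assumptions, not values taken from the real script.
    parser.add_argument("--model_name", default="dssm")
    parser.add_argument("--device", default="cpu")  # e.g. "cuda:0"
    parser.add_argument("--learning_rate", type=float, default=0.001)
    parser.add_argument("--epoch", type=int, default=50)
    parser.add_argument("--batch_size", type=int, default=4096)
    parser.add_argument("--weight_decay", type=float, default=0.0001)
    parser.add_argument("--save_dir", default="saved/dssm_ml-100k")
    return parser


# Parse the same arguments as the custom-parameter command.
args = build_parser().parse_args([
    "--model_name", "dssm",
    "--device", "cuda:0",
    "--learning_rate", "0.001",
    "--epoch", "50",
    "--batch_size", "4096",
    "--weight_decay", "0.0001",
    "--save_dir", "saved/dssm_ml-100k",
])
print(args.device, args.epoch, args.batch_size)
```

Typed arguments (`type=float`, `type=int`) let the training code use the values directly without further conversion.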

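torch-rechub/                  # Root directory
├── README.md                  # Project documentation
├── pyproject.toml             # Project configuration and dependencies
├── torch_rechub/              # Core library
│   ├── basic/                 # Basic components
│   │   ├── activation.py      # Activation functions
│   │   ├── features.py        # Feature engineering
│   │   ├── layers.py          # Neural network layers
│   │   ├── loss_func.py       # Loss functions
│   │   └── metric.py          # Evaluation metrics
│   ├── models/                # Recommendation model implementations
│   │   ├── matching/          # Matching (retrieval) models (DSSM/MIND/GRU4Rec, etc.)
│   │   ├── ranking/           # Ranking models (WideDeep/DeepFM/DIN, etc.)
│   │   └── multi_task/        # Multi-task models (MMoE/ESMM, etc.)
│   ├── trainers/              # Training framework
│   │   ├── ctr_trainer.py     # CTR prediction trainer
│   │   ├── match_trainer.py   # Matching model trainer
│   │   └── mtl_trainer.py     # Multi-task learning trainer
│   └── utils/                 # Utility functions
│       ├── data.py            # Data processing utilities
│       ├── match.py           # Matching utilities
│       ├── mtl.py             # Multi-task utilities
│       └── onnx_export.py     # ONNX export utilities
├── examples/                  # Example scripts
│   ├── matching/              # Matching task examples
│   ├── ranking/               # Ranking task examples
│   └── generative/            # Generative recommendation examples (HSTU, HLLM, etc.)
├── docs/                      # Documentation (VitePress, multilingual)
├── tutorials/                 # Jupyter tutorials
├── tests/                     # Unit tests
├── config/                    # Configuration files
└── scripts/                   # Utility scripts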

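The framework currently supports 30+ mainstream recommendation models.

Ranking models:

| Model | Paper | Description |
|---|---|---|
| DeepFM | IJCAI 2017 | Joint training of FM and a deep network |
| Wide&Deep | DLRS 2016 | Combines memorization and generalization |
| DCN | KDD 2017 | Explicit feature-crossing network |
| DCN-v2 | WWW 2021 | Improved cross network |
| DIN | KDD 2018 | Attention mechanism that captures user interests |
| DIEN | AAAI 2019 | Interest evolution modeling |
| BST | DLP-KDD 2019 | Transformer-based sequence modeling |
| AFM | IJCAI 2017 | Attentional factorization machine |
| AutoInt | CIKM 2019 | Automatic feature interaction learning |
| FiBiNET | RecSys 2019 | Feature importance plus bilinear interaction |
| DeepFFM | RecSys 2019 | Field-aware factorization machine |
| EDCN | KDD 2021 | Enhanced cross network |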
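To make the "FM" half of DeepFM concrete, the sketch below computes the second-order factorization-machine interaction term with the standard identity 0.5 * ((sum of vectors)^2 - sum of squared vectors), and checks it against a naive pairwise sum. This is a plain-Python illustration of the general technique, not torch-rechub's implementation; the function names and the sample embeddings are made up.

```python
from typing import List


def fm_second_order(embeddings: List[List[float]]) -> float:
    """Sum of all pairwise dot products via 0.5 * ((sum v)^2 - sum v^2)."""
    dim = len(embeddings[0])
    total = 0.0
    for k in range(dim):
        column = [vector[k] for vector in embeddings]
        square_of_sum = sum(column) ** 2
        sum_of_squares = sum(value * value for value in column)
        total += 0.5 * (square_of_sum - sum_of_squares)
    return total


def pairwise_dot_sum(embeddings: List[List[float]]) -> float:
    """Naive O(n^2) reference: dot product of every distinct pair."""
    total = 0.0
    for i in range(len(embeddings)):
        for j in range(i + 1, len(embeddings)):
            total += sum(a * b for a, b in zip(embeddings[i], embeddings[j]))
    return total


# Three hypothetical field embeddings of dimension 2.
field_embeddings = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
fast = fm_second_order(field_embeddings)
slow = pairwise_dot_sum(field_embeddings)
print(fast, slow)
```

The identity reduces the cost from quadratic to linear in the number of fields, which is why FM-style layers scale to many sparse features.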

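Matching (retrieval) models:

| Model | Paper | Description |
|---|---|---|
| DSSM | CIKM 2013 | Classic two-tower retrieval model |
| YoutubeDNN | RecSys 2016 | YouTube deep retrieval |
| YoutubeSBC | RecSys 2019 | Sampling-bias-corrected variant |
| MIND | CIKM 2019 | Multi-interest dynamic routing |
| SINE | WSDM 2021 | Sparse-interest network |
| GRU4Rec | ICLR 2016 | GRU-based sequential recommendation |
| SASRec | ICDM 2018 | Self-attentive sequential recommendation |
| NARM | CIKM 2017 | Neural attentive session-based recommendation |
| STAMP | KDD 2018 | Short-term attention/memory priority |
| ComiRec | KDD 2020 | Controllable multi-interest recommendation |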
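As an illustration of the two-tower idea behind DSSM, the sketch below scores a user vector against item vectors with cosine similarity divided by a temperature. It is a generic plain-Python sketch, not the library's code: the tower outputs are hard-coded stand-ins for what trained user and item networks would produce, and the function names are hypothetical. The value 0.02 matches the `temperature` argument used in this README's DSSM example.

```python
import math
from typing import Dict, List


def l2_normalize(vector: List[float]) -> List[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector]


def two_tower_score(user_vec: List[float], item_vec: List[float],
                    temperature: float = 0.02) -> float:
    """Cosine similarity of the two embeddings, sharpened by a temperature."""
    user_unit = l2_normalize(user_vec)
    item_unit = l2_normalize(item_vec)
    cosine = sum(a * b for a, b in zip(user_unit, item_unit))
    return cosine / temperature


# Made-up tower outputs for one user and three items.
user_embedding = [1.0, 0.0]
item_embeddings: Dict[str, List[float]] = {
    "a": [1.0, 0.0],
    "b": [0.0, 1.0],
    "c": [1.0, 1.0],
}
scores = {name: two_tower_score(user_embedding, vec)
          for name, vec in item_embeddings.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

Because the user and item sides only meet in the final dot product, item embeddings can be precomputed and indexed, which is what makes two-tower models practical for retrieval.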

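Multi-task models:

| Model | Paper | Description |
|---|---|---|
| ESMM | SIGIR 2018 | Entire-space multi-task modeling |
| MMoE | KDD 2018 | Multi-gate mixture-of-experts |
| PLE | RecSys 2020 | Progressive layered extraction |
| AITM | KDD 2021 | Adaptive information transfer |
| SharedBottom | - | Classic multi-task model with a shared bottom |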
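The "multi-gate mixture-of-experts" idea in MMoE can be sketched in a few lines: every task has its own softmax gate that mixes the outputs of shared experts. The code below is a plain-Python illustration under that general description, not torch-rechub's `MMOE` class; the expert outputs and gate logits are made-up numbers rather than learned values.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mix_experts(expert_outputs: List[List[float]],
                gate_logits: List[float]) -> List[float]:
    """Weighted sum of expert outputs using one task's gate."""
    weights = softmax(gate_logits)
    dim = len(expert_outputs[0])
    return [sum(w * out[k] for w, out in zip(weights, expert_outputs))
            for k in range(dim)]


# Two shared experts, each producing a 2-dimensional output.
experts = [[1.0, 0.0], [0.0, 1.0]]

# Each task owns a separate gate, so tasks can weight experts differently.
task_a_input = mix_experts(experts, gate_logits=[0.0, 0.0])   # equal weights
task_b_input = mix_experts(experts, gate_logits=[10.0, 0.0])  # favors expert 0
print(task_a_input, task_b_input)
```

Each mixed vector would then feed that task's own tower network, which is where the per-task `tower_params_list` in the README example comes in.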

The framework has built-in support, or provides processing scripts, for the following common dataset formats:

- MovieLens

- Amazon

- Criteo

- Avazu

- Census-Income

- BookCrossing

- Ali-ccp

- Yidian

- ...

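The expected data format is typically an interaction file containing the following fields:

- User ID

- Item ID

- Rating (optional)

- Timestamp (optional)

For the exact format requirements, see the example code in the tutorials directory.

You can easily integrate your own dataset: either make sure it matches the data format the framework expects, or write a custom data loader.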
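As a rough illustration of such an interaction file, the sketch below reads a small CSV with required user and item IDs and optional rating and timestamp fields. The column names (`user_id`, `item_id`, `rating`, `timestamp`), the `Interaction` class, and the sample rows are assumptions for this example; the tutorials remain the reference for the format the framework actually expects.

```python
import csv
import io
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Interaction:
    user_id: str
    item_id: str
    rating: Optional[float] = None   # optional field
    timestamp: Optional[int] = None  # optional field


def read_interactions(handle) -> List[Interaction]:
    """Read rows with user_id and item_id, plus optional rating and timestamp."""
    rows = []
    for record in csv.DictReader(handle):
        rating = record.get("rating")
        timestamp = record.get("timestamp")
        rows.append(Interaction(
            user_id=record["user_id"],
            item_id=record["item_id"],
            rating=float(rating) if rating else None,
            timestamp=int(timestamp) if timestamp else None,
        ))
    return rows


# Made-up sample data; the second row leaves the optional fields empty.
sample = io.StringIO(
    "user_id,item_id,rating,timestamp\n"
    "u1,i10,4.0,978300760\n"
    "u2,i11,,\n"
)
interactions = read_interactions(sample)
print(interactions[0])
```

A custom data loader can start from a structure like this and then encode the IDs into the integer indices that embedding layers need.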

For usage examples of all models, see /examples.
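The ranking snippet in this README passes `split_ratio=[0.7, 0.1]` and receives three dataloaders. One plausible reading, stated here as an assumption rather than confirmed library behavior, is that the two numbers are the train and validation fractions and the test set takes whatever remains. The helper `split_sizes` is hypothetical and only illustrates that arithmetic.

```python
from typing import List, Tuple


def split_sizes(n_samples: int, split_ratio: List[float]) -> Tuple[int, int, int]:
    """Return (train, val, test) sizes; the test set takes the remainder."""
    train_size = int(n_samples * split_ratio[0])
    val_size = int(n_samples * split_ratio[1])
    test_size = n_samples - train_size - val_size
    return train_size, val_size, test_size


# With 1000 samples, a [0.7, 0.1] ratio leaves 20% for testing.
sizes = split_sizes(1000, [0.7, 0.1])
print(sizes)
```

Computing the test size as a remainder guarantees that every sample lands in exactly one split, even when the fractions do not divide the dataset evenly.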

# Ranking (CTR prediction). x, y, deep_features and fm_features are assumed to be prepared beforehand.
from torch_rechub.models.ranking import DeepFM
from torch_rechub.trainers import CTRTrainer
from torch_rechub.utils.data import DataGenerator

dg = DataGenerator(x, y)
train_dataloader, val_dataloader, test_dataloader = dg.generate_dataloader(split_ratio=[0.7, 0.1], batch_size=256)

model = DeepFM(deep_features=deep_features, fm_features=fm_features, mlp_params={"dims": [256, 128], "dropout": 0.2, "activation": "relu"})

ctr_trainer = CTRTrainer(model)
ctr_trainer.fit(train_dataloader, val_dataloader)
auc = ctr_trainer.evaluate(ctr_trainer.model, test_dataloader)
ctr_trainer.export_onnx("deepfm.onnx")

# Multi-task learning. features and the dataloaders are assumed to be prepared beforehand.
from torch_rechub.models.multi_task import SharedBottom, ESMM, MMOE, PLE, AITM
from torch_rechub.trainers import MTLTrainer

task_types = ["classification", "classification"]
model = MMOE(features, task_types, 8, expert_params={"dims": [32, 16]}, tower_params_list=[{"dims": [32, 16]}, {"dims": [32, 16]}])

mtl_trainer = MTLTrainer(model)
mtl_trainer.fit(train_dataloader, val_dataloader)
# Evaluate with the multi-task trainer (the original snippet reused ctr_trainer here by mistake).
auc = mtl_trainer.evaluate(mtl_trainer.model, test_dataloader)
mtl_trainer.export_onnx("mmoe.onnx")

# Matching (retrieval). x, y, test_user, all_item and the feature lists are assumed to be prepared beforehand.
from torch_rechub.models.matching import DSSM
from torch_rechub.trainers import MatchTrainer
from torch_rechub.utils.data import MatchDataGenerator

dg = MatchDataGenerator(x, y)
train_dl, test_dl, item_dl = dg.generate_dataloader(test_user, all_item, batch_size=256)

model = DSSM(
    user_features,
    item_features,
    temperature=0.02,
    user_params={"dims": [256, 128, 64], "activation": "prelu"},
    item_params={"dims": [256, 128, 64], "activation": "prelu"},
)

match_trainer = MatchTrainer(model)
match_trainer.fit(train_dl)
match_trainer.export_onnx("dssm.onnx")

# For two-tower models, the user tower and item tower can be exported separately:
# match_trainer.export_onnx("user_tower.onnx", mode="user")
# match_trainer.export_onnx("dssm_item.onnx", mode="item")

# Visualize the model architecture (requires: pip install torch-rechub[visualization])
graph = ctr_trainer.visualization(depth=4)  # Generate the computation graph
ctr_trainer.visualization(save_path="model.pdf", dpi=300)  # Save as a high-resolution PDF

Thanks to all contributors!

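We welcome contributions of all kinds! See CONTRIBUTING.md for the detailed contribution guide.

We also welcome bug reports and feature suggestions via Issues.

This project is released under the MIT License.

If you use this framework in your research or work, please consider citing: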

@misc{torch_rechub,

title = {Torch-RecHub},

author = {Datawhale},

year = {2022},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/datawhalechina/torch-rechub}},

note = {A PyTorch-based recommender system framework providing easy-to-use and extensible solutions}

}

- Project lead: 1985312383

- GitHub Discussions

Last updated: 2025-12-11


tradecat

TradeCat is a comprehensive data analysis and trading platform designed for cryptocurrency, stock, and macroeconomic data. It offers a wide range of features including multi-market data collection, technical indicator modules, AI analysis, signal detection engine, Telegram bot integration, and more. The platform utilizes technologies like Python, TimescaleDB, TA-Lib, Pandas, NumPy, and various APIs to provide users with valuable insights and tools for trading decisions. With a modular architecture and detailed documentation, TradeCat aims to empower users in making informed trading decisions across different markets.

LunaBox

LunaBox is a lightweight, fast, and feature-rich tool for managing and tracking visual novels, with the ability to customize game categories, automatically track playtime, generate personalized reports through AI analysis, import data from other platforms, backup data locally or on cloud services, and ensure privacy and security by storing sensitive data locally. The tool supports multi-dimensional statistics, offers a variety of customization options, and provides a user-friendly interface for easy navigation and usage.

99AI

99AI is a commercializable AI web application based on NineAI 2.4.2 (no authorization, no backdoors, no piracy, integrated front-end and back-end integration packages, supports Docker rapid deployment). The uncompiled source code is temporarily closed. Compared with the stable version, the development version is faster.

ChatGPT-Next-Web-Pro

ChatGPT-Next-Web-Pro is a tool that provides an enhanced version of ChatGPT-Next-Web with additional features and functionalities. It offers complete ChatGPT-Next-Web functionality, file uploading and storage capabilities, drawing and video support, multi-modal support, reverse model support, knowledge base integration, translation, customizations, and more. The tool can be deployed with or without a backend, allowing users to interact with AI models, manage accounts, create models, manage API keys, handle orders, manage memberships, and more. It supports various cloud services like Aliyun OSS, Tencent COS, and Minio for file storage, and integrates with external APIs like Azure, Google Gemini Pro, and Luma. The tool also provides options for customizing website titles, subtitles, icons, and plugin buttons, and offers features like voice input, file uploading, real-time token count display, and more.

ai_quant_trade

The ai_quant_trade repository is a comprehensive platform for stock AI trading, offering learning, simulation, and live trading capabilities. It includes features such as factor mining, traditional strategies, machine learning, deep learning, reinforcement learning, graph networks, and high-frequency trading. The repository provides tools for monitoring stocks, stock recommendations, and deployment tools for live trading. It also features new functionalities like sentiment analysis using StructBERT, reinforcement learning for multi-stock trading with a 53% annual return, automatic factor mining with 5000 factors, customized stock monitoring software, and local deep reinforcement learning strategies.

SwanLab

SwanLab is an open-source, lightweight AI experiment tracking tool that provides a platform for tracking, comparing, and collaborating on experiments, aiming to accelerate the research and development efficiency of AI teams by 100 times. It offers a friendly API and a beautiful interface, combining hyperparameter tracking, metric recording, online collaboration, experiment link sharing, real-time message notifications, and more. With SwanLab, researchers can document their training experiences, seamlessly communicate and collaborate with collaborators, and machine learning engineers can develop models for production faster.

Qbot

Qbot is an AI-oriented automated quantitative investment platform that supports diverse machine learning modeling paradigms, including supervised learning, market dynamics modeling, and reinforcement learning. It provides a full closed-loop process from data acquisition, strategy development, backtesting, simulation trading to live trading. The platform emphasizes AI strategies such as machine learning, reinforcement learning, and deep learning, combined with multi-factor models to enhance returns. Users with some Python knowledge and trading experience can easily utilize the platform to address trading pain points and gaps in the market.

HivisionIDPhotos

HivisionIDPhoto is a practical algorithm for intelligent ID photo creation. It utilizes a comprehensive model workflow to recognize, cut out, and generate ID photos for various user photo scenarios. The tool offers lightweight cutting, standard ID photo generation based on different size specifications, six-inch layout photo generation, beauty enhancement (waiting), and intelligent outfit swapping (waiting). It aims to solve emergency ID photo creation issues.

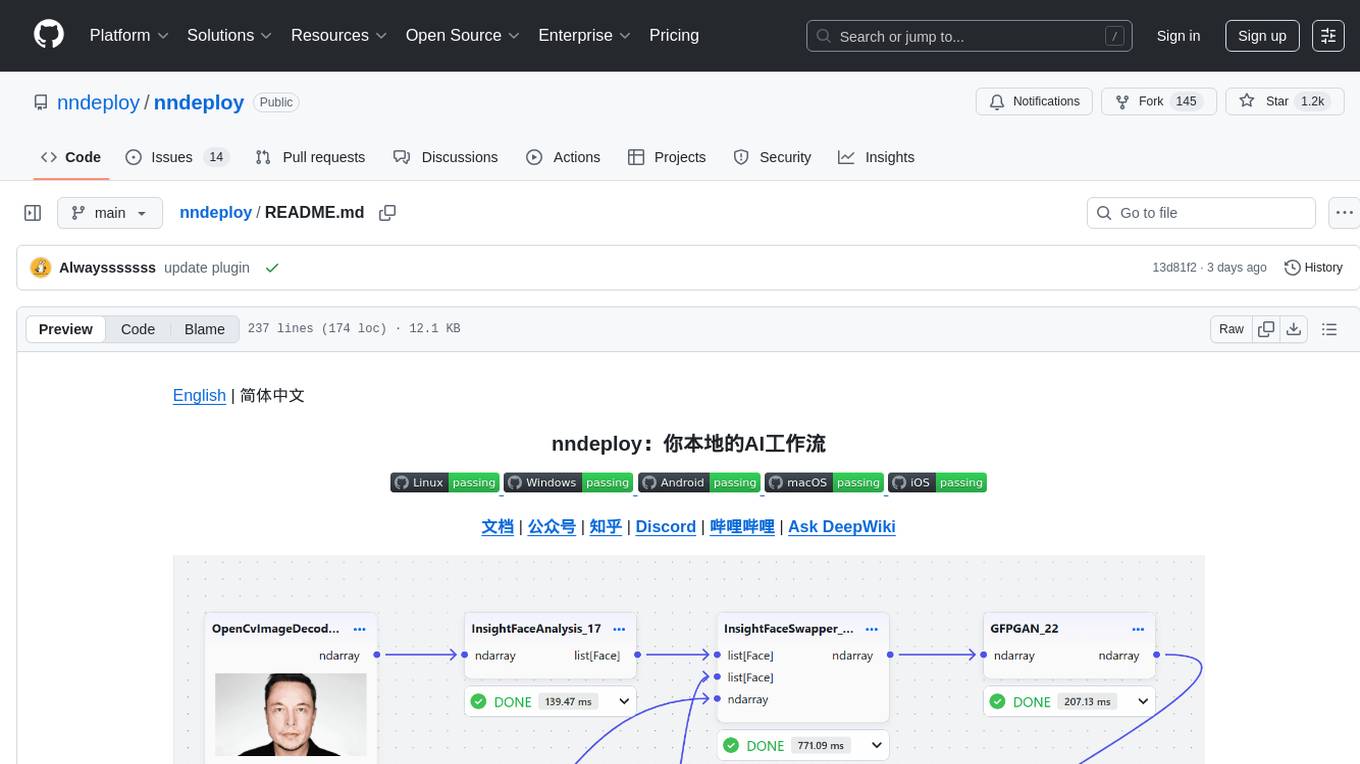

nndeploy

nndeploy is a tool that allows you to quickly build your visual AI workflow without the need for frontend technology. It provides ready-to-use algorithm nodes for non-AI programmers, including large language models, Stable Diffusion, object detection, image segmentation, etc. The workflow can be exported as a JSON configuration file, supporting Python/C++ API for direct loading and running, deployment on cloud servers, desktops, mobile devices, edge devices, and more. The framework includes mainstream high-performance inference engines and deep optimization strategies to help you transform your workflow into enterprise-level production applications.

k8m

k8m is an AI-driven Mini Kubernetes AI Dashboard lightweight console tool designed to simplify cluster management. It is built on AMIS and uses 'kom' as the Kubernetes API client. k8m has built-in Qwen2.5-Coder-7B model interaction capabilities and supports integration with your own private large models. Its key features include miniaturized design for easy deployment, user-friendly interface for intuitive operation, efficient performance with backend in Golang and frontend based on Baidu AMIS, pod file management for browsing, editing, uploading, downloading, and deleting files, pod runtime management for real-time log viewing, log downloading, and executing shell commands within pods, CRD management for automatic discovery and management of CRD resources, and intelligent translation and diagnosis based on ChatGPT for YAML property translation, Describe information interpretation, AI log diagnosis, and command recommendations, providing intelligent support for managing k8s. It is cross-platform compatible with Linux, macOS, and Windows, supporting multiple architectures like x86 and ARM for seamless operation. k8m's design philosophy is 'AI-driven, lightweight and efficient, simplifying complexity,' helping developers and operators quickly get started and easily manage Kubernetes clusters.

LLM-TPU

LLM-TPU project aims to deploy various open-source generative AI models on the BM1684X chip, with a focus on LLM. Models are converted to bmodel using TPU-MLIR compiler and deployed to PCIe or SoC environments using C++ code. The project has deployed various open-source models such as Baichuan2-7B, ChatGLM3-6B, CodeFuse-7B, DeepSeek-6.7B, Falcon-40B, Phi-3-mini-4k, Qwen-7B, Qwen-14B, Qwen-72B, Qwen1.5-0.5B, Qwen1.5-1.8B, Llama2-7B, Llama2-13B, LWM-Text-Chat, Mistral-7B-Instruct, Stable Diffusion, Stable Diffusion XL, WizardCoder-15B, Yi-6B-chat, Yi-34B-chat. Detailed model deployment information can be found in the 'models' subdirectory of the project. For demonstrations, users can follow the 'Quick Start' section. For inquiries about the chip, users can contact SOPHGO via the official website.

vscode-antigravity-cockpit

VS Code extension for monitoring Google Antigravity AI model quotas. It provides a webview dashboard, QuickPick mode, quota grouping, automatic grouping, renaming, card view, drag-and-drop sorting, status bar monitoring, threshold notifications, and privacy mode. Users can monitor quota status, remaining percentage, countdown, reset time, progress bar, and model capabilities. The extension supports local and authorized quota monitoring, multiple account authorization, and model wake-up scheduling. It also offers settings customization, user profile display, notifications, and group functionalities. Users can install the extension from the Open VSX Marketplace or via VSIX file. The source code can be built using Node.js and npm. The project is open-source under the MIT license.

KubeDoor

KubeDoor is a microservice resource management platform developed using Python and Vue, based on K8S admission control mechanism. It supports unified remote storage, monitoring, alerting, notification, and display for multiple K8S clusters. The platform focuses on resource analysis and control during daily peak hours of microservices, ensuring consistency between resource request rate and actual usage rate.

kirara-ai

Kirara AI is a chatbot that supports mainstream large language models and chat platforms. It provides features such as image sending, keyword-triggered replies, multi-account support, personality settings, and support for various chat platforms like QQ, Telegram, Discord, and WeChat. The tool also supports HTTP server for Web API, popular large models like OpenAI and DeepSeek, plugin mechanism, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, custom workflows, web management interface, and built-in Frpc intranet penetration.

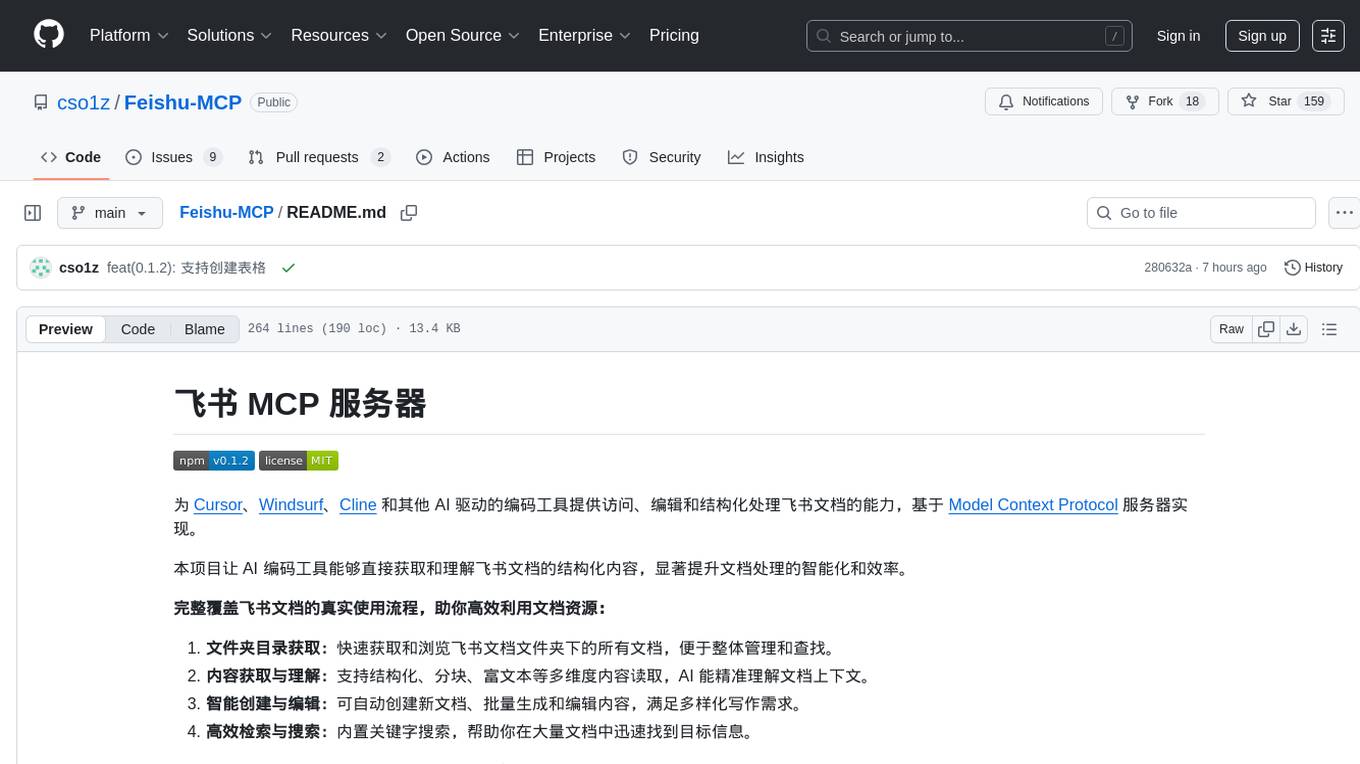

Feishu-MCP

Feishu-MCP is a server that provides access, editing, and structured processing capabilities for Feishu documents for Cursor, Windsurf, Cline, and other AI-driven coding tools, based on the Model Context Protocol server. This project enables AI coding tools to directly access and understand the structured content of Feishu documents, significantly improving the intelligence and efficiency of document processing. It covers the real usage process of Feishu documents, allowing efficient utilization of document resources, including folder directory retrieval, content retrieval and understanding, smart creation and editing, efficient search and retrieval, and more. It enhances the intelligent access, editing, and searching of Feishu documents in daily usage, improving content processing efficiency and experience.

For similar tasks

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc), wherever you currently run ML code (e.g. in notebooks, standalone applications or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.