KubeDoor

基于AI推荐+专家经验的K8S负载感知调度与容量管控系统

Stars: 272

KubeDoor is a microservice resource management platform developed using Python and Vue, based on K8S admission control mechanism. It supports unified remote storage, monitoring, alerting, notification, and display for multiple K8S clusters. The platform focuses on resource analysis and control during daily peak hours of microservices, ensuring consistency between resource request rate and actual usage rate.

README:

国内用户如果访问异常,可以访问Gitee同步站:https://gitee.com/starsl/KubeDoor

🌼花折 - KubeDoor 是一个使用Python + Vue开发,基于K8S准入控制机制的微服务资源管控平台,以及支持多K8S集群统一远程存储、监控、告警、通知、展示的一站式K8S监控平台,并且专注微服务每日高峰时段的资源视角,实现了微服务的资源分析统计与强管控,确保微服务资源的资源申请率和真实使用率一致。

- 🌊支持多K8S集群统一远程存储、监控、告警、通知、展示的一站式K8S监控方案。

- 📀Helm一键部署完成监控、采集、展示、告警、通知(多K8S集群监控从未如此简单✨)。

- 🚀基于VictoriaMetrics全套方案实现多K8S统一监控,统一告警规则管理,实现免配置完整指标采集。

- 🎨WEBUI集成了K8S节点监控看板与K8S资源监控看板,均支持在单一看板中查看各个K8S集群的资源情况。

- 📐集成了大量K8S资源,JVM资源与K8S节点的告警规则,并支持统一维护管理,支持对接企微,钉钉,飞书告警通知及灵活的@机制。

- 🎭实时监控管理页面,对K8S资源,节点资源统一监控展示的Grafana看板。

- ⏱️支持即时、定时、周期性任务执行微服务的扩缩容和重启操作。

- 🦄K8S微服务统一告警分析与处理页面,告警按天智能聚合,相同告警按日累计计数,每日告警清晰明了。

- 🕹️支持对POD进行隔离,删除,Java dump,jstack,jfr,JVM数据采集分析等操作,并通知到群。

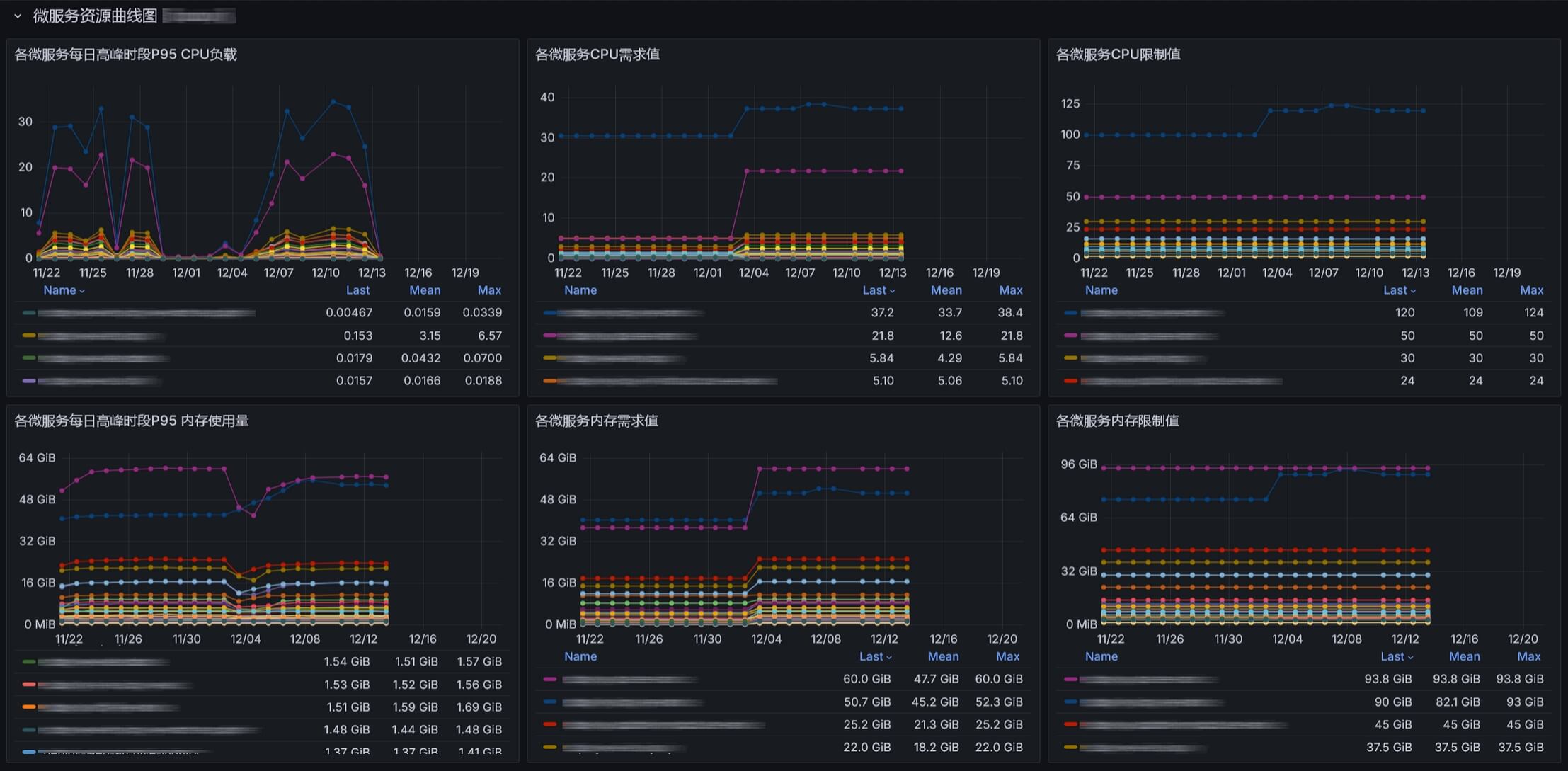

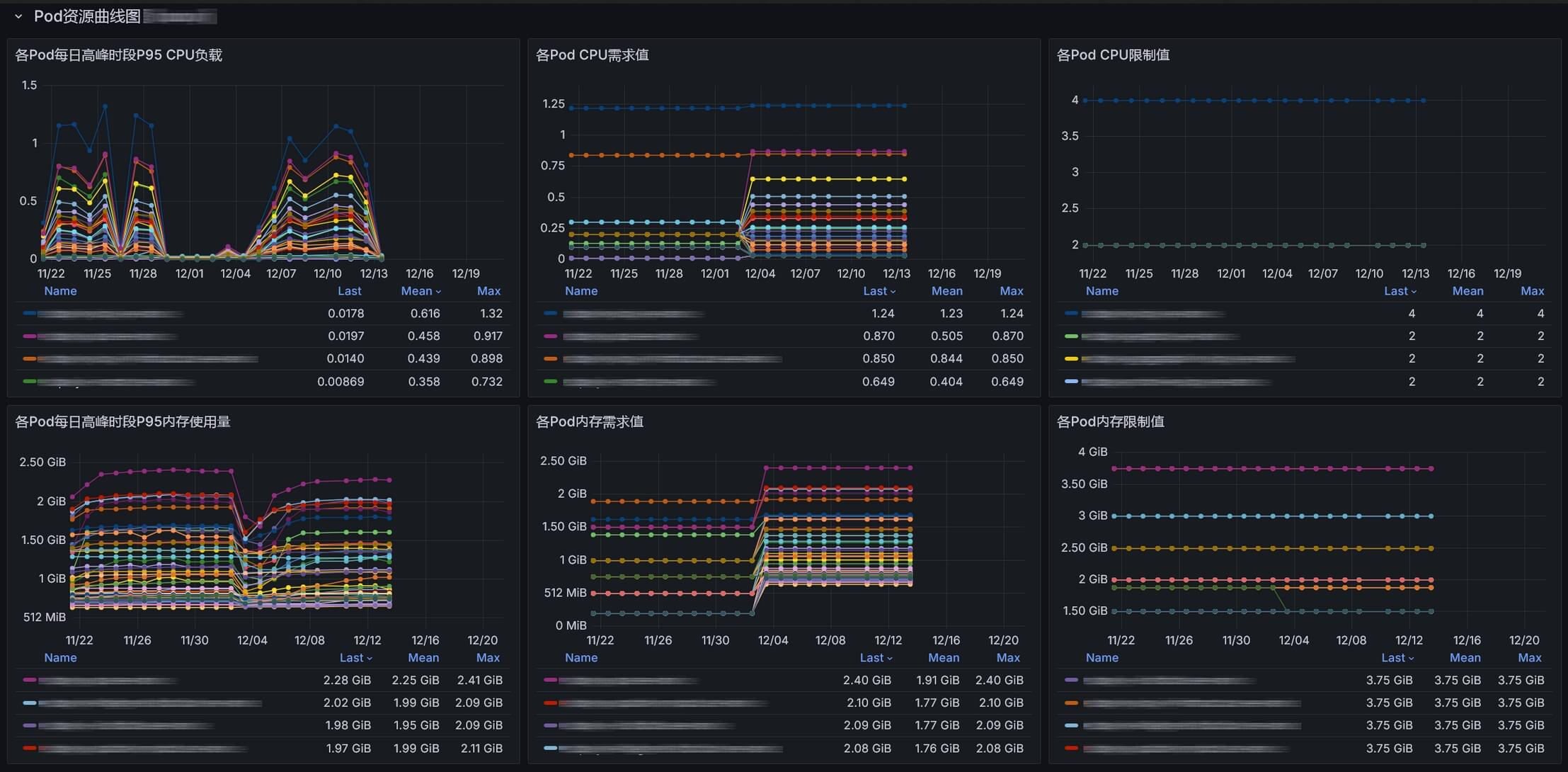

- ⚙️对微服务每日高峰期的P95资源展示,以及对Pod数、资源限制值的维护管理。

- 🎨基于日维度采集每日高峰时段P95的资源数据,可以很好的观察各微服务长期的资源变化情况,即使查看1年的数据也很流畅。

- 🏅高峰时段全局资源统计与各资源TOP10

- 🔎命名空间级别高峰时段P95资源使用量与资源消耗占整体资源的比例

- 🧿微服务级别高峰期整体资源与使用率分析

- 📈微服务与Pod级别的资源曲线图(需求值,限制值,使用值)

- ✨基于准入控制机制实现K8S微服务资源的真实使用率和资源申请需求值保持一致,具有非常重要的意义。

- 🌊K8S调度器通过真实的资源需求值就能够更精确地将Pod调度到合适的节点上,避免资源碎片,实现节点的资源均衡。

- ♻K8S自动扩缩容也依赖资源需求值来判断,真实的需求值可以更精准的触发扩缩容操作。

- 🛡K8S的保障服务质量(QoS机制)与需求值结合,真实需求值的Pod会被优先保留,保证关键服务的正常运行。

- ❤️Agent管理页面:更新,维护Agent状态,配置采集与管控。

- 🔒基于NGINX basic认证,支持LDAP,支持所有操作审计日志与通知。

- 📊所有看板基于Grafana创建,并整合到前端UI内,使得数据分析可以快速实现更优雅的展示。

### 【下载helm包】

wget https://StarsL.cn/kubedoor/kubedoor-1.0.0.tgz

tar -zxvf kubedoor-1.0.0.tgz

cd kubedoor

### 【master端安装】

# 编辑values-master.yaml文件,请仔细阅读注释,根据描述修改配置内容。

# try

helm install kubedoor . --namespace kubedoor --create-namespace --values values-master.yaml --dry-run --debug

# install

helm install kubedoor . --namespace kubedoor --create-namespace --values values-master.yaml

### 【agent端安装】

# 编辑values-agent.yaml文件,请仔细阅读注释,根据描述修改配置内容。

helm install kubedoor-agent . --namespace kubedoor --create-namespace --values values-agent.yaml --set tsdb.external_labels_value=kmw-prod-kunlun

-

使用K8S节点IP + kubedoor-web的NodePort访问,默认账号密码都是

kubedoor -

点击

agent管理,先开启自动采集,设置好高峰期时段,再执行采集,输入需要采集的历史数据时长,点击采集并更新,即可采集历史数据并更新高峰时段数据到管控表。默认会从Prometheus采集10天数据(建议采集1个月),并将10天内最大资源消耗日的数据写入到管控表,如果耗时较长,请等待采集完成或缩短采集时长。重复执行

采集并更新不会导致重复写入数据,请放心使用,每次采集后都会自动将10天内最大资源消耗日的数据写入到管控表。

- 📅KubeDoor 项目进度

- 🥈英文版发布

- 🏅微服务AI评分:根据资源使用情况,发现资源浪费的问题,结合AI缩容,降本增效,做AI综合评分,接入K8S异常AI分析能力。

- 🏅微服务AI缩容:基于微服务高峰期的资源信息,对接AI分析与专家经验,计算微服务Pod数是否合理,生成缩容指令与统计。

- 🏅根据K8S节点资源使用率做节点管控与调度分析

- ✅采集更多的微服务资源信息: QPS/JVM/GC

- ✅针对微服务Pod做精细化操作:隔离、删除、dump、jstack、jfr、jvm

- ✅K8S资源告警管理,按日智能聚合。

- ✅多K8S支持:在统一的WebUI对多K8S做管控和资源分析展示。

- ✅集成K8S实时监控能力,实现一键部署,整合K8S实时资源看板。

感谢如下优秀的项目,没有这些项目,不可能会有KubeDoor:

-

前后端技术栈

-

基础服务

-

特别鸣谢

- CassTime:KubeDoor的诞生离不开🦄开思的支持。

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for KubeDoor

Similar Open Source Tools

KubeDoor

KubeDoor is a microservice resource management platform developed using Python and Vue, based on K8S admission control mechanism. It supports unified remote storage, monitoring, alerting, notification, and display for multiple K8S clusters. The platform focuses on resource analysis and control during daily peak hours of microservices, ensuring consistency between resource request rate and actual usage rate.

easyaiot

EasyAIoT is an AI cloud platform designed to support camera integration, annotation, training, inference, data collection, analysis, alerts, recording, storage, and deployment. It aims to provide a zero-threshold AI experience for everyone, with a focus on cameras below a hundred levels. The platform consists of five core projects: WEB module for frontend management, DEVICE module for device management, VIDEO module for video processing, AI module for AI analysis, and TASK module for high-performance task execution. EasyAIoT combines Java, Python, and C++ to create a versatile and user-friendly AIoT platform.

get_jobs

Get Jobs is a tool designed to help users find and apply for job positions on various recruitment platforms in China. It features AI job matching, automatic cover letter generation, multi-platform job application, automated filtering of inactive HR and headhunter positions, real-time WeChat message notifications, blacklisted company updates, driver adaptation for Win11, centralized configuration, long-lasting cookie login, XPathHelper plugin, global logging, and more. The tool supports platforms like Boss直聘, 猎聘, 拉勾, 51job, and 智联招聘. Users can configure the tool for customized job searches and applications.

godoos

GodoOS is an efficient intranet office operating system that includes various office tools such as word/excel/ppt/pdf/internal chat/whiteboard/mind map, with native file storage support. The platform interface mimics the Windows style, making it easy to operate while maintaining low resource consumption and high performance. It automatically connects to intranet users without registration, enabling instant communication and file sharing. The flexible and highly configurable app store allows for unlimited expansion.

douyin-chatgpt-bot

Douyin ChatGPT Bot is an AI-driven system for automatic replies on Douyin, including comment and private message replies. It offers features such as comment filtering, customizable robot responses, and automated account management. The system aims to enhance user engagement and brand image on the Douyin platform, providing a seamless experience for managing interactions with followers and potential customers.

bk-lite

Blueking Lite is an AI First lightweight operation product with low deployment resource requirements, low usage costs, and progressive experience, providing essential tools for operation administrators.

all-in-rag

All-in-RAG is a comprehensive repository for all things related to Randomized Algorithms and Graphs. It provides a wide range of resources, including implementations of various randomized algorithms, graph data structures, and visualization tools. The repository aims to serve as a one-stop solution for researchers, students, and enthusiasts interested in exploring the intersection of randomized algorithms and graph theory. Whether you are looking to study theoretical concepts, implement algorithms in practice, or visualize graph structures, All-in-RAG has got you covered.

aituber-kit

AITuber-Kit is a tool that enables users to interact with AI characters, conduct AITuber live streams, and engage in external integration modes. Users can easily converse with AI characters using various LLM APIs, stream on YouTube with AI character reactions, and send messages to server apps via WebSocket. The tool provides settings for API keys, character configurations, voice synthesis engines, and more. It supports multiple languages and allows customization of VRM models and background images. AITuber-Kit follows the MIT license and offers guidelines for adding new languages to the project.

GoMaxAI-ChatGPT-Midjourney-Pro

GoMaxAI Pro is an AI-powered application for personal, team, and enterprise private operations. It supports various models like ChatGPT, Claude, Gemini, Kimi, Wenxin Yiyuan, Xunfei Xinghuo, Tsinghua Zhipu, Suno-v3.5, and Luma-video. The Pro version offers a new UI interface, member points system, management backend, homepage features, support for various content formats, AI video capabilities, SAAS multi-opening function, bug fixes, and more. It is built using web frontend with Vue3, mobile frontend with Uniapp, management frontend with Vue3, backend with Nodejs, and uses MySQL5.7(+) + Redis for data support. It can be deployed on Linux, Windows, or MacOS, with data storage options including local storage, Aliyun OSS, Tencent Cloud COS, and Chevereto image bed.

chatless

Chatless is a modern AI chat desktop application built on Tauri and Next.js. It supports multiple AI providers, can connect to local Ollama models, supports document parsing and knowledge base functions. All data is stored locally to protect user privacy. The application is lightweight, simple, starts quickly, and consumes minimal resources.

MaiMBot

MaiMBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, utilizes LLM for conversation abilities, MongoDB for data persistence, and NapCat for QQ protocol support. The bot features keyword-triggered proactive responses, dynamic prompt construction, support for images and message forwarding, typo generation, multiple replies, emotion-based emoji responses, daily schedule generation, user relationship management, knowledge base, and group impressions. Work-in-progress features include personality, group atmosphere, image handling, humor, meme functions, and Minecraft interactions. The tool is in active development with plans for GIF compatibility, mini-program link parsing, bug fixes, documentation improvements, and logic enhancements for emoji sending.

Snap-Solver

Snap-Solver is a revolutionary AI tool for online exam solving, designed for students, test-takers, and self-learners. With just a keystroke, it automatically captures any question on the screen, analyzes it using AI, and provides detailed answers. Whether it's complex math formulas, physics problems, coding issues, or challenges from other disciplines, Snap-Solver offers clear, accurate, and structured solutions to help you better understand and master the subject matter.

BiBi-Keyboard

BiBi-Keyboard is an AI-based intelligent voice input method that aims to make voice input more natural and efficient. It provides features such as voice recognition with simple and intuitive operations, multiple ASR engine support, AI text post-processing, floating ball input for cross-input method usage, AI editing panel with rich editing tools, Material3 design for modern interface style, and support for multiple languages. Users can adjust keyboard height, test input directly in the settings page, view recognition word count statistics, receive vibration feedback, and check for updates automatically. The tool requires Android 10.0 or higher, microphone permission for voice recognition, optional overlay permission for the floating ball feature, and optional accessibility permission for automatic text insertion.

Saber-Translator

Saber-Translator is your exclusive AI comic translation tool, designed to effortlessly eliminate language barriers and enjoy the original comic fun. It offers features like translating comic images/PDFs, intelligent bubble detection and text recognition, powerful AI translation engine with multiple service providers, highly customizable translation effects, real-time preview and convenient operations, efficient image management and download, model recording and recommendation, and support for language learning with dual prompt word outputs.

Con-Nav-Item

Con-Nav-Item is a modern personal navigation system designed for digital workers. It is not just a link bookmark but also an all-in-one workspace integrated with AI smart generation, multi-device synchronization, card-based management, and deep browser integration.

For similar tasks

KubeDoor

KubeDoor is a microservice resource management platform developed using Python and Vue, based on K8S admission control mechanism. It supports unified remote storage, monitoring, alerting, notification, and display for multiple K8S clusters. The platform focuses on resource analysis and control during daily peak hours of microservices, ensuring consistency between resource request rate and actual usage rate.

robusta

Robusta is a tool designed to enhance Prometheus notifications for Kubernetes environments. It offers features such as smart grouping to reduce notification spam, AI investigation for alert analysis, alert enrichment with additional data like pod logs, self-healing capabilities for defining auto-remediation rules, advanced routing options, problem detection without PromQL, change-tracking for Kubernetes resources, auto-resolve functionality, and integration with various external systems like Slack, Teams, and Jira. Users can utilize Robusta with or without Prometheus, and it can be installed alongside existing Prometheus setups or as part of an all-in-one Kubernetes observability stack.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.

For similar jobs

AirGo

AirGo is a front and rear end separation, multi user, multi protocol proxy service management system, simple and easy to use. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.