nndeploy

一款简单易用和高性能的AI部署框架 | An Easy-to-Use and High-Performance AI Deployment Framework

Stars: 1741

nndeploy is a tool that allows you to quickly build your visual AI workflow without the need for frontend technology. It provides ready-to-use algorithm nodes for non-AI programmers, including large language models, Stable Diffusion, object detection, image segmentation, etc. The workflow can be exported as a JSON configuration file, supporting Python/C++ API for direct loading and running, deployment on cloud servers, desktops, mobile devices, edge devices, and more. The framework includes mainstream high-performance inference engines and deep optimization strategies to help you transform your workflow into enterprise-level production applications.

README:

English | 简体中文

文档 | Ask DeepWiki | 微信 | Discord

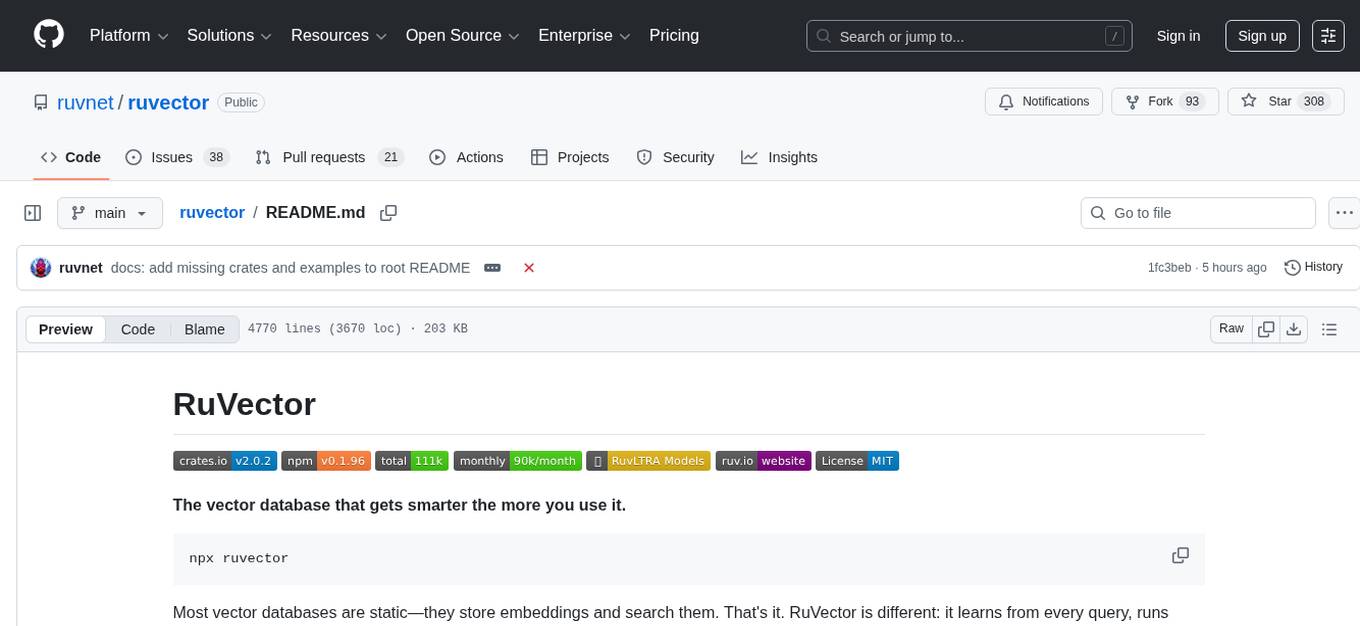

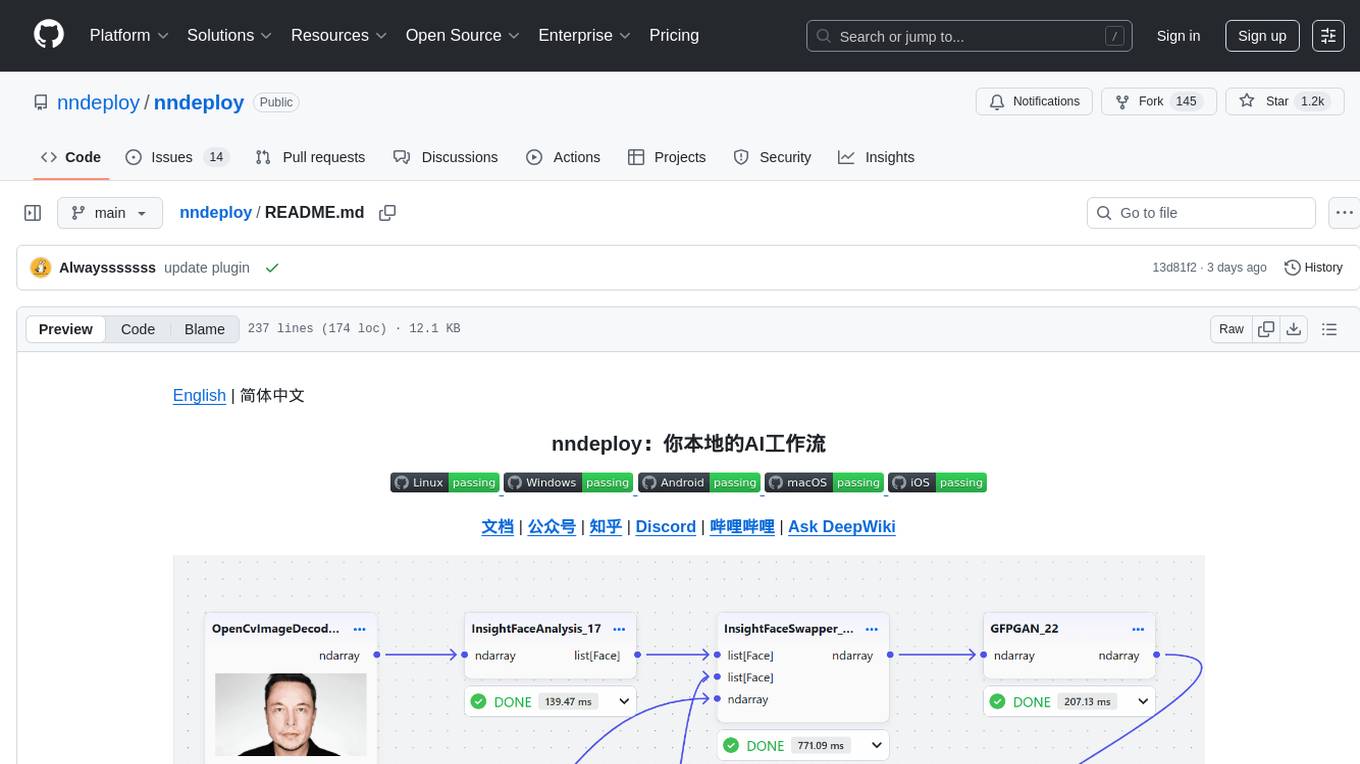

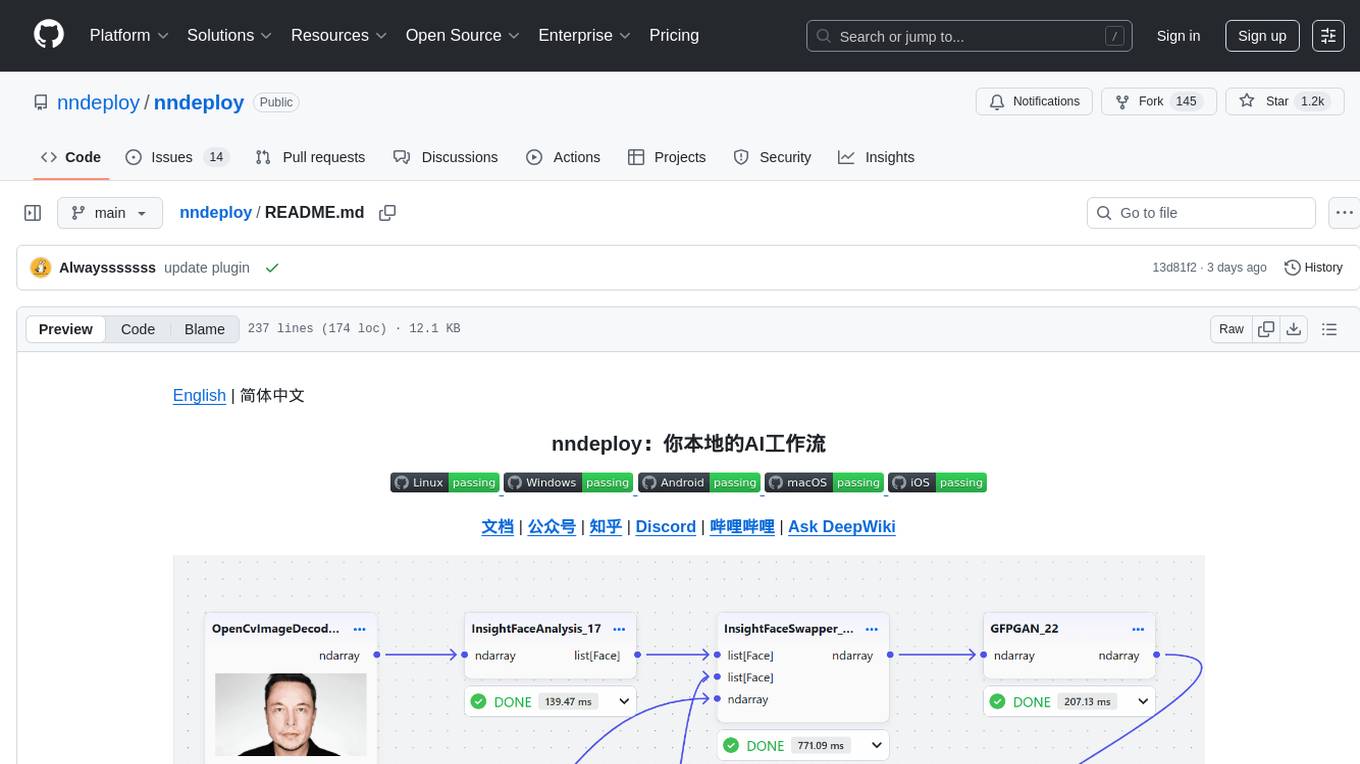

nndeploy 是一款简单易用和高性能的 AI 部署框架。解决的是 AI 算法在端侧部署的问题,包含桌面端(Windows、macOS)、移动端(Android、iOS)、边缘计算设备(NVIDIA Jetson、Ascend310B、RK 等)以及单机服务器(RTX 系列、T4、Ascend310P 等),基于可视化工作流和多端推理,可让 AI 算法在上述平台和硬件更高效、更高性能的落地。

针对10B以上的大模型(如大语言模型和 AIGC 生成模型),nndeploy 适合作为一款可视化工作流工具。

-

可视化工作流:拖拽节点即可部署 AI 算法,参数实时可调,效果一目了然。

-

自定义节点:支持 Python/C++自定义节点,无论是用 Python 实现预处理,还是用 C++/CUDA 编写高性能节点,均可无缝集成到与可视化工作流。

-

一键部署:工作流支持导出为 JSON,可通过 C++/Python API 调用,适用于 Linux、Windows、macOS、Android 等平台

桌面端搭建AI工作流 移动端部署

-

并行优化:支持串行、流水线并行、任务并行等执行模式

-

内存优化:零拷贝、内存池、内存复用等优化策略

-

高性能优化:内置 C++/CUDA/Ascend C/SIMD 等优化实现的节点

-

多端推理:一套工作流适配多端推理,深度集成 13 种主流推理框架,全面覆盖云端服务器、桌面应用、移动设备、边缘计算等全平台部署场景。框架支持灵活选择推理引擎,可按需编译减少依赖,同时支持接入自定义推理框架的独立运行模式。

推理框架 状态 ONNXRuntime ✅ TensorRT ✅ OpenVINO ✅ MNN ✅ TNN ✅ ncnn ✅ CoreML ✅ AscendCL ✅ RKNN ✅ SNPE ✅ TVM ✅ PyTorch ✅ nndeploy内部推理子模块 ✅

已部署多类 AI 模型,并开发 100+可视化节点,实现开箱即用体验。随着部署节点数量的增加,节点库的复用性不断提升,这将显著降低后续算法部署的开发成本。我们还将持续部署更多具有实用价值的算法。

| Application Scenario | Available Models | Remarks |

|---|---|---|

| Large Language Models | QWen-2.5, QWen-3 | Support small B models |

| Image/Video Generation | Stable Diffusion 1.5, Stable Diffusion XL, Stable Diffusion 3, HunyuanDiT, etc. | Support text-to-image, image-to-image, image inpainting, based on diffusers |

| Face Swapping | deep-live-cam | |

| OCR | Paddle OCR | |

| Object Detection | YOLOv5, YOLOv6, YOLOv7, YOLOv8, YOLOv11, YOLOx | |

| Object Tracking | FairMot | |

| Image Segmentation | RBMGv1.4, PPMatting, Segment Anything | |

| Classification | ResNet, MobileNet, EfficientNet, PPLcNet, GhostNet, ShuffleNet, SqueezeNet | |

| API Services | OPENAI, DeepSeek, Moonshot | Support LLM and AIGC services |

更多查看已部署模型列表详解

-

步骤一:安装

pip install --upgrade nndeploy

-

步骤二:启动可视化界面

# 方式一:命令行 nndeploy-app --port 8000 # 方式二:代码启动 cd path/to/nndeploy python app.py --port 8000

启动成功后,打开 http://localhost:8000 即可访问工作流编辑器。在这里,你可以拖拽节点、调整参数、实时预览效果,所见即所得。

-

步骤三:保存并加载运行

在可视化界面中搭建、调试完成后,点击保存,工作流会导出 JSON 文件,文件中封装了所有的处理流程。你可以用以下两种方式在生产环境中运行:

-

方式一:命令行运行

用于调试

# Python CLI nndeploy-run-json --json_file path/to/workflow.json # C++ CLI nndeploy_demo_run_json --json_file path/to/workflow.json

-

方式 2:在 Python/C++ 代码中加载运行

可以将 JSON 文件集成到你现有的 Python 或 C++ 项目中,以下是一个加载和运行 LLM 工作流的示例代码:

- Python API 加载运行 LLM 工作流

graph = nndeploy.dag.Graph("") graph.remove_in_out_node() graph.load_file("path/to/llm_workflow.json") graph.init() input = graph.get_input(0) text = nndeploy.tokenizer.TokenizerText() text.texts_ = [ "<|im_start|>user\nPlease introduce NBA superstar Michael Jordan<|im_end|>\n<|im_start|>assistant\n" ] input.set(text) status = graph.run() output = graph.get_output(0) result = output.get_graph_output() graph.deinit()

- C++ API 加载运行 LLM 工作流

std::shared_ptr<dag::Graph> graph = std::make_shared<dag::Graph>(""); base::Status status = graph->loadFile("path/to/llm_workflow.json"); graph->removeInOutNode(); status = graph->init(); dag::Edge* input = graph->getInput(0); tokenizer::TokenizerText* text = new tokenizer::TokenizerText(); text->texts_ = { "<|im_start|>user\nPlease introduce NBA superstar Michael Jordan<|im_end|>\n<|im_start|>assistant\n"}; input->set(text, false); status = graph->run(); dag::Edge* output = graph->getOutput(0); tokenizer::TokenizerText* result = output->getGraphOutput<tokenizer::TokenizerText>(); status = graph->deinit();

- Python API 加载运行 LLM 工作流

-

要求 Python 3.10+,默认包含 ONNXRuntime、MNN,更多推理后端请采用开发者模式。

测试环境:Ubuntu 22.04,i7-12700,RTX3060

-

流水线并行加速。以 YOLOv11s 端到端工作流总耗时,串行 vs 流水线并行

运行方式\推理引擎 ONNXRuntime OpenVINO TensorRT 串行 54.803 ms 34.139 ms 13.213 ms 流水线并行 47.283 ms 29.666 ms 5.681 ms 性能提升 13.7% 13.1% 57% -

任务并行加速。组合任务(分割 RMBGv1.4+检测 YOLOv11s+分类 ResNet50)的端到端总耗时,串行 vs 任务并行

运行方式\推理引擎 ONNXRuntime OpenVINO TensorRT 串行 654.315 ms 489.934 ms 59.140 ms 任务并行 602.104 ms 435.181 ms 51.883 ms 性能提升 7.98% 11.2% 12.2%

-

如果你热爱开源、喜欢折腾,不论是出于学习目的,亦或是有更好的想法,欢迎加入我们

-

微信:Always031856(欢迎加好友,进群交流,备注:nndeploy_姓名)

-

感谢以下项目:TNN、FastDeploy、opencv、CGraph、tvm、mmdeploy、FlyCV、oneflow、flowgram.ai、deep-live-cam。

-

感谢HelloGithub推荐

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for nndeploy

Similar Open Source Tools

nndeploy

nndeploy is a tool that allows you to quickly build your visual AI workflow without the need for frontend technology. It provides ready-to-use algorithm nodes for non-AI programmers, including large language models, Stable Diffusion, object detection, image segmentation, etc. The workflow can be exported as a JSON configuration file, supporting Python/C++ API for direct loading and running, deployment on cloud servers, desktops, mobile devices, edge devices, and more. The framework includes mainstream high-performance inference engines and deep optimization strategies to help you transform your workflow into enterprise-level production applications.

ppt-master

PPT Master is an AI-driven intelligent visual content generation system that converts source documents into high-quality SVG content through multi-role collaboration, supporting various formats such as presentation slides, social media posts, and marketing posters. It provides tools for PDF conversion, SVG post-processing, and PPTX export. Users can interact with AI editors to create content by describing their ideas. The system offers various AI roles for different tasks and provides a comprehensive documentation guide for workflow, design guidelines, canvas formats, image embedding best practices, chart templates, quick references, role definitions, tool usage instructions, example projects, and project workspace structure. Users can contribute to the project by enhancing design templates, chart components, documentation, bug reports, and feature suggestions. The project is open-source under the MIT License.

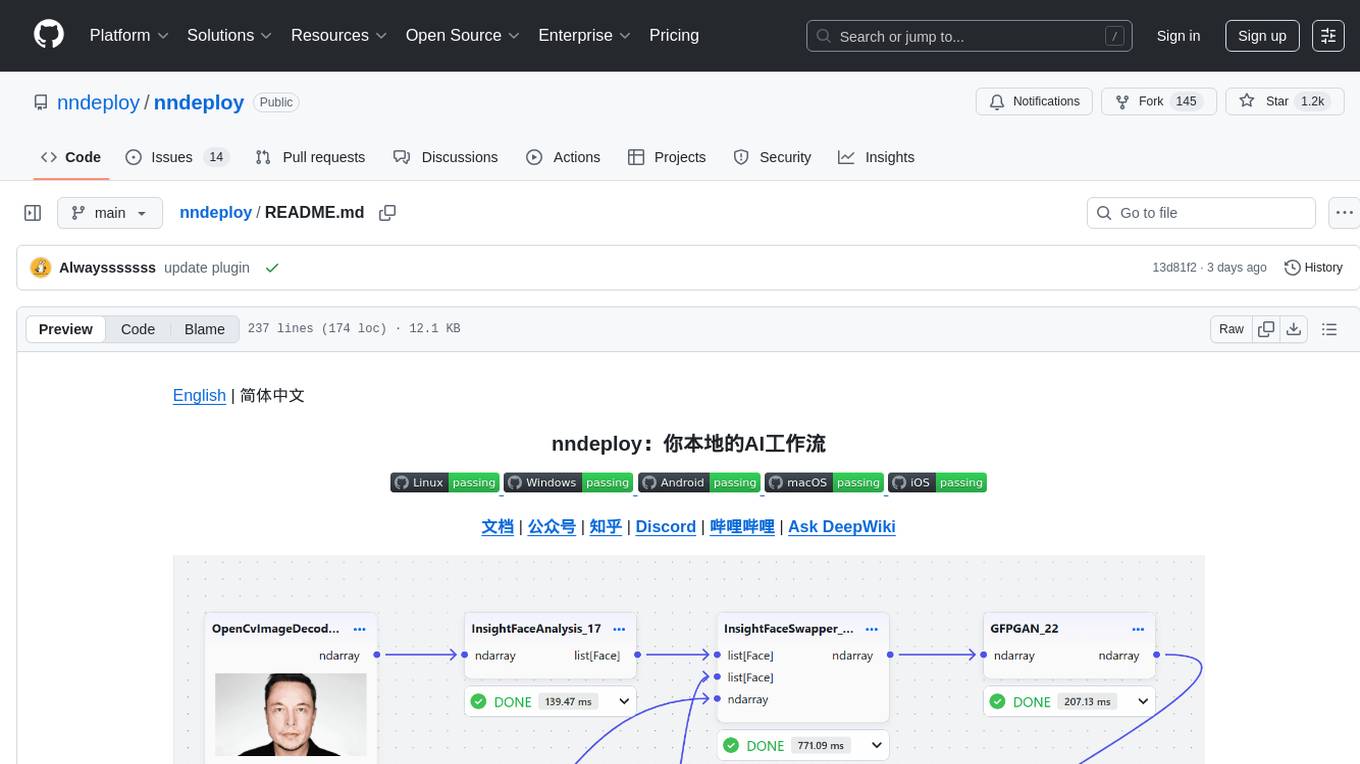

Speech-AI-Forge

Speech-AI-Forge is a project developed around TTS generation models, implementing an API Server and a WebUI based on Gradio. The project offers various ways to experience and deploy Speech-AI-Forge, including online experience on HuggingFace Spaces, one-click launch on Colab, container deployment with Docker, and local deployment. The WebUI features include TTS model functionality, speaker switch for changing voices, style control, long text support with automatic text segmentation, refiner for ChatTTS native text refinement, various tools for voice control and enhancement, support for multiple TTS models, SSML synthesis control, podcast creation tools, voice creation, voice testing, ASR tools, and post-processing tools. The API Server can be launched separately for higher API throughput. The project roadmap includes support for various TTS models, ASR models, voice clone models, and enhancer models. Model downloads can be manually initiated using provided scripts. The project aims to provide inference services and may include training-related functionalities in the future.

Qbot

Qbot is an AI-oriented automated quantitative investment platform that supports diverse machine learning modeling paradigms, including supervised learning, market dynamics modeling, and reinforcement learning. It provides a full closed-loop process from data acquisition, strategy development, backtesting, simulation trading to live trading. The platform emphasizes AI strategies such as machine learning, reinforcement learning, and deep learning, combined with multi-factor models to enhance returns. Users with some Python knowledge and trading experience can easily utilize the platform to address trading pain points and gaps in the market.

hello-agents

Hello-Agents is a comprehensive tutorial on building intelligent agent systems, covering both theoretical foundations and practical applications. The tutorial aims to guide users in understanding and building AI-native agents, diving deep into core principles, architectures, and paradigms of intelligent agents. Users will learn to develop their own multi-agent applications from scratch, gaining hands-on experience with popular low-code platforms and agent frameworks. The tutorial also covers advanced topics such as memory systems, context engineering, communication protocols, and model training. By the end of the tutorial, users will have the skills to develop real-world projects like intelligent travel assistants and cyber towns.

prisma-ai

Prisma-AI is an open-source tool designed to assist users in their job search process by addressing common challenges such as lack of project highlights, mismatched resumes, difficulty in learning, and lack of answers in interview experiences. The tool utilizes AI to analyze user experiences, generate actionable project highlights, customize resumes for specific job positions, provide study materials for efficient learning, and offer structured interview answers. It also features a user-friendly interface for easy deployment and supports continuous improvement through user feedback and collaboration.

AI0x0.com

AI 0x0 is a versatile AI query generation desktop floating assistant application that supports MacOS and Windows. It allows users to utilize AI capabilities in any desktop software to query and generate text, images, audio, and video data, helping them work more efficiently. The application features a dynamic desktop floating ball, floating dialogue bubbles, customizable presets, conversation bookmarking, preset packages, network acceleration, query mode, input mode, mouse navigation, deep customization of ChatGPT Next Web, support for full-format libraries, online search, voice broadcasting, voice recognition, voice assistant, application plugins, multi-model support, online text and image generation, image recognition, frosted glass interface, light and dark theme adaptation for each language model, and free access to all language models except Chat0x0 with a key.

BlueLM

BlueLM is a large-scale pre-trained language model developed by vivo AI Global Research Institute, featuring 7B base and chat models. It includes high-quality training data with a token scale of 26 trillion, supporting both Chinese and English languages. BlueLM-7B-Chat excels in C-Eval and CMMLU evaluations, providing strong competition among open-source models of similar size. The models support 32K long texts for better context understanding while maintaining base capabilities. BlueLM welcomes developers for academic research and commercial applications.

k8m

k8m is an AI-driven Mini Kubernetes AI Dashboard lightweight console tool designed to simplify cluster management. It is built on AMIS and uses 'kom' as the Kubernetes API client. k8m has built-in Qwen2.5-Coder-7B model interaction capabilities and supports integration with your own private large models. Its key features include miniaturized design for easy deployment, user-friendly interface for intuitive operation, efficient performance with backend in Golang and frontend based on Baidu AMIS, pod file management for browsing, editing, uploading, downloading, and deleting files, pod runtime management for real-time log viewing, log downloading, and executing shell commands within pods, CRD management for automatic discovery and management of CRD resources, and intelligent translation and diagnosis based on ChatGPT for YAML property translation, Describe information interpretation, AI log diagnosis, and command recommendations, providing intelligent support for managing k8s. It is cross-platform compatible with Linux, macOS, and Windows, supporting multiple architectures like x86 and ARM for seamless operation. k8m's design philosophy is 'AI-driven, lightweight and efficient, simplifying complexity,' helping developers and operators quickly get started and easily manage Kubernetes clusters.

FaceAISDK_Android

FaceAI SDK is an on-device offline face detection, recognition, liveness detection, anti-spoofing, and 1:N/M:N face search SDK. It enables quick integration to achieve on-device face recognition, face search, and other functions. The SDK performs all functions offline on the device without the need for internet connection, ensuring privacy and security. It supports various actions for liveness detection, custom camera management, and clear imaging even in challenging lighting conditions.

Native-LLM-for-Android

This repository provides a demonstration of running a native Large Language Model (LLM) on Android devices. It supports various models such as Qwen2.5-Instruct, MiniCPM-DPO/SFT, Yuan2.0, Gemma2-it, StableLM2-Chat/Zephyr, and Phi3.5-mini-instruct. The demo models are optimized for extreme execution speed after being converted from HuggingFace or ModelScope. Users can download the demo models from the provided drive link, place them in the assets folder, and follow specific instructions for decompression and model export. The repository also includes information on quantization methods and performance benchmarks for different models on various devices.

kirara-ai

Kirara AI is a chatbot that supports mainstream large language models and chat platforms. It provides features such as image sending, keyword-triggered replies, multi-account support, personality settings, and support for various chat platforms like QQ, Telegram, Discord, and WeChat. The tool also supports HTTP server for Web API, popular large models like OpenAI and DeepSeek, plugin mechanism, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, custom workflows, web management interface, and built-in Frpc intranet penetration.

ComfyUI-OllamaGemini

ComfyUI GeminiOllama Extension integrates Google's Gemini API, OpenAI (ChatGPT), Anthropic's Claude, Ollama, Qwen, and image processing tools into ComfyUI for leveraging powerful models and features directly within workflows. Features include multiple AI API integrations, advanced prompt engineering, Gemini image generation, background removal, SVG conversion, FLUX resolutions, ComfyUI Styler, smart prompt generator, and more. The extension offers comprehensive API integration, advanced prompt engineering with researched templates, high-quality tools like Smart Prompt Generator and BRIA RMBG, and supports video & audio processing. It provides a single interface to access powerful AI models, transform prompts into detailed instructions, and use various tools for image processing, styling, and content generation.

md

The WeChat Markdown editor automatically renders Markdown documents as WeChat articles, eliminating the need to worry about WeChat content layout! As long as you know basic Markdown syntax (now with AI, you don't even need to know Markdown), you can create a simple and elegant WeChat article. The editor supports all basic Markdown syntax, mathematical formulas, rendering of Mermaid charts, GFM warning blocks, PlantUML rendering support, ruby annotation extension support, rich code block highlighting themes, custom theme colors and CSS styles, multiple image upload functionality with customizable configuration of image hosting services, convenient file import/export functionality, built-in local content management with automatic draft saving, integration of mainstream AI models (such as DeepSeek, OpenAI, Tongyi Qianwen, Tencent Hanyuan, Volcano Ark, etc.) to assist content creation.

Feishu-MCP

Feishu-MCP is a server that provides access, editing, and structured processing capabilities for Feishu documents for Cursor, Windsurf, Cline, and other AI-driven coding tools, based on the Model Context Protocol server. This project enables AI coding tools to directly access and understand the structured content of Feishu documents, significantly improving the intelligence and efficiency of document processing. It covers the real usage process of Feishu documents, allowing efficient utilization of document resources, including folder directory retrieval, content retrieval and understanding, smart creation and editing, efficient search and retrieval, and more. It enhances the intelligent access, editing, and searching of Feishu documents in daily usage, improving content processing efficiency and experience.

For similar tasks

nndeploy

nndeploy is a tool that allows you to quickly build your visual AI workflow without the need for frontend technology. It provides ready-to-use algorithm nodes for non-AI programmers, including large language models, Stable Diffusion, object detection, image segmentation, etc. The workflow can be exported as a JSON configuration file, supporting Python/C++ API for direct loading and running, deployment on cloud servers, desktops, mobile devices, edge devices, and more. The framework includes mainstream high-performance inference engines and deep optimization strategies to help you transform your workflow into enterprise-level production applications.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.