torra-community

Open-source visual platform for AI agent and workflow development. Inspired by Coze & Langflow, built with Nuxt4, VueFlow, TypeScript, FeathersJS & Shadcn. Drag, connect, deploy — self-hosted and fully extensible by design.

Stars: 116

Torra Community Edition is a modern visual editor for AI workflows and agents, built on Nuxt 4. It offers a lightweight but production-ready architecture: VueFlow + Tailwind v4 + shadcn/ui on the frontend, FeathersJS on the backend, and a built-in LangChain.js runtime. It supports multiple databases (SQLite/MySQL/MongoDB) and local ↔ cloud hot switching. Features include visual workflow editing, a modern UI, native LangChain.js integration, pluggable storage, and a full-stack TypeScript implementation. It is aimed at enterprises that want an easy-to-deploy, scalable way to run AI workflows.

README:

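A modern visual editor for AI workflows and agents, built on Nuxt 4.

Torra Community Edition is a second option besides n8n: it borrows its product shape from Coze and Langflow, but is implemented end to end in TypeScript, Vue 3, and Nuxt 4. It offers a lightweight yet production-ready architecture: VueFlow + Tailwind v4 + shadcn/ui on the frontend, FeathersJS on the backend, and a built-in LangChain.js runtime. It is self-hosted by default, which makes it easy for enterprises to deploy and extend.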

- Nuxt 4 + Vue 3 + TypeScript stack

- Visual orchestration with VueFlow; modern UI with Tailwind CSS v4 + shadcn/ui

- Full-stack TypeScript: FeathersJS backend + LangChain.js runtime

- Multiple databases: SQLite / MySQL / MongoDB, with local ↔ cloud hot switching
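
The database options above suggest a storage layer that is selected by configuration. As a rough sketch only (the `StorageConfig` type, the `resolveStorage` function, and the connection strings are hypothetical and not taken from Torra's codebase), switching between a local SQLite file and a remote instance could be modeled as a tagged union:

```typescript
// Hypothetical config shape; Torra's real settings may look different.
type StorageConfig =
  | { kind: "sqlite"; file: string }
  | { kind: "mysql"; url: string }
  | { kind: "mongodb"; url: string };

interface ResolvedStorage {
  driver: StorageConfig["kind"];
  target: string;
  remote: boolean;
}

// Map a config entry to the driver name and connection target.
function resolveStorage(config: StorageConfig): ResolvedStorage {
  switch (config.kind) {
    case "sqlite":
      return { driver: "sqlite", target: config.file, remote: false };
    case "mysql":
    case "mongodb":
      return { driver: config.kind, target: config.url, remote: true };
  }
}

// Example values are placeholders, not real endpoints.
const local = resolveStorage({ kind: "sqlite", file: "./data/torra.db" });
const cloud = resolveStorage({ kind: "mongodb", url: "mongodb://db.example.com:27017/torra" });
console.log(local.driver, local.remote);
console.log(cloud.driver, cloud.remote);
```

Because every variant is handled in the `switch`, adding a new database kind to `StorageConfig` makes the compiler flag `resolveStorage` until the new case is covered.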

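Preview: we will ship a major version update in mid-October.

Want to try it early? Visit https://www.torra.cloud now, or join our user group, where we post iteration news and previews first.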

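- 🚀 Visual workflow editor (VueFlow)

- 🎨 Modern UI (Tailwind CSS v4 + shadcn/ui)

- 🤖 Native LangChain.js integration

- 🗂 Pluggable storage: local SQLite by default, optional MySQL / MongoDB, one-click migration to a remote instance

- 🔄 Local ↔ cloud hot switching with zero downtime

- 🪝 FeathersJS hooks across the full request path (all CRUD operations)

- 🧠 Coverage of mainstream large models: OpenAI, Anthropic, Google, DeepSeek, Qwen, …

- 📦 Connectors for MySQL, PostgreSQL, Redis, Elasticsearch, files, URLs, and APIs

- 🖼 Multimodal: text · image · audio · video

- 🧪 Built-in Playground and one-click API publishing

- 📊 Usage logs and billing

- 🌍 Multilingual interface (English / 中文 / 日本語 / …)

- ☁️ SaaS-ready: the cloud edition is built on Parse Platform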
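
The hook item above refers to the FeathersJS pattern of running functions before and after a service method. The sketch below imitates that pattern in plain TypeScript without importing FeathersJS. `HookContext`, `withHooks`, and the in-memory `create` method are simplified stand-ins for illustration, not Torra's or Feathers' actual types, and real Feathers hooks are asynchronous.

```typescript
// Simplified stand-in for a hook context; not the FeathersJS type.
interface HookContext {
  method: "create";
  data: Record<string, unknown>;
  result?: Record<string, unknown>;
}

type Hook = (ctx: HookContext) => HookContext;

// Wrap a service method so "before" hooks run first and "after" hooks run last.
function withHooks(
  method: (data: Record<string, unknown>) => Record<string, unknown>,
  before: Hook[],
  after: Hook[],
) {
  return (data: Record<string, unknown>): Record<string, unknown> => {
    let ctx: HookContext = { method: "create", data };
    for (const hook of before) ctx = hook(ctx);
    ctx.result = method(ctx.data);
    for (const hook of after) ctx = hook(ctx);
    return ctx.result ?? {};
  };
}

const store: Record<string, unknown>[] = [];
const auditLog: string[] = [];

const create = withHooks(
  (data) => {
    store.push(data);
    return { id: store.length, ...data };
  },
  // Before hook: stamp the record with an owner (hypothetical field).
  [(ctx) => ({ ...ctx, data: { ...ctx.data, owner: "demo-user" } })],
  // After hook: record an audit entry once the write has succeeded.
  [(ctx) => {
    auditLog.push(`${ctx.method}:${String(ctx.result?.id)}`);
    return ctx;
  }],
);

const saved = create({ name: "My workflow" });
console.log(saved, auditLog);
```

Keeping cross-cutting concerns such as ownership stamping and auditing in hooks means the service method itself only has to deal with storage.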

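Core capabilities are on par with Langflow / Dify / Coze (the commonly used nodes are all covered), with a richer and still growing set of extension plugins on top.

**Modalities:** text • image • audio • video. **Model ecosystem:** OpenAI, Anthropic, Google, DeepSeek, Qwen, Ollama, and others.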

| Category | Count / Status | Highlights |
|---|---|---|
| Input | 3 | Chat Input, Text Input, API Input |
| Output | 2 | Chat Output, Text Output |
| Prompt | 1 | Prompt Builder |
| Image | 7 | GPT Image, Stable Diffusion, Flux, Runway, Seedream, Qwen, DALL·E 3 |
| Image Recognition | 5 | OpenAI Vision, Gemini, Qwen, Doubao, Zhipu |
| Speech | 6 | OpenAI TTS/STT, ElevenLabs, Minimax (more engines planned) |
| Video | 9 | Kling, Sora, Pika, Runway, Vidu, Google Veo, Dreamina, Alibaba Wan, Hailuo |
| Data Sources | 9+ | API Request/Tool, File (multiple/single), Directory, MongoDB, SQL, URL, Webhook |
| Processing | 11+ | Message ↔ Data, Filter, Merge, JSON, Structured ↔ Data, Save to OSS/Cloud |
| Models | 9+ | OpenAI, Anthropic, Google, xAI, DeepSeek, Tongyi, Zhipu, Doubao, Ollama |
| Vector Store | 1 | Milvus |
| Embeddings | 1 | OpenAI Embedding |
| Memory | 1 | Upstash Redis Memory |
| Agent | 1 | Agent node |
| Logic | 5 | If-Else, Loop, Listen, Notify, Pass |
| Tools | 3 | Web Search, Tavily AI Search, Timezone |
| Utilities | 12+ | Various IDs, Session/User/Workflow/Trace, Tips, History/Storage, Duration, List |
| MCP | 3 | HTTP, SSE, stdio |
| Subflow | 1 | Workflow (reusable flow) |
| Plugins | Growing | Markdown→WeChat, Simple Browser, Page Capture, File Marker; more coming soon |
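
A visual workflow assembled from the node types above is, at its core, a directed graph. The sketch below is an assumption about how such a graph could be ordered for execution, using Kahn's topological sort. The `FlowNode` and `FlowEdge` shapes and the node ids are hypothetical and do not reflect Torra's actual schema or scheduler.

```typescript
interface FlowNode { id: string; type: string }
interface FlowEdge { source: string; target: string }

// Kahn's algorithm: repeatedly emit nodes with no unprocessed incoming edges.
function executionOrder(nodes: FlowNode[], edges: FlowEdge[]): string[] {
  const indegree = new Map<string, number>();
  const outgoing = new Map<string, string[]>();
  for (const n of nodes) {
    indegree.set(n.id, 0);
    outgoing.set(n.id, []);
  }
  for (const e of edges) {
    indegree.set(e.target, (indegree.get(e.target) ?? 0) + 1);
    outgoing.get(e.source)?.push(e.target);
  }
  const ready = nodes.filter((n) => indegree.get(n.id) === 0).map((n) => n.id);
  const order: string[] = [];
  while (ready.length > 0) {
    const id = ready.shift() as string;
    order.push(id);
    for (const next of outgoing.get(id) ?? []) {
      const remaining = (indegree.get(next) ?? 0) - 1;
      indegree.set(next, remaining);
      if (remaining === 0) ready.push(next);
    }
  }
  // If some nodes were never emitted, they sit on a cycle.
  if (order.length !== nodes.length) throw new Error("workflow contains a cycle");
  return order;
}

// Nodes are listed out of order on purpose; the edges define the real order.
const nodes: FlowNode[] = [
  { id: "out", type: "Chat Output" },
  { id: "llm", type: "Model" },
  { id: "in", type: "Chat Input" },
  { id: "prompt", type: "Prompt Builder" },
];
const edges: FlowEdge[] = [
  { source: "in", target: "prompt" },
  { source: "prompt", target: "llm" },
  { source: "llm", target: "out" },
];
console.log(executionOrder(nodes, edges).join(" -> "));
```

Nodes such as Loop or If-Else would need more than a static ordering, so a real runtime is likely to be more involved than this.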

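We will keep closing feature gaps with Langflow / Dify / Coze while adding more value-added plugins and vendor adapters.

Torra Cloud is the multi-tenant SaaS edition built on Parse Platform, for teams and organizations that need something ready to use yet under enterprise control: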

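- Multi-tenant organizations / projects with tenant-level data isolation

- RBAC and SSO (OAuth / OIDC); API keys (BYOK supported)

- Plans, quotas, and usage metering: limits by number of agents, runs, tokens, storage, webhooks, and other dimensions

- Billing and invoicing (integrated with Stripe); usage-based rate limiting

- Audit and compliance: request/response redaction, export, and retention policies

- Webhooks / event streams with CI/CD and MLOps support; LiveQuery real-time subscriptions

- Zero-downtime migration and rollback; blue-green releases

- Observability: workflow-level traces, metrics, and structured logs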
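
The plan and quota item above meters usage along several dimensions. As a minimal illustration, assuming a hypothetical `Usage` record and made-up limits (not Torra Cloud's actual plans), a quota check could compare current usage against a plan's limit for each dimension:

```typescript
// Dimensions follow the list above; the numbers are invented for the example.
type Dimension = "agents" | "runs" | "tokens" | "storageMb" | "webhooks";
type Usage = Record<Dimension, number>;

// Return every dimension whose usage has reached or passed the plan limit.
function exceededQuotas(usage: Usage, limits: Usage): Dimension[] {
  return (Object.keys(limits) as Dimension[]).filter(
    (dim) => usage[dim] >= limits[dim],
  );
}

const freePlan: Usage = { agents: 3, runs: 1000, tokens: 500_000, storageMb: 512, webhooks: 5 };
const current: Usage = { agents: 3, runs: 420, tokens: 612_345, storageMb: 100, webhooks: 1 };

console.log(exceededQuotas(current, freePlan));
```

A result like this could feed a usage-based rate limiter, which would block only the dimensions that are over their limit.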

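Try it directly at https://www.torra.cloud, or join our user group, where we post iteration and preview news.

Join the community to exchange ideas, get support, and collaborate with other users.

MIT License. Please retain attribution.

Created and maintained by the Torra team.

Live demo → https://www.torra.cloud

Stars on GitHub are welcome!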


Alternative AI tools for torra-community

Similar Open Source Tools


UltraRAG

The UltraRAG framework is a researcher and developer-friendly RAG system solution that simplifies the process from data construction to model fine-tuning in domain adaptation. It introduces an automated knowledge adaptation technology system, supporting no-code programming, one-click synthesis and fine-tuning, multidimensional evaluation, and research-friendly exploration work integration. The architecture consists of Frontend, Service, and Backend components, offering flexibility in customization and optimization. Performance evaluation in the legal field shows improved results compared to VanillaRAG, with specific metrics provided. The repository is licensed under Apache-2.0 and encourages citation for support.

ai_quant_trade

The ai_quant_trade repository is a comprehensive platform for stock AI trading, offering learning, simulation, and live trading capabilities. It includes features such as factor mining, traditional strategies, machine learning, deep learning, reinforcement learning, graph networks, and high-frequency trading. The repository provides tools for monitoring stocks, stock recommendations, and deployment tools for live trading. It also features new functionalities like sentiment analysis using StructBERT, reinforcement learning for multi-stock trading with a 53% annual return, automatic factor mining with 5000 factors, customized stock monitoring software, and local deep reinforcement learning strategies.

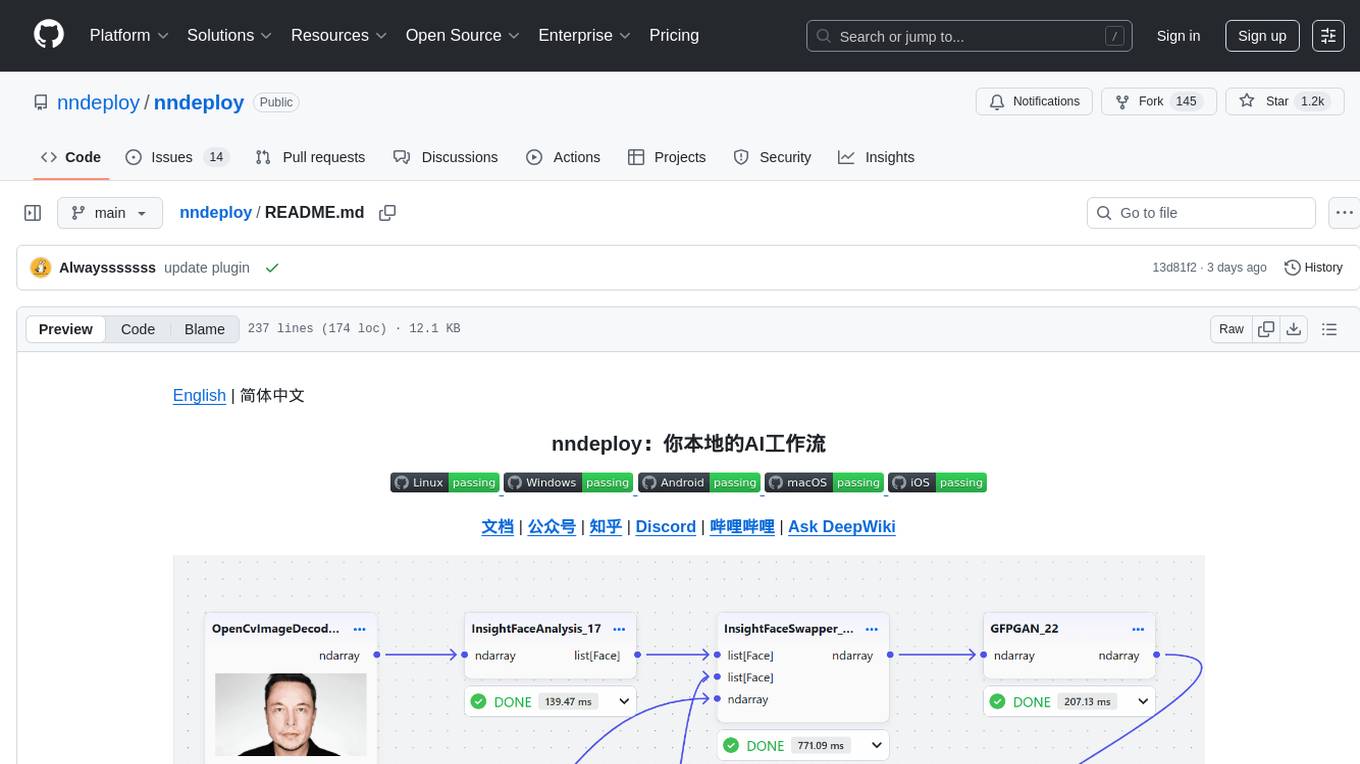

nndeploy

nndeploy is a tool that allows you to quickly build your visual AI workflow without the need for frontend technology. It provides ready-to-use algorithm nodes for non-AI programmers, including large language models, Stable Diffusion, object detection, image segmentation, etc. The workflow can be exported as a JSON configuration file, supporting Python/C++ API for direct loading and running, deployment on cloud servers, desktops, mobile devices, edge devices, and more. The framework includes mainstream high-performance inference engines and deep optimization strategies to help you transform your workflow into enterprise-level production applications.

ChatGPT-Next-Web-Pro

ChatGPT-Next-Web-Pro is a tool that provides an enhanced version of ChatGPT-Next-Web with additional features and functionalities. It offers complete ChatGPT-Next-Web functionality, file uploading and storage capabilities, drawing and video support, multi-modal support, reverse model support, knowledge base integration, translation, customizations, and more. The tool can be deployed with or without a backend, allowing users to interact with AI models, manage accounts, create models, manage API keys, handle orders, manage memberships, and more. It supports various cloud services like Aliyun OSS, Tencent COS, and Minio for file storage, and integrates with external APIs like Azure, Google Gemini Pro, and Luma. The tool also provides options for customizing website titles, subtitles, icons, and plugin buttons, and offers features like voice input, file uploading, real-time token count display, and more.

llm-action

This repository provides a comprehensive guide to large language models (LLMs), covering various aspects such as training, fine-tuning, compression, and applications. It includes detailed tutorials, code examples, and explanations of key concepts and techniques. The repository is maintained by Liguo Dong, an AI researcher and engineer with expertise in LLM research and development.

prisma-ai

Prisma-AI is an open-source tool designed to assist users in their job search process by addressing common challenges such as lack of project highlights, mismatched resumes, difficulty in learning, and lack of answers in interview experiences. The tool utilizes AI to analyze user experiences, generate actionable project highlights, customize resumes for specific job positions, provide study materials for efficient learning, and offer structured interview answers. It also features a user-friendly interface for easy deployment and supports continuous improvement through user feedback and collaboration.

DeepLearing-Interview-Awesome-2024

DeepLearning-Interview-Awesome-2024 is a repository that covers various topics related to deep learning, computer vision, big models (LLMs), autonomous driving, smart healthcare, and more. It provides a collection of interview questions with detailed explanations sourced from recent academic papers and industry developments. The repository is aimed at assisting individuals in academic research, work innovation, and job interviews. It includes six major modules covering topics such as large language models (LLMs), computer vision models, common problems in computer vision and perception algorithms, deep learning basics and frameworks, as well as specific tasks like 3D object detection, medical image segmentation, and more.

HaE

HaE is a framework project in the field of network security (data security) that combines artificial intelligence (AI) large models to achieve highlighting and information extraction of HTTP messages (including WebSocket). It aims to reduce testing time, focus on valuable and meaningful messages, and improve vulnerability discovery efficiency. The project provides a clear and visual interface design, simple interface interaction, and centralized data panel for querying and extracting information. It also features built-in color upgrade algorithm, one-click export/import of data, and integration of AI large models API for optimized data processing.

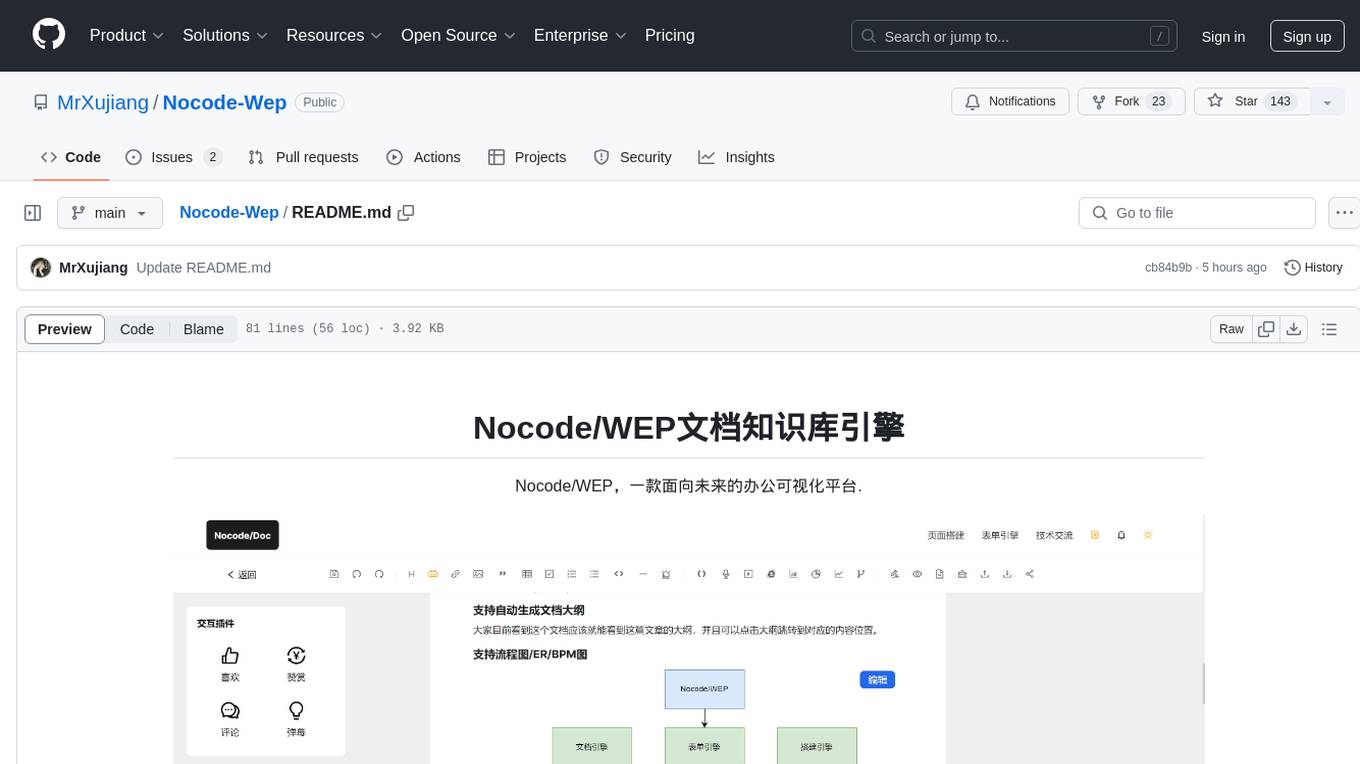

Nocode-Wep

Nocode/WEP is a forward-looking office visualization platform that includes modules for document building, web application creation, presentation design, and AI capabilities for office scenarios. It supports features such as configuring bullet comments, global article comments, multimedia content, custom drawing boards, flowchart editor, form designer, keyword annotations, article statistics, custom appreciation settings, JSON import/export, content block copying, and unlimited hierarchical directories. The platform is compatible with major browsers and aims to deliver content value, iterate products, share technology, and promote open-source collaboration.

BlueLM

BlueLM is a large-scale pre-trained language model developed by vivo AI Global Research Institute, featuring 7B base and chat models. It was trained on high-quality data totaling 2.6 trillion tokens and supports both Chinese and English. BlueLM-7B-Chat performs well on the C-Eval and CMMLU evaluations and is competitive with open-source models of similar size. The models support 32K-token long texts for better context understanding while maintaining base capabilities. BlueLM welcomes developers for academic research and commercial applications.

AI-Competition-Collections

AI-Competition-Collections is a repository that collects and curates various experiences and tips from AI competitions. It includes posts on competition experiences in computer vision, NLP, speech, and other AI-related fields. The repository aims to provide valuable insights and techniques for individuals participating in AI competitions, covering topics such as image classification, object detection, OCR, adversarial attacks, and more.

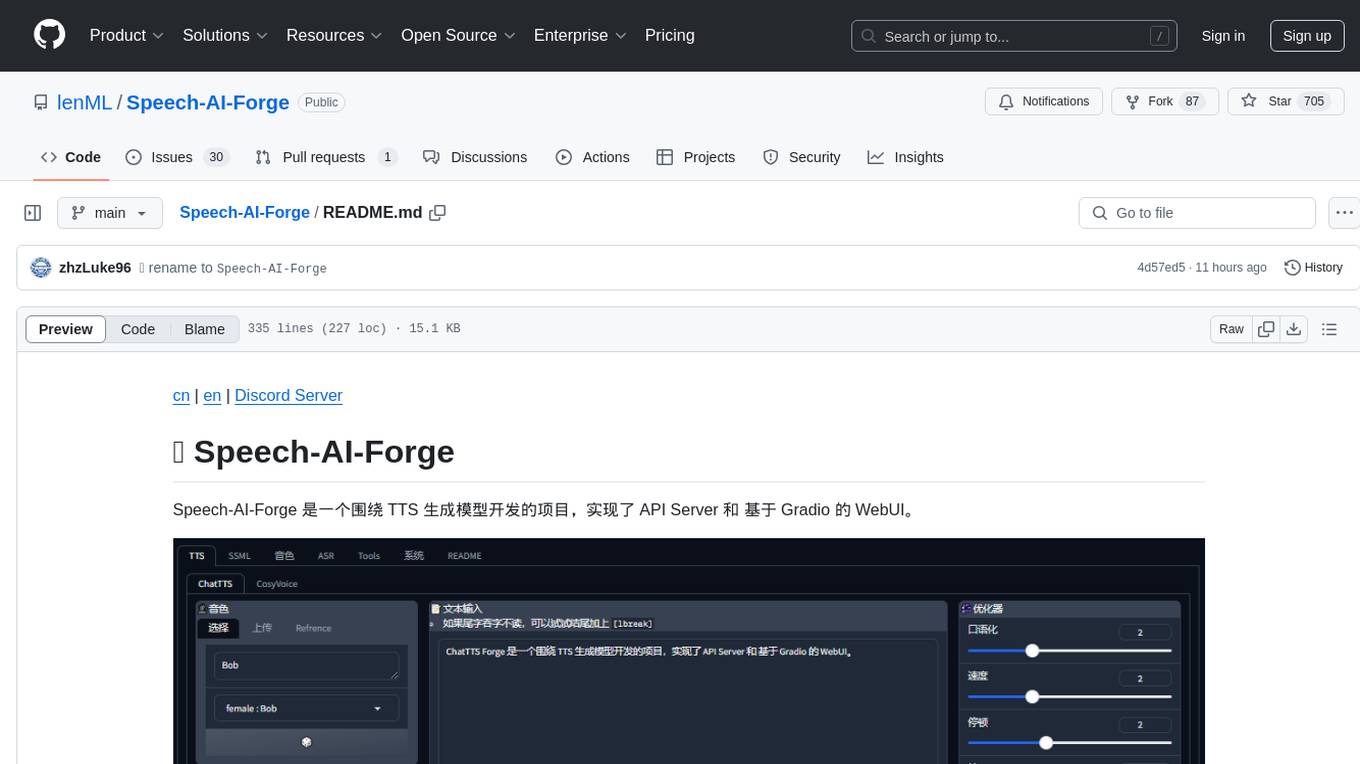

Speech-AI-Forge

Speech-AI-Forge is a project developed around TTS generation models, implementing an API Server and a WebUI based on Gradio. The project offers various ways to experience and deploy Speech-AI-Forge, including online experience on HuggingFace Spaces, one-click launch on Colab, container deployment with Docker, and local deployment. The WebUI features include TTS model functionality, speaker switch for changing voices, style control, long text support with automatic text segmentation, refiner for ChatTTS native text refinement, various tools for voice control and enhancement, support for multiple TTS models, SSML synthesis control, podcast creation tools, voice creation, voice testing, ASR tools, and post-processing tools. The API Server can be launched separately for higher API throughput. The project roadmap includes support for various TTS models, ASR models, voice clone models, and enhancer models. Model downloads can be manually initiated using provided scripts. The project aims to provide inference services and may include training-related functionalities in the future.

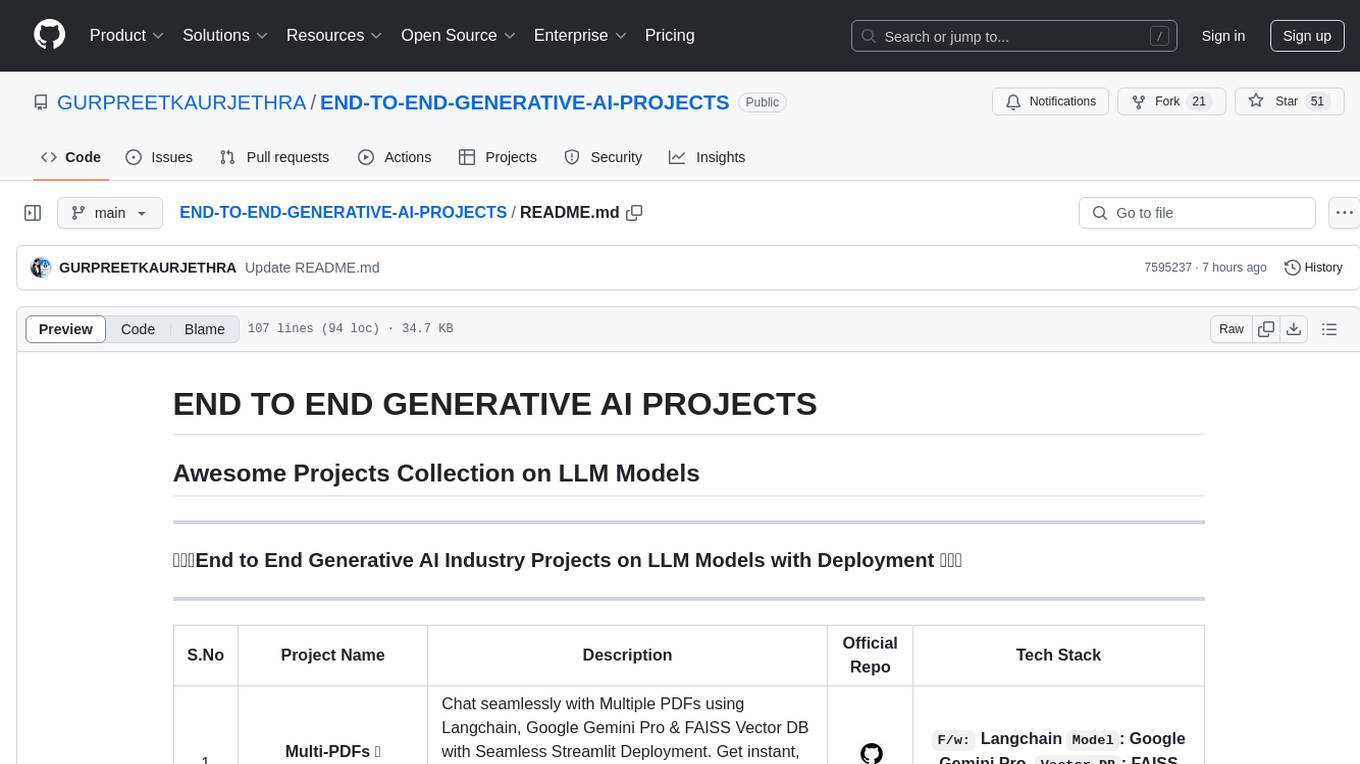

END-TO-END-GENERATIVE-AI-PROJECTS

The 'END TO END GENERATIVE AI PROJECTS' repository is a collection of awesome industry projects utilizing Large Language Models (LLM) for various tasks such as chat applications with PDFs, image to speech generation, video transcribing and summarizing, resume tracking, text to SQL conversion, invoice extraction, medical chatbot, financial stock analysis, and more. The projects showcase the deployment of LLM models like Google Gemini Pro, HuggingFace Models, OpenAI GPT, and technologies such as Langchain, Streamlit, LLaMA2, LLaMAindex, and more. The repository aims to provide end-to-end solutions for different AI applications.

Open-dLLM

Open-dLLM is the most open release of a diffusion-based large language model, providing pretraining, evaluation, inference, and checkpoints. It introduces Open-dCoder, the code-generation variant of Open-dLLM. The repo offers a complete stack for diffusion LLMs, enabling users to go from raw data to training, checkpoints, evaluation, and inference in one place. It includes pretraining pipeline with open datasets, inference scripts for easy sampling and generation, evaluation suite with various metrics, weights and checkpoints on Hugging Face, and transparent configs for full reproducibility.

For similar tasks

airflow

Apache Airflow (or simply Airflow) is a platform to programmatically author, schedule, and monitor workflows. When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative. Use Airflow to author workflows as directed acyclic graphs (DAGs) of tasks. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.

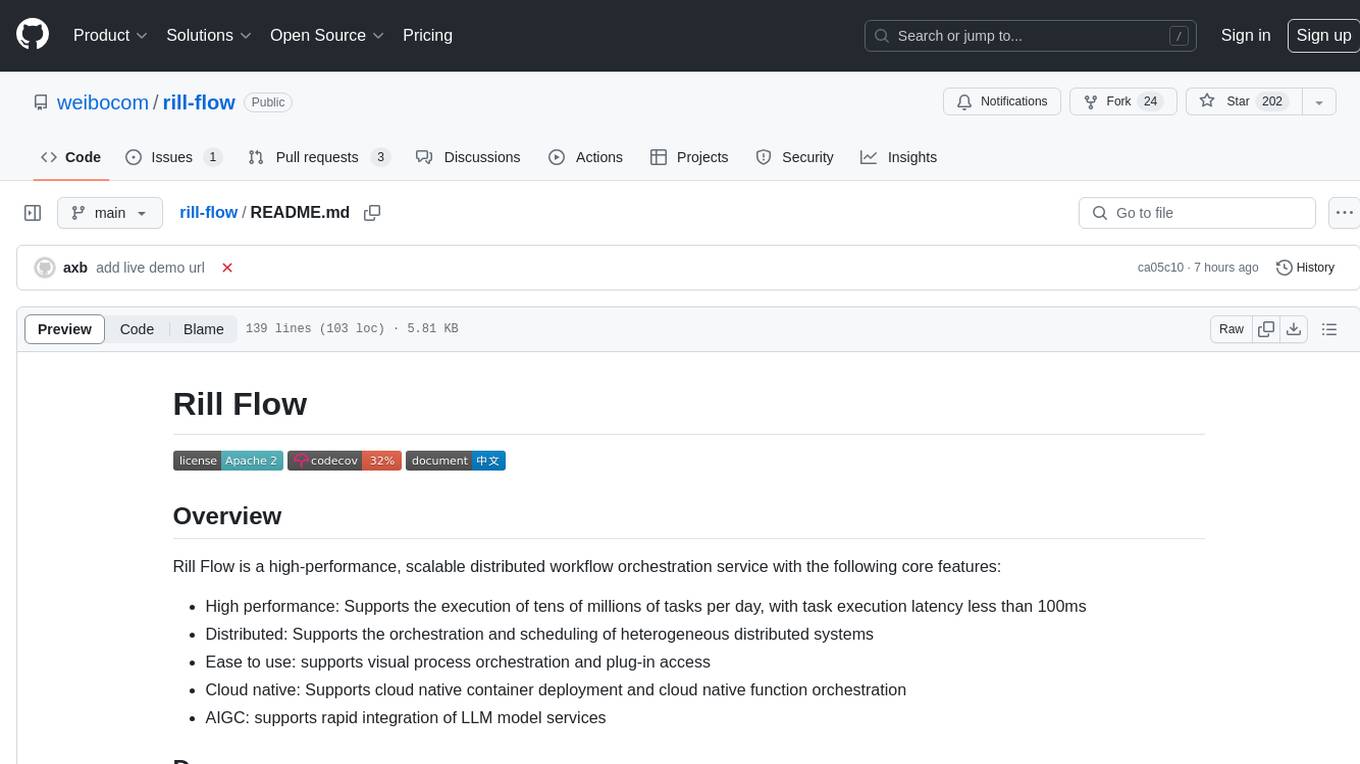

rill-flow

Rill Flow is a high-performance, scalable distributed workflow orchestration service that supports the execution of tens of millions of tasks per day with task execution latency less than 100ms. It is distributed and supports the orchestration and scheduling of heterogeneous distributed systems. Rill Flow is easy to use, supporting visual process orchestration and plug-in access. It is cloud native, allowing for cloud native container deployment and cloud native function orchestration. Additionally, Rill Flow supports rapid integration of LLM model services.

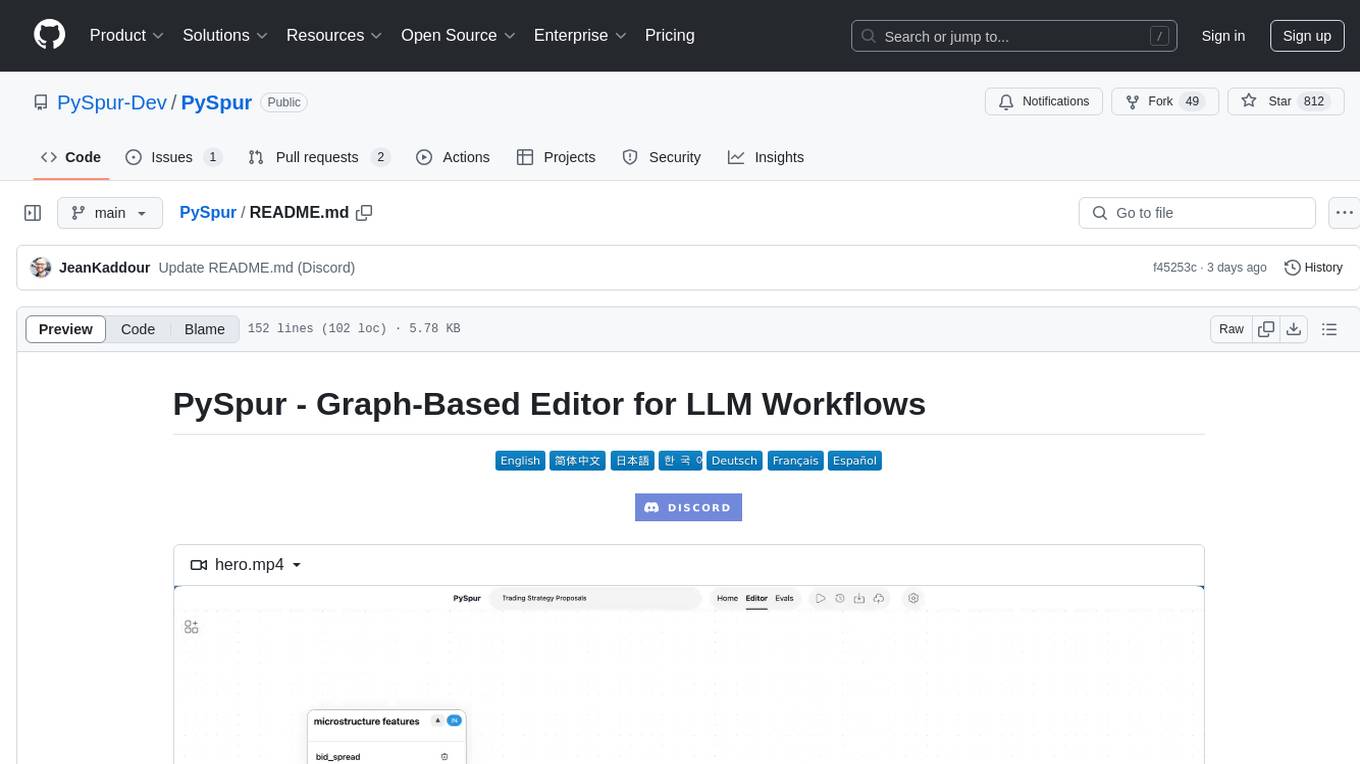

PySpur

PySpur is a graph-based editor designed for LLM workflows, offering modular building blocks for easy workflow creation and debugging at node level. It allows users to evaluate final performance and promises self-improvement features in the future. PySpur is easy-to-hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies, making it a versatile tool for workflow management in the field of AI and machine learning.

pyspur

PySpur is a graph-based editor designed for LLM (Large Language Models) workflows. It offers modular building blocks, node-level debugging, and performance evaluation. The tool is easy to hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies. Users can quickly set up PySpur by cloning the repository, creating a .env file, starting docker services, and accessing the portal. PySpur can also work with local models served using Ollama, with steps provided for configuration. The roadmap includes features like canvas, async/batch execution, support for Ollama, new nodes, pipeline optimization, templates, code compilation, multimodal support, and more.


astron-rpa

AstronRPA is an enterprise-grade Robotic Process Automation (RPA) desktop application that supports low-code/no-code development. It enables users to rapidly build workflows and automate desktop software and web pages. The tool offers comprehensive automation support for various applications, highly component-based design, enterprise-grade security and collaboration features, developer-friendly experience, native agent empowerment, and multi-channel trigger integration. It follows a frontend-backend separation architecture with components for system operations, browser automation, GUI automation, AI integration, and more. The tool is deployed via Docker and designed for complex RPA scenarios.

PaiAgent

PaiAgent is an enterprise-level AI workflow visualization orchestration platform that simplifies the combination and scheduling of AI capabilities. It allows developers and business users to quickly build complex AI processing flows through an intuitive drag-and-drop interface, without the need to write code, enabling collaboration of various large models.

ASTRA.ai

Astra.ai is a multimodal agent powered by TEN, showcasing its capabilities in speech, vision, and reasoning through RAG from local documentation. It provides a platform for developing AI agents with features like RTC transportation, extension store, workflow builder, and local deployment. Users can build and test agents locally using Docker and Node.js, with prerequisites including Agora App ID, Azure's speech-to-text and text-to-speech API keys, and OpenAI API key. The platform offers advanced customization options through config files and API keys setup, enabling users to create and deploy their AI agents for various tasks.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.