gez

Gez compiles with Rspack and uses importmap to map modules to strongly cached, content-hash-based URLs.

Stars: 584

Gez is a high-performance micro frontend framework based on ESM. It uses Rspack compilation and maps modules to URLs with strong caching and content-based hashing. Gez embraces modern micro frontend architecture by leveraging ESM and importmap for dependency management, providing reliable isolation with module scope, seamless integration with any modern frontend framework, intuitive development experience, and optimal performance with zero runtime overhead and reliable caching strategies.

README:

🚀 A high-performance micro frontend framework based on ESM

Gez compiles with Rspack and uses importmap to map modules to strongly cached URLs based on content hashes.
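To make "content-hash-based URLs" concrete, here is a minimal TypeScript sketch (Node.js, `node:crypto`) of how a build step could turn emitted modules into an importmap. It is not Gez's or Rspack's API: `BuiltModule`, `buildImportMap`, `cacheControlFor`, the 8-character SHA-256 prefix and the `name.hash.js` file naming are all illustrative assumptions.

```typescript
import { createHash } from "node:crypto";

// One emitted module (hypothetical shape): the bare specifier other code
// imports it by, a base file name, and the final emitted JavaScript source.
interface BuiltModule {
  specifier: string;
  fileName: string;
  source: string;
}

// The top-level "imports" field of an import map.
interface ImportMap {
  imports: Record<string, string>;
}

// First 8 hex chars of a SHA-256 digest: same bytes -> same URL, changed bytes -> new URL.
function contentHash(source: string): string {
  return createHash("sha256").update(source).digest("hex").slice(0, 8);
}

// Map every bare specifier to a content-addressed URL under baseUrl.
function buildImportMap(modules: BuiltModule[], baseUrl: string): ImportMap {
  const imports: Record<string, string> = {};
  for (const m of modules) {
    imports[m.specifier] = `${baseUrl}${m.fileName}.${contentHash(m.source)}.js`;
  }
  return { imports };
}

// Hashed files never change under the same URL, so they can be cached "forever";
// anything else (e.g. the HTML that embeds the import map) must be revalidated.
function cacheControlFor(url: string): string {
  return /\.[0-9a-f]{8}\.js$/.test(url) ? "public, max-age=31536000, immutable" : "no-cache";
}

// Example: the JSON that would be embedded in <script type="importmap">.
const demoMap = buildImportMap(
  [{ specifier: "vue", fileName: "vue", source: "export const version = '2.7';" }],
  "/assets/",
);
console.log(JSON.stringify(demoMap, null, 2));
```

Because a file's URL changes whenever its bytes change, hashed files can be served with a long-lived `immutable` cache header, while the HTML page carrying the importmap is revalidated so browsers pick up new URLs after a deploy.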

📚 Documentation: Simplified Chinese

It's time to leave the past behind and embrace a true micro frontend architecture.

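Over the past few years, micro frontend architecture has been searching for the right path. Instead, we have seen a succession of complex technical solutions that simulate an ideal micro frontend world through layer upon layer of wrappers and artificial isolation. These solutions impose a heavy performance burden, make simple development complicated, and make standard workflows obscure.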

The many limitations of traditional micro frontend solutions are holding us back:

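- Performance nightmare: runtime dependency injection and JS sandbox proxies drain precious performance on every operation

- Fragile isolation: hand-built sandbox environments can never match the browser's native isolation capabilities

- Complex builds: handling dependencies means heavily patching build tools, making simple projects hard to maintain

- Custom rules: special deployment strategies and runtime processing pull every step away from standard modern development workflows

- Limited ecosystem: framework coupling and custom APIs force technology choices into a specific ecosystem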

The evolution of web standards has opened up new possibilities. We can now build micro frontends in the purest way:

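- Back to native: embrace ESM and importmap, returning dependency management to browser standards

- Natural isolation: module scope provides the most reliable isolation, with no extra runtime overhead

- Open ecosystem: any modern frontend framework integrates seamlessly, so technology choices are no longer constrained

- Developer experience: an intuitive development model and familiar debugging workflow make everything feel natural

- Top performance: zero runtime overhead and a reliable caching strategy keep applications truly lightweight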
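With an importmap in place, the browser itself, not a framework runtime, resolves bare specifiers such as `vue`. The sketch below is a simplified TypeScript model of the resolution rules in the WHATWG import maps specification for the top-level `imports` field: an exact key match wins, otherwise the longest key ending in `/` that prefixes the specifier is used. It deliberately omits `scopes`, URL-like keys and URL normalization; `resolveBare` and the `remote-app/` prefix are hypothetical names.

```typescript
// The top-level "imports" field of an import map: specifier -> URL.
type SpecifierMap = Record<string, string>;

// Own-property check, so keys like "toString" are not picked up from Object.prototype.
const hasKey = (obj: object, key: string): boolean =>
  Object.prototype.hasOwnProperty.call(obj, key);

// Simplified model of import-map resolution for bare specifiers.
// Omits "scopes", URL-like keys, and URL parsing/normalization.
function resolveBare(specifier: string, imports: SpecifierMap): string {
  // 1. An exact key match wins.
  if (hasKey(imports, specifier)) return imports[specifier];

  // 2. Otherwise use the longest key that ends in "/" and prefixes the specifier.
  let bestKey: string | undefined;
  for (const key of Object.keys(imports)) {
    if (key.endsWith("/") && specifier.startsWith(key)) {
      if (bestKey === undefined || key.length > bestKey.length) bestKey = key;
    }
  }
  if (bestKey !== undefined) {
    return imports[bestKey] + specifier.slice(bestKey.length);
  }

  // 3. Browsers reject bare specifiers that the map does not cover.
  throw new TypeError(`Unmapped bare specifier: "${specifier}"`);
}

// Hypothetical map: one shared "vue" for the whole page plus a remote package prefix.
const demoImports: SpecifierMap = {
  vue: "/assets/vue.1a2b3c4d.js",
  "remote-app/": "https://remote.example.com/assets/",
};
console.log(resolveBare("vue", demoImports));
console.log(resolveBare("remote-app/components/button.js", demoImports));
```

Since the mapping lives in one declarative map, changing a dependency's URL is a single edit that every importing module picks up, with no injection code running at load time.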

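| Core feature | Gez | Traditional micro frontend frameworks |
|---|---|---|
| Dependency management | ✅ Native loading via ESM + importmap ✅ Strong caching based on content hashes ✅ Centralized management: configure once, applies everywhere | ❌ Runtime injection with performance cost ❌ Unreliable caching strategy ❌ Risk of dependency version conflicts |
| Application isolation | ✅ Native ESM module isolation ✅ Zero runtime overhead ✅ Backed by standard browser features | ❌ JS sandbox performance overhead ❌ Complex state maintenance ❌ Unstable isolation implementation |
| Build and deployment | ✅ High-performance Rspack builds ✅ Out-of-the-box configuration ✅ Incremental builds, on-demand loading | ❌ Tedious build configuration ❌ Complex deployment process ❌ Full rebuilds for every update |
| Server-side rendering | ✅ Native SSR support ✅ Works with any frontend framework ✅ Flexible rendering strategies | ❌ Limited SSR support ❌ Tight framework coupling ❌ Single rendering strategy |
| Developer experience | ✅ Full TypeScript support ✅ Native module linking ✅ Out-of-the-box debugging | ❌ Incomplete type support ❌ Hard-to-trace module relationships ❌ High debugging cost |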

A complete HTML server-side rendering example showing how to build a modern web application with Gez:

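- 🚀 Rspack, built in Rust, delivers top-tier build performance

- 💡 Full support for routing, components, styles, images, and more

- 🛠 Fast hot updates, friendly error messages, and complete type support

- 📱 Modern responsive design that adapts to all kinds of devices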

A Vue2-based micro frontend architecture with a main application and a sub-application:

Main application:

- 🔗 Imports sub-application modules via ESM

- 🛠 Unified dependency management (e.g. the Vue version)

- 🌐 Server-side rendering support

Sub-application:

- 📦 Modular exports (components, composables)

- 🚀 Standalone development server

- 💡 Hot module replacement in development

This example demonstrates:

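- How to reuse a sub-application's components and features via ESM

- How to ensure the main and sub-applications use the same dependency versions

- How to debug a sub-application independently in development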
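One way a main application could enforce a single dependency version is to compose the page's importmap itself, letting its own entries for shared packages (such as `vue`) override whatever URLs a sub-application declares. The TypeScript sketch below illustrates that idea only; `mergeImportMaps`, the `shared` list and the first-writer-wins rule for other keys are assumptions, not documented Gez behavior.

```typescript
// Shape of the top-level "imports" field of an import map.
interface ImportMap {
  imports: Record<string, string>;
}

interface MergeResult {
  importMap: ImportMap;
  // "<sub-app>:<specifier>" entries whose URL was replaced by the main app's.
  overridden: string[];
}

const hasOwn = (obj: object, key: string): boolean =>
  Object.prototype.hasOwnProperty.call(obj, key);

// Hypothetical helper: compose one page-level import map from the main
// application's map and each sub-application's map.
function mergeImportMaps(
  host: ImportMap,
  remotes: Record<string, ImportMap>,
  shared: string[],
): MergeResult {
  // The main application must provide every shared dependency itself.
  for (const specifier of shared) {
    if (!hasOwn(host.imports, specifier)) {
      throw new Error(`Main application does not provide shared dependency "${specifier}"`);
    }
  }

  const imports: Record<string, string> = { ...host.imports };
  const overridden: string[] = [];

  for (const [name, remote] of Object.entries(remotes)) {
    for (const [specifier, url] of Object.entries(remote.imports)) {
      if (shared.includes(specifier)) {
        // Shared packages always resolve to the main application's URL.
        if (url !== host.imports[specifier]) overridden.push(`${name}:${specifier}`);
        continue;
      }
      // Other keys: the first map that declares a specifier wins.
      if (!hasOwn(imports, specifier)) imports[specifier] = url;
    }
  }

  return { importMap: { imports }, overridden };
}

// Example: the sub-application was built against its own copy of "vue".
const pageMap = mergeImportMaps(
  { imports: { vue: "/host/vue.1a2b3c4d.js" } },
  { "remote-app": { imports: { vue: "/remote/vue.5e6f7a8b.js", "remote-app/": "/remote/" } } },
  ["vue"],
);
console.log(JSON.stringify(pageMap.importMap, null, 2));
```

Because browsers key ES modules by resolved URL, every application that imports `vue` through the merged map receives the same module instance, which is what "one version for all applications" means in practice.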

A high-performance implementation based on Preact + HTM:

- ⚡️ Aggressive bundle size optimization

- 🎯 Performance-first architecture

- 🛠 Suited to resource-constrained scenarios

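Every example includes complete project configuration and a best-practices guide to help you get started quickly and take it to production. See the examples directory for more details.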

v3.x - In development

The current version is built on Rspack, offering a better development experience and faster builds.

Known issues:

- ESM module export optimization in progress:

  The `export *` syntax in `modern-module` output has stability issues #8557

v2.x - Not recommended for production

This version is no longer recommended for production use; please upgrade to the latest version.

v1.x - No longer maintained

Formerly named Genesis, this is the predecessor of Gez. It no longer accepts new features or non-critical bug fixes.

This project is licensed under the MIT License.

Alternative AI tools for gez

Similar Open Source Tools

MaiMBot

MaiMBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, utilizes LLM for conversation abilities, MongoDB for data persistence, and NapCat for QQ protocol support. The bot features keyword-triggered proactive responses, dynamic prompt construction, support for images and message forwarding, typo generation, multiple replies, emotion-based emoji responses, daily schedule generation, user relationship management, knowledge base, and group impressions. Work-in-progress features include personality, group atmosphere, image handling, humor, meme functions, and Minecraft interactions. The tool is in active development with plans for GIF compatibility, mini-program link parsing, bug fixes, documentation improvements, and logic enhancements for emoji sending.

vocotype-cli

VocoType is a free desktop voice input method designed for professionals who value privacy and efficiency. All recognition is done locally, ensuring offline operation and no data upload. The CLI open-source version of the VocoType core engine on GitHub is mainly targeted at developers.

Snap-Solver

Snap-Solver is a revolutionary AI tool for online exam solving, designed for students, test-takers, and self-learners. With just a keystroke, it automatically captures any question on the screen, analyzes it using AI, and provides detailed answers. Whether it's complex math formulas, physics problems, coding issues, or challenges from other disciplines, Snap-Solver offers clear, accurate, and structured solutions to help you better understand and master the subject matter.

AutoGLM-GUI

AutoGLM-GUI is an AI-driven Android automation productivity tool that supports scheduled tasks, remote deployment, and 24/7 AI assistance. It features core functionalities such as deploying to servers, scheduling tasks, and creating an AI automation assistant. The tool enhances productivity by automating repetitive tasks, managing multiple devices, and providing a layered agent mode for complex task planning and execution. It also supports real-time screen preview, direct device control, and zero-configuration deployment. Users can easily download the tool for Windows, macOS, and Linux systems, and can also install it via Python package. The tool is suitable for various use cases such as server automation, batch device management, development testing, and personal productivity enhancement.

chatless

Chatless is a modern AI chat desktop application built on Tauri and Next.js. It supports multiple AI providers, can connect to local Ollama models, supports document parsing and knowledge base functions. All data is stored locally to protect user privacy. The application is lightweight, simple, starts quickly, and consumes minimal resources.

manga-translator-ui

This repository is a manga image translator tool that allows users to translate text in manga images automatically. It supports various types of manga, including Japanese, Korean, and American, in both black and white and color formats. The tool can detect, translate, and embed text, supporting multiple languages such as Japanese, Chinese, and English. It also includes a visual editor for adjusting text boxes. Users can interact with the tool through a Qt interface or command-line mode for batch processing. The tool offers features like intelligent text detection, multi-language OCR, multiple translation engines, high-quality translation using AI models, automatic term extraction, AI sentence segmentation, intelligent typesetting, PSD export, and batch processing. Additionally, it provides a visual editor for region editing, text editing, mask editing, undo/redo functionality, shortcut key support, and mouse wheel shortcuts.

AIResume

AIResume is an open-source resume creation platform that helps users easily create professional resumes, integrating AI technology to assist users in polishing their resumes. The project allows for template development using Vue 3, Vite, TypeScript, and Ant Design Vue. Users can edit resumes, export them as PDFs, switch between multiple resume templates, and collaborate on template development. AI features include resume refinement, deep optimization based on individual projects or experiences, and simulated interviews for user practice. Additional functionalities include theme color switching, high customization options, dark/light mode switching, real-time preview, drag-and-drop resume scaling, data export/import, data clearing, sample data prefilling, template market showcasing, and more.

bk-lite

Blueking Lite is an AI First lightweight operation product with low deployment resource requirements, low usage costs, and progressive experience, providing essential tools for operation administrators.

TrainPPTAgent

TrainPPTAgent is an AI-based intelligent presentation generation tool. Users can input a topic and the system will automatically generate a well-structured and content-rich PPT outline and page-by-page content. The project adopts a front-end and back-end separation architecture: the front-end is responsible for interaction, outline editing, and template selection, while the back-end leverages large language models (LLM) and reinforcement learning (GRPO) to complete content generation and optimization, making the generated PPT more tailored to user goals.

vscode-antigravity-cockpit

VS Code extension for monitoring Google Antigravity AI model quotas. It provides a webview dashboard, QuickPick mode, quota grouping, automatic grouping, renaming, card view, drag-and-drop sorting, status bar monitoring, threshold notifications, and privacy mode. Users can monitor quota status, remaining percentage, countdown, reset time, progress bar, and model capabilities. The extension supports local and authorized quota monitoring, multiple account authorization, and model wake-up scheduling. It also offers settings customization, user profile display, notifications, and group functionalities. Users can install the extension from the Open VSX Marketplace or via VSIX file. The source code can be built using Node.js and npm. The project is open-source under the MIT license.

DocTranslator

DocTranslator is a document translation tool that supports various file formats, compatible with OpenAI format API, and offers batch operations and multi-threading support. Whether for individual users or enterprise teams, DocTranslator helps efficiently complete document translation tasks. It supports formats like txt, markdown, word, csv, excel, pdf (non-scanned), and ppt for AI translation. The tool is deployed using Docker for easy setup and usage.

aio-hub

AIO Hub is a cross-platform AI hub built on Tauri + Vue 3 + TypeScript, aiming to provide developers and creators with precise LLM control experience and efficient toolchain. It features a chat function designed for complex tasks and deep exploration, a unified context pipeline for controlling every token sent to the model, interactive AI buttons, dual-view management for non-linear conversation mapping, open ecosystem compatibility with various AI models, and a rich text renderer for LLM output. The tool also includes features for media workstation, developer productivity, system and asset management, regex applier, collaboration enhancement between developers and AI, and more.

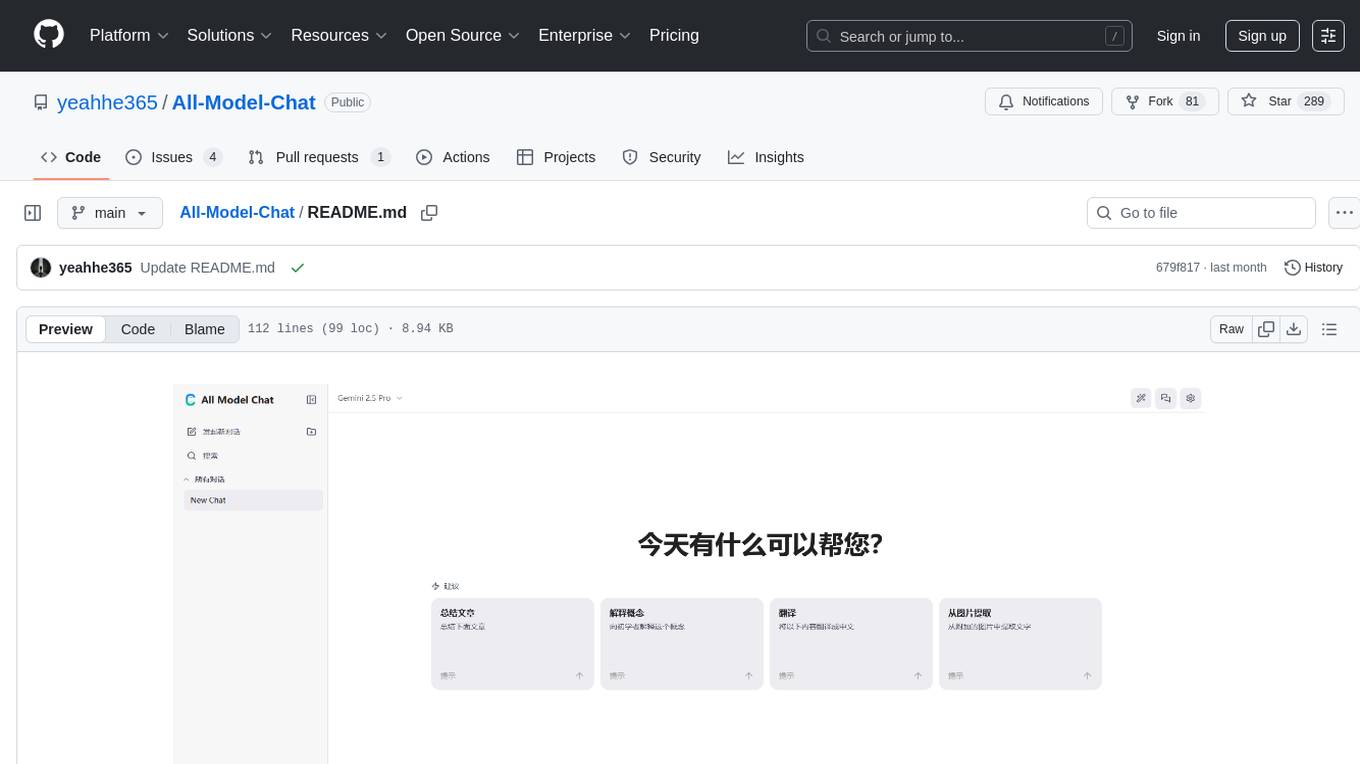

All-Model-Chat

All Model Chat is a feature-rich, highly customizable web chat application designed specifically for the Google Gemini API family. It integrates dynamic model selection, multimodal file input, streaming responses, comprehensive chat history management, and extensive customization options to provide an unparalleled AI interactive experience.

CloudFlare-AI-Insight-Daily

CloudFlare-AI-Insight-Daily is a content aggregation and generation platform powered by Cloudflare Workers. It curates the latest updates in the AI field, including industry news, popular open-source projects, cutting-edge academic papers, and tech influencers' social media comments. The platform utilizes the Google Gemini model for intelligent processing and summary generation, automatically publishing AI daily reports on GitHub Pages. Its goal is to be your efficient assistant in staying ahead in the rapidly changing AI landscape and acquiring the most valuable information.

KubeDoor

KubeDoor is a microservice resource management platform developed using Python and Vue, based on K8S admission control mechanism. It supports unified remote storage, monitoring, alerting, notification, and display for multiple K8S clusters. The platform focuses on resource analysis and control during daily peak hours of microservices, ensuring consistency between resource request rate and actual usage rate.

For similar tasks

metaflow

Metaflow is a user-friendly library designed to assist scientists and engineers in developing and managing real-world data science projects. Initially created at Netflix, Metaflow aimed to enhance the productivity of data scientists working on diverse projects ranging from traditional statistics to cutting-edge deep learning. For further information, refer to Metaflow's website and documentation.

ck

Collective Mind (CM) is a collection of portable, extensible, technology-agnostic and ready-to-use automation recipes with a human-friendly interface (aka CM scripts) to unify and automate all the manual steps required to compose, run, benchmark and optimize complex ML/AI applications on any platform with any software and hardware: see online catalog and source code. CM scripts require Python 3.7+ with minimal dependencies and are continuously extended by the community and MLCommons members to run natively on Ubuntu, MacOS, Windows, RHEL, Debian, Amazon Linux and any other operating system, in a cloud or inside automatically generated containers while keeping backward compatibility. Please don't hesitate to report encountered issues here and contact us via the public Discord Server to help this collaborative engineering effort!

CM scripts were originally developed based on the following requirements from the MLCommons members to help them automatically compose and optimize complex MLPerf benchmarks, applications and systems across diverse and continuously changing models, data sets, software and hardware from Nvidia, Intel, AMD, Google, Qualcomm, Amazon and other vendors:

- must work out of the box with the default options and without the need to edit some paths, environment variables and configuration files;
- must be non-intrusive, easy to debug and must reuse existing user scripts and automation tools (such as cmake, make, ML workflows, python poetry and containers) rather than substituting them;
- must have a very simple and human-friendly command line with a Python API and minimal dependencies;
- must require minimal or zero learning curve by using plain Python, native scripts, environment variables and simple JSON/YAML descriptions instead of inventing new workflow languages;
- must have the same interface to run all automations natively, in a cloud or inside containers.
CM scripts were successfully validated by MLCommons to modularize MLPerf inference benchmarks and help the community automate more than 95% of all performance and power submissions in the v3.1 round across more than 120 system configurations (models, frameworks, hardware) while reducing development and maintenance costs.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API. It provides functionalities to access and utilize the AI capabilities offered by Mistral. Users can easily install the client using pip and manage dependencies using poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API Key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.

ComposeAI

ComposeAI is an Android & iOS application similar to ChatGPT, built using Compose Multiplatform. It utilizes various technologies such as Compose Multiplatform, Material 3, OpenAI Kotlin, Voyager, Koin, SQLDelight, Multiplatform Settings, Coil3, Napier, BuildKonfig, Firebase Analytics & Crashlytics, and AdMob. The app architecture follows Google's latest guidelines. Users need to set up their own OpenAI API key before using the app.

aiohttp-devtools

aiohttp-devtools provides dev tools for developing applications with aiohttp and associated libraries. It includes CLI commands for running a local server with live reloading and serving static files. The tools aim to simplify the development process by automating tasks such as setting up a new application and managing dependencies. Developers can easily create and run aiohttp applications, manage static files, and utilize live reloading for efficient development.

minefield

BitBom Minefield is a tool that uses roaring bit maps to graph Software Bill of Materials (SBOMs) with a focus on speed, air-gapped operation, scalability, and customizability. It is optimized for rapid data processing, operates securely in isolated environments, supports millions of nodes effortlessly, and allows users to extend the project without relying on upstream changes. The tool enables users to manage and explore software dependencies within isolated environments by offline processing and analyzing SBOMs.

forge

Forge is a powerful open-source tool for building modern web applications. It provides a simple and intuitive interface for developers to quickly scaffold and deploy projects. With Forge, you can easily create custom components, manage dependencies, and streamline your development workflow. Whether you are a beginner or an experienced developer, Forge offers a flexible and efficient solution for your web development needs.

For similar jobs

Protofy

Protofy is a full-stack, batteries-included, low-code-enabled web/app and IoT system with an API system and real-time messaging. It is based on Protofy (protoflow + visualui + protolib + protodevices) + Expo + Next.js + Tamagui + Solito + Express + Aedes + Redbird + many other amazing packages. Protofy can be used to rapidly prototype apps, websites, IoT systems, automations, or APIs. It is an ultra-extensible CMS with supercharged capabilities, mobile support, and IoT support (ESP32, thanks to ESPHome).

react-native-vision-camera

VisionCamera is a powerful, high-performance Camera library for React Native. It features Photo and Video capture, QR/Barcode scanner, Customizable devices and multi-cameras ("fish-eye" zoom), Customizable resolutions and aspect-ratios (4k/8k images), Customizable FPS (30..240 FPS), Frame Processors (JS worklets to run facial recognition, AI object detection, realtime video chats, ...), Smooth zooming (Reanimated), Fast pause and resume, HDR & Night modes, Custom C++/GPU accelerated video pipeline (OpenGL).

dev-conf-replay

This repository contains information about various IT seminars and developer conferences in South Korea, allowing users to watch replays of past events. It covers a wide range of topics such as AI, big data, cloud, infrastructure, devops, blockchain, mobility, games, security, mobile development, frontend, programming languages, open source, education, and community events. Users can explore upcoming and past events, view related YouTube channels, and access additional resources like free programming ebooks and data structures and algorithms tutorials.

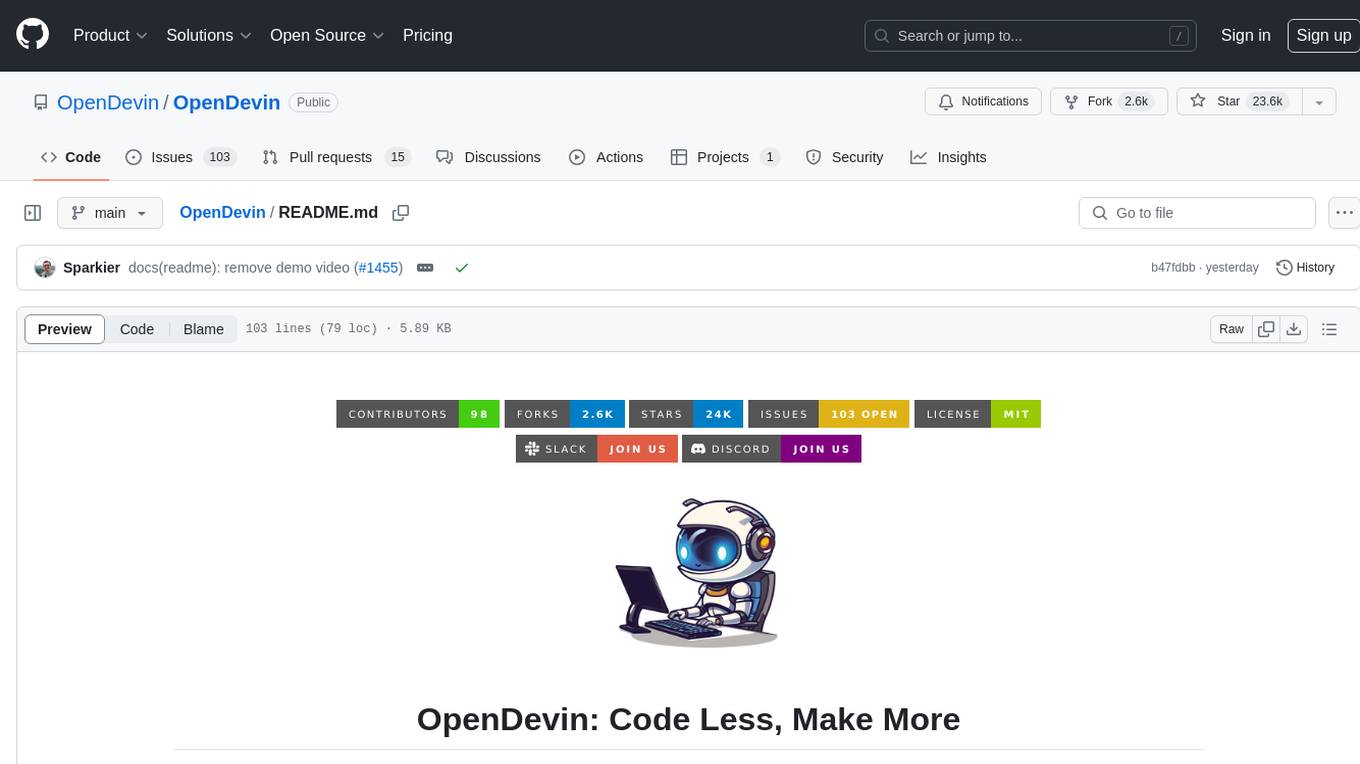

OpenDevin

OpenDevin is an open-source project aiming to replicate Devin, an autonomous AI software engineer capable of executing complex engineering tasks and collaborating actively with users on software development projects. The project aspires to enhance and innovate upon Devin through the power of the open-source community. Users can contribute to the project by developing core functionalities, frontend interface, or sandboxing solutions, participating in research and evaluation of LLMs in software engineering, and providing feedback and testing on the OpenDevin toolset.

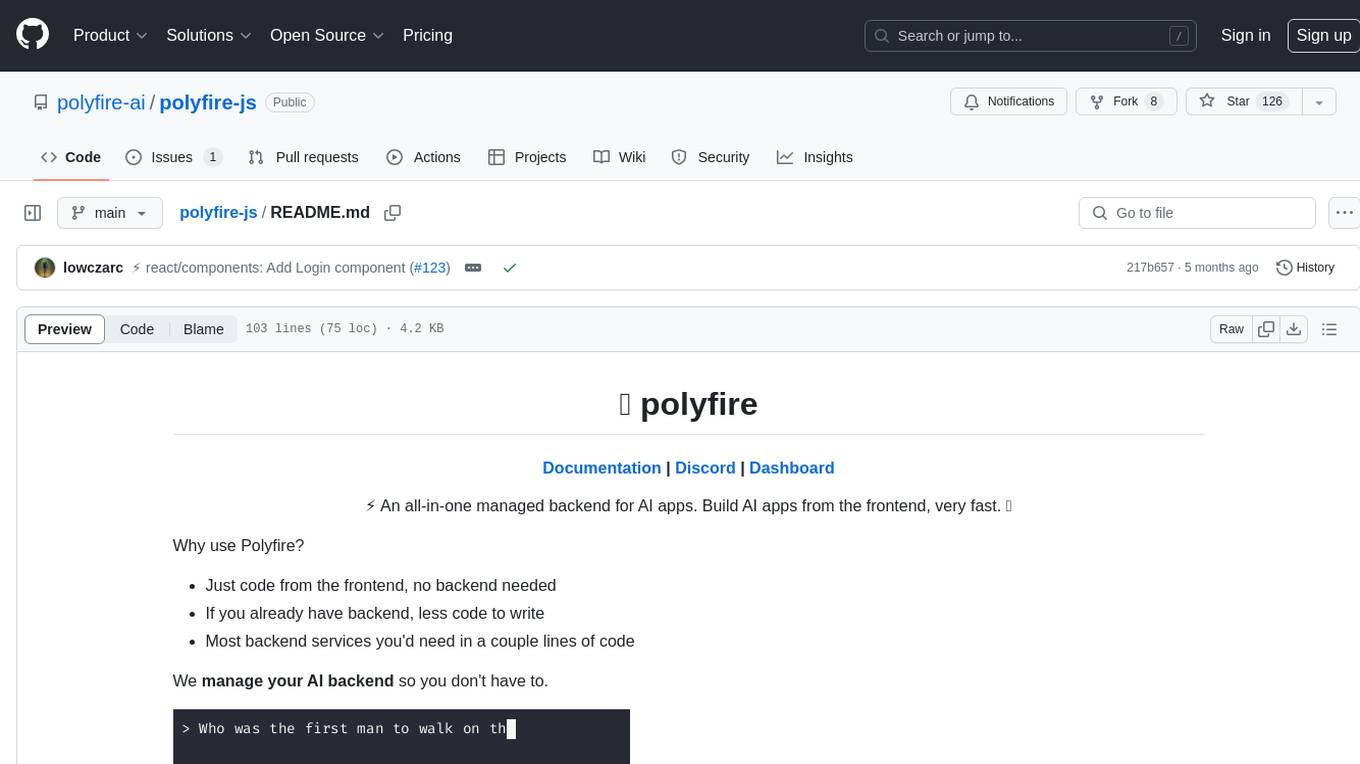

polyfire-js

Polyfire is an all-in-one managed backend for AI apps that allows users to build AI applications directly from the frontend, eliminating the need for a separate backend. It simplifies the process by providing most backend services in just a few lines of code. With Polyfire, users can easily create chatbots, transcribe audio files, generate simple text, manage long-term memory, and generate images. The tool also offers starter guides and tutorials to help users get started quickly and efficiently.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

aimeos-laravel

Aimeos Laravel is a professional, full-featured, and ultra-fast Laravel ecommerce package that can be easily integrated into existing Laravel applications. It offers a wide range of features including multi-vendor, multi-channel, and multi-warehouse support, fast performance, support for various product types, subscriptions with recurring payments, multiple payment gateways, full RTL support, flexible pricing options, admin backend, REST and GraphQL APIs, modular structure, SEO optimization, multi-language support, AI-based text translation, mobile optimization, and high-quality source code. The package is highly configurable and extensible, making it suitable for e-commerce SaaS solutions, marketplaces, and online shops with millions of vendors.

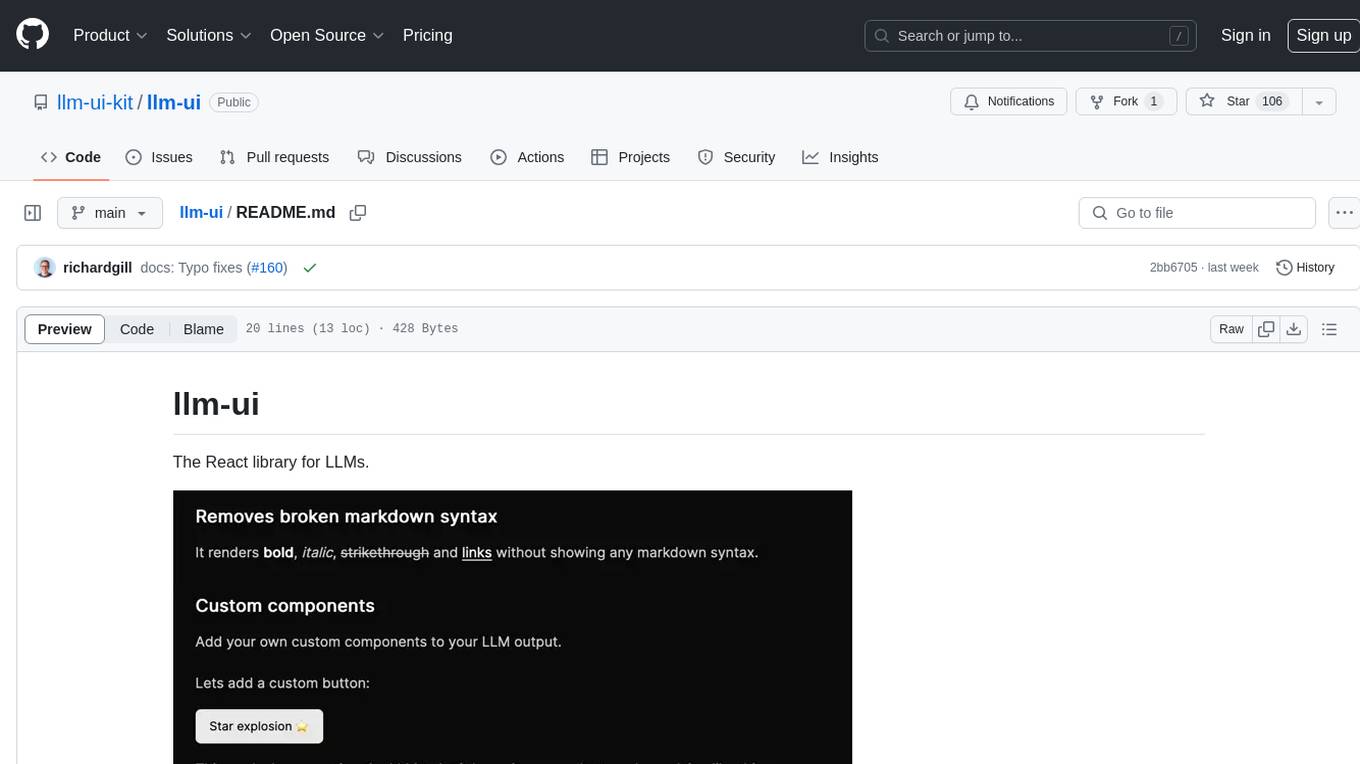

llm-ui

llm-ui is a React library designed for LLMs, providing features such as removing broken markdown syntax, adding custom components to LLM output, smoothing out pauses in streamed output, rendering at native frame rate, supporting code blocks for every language with Shiki, and being headless to allow for custom styles. The library aims to enhance the user experience and flexibility when working with LLMs.