Open-dLLM

The most open diffusion language model for code generation — releasing pretraining, evaluation, inference, and checkpoints.

Stars: 237

Open-dLLM is the most open release of a diffusion-based large language model, providing pretraining, evaluation, inference, and checkpoints. It introduces Open-dCoder, the code-generation variant of Open-dLLM. The repo offers a complete stack for diffusion LLMs, letting users go from raw data to training, checkpoints, evaluation, and inference in one place. It includes a pretraining pipeline with open datasets, inference scripts for sampling and generation, an evaluation suite covering code-generation and code-infilling benchmarks, weights and checkpoints on Hugging Face, and transparent configs for full reproducibility.

README:

👉 TL;DR: Open-dLLM is the most open release of a diffusion-based large language model to date, including pretraining, evaluation, inference, and checkpoints.

This repo introduces Open-dCoder, the code-generation variant of Open-dLLM.

💻 Code | 📖 Blog | 🤗 Model

QuickSort generation using Open-dCoder (0.5B)

- 🏋️ Pretraining pipeline + open datasets

- ⚡ Inference scripts — easy sampling & generation

- 📊 Evaluation suite — HumanEval, MBPP, Infilling (lm-eval-harness + custom metrics)

- 📦 Weights + checkpoints on Hugging Face

- 🤝 Transparent configs for full reproducibility

Most diffusion LLM repos (e.g., LLaDA, Dream) only release inference scripts + weights, which limits reproducibility.

Open-dLLM is the first to open-source the entire stack for diffusion LLMs.

👉 With Open-dLLM, you can go from raw data → training → checkpoints → evaluation → inference, all in one repo.

| Project | Data | Training Code | Inference | Evaluation | Weights |
|---|---|---|---|---|---|
| Open-dLLM / Open-dCoder (ours) | ✅ | ✅ | ✅ | ✅ | ✅ |
| LLaDA | ❌ | ❌ | ✅ | ✅ | ✅ |
| Dream | ❌ | ❌ | ✅ | ✅ | ✅ |
| Gemini-Diffusion | ❌ | ❌ | ❌ | ❌ | ❌ (API only) |
| Seed Diffusion | ❌ | ❌ | ❌ | ❌ | ❌ (API only) |
| Mercury | ❌ | ❌ | ❌ | ❌ | ❌ (API only) |

✅ = fully available · ❌ = not provided

We use micromamba for environment management (feel free to adapt to conda):

micromamba install -c nvidia/label/cuda-12.3.0 cuda-toolkit -y
pip install ninja

# install torch 2.5.0 built for CUDA 12.1 (cu121)
pip install torch==2.5.0 --index-url https://download.pytorch.org/whl/cu121

pip install "flash-attn==2.7.4.post1" \
  --extra-index-url https://github.com/Dao-AILab/flash-attention/releases/download

# quote version ranges so the shell does not treat ">" as an output redirect
pip install --upgrade --no-cache-dir \
  tensordict torchdata byte-flux "triton>=3.1.0" \
  transformers==4.54.1 accelerate datasets peft hf-transfer \
  codetiming hydra-core pandas "pyarrow>=15.0.0" pylatexenc \
  wandb ninja liger-kernel==0.5.8 \
  pytest yapf py-spy pyext pre-commit ruff packaging

pip install -e .

Minimal inference example:

from transformers import AutoTokenizer

from veomni.models.transformers.qwen2.modeling_qwen2 import Qwen2ForCausalLM

from veomni.models.transformers.qwen2.generation_utils import MDMGenerationConfig

import torch

model_id = "fredzzp/open-dcoder-0.5B"

device = "cuda" if torch.cuda.is_available() else "cpu"

# Load tokenizer + model

tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)

model = Qwen2ForCausalLM.from_pretrained(
    model_id, torch_dtype=torch.bfloat16, trust_remote_code=True
).to(device).eval()

# Prompt

prompt = "Write a quick sort algorithm in python."

input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(device)

# Generation config

gen_cfg = MDMGenerationConfig(max_new_tokens=128, steps=200, temperature=0.7)

with torch.no_grad():
    outputs = model.diffusion_generate(inputs=input_ids, generation_config=gen_cfg)

print(tokenizer.decode(outputs.sequences[0], skip_special_tokens=True))

👉 For full logging, history tracking, and file output:

python sample.py

We release a fully open-source evaluation suite for diffusion-based LLMs (dLLMs), covering both standard code-generation tasks and code-infilling tasks.

Benchmarks include: HumanEval / HumanEval+, MBPP / MBPP+, HumanEval-Infill, SantaCoder-FIM.

| Method | HumanEval Pass@1 | HumanEval Pass@10 | HumanEval+ Pass@1 | HumanEval+ Pass@10 | MBPP Pass@1 | MBPP Pass@10 | MBPP+ Pass@1 | MBPP+ Pass@10 |
|---|---|---|---|---|---|---|---|---|
| LLaDA (8B) | 35.4 | 50.0 | 30.5 | 43.3 | 38.8 | 53.4 | 52.6 | 69.1 |
| Dream (7B) | 56.7 | 59.2 | 50.0 | 53.7 | 55.4 | 56.2 | 71.5 | 72.5 |
| Mask DFM (1.3B) | 9.1 | 17.6 | 7.9 | 13.4 | 6.2 | 25.0 | – | – |
| Edit Flow (1.3B) | 12.8 | 24.3 | 10.4 | 20.7 | 10.0 | 36.4 | – | – |
| Open-dCoder (0.5B, Ours) | 20.8 | 38.4 | 17.6 | 35.2 | 16.7 | 38.4 | 23.9 | 53.6 |

Despite having only 0.5B parameters, Open-dCoder outperforms the larger Mask DFM (1.3B) and Edit Flow (1.3B) on every reported metric, while still trailing LLaDA (8B) and Dream (7B).
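Pass@k in the table above is the probability that at least one of k sampled completions passes a problem's unit tests. As a sketch of how such numbers are usually computed, the snippet below implements the unbiased estimator introduced with HumanEval (Chen et al., 2021), assuming n samples are drawn per problem and c of them pass. The per-problem counts are hypothetical, and this README does not state which n the evaluation scripts actually use.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them pass."""
    if k > n:
        raise ValueError("k cannot exceed the number of samples n")
    # Fewer than k failing samples: every size-k subset contains a passing one.
    if n - c < k:
        return 1.0
    # 1 - P(all k chosen samples fail) = 1 - C(n - c, k) / C(n, k)
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Mean pass@k over problems, given (n, c) per problem, as a percentage."""
    scores = [pass_at_k(n, c, k) for n, c in results]
    return 100.0 * sum(scores) / len(scores)


# Hypothetical counts: 10 samples per problem, with 0, 3, and 10 passing.
toy_results = [(10, 0), (10, 3), (10, 10)]
print(round(benchmark_pass_at_k(toy_results, k=1), 1))   # → 43.3
print(round(benchmark_pass_at_k(toy_results, k=10), 1))  # → 66.7
```

With k=1 the estimator reduces to the fraction c/n of passing samples; larger k rewards a model that solves a problem in at least some of its samples, which is why Pass@10 is always at least Pass@1 in the table.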

| Method | HumanEval Infill Pass@1 | SantaCoder Exact Match |
|---|---|---|
| LLaDA-8B | 48.3 | 35.1 |
| Dream-7B | 39.4 | 40.7 |
| DiffuCoder-7B | 54.8 | 38.8 |
| Dream-Coder-7B | 55.3 | 40.0 |
| Open-dCoder (0.5B, Ours) | 32.5 | 29.6 |
| Open-dCoder (0.5B, Ours), Oracle Length | 77.4 | 56.4 |

These results follow the average fixed-length evaluation setting of DreamOn; the Oracle Length row instead gives the model the ground-truth length of the missing span.
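The two Open-dCoder infilling rows differ in how many mask slots the model gets for the missing span. A minimal sketch of that difference, under loudly stated assumptions: "average fixed length" is taken to mean one shared budget equal to the benchmark's mean ground-truth span length, `MASK` is a hypothetical placeholder for the tokenizer's mask token, tokens are whitespace-split words, and exact match compares whitespace-stripped strings. DreamOn's protocol and this repo's scoring may differ in these details.

```python
from statistics import mean

MASK = "<mask>"  # hypothetical placeholder; a real run would use the tokenizer's mask token


def build_infill_input(prefix: list[str], suffix: list[str], n_masks: int) -> list[str]:
    """Prefix, then n_masks mask slots for the model to fill, then the suffix."""
    return prefix + [MASK] * n_masks + suffix


def average_fixed_budget(middles: list[list[str]]) -> int:
    """Assumed 'average fixed length': one mask budget shared by every example,
    equal to the mean ground-truth middle length, rounded to an integer."""
    return round(mean(len(m) for m in middles))


def exact_match(prediction: str, reference: str) -> bool:
    """Assumed normalization: compare after stripping surrounding whitespace."""
    return prediction.strip() == reference.strip()


# Toy examples; "tokens" are whitespace-split words for readability.
examples = [
    {"prefix": ["def", "add(a, b):"], "middle": ["return", "a", "+", "b"], "suffix": []},
    {"prefix": ["def", "neg(a):"], "middle": ["return", "-a"], "suffix": []},
]

budget = average_fixed_budget([ex["middle"] for ex in examples])
for ex in examples:
    fixed = build_infill_input(ex["prefix"], ex["suffix"], budget)
    oracle = build_infill_input(ex["prefix"], ex["suffix"], len(ex["middle"]))
    # Mask slots under each setting, then the true middle length.
    print(fixed.count(MASK), oracle.count(MASK), len(ex["middle"]))
# Prints "3 4 4" and then "3 2 2".
```

Under the fixed budget, the first toy example gets one slot too few and the second one too many, so the model must compress or pad its answer; the oracle setting removes that mismatch, which is one plausible reason for the large gap between the last two rows of the table.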

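Install evaluation packages:

pip install -e ./lm-evaluation-harness -e ./human-eval-infilling

Run the code-completion benchmarks (HumanEval, MBPP) from the repository root:

cd eval/eval_completion
bash run_eval.sh

Run the infilling benchmarks (HumanEval-Infill, SantaCoder-FIM) from the repository root:

cd eval/eval_infill
bash run_eval.sh

Pretraining recipe:

- Data: FineCode, a concise, high-quality code corpus hosted on Hugging Face.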

- Initialization: Following Dream, we continue pretraining from Qwen2.5-Coder, adapting it to the diffusion framework.

- Loss: Masked Diffusion Model (MDM) objective: masking ratios are sampled uniformly from [0, 1], and the masked tokens are reconstructed with a cross-entropy loss.
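The MDM loss described in the list above can be sketched in plain Python. This is a toy illustration under stated assumptions, not the repo's VeOmni training code: the masking ratio t is drawn once per sequence, each token is masked independently with probability t, the cross-entropy is averaged over masked positions only, and `MASK_ID` and `uniform_model` are hypothetical stand-ins for the real mask token and network. Many MDM implementations (LLaDA, for example) also weight the loss by 1/t; whether Open-dLLM does so is not stated here, so the sketch leaves it out.

```python
import math
import random

MASK_ID = -1  # hypothetical mask token id for this toy example


def mask_sequence(tokens: list[int], t: float, rng: random.Random) -> tuple[list[int], list[bool]]:
    """Replace each token with MASK_ID independently with probability t."""
    noisy, is_masked = [], []
    for tok in tokens:
        hit = rng.random() < t
        noisy.append(MASK_ID if hit else tok)
        is_masked.append(hit)
    return noisy, is_masked


def mdm_loss(tokens, predict_probs, rng):
    """One-sample MDM loss: draw t ~ U[0, 1), mask, then cross-entropy on masked slots.

    predict_probs(noisy) returns, for every position, a dict mapping
    token id -> probability (a stand-in for the model's softmax output).
    """
    t = rng.random()  # masking ratio for this sequence
    noisy, is_masked = mask_sequence(tokens, t, rng)
    probs = predict_probs(noisy)
    # Only masked positions are scored: the model must reconstruct them.
    nll = [-math.log(probs[i][tok]) for i, tok in enumerate(tokens) if is_masked[i]]
    return sum(nll) / len(nll) if nll else 0.0  # nothing masked, nothing to learn


VOCAB = [0, 1, 2, 3]


def uniform_model(noisy: list[int]) -> list[dict[int, float]]:
    """Hypothetical stand-in network that predicts a uniform distribution everywhere."""
    return [{v: 1.0 / len(VOCAB) for v in VOCAB} for _ in noisy]


rng = random.Random(0)
losses = [mdm_loss([0, 1, 2, 3, 2, 1], uniform_model, rng) for _ in range(100)]
```

With the uniform predictor every masked token costs log(4) ≈ 1.386 nats, so each draw yields either that value or 0.0 when no token happened to be masked; a trained model lowers the loss by putting probability mass on the original tokens.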

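Download the FineCode pretraining data:

python3 scripts/download_hf_data.py --repo_id fredzzp/fine_code --local_dir ./data

Launch pretraining (defaults: 1 node, 4 GPUs per node):

export TOKENIZERS_PARALLELISM=false
NNODES=${NNODES:=1}
NPROC_PER_NODE=4
NODE_RANK=${NODE_RANK:=0}
MASTER_ADDR=${MASTER_ADDR:=0.0.0.0}
MASTER_PORT=${MASTER_PORT:=12345}

# With these defaults one forward pass covers 1 node x 4 GPUs x 16 sequences = 64;
# assuming global batch = micro batch x world size x accumulation steps,
# a global batch of 512 implies 8 gradient-accumulation steps.
torchrun --nnodes=$NNODES --nproc-per-node $NPROC_PER_NODE --node-rank $NODE_RANK \
  --master-addr=$MASTER_ADDR --master-port=$MASTER_PORT tasks/train_torch.py \
  configs/pretrain/qwen2_5_coder_500M.yaml \
  --data.train_path=data/data \
  --train.ckpt_manager=dcp \
  --train.micro_batch_size=16 \
  --train.global_batch_size=512 \
  --train.output_dir=logs/Qwen2.5-Coder-0.5B_mdm \
  --train.save_steps=10000

Upload a Hugging Face-format checkpoint (here, the one saved at step 370,000) to the Hub:

from huggingface_hub import HfApi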

REPO_ID = "fredzzp/open-dcoder-0.5B"

LOCAL_DIR = "logs/Qwen2.5-Coder-0.5B_mdm/checkpoints/global_step_370000/hf_ckpt"

api = HfApi()

api.create_repo(repo_id=REPO_ID, repo_type="model", exist_ok=True)

api.upload_folder(repo_id=REPO_ID, repo_type="model", folder_path=LOCAL_DIR)

This project builds on incredible prior work:

- Frameworks & Tooling: VeOmni, lm-eval-harness

- Open-source dLLMs: LLaDA, Dream

- Pioneering dLLMs: Gemini-Diffusion, Seed Diffusion, Mercury

- Foundational research: MD4, MDLM, DPLM

We stand on the shoulders of these projects, and hope Open-dLLM contributes back to the diffusion LLM community.

If you use Open-dLLM or Open-dCoder in your research, please cite us:

@misc{opendllm2025,
  title        = {Open-dLLM: Open Diffusion Large Language Models},
  author       = {Fred Zhangzhi Peng and Shuibai Zhang and Alex Tong and others},
  year         = {2025},
  howpublished = {\url{https://github.com/pengzhangzhi/Open-dLLM}},
  note         = {Blog: \url{https://oval-shell-31c.notion.site/Open-Diffusion-Large-Language-Model-25e03bf6136480b7a4ebe3d53be9f68a?pvs=74},
                  Model: \url{https://huggingface.co/fredzzp/open-dcoder-0.5B}}
}

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Open-dLLM

Similar Open Source Tools

Open-dLLM

Open-dLLM is the most open release of a diffusion-based large language model, providing pretraining, evaluation, inference, and checkpoints. It introduces Open-dCoder, the code-generation variant of Open-dLLM. The repo offers a complete stack for diffusion LLMs, enabling users to go from raw data to training, checkpoints, evaluation, and inference in one place. It includes pretraining pipeline with open datasets, inference scripts for easy sampling and generation, evaluation suite with various metrics, weights and checkpoints on Hugging Face, and transparent configs for full reproducibility.

HaE

HaE is a framework project in the field of network security (data security) that combines artificial intelligence (AI) large models to achieve highlighting and information extraction of HTTP messages (including WebSocket). It aims to reduce testing time, focus on valuable and meaningful messages, and improve vulnerability discovery efficiency. The project provides a clear and visual interface design, simple interface interaction, and centralized data panel for querying and extracting information. It also features built-in color upgrade algorithm, one-click export/import of data, and integration of AI large models API for optimized data processing.

awesome-ai-efficiency

Awesome AI Efficiency is a curated list of resources dedicated to enhancing efficiency in AI systems. The repository covers various topics essential for optimizing AI models and processes, aiming to make AI faster, cheaper, smaller, and greener. It includes topics like quantization, pruning, caching, distillation, factorization, compilation, parameter-efficient fine-tuning, speculative decoding, hardware optimization, training techniques, inference optimization, sustainability strategies, and scalability approaches.

agentica

Agentica is a human-centric framework for building large language model agents. It provides functionalities for planning, memory management, tool usage, and supports features like reflection, planning and execution, RAG, multi-agent, multi-role, and workflow. The tool allows users to quickly code and orchestrate agents, customize prompts, and make API calls to various services. It supports API calls to OpenAI, Azure, Deepseek, Moonshot, Claude, Ollama, and Together. Agentica aims to simplify the process of building AI agents by providing a user-friendly interface and a range of functionalities for agent development.

FaceAISDK_Android

FaceAI SDK is an on-device offline face detection, recognition, liveness detection, anti-spoofing, and 1:N/M:N face search SDK. It enables quick integration to achieve on-device face recognition, face search, and other functions. The SDK performs all functions offline on the device without the need for internet connection, ensuring privacy and security. It supports various actions for liveness detection, custom camera management, and clear imaging even in challenging lighting conditions.

ChuanhuChatGPT

Chuanhu Chat is a user-friendly web graphical interface that provides various additional features for ChatGPT and other language models. It supports GPT-4, file-based question answering, local deployment of language models, online search, agent assistant, and fine-tuning. The tool offers a range of functionalities including auto-solving questions, online searching with network support, knowledge base for quick reading, local deployment of language models, GPT 3.5 fine-tuning, and custom model integration. It also features system prompts for effective role-playing, basic conversation capabilities with options to regenerate or delete dialogues, conversation history management with auto-saving and search functionalities, and a visually appealing user experience with themes, dark mode, LaTeX rendering, and PWA application support.

skpro

skpro is a library for supervised probabilistic prediction in python. It provides `scikit-learn`-like, `scikit-base` compatible interfaces to: * tabular **supervised regressors for probabilistic prediction** \- interval, quantile and distribution predictions * tabular **probabilistic time-to-event and survival prediction** \- instance-individual survival distributions * **metrics to evaluate probabilistic predictions** , e.g., pinball loss, empirical coverage, CRPS, survival losses * **reductions** to turn `scikit-learn` regressors into probabilistic `skpro` regressors, such as bootstrap or conformal * building **pipelines and composite models** , including tuning via probabilistic performance metrics * symbolic **probability distributions** with value domain of `pandas.DataFrame`-s and `pandas`-like interface

Nocode-Wep

Nocode/WEP is a forward-looking office visualization platform that includes modules for document building, web application creation, presentation design, and AI capabilities for office scenarios. It supports features such as configuring bullet comments, global article comments, multimedia content, custom drawing boards, flowchart editor, form designer, keyword annotations, article statistics, custom appreciation settings, JSON import/export, content block copying, and unlimited hierarchical directories. The platform is compatible with major browsers and aims to deliver content value, iterate products, share technology, and promote open-source collaboration.

sktime

sktime is a Python library for time series analysis that provides a unified interface for various time series learning tasks such as classification, regression, clustering, annotation, and forecasting. It offers time series algorithms and tools compatible with scikit-learn for building, tuning, and validating time series models. sktime aims to enhance the interoperability and usability of the time series analysis ecosystem by empowering users to apply algorithms across different tasks and providing interfaces to related libraries like scikit-learn, statsmodels, tsfresh, PyOD, and fbprophet.

Video-ChatGPT

Video-ChatGPT is a video conversation model that aims to generate meaningful conversations about videos by combining large language models with a pretrained visual encoder adapted for spatiotemporal video representation. It introduces high-quality video-instruction pairs, a quantitative evaluation framework for video conversation models, and a unique multimodal capability for video understanding and language generation. The tool is designed to excel in tasks related to video reasoning, creativity, spatial and temporal understanding, and action recognition.

actor-core

Actor-core is a lightweight and flexible library for building actor-based concurrent applications in Java. It provides a simple API for creating and managing actors, as well as handling message passing between actors. With actor-core, developers can easily implement scalable and fault-tolerant systems using the actor model.

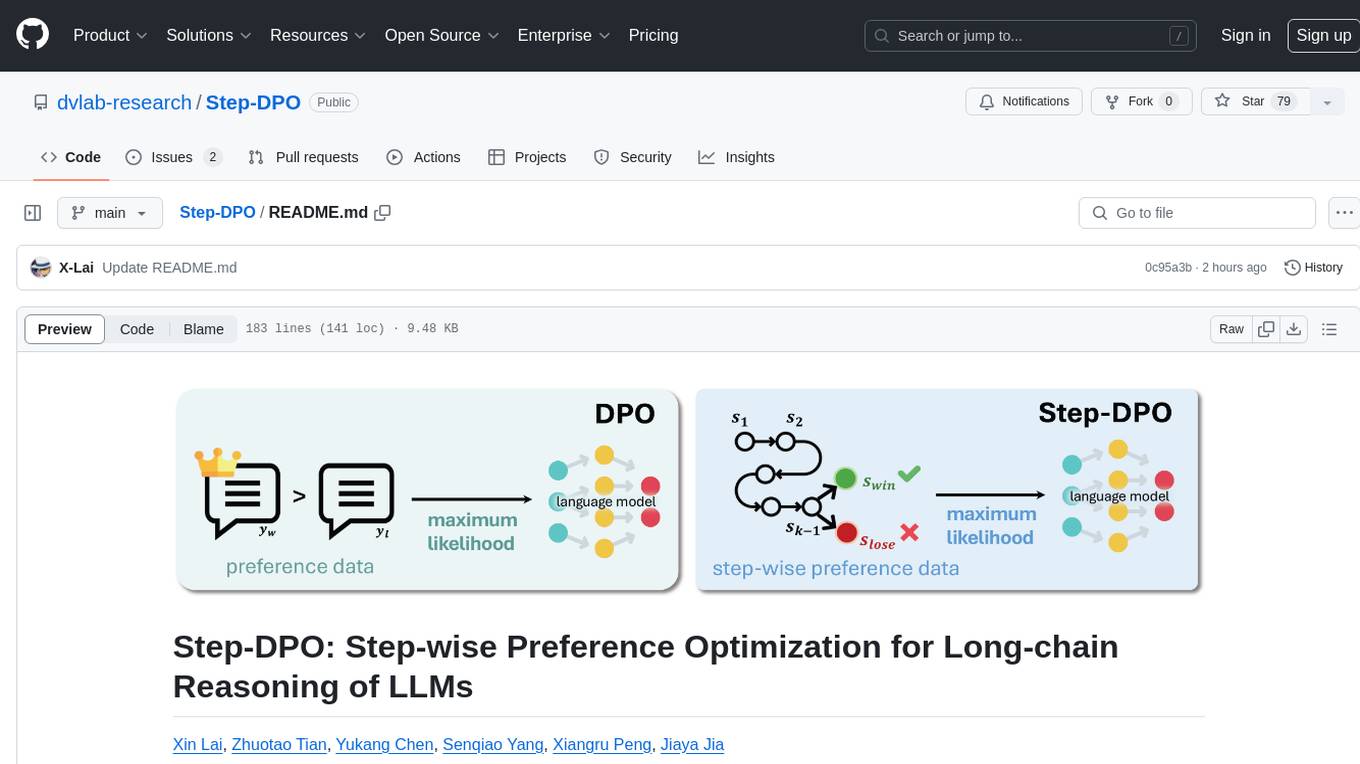

Step-DPO

Step-DPO is a method for enhancing long-chain reasoning ability of LLMs with a data construction pipeline creating a high-quality dataset. It significantly improves performance on math and GSM8K tasks with minimal data and training steps. The tool fine-tunes pre-trained models like Qwen2-7B-Instruct with Step-DPO, achieving superior results compared to other models. It provides scripts for training, evaluation, and deployment, along with examples and acknowledgements.

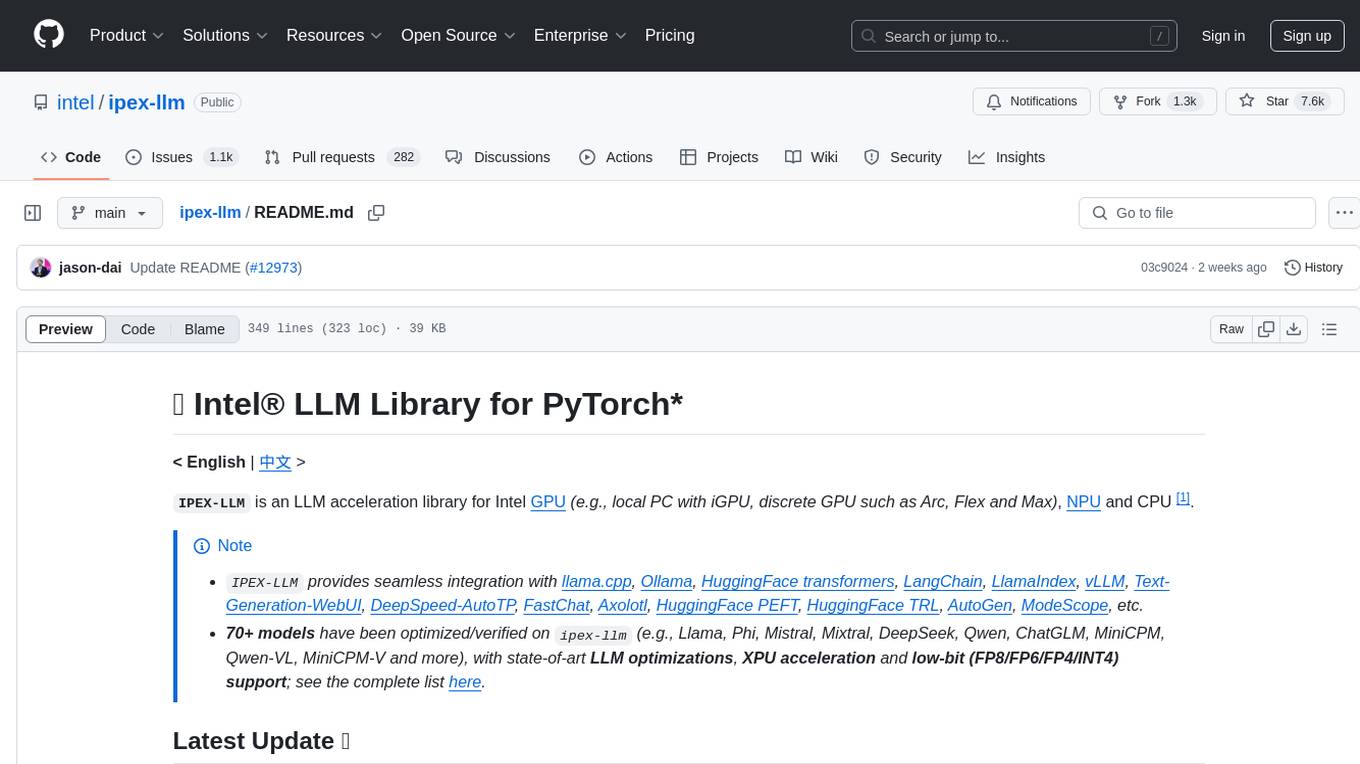

ipex-llm

The `ipex-llm` repository is an LLM acceleration library designed for Intel GPU, NPU, and CPU. It provides seamless integration with various models and tools like llama.cpp, Ollama, HuggingFace transformers, LangChain, LlamaIndex, vLLM, Text-Generation-WebUI, DeepSpeed-AutoTP, FastChat, Axolotl, and more. The library offers optimizations for over 70 models, XPU acceleration, and support for low-bit (FP8/FP6/FP4/INT4) operations. Users can run different models on Intel GPUs, NPU, and CPUs with support for various features like finetuning, inference, serving, and benchmarking.

motia

Motia is an AI agent framework designed for software engineers to create, test, and deploy production-ready AI agents quickly. It provides a code-first approach, allowing developers to write agent logic in familiar languages and visualize execution in real-time. With Motia, developers can focus on business logic rather than infrastructure, offering zero infrastructure headaches, multi-language support, composable steps, built-in observability, instant APIs, and full control over AI logic. Ideal for building sophisticated agents and intelligent automations, Motia's event-driven architecture and modular steps enable the creation of GenAI-powered workflows, decision-making systems, and data processing pipelines.

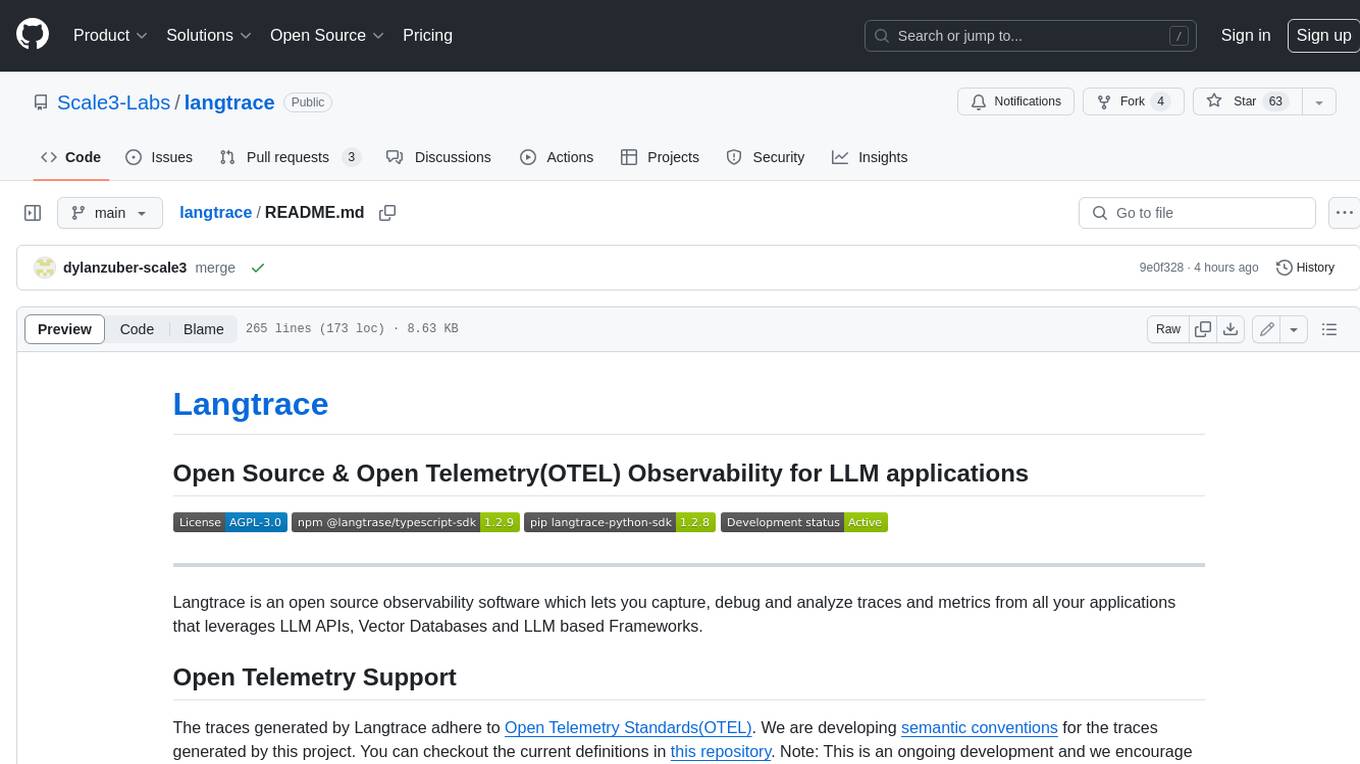

langtrace

Langtrace is an open source observability software that lets you capture, debug, and analyze traces and metrics from all your applications that leverage LLM APIs, Vector Databases, and LLM-based Frameworks. It supports Open Telemetry Standards (OTEL), and the traces generated adhere to these standards. Langtrace offers both a managed SaaS version (Langtrace Cloud) and a self-hosted option. The SDKs for both Typescript/Javascript and Python are available, making it easy to integrate Langtrace into your applications. Langtrace automatically captures traces from various vendors, including OpenAI, Anthropic, Azure OpenAI, Langchain, LlamaIndex, Pinecone, and ChromaDB.

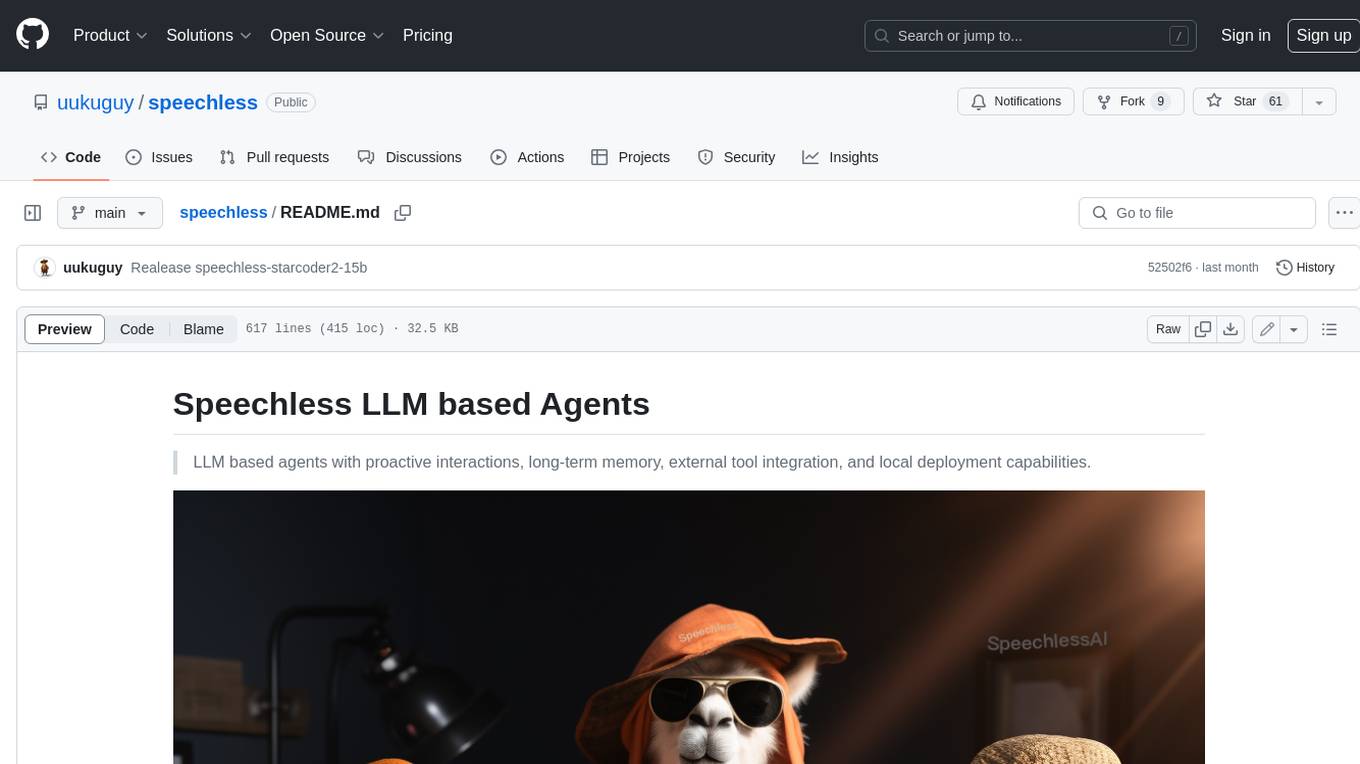

speechless

Speechless.AI is committed to integrating the superior language processing and deep reasoning capabilities of large language models into practical business applications. By enhancing the model's language understanding, knowledge accumulation, and text creation abilities, and introducing long-term memory, external tool integration, and local deployment, our aim is to establish an intelligent collaborative partner that can independently interact, continuously evolve, and closely align with various business scenarios.

For similar tasks

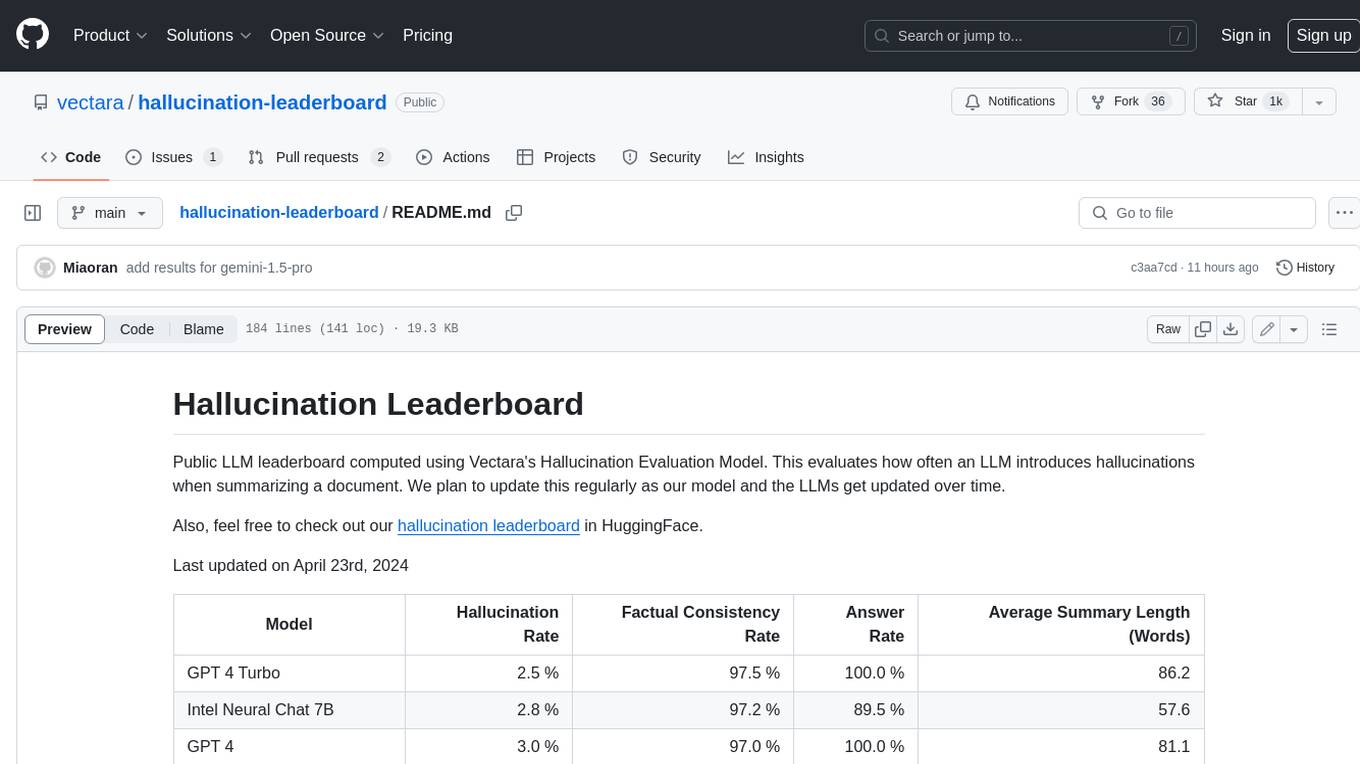

hallucination-leaderboard

This leaderboard evaluates the hallucination rate of various Large Language Models (LLMs) when summarizing documents. It uses a model trained by Vectara to detect hallucinations in LLM outputs. The leaderboard includes models from OpenAI, Anthropic, Google, Microsoft, Amazon, and others. The evaluation is based on 831 documents that were summarized by all the models. The leaderboard shows the hallucination rate, factual consistency rate, answer rate, and average summary length for each model.

h2o-llmstudio

H2O LLM Studio is a framework and no-code GUI designed for fine-tuning state-of-the-art large language models (LLMs). With H2O LLM Studio, you can easily and effectively fine-tune LLMs without the need for any coding experience. The GUI is specially designed for large language models, and you can finetune any LLM using a large variety of hyperparameters. You can also use recent finetuning techniques such as Low-Rank Adaptation (LoRA) and 8-bit model training with a low memory footprint. Additionally, you can use Reinforcement Learning (RL) to finetune your model (experimental), use advanced evaluation metrics to judge generated answers by the model, track and compare your model performance visually, and easily export your model to the Hugging Face Hub and share it with the community.

llm-jp-eval

LLM-jp-eval is a tool designed to automatically evaluate Japanese large language models across multiple datasets. It provides functionalities such as converting existing Japanese evaluation data to text generation task evaluation datasets, executing evaluations of large language models across multiple datasets, and generating instruction data (jaster) in the format of evaluation data prompts. Users can manage the evaluation settings through a config file and use Hydra to load them. The tool supports saving evaluation results and logs using wandb. Users can add new evaluation datasets by following specific steps and guidelines provided in the tool's documentation. It is important to note that using jaster for instruction tuning can lead to artificially high evaluation scores, so caution is advised when interpreting the results.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

bocoel

BoCoEL is a tool that leverages Bayesian Optimization to efficiently evaluate large language models by selecting a subset of the corpus for evaluation. It encodes individual entries into embeddings, uses Bayesian optimization to select queries, retrieves from the corpus, and provides easily managed evaluations. The tool aims to reduce computation costs during evaluation with a dynamic budget, supporting models like GPT2, Pythia, and LLAMA through integration with Hugging Face transformers and datasets. BoCoEL offers a modular design and efficient representation of the corpus to enhance evaluation quality.

cladder

CLadder is a repository containing the CLadder dataset for evaluating causal reasoning in language models. The dataset consists of yes/no questions in natural language that require statistical and causal inference to answer. It includes fields such as question_id, given_info, question, answer, reasoning, and metadata like query_type and rung. The dataset also provides prompts for evaluating language models and example questions with associated reasoning steps. Additionally, it offers dataset statistics, data variants, and code setup instructions for using the repository.

uncheatable_eval

Uncheatable Eval is a tool designed to assess the language modeling capabilities of LLMs on real-time, newly generated data from the internet. It aims to provide a reliable evaluation method that is immune to data leaks and cannot be gamed. The tool supports the evaluation of Hugging Face AutoModelForCausalLM models and RWKV models by calculating the sum of negative log probabilities on new texts from various sources such as recent papers on arXiv, new projects on GitHub, news articles, and more. Uncheatable Eval ensures that the evaluation data is not included in the training sets of publicly released models, thus offering a fair assessment of the models' performance.

llms

The 'llms' repository is a comprehensive guide on Large Language Models (LLMs), covering topics such as language modeling, applications of LLMs, statistical language modeling, neural language models, conditional language models, evaluation methods, transformer-based language models, practical LLMs like GPT and BERT, prompt engineering, fine-tuning LLMs, retrieval augmented generation, AI agents, and LLMs for computer vision. The repository provides detailed explanations, examples, and tools for working with LLMs.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.