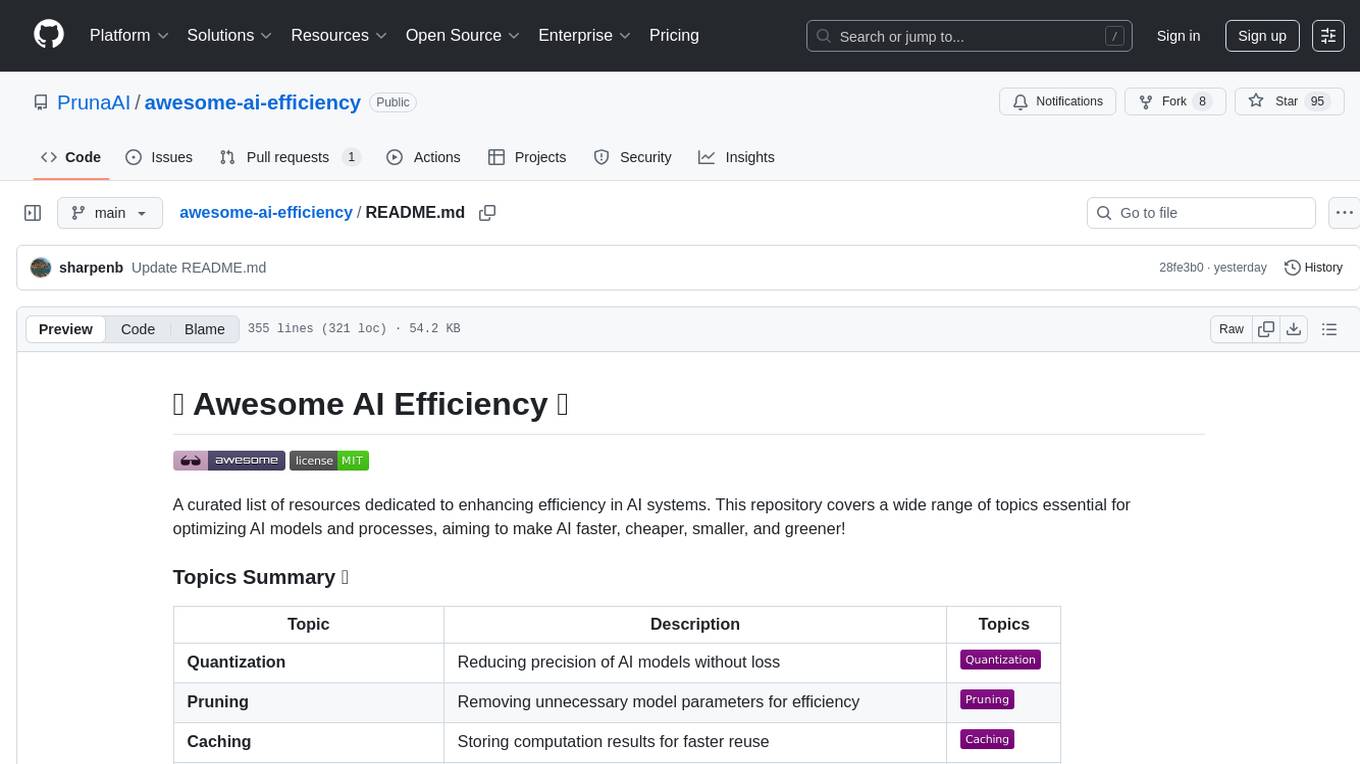

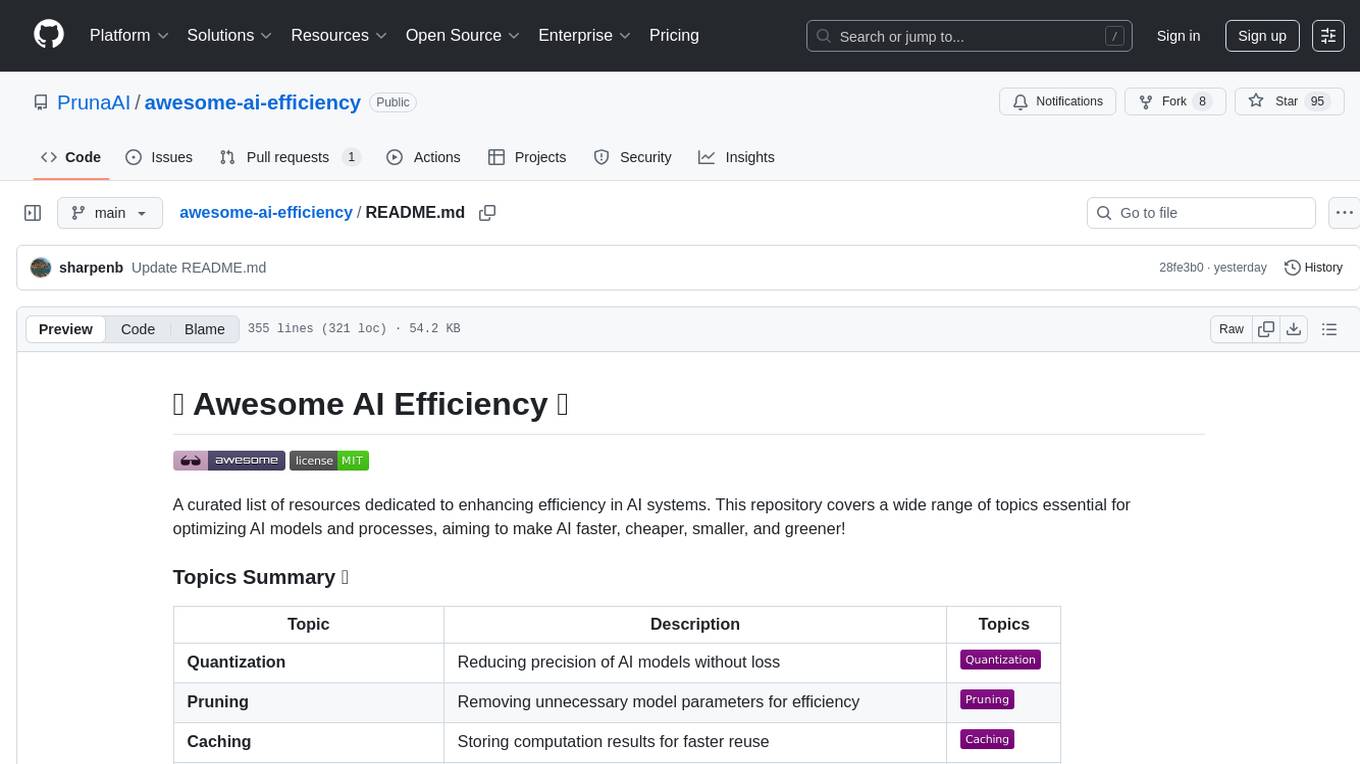

awesome-ai-efficiency

A curated list of materials on AI efficiency

Stars: 115

Awesome AI Efficiency is a curated list of resources dedicated to enhancing efficiency in AI systems. The repository covers various topics essential for optimizing AI models and processes, aiming to make AI faster, cheaper, smaller, and greener. It includes topics like quantization, pruning, caching, distillation, factorization, compilation, parameter-efficient fine-tuning, speculative decoding, hardware optimization, training techniques, inference optimization, sustainability strategies, and scalability approaches.

README:

A curated list of resources dedicated to enhancing efficiency in AI systems. This repository covers a wide range of topics essential for optimizing AI models and processes, aiming to make AI faster, cheaper, smaller, and greener!

If you find this list helpful, give it a ⭐ on GitHub, share it, and contribute by submitting a pull request or issue!

- Facts 📊

- Tools 🛠️

- Articles 📰

- Reports 📈

- Research Articles 📄

- Blogs 📰

- Books 📚

- Lectures 🎓

- People 🧑‍💻

- Organizations 🌍

- Contributing 🤝

- License 📄

- 3-40Wh: Amount of energy consumed for one small to long ChatGPT query (Source, 2025)

- 1L: Estimated amount of water required for 20-100 ChatGPT queries (Source, 2025)

- 2 nuclear plants: Number of nuclear plants that would have to run constantly to generate enough energy if 80M people each generated 5 pages per day (Source, 2025)

- 1 smartphone charge: Amount of energy required to generate a couple of images with AI or to run a few thousand inferences with an LLM (Source, 2024)

- >10s: Time required to generate 1 HD image with Flux on an H100 or to generate 100 tokens with Llama 3 on a T4 (Source and Source, 2024)

- 7-10 smartphone charges: Amount of energy required to generate one video with Wan 2.1 (Source)

- 61,848.0x: Difference between the highest and lowest energy use in energy leaderboard for AI models (Source, 2025).

- 1,300MWh: GPT-3, for example, is estimated to use just under 1,300 megawatt hours (MWh) of electricity; about as much power as consumed annually by 130 US homes (Source, 2024)

- 800M users/week: Number of people using ChatGPT per week in 2025 (Source)

- 1B messages/day: Number of ChatGPT queries per day in 2025 (Source)

- +160%: Expected increase of data center power consumption by 2030 (Source)

- x3.8: Hardware acceleration (GPU/TPU) reduces energy consumption by a factor of 3.8 compared with a CPU for the same task, and also reduces response time by up to 39% (Source)

- x18: The carbon footprint of a task can vary by a factor of 18 depending on the model, framework, and backend used (Source)
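As a rough sanity check, some of the figures in the list above can be combined into per-day and per-query estimates. The sketch below is only back-of-envelope arithmetic: it assumes every one of the 1B daily messages costs between 3 and 40 Wh, which mixes numbers from different sources and is not a claim made by any of them. The function names are illustrative.

```python
# Back-of-envelope arithmetic on figures quoted in the list above.
# Inputs are the list's own numbers; combining them is an assumption
# made here for illustration only.

WH_PER_QUERY_LOW = 3.0            # Wh, small ChatGPT query
WH_PER_QUERY_HIGH = 40.0          # Wh, long ChatGPT query
QUERIES_PER_DAY = 1_000_000_000   # 1B messages/day
GPT3_MWH = 1_300.0                # estimated electricity use of GPT-3
US_HOMES = 130                    # homes consuming the same amount per year


def daily_energy_gwh(wh_per_query: float, queries_per_day: int) -> float:
    """Daily energy in GWh if every query costs `wh_per_query` Wh."""
    return wh_per_query * queries_per_day / 1e9  # Wh -> GWh


def water_ml_per_query(litres: float, queries: int) -> float:
    """Water per query in mL when `litres` are shared by `queries` queries."""
    return litres * 1000 / queries


def mwh_per_home(total_mwh: float, homes: int) -> float:
    """Annual electricity per home implied by the GPT-3 comparison."""
    return total_mwh / homes


if __name__ == "__main__":
    low = daily_energy_gwh(WH_PER_QUERY_LOW, QUERIES_PER_DAY)
    high = daily_energy_gwh(WH_PER_QUERY_HIGH, QUERIES_PER_DAY)
    print(f"Daily energy: {low:.0f}-{high:.0f} GWh")
    print(f"Water per query: {water_ml_per_query(1.0, 100):.0f}-"
          f"{water_ml_per_query(1.0, 20):.0f} mL")
    print(f"Per home: {mwh_per_home(GPT3_MWH, US_HOMES):.0f} MWh/year")
```

Under these assumptions the numbers work out to 3-40 GWh per day, 10-50 mL of water per query, and about 10 MWh per US home per year.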

- ❤️ Pruna ❤️: A package to make AI models faster, smaller, cheaper, and greener by combining compression methods (incl. quantization, pruning, caching, compilation, distillation...) on various hardware.

- TensorRT: High-performance deep learning inference library for NVIDIA GPUs.

- ONNX: Open Neural Network Exchange format for interoperability among deep learning frameworks.

- Code Carbon: Library to track energy and carbon efficiency of various hardware.

- LLM Perf: A framework for benchmarking the performance of transformer models across different hardware, backends, and optimizations.

- ML.ENERGY Leaderboard: An initiative to benchmark energy efficiency of AI models.

- AI Energy Score: An initiative to establish comparable energy efficiency ratings for AI models, helping the industry make informed decisions about sustainability in AI development.

- Model Optimization Toolkit: TensorFlow toolkit for optimizing machine learning models for deployment and execution.

- Green Coding: LLM service that you can use to prompt most open source models and see the resource usage.

- EcoLogits: A Python library that tracks the energy consumption and environmental footprint of using generative AI models through APIs.

- Perplexity Kernels: GPU kernels by Perplexity.

- Fast Tokenizer: An efficient, optimized tokenizer engine for LLM inference serving.

- WeightWatcher: WeightWatcher (WW) is an open-source diagnostic tool for analyzing Deep Neural Networks (DNN) without needing access to training or even test data.

- Cockpit: A Practical Debugging Tool for Training Deep Neural Networks.

- Electricity Map: A live map showing the origin of the electricity in world regions and their CO2 intensity.

- MLCA: A tool for machine learning life cycle assessment.

- TritonParse: A visualization and analysis tool for Triton IR files, designed to help developers analyze, debug, and understand Triton kernel compilation processes.

- Routing on Random Forests: A framework for training and serving LLMs with random forest-based routers, making it possible to optimize for cost.

- LLMCache: An LLM serving engine extension to reduce time-to-first-token and increase throughput, especially under long-context scenarios.

- ExLlamaV3: An optimized quantization and inference library for running LLMs locally on modern consumer-class GPUs.

- FlashDeBERTa: Flash implementation of DeBERTa disentangled attention mechanism.

- QuACK: An assortment of kernels for GPUs.

- Pi-Quant: An assortment of kernels for CPUs.

- pplx-kernels: An assortment of kernels for GPUs.

- LMCache: An LLM serving engine extension to reduce TTFT and increase throughput, especially under long-context scenarios, by optimizing the KV caches.

- FastWan: A family of video generation models trained via “sparse distillation”.

- GEAK Agent: An LLM-based multi-agent framework that automatically generates functional and efficient GPU kernels.

- Fused Kernel Library: A package that lets non-CUDA programmers define GPU kernel fusions.

- "Energy and AI Observatory" (2025) - IEA

- "AI’s Impacts, how to limit them, and why" (2025) - Better Tech

- "How much energy does ChatGPT use?" (2025) - Epoch AI

- "Data centers et intelligence artificielle : la course au gigantisme" (2025) - Le Monde

- "What's the environmental cost of AI?" (2024) - CO2 AI

- "Shrinking the giants: Paving the way for TinyAI" (2024) - Cell Press

- "DeepSeek might not be such good news for energy after all" (2024) - MIT Technology Review

- "AI already uses as much energy as a small country. It’s only the beginning." (2024) - Vox

- "Quelle contribution du numérique à la décarbonation ?" (2024) - France Stratégie

- "Les promesses de l’IA grevées par un lourd bilan carbone" (2024) - Le Monde

- "How much electricity does AI consume?" (2024) - The Verge

- "How do I track the direct environmental impact of my own inference and training when working with AI?" (2024) - Blog

- "Data center emissions probably 662% higher than big tech claims. Can it keep up the ruse?" (2024) - The Guardian

- "Light bulbs have energy ratings — so why can’t AI chatbots?" (2024) - Nature

- "The Environmental Impacts of AI -- Primer" (2024) - Hugging Face

- "The Climate and Sustainability Implications of Generative AI" (2024) - MIT

- "AI's "eye-watering" use of resources could be a hurdle to achieving climate goals, argue experts" (2023) - dezeen

- "How coders can help save the planet?" (2023) - Blog

- "Reducing the Carbon Footprint of Generative AI" (2023) - Blog

- "The MPG of LLMs: Exploring the Energy Efficiency of Generative AI" (2023) - Blog

- "Ecologie numérique: L’IA durable, entre vœu pieux et opportunité de marché" (2025) - Libération

- "The environmental impact of local text AI" (2025) - Green Spector

- "Misinformation by Omission: The Need for More Environmental Transparency in AI" (2025) - None

- "A General Framework for Frugal AI" (2025) - AFNOR

- "The 2025 AI Index Report" (2025) - Stanford Human-centered Artificial Intelligence

- "Energy and AI" (2025) - International Energy Agency

- "Key challenges for the environmental performance of AI" (2025) - French Ministry

- "Artificial Intelligence and electricity: A system dynamics approach" (2024) - Schneider

- "Notable AI Models" (2025) - Epoch AI

- "Powering Artificial Intelligence" (2024) - Deloitte

- "Google Sustainability Reports" (2024) - Google

- "How much water does AI consume? The public deserves to know" (2023) - OECD

- "Measuring the environmental impacts of artificial intelligence compute and applications" (2022) - OECD

- "Look Ma, No Bubbles! Designing a Low-Latency Megakernel for Llama-1B" (2025) - Hazy Research

- "Our contribution to a global environmental standard for AI" (2025) - Mistral AI

- "AI: It's All About Inference Now" (2025) - ACM Queue

- "ScalarLM vLLM Optimization with Virtual Channels" (2025) - ScalarLM

- "Review of Inference Optimization" (2025) - Aussie AI

- "The Limits of Large Fused Kernels on Nvidia GPUs: Why Real-Time AI Inference Needs More" (2025) - Smallest AI

- "How Much Power does a SOTA Open Video Model Use?" (2025) - Hugging Face

- "Improving Quantized FP4 Weight Quality via Logit Distillation" (2025) - Mobius Labs

- "Introducing NVFP4 for Efficient and Accurate Low-Precision Inference" (2025) - Nvidia

- "The LLM Engineer Almanac" (2025) - Modal

- "Enhance Your Models in 5 Minutes with the Hugging Face Kernel Hub" (2025) - Hugging Face

- "Reduce, Reuse, Recycle: Why Open Source is a Win for Sustainability" (2025) - Hugging Face

- "Mixture of Experts: When Does It Really Deliver Energy Efficiency?" (2025) - Neural Watt

- "Efficient and Portable Mixture-of-Experts Communication" (2025) - Perplexity

- "Optimizing Tokenization for Faster and Efficient LLM Processing" (2025) - Medium

- "Tensor Parallelism with CUDA - Multi-GPU Matrix Multiplication" (2025) - Substack

- "Automating GPU Kernel Generation with DeepSeek-R1 and Inference Time Scaling" (2025) - Nvidia Developer

- "AI CUDA Engineer" (2025) - Sakana AI

- "The ML/AI Engineer's starter guide to GPU Programming" (2025) - Neural Bits

- "Understanding Quantization for LLMs" (2024) - Medium

- "Don't Merge Your LoRA Adapter Into a 4-bit LLM" (2023) - Substack

- "Matrix Multiplication Background User's Guide" (2023) - Nvidia Developer

- "GPU Performance Background User's Guide" (2023) - Nvidia Developer

- Programming Massively Parallel Processors: A Hands-on Approach (2022), Wen-mei W. Hwu, David B. Kirk, Izzat El Hajj

- Efficient Deep Learning (2022), Gaurav Menghani, Naresh Singh

- AI Efficiency Courses: Slides, Exercises (2025) - Lecture by Bertrand Charpentier

- Data Compression, Theory and Applications: YouTube, Slides (2024) - Stanford

- MIT Han's Lab (2024) - MIT Lecture by Han's lab

- GPU Mode (2020) - Tutorials by GPU mode community

| Organization | Description | Website |
|---|---|---|
| Data4Good | A platform that connects data scientists with social impact projects to address global challenges using data. | data4good.org |
| Gen AI Impact | A platform dedicated to understanding the environmental footprint of generative AI. | genai-impact.org |
| Make.org | A global platform that empowers citizens to propose and take action on social and environmental issues through collective projects. | make.org |
| CodeCarbon | A tool that helps track the carbon emissions of machine learning models and optimizes them for sustainability. | codecarbon.io |
| Sustainable AI Coalition | An organization dedicated to advancing sustainability in AI technologies and promoting best practices for green AI. | sustainableaicoalition.org |
| FruitPunch AI | A community that builds AI solutions for impact organizations contributing to the SDGs. | fruitpunch.ai |

Contributions are welcome! Please follow our contribution guidelines to add new resources or suggest improvements that promote AI efficiency. You can contact @sharpenb if you have any questions.

This project is licensed under the MIT License. Feel free to share and use the resources as needed.

Alternative AI tools for awesome-ai-efficiency

Similar Open Source Tools

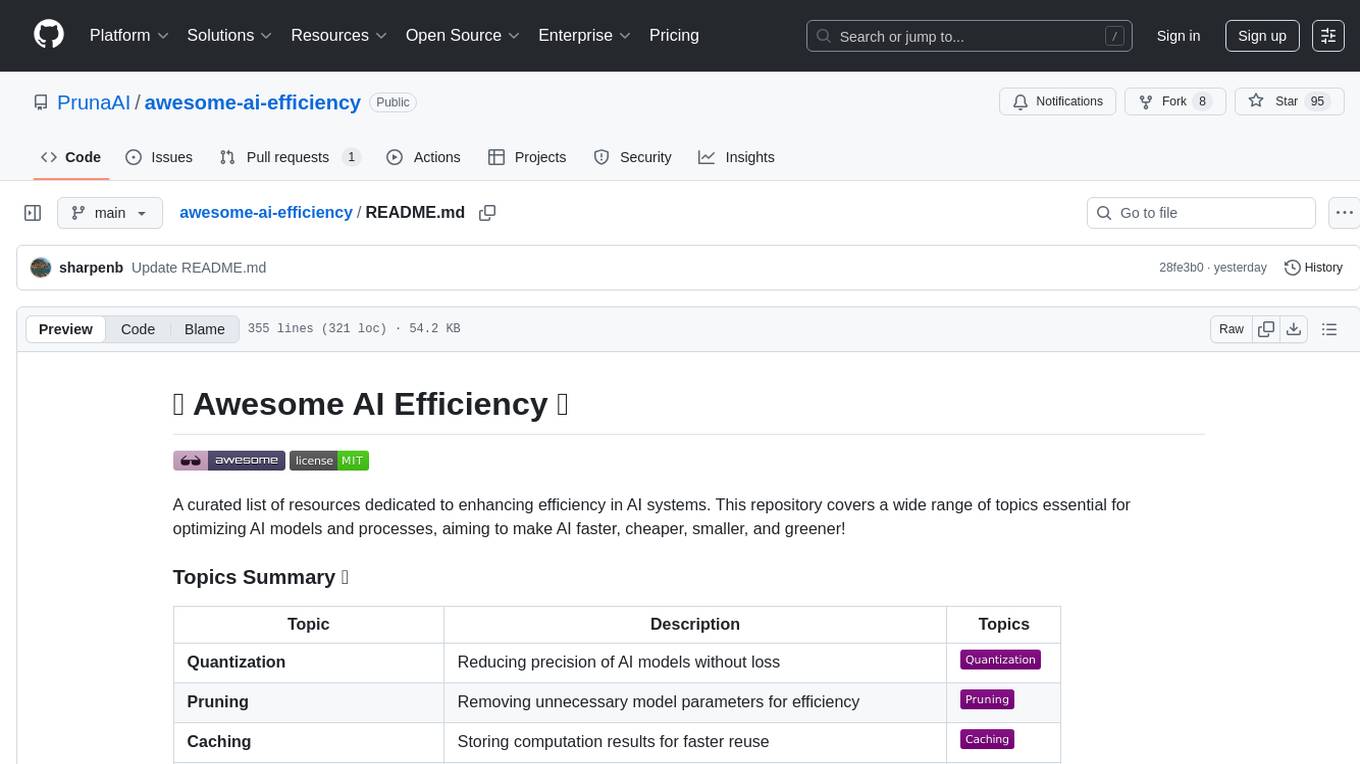

END-TO-END-GENERATIVE-AI-PROJECTS

The 'END TO END GENERATIVE AI PROJECTS' repository is a collection of awesome industry projects utilizing Large Language Models (LLM) for various tasks such as chat applications with PDFs, image to speech generation, video transcribing and summarizing, resume tracking, text to SQL conversion, invoice extraction, medical chatbot, financial stock analysis, and more. The projects showcase the deployment of LLM models like Google Gemini Pro, HuggingFace Models, OpenAI GPT, and technologies such as Langchain, Streamlit, LLaMA2, LLaMAindex, and more. The repository aims to provide end-to-end solutions for different AI applications.

Open-dLLM

Open-dLLM is the most open release of a diffusion-based large language model, providing pretraining, evaluation, inference, and checkpoints. It introduces Open-dCoder, the code-generation variant of Open-dLLM. The repo offers a complete stack for diffusion LLMs, enabling users to go from raw data to training, checkpoints, evaluation, and inference in one place. It includes pretraining pipeline with open datasets, inference scripts for easy sampling and generation, evaluation suite with various metrics, weights and checkpoints on Hugging Face, and transparent configs for full reproducibility.

Xwin-LM

Xwin-LM is a powerful and stable open-source tool for aligning large language models, offering various alignment technologies like supervised fine-tuning, reward models, reject sampling, and reinforcement learning from human feedback. It has achieved top rankings in benchmarks like AlpacaEval and surpassed GPT-4. The tool is continuously updated with new models and features.

sktime

sktime is a Python library for time series analysis that provides a unified interface for various time series learning tasks such as classification, regression, clustering, annotation, and forecasting. It offers time series algorithms and tools compatible with scikit-learn for building, tuning, and validating time series models. sktime aims to enhance the interoperability and usability of the time series analysis ecosystem by empowering users to apply algorithms across different tasks and providing interfaces to related libraries like scikit-learn, statsmodels, tsfresh, PyOD, and fbprophet.

Video-ChatGPT

Video-ChatGPT is a video conversation model that aims to generate meaningful conversations about videos by combining large language models with a pretrained visual encoder adapted for spatiotemporal video representation. It introduces high-quality video-instruction pairs, a quantitative evaluation framework for video conversation models, and a unique multimodal capability for video understanding and language generation. The tool is designed to excel in tasks related to video reasoning, creativity, spatial and temporal understanding, and action recognition.

RLinf

RLinf is a flexible and scalable open-source infrastructure designed for post-training foundation models via reinforcement learning. It provides a robust backbone for next-generation training, supporting open-ended learning, continuous generalization, and limitless possibilities in intelligence development. The tool offers unique features like Macro-to-Micro Flow, flexible execution modes, auto-scheduling strategy, embodied agent support, and fast adaptation for mainstream VLA models. RLinf is fast with hybrid mode and automatic online scaling strategy, achieving significant throughput improvement and efficiency. It is also flexible and easy to use with multiple backend integrations, adaptive communication, and built-in support for popular RL methods. The roadmap includes system-level enhancements and application-level extensions to support various training scenarios and models. Users can get started with complete documentation, quickstart guides, key design principles, example gallery, advanced features, and guidelines for extending the framework. Contributions are welcome, and users are encouraged to cite the GitHub repository and acknowledge the broader open-source community.

LlamaV-o1

LlamaV-o1 is a Large Multimodal Model designed for spontaneous reasoning tasks. It outperforms various existing models on multimodal reasoning benchmarks. The project includes a Step-by-Step Visual Reasoning Benchmark, a novel evaluation metric, and a combined Multi-Step Curriculum Learning and Beam Search Approach. The model achieves superior performance in complex multi-step visual reasoning tasks in terms of accuracy and efficiency.

matrixone

MatrixOne is the industry's first database to bring Git-style version control to data, combined with MySQL compatibility, AI-native capabilities, and cloud-native architecture. It is a HTAP (Hybrid Transactional/Analytical Processing) database with a hyper-converged HSTAP engine that seamlessly handles transactional, analytical, full-text search, and vector search workloads in a single unified system—no data movement, no ETL, no compromises. Manage your database like code with features like instant snapshots, time travel, branch & merge, instant rollback, and complete audit trail. Built for the AI era, MatrixOne is MySQL-compatible, AI-native, and cloud-native, offering storage-compute separation, elastic scaling, and Kubernetes-native deployment. It serves as one database for everything, replacing multiple databases and ETL jobs with native OLTP, OLAP, full-text search, and vector search capabilities.

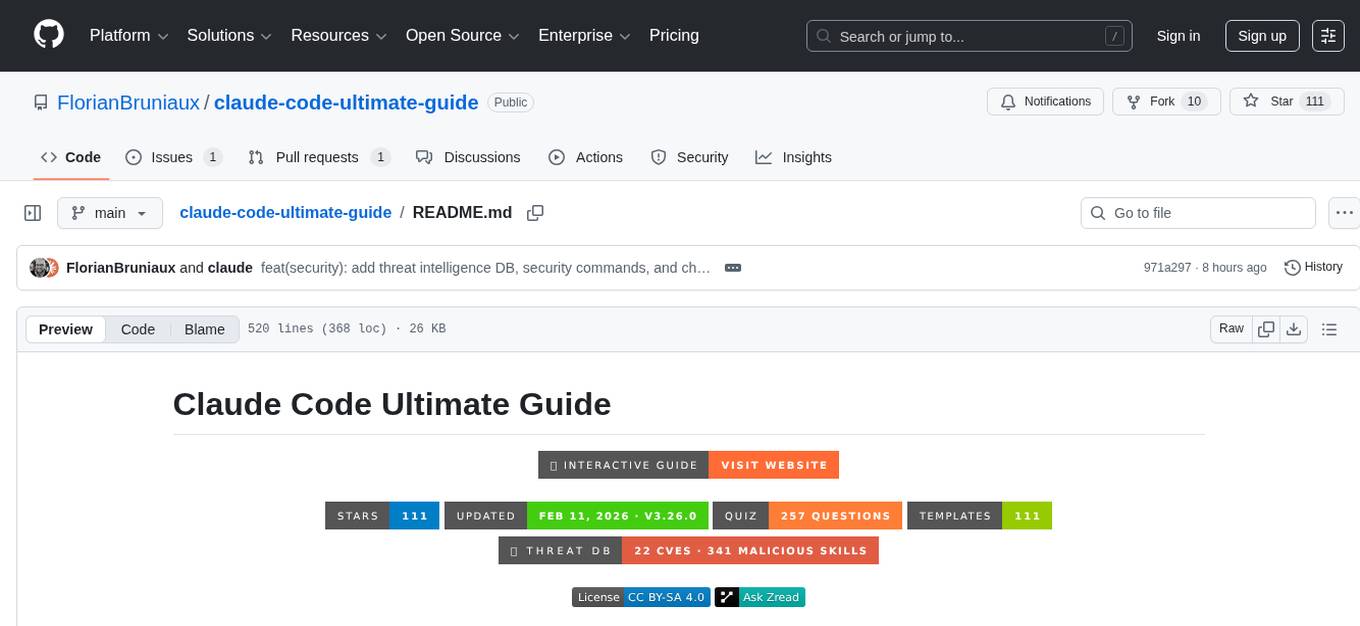

claude-code-ultimate-guide

The Claude Code Ultimate Guide is an exhaustive documentation resource that takes users from beginner to power user in using Claude Code. It includes production-ready templates, workflow guides, a quiz, and a cheatsheet for daily use. The guide covers educational depth, methodologies, and practical examples to help users understand concepts and workflows. It also provides interactive onboarding, a repository structure overview, and learning paths for different user levels. The guide is regularly updated and offers a unique 257-question quiz for comprehensive assessment. Users can also find information on agent teams coverage, methodologies, annotated templates, resource evaluations, and learning paths for different roles like junior developer, senior developer, power user, and product manager/devops/designer.

motia

Motia is an AI agent framework designed for software engineers to create, test, and deploy production-ready AI agents quickly. It provides a code-first approach, allowing developers to write agent logic in familiar languages and visualize execution in real-time. With Motia, developers can focus on business logic rather than infrastructure, offering zero infrastructure headaches, multi-language support, composable steps, built-in observability, instant APIs, and full control over AI logic. Ideal for building sophisticated agents and intelligent automations, Motia's event-driven architecture and modular steps enable the creation of GenAI-powered workflows, decision-making systems, and data processing pipelines.

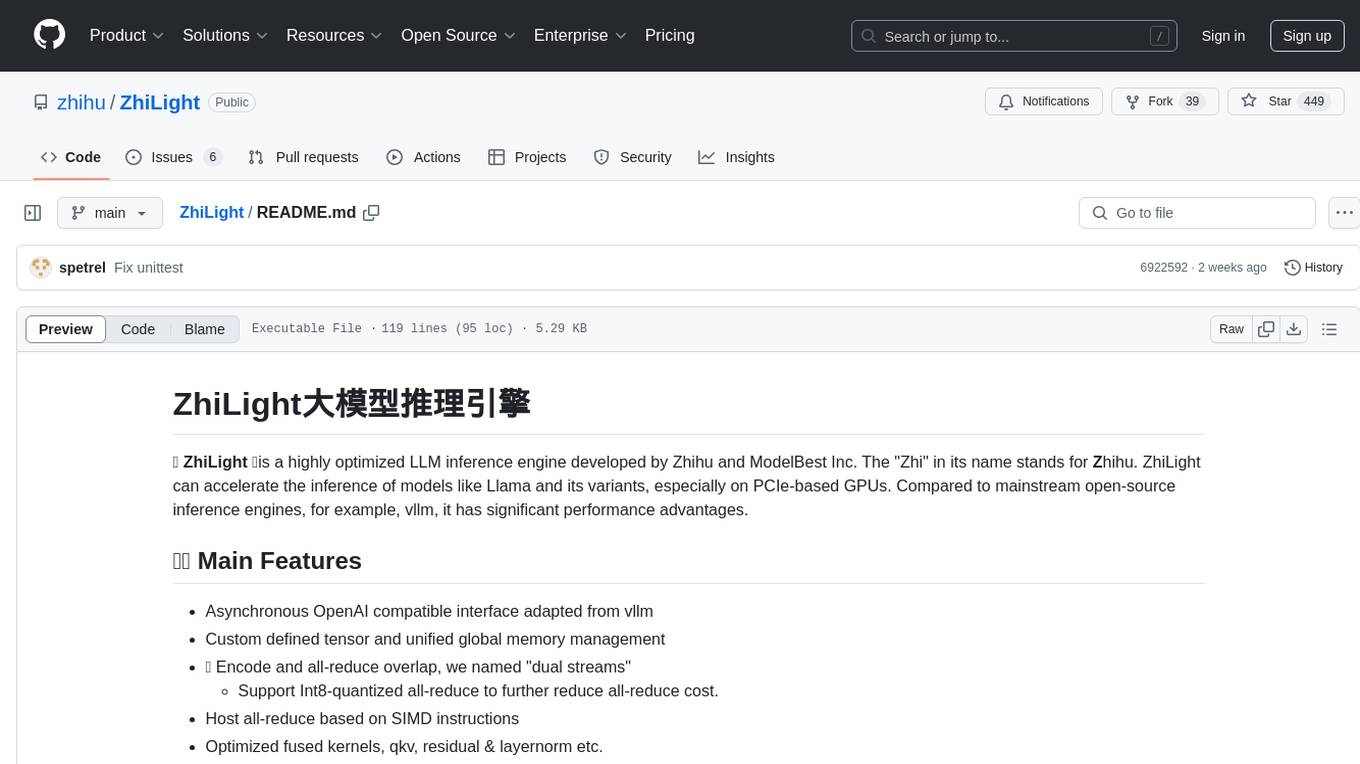

ZhiLight

ZhiLight is a highly optimized large language model (LLM) inference engine developed by Zhihu and ModelBest Inc. It accelerates the inference of models like Llama and its variants, especially on PCIe-based GPUs. ZhiLight offers significant performance advantages compared to mainstream open-source inference engines. It supports various features such as custom defined tensor and unified global memory management, optimized fused kernels, support for dynamic batch, flash attention prefill, prefix cache, and different quantization techniques like INT8, SmoothQuant, FP8, AWQ, and GPTQ. ZhiLight is compatible with OpenAI interface and provides high performance on mainstream NVIDIA GPUs with different model sizes and precisions.

mindnlp

MindNLP is an open-source NLP library based on MindSpore. It provides a platform for solving natural language processing tasks, containing many common approaches in NLP. It can help researchers and developers to construct and train models more conveniently and rapidly. Key features of MindNLP include:

- Comprehensive data processing: Several classical NLP datasets are packaged into a friendly module for easy use, such as Multi30k, SQuAD, CoNLL, etc.

- Friendly NLP model toolset: MindNLP provides various configurable components. It is friendly to customize models using MindNLP.

- Easy-to-use engine: MindNLP simplified complicated training process in MindSpore. It supports Trainer and Evaluator interfaces to train and evaluate models easily.

MindNLP supports a wide range of NLP tasks, including:

- Language modeling

- Machine translation

- Question answering

- Sentiment analysis

- Sequence labeling

- Summarization

MindNLP also supports industry-leading Large Language Models (LLMs), including Llama, GLM, RWKV, etc. For support related to large language models, including pre-training, fine-tuning, and inference demo examples, you can find them in the "llm" directory. To install MindNLP, you can either install it from PyPI, download the daily build wheel, or install it from source. The installation instructions are provided in the documentation. MindNLP is released under the Apache 2.0 license. If you find this project useful in your research, please consider citing the following paper:

@misc{mindnlp2022, title={{MindNLP}: a MindSpore NLP library}, author={MindNLP Contributors}, howpublished = {\url{https://github.com/mindlab-ai/mindnlp}}, year={2022} }

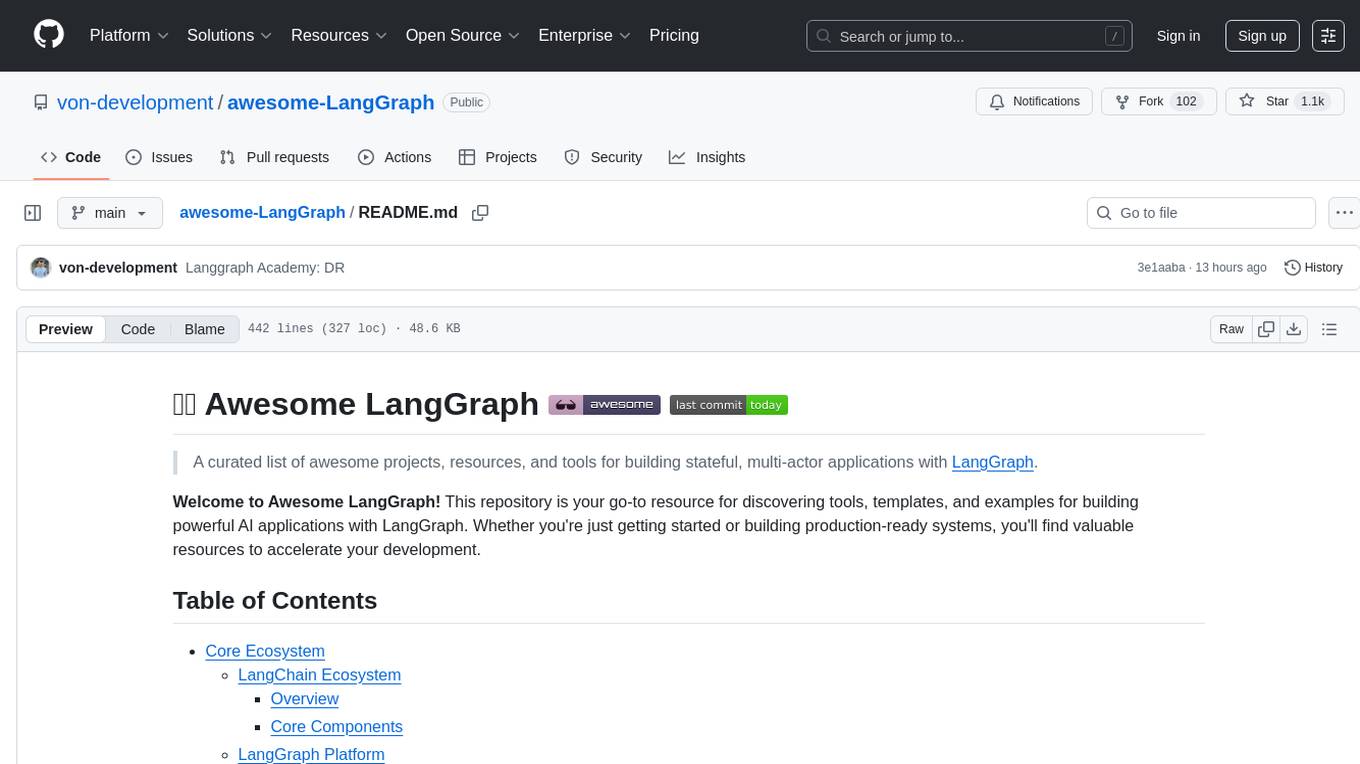

awesome-LangGraph

Awesome LangGraph is a curated list of projects, resources, and tools for building stateful, multi-actor applications with LangGraph. It provides valuable resources for developers at all stages of development, from beginners to those building production-ready systems. The repository covers core ecosystem components, LangChain ecosystem, LangGraph platform, official resources, starter templates, pre-built agents, example applications, development tools, community projects, AI assistants, content & media, knowledge & retrieval, finance & business, sustainability, learning resources, companies using LangGraph, contributing guidelines, and acknowledgments.

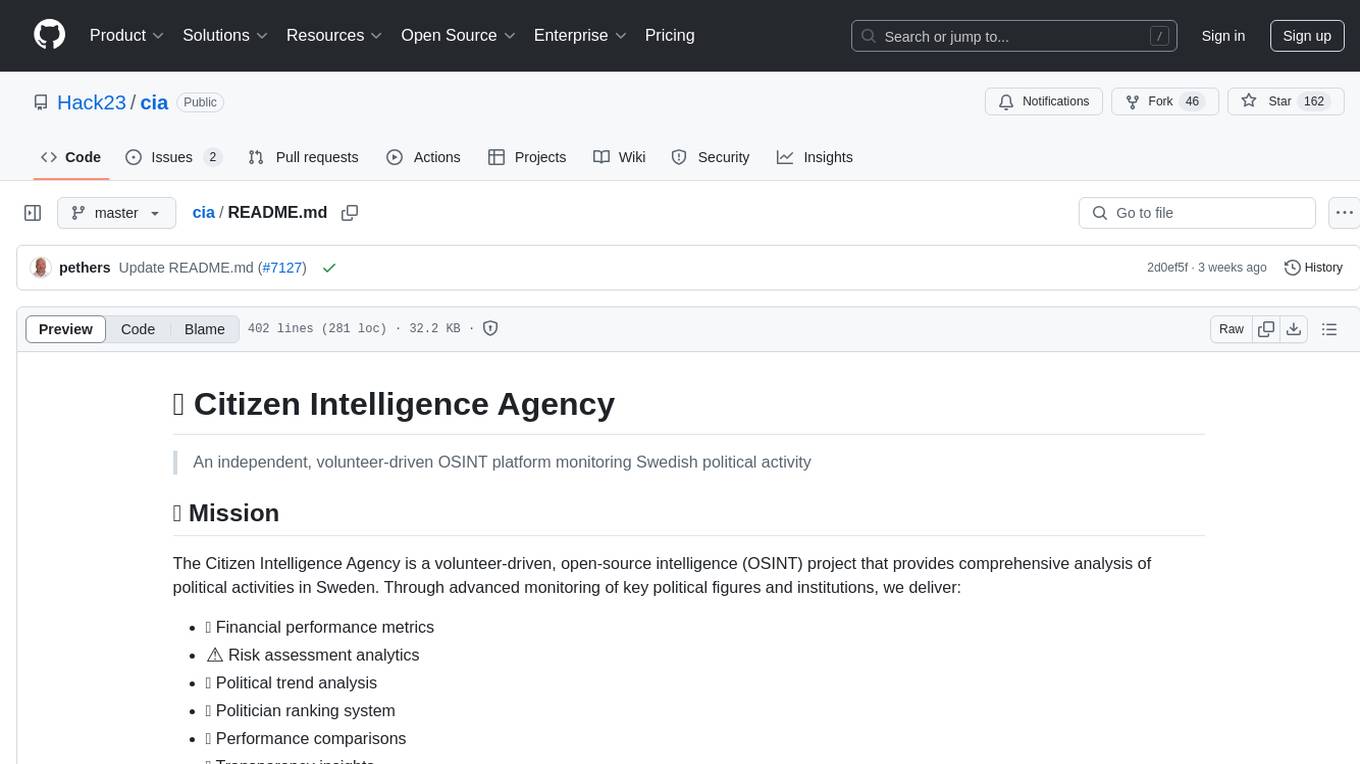

cia

CIA is a powerful open-source tool designed for data analysis and visualization. It provides a user-friendly interface for processing large datasets and generating insightful reports. With CIA, users can easily explore data, perform statistical analysis, and create interactive visualizations to communicate findings effectively. Whether you are a data scientist, analyst, or researcher, CIA offers a comprehensive set of features to streamline your data analysis workflow and uncover valuable insights.

For similar tasks

Awesome-Resource-Efficient-LLM-Papers

A curated list of high-quality papers on resource-efficient Large Language Models (LLMs) with a focus on various aspects such as architecture design, pre-training, fine-tuning, inference, system design, and evaluation metrics. The repository covers topics like efficient transformer architectures, non-transformer architectures, memory efficiency, data efficiency, model compression, dynamic acceleration, deployment optimization, support infrastructure, and other related systems. It also provides detailed information on computation metrics, memory metrics, energy metrics, financial cost metrics, network communication metrics, and other metrics relevant to resource-efficient LLMs. The repository includes benchmarks for evaluating the efficiency of NLP models and references for further reading.

unified-cache-management

Unified Cache Manager (UCM) is a tool designed to persist the LLM KVCache and replace redundant computations through various retrieval mechanisms. It supports prefix caching and offers training-free sparse attention retrieval methods, enhancing performance for long sequence inference tasks. UCM also provides a PD disaggregation solution based on a storage-compute separation architecture, enabling easier management of heterogeneous computing resources. When integrated with vLLM, UCM significantly reduces inference latency in scenarios like multi-turn dialogue and long-context reasoning tasks.

InferenceMAX

InferenceMAX™ is an open-source benchmarking tool designed to track real-time performance improvements in popular open-source inference frameworks and models. It runs a suite of benchmarks every night to capture progress in near real-time, providing a live indicator of inference performance. The tool addresses the challenge of rapidly evolving software ecosystems by benchmarking the latest software packages, ensuring that benchmarks do not go stale. InferenceMAX™ is supported by industry leaders and contributors, providing transparent and reproducible benchmarks that help the ML community make informed decisions about hardware and software performance.

aimet

AIMET is a library that provides advanced model quantization and compression techniques for trained neural network models. It provides features that have been proven to improve run-time performance of deep learning neural network models with lower compute and memory requirements and minimal impact to task accuracy. AIMET is designed to work with PyTorch, TensorFlow and ONNX models. We also host the AIMET Model Zoo - a collection of popular neural network models optimized for 8-bit inference. We also provide recipes for users to quantize floating point models using AIMET.

neural-compressor

Intel® Neural Compressor is an open-source Python library that supports popular model compression techniques such as quantization, pruning (sparsity), distillation, and neural architecture search on mainstream frameworks such as TensorFlow, PyTorch, ONNX Runtime, and MXNet. It provides key features, typical examples, and open collaborations, including support for a wide range of Intel hardware, validation of popular LLMs, and collaboration with cloud marketplaces, software platforms, and open AI ecosystems.

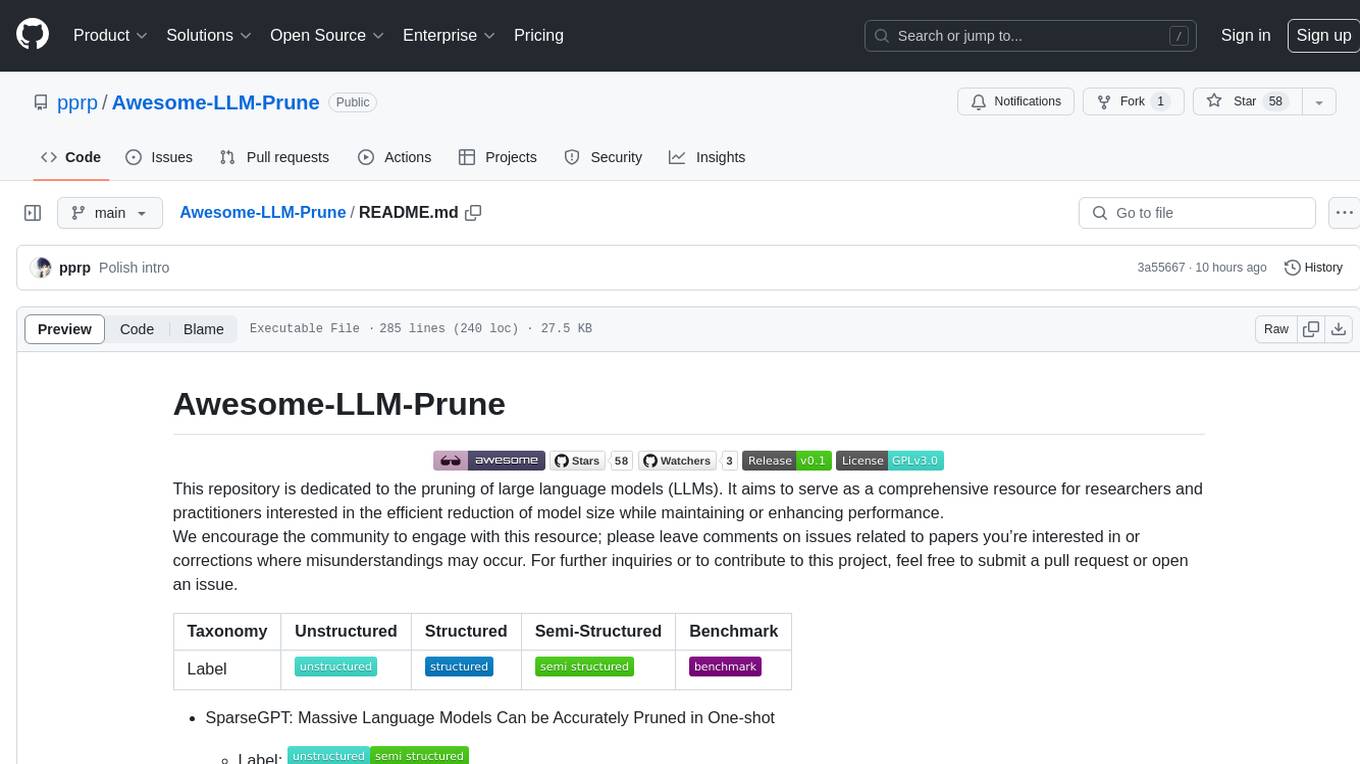

Awesome-LLM-Prune

This repository is dedicated to the pruning of large language models (LLMs). It aims to serve as a comprehensive resource for researchers and practitioners interested in the efficient reduction of model size while maintaining or enhancing performance. The repository contains various papers, summaries, and links related to different pruning approaches for LLMs, along with author information and publication details. It covers a wide range of topics such as structured pruning, unstructured pruning, semi-structured pruning, and benchmarking methods. Researchers and practitioners can explore different pruning techniques, understand their implications, and access relevant resources for further study and implementation.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.