LlamaV-o1

Rethinking Step-by-step Visual Reasoning in LLMs

Stars: 215

LlamaV-o1 is a Large Multimodal Model designed for spontaneous reasoning tasks. It outperforms various existing models on multimodal reasoning benchmarks. The project includes a Step-by-Step Visual Reasoning Benchmark, a novel evaluation metric, and a combined Multi-Step Curriculum Learning and Beam Search Approach. The model achieves superior performance in complex multi-step visual reasoning tasks in terms of accuracy and efficiency.

README:

Omkar Thawakar*, Dinura Dissanayake*, Ketan More*, Ritesh Thawkar*, Ahmed Heakl*, Noor Ahsan*, Yuhao Li*, Mohammed Zumri*, Jean Lahoud*, Rao Muhammad Anwer, Hisham Cholakkal, Ivan Laptev, Mubarak Shah, Fahad Shahbaz Khan and Salman Khan

*Equal Contribution

Mohamed bin Zayed University of Artificial Intelligence, UAE

- January-13-2025: The technical report of LlamaV-o1 is released on arXiv (arXiv:2501.06186).

- January-10-2025: Code, model, and dataset released. Our VRC-Bench is available on HuggingFace (omkarthawakar/VRC-Bench), the model checkpoint is on HuggingFace (omkarthawakar/LlamaV-o1), and the code is available on GitHub. 🤗

LlamaV-o1 is a Large Multimodal Model capable of spontaneous reasoning.

- Our LlamaV-o1 model outperforms Gemini-1.5-Flash, GPT-4o-mini, Llama-3.2-Vision-Instruct, Mulberry, and Llava-CoT in final-answer accuracy on our proposed VRC-Bench.

- Our LlamaV-o1 model outperforms Gemini-1.5-Pro, GPT-4o-mini, Llama-3.2-Vision-Instruct, Mulberry, Llava-CoT, etc. in average score across six challenging multimodal benchmarks (MMStar, MMBench, MMVet, MathVista, AI2D and Hallusion).

- Step-by-Step Visual Reasoning Benchmark: To the best of our knowledge, the proposed benchmark is the first effort designed to evaluate multimodal multi-step reasoning tasks across diverse topics. The proposed benchmark, named VRC-Bench, spans eight categories (Visual Reasoning, Math & Logic Reasoning, Social & Cultural Context, Medical Imaging (Basic Medical Science), Charts & Diagram Understanding, OCR & Document Understanding, Complex Visual Perception and Scientific Reasoning) with over 1,000 challenging samples and more than 4,000 reasoning steps.

- Novel Evaluation Metric: A metric that assesses the reasoning quality at the level of individual steps, emphasizing both correctness and logical coherence.

- Combined Multi-Step Curriculum Learning and Beam Search Approach: A multimodal reasoning method, named LlamaV-o1, that combines the structured progression of curriculum learning with the efficiency of Beam Search. The proposed approach ensures incremental skill development while optimizing reasoning paths, enabling the model to be effective in complex multi-step visual reasoning tasks in terms of both accuracy and efficiency. Specifically, the proposed LlamaV-o1 achieves an absolute gain of 3.8% in terms of average score across six benchmarks while being 5× faster, compared to the recent Llava-CoT.
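To make the beam search idea in the last bullet concrete, here is a minimal, generic sketch of beam search over reasoning steps. It is not the LlamaV-o1 implementation: `beam_search`, `toy_expand`, the step strings, and the log-probabilities are all hypothetical, and a real system would ask the model to propose and score candidate steps.

```python
import heapq
from typing import Callable, List, Sequence, Tuple

Path = Tuple[str, ...]


def beam_search(
    expand: Callable[[Path], Sequence[Tuple[str, float]]],
    num_steps: int,
    beam_width: int,
) -> List[Tuple[float, Path]]:
    """Keep the `beam_width` highest-scoring partial reasoning paths at each step."""
    beams: List[Tuple[float, Path]] = [(0.0, ())]
    for _ in range(num_steps):
        candidates = []
        for score, path in beams:
            # Each candidate extends a surviving path by one step; scores are summed log-probs.
            for step, log_prob in expand(path):
                candidates.append((score + log_prob, path + (step,)))
        if not candidates:
            break
        # Prune: only the best `beam_width` paths survive to the next step.
        beams = heapq.nlargest(beam_width, candidates, key=lambda c: c[0])
    return beams


def toy_expand(path: Path) -> List[Tuple[str, float]]:
    """Hypothetical stand-in for a model proposing next steps with log-probabilities."""
    options = {
        0: [("describe image", -0.2), ("guess answer", -1.6)],
        1: [("read chart values", -0.3), ("skip details", -1.2)],
        2: [("final answer: B", -0.1), ("final answer: C", -2.3)],
    }
    return options[len(path)]


best_score, best_path = beam_search(toy_expand, num_steps=3, beam_width=2)[0]
print(best_path)
```

With a beam width of 2, only two partial paths are extended at each step instead of every possible combination, which is where the efficiency of this family of methods comes from.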

The figure presents our benchmark structure and the comparative performance of LMMs on VRC-Bench. The dataset spans diverse domains, including mathematical & logical reasoning, scientific reasoning, visual perception, and specialized areas such as medical imaging, cultural understanding, and document OCR. It also includes tasks like chart & diagram comprehension to test real-world applications. The bar chart compares various state-of-the-art models, showcasing final answer accuracy and step-by-step reasoning performance. Our LlamaV-o1 model surpasses GPT-4o-mini, Gemini-1.5-Flash, and Llava-CoT in complex multimodal reasoning tasks, achieving superior accuracy and logical coherence.

Table 1: Comparison of models based on Final Answer accuracy and Reasoning Steps performance on the proposed VRC-Bench. The best results in each case (closed-source and open-source) are in bold. Our LlamaV-o1 achieves superior performance compared to its open-source counterpart (Llava-CoT) while also being competitive against the closed-source models.

| Model | GPT-4o | Claude-3.5 | Gemini-2.0 | Gemini-1.5 Pro | Gemini-1.5 Flash | GPT-4o Mini | Llama-3.2 Vision | Mulberry | Llava-CoT | LlamaV-o1 (Ours) |
|---|---|---|---|---|---|---|---|---|---|---|
| Final Answer | 59.28 | 61.35 | 61.16 | 61.35 | 54.99 | 56.39 | 48.40 | 51.90 | 54.09 | 56.49 |
| Reasoning Steps | 76.68 | 72.12 | 74.08 | 72.12 | 71.86 | 74.05 | 58.37 | 63.86 | 66.21 | 68.93 |

Table 2: Performance comparison on six benchmark datasets (MMStar, MMBench, MMVet, MathVista, AI2D, and Hallusion) along with average scores. The comparison includes both closed-source and open-source models. GPT-4o achieves the highest average score (71.8%) among closed-source models, while our LlamaV-o1 leads open-source models with an average score of 67.33%, surpassing Llava-CoT by 3.8%.

| Model | MMStar | MMBench | MMVet | MathVista | AI2D | Hallusion | Average |
|---|---|---|---|---|---|---|---|
| Closed-Source | | | | | | | |
| GPT-4o-0806 | 66.0 | 82.4 | 80.8 | 62.7 | 84.7 | 54.2 | 71.8 |
| Claude3.5-Sonnet-0620 | 64.2 | 75.4 | 68.7 | 61.6 | 80.2 | 49.9 | 66.7 |
| Gemini-1.5-Pro | 56.4 | 71.5 | 71.3 | 57.7 | 79.1 | 45.6 | 63.6 |
| GPT-4o-mini-0718 | 54.9 | 76.9 | 74.6 | 52.4 | 77.8 | 46.1 | 63.8 |
| Open-Source | | | | | | | |
| InternVL2-8B | 62.5 | 77.4 | 56.9 | 58.3 | 83.6 | 45.0 | 64.0 |
| Ovis1.5-Gemma2-9B | 58.7 | 76.3 | 50.9 | 65.6 | 84.5 | 48.2 | 64.0 |
| MiniCPM-V2.6-8B | 57.1 | 75.7 | 56.3 | 60.6 | 82.1 | 48.1 | 63.3 |
| Llama-3.2-90B-Vision-Inst | 51.1 | 76.8 | 74.1 | 58.3 | 69.5 | 44.1 | 62.3 |
| VILA-1.5-40B | 53.2 | 75.3 | 44.4 | 49.5 | 77.8 | 40.9 | 56.9 |
| Mulberry-7B | 61.3 | 75.34 | 43.9 | 57.49 | 78.95 | 54.1 | 62.78 |
| Llava-CoT | 57.6 | 75.0 | 60.3 | 54.8 | 85.7 | 47.8 | 63.5 |
| Our Models | | | | | | | |
| Llama-3.2-11B (baseline) | 49.8 | 65.8 | 57.6 | 48.6 | 77.3 | 40.3 | 56.9 |
| LlamaV-o1 (Ours) | 59.53 | 79.89 | 65.4 | 54.4 | 81.24 | 63.51 | 67.33 |
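The averages and the 3.8-point gain quoted for Table 2 can be re-derived from the per-benchmark scores. The short check below copies the Llava-CoT and LlamaV-o1 rows from the table; the variable names are only illustrative.

```python
from statistics import mean

# Per-benchmark scores copied from Table 2, in the order:
# MMStar, MMBench, MMVet, MathVista, AI2D, Hallusion
table2 = {
    "Llava-CoT": [57.6, 75.0, 60.3, 54.8, 85.7, 47.8],
    "LlamaV-o1": [59.53, 79.89, 65.4, 54.4, 81.24, 63.51],
}

averages = {name: mean(scores) for name, scores in table2.items()}
gain = averages["LlamaV-o1"] - averages["Llava-CoT"]

for name, avg in averages.items():
    print(f"{name}: {avg:.2f}")
print(f"absolute gain: {gain:.1f}")
```

This gives an average of 67.33 for LlamaV-o1 and roughly 63.5 for Llava-CoT, so the gain is an absolute difference of about 3.8 points in average score rather than a relative improvement.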

You can download the pretrained weights of LlamaV-o1 from Hugging Face: omkarthawakar/LlamaV-o1.

You can download VRC-Bench from Hugging Face: omkarthawakar/VRC-Bench.

You can use the sample inference code provided in eval/llamav-o1.py, which shows sample inference on an image with multi-step reasoning.

    import torch
    from transformers import MllamaForConditionalGeneration, AutoProcessor

    model_id = "omkarthawakar/LlamaV-o1"

    # Load the weights in bfloat16 and spread them across the available devices.
    model = MllamaForConditionalGeneration.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,
        device_map="auto",
    )
    # The processor handles both image preprocessing and tokenization.
    processor = AutoProcessor.from_pretrained(model_id)

We used llama-recipes to finetune our LlamaV-o1.

More details about finetuning will be available soon!
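Returning to the loading snippet above: once `model` and `processor` exist, a single image-question call might look like the sketch below. It follows the chat-template pattern used for Mllama models in `transformers` and is not a copy of eval/llamav-o1.py, which runs multi-step reasoning; the helper names `build_messages` and `answer`, the single-turn prompt, and the `max_new_tokens` default are assumptions made for illustration.

```python
from typing import Dict, List


def build_messages(question: str) -> List[Dict]:
    """Build a single-turn chat message with one image placeholder and a text question."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image"},
                {"type": "text", "text": question},
            ],
        }
    ]


def answer(model, processor, image, question: str, max_new_tokens: int = 512) -> str:
    """Generate one response for an image/question pair (hypothetical helper)."""
    # Turn the chat messages into the prompt string the model expects.
    prompt = processor.apply_chat_template(
        build_messages(question), add_generation_prompt=True
    )
    inputs = processor(
        image, prompt, add_special_tokens=False, return_tensors="pt"
    ).to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated text is decoded.
    new_tokens = output[0][inputs["input_ids"].shape[-1]:]
    return processor.decode(new_tokens, skip_special_tokens=True)
```

To use it, open an image with PIL (`Image.open(...)` on a file of your choice) and call `answer(model, processor, image, "What does the chart show?")`.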

To reproduce our results on VRC-Bench:

Run the following commands:

    python eval/inference.py
    python eval/get_result.py

Before running eval/get_result.py, set the name/path of the generated JSON file and your ChatGPT API key in that script.

To reproduce our results on the six benchmark datasets:

We used VLMEvalKit to evaluate LlamaV-o1 on six benchmark datasets.

Replace the file vlmeval/vlm/llama_vision with eval/llama_vision.py.

Add the following line to the llama_series models in vlmeval/config.py:

    'LlamaV-o1': partial(llama_vision, model_path='omkarthawakar/LlamaV-o1'),

Run the following command:

    torchrun --nproc-per-node=8 run.py --data MMStar AI2D_TEST HallusionBench MMBench_DEV_EN MMVet MathVista_MINI --model LlamaV-o1 --work-dir LlamaV-o1 --verbose
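The config.py entry above relies on `functools.partial`, which stores a constructor together with preset arguments and only builds the object when the entry is called. The sketch below illustrates that mechanism with a stand-in class; the `llama_vision` class and `llama_series` dictionary here are simplified placeholders, not VLMEvalKit's actual code.

```python
from functools import partial


class llama_vision:
    """Hypothetical stand-in for the wrapper class; the real one loads the model."""

    def __init__(self, model_path: str, **kwargs):
        self.model_path = model_path
        self.kwargs = kwargs


# Registry keyed by the name passed on the command line via --model (assumed layout).
llama_series = {
    "LlamaV-o1": partial(llama_vision, model_path="omkarthawakar/LlamaV-o1"),
}

# Nothing is constructed until the registry entry is called.
model = llama_series["LlamaV-o1"]()
print(model.model_path)  # omkarthawakar/LlamaV-o1
```

Registering a factory rather than an instance means that adding the entry costs nothing until that model name is actually requested.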

If you find this paper useful, please consider starring 🌟 this repo and citing 📑 our paper:

    @misc{thawakar2025llamavo1,
        title={LlamaV-o1: Rethinking Step-by-step Visual Reasoning in LLMs},
        author={Omkar Thawakar and Dinura Dissanayake and Ketan More and Ritesh Thawkar and Ahmed Heakl and Noor Ahsan and Yuhao Li and Mohammed Zumri and Jean Lahoud and Rao Muhammad Anwer and Hisham Cholakkal and Ivan Laptev and Mubarak Shah and Fahad Shahbaz Khan and Salman Khan},
        year={2025},
        eprint={2501.06186},
        archivePrefix={arXiv},
        primaryClass={cs.CV},
        url={https://arxiv.org/abs/2501.06186},
    }

- This project is primarily distributed under the Apache 2.0 license, as specified in the LICENSE file.

- Thanks to LlaVa-CoT for their awesome work.

- The service is provided as a research preview for non-commercial purposes only, governed by the LLAMA 3.2 Community License Agreement and the Terms of Use for data generated by OpenAI. If you encounter any potential violations, please reach out to us.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LlamaV-o1

Similar Open Source Tools

LlamaV-o1

LlamaV-o1 is a Large Multimodal Model designed for spontaneous reasoning tasks. It outperforms various existing models on multimodal reasoning benchmarks. The project includes a Step-by-Step Visual Reasoning Benchmark, a novel evaluation metric, and a combined Multi-Step Curriculum Learning and Beam Search Approach. The model achieves superior performance in complex multi-step visual reasoning tasks in terms of accuracy and efficiency.

Xwin-LM

Xwin-LM is a powerful and stable open-source tool for aligning large language models, offering various alignment technologies like supervised fine-tuning, reward models, reject sampling, and reinforcement learning from human feedback. It has achieved top rankings in benchmarks like AlpacaEval and surpassed GPT-4. The tool is continuously updated with new models and features.

UniCoT

Uni-CoT is a unified reasoning framework that extends Chain-of-Thought (CoT) principles to the multimodal domain, enabling Multimodal Large Language Models (MLLMs) to perform interpretable, step-by-step reasoning across both text and vision. It decomposes complex multimodal tasks into structured, manageable steps that can be executed sequentially or in parallel, allowing for more scalable and systematic reasoning.

microgpt-c

MicroGPT-C is a project that focuses on tiny specialist models working together to outperform monoliths on specific tasks. It is a C port of a Python GPT model, rewritten in pure C99 with zero dependencies. The project explores the concept of coordinated intelligence through 'organelles' that differentiate based on training data, resulting in improved performance across logic games and real-world data experiments.

DataFlow

DataFlow is a data preparation and training system designed to parse, generate, process, and evaluate high-quality data from noisy sources, improving the performance of large language models in specific domains. It constructs diverse operators and pipelines, validated to enhance domain-oriented LLM's performance in fields like healthcare, finance, and law. DataFlow also features an intelligent DataFlow-agent capable of dynamically assembling new pipelines by recombining existing operators on demand.

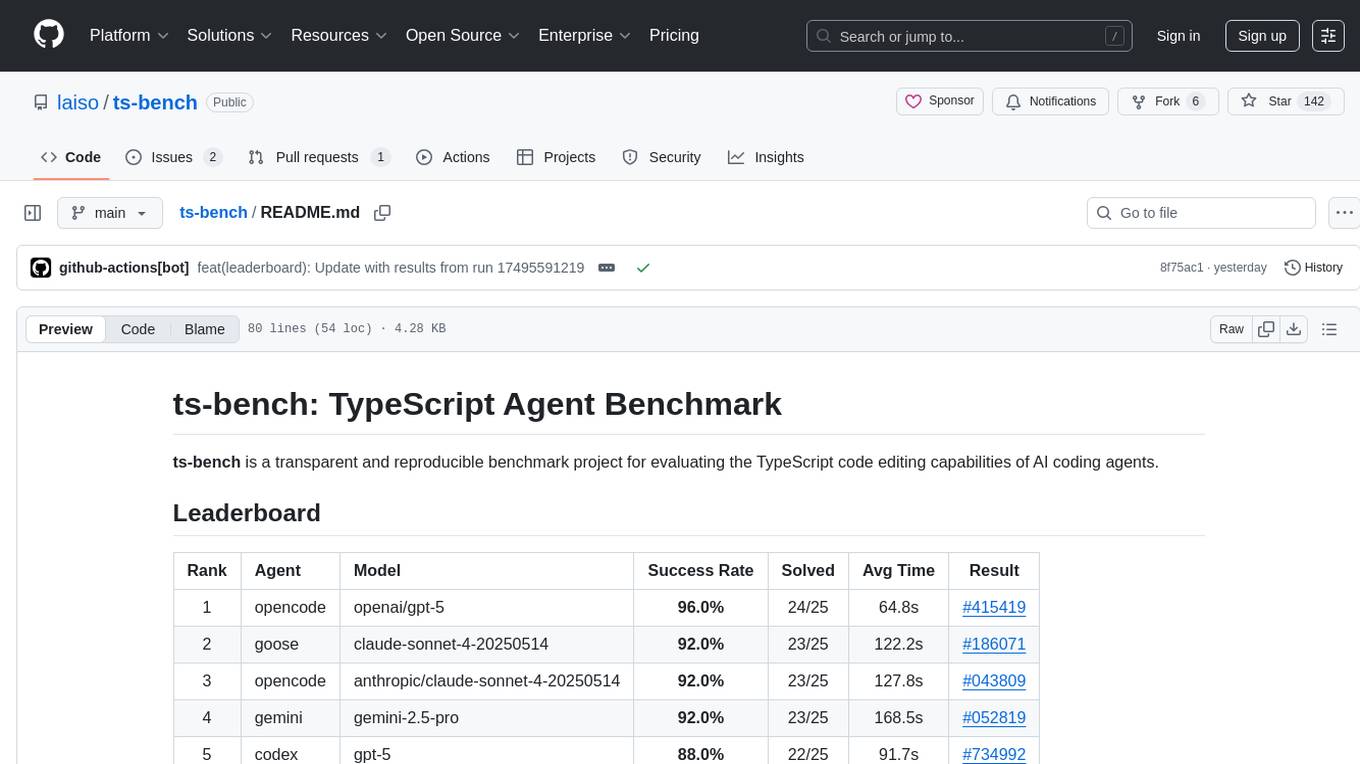

ts-bench

TS-Bench is a performance benchmarking tool for TypeScript projects. It provides detailed insights into the performance of TypeScript code, helping developers optimize their projects. With TS-Bench, users can measure and compare the execution time of different code snippets, functions, or modules. The tool offers a user-friendly interface for running benchmarks and analyzing the results. TS-Bench is a valuable asset for developers looking to enhance the performance of their TypeScript applications.

AReaL

AReaL (Ant Reasoning RL) is an open-source reinforcement learning system developed at the RL Lab, Ant Research. It is designed for training Large Reasoning Models (LRMs) in a fully open and inclusive manner. AReaL provides reproducible experiments for 1.5B and 7B LRMs, showcasing its scalability and performance across diverse computational budgets. The system follows an iterative training process to enhance model performance, with a focus on mathematical reasoning tasks. AReaL is equipped to adapt to different computational resource settings, enabling users to easily configure and launch training trials. Future plans include support for advanced models, optimizations for distributed training, and exploring research topics to enhance LRMs' reasoning capabilities.

IDvs.MoRec

This repository contains the source code for the SIGIR 2023 paper 'Where to Go Next for Recommender Systems? ID- vs. Modality-based Recommender Models Revisited'. It provides resources for evaluating foundation, transferable, multi-modal, and LLM recommendation models, along with datasets, pre-trained models, and training strategies for IDRec and MoRec using in-batch debiased cross-entropy loss. The repository also offers large-scale datasets, code for SASRec with in-batch debias cross-entropy loss, and information on joining the lab for research opportunities.

pai-opencode

PAI-OpenCode is a complete port of Daniel Miessler's Personal AI Infrastructure (PAI) to OpenCode, an open-source, provider-agnostic AI coding assistant. It brings modular capabilities, dynamic multi-agent orchestration, session history, and lifecycle automation to personalize AI assistants for users. With support for 75+ AI providers, PAI-OpenCode offers dynamic per-task model routing, full PAI infrastructure, real-time session sharing, and multiple client options. The tool optimizes cost and quality with a 3-tier model strategy and a 3-tier research system, allowing users to switch presets for different routing strategies. PAI-OpenCode's architecture preserves PAI's design while adapting to OpenCode, documented through Architecture Decision Records (ADRs).

RLinf

RLinf is a flexible and scalable open-source infrastructure designed for post-training foundation models via reinforcement learning. It provides a robust backbone for next-generation training, supporting open-ended learning, continuous generalization, and limitless possibilities in intelligence development. The tool offers unique features like Macro-to-Micro Flow, flexible execution modes, auto-scheduling strategy, embodied agent support, and fast adaptation for mainstream VLA models. RLinf is fast with hybrid mode and automatic online scaling strategy, achieving significant throughput improvement and efficiency. It is also flexible and easy to use with multiple backend integrations, adaptive communication, and built-in support for popular RL methods. The roadmap includes system-level enhancements and application-level extensions to support various training scenarios and models. Users can get started with complete documentation, quickstart guides, key design principles, example gallery, advanced features, and guidelines for extending the framework. Contributions are welcome, and users are encouraged to cite the GitHub repository and acknowledge the broader open-source community.

Video-ChatGPT

Video-ChatGPT is a video conversation model that aims to generate meaningful conversations about videos by combining large language models with a pretrained visual encoder adapted for spatiotemporal video representation. It introduces high-quality video-instruction pairs, a quantitative evaluation framework for video conversation models, and a unique multimodal capability for video understanding and language generation. The tool is designed to excel in tasks related to video reasoning, creativity, spatial and temporal understanding, and action recognition.

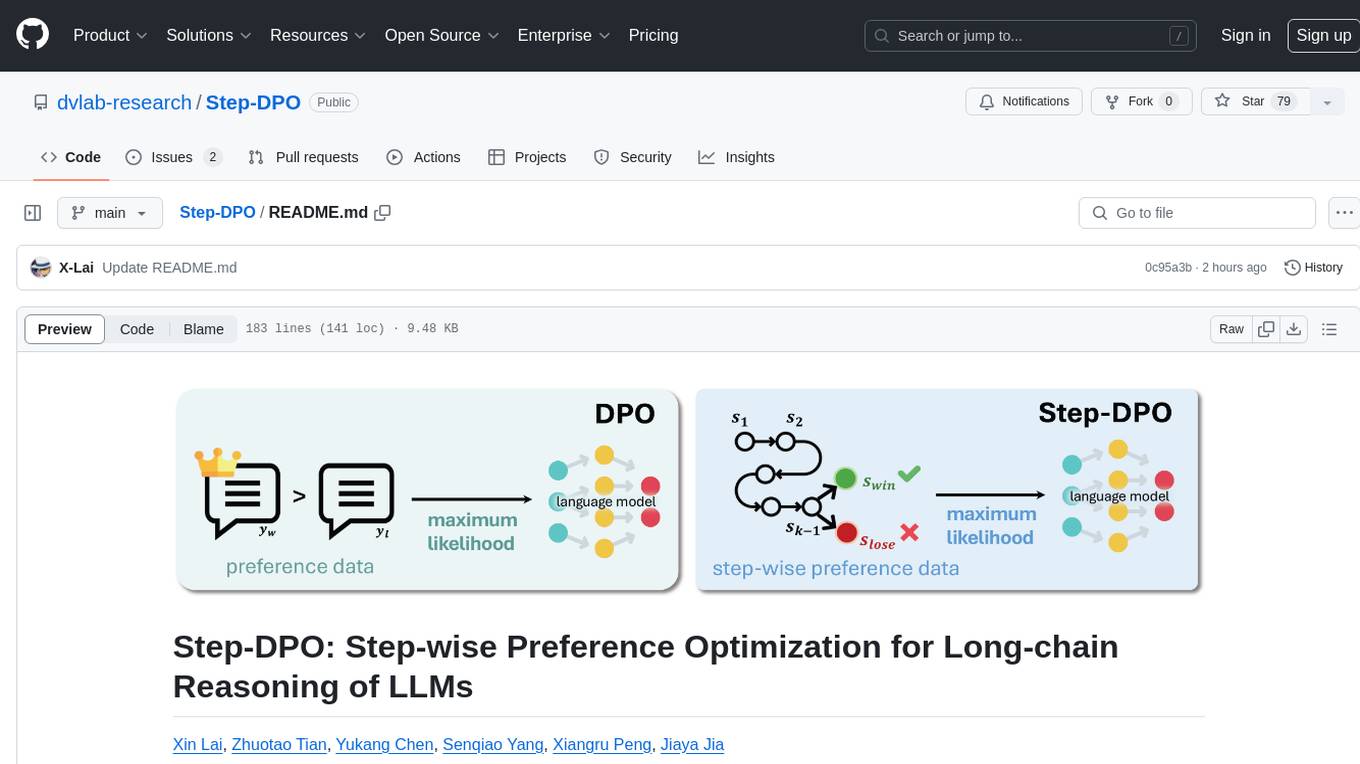

Step-DPO

Step-DPO is a method for enhancing long-chain reasoning ability of LLMs with a data construction pipeline creating a high-quality dataset. It significantly improves performance on math and GSM8K tasks with minimal data and training steps. The tool fine-tunes pre-trained models like Qwen2-7B-Instruct with Step-DPO, achieving superior results compared to other models. It provides scripts for training, evaluation, and deployment, along with examples and acknowledgements.

Open-dLLM

Open-dLLM is the most open release of a diffusion-based large language model, providing pretraining, evaluation, inference, and checkpoints. It introduces Open-dCoder, the code-generation variant of Open-dLLM. The repo offers a complete stack for diffusion LLMs, enabling users to go from raw data to training, checkpoints, evaluation, and inference in one place. It includes pretraining pipeline with open datasets, inference scripts for easy sampling and generation, evaluation suite with various metrics, weights and checkpoints on Hugging Face, and transparent configs for full reproducibility.

EasyEdit

EasyEdit is a Python package for edit Large Language Models (LLM) like `GPT-J`, `Llama`, `GPT-NEO`, `GPT2`, `T5`(support models from **1B** to **65B**), the objective of which is to alter the behavior of LLMs efficiently within a specific domain without negatively impacting performance across other inputs. It is designed to be easy to use and easy to extend.

EVE

EVE is an official PyTorch implementation of Unveiling Encoder-Free Vision-Language Models. The project aims to explore the removal of vision encoders from Vision-Language Models (VLMs) and transfer LLMs to encoder-free VLMs efficiently. It also focuses on bridging the performance gap between encoder-free and encoder-based VLMs. EVE offers a superior capability with arbitrary image aspect ratio, data efficiency by utilizing publicly available data for pre-training, and training efficiency with a transparent and practical strategy for developing a pure decoder-only architecture across modalities.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.