Best AI tools for "Reduce Model Size"

20 - AI tool Sites

Backend.AI

Backend.AI is an enterprise-scale cluster backend for AI frameworks that offers scalability, GPU virtualization, HPC optimization, and DGX-Ready software products. It provides a fast and efficient way to build, train, and serve AI models of any type and size, with flexible infrastructure options. Backend.AI aims to optimize backend resources, reduce costs, and simplify deployment for AI developers and researchers. The platform integrates with existing tools and offers fractional GPU usage and a pay-as-you-play model to maximize resource utilization.

OpenAI Strawberry Model

OpenAI Strawberry Model is a cutting-edge AI initiative that represents a significant leap in AI capabilities, focusing on enhancing reasoning, problem-solving, and complex task execution. It aims to improve AI's ability to handle mathematical problems, programming tasks, and deep research, including long-term planning and action. The project showcases advancements in AI safety and aims to reduce errors in AI responses by generating high-quality synthetic data for training future models. Strawberry is designed to achieve human-like reasoning and is expected to play a crucial role in the development of OpenAI's next major model, codenamed 'Orion.'

Industrial Engineer AI

Industrial Engineer AI is an advanced AI tool designed to assist industrial engineers in optimizing processes and improving efficiency in manufacturing environments. The application utilizes machine learning algorithms to analyze data, identify bottlenecks, and suggest solutions for streamlining operations. With its user-friendly interface and powerful capabilities, Industrial Engineer AI is a valuable tool for professionals looking to enhance productivity and reduce costs in industrial settings.

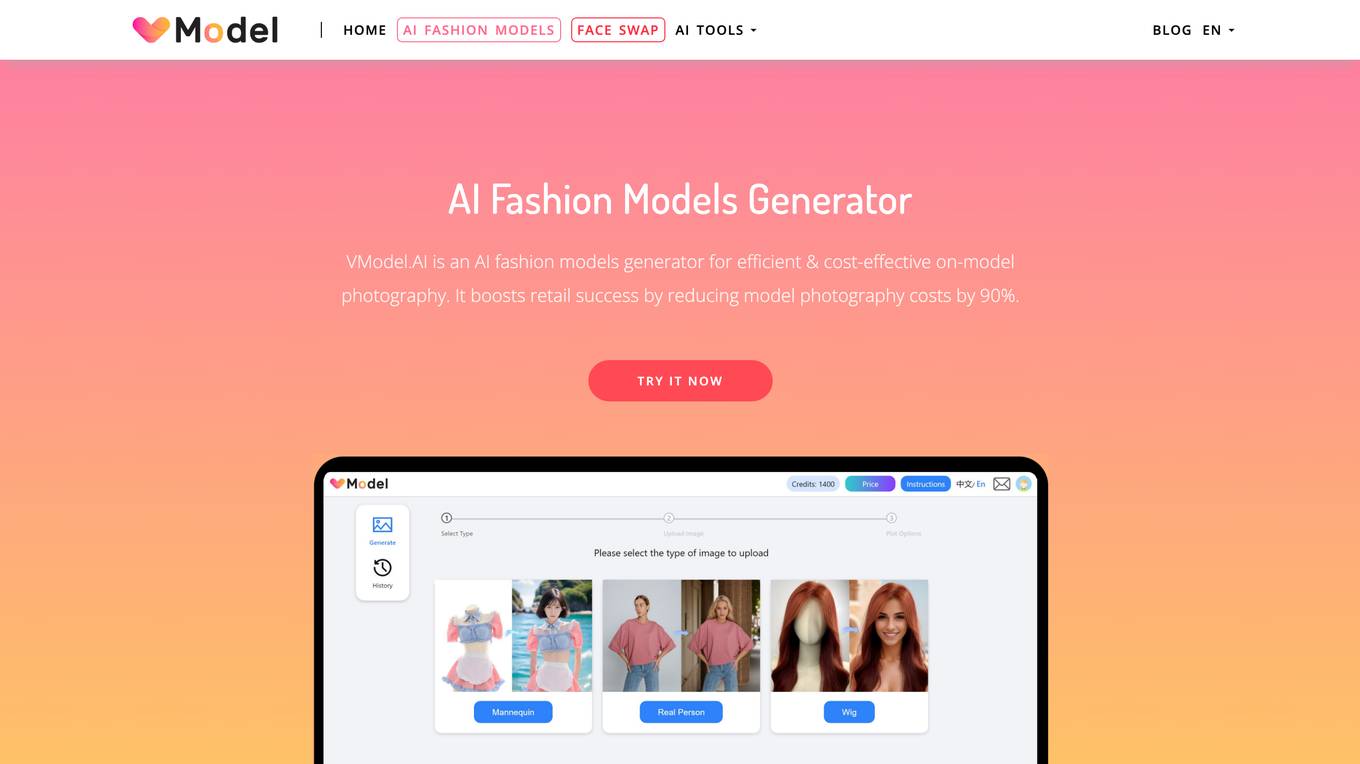

VModel.AI

VModel.AI is an AI fashion model generator for fashion retailers. It uses artificial intelligence to create high-quality on-model photography without elaborate photoshoots, reducing model photography costs by 90%. The tool helps retailers diversify their stores, improve e-commerce engagement, reduce returns, promote diversity and inclusion in fashion, and enhance product offerings.

Frugal

Frugal is an intelligent application cost engineering platform that optimizes code to reduce cloud costs automatically. It is the first AI-powered cost optimization platform built for engineers, empowering them to find and fix inefficiencies in code that drain cloud budgets. The platform aims to reinvent cost engineering by enabling developers to reduce application costs and improve cloud efficiency through automated identification and resolution of wasteful practices.

Pongo

Pongo is an AI-powered tool that helps reduce hallucinations in Large Language Models (LLMs) by up to 80%. It utilizes multiple state-of-the-art semantic similarity models and a proprietary ranking algorithm to ensure accurate and relevant search results. Pongo integrates seamlessly with existing pipelines, whether using a vector database or Elasticsearch, and processes top search results to deliver refined and reliable information. Its distributed architecture ensures consistent latency, handling a wide range of requests without compromising speed. Pongo prioritizes data security, operating at runtime with zero data retention and no data leaving its secure AWS VPC.

Architechtures

Architechtures is a generative AI-powered building design platform that helps architects and real estate developers design optimal residential developments in minutes. The platform uses AI technology to provide instant insights, regulatory confidence, and rapid iterations for architectural projects. Users can input design criteria, model solutions in 2D and 3D, and receive real-time architectural solutions that best fit their standards. Architechtures facilitates a collaborative design process between users and Artificial Intelligence, enabling efficient decision-making and control over design aspects.

Granica

Granica is an AI tool designed for data compression and optimization, enabling users to transform petabytes of data into terabytes with self-optimizing, lossless compression. It offers state-of-the-art technology that works seamlessly across various platforms like Iceberg, Delta, Trino, Spark, Snowflake, and Databricks. Granica helps organizations reduce storage costs, improve query performance, and enhance data accessibility for AI and analytics workloads.

AiPlus

AiPlus is an AI tool designed to serve as a cost-efficient model gateway. It offers users a platform to access and utilize various AI models for their projects and tasks. With AiPlus, users can easily integrate AI capabilities into their applications without the need for extensive development or resources. The tool aims to streamline the process of leveraging AI technology, making it accessible to a wider audience.

Pulze.ai

Pulze.ai is a cloud-based AI-powered customer engagement platform that helps businesses automate their customer service and marketing efforts. It offers a range of features including a chatbot, live chat, email marketing, and social media management. Pulze.ai is designed to help businesses improve their customer satisfaction, increase sales, and reduce costs.

Watermelon

Watermelon is an AI customer service tool that integrates with OpenAI's latest model, GPT-4o. It allows users to build chatbots powered by GPT-4o to automate customer interactions, handle frequently asked questions, and collaborate seamlessly between chatbots and human agents. Watermelon offers features such as chatbot building, customizable chat widgets, statistics tracking, inbox collaboration, and various integrations with APIs and webhooks. The application caters to industries like e-commerce, education, healthcare, and financial services, providing solutions for sales support, lead generation, marketing, HR, and customer service.

Bot Butcher

Bot Butcher is an AI-powered antispam API for websites that helps web developers combat contact form spam bots using artificial intelligence. It offers a modern alternative to reCAPTCHA, maximizing privacy by classifying messages as spam or not spam with a large language model. The tool is designed for enterprise scalability, vertical SaaS, and website builder apps, providing continuous model improvements and context-aware classification while focusing on privacy.

SellerPic

SellerPic is an AI image tool designed specifically for e-commerce sellers to enhance their product images effortlessly. It offers AI Fashion Model and AI Product Image features to transform DIY snapshots into professional, studio-quality images in a fraction of the time. SellerPic focuses on boosting sales by providing high-quality product images that captivate the audience and improve conversion rates. Trusted by sellers across various platforms, SellerPic is a game-changer for e-commerce marketing teams, creatives, and store owners.

Anycores

Anycores is an AI tool designed to optimize the performance of deep neural networks and reduce the cost of running AI models in the cloud. Its platform offers automated tuning, inference consultation, a zoo of optimized networks, and tooling for reducing AI model cost. Anycores focuses on faster execution, reducing inference time by more than 10x, and on footprint reduction during model deployment. It is device agnostic, supporting Nvidia and AMD GPUs; Intel, ARM, and AMD CPUs; servers; and edge devices. The tool aims to provide highly optimized, low-footprint networks tailored to specific deployment scenarios.

AI Lean Canvas Generator

The AI Lean Canvas Generator is an AI-powered tool that creates a Lean Canvas for a company from its description. It simplifies the process of generating a one-page business plan by using artificial intelligence to streamline the creation and validation of business models. The tool is based on the Lean Startup methodology and emphasizes rapid experimentation and iterative development to reduce risk and uncertainty in the early stages of a business. It offers a user-friendly interface that allows users to input their company's information and quickly generate a comprehensive Lean Canvas.

VirtuLook Product Photo Generator

VirtuLook Product Photo Generator is an AI-powered tool that revolutionizes product photography by generating high-quality images using cutting-edge AI algorithms. It offers features like fashion model generation, product background generation, and text-based photo generation. The tool helps businesses enhance their online presence, drive sales conversions, and reduce production costs by providing visually appealing product images. Users can easily create lifelike photos of virtual models, experiment with different looks, and visualize clothing creations without the need for physical prototypes or expensive photo shoots.

ASKTOWEB

ASKTOWEB is an AI-powered service that enhances websites by adding AI search buttons to SaaS landing pages, software documentation pages, and other websites. It allows visitors to easily search for information without needing specific keywords, making websites more user-friendly and useful. ASKTOWEB analyzes user questions to improve site content and discover customer needs. The service offers multi-model accuracy verification, direct reference jump links, multilingual chatbot support, effortless attachment with a single line of script, and a simple UI without annoying pop-ups. ASKTOWEB reduces the burden on customer support by acting as a buffer for inquiries about available information on the website.

Directly

Directly is an AI-powered platform that offers on-demand and automated customer support solutions. The platform connects organizations with highly qualified experts who can handle customer inquiries efficiently. By leveraging AI and machine learning, Directly automates repetitive questions, improving business continuity and digital transformation. The platform follows a pay-for-performance compensation model and provides global support in multiple languages. Directly aims to enhance customer satisfaction, reduce contact center volume, and save costs for businesses.

Truffle

Truffle is an AI-powered application designed to help teams retain knowledge built up in Slack by automatically answering questions and generating documentation. Truffle summarizes important conversations and makes them easily searchable, reducing the time spent on repetitive questions. The application offers a simple pricing model and can be added to a single Slack channel for free, with the option to upgrade for unlimited channels. Truffle is suitable for various teams, including HR, IT support, and product teams, and aims to shift the focus back to core tasks and improve knowledge sharing within the organization.

Wild Moose

Wild Moose is an AI-powered SRE Copilot tool designed to help companies handle incidents efficiently. It offers fast and efficient root cause analysis that improves with every incident by automatically gathering and analyzing logs, metrics, and code to pinpoint root causes. The tool converts tribal knowledge into custom playbooks, constantly improves performance with a system model that learns from each incident, and integrates seamlessly with various observability tools and deployment platforms. Wild Moose reduces cognitive load on teams, automates routine tasks, and provides actionable insights in real-time, enabling teams to act fast during outages.

4 - Open Source AI Tools

aimet

AIMET is a library that provides advanced model quantization and compression techniques for trained neural network models. Its features are designed to improve the run-time performance of deep learning models by lowering compute and memory requirements with minimal impact on task accuracy. AIMET is designed to work with PyTorch, TensorFlow, and ONNX models. The project also hosts the AIMET Model Zoo, a collection of popular neural network models optimized for 8-bit inference, and provides recipes for quantizing floating-point models with AIMET.
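
As a rough illustration of what 8-bit quantization involves, the sketch below maps a list of floating-point weights to unsigned 8-bit codes and back using a generic affine (scale and zero-point) scheme. It does not use AIMET and is not AIMET's API; the function names `quantize_uint8` and `dequantize` are hypothetical, and real toolkits add calibration and per-channel handling that are omitted here.

```python
def quantize_uint8(values):
    """Affine quantization of floats to integer codes in [0, 255] (illustrative only)."""
    lo, hi = min(values), max(values)
    # Widen the range to include zero so that 0.0 maps exactly to an integer code.
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 when every value is zero
    zero_point = round(-lo / scale)
    # Clamp so that rounding at the range edges cannot leave the 8-bit range.
    codes = [max(0, min(255, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Map integer codes back to approximate float values."""
    return [(c - zero_point) * scale for c in codes]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
codes, scale, zero_point = quantize_uint8(weights)
restored = dequantize(codes, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, max_error)
```

Each weight now occupies one byte instead of four (for float32), at the cost of a reconstruction error bounded by roughly one quantization step.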

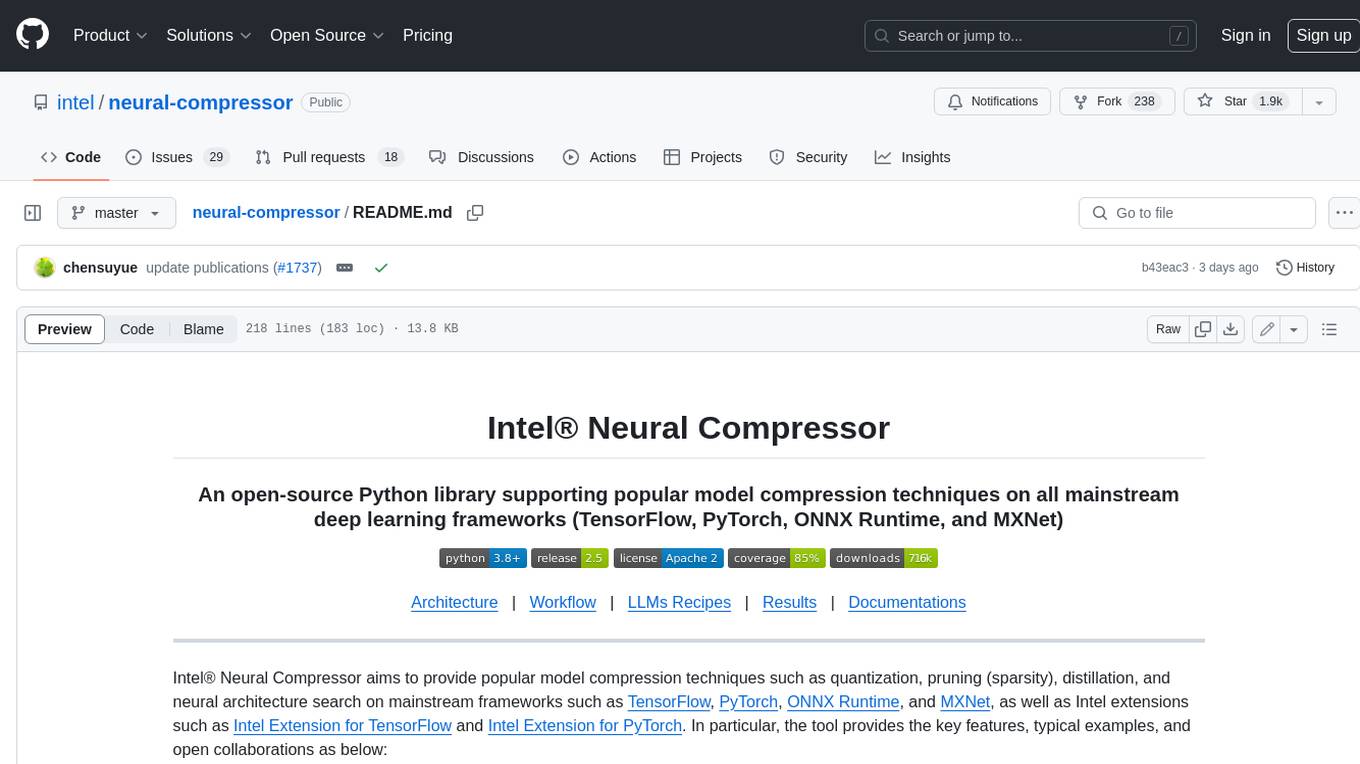

neural-compressor

Intel® Neural Compressor is an open-source Python library that supports popular model compression techniques such as quantization, pruning (sparsity), distillation, and neural architecture search on mainstream frameworks such as TensorFlow, PyTorch, ONNX Runtime, and MXNet. It provides key features, typical examples, and open collaborations, including support for a wide range of Intel hardware, validation of popular LLMs, and collaboration with cloud marketplaces, software platforms, and open AI ecosystems.
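
Of the techniques listed, distillation is the least self-explanatory, so a minimal sketch may help. It computes a commonly used distillation loss: the KL divergence between temperature-softened teacher and student output distributions, scaled by the squared temperature. This is a generic formulation, not Intel Neural Compressor's API; the function names and the example logits are assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]  # hypothetical teacher logits for one example
loss_far = distillation_loss([0.0, 0.0, 0.0], teacher)   # untrained student
loss_near = distillation_loss([3.5, 1.2, 0.4], teacher)  # student close to teacher
loss_same = distillation_loss(teacher, teacher)
print(loss_far, loss_near, loss_same)
```

The loss shrinks as the smaller student model's outputs approach the teacher's, which is the signal used to train a compact model to imitate a larger one.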

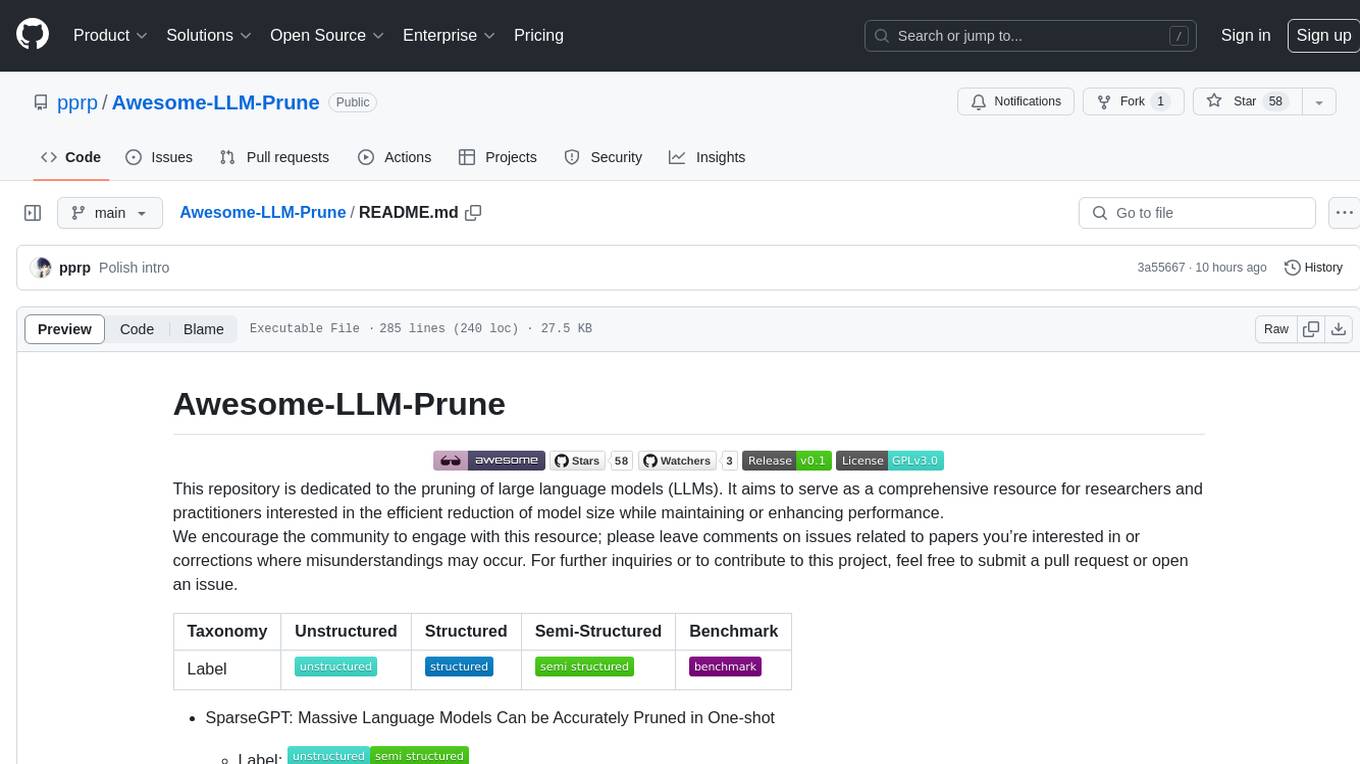

Awesome-LLM-Prune

This repository is dedicated to the pruning of large language models (LLMs). It aims to serve as a comprehensive resource for researchers and practitioners interested in the efficient reduction of model size while maintaining or enhancing performance. The repository contains various papers, summaries, and links related to different pruning approaches for LLMs, along with author information and publication details. It covers a wide range of topics such as structured pruning, unstructured pruning, semi-structured pruning, and benchmarking methods. Researchers and practitioners can explore different pruning techniques, understand their implications, and access relevant resources for further study and implementation.
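
The three pruning families named above differ mainly in which weights may be removed. The sketch below contrasts them on toy data using simple magnitude criteria. It is an assumption-laden illustration rather than a method taken from the repository: the function names are hypothetical, and published LLM pruning approaches use more elaborate importance scores.

```python
def prune_unstructured(weights, sparsity):
    """Zero the smallest-magnitude fraction of weights, wherever they sit."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_prune])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def prune_n_of_m(weights, n=2, m=4):
    """Semi-structured pruning: keep the n largest-magnitude weights in each group of m."""
    pruned = []
    for start in range(0, len(weights), m):
        group = weights[start:start + m]
        ranked = sorted(range(len(group)), key=lambda i: abs(group[i]), reverse=True)
        keep = set(ranked[:n])
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


def prune_structured(rows, keep):
    """Structured pruning: keep only the `keep` rows with the largest L1 norm."""
    ranked = sorted(range(len(rows)), key=lambda i: sum(abs(w) for w in rows[i]), reverse=True)
    return [rows[i] for i in sorted(ranked[:keep])]


weights = [0.9, 0.8, 0.7, 0.6, 0.1, 0.2, 0.3, 0.4]
unstructured = prune_unstructured(weights, sparsity=0.5)
semi_structured = prune_n_of_m(weights, n=2, m=4)
structured = prune_structured([weights[:4], weights[4:]], keep=1)
print(unstructured)     # [0.9, 0.8, 0.7, 0.6, 0.0, 0.0, 0.0, 0.0]
print(semi_structured)  # [0.9, 0.8, 0.0, 0.0, 0.0, 0.0, 0.3, 0.4]
print(structured)       # [[0.9, 0.8, 0.7, 0.6]]
```

All three calls remove half of the weights, but only structured pruning shrinks the tensor shape; the other two leave zeros in place and depend on sparse kernels or hardware support to turn that sparsity into speed.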

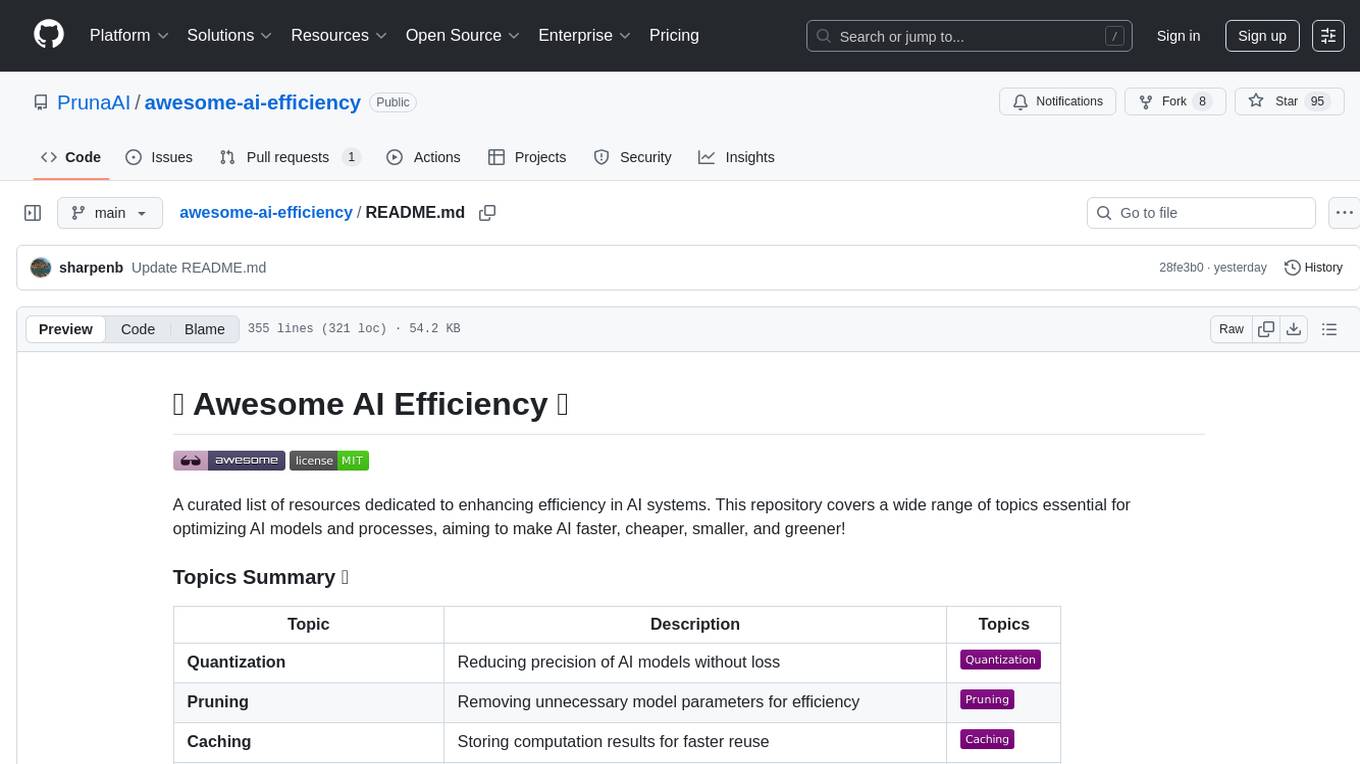

awesome-ai-efficiency

Awesome AI Efficiency is a curated list of resources dedicated to enhancing efficiency in AI systems. The repository covers various topics essential for optimizing AI models and processes, aiming to make AI faster, cheaper, smaller, and greener. It includes topics like quantization, pruning, caching, distillation, factorization, compilation, parameter-efficient fine-tuning, speculative decoding, hardware optimization, training techniques, inference optimization, sustainability strategies, and scalability approaches.
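
To make the "smaller" goal concrete, the arithmetic behind one listed topic, factorization, can be worked through in a few lines. The sketch assumes a weight matrix W of shape rows x cols is approximated by the product of two thinner matrices A (rows x rank) and B (rank x cols); the layer size and rank are hypothetical numbers chosen for illustration, not figures from the repository.

```python
def dense_params(rows, cols):
    """Parameter count of a full rows x cols weight matrix."""
    return rows * cols


def low_rank_params(rows, cols, rank):
    """Parameter count of A (rows x rank) plus B (rank x cols)."""
    return rank * (rows + cols)


rows, cols, rank = 4096, 4096, 64  # hypothetical layer size and rank
full = dense_params(rows, cols)
compact = low_rank_params(rows, cols, rank)
ratio = full / compact
print(full, compact, ratio)  # 16777216 524288 32.0
```

The saving grows as the rank shrinks, but so does the approximation error, so the rank is usually chosen by checking accuracy on held-out data.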

20 - OpenAI GPTs

Carbon Footprint Calculator

A breakdown of carbon footprint calculations and advice on how to reduce your footprint.

Eco Advisor

I'm an Environmental Impact Analyzer, here to calculate and reduce your carbon footprint.

Your Business Taxes: Guide

Insightful articles and guides on business tax strategies at AfterTaxCash. Discover expert advice and tips to optimize tax efficiency, reduce liabilities, and maximize after-tax profits for your business. Stay up to date to make informed financial decisions.

EcoTracker Pro 🌱📊

Track & analyze your carbon footprint with ease! EcoTracker Pro helps you make eco-friendly choices & reduce your impact. 🌎♻️

Tax Optimization Techniques for Investors

💼📉 Maximize your investments with AI-driven tax optimization! 💡 Learn strategies to reduce taxes 📊 and boost after-tax returns 💰. Get tailored advice 📘 for smart investing 📈. Not a financial advisor. 🚀💡

🥦✨ Low-FODMAP Meal Guide 🍇📘

Your go-to GPT for navigating the low-FODMAP diet! Find recipes, substitutes, and meal plans tailored to reduce IBS symptoms. 🍽️🌿

Process Optimization Advisor

Improves operational efficiency by optimizing processes and reducing waste.

Sustainable Energy K-12 School Expert

The world's trusted source for cost-effective energy management in schools.

Adorable Zen Master

A gateway to Zen's joy and wisdom. Explore mindfulness, meditation, and the path of sudden awareness through play with this charming, friendly guide.