Best AI tools for: Improve Inference Speed

20 - AI tool Sites

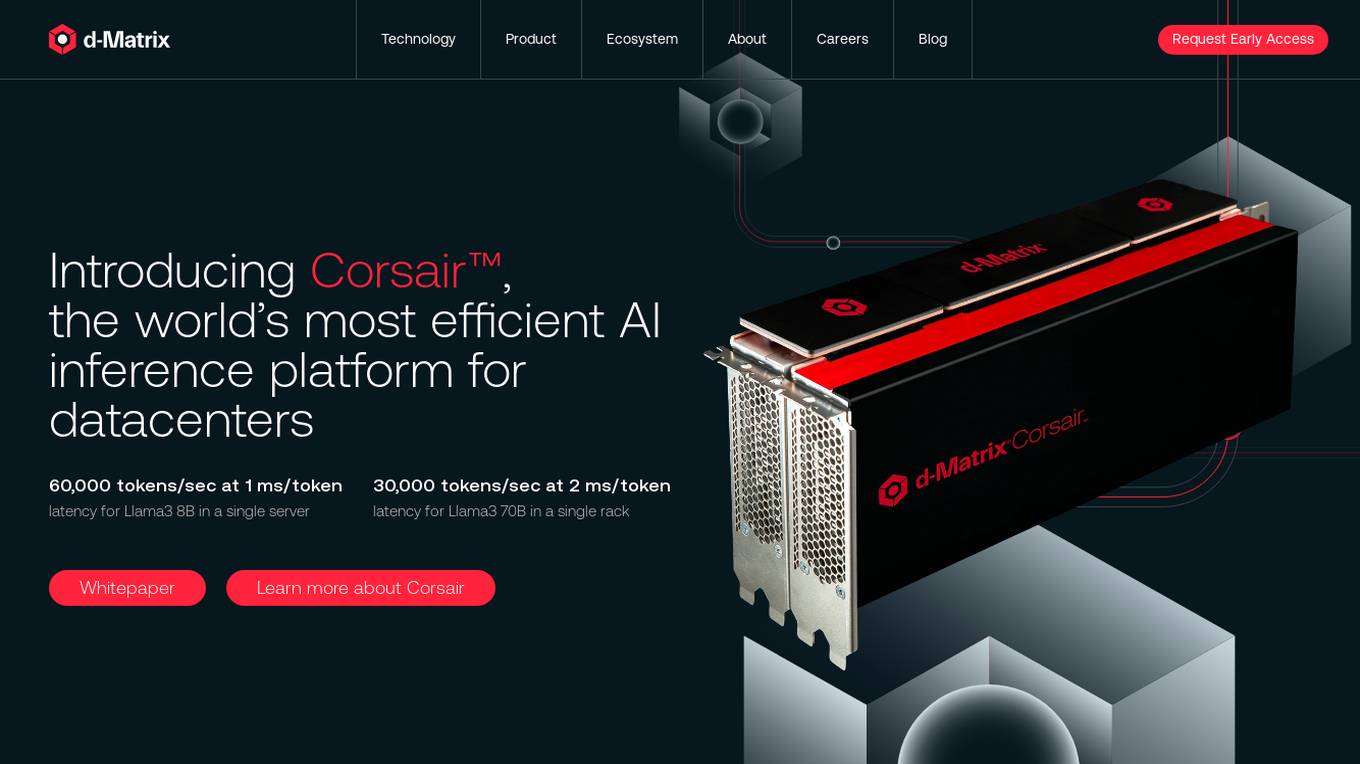

d-Matrix

d-Matrix is an AI tool that offers ultra-low latency batched inference for generative AI. It introduces Corsair™, which the company describes as the world's most efficient AI inference platform for datacenters, providing high performance, efficiency, and scalability for large-scale inference tasks. The tool aims to transform the economics of AI inference by delivering fast, sustainable, and scalable AI solutions without compromising on speed or usability.

Lamini

Lamini is an enterprise-level LLM platform that offers precise recall with Memory Tuning, enabling teams to achieve over 95% accuracy even with large amounts of specific data. It guarantees JSON output and delivers massive throughput for inference. Lamini is designed to be deployed anywhere, including air-gapped environments, and supports training and inference on Nvidia or AMD GPUs. The platform is known for its factual LLMs and reengineered decoder that ensures 100% schema accuracy in the JSON output.

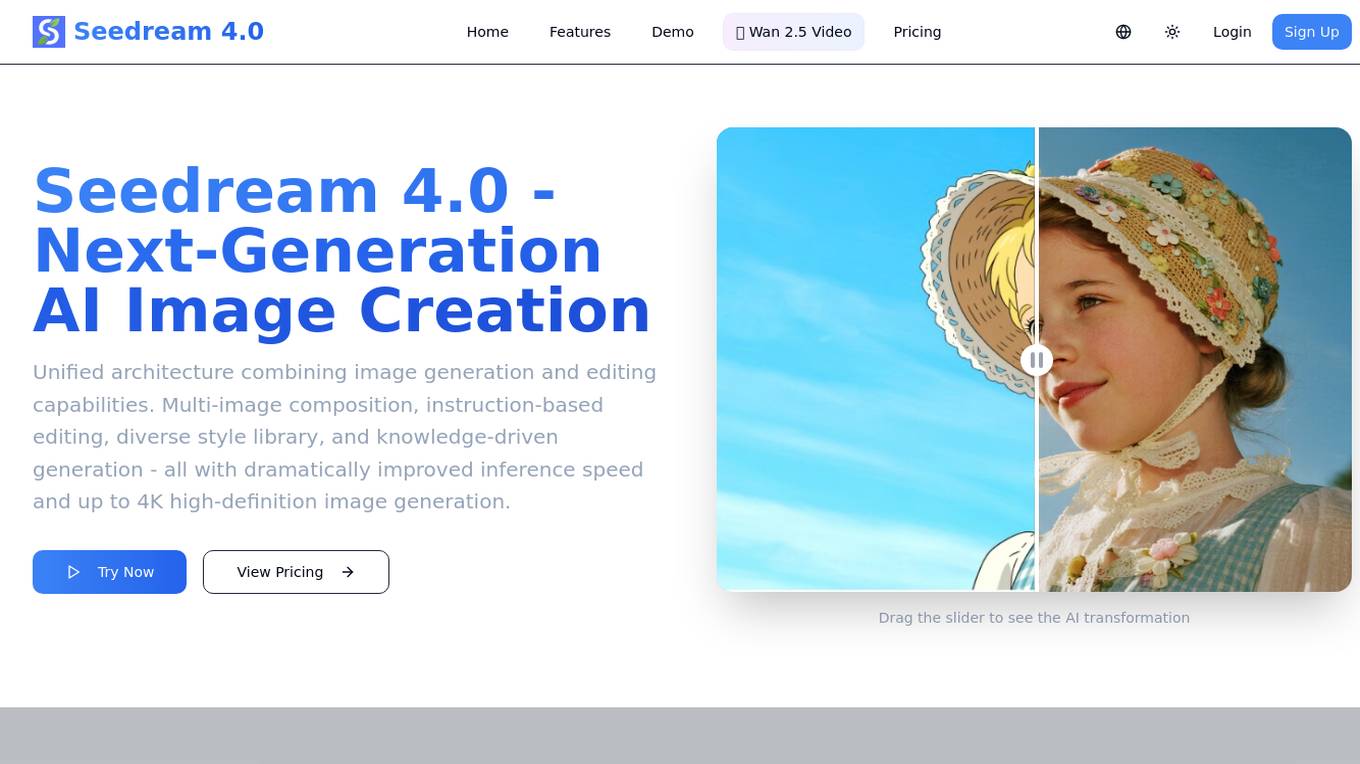

Seedream 4.0

Seedream 4.0 is an AI image generator and editor built on a unified architecture that combines image generation and editing. It provides features such as multi-image composition, instruction-based editing, a diverse style library, and knowledge-driven generation. Users can experience the next generation of AI image creation with dramatically improved inference speed and up to 4K high-definition image generation.

Tensordyne

Tensordyne is a generative AI inference compute tool designed and developed in the US and Germany. It focuses on re-engineering AI math and defining AI inference to run the biggest AI models for thousands of users at a fraction of the rack count, power, and cost. Tensordyne offers custom silicon and systems built on the Zeroth Scaling Law, enabling breakthroughs in AI technology.

Cast AI

Cast AI is an intelligent Kubernetes automation platform that offers live migration for AWS EKS, enabling users to migrate stateful workloads with zero downtime. The platform provides application performance automation by automating and optimizing the entire application stack, including Kubernetes cluster optimization, security, workload optimization, LLM optimization for AIOps, cost monitoring, and database optimization. Cast AI integrates with various cloud services and tools, offering solutions for migration of stateful workloads, inference at scale, and cutting AI costs without sacrificing scale. The platform helps users improve performance, reduce costs, and boost productivity through end-to-end application performance automation.

Modular

Modular is a fast, scalable Gen AI inference platform that offers a comprehensive suite of tools and resources for AI development and deployment. It provides solutions for AI model development, deployment options, AI inference, research, and resources like documentation, models, tutorials, and step-by-step guides. Modular supports GPU and CPU performance, intelligent scaling to any cluster, and offers deployment options for various editions. The platform enables users to build agent workflows, utilize AI retrieval and controlled generation, develop chatbots, engage in code generation, and improve resource utilization through batch processing.

ThirdAI

ThirdAI is an AI platform that offers a production-ready solution for building and deploying AI applications quickly and efficiently. It provides advanced AI/GenAI technology that can run on any infrastructure, reducing barriers to delivering production-grade AI solutions. With features like enterprise SSO, built-in models, no-code interface, and more, ThirdAI empowers users to create AI applications without the need for specialized GPU servers or AI skills. The platform covers the entire workflow of building AI applications end-to-end, allowing for easy customization and deployment in various environments.

Inworld

Inworld is an AI framework designed for games and media, offering a production-ready framework for building AI agents with client-side logic and local model inference. It provides tools optimized for real-time data ingestion, low latency, and massive scale, enabling developers to create engaging and immersive experiences for users. Inworld allows for building custom AI agent pipelines, refining agent behavior and performance, and seamlessly transitioning from prototyping to production. With support for C++, Python, and game engines, Inworld aims to future-proof AI development by integrating 3rd-party components and foundational models to avoid vendor lock-in.

poolside

poolside is an advanced foundational AI model designed specifically for software engineering challenges. It allows users to fine-tune the model on their own code, enabling it to understand project uniqueness and complexities that generic models can't grasp. The platform aims to empower teams to build better, faster, and happier by providing a personalized AI model that continuously improves. In addition to the AI model for writing code, poolside offers an intuitive editor assistant and an API for developers to leverage.

pplx-api

The pplx-api is an AI tool designed to provide documentation and examples for blazingly fast LLM inference. It offers a reference for developers to integrate AI capabilities into their applications efficiently. The tool focuses on enhancing natural language processing tasks by leveraging advanced models and algorithms. Users can access detailed guides, API references, changelogs, and engage in discussions related to AI technologies.

Segwise

Segwise is an AI tool designed to help game developers increase their game's Lifetime Value (LTV) by providing insights into player behavior and metrics. The tool uses AI agents to detect causal LTV drivers, root causes of LTV drops, and opportunities for growth. Segwise offers features such as running causal inference models on player data, hyper-segmenting player data, and providing instant answers to questions about LTV metrics. It also promises seamless integrations with gaming data sources and warehouses, ensuring data ownership and transparent pricing. The tool aims to simplify the process of improving LTV for game developers.

BuildAi

BuildAi is an AI tool designed to provide the lowest-cost GPU cloud for AI training on the market. The platform is powered by renewable energy, enabling companies to train AI models at a significantly reduced cost. BuildAi offers interruptible pricing, short-term reserved capacity, and high-uptime pricing options. The application focuses on optimizing infrastructure for training and fine-tuning machine learning models, not inference, and aims to decrease the impact of computing on the planet. With features like data transfer support, SSH access, and monitoring tools, BuildAi offers a comprehensive solution for ML teams.

Anote

Anote is a human-centered AI company that provides a suite of products and services to help businesses improve their data quality and build better AI models. Anote's products include a data labeler, a private chatbot, a model inference API, and a lead generation tool. Anote's services include data annotation, model training, and consulting.

CHAI AI

CHAI AI is a leading conversational AI platform that focuses on building AI solutions for quant traders. The platform has secured significant funding rounds to expand its computational capabilities and talent acquisition. CHAI AI offers a range of models and techniques, such as reinforcement learning with human feedback, model blending, and direct preference optimization, to enhance user engagement and retention. The platform aims to provide users with the ability to create their own ChatAIs and offers custom GPU orchestration for efficient inference. With a strong focus on user feedback and recognition, CHAI AI continues to innovate and improve its AI models to meet the demands of a growing user base.

TechTarget

TechTarget is a leading provider of purchase intent data and marketing services for the technology industry. Its data-driven solutions enable technology companies to identify and engage with their target audiences, and to measure the impact of their marketing campaigns. It offers a range of products and services.

MakerJournal

MakerJournal is a powerful AI tool designed to help users improve social engagement and increase audience by automatically generating summaries of log entries. It allows users to manage multiple projects, generate updates from GitHub commits, and post to social media accounts effortlessly. MakerJournal simplifies the process of deciding what to post, enabling users to focus on creating great products while enhancing their social rankings. With easy-to-use features and automatic summaries, MakerJournal streamlines the process of logging progress and increasing social influence.

Bodify

Bodify is a predictive analytics platform that helps online retailers improve the shopping experience for their customers. By using AI to analyze customer data, Bodify can provide retailers with insights into what products customers are most likely to purchase, what sizes and styles they prefer, and what factors influence their decisions. This information can then be used to create more personalized and relevant shopping experiences, which can lead to higher conversion rates, increased customer loyalty, and improved bottom-line results.

Cast.app

Cast.app is an AI-driven platform that automates customer success management, enabling businesses to grow and preserve revenue by leveraging AI agents. The application offers a range of features such as automating customer onboarding, driving usage and adoption, minimizing revenue churn, influencing renewals and revenue expansion, and scaling without increasing team size. Cast.app provides personalized recommendations, insights, and customer communications, enhancing customer engagement and satisfaction. The platform is designed to streamline customer interactions, improve retention rates, and drive revenue growth through AI-powered automation and personalized customer experiences.

100 Minds

100 Minds is an AI-powered platform that focuses on turning learning into practice through role play, instant feedback, leadership mentoring, and measurable impact. The platform offers conversational AI practice to develop critical human skills applicable to various roles such as leadership, sales, operations, and individual contributions. It addresses the systemic problem of lack of real-world practice in learning and emphasizes the importance of building skills through practice, feedback, and repetition in context. 100 Minds provides a safe space to fail and retry, instant in-the-moment feedback, and scalable practice experiences across locations and shifts. It aims to improve skills through real scenarios, actionable feedback, and measurable progress at individual and organizational levels.

partnrUP

partnrUP is an AI-powered influencer marketing platform that automates the influencer marketing process from discovery to campaign management. It offers features such as AI influencer discovery, influencer recruitment, creator management, influencer gifting, AI creative briefs, campaign management, content management, content performance analytics, retail page syndication, and customer user-generated content. The platform helps brands source creators, run campaigns, track results, and turn influence into measurable growth. partnrUP caters to startups, midmarket businesses, enterprises, and agencies, providing solutions for influencer marketing, full funnel management, ambassador pages, affiliate programs, and commerce enablement. It offers fully managed services without overhead, resulting in higher creator response rates, less time spent on coordination, and improved creator payment accuracy and compliance.

4 - Open Source AI Tools

Awesome-Resource-Efficient-LLM-Papers

A curated list of high-quality papers on resource-efficient Large Language Models (LLMs) with a focus on various aspects such as architecture design, pre-training, fine-tuning, inference, system design, and evaluation metrics. The repository covers topics like efficient transformer architectures, non-transformer architectures, memory efficiency, data efficiency, model compression, dynamic acceleration, deployment optimization, support infrastructure, and other related systems. It also provides detailed information on computation metrics, memory metrics, energy metrics, financial cost metrics, network communication metrics, and other metrics relevant to resource-efficient LLMs. The repository includes benchmarks for evaluating the efficiency of NLP models and references for further reading.

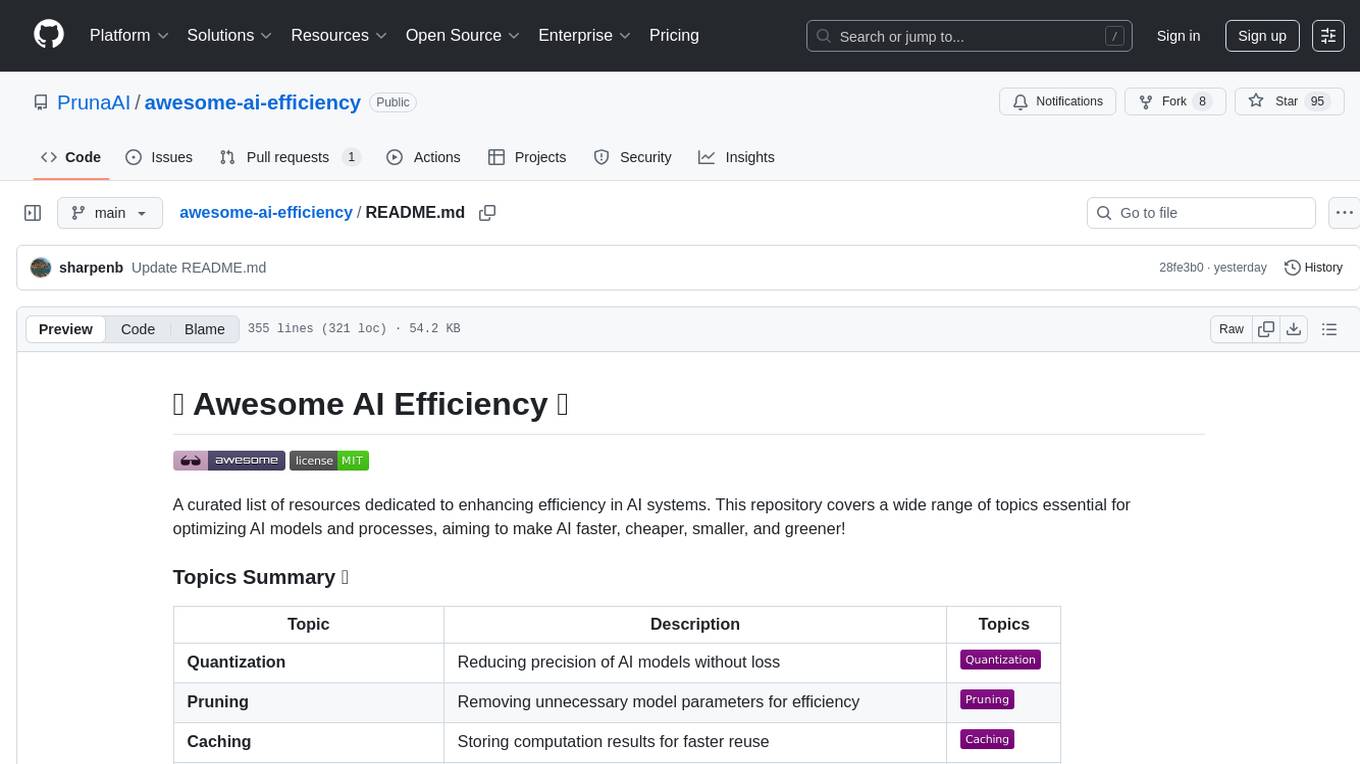

awesome-ai-efficiency

Awesome AI Efficiency is a curated list of resources dedicated to enhancing efficiency in AI systems. The repository covers various topics essential for optimizing AI models and processes, aiming to make AI faster, cheaper, smaller, and greener. It includes topics like quantization, pruning, caching, distillation, factorization, compilation, parameter-efficient fine-tuning, speculative decoding, hardware optimization, training techniques, inference optimization, sustainability strategies, and scalability approaches.
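As a rough illustration of one topic on that list, quantization, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. The function names and sample weights are hypothetical and are not taken from the repository; real libraries operate on tensors and often use per-channel scales.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 codes."""
    return [q * scale for q in quantized]


# Illustrative weights only (assumption, not real model data).
weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

Each restored value differs from the original by at most half of `scale`, which is the trade made for storing one byte per weight instead of four.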

unified-cache-management

Unified Cache Manager (UCM) is a tool designed to persist the LLM KVCache and replace redundant computations through various retrieval mechanisms. It supports prefix caching and offers training-free sparse attention retrieval methods, enhancing performance for long sequence inference tasks. UCM also provides a PD disaggregation solution based on a storage-compute separation architecture, enabling easier management of heterogeneous computing resources. When integrated with vLLM, UCM significantly reduces inference latency in scenarios like multi-turn dialogue and long-context reasoning tasks.
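The idea behind prefix caching can be sketched with a toy example. This is not UCM's or vLLM's API: the `PrefixCache` class, the `prefill` function, and the placeholder `("kv", token)` entries are all assumptions made for illustration, standing in for real per-token key/value tensors.

```python
class PrefixCache:
    """Toy store mapping a token prefix to the state computed for it."""

    def __init__(self):
        self._store = {}

    def longest_prefix(self, tokens):
        # Try the longest candidate first so the most work is reused.
        for end in range(len(tokens), 0, -1):
            key = tuple(tokens[:end])
            if key in self._store:
                return end, self._store[key]
        return 0, ()

    def put(self, tokens, state):
        self._store[tuple(tokens)] = state


def prefill(tokens, cache):
    """Return (state, number of tokens actually computed)."""
    reused, state = cache.longest_prefix(tokens)
    state = list(state)
    computed = 0
    for tok in tokens[reused:]:
        state.append(("kv", tok))  # placeholder for real key/value computation
        computed += 1
    cache.put(tokens, tuple(state))
    return tuple(state), computed


cache = PrefixCache()
turn1 = ["system", "hello"]
turn2 = turn1 + ["how", "are", "you"]
_, cost1 = prefill(turn1, cache)  # nothing cached yet: both tokens computed
_, cost2 = prefill(turn2, cache)  # first two tokens reused from turn1
```

In a multi-turn dialogue each new turn extends the previous prompt, so only the new tokens need computing. This sketch caches whole prompts only; production systems typically cache at block granularity.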

InferenceMAX

InferenceMAX™ is an open-source benchmarking tool designed to track real-time performance improvements in popular open-source inference frameworks and models. It runs a suite of benchmarks every night to capture progress in near real-time, providing a live indicator of inference performance. The tool addresses the challenge of rapidly evolving software ecosystems by benchmarking the latest software packages, ensuring that benchmarks do not go stale. InferenceMAX™ is supported by industry leaders and contributors, providing transparent and reproducible benchmarks that help the ML community make informed decisions about hardware and software performance.
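For readers unfamiliar with what an inference benchmark measures, the following is a minimal sketch of a latency and throughput harness. It does not reflect InferenceMAX's methodology or code; `benchmark`, `percentile`, and the `fake_infer` stand-in for a model call are hypothetical names chosen for this example.

```python
import math
import time


def percentile(sorted_values, q):
    """Nearest-rank percentile of an already sorted list, with q in (0, 1]."""
    rank = max(1, math.ceil(q * len(sorted_values)))
    return sorted_values[rank - 1]


def benchmark(infer, requests, warmup=2):
    """Time each request sequentially and summarize latency and throughput."""
    for req in requests[:warmup]:
        infer(req)  # warm-up calls are not measured
    latencies = []
    start = time.perf_counter()
    for req in requests:
        t0 = time.perf_counter()
        infer(req)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    latencies.sort()
    return {
        "p50_s": percentile(latencies, 0.50),
        "p99_s": percentile(latencies, 0.99),
        "throughput_rps": len(requests) / elapsed if elapsed > 0 else float("inf"),
    }


def fake_infer(prompt):
    # Hypothetical stand-in for a real model call.
    return sum(ord(c) for c in prompt * 200)


report = benchmark(fake_infer, ["hello world"] * 50)
```

Reporting a tail percentile alongside the median matters because a serving stack can look fast on average while a small share of requests is much slower.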

20 - OpenAI GPTs

Digital Experiment Analyst

Demystifies experimentation and causal inference, with a focus on 1-sided tests.

人為的コード性格分析(Code Persona Analyst)

A tool that analyzes code, focusing on style rather than language, and infers the personality of the person who wrote the program.

Persuasion Maestro

Expert in NLP, persuasion, and body language, teaching through lessons and practical tests.

UX & UI

Gives you tips and suggestions on how you can improve your application for your users.

Memory Enhancer

Offers exercises and techniques to improve memory retention and cognitive functions.

English Conversation Role Play Creator

Generates conversation examples and chunks for specified situations. Improve your instantaneous conversational skills through repetitive practice!

Customer Retention Consultant

Analyzes customer churn and provides strategies to improve loyalty and retention.

Agile Coach Expert

Agile expert providing practical, step-by-step advice on the agile way of working for your team and organisation, whether you're looking to improve your Agile skills or find solutions to specific problems. Includes Scrum, Kanban, and SAFe knowledge.

Kemi - Research & Creative Assistant

I improve marketing effectiveness by designing stunning research-led assets in a flash!

Quickest Feedback for Language Learner

Helps improve language skills through interactive scenarios and feedback.

Le VPN - Your Secure Internet Proxy

Bypass Internet censorship & improve your security online