robustmq

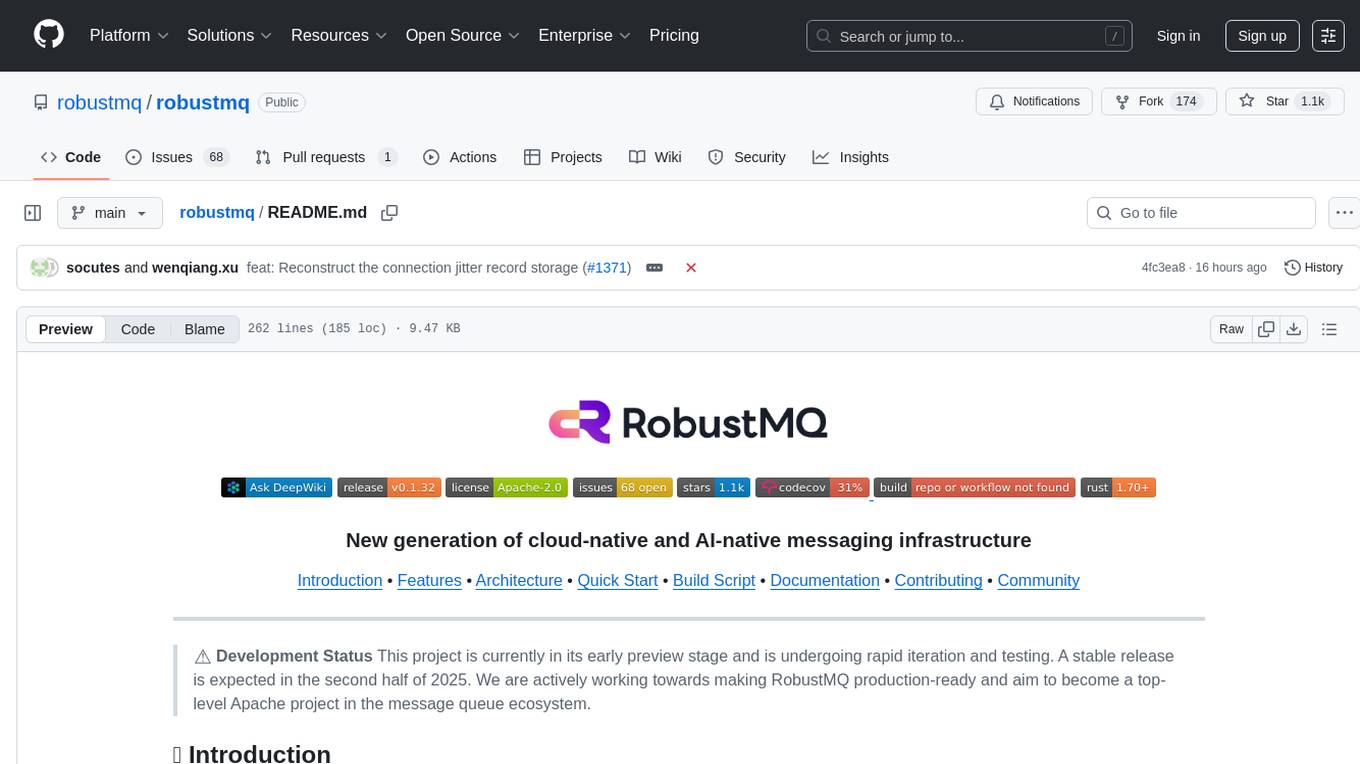

New generation of cloud-native and AI-native messaging infrastructure.

Stars: 1285

RobustMQ is a next-generation, high-performance, multi-protocol message queue built in Rust. It aims to create a unified messaging infrastructure tailored for modern cloud-native and AI systems. With features like high performance, distributed architecture, multi-protocol support, pluggable storage, cloud-native readiness, multi-tenancy, security features, observability, and user-friendliness, RobustMQ is designed to be production-ready and become a top-level Apache project in the message queue ecosystem by the second half of 2025.

README:


⚠️ Development Status: This project is in an early preview stage and is undergoing rapid iteration and testing. A stable release is expected in the second half of 2025. We are actively working towards making RobustMQ production-ready and aim to become a top-level Apache project in the message queue ecosystem.

RobustMQ is a next-generation, high-performance, multi-protocol message queue built in Rust. Our vision is to create a unified messaging infrastructure tailored for modern cloud-native and AI systems.

- 🚀 High Performance: Built with Rust, ensuring memory safety, zero-cost abstractions, and blazing-fast performance

- 🏗️ Distributed Architecture: Separation of compute, storage, and scheduling for optimal scalability and resource utilization

- 🔌 Multi-Protocol Support: Native support for MQTT (3.x/4.x/5.x), AMQP, Kafka, and RocketMQ protocols

- 💾 Pluggable Storage: Modular storage layer supporting local files, S3, HDFS, and other storage backends

- ☁️ Cloud-Native: Kubernetes-ready with auto-scaling, service discovery, and observability built-in

- 🏢 Multi-Tenancy: Support for virtual clusters within a single physical deployment

- 🔐 Security First: Built-in authentication, authorization, and encryption support

- 📊 Observability: Comprehensive metrics, tracing, and logging with Prometheus and OpenTelemetry integration

- 🎯 User-Friendly: Simple deployment, intuitive management console, and extensive documentation

- Broker Server: High-performance message handling with multi-protocol support

- Meta Service: Metadata management and cluster coordination using Raft consensus

- Journal Server: Persistent storage layer with pluggable backends

- Web Console: Management interface for monitoring and administration

- One Binary, One Process: Simplified deployment and operations

- Protocol Isolation: Different protocols use dedicated ports (MQTT: 1883/1884/8083/8084, Kafka: 9092, gRPC: 1228)

- Fault Tolerance: Built-in replication and automatic failover

- Horizontal Scaling: Add capacity by simply adding more nodes
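The port layout in the Protocol Isolation item can be captured in a small lookup helper, which is convenient for readiness checks. This is a minimal sketch rather than anything shipped with RobustMQ: the function names `protocol_ports`, `port_open`, and `report_ports` are hypothetical, the port numbers are copied from the list above, and the connection check relies on bash's `/dev/tcp` redirection, so it assumes bash rather than a plain POSIX `sh`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ports listed above for a protocol.
protocol_ports() {
  case "$1" in
    mqtt)  echo "1883 1884 8083 8084" ;;
    kafka) echo "9092" ;;
    grpc)  echo "1228" ;;
    *)     echo "unknown protocol: $1" >&2; return 1 ;;
  esac
}

# Hypothetical helper: succeed if a TCP connection to host:port can be opened.
# Uses bash's /dev/tcp pseudo-device; the subshell closes the socket on exit.
port_open() {
  (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

# Report which of a protocol's ports accept connections on a host.
report_ports() {
  local host="$1" proto="$2" ports port
  ports="$(protocol_ports "$proto")" || return 1
  for port in $ports; do
    if port_open "$host" "$port"; then
      echo "$proto $port open"
    else
      echo "$proto $port closed"
    fi
  done
}
```

For example, `report_ports localhost mqtt` prints one line per MQTT port. Keeping the lookup separate from the probe means the port table can be reused in other scripts without opening any connections.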

# Clone the repository

git clone https://github.com/robustmq/robustmq.git

cd robustmq

# Build and run

cargo run --package cmd --bin broker-server

Method 1: Manual Download

Visit the releases page and download the appropriate package for your platform:

# Example for Linux x86_64 (replace with your platform)

wget https://github.com/robustmq/robustmq/releases/latest/download/robustmq-v0.1.30-linux-amd64.tar.gz

# Extract the package

tar -xzf robustmq-v0.1.30-linux-amd64.tar.gz

cd robustmq-v0.1.30-linux-amd64

# Run the server

./bin/robust-server start

Available platforms: linux-amd64, linux-arm64, darwin-amd64, darwin-arm64, windows-amd64
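Choosing the right package name by hand is easy to get wrong, so the mapping from `uname` output to a platform tag can be sketched as below. The helper names `platform_tag` and `release_url` are hypothetical, and the sketch assumes every platform follows the same `robustmq-<version>-<platform>.tar.gz` naming as the Linux example, which the releases page may not guarantee (Windows packages in particular may use a different archive format). Note also that a `latest/download` URL only resolves while the named version is the latest release.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map `uname -s` / `uname -m` values to a release tag.
platform_tag() {
  local os arch
  case "$1" in
    Linux)  os=linux ;;
    Darwin) os=darwin ;;
    MINGW*|MSYS*|CYGWIN*) os=windows ;;
    *) echo "unsupported OS: $1" >&2; return 1 ;;
  esac
  case "$2" in
    x86_64|amd64)  arch=amd64 ;;
    aarch64|arm64) arch=arm64 ;;
    *) echo "unsupported architecture: $2" >&2; return 1 ;;
  esac
  # windows-arm64 is not in the list of available platforms.
  if [ "$os" = "windows" ] && [ "$arch" = "arm64" ]; then
    echo "unsupported platform: windows-arm64" >&2
    return 1
  fi
  echo "${os}-${arch}"
}

# Build the download URL following the naming pattern in the example above.
# The .tar.gz suffix for non-Linux platforms is an assumption.
release_url() {
  local version="$1" tag="$2"
  echo "https://github.com/robustmq/robustmq/releases/latest/download/robustmq-${version}-${tag}.tar.gz"
}
```

A typical call would be `release_url v0.1.30 "$(platform_tag "$(uname -s)" "$(uname -m)")"`, whose output can then be passed to `wget`.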

Method 2: Automated Install Script (Recommended)

# Download and install automatically

curl -fsSL https://raw.githubusercontent.com/robustmq/robustmq/main/scripts/install.sh | bash

# Or download the script first to review it

wget https://raw.githubusercontent.com/robustmq/robustmq/main/scripts/install.sh

chmod +x install.sh

./install.sh --help # See available options

docker run -p 1883:1883 -p 9092:9092 robustmq/robustmq:latest

Once RobustMQ is running, you should see output similar to:

You can verify the installation by connecting with any MQTT client to localhost:1883 or using the web console.
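One way to do the MQTT check from a terminal is a publish/subscribe round trip. The sketch below is not part of RobustMQ: it assumes the Eclipse Mosquitto command-line clients (`mosquitto_sub` and `mosquitto_pub`) are installed and that the broker accepts anonymous connections on the default port. The function name `mqtt_smoke_test` and the topic name are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical smoke test: publish one message and read it back.
# Assumes mosquitto_sub and mosquitto_pub are on PATH and that the
# broker accepts anonymous connections.
mqtt_smoke_test() {
  local host="${1:-localhost}" port="${2:-1883}"
  local topic="robustmq/smoke/$$" payload="hello" out
  out="$(mktemp)" || return 1

  # Start a subscriber that exits after one message (-C 1) or 5 seconds (-W 5).
  mosquitto_sub -h "$host" -p "$port" -t "$topic" -C 1 -W 5 >"$out" &
  local sub_pid=$!
  sleep 1   # give the subscription time to be established

  # Publish a single message to the same topic, then wait for the subscriber.
  mosquitto_pub -h "$host" -p "$port" -t "$topic" -m "$payload"
  wait "$sub_pid"

  local received
  received="$(cat "$out")"
  rm -f "$out"
  # Succeed only if the subscriber received exactly what was published.
  [ "$received" = "$payload" ]
}
```

Running `mqtt_smoke_test localhost 1883 && echo "MQTT round trip OK"` exercises both the connect path and message delivery. The fixed one-second sleep is a simplification; a slower environment may need a longer delay before publishing.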

RobustMQ provides a build script to automatically package installation packages for your local system:

# Build for current platform (includes server binaries and web UI)

./scripts/build.sh

📚 For advanced build options, cross-platform compilation, and detailed instructions, please refer to our documentation:

- 📖 Official Documentation - Comprehensive guides and API references

- 🚀 Quick Start Guide - Get up and running in minutes

- 🔧 MQTT Documentation - MQTT-specific features and configuration

- 💻 Command Reference - CLI commands and usage

- 🎛️ Web Console - Management interface

We welcome contributions from the community! RobustMQ is an open-source project, and we're excited to collaborate with developers interested in Rust, distributed systems, and message queues.

- 📋 Read our Contribution Guide

- 🔍 Check Good First Issues

- 🍴 Fork the repository

- 🌿 Create a feature branch

- ✅ Make your changes with tests

- 📤 Submit a pull request

Join our growing community of developers, users, and contributors:

- 🎮 Discord Server - Real-time chat, questions, and collaboration

- 🐛 GitHub Issues - Bug reports and feature requests

- 💡 GitHub Discussions - General discussions and ideas

- WeChat Group: Join our WeChat group for Chinese-speaking users

- Personal WeChat: If the group QR code has expired, add the developer's personal WeChat:

RobustMQ is licensed under the Apache License 2.0, which strikes a balance between open collaboration and allowing you to use the software in your projects, whether open source or proprietary.


Alternative AI tools for robustmq

Similar Open Source Tools


nullclaw

NullClaw is the smallest fully autonomous AI assistant infrastructure, a static Zig binary that fits on any $5 board, boots in milliseconds, and requires nothing but libc. It features an impossibly small 678 KB static binary with no runtime or framework overhead, near-zero memory usage, instant startup, true portability across different CPU architectures, and a feature-complete stack with 22+ providers, 11 channels, and 18+ tools. The tool is lean by default, secure by design, fully swappable with core systems as vtable interfaces, and offers no lock-in with OpenAI-compatible provider support and pluggable custom endpoints.

Acontext

Acontext is a context data platform designed for production AI agents, offering unified storage, built-in context management, and observability features. It helps agents scale from local demos to production without the need to rebuild context infrastructure. The platform provides solutions for challenges like scattered context data, long-running agents requiring context management, and tracking states from multi-modal agents. Acontext offers core features such as context storage, session management, disk storage, agent skills management, and a sandbox for code execution and analysis. Users can connect to Acontext, install SDKs, initialize clients, store and retrieve messages, perform context engineering, and utilize agent storage tools. The platform also supports building agents using end-to-end scripts in Python and TypeScript, with various templates available. Acontext's architecture includes a client layer, a backend with API and core components, infrastructure with PostgreSQL, S3, Redis, and RabbitMQ, and a web dashboard. Join the Acontext community on Discord and follow updates on GitHub.

cedar-OS

Cedar OS is an open-source framework that bridges the gap between AI agents and React applications, enabling the creation of AI-native applications where agents can interact with the application state like users. It focuses on providing intuitive and powerful ways for humans to interact with AI through features like full state integration, real-time streaming, voice-first design, and flexible architecture. Cedar OS offers production-ready chat components, agentic state management, context-aware mentions, voice integration, spells & quick actions, and fully customizable UI. It differentiates itself by offering a true AI-native architecture, developer-first experience, production-ready features, and extensibility. Built with TypeScript support, Cedar OS is designed for developers working on ambitious AI-native applications.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

emqx

EMQX is a highly scalable and reliable MQTT platform designed for IoT data infrastructure. It supports various protocols like MQTT 5.0, 3.1.1, and 3.1, as well as MQTT-SN, CoAP, LwM2M, and MQTT over QUIC. EMQX allows connecting millions of IoT devices, processing messages in real time, and integrating with backend data systems. It is suitable for applications in AI, IoT, IIoT, connected vehicles, smart cities, and more. The tool offers features like massive scalability, a powerful rule engine, a flow designer, AI processing, robust security, observability, management, extensibility, and a unified experience. It is distributed under the Business Source License (BSL) 1.1.

awesome-alt-clouds

Awesome Alt Clouds is a curated list of non-hyperscale cloud providers offering specialized infrastructure and services catering to specific workloads, compliance requirements, and developer needs. The list includes various categories such as Infrastructure Clouds, Sovereign Clouds, Unikernels & WebAssembly, Data Clouds, Workflow and Operations Clouds, Network, Connectivity and Security Clouds, Vibe Clouds, Developer Happiness Clouds, Authorization, Identity, Fraud and Abuse Clouds, Monetization, Finance and Legal Clouds, Customer, Marketing and eCommerce Clouds, IoT, Communications, and Media Clouds, Blockchain Clouds, Source Code Control, Cloud Adjacent, and Future Clouds.

qwen-code

Qwen Code is an open-source AI agent optimized for Qwen3-Coder, designed to help users understand large codebases, automate tedious work, and expedite the shipping process. It offers an agentic workflow with rich built-in tools, a terminal-first approach with optional IDE integration, and supports both OpenAI-compatible API and Qwen OAuth authentication methods. Users can interact with Qwen Code in interactive mode, headless mode, IDE integration, and through a TypeScript SDK. The tool can be configured via settings.json, environment variables, and CLI flags, and offers benchmark results for performance evaluation. Qwen Code is part of an ecosystem that includes AionUi and Gemini CLI Desktop for graphical interfaces, and troubleshooting guides are available for issue resolution.

llama.ui

llama.ui is an open-source desktop application that provides a user-friendly interface for interacting with large language models powered by llama.cpp. It is designed for simplicity and privacy, allowing users to chat with quantized models on their local machine without the need for cloud services. The project offers multi-provider support, conversation management with IndexedDB storage, rich UI components including markdown rendering and file attachments, and advanced features like PWA support and customizable generation parameters. It is privacy-focused, with all data stored locally in the browser.

cb-tumblebug

CB-Tumblebug (CB-TB) is a system for managing multi-cloud infrastructure consisting of resources from multiple cloud service providers. It provides an overview, features, and architecture. The tool supports various cloud providers and resource types, with ongoing development and localization efforts. Users can deploy a multi-cloud infra with GPUs, enjoy multiple LLMs in parallel, and utilize LLM-related scripts. The tool requires Linux, Docker, Docker Compose, and Golang for building the source. Users can run CB-TB with Docker Compose or from the Makefile, set up prerequisites, contribute to the project, and view a list of contributors. The tool is licensed under an open-source license.

AIaW

AIaW is a next-generation LLM client that is full-featured, lightweight, and extensible. It supports basic functions such as streaming transfer, image uploading, and LaTeX formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate answers in forked branches, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, a plugin system, an assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

IIMS-By-AI

The Intelligent Information Management System (IIMS) is a comprehensive, multi-functional integrated platform designed to provide efficient and intelligent information management solutions. It includes core functions such as Electronic Educational Administration System (EAS) and Document Management System (DMS), with features like student record management, financial management, archive file upload, and conversation management. The system integrates AI advanced functions for conversation and knowledge base Q&A, model support for Ollama and OpenAI, and technical features like permission management, AI integration, data security, and streaming output support. Currently under active development, the system offers role-based access control, keyword extraction, and real-time response capabilities.

mosaico

Mosaico is a blazing-fast data platform designed to bridge the gap between Robotics and Physical AI. It streamlines data management, compression, and search by replacing monolithic files with a structured archive powered by Rust and Python. The platform operates on a standard client-server model, with the server daemon, mosaicod, handling heavy lifting tasks like data conversion, compression, and organized storage. The Python SDK (mosaico-sdk-py) and Rust backend (mosaicod) are included in this monorepo configuration to simplify testing and reduce compatibility issues. Mosaico enables the ingestion of standard ROS sequences, transforming them into synchronized, randomized dataframes for Physical AI applications. Efficiency is built into the architecture, with data batches streamed directly from the Mosaico data platform, eliminating the need to download massive datasets locally.

TuyaOpen

TuyaOpen is an open source AI+IoT development framework supporting cross-chip platforms and operating systems. It provides core functionalities for AI+IoT development, including pairing, activation, control, and upgrading. The SDK offers robust security and compliance capabilities, meeting data compliance requirements globally. TuyaOpen enables the development of AI+IoT products that can leverage the Tuya APP ecosystem and cloud services. It continues to expand with more cloud platform integration features and capabilities like voice, video, and facial recognition.

Awesome-Vibe-Coding

Awesome-Vibe-Coding is a curated list of Vibe Coding open-source projects, tools, and learning resources. It includes a comprehensive collection of AI-powered development toolkits, IDEs, extensions, platforms, and cloud-based agents for modern software development. The repository also covers development standards, MCP servers, agent communication protocols, agent SDKs, supporting tools, vibe coding projects, and learning resources. The project aims to showcase the power of AI-assisted development and provide a resource hub for developers interested in Vibe Coding practices.

mfish-nocode

Mfish-nocode is a low-code/no-code platform that aims to make development as easy as fishing. It breaks down technical barriers, allowing both developers and non-developers to quickly build business systems, increase efficiency, and unleash creativity. It is not only an efficiency tool for developers during leisure time, but also a website building tool for novices in the workplace, and even a secret weapon for leaders to prototype.


For similar jobs

AirGo

AirGo is a multi-user, multi-protocol proxy service management system with separate front end and back end. It is simple and easy to use, and it supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

- Highly performant: web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O

- Ease of use: user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing

- Dynamic batching: aggregate requests from different users for batched inference and distribute results back

- Pipelined stages: spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads

- Cloud friendly: designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems

- Do one thing well: focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.