cedar-OS

The open-source framework for building AI-native frontends

Stars: 73

Cedar OS is an open-source framework that bridges the gap between AI agents and React applications, enabling the creation of AI-native applications where agents can interact with the application state like users. It focuses on providing intuitive and powerful ways for humans to interact with AI through features like full state integration, real-time streaming, voice-first design, and flexible architecture. Cedar OS offers production-ready chat components, agentic state management, context-aware mentions, voice integration, spells & quick actions, and fully customizable UI. It differentiates itself by offering a true AI-native architecture, developer-first experience, production-ready features, and extensibility. Built with TypeScript support, Cedar OS is designed for developers working on ambitious AI-native applications.

README:

An open-source framework for building the next generation of AI-native software

For the first time in history, products can come to life. We help you build products with life that are designed to interact with humans.

Cedar OS is a comprehensive React framework that bridges the gap between AI agents and React applications. It provides everything you need to build AI-native applications where agents can read, write, and interact with your application state just like users can.

More importantly, we focus especially on the interaction layer between AI and humans. We believe that reading and writing text is effortful, and that users should have easier, more intuitive, and more powerful ways to interact with AI.

Unlike traditional chat widgets or AI integrations, Cedar OS enables true AI-native experiences with:

- Full State Integration: AI agents can read and modify your React application state through a type-safe interface

- Real-time Streaming: Built-in support for streaming responses and real-time AI interactions

- Voice-First Design: Native voice integration for natural AI conversations

- Flexible Architecture: Works with any AI provider (OpenAI, Anthropic, Mastra, AI SDK, or custom backends)

- Component-First: Shadcn-style components that you own and can fully customize

Connect to any AI backend with type-safe, provider-specific configurations:

- OpenAI, Anthropic, Google, Mistral, Groq, XAI

- Vercel AI SDK integration

- Mastra framework support

- Custom backend implementations
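One way to picture "type-safe, provider-specific configurations" is a TypeScript discriminated union keyed on a `provider` field. The sketch below is illustrative only: the `ProviderConfig` type, the `baseURL` and `send` fields, and the `describeProvider` helper are assumptions made for this example, not Cedar OS's actual types. Only `provider` and `apiKey` appear in the README's own snippet.

```typescript
// Hypothetical config shapes; only `provider` and `apiKey` come from the README.
type ProviderConfig =
  | { provider: 'openai'; apiKey: string }
  | { provider: 'anthropic'; apiKey: string }
  | { provider: 'mastra'; baseURL: string }
  | { provider: 'custom'; send: (prompt: string) => string };

// Switching on `provider` narrows the union, so each branch
// can only touch the fields that exist for that provider.
function describeProvider(config: ProviderConfig): string {
  switch (config.provider) {
    case 'openai':
    case 'anthropic':
      return `${config.provider} (key ending in ${config.apiKey.slice(-4)})`;
    case 'mastra':
      return `mastra at ${config.baseURL}`;
    case 'custom':
      return 'custom backend';
  }
}

const openaiSummary = describeProvider({ provider: 'openai', apiKey: 'sk-test-1234' });
const mastraSummary = describeProvider({ provider: 'mastra', baseURL: 'http://localhost:4111' });
```

With this shape, passing `baseURL` to an `openai` config or omitting `apiKey` would be rejected at compile time rather than at runtime.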

Production-ready chat components:

- FloatingCedarChat - Floating chat interface

- SidePanelCedarChat - Sidebar chat panel

- CedarCaptionChat - Embedded caption-style chat

- Built-in streaming, typing indicators, and message history

Agentic state management:

    // AI can read and modify this state
    const [todos, setTodos] = useCedarState(
      'todos',
      [],
      'User todo list manageable by AI'
    );

- Type-safe state registration for AI access

- Custom setters for controlled AI interactions

- Automatic state synchronization and persistence
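The idea of registering state with a description and exposing only custom setters to the agent can be sketched without any framework. The following is a minimal in-memory illustration, assuming a hypothetical `StateRegistry` class with `register`, `describe`, `execute`, and `get` methods; none of these names are Cedar OS internals, and the real `useCedarState` hook may work quite differently.

```typescript
// Hypothetical in-memory registry, NOT Cedar OS internals.
type Setter<T> = (current: T, arg: unknown) => T;

interface RegisteredState<T> {
  value: T;
  description: string;                // tells the agent what the state is for
  setters: Record<string, Setter<T>>; // the only mutations the agent may run
}

class StateRegistry {
  private states = new Map<string, RegisteredState<any>>();

  register<T>(key: string, initial: T, description: string,
              setters: Record<string, Setter<T>>): void {
    this.states.set(key, { value: initial, description, setters });
  }

  // What an agent would be shown: keys, descriptions, allowed setter names.
  describe(): Array<{ key: string; description: string; setters: string[] }> {
    return Array.from(this.states.entries()).map(([key, s]) => ({
      key, description: s.description, setters: Object.keys(s.setters),
    }));
  }

  // Run a named setter on behalf of the agent; unknown names are rejected.
  execute(key: string, setterName: string, arg: unknown): void {
    const state = this.states.get(key);
    const setter = state ? state.setters[setterName] : undefined;
    if (!state || !setter) throw new Error(`Unknown setter ${key}.${setterName}`);
    state.value = setter(state.value, arg);
  }

  get<T>(key: string): T | undefined {
    const state = this.states.get(key);
    return state ? (state.value as T) : undefined;
  }
}

const registry = new StateRegistry();
registry.register<string[]>('todos', [], 'User todo list manageable by AI', {
  addTodo: (todos, text) => [...todos, String(text)],
});
registry.execute('todos', 'addTodo', 'Write docs');
```

Routing every agent mutation through a named setter is what keeps the interaction "controlled": the agent can add a todo, but it cannot replace the list wholesale unless a setter for that is registered.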

Context-aware mentions:

    // @mention system for rich context
    @user @file:components.tsx @state:todos

- Intelligent mention providers for users, files, state, and custom data

- Contextual AI responses based on mentioned content

- Extensible mention system for domain-specific contexts
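To make the mention syntax concrete, here is a small sketch that splits a message such as `@user @file:components.tsx @state:todos` into typed tokens. The `Mention` shape and `parseMentions` function are hypothetical names chosen for illustration, and the regular expression is an assumption about the syntax based only on the example above; it does not handle edge cases such as email addresses or trailing punctuation.

```typescript
// Hypothetical token shape; the `@type:value` syntax follows the README example.
interface Mention {
  type: string;  // e.g. "file", "state", or "user" for a bare @name
  value: string; // e.g. "components.tsx", "todos", or "" for a bare mention
}

function parseMentions(input: string): Mention[] {
  // "@" + a name, optionally followed by ":" and a value (word chars, dots, dashes, slashes).
  const pattern = /@([A-Za-z]+)(?::([\w.\-\/]+))?/g;
  const mentions: Mention[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(input)) !== null) {
    mentions.push({ type: match[1] ?? '', value: match[2] ?? '' });
  }
  return mentions;
}

const parsed = parseMentions('@user @file:components.tsx @state:todos');
```

A mention provider could then look up each token by `type` and attach the matching file contents or state value as context for the AI request.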

Voice integration:

- Real-time voice-to-text and text-to-voice

- WebSocket streaming for low-latency interactions

- Customizable voice settings and providers

- Browser and backend TTS support

Spells & quick actions:

- Radial menu system for quick AI interactions

- Keyboard shortcuts and gesture support

- Customizable spell registry and workflows
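A spell registry keyed by keyboard shortcut might look roughly like the sketch below. The `Spell` interface, `SpellRegistry` class, and `normalize` helper are all hypothetical and exist only to illustrate the idea of binding quick actions to shortcuts; they are not Cedar OS's API.

```typescript
// Hypothetical spell shape and registry; names are illustrative only.
interface Spell {
  id: string;
  shortcut: string;  // e.g. "Ctrl+Shift+S"
  run: () => string; // returns a label describing what happened
}

class SpellRegistry {
  private byShortcut = new Map<string, Spell>();

  register(spell: Spell): void {
    const key = normalize(spell.shortcut);
    if (this.byShortcut.has(key)) {
      throw new Error(`Shortcut already bound: ${key}`);
    }
    this.byShortcut.set(key, spell);
  }

  // Look up and run the spell bound to a key combination, if any.
  trigger(shortcut: string): string | undefined {
    const spell = this.byShortcut.get(normalize(shortcut));
    return spell ? spell.run() : undefined;
  }
}

// Lowercase and sort modifiers so "Shift+Ctrl+S" and "ctrl+shift+s" match.
function normalize(shortcut: string): string {
  const parts = shortcut.toLowerCase().split('+').map((p) => p.trim());
  const key = parts.pop() ?? '';
  return [...parts.sort(), key].join('+');
}

const spells = new SpellRegistry();
spells.register({ id: 'summarize', shortcut: 'Ctrl+Shift+S', run: () => 'summarize' });
const triggered = spells.trigger('shift+ctrl+s');
```

Normalizing the shortcut before storing it means the order in which a user lists modifier keys does not matter, and duplicate bindings are caught at registration time.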

Fully customizable UI:

- Shadcn-style component architecture - you own the code

- Built with Tailwind CSS for easy styling

- Dark/light mode support

- Animation-rich interfaces that reflect AI fluidity

Cedar OS isn't just a chat widget - it's a complete framework for building applications where AI is a first-class citizen. AI agents can interact with your app state, navigate users to different views, and perform complex workflows.

Developer-first experience:

- Zero Lock-in: All components are copied to your project (Shadcn-style)

- Full Customization: Override any internal function or component

- Type Safety: Comprehensive TypeScript support with provider-specific typing

- Works Everywhere: Next.js, Create React App, Vite, and other React frameworks

Built by developers who've shipped AI copilots in production, Cedar OS handles the complex parts:

- State lifecycle management across component unmounting

- Streaming response handling and error recovery

- Context management and memory optimization

- Provider failover and retry logic

Extensibility:

- Custom AI providers and backends

- Plugin system for mentions, spells, and workflows

- Message type extensions for domain-specific UI

- Hook-based architecture for easy integration

Quick start:

    npx cedar-os-cli plant-seed

    import { CedarCopilot, FloatingCedarChat } from 'cedar-os';

    function App() {
      return (
        <CedarCopilot llmProvider={{ provider: 'openai', apiKey: 'your-key' }}>
          <YourApp />
          <FloatingCedarChat />
        </CedarCopilot>
      );
    }

- 📖 Documentation - Complete guides and API reference

- 🚀 Getting Started - Build your first AI-native app

- 🎯 Examples - See Cedar OS in action

- 📞 Book a Call - Free onboarding and guidance

- 📧 Contact - Get direct support

Built for developers creating the most ambitious AI-native applications of the future.

Alternative AI tools for cedar-OS

Similar Open Source Tools

llama.ui

llama.ui is an open-source desktop application that provides a beautiful, user-friendly interface for interacting with large language models powered by llama.cpp. It is designed for simplicity and privacy, allowing users to chat with powerful quantized models on their local machine without the need for cloud services. The project offers multi-provider support, conversation management with indexedDB storage, rich UI components including markdown rendering and file attachments, advanced features like PWA support and customizable generation parameters, and is privacy-focused with all data stored locally in the browser.

nekro-agent

Nekro Agent is an AI chat plugin and proxy execution bot that is highly scalable, offers high freedom, and has minimal deployment requirements. It features context-aware chat for group/private chats, custom character settings, sandboxed execution environment, interactive image resource handling, customizable extension development interface, easy deployment with docker-compose, integration with Stable Diffusion for AI drawing capabilities, support for various file types interaction, hot configuration updates and command control, native multimodal understanding, visual application management control panel, CoT (Chain of Thought) support, self-triggered timers and holiday greetings, event notification understanding, and more. It allows for third-party extensions and AI-generated extensions, and includes features like automatic context trigger based on LLM, and a variety of basic commands for bot administrators.

blurr

Panda is a proactive, on-device AI agent for Android that autonomously understands natural language commands and operates your phone's UI to achieve them. It acts as a personal operator, handling complex, multi-step tasks across different applications. With intelligent UI automation, high-quality voice, and personalized local memory, Panda simplifies interactions with technology. Built on Kotlin, Panda's architecture includes Eyes & Hands for physical device connection, The Brain for reasoning, and The Agent for execution. The project is a proof-of-concept aiming to become an indispensable assistant.

AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

onyx

Onyx is an open-source Gen-AI and Enterprise Search tool that serves as an AI Assistant connected to company documents, apps, and people. It provides a chat interface, can be deployed anywhere, and offers features like user authentication, role management, chat persistence, and UI for configuring AI Assistants. Onyx acts as an Enterprise Search tool across various workplace platforms, enabling users to access team-specific knowledge and perform tasks like document search, AI answers for natural language queries, and integration with common workplace tools like Slack, Google Drive, Confluence, etc.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

odoo-llm

This repository provides a comprehensive framework for integrating Large Language Models (LLMs) into Odoo. It enables seamless interaction with AI providers like OpenAI, Anthropic, Ollama, and Replicate for chat completions, text embeddings, and more within the Odoo environment. The architecture includes external AI clients connecting via `llm_mcp_server` and Odoo AI Chat with built-in chat interface. The core module `llm` offers provider abstraction, model management, and security, along with tools for CRUD operations and domain-specific tool packs. Various AI providers, infrastructure components, and domain-specific tools are available for different tasks such as content generation, knowledge base management, and AI assistants creation.

sdk-python

Strands Agents is a lightweight and flexible SDK that takes a model-driven approach to building and running AI agents. It supports various model providers, offers advanced capabilities like multi-agent systems and streaming support, and comes with built-in MCP server support. Users can easily create tools using Python decorators, integrate MCP servers seamlessly, and leverage multiple model providers for different AI tasks. The SDK is designed to scale from simple conversational assistants to complex autonomous workflows, making it suitable for a wide range of AI development needs.

openvino_build_deploy

The OpenVINO Build and Deploy repository provides pre-built components and code samples to accelerate the development and deployment of production-grade AI applications across various industries. With the OpenVINO Toolkit from Intel, users can enhance the capabilities of both Intel and non-Intel hardware to meet specific needs. The repository includes AI reference kits, interactive demos, workshops, and step-by-step instructions for building AI applications. Additional resources such as Jupyter notebooks and a Medium blog are also available. The repository is maintained by the AI Evangelist team at Intel, who provide guidance on real-world use cases for the OpenVINO toolkit.

baibot

Baibot is a versatile chatbot framework designed to simplify the process of creating and deploying chatbots. It provides a user-friendly interface for building custom chatbots with various functionalities such as natural language processing, conversation flow management, and integration with external APIs. Baibot is highly customizable and can be easily extended to suit different use cases and industries. With Baibot, developers can quickly create intelligent chatbots that can interact with users in a seamless and engaging manner, enhancing user experience and automating customer support processes.

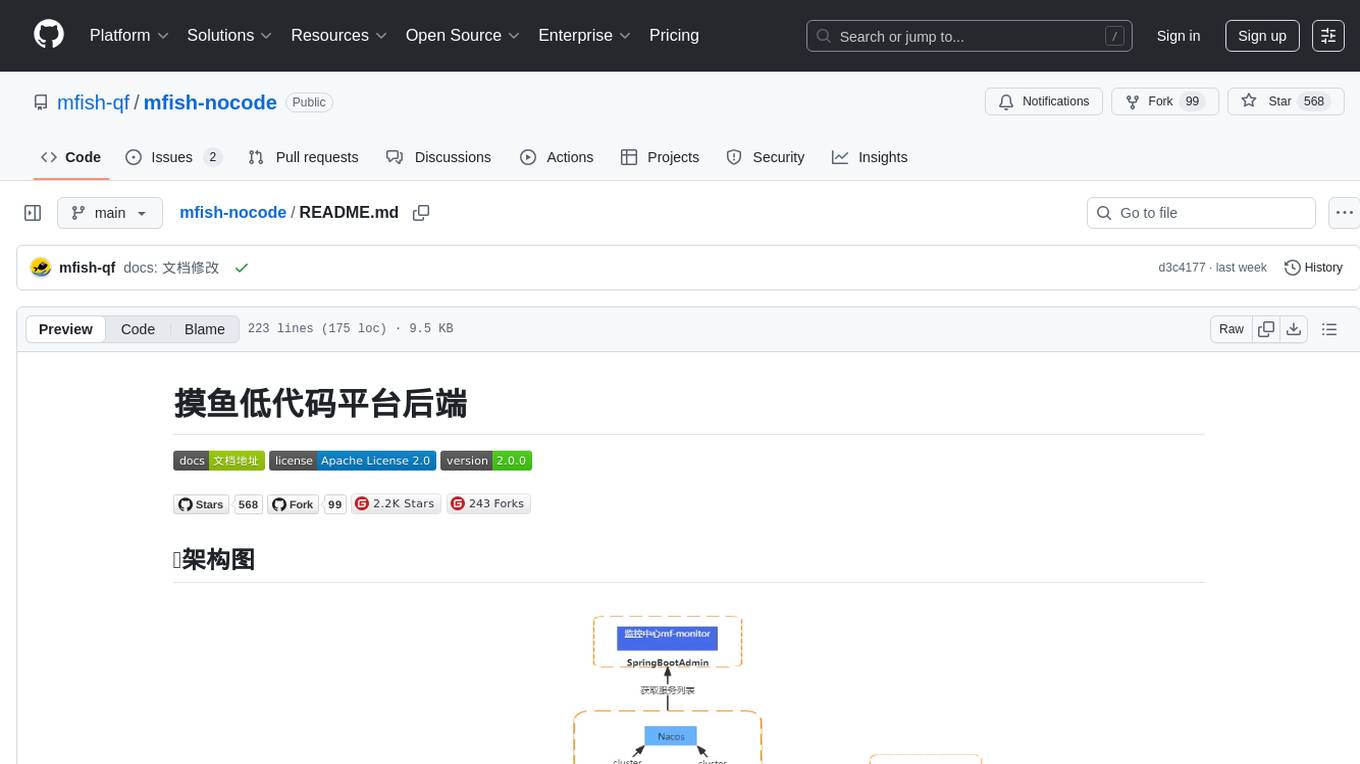

mfish-nocode

Mfish-nocode is a low-code/no-code platform that aims to make development as easy as fishing. It breaks down technical barriers, allowing both developers and non-developers to quickly build business systems, increase efficiency, and unleash creativity. It is not only an efficiency tool for developers during leisure time, but also a website building tool for novices in the workplace, and even a secret weapon for leaders to prototype.

MaiBot

MaiBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, with LLM providing conversation abilities, MongoDB for data persistence support, and NapCat as the QQ protocol endpoint support. The project is in active development stage, with features like chat functionality, emoji functionality, schedule management, memory function, knowledge base function, and relationship function planned for future updates. The project aims to create a 'life form' active in QQ group chats, focusing on companionship and creating a more human-like presence rather than a perfect assistant. The application generates content from AI models, so users are advised to discern carefully and not use it for illegal purposes.

airstate

AirState is a straightforward software development kit that enables users to integrate real-time collaboration functionalities into their web applications. With its user-friendly interface and robust capabilities, AirState simplifies the process of incorporating live collaboration features, making it an ideal choice for developers seeking to enhance the interactive elements of their projects. The SDK offers a seamless solution for creating engaging and interactive web experiences, allowing users to easily implement real-time collaboration tools without the need for extensive coding knowledge or complex configurations. By leveraging AirState, developers can streamline the development process and deliver dynamic web applications that facilitate real-time communication and collaboration among users.

chatmcp

Chatmcp is a chatbot framework for building conversational AI applications. It provides a flexible and extensible platform for creating chatbots that can interact with users in a natural language. With Chatmcp, developers can easily integrate chatbot functionality into their applications, enabling users to communicate with the system through text-based conversations. The framework supports various natural language processing techniques and allows for the customization of chatbot behavior and responses. Chatmcp simplifies the development of chatbots by providing a set of pre-built components and tools that streamline the creation process. Whether you are building a customer support chatbot, a virtual assistant, or a chat-based game, Chatmcp offers the necessary features and capabilities to bring your conversational AI ideas to life.

For similar tasks

cossistant

Cossistant is an open source chat support widget tailored for the React ecosystem. It offers headless components for building customizable chat interfaces, real-time messaging with WebSocket technology, and tools for managing customer conversations. The tool is API-first, self-hosted, developer-friendly with TypeScript support, and provides complete integration flexibility. It uses technologies like Next.js, TailwindCSS, and WebSockets, and supports databases like PlanetScale for production and DBgin for local development. Cossistant is ideal for developers seeking a versatile chat solution that can be easily integrated into their applications.

gaia-ui

A collection of production-ready UI components designed specifically for building AI assistants and chatbots. These components are battle-tested in production at GAIA, designed to handle edge cases and real-world scenarios, accessible, responsive, and performant. The library focuses on quality over quantity, offering components that solve real problems better than existing alternatives. Users can easily add components to their projects using the provided commands, and the library is actively being developed and refined for better compatibility and smaller bundle sizes.

dewhale

Dewhale is a GitHub-Powered AI tool designed for effortless development. It utilizes prompt engineering techniques under the GPT-4 model to issue commands, allowing users to generate code with lower usage costs and easy customization. The tool seamlessly integrates with GitHub, providing version control, code review, and collaborative features. Users can join discussions on the design philosophy of Dewhale and explore detailed instructions and examples for setting up and using the tool.

aura-design

Aura Design is an open-source AI-friendly component library for next-generation intelligent applications. It offers AI-optimized interactive components designed for seamless integration with AI model I/O. The library works with various web frameworks and allows easy customization to suit specific requirements. With a modern design and smart markdown renderer, Aura Design enhances the overall user experience.

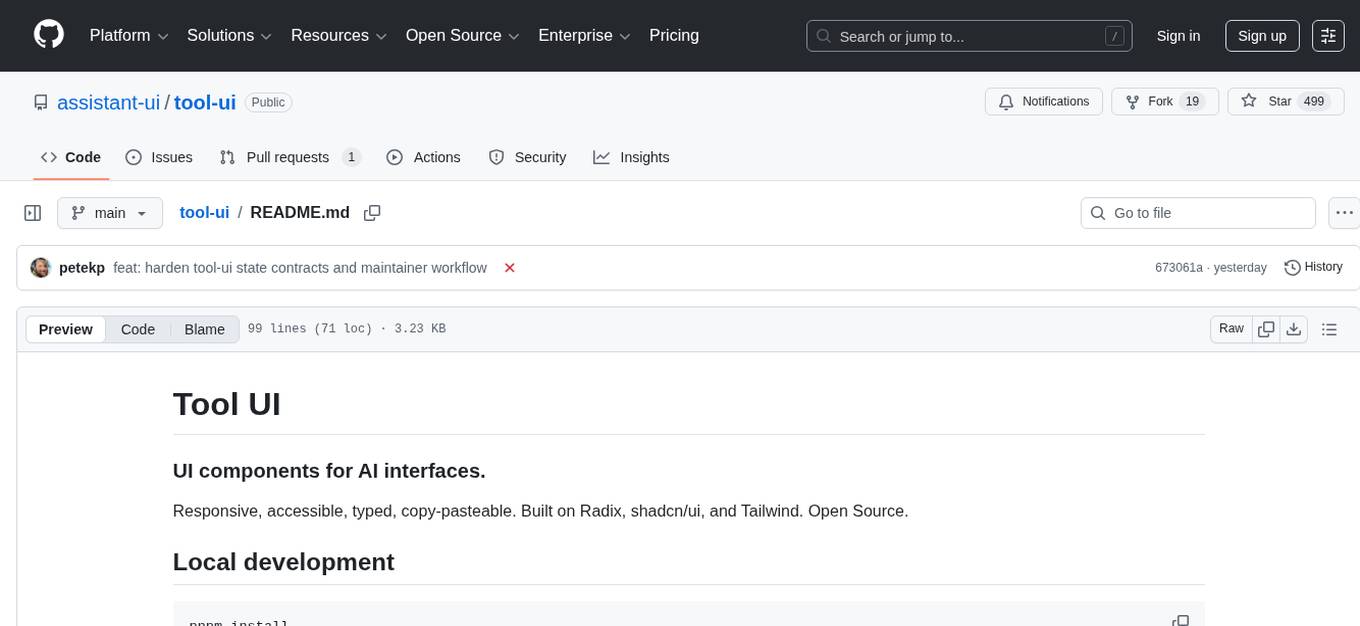

tool-ui

Tool UI is a collection of UI components designed for AI interfaces. It provides responsive, accessible, and copy-pasteable components built on Radix, shadcn/ui, and Tailwind. The repository offers a variety of components such as Approval Card, Audio, Chart, Citation, Code Block, Data Table, Image, Image Gallery, and more. Tool UI is maintained by assistant-ui and optimized for direct maintenance rather than open-ended external contributions.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development toolkit for AI virtual streamers (VTubers), in a version targeting NVIDIA GPUs. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. It can reply to bilibili live-stream comments and greet viewers as they enter the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expressions are controlled through Vtuber Studio. Image generation uses stable-diffusion-webui with output to an OBS live room, and generated images are screened for NSFW content with public-NSFW-y-distinguish. Search and image search use duckduckgo (requires a proxy for Internet access) or Baidu image search (no proxy needed). Other features include an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-patting and gift-throwing actions, automatic dancing when singing starts, looping sway motion during chat and singing, multi-scene switching, background music switching, automatic day and night scene switching, and open-ended singing and painting requests where the AI judges the content automatically.