spec-workflow-mcp

A Model Context Protocol (MCP) server that provides structured spec-driven development workflow tools for AI-assisted software development, featuring a real-time web dashboard and VSCode extension for monitoring and managing your project's progress directly in your development environment.

Stars: 1340

Spec Workflow MCP is a Model Context Protocol (MCP) server that offers structured spec-driven development workflow tools for AI-assisted software development. It includes a real-time web dashboard and a VSCode extension for monitoring and managing project progress directly in the development environment. The tool supports sequential spec creation, real-time monitoring of specs and tasks, document management, an archive system, task progress tracking, an approval workflow, bug reporting, and a template system, and it works on Windows, macOS, and Linux.

README:


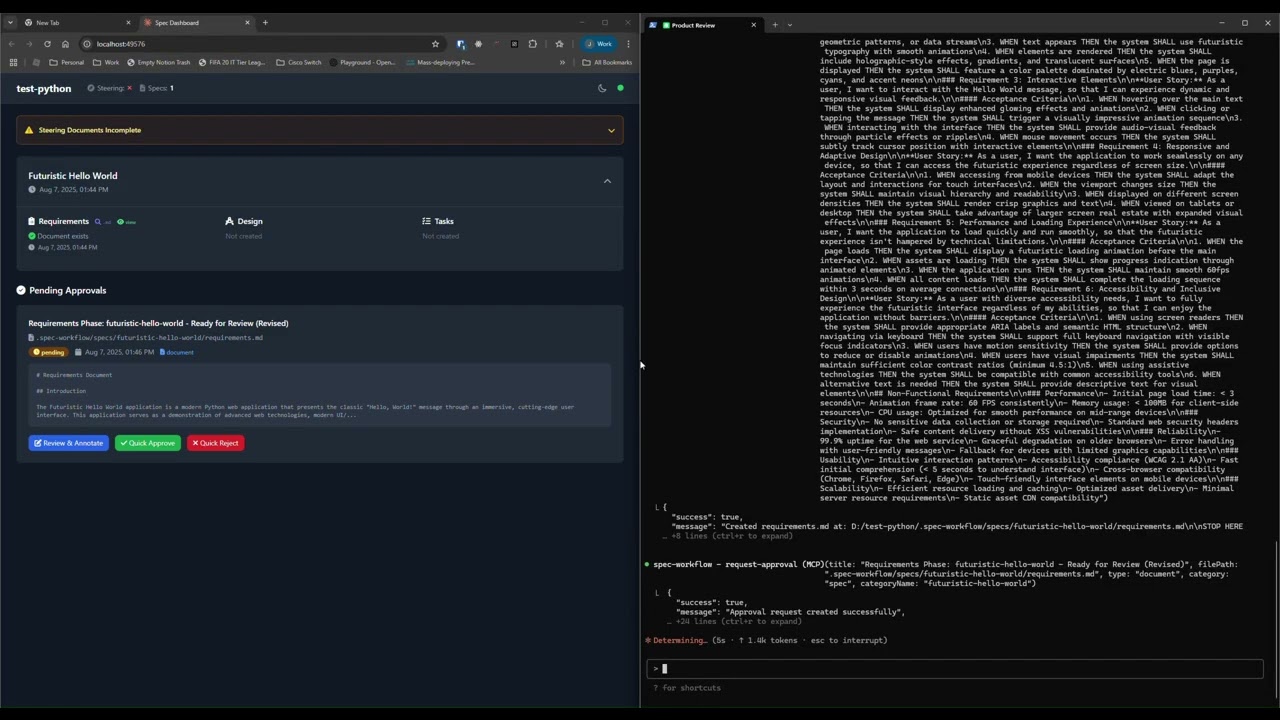

See how the approval system works: create documents, request approval through the dashboard, provide feedback, and track revisions.

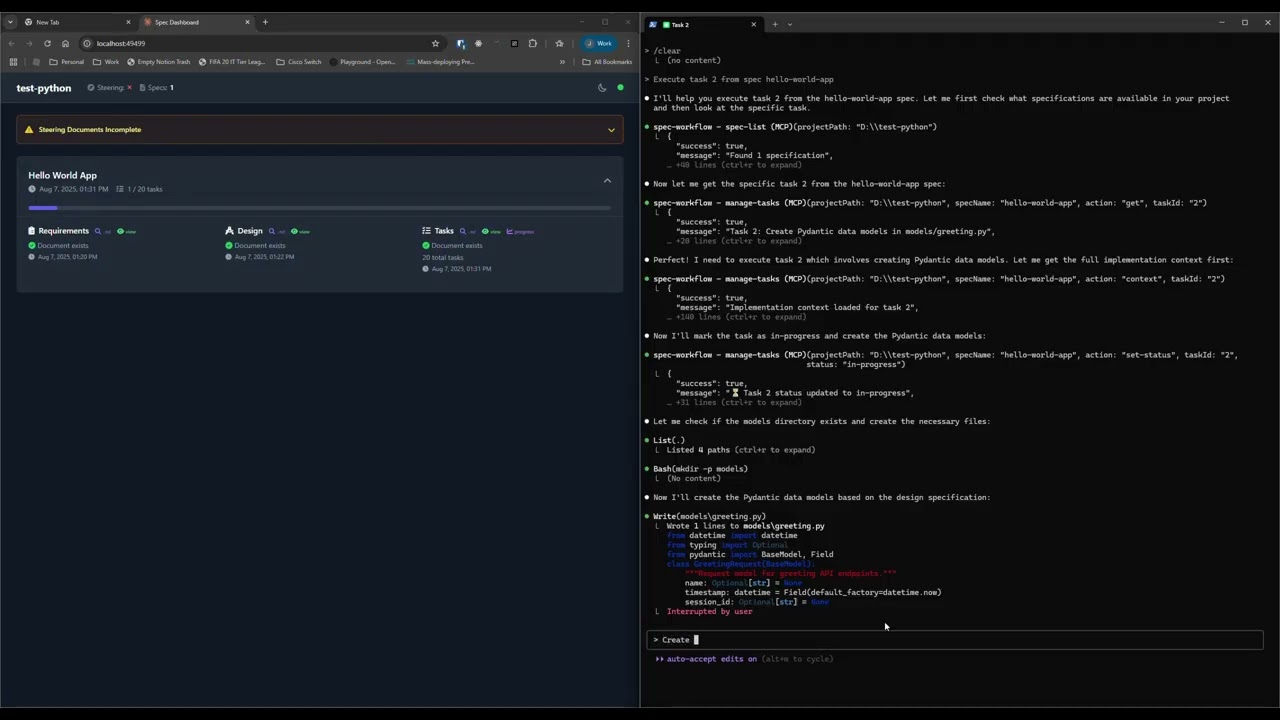

Explore the real-time dashboard: view specs, track progress, navigate documents, and monitor your development workflow.

- Structured Development Workflow - Sequential spec creation (Requirements → Design → Tasks)

- Real-Time Web Dashboard - Monitor specs, tasks, and progress with live updates

- VSCode Extension - Integrated sidebar dashboard for developers working in VSCode

- Document Management - View and manage all spec documents from dashboard or extension

- Archive System - Organize completed specs to keep active projects clean

- Task Progress Tracking - Visual progress bars and detailed task status

- Approval Workflow - Complete approval process with approve, reject, and revision requests

- Steering Documents - Project vision, technical decisions, and structure guidance

- Sound Notifications - Configurable audio alerts for approvals and task completions

- Bug Workflow - Complete bug reporting and resolution tracking

- Template System - Pre-built templates for all document types

- Cross-Platform - Works on Windows, macOS, and Linux

Add to your AI tool configuration (see the client-specific setup instructions below):

{
  "mcpServers": {
    "spec-workflow": {
      "command": "npx",
      "args": ["-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project"]
    }
  }
}

With Auto-Started Dashboard (opens the dashboard automatically with the MCP server):

{
  "mcpServers": {
    "spec-workflow": {
      "command": "npx",
      "args": ["-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project", "--AutoStartDashboard"]
    }
  }
}

With Custom Port:

{
  "mcpServers": {
    "spec-workflow": {
      "command": "npx",
      "args": ["-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project", "--AutoStartDashboard", "--port", "3456"]
    }
  }
}

Note: The server can be used without a project path, but some MCP clients may not start it from the current directory.
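The three configurations above differ only in their trailing flags. As a sketch, assuming you generate client configurations from a script, the `args` array can be assembled like this. `buildSpecWorkflowConfig` and its option names are hypothetical and not part of the package; only the flags themselves come from this README.

```typescript
// Hypothetical helper that assembles the "mcpServers" entry shown above.
// The flags (--AutoStartDashboard, --port) come from this README; the
// helper itself is illustrative and not part of the package.
interface SpecWorkflowOptions {
  projectPath?: string;         // omit to rely on the client's working directory
  autoStartDashboard?: boolean; // appends --AutoStartDashboard
  port?: number;                // appends --port <number>
}

interface McpServerEntry {
  command: string;
  args: string[];
}

function buildSpecWorkflowConfig(
  opts: SpecWorkflowOptions = {},
): { mcpServers: Record<string, McpServerEntry> } {
  const args = ["-y", "@pimzino/spec-workflow-mcp@latest"];
  if (opts.projectPath) args.push(opts.projectPath);
  if (opts.autoStartDashboard) args.push("--AutoStartDashboard");
  if (opts.port !== undefined) {
    // The README documents 1024-65535 as the valid dashboard port range.
    if (!Number.isInteger(opts.port) || opts.port < 1024 || opts.port > 65535) {
      throw new RangeError(`port must be an integer in 1024-65535, got ${opts.port}`);
    }
    args.push("--port", String(opts.port));
  }
  return { mcpServers: { "spec-workflow": { command: "npx", args } } };
}

// Reproduces the "With Custom Port" configuration.
const customPortConfig = buildSpecWorkflowConfig({
  projectPath: "/path/to/your/project",
  autoStartDashboard: true,
  port: 3456,
});
console.log(JSON.stringify(customPortConfig, null, 2));
```

Validating the port while building the config surfaces an out-of-range value before the MCP client ever launches the server.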

Choose your interface. The web dashboard is required for CLI users:

# Dashboard only mode (uses ephemeral port)
npx -y @pimzino/spec-workflow-mcp@latest /path/to/your/project --dashboard

# Dashboard only with custom port
npx -y @pimzino/spec-workflow-mcp@latest /path/to/your/project --dashboard --port 3000

# View all available options
npx -y @pimzino/spec-workflow-mcp@latest --help

Command-Line Options:

- --help - Show comprehensive usage information and examples
- --dashboard - Run dashboard-only mode (no MCP server)
- --AutoStartDashboard - Auto-start the dashboard with the MCP server
- --port <number> - Specify the dashboard port (1024-65535). Works with both --dashboard and --AutoStartDashboard
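A small hand-rolled parser makes these option semantics concrete. This is a sketch, not the package's actual CLI code. It assumes the flags behave as listed above, that the first non-flag argument is the project path, and that `--port` consumes the next argument. `parseSpecWorkflowArgs` is a hypothetical name.

```typescript
// Illustrative parser for the documented flags; not the package's real CLI code.
interface ParsedArgs {
  projectPath?: string;
  help: boolean;
  dashboard: boolean;          // dashboard-only mode, no MCP server
  autoStartDashboard: boolean; // MCP server plus dashboard
  port?: number;               // undefined => ephemeral port
}

function parseSpecWorkflowArgs(argv: string[]): ParsedArgs {
  const out: ParsedArgs = { help: false, dashboard: false, autoStartDashboard: false };
  for (let i = 0; i < argv.length; i++) {
    const arg = argv[i];
    switch (arg) {
      case "--help":
        out.help = true;
        break;
      case "--dashboard":
        out.dashboard = true;
        break;
      case "--AutoStartDashboard":
        out.autoStartDashboard = true;
        break;
      case "--port": {
        const raw = argv[++i]; // the port value is the next argument
        const port = Number(raw);
        if (raw === undefined || !Number.isInteger(port) || port < 1024 || port > 65535) {
          throw new RangeError(`--port expects an integer in 1024-65535, got ${raw}`);
        }
        out.port = port;
        break;
      }
      default:
        if (arg.startsWith("--")) throw new Error(`unknown option ${arg}`);
        // Assumption: the first positional argument is the project path.
        if (out.projectPath === undefined) out.projectPath = arg;
    }
  }
  return out;
}

console.log(parseSpecWorkflowArgs(["/path/to/your/project", "--dashboard", "--port", "3000"]));
```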

For VSCode users, install the Spec Workflow MCP Extension from the VSCode marketplace instead:

- Open VSCode in your project directory containing .spec-workflow/
- The extension automatically provides the dashboard functionality within VSCode
- Access it via the Spec Workflow icon in the Activity Bar
- No separate dashboard is needed - everything runs within your IDE

Extension Features:

- Integrated sidebar dashboard with real-time updates

- Archive system for organizing completed specs

- Full approval workflow with VSCode native dialogs

- Sound notifications for approvals and completions

- Editor context menu actions for approvals and comments

IMPORTANT: For CLI users, the web dashboard is mandatory. For VSCode users, the extension replaces the need for a separate web dashboard while providing the same functionality directly in your IDE.

You can simply mention spec-workflow (or whatever name you gave the MCP server) in your conversation. The AI will handle the complete workflow automatically, or you can use one of the example prompts below:

- "Create a spec for user authentication" - Creates complete spec workflow for that feature

- "Create a spec called payment-system" - Builds full requirements → design → tasks

- "Build a spec for @prd" - Takes your existing PRD and creates the complete spec workflow from it

- "Create a spec for shopping-cart - include add to cart, quantity updates, and checkout integration" - Detailed feature spec

- "List my specs" - Shows all specs and their current status

- "Show me the user-auth progress" - Displays detailed progress information

- "Execute task 1.2 in spec user-auth" - Runs a specific task from your spec

- Copy prompts from dashboard - Use the "Copy Prompt" button in the task list on your dashboard

The agent automatically handles approval workflows, task management, and guides you through each phase.

Augment Code

Configure in your Augment settings:

{
  "mcpServers": {
    "spec-workflow": {
      "command": "npx",
      "args": ["-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project"]
    }
  }
}

Claude Code CLI

Add to your MCP configuration:

claude mcp add spec-workflow npx -y @pimzino/spec-workflow-mcp@latest -- /path/to/your/project

Important Notes:

- The -y flag bypasses npm prompts for a smoother installation
- The -- separator ensures the path is passed to the spec-workflow script, not to npx
- Replace /path/to/your/project with your actual project directory path
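The `--` used above follows a widespread command-line convention: it marks the end of a program's own options, and anything after it is forwarded untouched. The sketch below illustrates that convention generically. `splitAtDoubleDash` is a hypothetical helper and does not describe how the Claude CLI parses its arguments internally.

```typescript
// Generic illustration of the "--" end-of-options convention.
// Arguments before the first "--" belong to the launcher; everything after
// it is forwarded verbatim, even if it looks like a flag.
function splitAtDoubleDash(argv: string[]): { own: string[]; forwarded: string[] } {
  const idx = argv.indexOf("--");
  if (idx === -1) return { own: [...argv], forwarded: [] };
  return { own: argv.slice(0, idx), forwarded: argv.slice(idx + 1) };
}

const { own, forwarded } = splitAtDoubleDash([
  "spec-workflow", "npx", "-y", "@pimzino/spec-workflow-mcp@latest",
  "--", "/path/to/your/project",
]);
console.log(own);       // the launcher's own arguments
console.log(forwarded); // passed through to the spawned script
```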

Alternative for Windows (if the above doesn't work):

claude mcp add spec-workflow cmd.exe /c "npx @pimzino/spec-workflow-mcp@latest /path/to/your/project"

Claude Desktop

Add to claude_desktop_config.json:

{
  "mcpServers": {
    "spec-workflow": {
      "command": "npx",
      "args": ["-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project"]
    }
  }
}

Or with auto-started dashboard:

{
  "mcpServers": {
    "spec-workflow": {
      "command": "npx",
      "args": ["-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project", "--AutoStartDashboard"]
    }
  }
}

Cline/Claude Dev

Add to your MCP server configuration:

{
  "mcpServers": {
    "spec-workflow": {
      "command": "npx",
      "args": ["-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project"]
    }
  }
}

Continue IDE Extension

Add to your Continue configuration:

{
  "mcpServers": {
    "spec-workflow": {
      "command": "npx",
      "args": ["-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project"]
    }
  }
}

Cursor IDE

Add to your Cursor settings (settings.json):

{
  "mcpServers": {
    "spec-workflow": {
      "command": "npx",
      "args": ["-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project"]
    }
  }
}

OpenCode

Add to your opencode.json configuration file (either global at ~/.config/opencode/opencode.json or project-specific):

{
  "$schema": "https://opencode.ai/config.json",
  "mcp": {
    "spec-workflow": {
      "type": "local",
      "command": ["npx", "-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project"],
      "enabled": true
    }
  }
}

Note: Replace /path/to/your/project with the actual path to the project directory where you want the spec workflow to operate.
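OpenCode uses a different shape from the `mcpServers` entries above: a single `command` array plus `type` and `enabled` fields. Suppose you keep one canonical entry and want to derive the OpenCode form from it. A small conversion sketch is shown below; `toOpenCodeEntry` is a hypothetical helper, and both shapes are taken only from the examples in this README.

```typescript
// Shape used by Augment, Claude Desktop, Cline, Continue, and Cursor above.
interface McpServersEntry {
  command: string;
  args: string[];
}

// Shape used under "mcp" in opencode.json, per the OpenCode example above.
interface OpenCodeLocalEntry {
  type: "local";
  command: string[];
  enabled: boolean;
}

// Hypothetical converter: merges command + args into OpenCode's single array.
function toOpenCodeEntry(entry: McpServersEntry, enabled = true): OpenCodeLocalEntry {
  return { type: "local", command: [entry.command, ...entry.args], enabled };
}

const openCodeConfig = {
  $schema: "https://opencode.ai/config.json",
  mcp: {
    "spec-workflow": toOpenCodeEntry({
      command: "npx",
      args: ["-y", "@pimzino/spec-workflow-mcp@latest", "/path/to/your/project"],
    }),
  },
};
console.log(JSON.stringify(openCodeConfig, null, 2));
```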

MCP tools provided by the server:

- spec-workflow-guide - Complete guide for the spec-driven workflow process
- steering-guide - Guide for creating project steering documents

- create-spec-doc - Create/update spec documents (requirements, design, tasks)
- spec-list - List all specs with status information
- spec-status - Get detailed status of a specific spec
- manage-tasks - Comprehensive task management for spec implementation

- get-template-context - Get markdown templates for all document types
- get-steering-context - Get project steering context and guidance
- get-spec-context - Get context for a specific spec

- create-steering-doc - Create project steering documents (product, tech, structure)

- request-approval - Request user approval for documents
- get-approval-status - Check approval status
- delete-approval - Clean up completed approvals

The web dashboard is a separate service for CLI users. Each project gets its own dedicated dashboard, which runs on an ephemeral port unless you set one with --port. The dashboard provides:

- Live Project Overview - Real-time updates of specs and progress

- Document Viewer - Read requirements, design, and tasks documents

- Task Progress Tracking - Visual progress bars and task status

- Steering Documents - Quick access to project guidance

- Dark Mode - Automatically enabled for better readability

- Spec Cards - Overview of each spec with status indicators

- Document Navigation - Switch between requirements, design, and tasks

- Task Management - View task progress and copy implementation prompts

- Real-Time Updates - WebSocket connection for live project status

The VSCode extension provides all dashboard functionality directly within your IDE:

- Sidebar Integration - Access everything from the Activity Bar

- Archive Management - Switch between active and archived specs

- Native Dialogs - VSCode confirmation dialogs for all actions

- Editor Integration - Context menu actions for approvals and comments

- Sound Notifications - Configurable audio alerts

- No External Dependencies - Works entirely within VSCode

- Single Environment - No need to switch between browser and IDE

- Native Experience - Uses VSCode's native UI components

- Better Integration - Context menu actions and editor integration

- Simplified Setup - No separate dashboard service required

steering-guide → create-steering-doc (product, tech, structure)

Creates foundational documents to guide your project development.

spec-workflow-guide → create-spec-doc → [review] → implementation

Sequential process: Requirements → Design → Tasks → Implementation

- Use get-spec-context for detailed implementation context
- Use manage-tasks to track task completion
- Monitor progress via the web dashboard
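The sequential Requirements → Design → Tasks → Implementation order can be modeled as a tiny state machine. The sketch below assumes that each document must be approved before the next phase starts, based on the "[review]" step and the approval workflow described in this README. The `Phase` type and the `nextPhase` function are illustrative only and are not part of the server's tool API.

```typescript
// Illustrative model of the documented phase order; not the server's API.
type Phase = "requirements" | "design" | "tasks" | "implementation";

const ORDER: Phase[] = ["requirements", "design", "tasks", "implementation"];

// Assumption: a phase's document must be approved before moving on,
// mirroring the "[review]" step in the workflow above.
function nextPhase(current: Phase, approved: boolean): Phase {
  if (current === "implementation") return current; // final phase
  if (!approved) return current;                    // stay until approved
  return ORDER[ORDER.indexOf(current) + 1];
}

let phase: Phase = "requirements";
phase = nextPhase(phase, false); // still "requirements": awaiting approval
phase = nextPhase(phase, true);  // advances to "design"
console.log(phase);
```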

your-project/
  .spec-workflow/
    steering/
      product.md            # Product vision and goals
      tech.md               # Technical decisions
      structure.md          # Project structure guide
    specs/
      {spec-name}/
        requirements.md     # What needs to be built
        design.md           # How it will be built
        tasks.md            # Implementation breakdown
    approval/
      {spec-name}/
        {document-id}.json  # Approval status tracking
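If you script against this layout (for example, to check whether a spec already has all three documents), you can derive the paths from the project root and the spec name. The sketch below uses Node's built-in `path` module and assumes the layout above. `specDocPaths` is a hypothetical helper, and the server may resolve paths differently internally. It uses `path.posix` so that the output is identical on every OS.

```typescript
import * as path from "node:path";

// Paths follow the .spec-workflow/ layout shown above.
// Hypothetical helper; not part of the spec-workflow-mcp package.
function specDocPaths(projectRoot: string, specName: string) {
  const base = path.posix.join(projectRoot, ".spec-workflow");
  const specDir = path.posix.join(base, "specs", specName);
  return {
    requirements: path.posix.join(specDir, "requirements.md"),
    design: path.posix.join(specDir, "design.md"),
    tasks: path.posix.join(specDir, "tasks.md"),
    approvalDir: path.posix.join(base, "approval", specName),
  };
}

console.log(specDocPaths("/path/to/your/project", "user-auth").design);
// /path/to/your/project/.spec-workflow/specs/user-auth/design.md
```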

# Install dependencies
npm install

# Build the project
npm run build

# Run in development mode (with auto-reload)
npm run dev

# Start the production server
npm start

# Clean build artifacts
npm run clean

Claude MCP configuration not working with project path

- Ensure you're using the correct syntax: claude mcp add spec-workflow npx -y @pimzino/spec-workflow-mcp@latest -- /path/to/your/project
- The -- separator is crucial for passing the path to the script rather than to npx
- Verify the path exists and is accessible
- For paths with spaces, ensure they're properly quoted in your shell
- Check the generated configuration in your claude.json to ensure the path appears in the args array
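When checking the generated configuration, the key point is that the project path is its own element of the `args` array rather than being glued onto another argument. The sketch below assumes the server entry has the same `{ command, args }` shape as the configurations earlier in this README. Where exactly the entry sits inside `claude.json` is not covered here, and `checkProjectPath` is a hypothetical helper.

```typescript
// Assumed entry shape, matching the mcpServers examples above.
interface ServerEntry {
  command: string;
  args?: string[];
}

// Returns null when the path is a standalone args element, otherwise a
// short description of what looks wrong.
function checkProjectPath(entry: ServerEntry, projectPath: string): string | null {
  const args = entry.args ?? [];
  if (args.includes(projectPath)) return null;
  // One plausible failure mode: the path ended up merged into another
  // argument (e.g. through quoting) instead of standing on its own.
  const merged = args.find((a) => a.includes(projectPath));
  return merged !== undefined
    ? `path is embedded in "${merged}" instead of being its own args element`
    : `path "${projectPath}" does not appear in args`;
}

const entry: ServerEntry = JSON.parse(
  '{"command":"npx","args":["-y","@pimzino/spec-workflow-mcp@latest","/path/to/your/project"]}',
);
console.log(checkProjectPath(entry, "/path/to/your/project") ?? "path looks correct");
```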

Dashboard not starting

- Ensure you're using the --dashboard flag when starting the dashboard service
- Unless you use --AutoStartDashboard, the dashboard must be started separately from the MCP server
- Check console output for the dashboard URL and any error messages
- If using --port, ensure the port number is valid (1024-65535) and not in use by another application

Approvals not working

- Verify the dashboard (or the VSCode extension) is running alongside the MCP server
- The dashboard or extension is required for document approvals and task tracking
- Check that both services are pointing to the same project directory

MCP server not connecting

- Verify the file paths in your configuration are correct
- Ensure the project has been built with npm run build
- Check that Node.js is available in your system PATH

Port conflicts

- If you get a "port already in use" error, try a different port with --port <different-number>
- Use netstat -an | find ":3000" (Windows) or lsof -i :3000 (macOS/Linux) to check what's using a port
- Omit the --port parameter to automatically use an available ephemeral port
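Besides netstat and lsof, you can probe a port from Node itself. The sketch below uses the built-in `node:net` module. `isPortFree` tries to bind the port and reports `EADDRINUSE` as busy, and `getEphemeralPort` asks the OS for a free port by listening on port 0. Both helper names are hypothetical. Treating port 0 as equivalent to omitting `--port` is an assumption about how the server picks its ephemeral port. The result is only a snapshot, because another process can claim the port right after the check.

```typescript
import * as net from "node:net";

// Tries to bind the port on all interfaces; EADDRINUSE means something
// else is already listening there. Other errors (e.g. EACCES) are rethrown.
function isPortFree(port: number): Promise<boolean> {
  return new Promise((resolve, reject) => {
    const server = net.createServer();
    server.once("error", (err: NodeJS.ErrnoException) => {
      if (err.code === "EADDRINUSE") resolve(false);
      else reject(err);
    });
    server.once("listening", () => server.close(() => resolve(true)));
    server.listen(port);
  });
}

// Listening on port 0 makes the OS assign a free ephemeral port.
function getEphemeralPort(): Promise<number> {
  return new Promise((resolve, reject) => {
    const server = net.createServer();
    server.once("error", reject);
    server.listen(0, () => {
      const { port } = server.address() as net.AddressInfo;
      server.close(() => resolve(port));
    });
  });
}

isPortFree(3000)
  .then((free) => console.log(`port 3000 is ${free ? "free" : "in use"}`))
  .catch((err) => console.error("port check failed:", err));
```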


Dashboard not updating

- The dashboard uses WebSockets for real-time updates

- Refresh the browser if connection is lost

- Check console for any JavaScript errors

- Check the Issues page for known problems

- Create a new issue using the provided templates

- Use the workflow guides within the tools for step-by-step instructions

GPL-3.0


Alternative AI tools for spec-workflow-mcp

Similar Open Source Tools


context-portal

Context-portal is a versatile tool for managing and visualizing data in a collaborative environment. It provides a user-friendly interface for organizing and sharing information, making it easy for teams to work together on projects. With features such as customizable dashboards, real-time updates, and seamless integration with popular data sources, Context-portal streamlines the data management process and enhances productivity. Whether you are a data analyst, project manager, or team leader, Context-portal offers a comprehensive solution for optimizing workflows and driving better decision-making.

openmcp-client

OpenMCP is an integrated plugin for MCP server debugging in vscode/trae/cursor, combining development and testing functionalities. It includes tools for testing MCP resources, managing large model interactions, project-level management, and supports multiple large models. The openmcp-sdk allows for deploying MCP as an agent app with easy configuration and execution of tasks. The project follows a modular design allowing implementation in different modes on various platforms.

verl-tool

The verl-tool is a versatile command-line utility designed to streamline various tasks related to version control and code management. It provides a simple yet powerful interface for managing branches, merging changes, resolving conflicts, and more. With verl-tool, users can easily track changes, collaborate with team members, and ensure code quality throughout the development process. Whether you are a beginner or an experienced developer, verl-tool offers a seamless experience for version control operations.

Ivy-Framework

Ivy-Framework is a powerful tool for building internal applications with AI assistance using C# codebase. It provides a CLI for project initialization, authentication integrations, database support, LLM code generation, secrets management, container deployment, hot reload, dependency injection, state management, routing, and external widget framework. Users can easily create data tables for sorting, filtering, and pagination. The framework offers a seamless integration of front-end and back-end development, making it ideal for developing robust internal tools and dashboards.

Sage

Sage is a production-ready, modular, and intelligent multi-agent orchestration framework for complex problem solving. It intelligently breaks down complex tasks into manageable subtasks through seamless agent collaboration. Sage provides Deep Research Mode for comprehensive analysis and Rapid Execution Mode for quick task completion. It offers features like intelligent task decomposition, agent orchestration, extensible tool system, dual execution modes, interactive web interface, advanced token tracking, rich configuration, developer-friendly APIs, and robust error recovery mechanisms. Sage supports custom workflows, multi-agent collaboration, custom agent development, agent flow orchestration, rule preferences system, message manager for smart token optimization, task manager for comprehensive state management, advanced file system operations, advanced tool system with plugin architecture, token usage & cost monitoring, and rich configuration system. It also includes real-time streaming & monitoring, advanced tool development, error handling & reliability, performance monitoring, MCP server integration, and security features.

langchain

LangChain is a framework for building LLM-powered applications that simplifies AI application development by chaining together interoperable components and third-party integrations. It helps developers connect LLMs to diverse data sources, swap models easily, and future-proof decisions as technology evolves. LangChain's ecosystem includes tools like LangSmith for agent evals, LangGraph for complex task handling, and LangGraph Platform for deployment and scaling. Additional resources include tutorials, how-to guides, conceptual guides, a forum, API reference, and chat support.

ai-app-lab

The ai-app-lab is a high-code Python SDK Arkitect designed for enterprise developers with professional development capabilities. It provides a toolset and workflow set for developing large model applications tailored to specific business scenarios. The SDK offers highly customizable application orchestration, quality business tools, one-stop development and hosting services, security enhancements, and AI prototype application code examples. It caters to complex enterprise development scenarios, enabling the creation of highly customized intelligent applications for various industries.

llm-agents.nix

Nix packages for AI coding agents and development tools. Automatically updated daily. This repository provides a wide range of AI coding agents and tools that can be used in the terminal environment. The tools cover various functionalities such as code assistance, AI-powered development agents, CLI tools for AI coding, workflow and project management, code review, utilities like search tools and browser automation, and usage analytics for AI coding sessions. The repository also includes experimental features like sandboxed execution, provider abstraction, and tool composition to explore how Nix can enhance AI-powered development.

ai-manus

AI Manus is a general-purpose AI Agent system that supports running various tools and operations in a sandbox environment. It can be deployed with minimal dependencies, supports multiple tools such as Terminal, Browser, File, Web Search, and messaging tools, allocates a separate sandbox for each task, manages session history, supports stopping and interrupting conversations as well as file upload and download, and is multilingual. The system also provides user login and authentication. The project relies primarily on Docker for development and deployment, has specific model capability requirements, and recommends Deepseek and GPT models.

DB-GPT

DB-GPT is an open source AI native data app development framework with AWEL(Agentic Workflow Expression Language) and agents. It aims to build infrastructure in the field of large models, through the development of multiple technical capabilities such as multi-model management (SMMF), Text2SQL effect optimization, RAG framework and optimization, Multi-Agents framework collaboration, AWEL (agent workflow orchestration), etc. Which makes large model applications with data simpler and more convenient.

BrowserGym

BrowserGym is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides benchmarks like MiniWoB, WebArena, VisualWebArena, WorkArena, AssistantBench, and WebLINX. Users can design new web benchmarks by inheriting the AbstractBrowserTask class. The tool allows users to install different packages for core functionalities, experiments, and specific benchmarks. It supports the development setup and offers boilerplate code for running agents on various tasks. BrowserGym is not a consumer product and should be used with caution.

bisheng

Bisheng is a leading open-source **large model application development platform** that empowers and accelerates the development and deployment of large model applications, helping users enter the next generation of application development with the best possible experience.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

vibe

Vibe Design System is a collection of packages for React.js development, providing components, styles, and guidelines to streamline the development process and enhance user experience. It includes a Core component library, Icons library, Testing utilities, Codemods, and more. The system also features an MCP server for intelligent assistance with component APIs, usage examples, icons, and best practices. Vibe 2 is no longer actively maintained, with users encouraged to upgrade to Vibe 3 for the latest improvements and ongoing support.

stagehand

Stagehand is an AI web browsing framework that simplifies and extends web automation using three simple APIs: act, extract, and observe. It aims to provide a lightweight, configurable framework without complex abstractions, allowing users to automate web tasks reliably. The tool generates Playwright code based on atomic instructions provided by the user, enabling natural language-driven web automation. Stagehand is open source, maintained by the Browserbase team, and supports different models and model providers for flexibility in automation tasks.

For similar tasks


ragna

Ragna is a RAG orchestration framework designed for managing workflows and orchestrating tasks. It provides a comprehensive set of features for users to streamline their processes and automate repetitive tasks. With Ragna, users can easily create, schedule, and monitor workflows, making it an ideal tool for teams and individuals looking to improve their productivity and efficiency. The framework offers extensive documentation, community support, and a user-friendly interface, making it accessible to users of all skill levels. Whether you are a developer, data scientist, or project manager, Ragna can help you simplify your workflow management and boost your overall performance.

tegon

Tegon is an open-source AI-First issue tracking tool designed for engineering teams. It aims to simplify task management by leveraging AI and integrations to automate task creation, prioritize tasks, and enhance bug resolution. Tegon offers features like issues tracking, automatic title generation, AI-generated labels and assignees, custom views, and upcoming features like sprints and task prioritization. It integrates with GitHub, Slack, and Sentry to streamline issue tracking processes. Tegon also plans to introduce AI Agents like PR Agent and Bug Agent to enhance product management and bug resolution. Contributions are welcome, and the product is licensed under the MIT License.

Advanced-GPTs

Nerority's Advanced GPT Suite is a collection of 33 GPTs that can be controlled with natural language prompts. The suite includes tools for various tasks such as strategic consulting, business analysis, career profile building, content creation, educational purposes, image-based tasks, knowledge engineering, marketing, persona creation, programming, prompt engineering, role-playing, simulations, and task management. Users can access links, usage instructions, and guides for each GPT on their respective pages. The suite is designed for public demonstration and usage, offering features like meta-sequence optimization, AI priming, prompt classification, and optimization. It also provides tools for generating articles, analyzing contracts, visualizing data, distilling knowledge, creating educational content, exploring topics, generating marketing copy, simulating scenarios, managing tasks, and more.

aioclock

An asyncio-based scheduling framework designed for executing periodic tasks, with integrated support for dependency injection that enables efficient and flexible task management. Aioclock is 100% async, light, fast, and resource-friendly. It offers features like task scheduling, grouping, trigger definition, easy syntax, and Pydantic v2 validation, with upcoming support for running the task dispatcher in a different process and backend support for horizontal scaling.

airavata

Apache Airavata is a software framework for executing and managing computational jobs on distributed computing resources. It supports local clusters, supercomputers, national grids, academic and commercial clouds. Airavata utilizes service-oriented computing, distributed messaging, and workflow composition. It includes a server package with an API, client SDKs, and a general-purpose UI implementation called Apache Airavata Django Portal.

CrewAI-Studio

CrewAI Studio is an application with a user-friendly interface for interacting with CrewAI, offering support for multiple platforms and various backend providers. It allows users to run crews in the background, export single-page apps, and use custom tools for APIs and file writing. The roadmap includes features like better import/export, human input, chat functionality, automatic crew creation, and multiuser environment support.

obsidian-systemsculpt-ai

SystemSculpt AI is a comprehensive AI-powered plugin for Obsidian, integrating advanced AI capabilities into note-taking, task management, knowledge organization, and content creation. It offers modules for brain integration, chat conversations, audio recording and transcription, note templates, and task generation and management. Users can customize settings, utilize AI services like OpenAI and Groq, and access documentation for detailed guidance. The plugin prioritizes data privacy by storing sensitive information locally and offering the option to use local AI models for enhanced privacy.

For similar jobs

alan-sdk-ios

Alan AI SDK for iOS is a powerful tool that allows developers to quickly create AI agents for their iOS apps. With Alan AI Platform, users can easily design, embed, and host conversational experiences in their applications. The platform offers a web-based IDE called Alan AI Studio for creating dialog scenarios, lightweight SDKs for embedding AI agents, and a backend powered by top-notch speech recognition and natural language understanding technologies. Alan AI enables human-like conversations and actions through voice commands, with features like on-the-fly updates, dialog flow testing, and analytics.

EvoMaster

EvoMaster is an open-source AI-driven tool that automatically generates system-level test cases for web/enterprise applications. It uses an Evolutionary Algorithm and Dynamic Program Analysis to evolve test cases, maximizing code coverage and fault detection. The tool supports REST, GraphQL, and RPC APIs, with whitebox testing for JVM-compiled languages. It generates JUnit tests, detects faults, handles SQL databases, and supports authentication. EvoMaster has been funded by the European Research Council and the Research Council of Norway.

nous

Nous is an open-source TypeScript platform for autonomous AI agents and LLM based workflows. It aims to automate processes, support requests, review code, assist with refactorings, and more. The platform supports various integrations, multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It offers advanced features like reasoning/planning, memory and function call history, hierarchical task decomposition, and control-loop function calling options. Nous is designed to be a flexible platform for the TypeScript community to expand and support different use cases and integrations.

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

kobold_assistant

Kobold-Assistant is a fully offline voice assistant interface to KoboldAI's large language model API. It can work online with the KoboldAI horde and online speech-to-text and text-to-speech models. The assistant, called Jenny by default, uses the latest coqui 'jenny' text to speech model and openAI's whisper speech recognition. Users can customize the assistant name, speech-to-text model, text-to-speech model, and prompts through configuration. The tool requires system packages like GCC, portaudio development libraries, and ffmpeg, along with Python >=3.7, <3.11, and runs on Ubuntu/Debian systems. Users can interact with the assistant through commands like 'serve' and 'list-mics'.

pgx

Pgx is a collection of GPU/TPU-accelerated parallel game simulators for reinforcement learning (RL). It provides JAX-native game simulators for various games like Backgammon, Chess, Shogi, and Go, offering super fast parallel execution on accelerators and beautiful visualization in SVG format. Pgx focuses on faster implementations while also being sufficiently general, allowing environments to be converted to the AEC API of PettingZoo for running Pgx environments through the PettingZoo API.

sophia

Sophia is an open-source TypeScript platform designed for autonomous AI agents and LLM based workflows. It aims to automate processes, review code, assist with refactorings, and support various integrations. The platform offers features like advanced autonomous agents, reasoning/planning inspired by Google's Self-Discover paper, memory and function call history, adaptive iterative planning, and more. Sophia supports multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It provides a flexible platform for the TypeScript community to expand and support various use cases and integrations.

skyeye

SkyEye is an AI-powered Ground Controlled Intercept (GCI) bot designed for the flight simulator Digital Combat Simulator (DCS). It serves as an advanced replacement for the in-game E-2, E-3, and A-50 AI aircraft, offering modern voice recognition, natural-sounding voices, real-world brevity and procedures, a wide range of commands, and intelligent battlespace monitoring. The tool uses Speech-To-Text and Text-To-Speech technology, can run locally or on a cloud server, and is production-ready software used by various DCS communities.