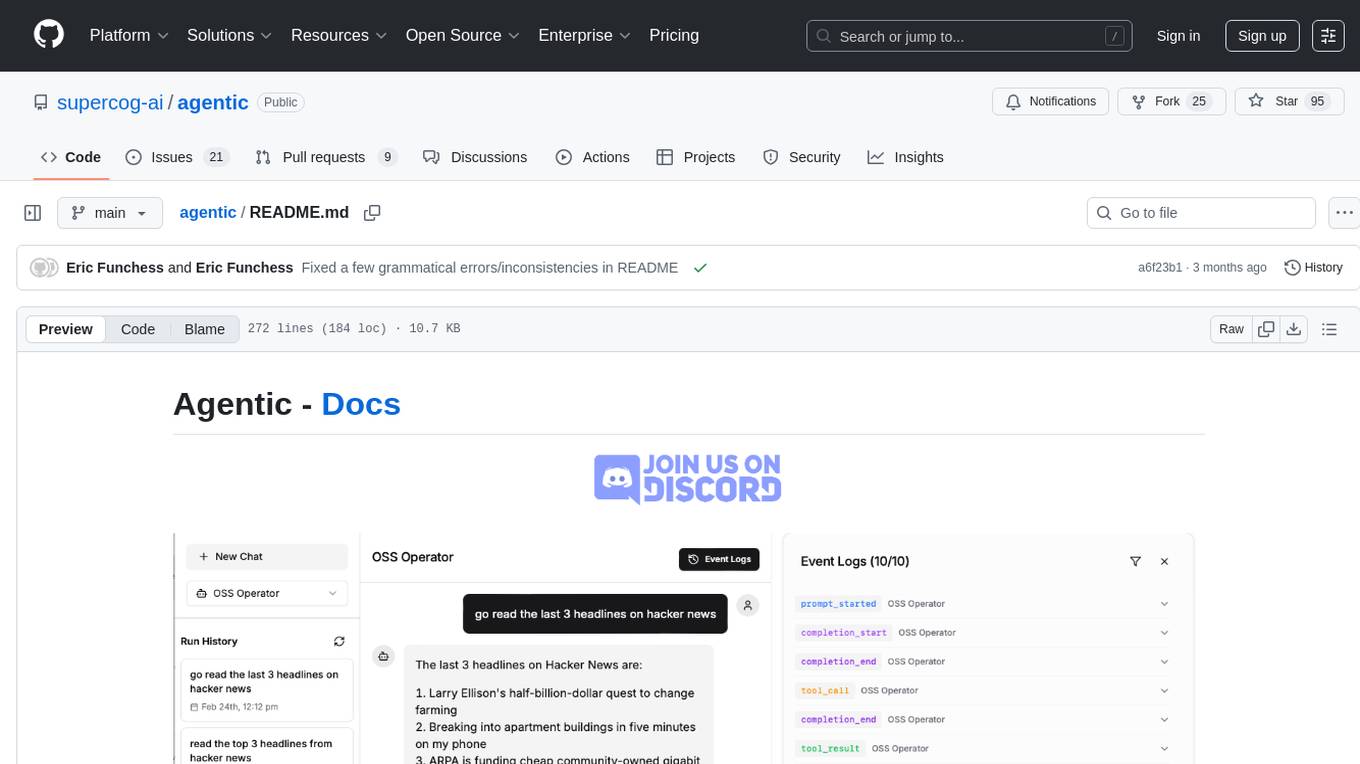

agentic

An opinionated framework for building sophisticated AI Agents

Stars: 95

Agentic is a lightweight and flexible Python library for building multi-agent systems. It provides a simple and intuitive API for creating and managing agents, defining their behaviors, and simulating interactions in a multi-agent environment. With Agentic, users can easily design and implement complex agent-based models to study emergent behaviors, social dynamics, and decentralized decision-making processes. The library supports various agent architectures, communication protocols, and simulation scenarios, making it suitable for a wide range of research and educational applications in the fields of artificial intelligence, machine learning, social sciences, and robotics.

README:

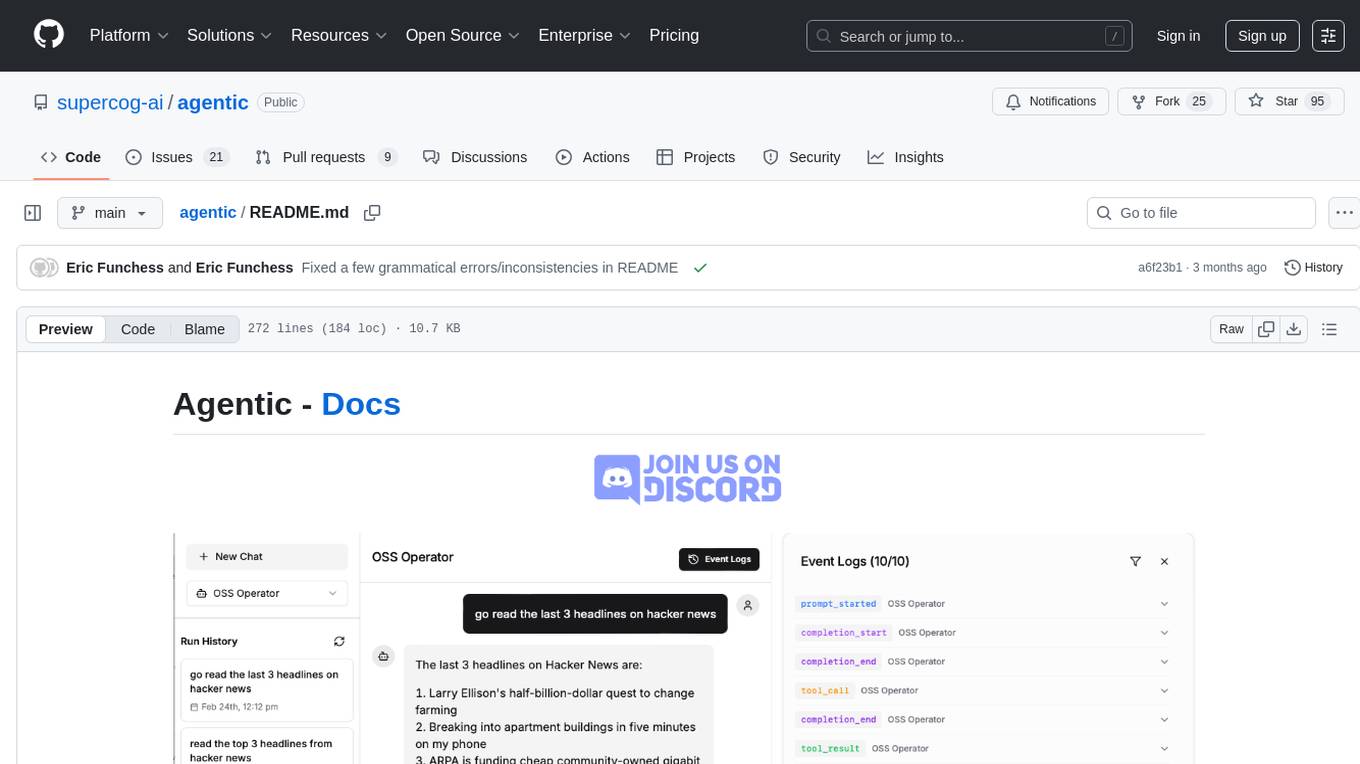

Agentic - Docs

Agentic makes it easy to create AI agents - autonomous software programs that understand natural language and can use tools to do work on your behalf.

Agentic is in the tradition of opinionated frameworks. We've tried to encode lots of sensible defaults and best practices into the design, testing and deployment of agents.

Agentic is a few different things:

- A lightweight agent framework. Same part of the stack as SmolAgents or PydanticAI.

- A reference implementation of the agent protocol.

- An agent runtime built on Ray

- An optional "batteries included" set of features to help you get running quickly.

You can pretty much use any of these features and leave the others. There are lots of framework choices but we think we have embedded some good ideas into ours.

Some of the framework features:

- Approachable and simple to use, but flexible enough to support the most complex agents

- Supports teams of cooperating agents

- Supports Human-in-the-loop

- Easy definition and use of tools (functions, class methods, import LangChain tools, ...)

- Built alongside a set of production-tested tools
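As a rough illustration of what "defining a tool as a plain function" can involve, the sketch below derives an OpenAI-style function schema from a Python function's signature and docstring. This is not Agentic's implementation; `function_to_schema` and `get_weather` are hypothetical names used only for illustration.

```python
import inspect
from typing import Any, Callable

# Map Python annotations to JSON Schema type names (illustrative subset).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_to_schema(func: Callable[..., Any]) -> dict:
    """Build an OpenAI-style tool schema from a function (hypothetical helper)."""
    signature = inspect.signature(func)
    properties = {}
    required = []
    for name, param in signature.parameters.items():
        # Unannotated or unknown types fall back to "string" in this sketch.
        json_type = _JSON_TYPES.get(param.annotation, "string")
        properties[name] = {"type": json_type}
        # Parameters without a default value must be supplied by the model.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": inspect.getdoc(func) or "",
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }


def get_weather(city: str, days: int = 1) -> str:
    """Return a canned forecast for a city (stub tool for the example)."""
    return f"Forecast for {city} over {days} day(s): sunny"


schema = function_to_schema(get_weather)
```

The point of the design is that the function signature stays the single source of truth: the schema sent to the model is derived from it, not written by hand.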

Visit the docs: https://supercog-ai.github.io/agentic/latest/

Perform complex research on any topic. Adapted from the LangChain version (but you can actually understand the code).

...full browser automation, including using authenticated sessions...

An agent team which auto-produces and publishes a daily podcast. Customize for your news interests.

Your own meeting bot agent with meeting summaries stored into RAG.

At this stage it's probably easiest to run this repo from source. We use uv for package management:

Note: If you're on Linux or Windows and installing the rag extra, you will need to add --extra-index-url https://download.pytorch.org/whl/cpu to install the CPU version of PyTorch.

git clone git@github.com:supercog-ai/agentic.git

uv venv --python 3.12

source .venv/bin/activate

# For MacOS

uv pip install -e "./agentic[all,dev]"

# For Linux or Windows

uv pip install -e "./agentic[all,dev]" --extra-index-url https://download.pytorch.org/whl/cpu --index-strategy unsafe-first-match

These commands will install the agentic package locally so that you can use the agentic CLI command and so that your PYTHONPATH is set correctly.

You can also try installing just the package:

# For MacOS

uv pip install "agentic-framework[all,dev]"

# For Linux or Windows

uv pip install "agentic-framework[all,dev]" --extra-index-url https://download.pytorch.org/whl/cpu

Now set up your folder to hold your agents:

agentic init .

The install will copy examples and a basic file structure into the directory myagents. You can name or rename this folder however you like.

Visit the docs for a tutorial on getting started with the framework.

Agentic provides multiple ways to interact with your agents, from command-line interfaces to web applications. This guide covers all available methods.

| Interface | Use Case | Features |

|---|---|---|

| Command Line (CLI) | Quick testing, scripting | Simple text I/O, dot commands |

| REST API | Integration with other applications | HTTP endpoints, event streaming |

| Next.js Dashboard | Professional web UI | Real-time updates, thread history, background tasks |

| Streamlit Dashboard | Quick prototyping | Simple web UI with minimal setup |

The CLI provides a simple REPL interface for direct conversations with your agents.

agentic thread examples/basic_agent.py

The REST API allows integration with web applications, automation systems, or other services.

agentic serve examples/basic_agent.py

The Next.js Dashboard offers a full-featured web interface with:

- Multiple agent management

- Real-time event streaming

- Background task management

- Thread history and logs

- Markdown rendering

agentic dashboard start --agent-path examples/basic_agent.py

Learn more about the Next.js Dashboard →

The Streamlit Dashboard provides a lightweight interface for quick prototyping.

agentic streamlit --agent-path examples/basic_agent.py

Learn more about the Streamlit Dashboard →

You can always interact with agents directly in Python:

    from agentic.common import Agent

    # Create an agent
    agent = Agent(
        name="My Agent",
        instructions="You are a helpful assistant.",
        model="openai/gpt-4o-mini"
    )

    # Use the << operator for a quick response
    response = agent << "Hello, how are you?"
    print(response)

    # For more control over the conversation
    request_id = agent.start_request("Tell me a joke").request_id
    for event in agent.get_events(request_id):
        print(event)

Learn more about Programmatic Access →

Agentic builds on LiteLLM to enable consistent support for many different LLM models.

Under the covers, Agentic uses Ray to host and run your agents. Ray implements an actor model, which is a much better architecture for running complex agents than a typical web framework.
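To make the actor idea concrete: an actor owns its state and handles one message at a time from a mailbox, so callers never touch that state directly. The sketch below uses only the Python standard library, not Ray, and `CounterActor` is a hypothetical example class.

```python
import queue
import threading
from concurrent.futures import Future


class CounterActor:
    """A toy actor: private state, one mailbox, one worker thread."""

    def __init__(self) -> None:
        self._count = 0  # state is only touched by the worker thread
        self._mailbox: queue.Queue = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self) -> None:
        # Process messages strictly one at a time, in arrival order.
        while True:
            message, reply = self._mailbox.get()
            if message == "stop":
                reply.set_result(None)
                break
            if message == "increment":
                self._count += 1
            # Any other message (such as "get") just reads the current count.
            reply.set_result(self._count)

    def send(self, message: str) -> Future:
        """Enqueue a message and return a future for the reply."""
        reply: Future = Future()
        self._mailbox.put((message, reply))
        return reply


actor = CounterActor()
futures = [actor.send("increment") for _ in range(3)]
results = [f.result(timeout=5) for f in futures]
final = actor.send("get").result(timeout=5)
actor.send("stop").result(timeout=5)
```

Because every message goes through the mailbox, a long-running agent can keep its conversation state in one place without locks, which is the property that makes this model a natural fit for agents.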

Agentic requires API keys for the LLM providers you plan to use. Copy the .env.example file to .env and set the following environment variables:

OPENAI_API_KEY=your_openai_api_key_here

ANTHROPIC_API_KEY=your_anthropic_api_key_here

You only need to set the API keys for the models you plan to use. For example, if you're only using OpenAI models, you only need to set OPENAI_API_KEY.
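A small pre-flight check can catch a missing key before an agent starts. The sketch below assumes the provider/model naming seen in the earlier example (openai/gpt-4o-mini) and the two variable names listed here; the mapping and the `missing_key_for` helper are illustrative and not part of Agentic.

```python
import os
from typing import Mapping, Optional

# Provider prefix -> environment variable; only the two keys named above.
PROVIDER_KEYS = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
}


def missing_key_for(model: str, env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the name of the unset variable for this model, or None if it is set.

    Hypothetical helper: assumes model names look like "provider/model-name".
    """
    provider = model.split("/", 1)[0]
    variable = PROVIDER_KEYS.get(provider)
    if variable is None:
        return None  # unknown provider: nothing this sketch can check
    return None if env.get(variable) else variable


# Example with an explicit mapping instead of the real environment.
fake_env = {"OPENAI_API_KEY": "your_openai_api_key_here"}
openai_missing = missing_key_for("openai/gpt-4o-mini", fake_env)
anthropic_missing = missing_key_for("anthropic/some-model", fake_env)
```

Here `openai_missing` is `None` because the key is present, while `anthropic_missing` names the variable that still needs to be set.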

Run tests:

pytest

Preview docs locally:

mike serve

Deploy the docs:

mike deploy --push dev

Yup, there's a lot of agent frameworks. But many of these are "gen 1" frameworks - designed before anyone had really built agents and tried to put them into production. Agentic is informed by our learnings at Supercog from building and running hundreds of agents over the past year.

Some reasons why Agentic is different:

- We have a thin abstraction over the LLM. The "agent loop" code is a couple hundred lines calling directly into the LLM API (the OpenAI completion API via LiteLLM).

- Logging is built-in and usable out of the box. Trace agent threads, tool calls, and LLM completions with ability to control the right level of detail.

- Well designed abstractions with just a few nouns: Agent, Tool, Thread, Run. Stop assembling the computational graph out of toothpicks.

- Rich event system goes beyond text so agents can work with data and media.

- Event streams can have multiple channels, so your agent can "run in the background" and still notify you of what is happening.

- Human-in-the-loop is built into the framework, not hacked in. An agent can wait indefinitely, or get notification from any channel like an email or webhook.

- Context length, token usage, and timing data are emitted in a standard form.

- Tools are designed to support configuration and authentication, not just run on a sea of random env vars.

- Use tools from almost any framework, including MCP and Composio.

- "Tools are agents". You can use tools and agents interchangeably. This is where the world is heading, that whatever "service" your agent uses it will be indistinguishable whether that service is "hard-coded" or implemented by another agent.

- Agents can add or remove tools dynamically while they are running. (coming soon...)

- "Batteries included". Easy RAG support. Every agent has an API interface. UI tools for quickly building a UI for your agents. "Agent contracts" for testing.

- Automatic context management keeps your agent within context length limits.
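The "thin agent loop" and "tools are agents" points can be pictured with a few lines of code. The sketch below is not Agentic's loop: `fake_llm` stands in for a real completion call, and all names are hypothetical. The loop asks the model for the next step, runs any requested tool, appends the result to the history, and stops when the model returns plain text.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
Tool = Callable[[str], str]


def fake_llm(messages: List[Message]) -> Message:
    """Stand-in for a model call: requests one tool call, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"The answer is {last['content']}."}
    return {"role": "assistant", "tool": "shout", "argument": last["content"]}


def run_agent(prompt: str, tools: Dict[str, Tool], max_turns: int = 5) -> str:
    """Minimal agent loop: call the model, run requested tools, repeat."""
    messages: List[Message] = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        reply = fake_llm(messages)
        messages.append(reply)
        if "tool" not in reply:
            return reply["content"]  # plain text means the agent is done
        result = tools[reply["tool"]](reply["argument"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_turns")


def shout(text: str) -> str:
    """A plain function used as a tool."""
    return text.upper()


def inner_agent(text: str) -> str:
    """An agent has the same str -> str shape, so it can be passed as a tool."""
    return run_agent(text, {"shout": shout})


answer = run_agent("hello", {"shout": shout})
nested = run_agent("hi", {"shout": inner_agent})
```

The outer agent in `nested` cannot tell whether its tool is a hard-coded function or another agent, since both share the same call signature.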

We would love you to contribute! We especially welcome:

- New tools

- Example agents

- New UI apps

but obviously we appreciate bug reports, bug fixes, and so on. We encourage tests with all contributions, and if you want to modify the core framework, please submit tests in the PR.

Alternative AI tools for agentic

Similar Open Source Tools

ms-agent

MS-Agent is a lightweight framework designed to empower agents with autonomous exploration capabilities. It provides a flexible and extensible architecture for creating agents capable of tasks like code generation, data analysis, and tool calling with MCP support. The framework supports multi-agent interactions, deep research, code generation, and is lightweight and extensible for various applications.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

AutoAgents

AutoAgents is a cutting-edge multi-agent framework built in Rust that enables the creation of intelligent, autonomous agents powered by Large Language Models (LLMs) and Ractor. Designed for performance, safety, and scalability, AutoAgents provides a robust foundation for building complex AI systems that can reason, act, and collaborate. With AutoAgents you can create Cloud Native Agents, Edge Native Agents, and Hybrid Models as well. It is extensible enough that other ML models can be used to create complex pipelines using the Actor Framework.

trae-agent

Trae-agent is a Python library for building and training reinforcement learning agents. It provides a simple and flexible framework for implementing various reinforcement learning algorithms and experimenting with different environments. With Trae-agent, users can easily create custom agents, define reward functions, and train them on a variety of tasks. The library also includes utilities for visualizing agent performance and analyzing training results, making it a valuable tool for both beginners and experienced researchers in the field of reinforcement learning.

OpenManus-RL

OpenManus-RL is an open-source initiative focused on enhancing reasoning and decision-making capabilities of large language models (LLMs) through advanced reinforcement learning (RL)-based agent tuning. The project explores novel algorithmic structures, diverse reasoning paradigms, sophisticated reward strategies, and extensive benchmark environments. It aims to push the boundaries of agent reasoning and tool integration by integrating insights from leading RL tuning frameworks and continuously updating progress in a dynamic, live-streaming fashion.

langchain

LangChain is a framework for building LLM-powered applications that simplifies AI application development by chaining together interoperable components and third-party integrations. It helps developers connect LLMs to diverse data sources, swap models easily, and future-proof decisions as technology evolves. LangChain's ecosystem includes tools like LangSmith for agent evals, LangGraph for complex task handling, and LangGraph Platform for deployment and scaling. Additional resources include tutorials, how-to guides, conceptual guides, a forum, API reference, and chat support.

solace-agent-mesh

Solace Agent Mesh is an open-source framework designed for building event-driven multi-agent AI systems. It enables the creation of teams of AI agents with distinct skills and tools, facilitating communication and task delegation among agents. The framework is built on top of Solace AI Connector and Google's Agent Development Kit, providing a standardized communication layer for asynchronous, event-driven AI agent architecture. Solace Agent Mesh supports agent orchestration, flexible interfaces, extensibility, agent-to-agent communication, and dynamic embeds, making it suitable for developing complex AI applications with scalability and reliability.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

deepteam

Deepteam is a powerful open-source tool designed for deep learning projects. It provides a user-friendly interface for training, testing, and deploying deep neural networks. With Deepteam, users can easily create and manage complex models, visualize training progress, and optimize hyperparameters. The tool supports various deep learning frameworks and allows seamless integration with popular libraries like TensorFlow and PyTorch. Whether you are a beginner or an experienced deep learning practitioner, Deepteam simplifies the development process and accelerates model deployment.

ISEK

ISEK is a decentralized agent network framework that enables building intelligent, collaborative agent-to-agent systems. It integrates the Google A2A protocol and ERC-8004 contracts for identity registration, reputation building, and cooperative task-solving, creating a self-organizing, decentralized society of agents. The platform addresses challenges in the agent ecosystem by providing an incentive system for users to pay for agent services, motivating developers to build high-quality agents and fostering innovation and quality in the ecosystem. ISEK focuses on decentralized agent collaboration and coordination, allowing agents to find each other, reason together, and act as a decentralized system without central control. The platform utilizes ERC-8004 for decentralized identity, reputation, and validation registries, establishing trustless verification and reputation management.

AReaL

AReaL (Ant Reasoning RL) is an open-source reinforcement learning system developed at the RL Lab, Ant Research. It is designed for training Large Reasoning Models (LRMs) in a fully open and inclusive manner. AReaL provides reproducible experiments for 1.5B and 7B LRMs, showcasing its scalability and performance across diverse computational budgets. The system follows an iterative training process to enhance model performance, with a focus on mathematical reasoning tasks. AReaL is equipped to adapt to different computational resource settings, enabling users to easily configure and launch training trials. Future plans include support for advanced models, optimizations for distributed training, and exploring research topics to enhance LRMs' reasoning capabilities.

humanlayer

HumanLayer is a Python toolkit designed to enable AI agents to interact with humans in tool-based and asynchronous workflows. By incorporating humans-in-the-loop, agentic tools can access more powerful and meaningful tasks. The toolkit provides features like requiring human approval for function calls, human as a tool for contacting humans, omni-channel contact capabilities, granular routing, and support for various LLMs and orchestration frameworks. HumanLayer aims to ensure human oversight of high-stakes function calls, making AI agents more reliable and safe in executing impactful tasks.

MaIN.NET

MaIN.NET (Modular Artificial Intelligence Network) is a versatile .NET package designed to streamline the integration of large language models (LLMs) into advanced AI workflows. It offers a flexible and robust foundation for developing chatbots, automating processes, and exploring innovative AI techniques. The package connects diverse AI methods into one unified ecosystem, empowering developers with a low-code philosophy to create powerful AI applications with ease.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

MeeseeksAI

MeeseeksAI is a framework designed to orchestrate AI agents using a mermaid graph and networkx. It provides a structured approach to managing and coordinating multiple AI agents within a system. The framework allows users to define the interactions and dependencies between agents through a visual representation, making it easier to understand and modify the behavior of the AI system. By leveraging the power of networkx, MeeseeksAI enables efficient graph-based computations and optimizations, enhancing the overall performance of AI workflows. With its intuitive design and flexible architecture, MeeseeksAI simplifies the process of building and deploying complex AI systems, empowering users to create sophisticated agent interactions with ease.

For similar tasks

Awesome-LLM-in-Social-Science

This repository compiles a list of academic papers that evaluate, align, simulate, and provide surveys or perspectives on the use of Large Language Models (LLMs) in the field of Social Science. The papers cover various aspects of LLM research, including assessing their alignment with human values, evaluating their capabilities in tasks such as opinion formation and moral reasoning, and exploring their potential for simulating social interactions and addressing issues in diverse fields of Social Science. The repository aims to provide a comprehensive resource for researchers and practitioners interested in the intersection of LLMs and Social Science.

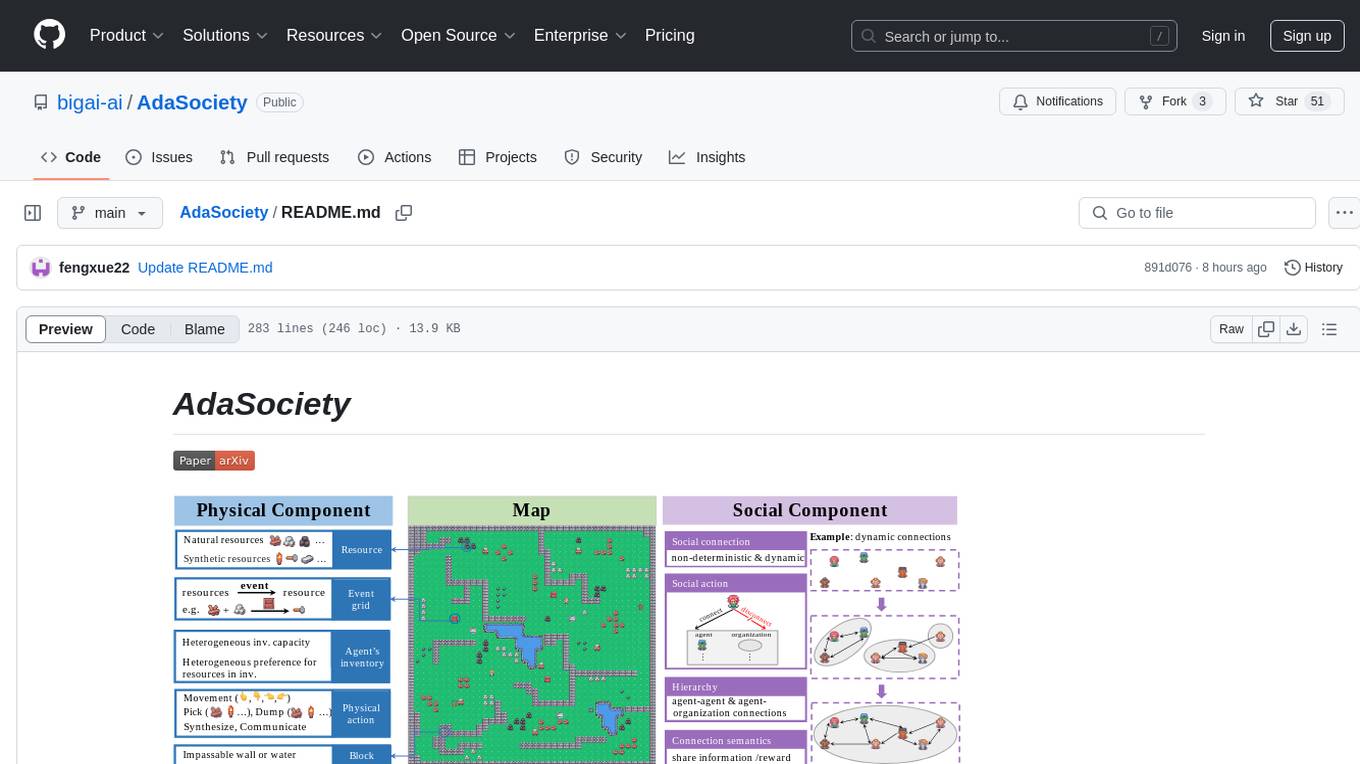

AdaSociety

AdaSociety is a multi-agent environment designed for simulating social structures and decision-making processes. It offers built-in resources, events, and player interactions. Users can customize the environment through JSON configuration or custom Python code. The environment supports training agents using RLlib and LLM frameworks. It provides a platform for studying multi-agent systems and social dynamics.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.