ST-Raptor

LLM-Powered Semi-Structured Table Question Answering

Stars: 231

ST-Raptor is an open-source tool for LLM-powered question answering over semi-structured tables, such as those found in Excel, HTML, Markdown, and CSV files.

README:

- [ ] Main functions
  - [x] Support both local deployment and API calls for LLM, VLM, and embedding models.
  - [x] Support diverse input formats: HTML, CSV, Markdown, ...
  - [ ] Support image input.
  - [ ] Expand the table extraction module to support table types beyond the problem definition.
- [ ] Benchmark
  - [x] Update both the English and Chinese versions of the SSTQA benchmark.
  - [ ] SSTQAv2 is on the way!
- [ ] Visualization
  - [x] Support a visualization platform based on Gradio.
  - [ ] Support hyper-parameter settings through Gradio.
  - [ ] Support visualization and manual revision of the HO-Tree structure.

ST-Raptor is a tool for answering questions over tables with diverse semi-structured layouts. It takes only an Excel-formatted table and a natural language question as input, and produces precise answers.

Unlike many existing approaches, ST-Raptor requires no additional fine-tuning. It combines a vision-language model (VLM) with a tree-construction algorithm (HO-Tree) and flexibly integrates with different LLMs. ST-Raptor employs a two-stage validation mechanism to ensure reliable results.

ST-Raptor targets semi-structured tables such as personal information forms, academic tables, and financial tables, taken from sources such as Excel, websites (HTML), Markdown, and CSV files.

The 102 tables and 764 questions in SSTQA are carefully curated from over 2031 real-world tables by considering (i) tables featuring semi-structured formats, such as nested cells, multi-row/column headers, and irregular layouts, and (ii) coverage across 19 representative real-world scenarios.

Ten of these representative scenarios are listed below:

Human Resources, Corporate Management, Financial Management, Marketing, Warehouse Management, Academic, Schedule Management, Application Forms, Education-related, and Sales Management.

You can find the SSTQA benchmark in the ./data directory: SSTQA-en and SSTQA-ch.

The following table reports the answering accuracy (%) and ROUGE-L score of different methods on our SSTQA benchmark and on two other benchmarks.

Note that answer quality depends heavily on both the complexity of the semi-structured table and the complexity of the question.

- NL2SQL methods: OpenSearch-SQL
- Fine-tuning based methods: TableLLaMA, TableLLM
- Agent based methods: ReAcTable, TAT-LLM
- Vision Language Model based methods: TableLLaVA, mPLUG-DocOwl1.5
- Foundation Models: GPT-4o, DeepSeekV3

| Method | WikiTQ-ST Accuracy (%) | TempTabQA-ST Accuracy (%) | SSTQA Accuracy (%) | SSTQA ROUGE-L (%) |
|---|---|---|---|---|
| NL2SQL (200 Samples) | | | | |
| OpenSearch-SQL | 38.89 | 4.76 | 24.00 | 23.87 |
| Fine-tuning based | | | | |
| TableLLaMA | 35.01 | 32.70 | 40.39 | 26.71 |
| TableLLM | 62.40 | 9.13 | 7.84 | 2.93 |
| Agent based | | | | |
| ReAcTable | 68.00 | 35.88 | 37.24 | 7.49 |
| TAT-LLM | 23.32 | 61.86 | 39.78 | 19.26 |
| VLM based | | | | |
| TableLLaVA | 20.41 | 6.91 | 9.52 | 5.92 |
| mPLUG-DocOwl1.5 | 39.80 | 39.80 | 29.56 | 28.43 |
| Foundation Model | | | | |
| GPT-4o | 60.71 | 74.83 | 62.12 | 43.86 |
| DeepSeekV3 | 69.64 | 63.81 | 62.16 | 46.17 |
| ST-Raptor | 71.17 | 77.59 | 72.39 | 52.19 |
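For readers unfamiliar with the second metric, the sketch below shows one common way to compute a ROUGE-L F-score from the longest common subsequence (LCS) of two token sequences. It is an illustration only: the whitespace tokenization, the balanced F1 weighting, and the function names `lcs_length` and `rouge_l` are assumptions, and the evaluation scripts behind the numbers above may differ.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence of two token lists."""
    # prev[j] is the LCS length between the tokens of `a` seen so far and b[:j].
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(prediction: str, reference: str) -> float:
    """ROUGE-L F1 between a predicted answer and a reference answer.

    Assumption: lowercase whitespace tokenization and equal weighting
    of precision and recall.
    """
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        return 0.0
    lcs = lcs_length(pred_tokens, ref_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(pred_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(rouge_l("Change closure and archiving", "Change closed and archived"))
```

Because the score rewards partial token overlap, a paraphrased answer can earn a non-zero ROUGE-L even when it does not count as an exact match.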

```bash
git clone git@github.com:weAIDB/ST-Raptor.git
cd ST-Raptor
```

Environment.

- Use the following commands to create the conda environment.

```bash
# create virtual environment
conda create -n straptor python=3.10
conda activate straptor
# install required packages
pip install -r requirements.txt
```

- Install the HTML rendering plugin wkhtmltox and the font packages.

```bash
wget https://github.com/wkhtmltopdf/packaging/releases/download/0.12.6.1-2/wkhtmltox_0.12.6.1-2.jammy_amd64.deb
sudo apt-get install -f ./wkhtmltox_0.12.6.1-2.jammy_amd64.deb
sudo apt-get install -y fonts-noto-cjk fonts-wqy-microhei
```

Benchmark

- You can find the SSTQA benchmark in the ./data directory: SSTQA-en and SSTQA-ch.
- You can also find the SSTQA benchmark on Hugging Face: SSTQA Huggingface.

- Change the settings in ./main.py.

```python
# You need to change this
input_jsonl = 'PATH_TO_YOUR_INPUT_JSONL'    # The QA pairs
table_dir = 'PATH_TO_YOUR_TABLE_DIR'        # The corresponding tables
pkl_dir = 'PATH_TO_YOUR_PKL_DIR'            # The directory to store HO-Tree object files
output_jsonl = 'PATH_TO_YOUR_OUTPUT_JSONL'  # The QA results
log_dir = 'PATH_TO_YOUR_LOG_DIR'            # The directory to store log files
```

The Q&A data is stored in a JSONL file. Each record has the following format.

```json
{
  "id": "XXX",
  "table_id": "XXX",
  "query": "XXX",
  "label": "XXX"
}
```

The label field is optional at inference time.

Model Configuration. The model configuration in our paper consists of Deepseek-V3 (LLM API) + InternVL2.5 26B (VLM) + Multilingual-E5-Large-Instruct (embedding model). This configuration requires approximately 160GB of GPU memory in total. You can swap in other models to fit your hardware, or switch to API calls.
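As a sanity check before a run, the JSONL question file described above can be validated with a few lines of Python. This is a minimal sketch and not part of the repository: the helper name `load_qa_records` and the choice to treat `id`, `table_id`, and `query` as required (with `label` optional) are assumptions drawn from the record format shown above.

```python
import json
from pathlib import Path

# Assumed required keys; "label" is optional at inference time.
REQUIRED_FIELDS = ("id", "table_id", "query")


def load_qa_records(jsonl_path: str) -> list[dict]:
    """Read one JSON object per line and check that the required keys exist."""
    records = []
    with Path(jsonl_path).open(encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            line = line.strip()
            if not line:
                continue  # tolerate blank lines between records
            record = json.loads(line)
            missing = [key for key in REQUIRED_FIELDS if key not in record]
            if missing:
                raise ValueError(f"line {line_no}: missing fields {missing}")
            records.append(record)
    return records
```

Calling `load_qa_records(input_jsonl)` before starting a long run surfaces malformed lines early, with the offending line number in the error message.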

You need to set the model configuration in ./utils/constnts.py.

```python
"""Change this for requesting LLM"""
LLM_API_URL = "YOUR_LLM_API_URL"
LLM_API_KEY = "YOUR_LLM_API_KEY"
LLM_MODEL_TYPE = "YOUR_LLM_MODEL_TYPE"

"""Change this for requesting VLM"""
VLM_API_URL = "YOUR_VLM_API_URL"
VLM_API_KEY = "YOUR_VLM_API_KEY"
VLM_MODEL_TYPE = "YOUR_VLM_MODEL_TYPE"

"""Change this for requesting Embedding Model"""
EMBEDDING_TYPE = "api"  # api / local

## If EMBEDDING_TYPE is local
EMBEDDING_MODE_PATH = "YOUR_PATH_TO_MULTILINGULE_E5"

## If EMBEDDING_TYPE is api
EMBEDDING_API_URL = "YOUR_EMBEDDING_API_URL"
EMBEDDING_API_KEY = "YOUR_EMBEDDING_API_KEY"
EMBEDDING_MODEL_TYPE = "YOUR_EMBEDDING_MODEL_TYPE"
```

If you want to use APIs in other formats, please revise the code in ./utils/api_utils.py.
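As a point of reference when adapting ./utils/api_utils.py, the sketch below builds a chat request in the OpenAI-compatible chat-completions format, which many hosted LLM services and local servers such as vLLM accept. It is not taken from the repository: the helper names `build_chat_request` and `send_chat_request` are hypothetical, and it assumes the configured URL is a base URL ending in `/v1`. Whether api_utils.py uses this exact format is also an assumption.

```python
import json
import urllib.request


def build_chat_request(api_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-compatible chat-completions request."""
    # Assumption: api_url is a base URL such as "https://host/v1/".
    url = api_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending makes it easier to swap in a provider with a different payload shape: only the builder needs to change.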

The following example uses a locally deployed VLM and embedding model together with an LLM API.

First, download InternVL2.5 and Multilingual-E5.

- Install the vllm package.

```bash
pip install vllm
```

- Specify the GPUs and deploy the VLM.

```bash
CUDA_VISIBLE_DEVICES=0,1,2,3 python -m vllm.entrypoints.openai.api_server \
    --model=PATH_TO_INTERNVL \
    --served-model-name internvl \
    --port 8138 \
    --trust-remote-code \
    --max-num-batched-tokens 8192 \
    --seed 42 \
    --tensor-parallel-size 4
```

- Set the API configs in ./utils/constnts.py.

```python
"""Change this for requesting LLM"""
LLM_API_URL = "YOUR_LLM_API_URL"        # [Change This]
LLM_API_KEY = "YOUR_LLM_API_KEY"        # [Change This]
LLM_MODEL_TYPE = "YOUR_LLM_MODEL_TYPE"  # [Change This]

"""Change this for requesting VLM"""
VLM_API_URL = "http://localhost:8138/v1/"  # port must match the --port passed to the vLLM server
VLM_API_KEY = "Empty"
VLM_MODEL_TYPE = "internvl"

"""Change this for requesting Embedding Model"""
EMBEDDING_TYPE = "local"  # api / local

## If EMBEDDING_TYPE is local
EMBEDDING_MODE_PATH = "YOUR_PATH_TO_MULTILINGULE_E5"  # [Change This]
```

Once you have completed all the settings above, use the following command to start execution.

```bash
python ./main.py
```

| Question | Ground Truth | TableLLaMA | TableLLM | ReAcTable | TAT-LLM | TableLLaVA | mPLUG-DocOwl1.5 | DeepseekV3 | GPT-4o | ST-Raptor |
|---|---|---|---|---|---|---|---|---|---|---|

| What is the value of the employment service satisfaction indicator in the overall budget performance target table for municipal departments in 2024? | ≧90% | 75.0 | 737 | ≧95% | ≧90% | 80% | ≧90% | ≧90% | ≧90% | ≧90% |

| How many items are there in the drawing specifications? | 15 | 2 | To change the template, you can follow these steps: ... | 7 | 108 | 17 | 4 | 15 | 23 | 15 |

| How many status codes are there in the status code table? | 3 | 3 | To change the template, you can follow these steps: ... | 7 | 5 | 33 | 3 | 3 | 4 | 3 |

| Which month had the lowest expenditure in 2020? | February | Travel expenses | To find the total expenditure amount in June 2019 ... | June 5th | "" | June 5th | Long Boat Festival welfare | February | January | February |

| How many sales records did the brand "Tengyuan Mingju" have in June? | 7 | 3 | "" | 7 | "" | 13 | 5 | 7 | 8 | 7 |

| What was the business hospitality expense of the Comprehensive Management Office in February? | 5106.36 | 5106.36 | "" | "" | SELECT SUM(Amount incurred) FROM DF WHERE Project Content = 'Business entertainment expenses' ... | 3500 | 130,168 | 5106.36 | 5106.36 | 5106.36 |

| What is the proposed funding for the social insurance gap and living allowance for college graduates under the "Three Supports and One Assistance" program? | 587.81 million yuan | 587.81 | To find the number of financially supported personnel ... | To find the proposed investment amount for the social insurance gap and living allowance ... | 587.81 | 1.2 billion | 1140 | 587.81 | 587.81 | 587.81 |

| What is the target value for the number of new urban employment in the 2024 Municipal Department Overall Budget Performance Target Table? | 50000 people | 50000 | To find the number of financially supported personnel in... | The question asks for the indicator value for the number of new urban employment ... | 50000 | 1484 | 50000 | 50000 | 50000 | 50000 |

| How many first-level indicators are there in the performance metrics? | 3 | 10 | 10 | 10 | 10 | 100 | 2 | 3 | 4 | 3 |

| How many third-level indicators are there in the quantity indicators of the performance metrics? | 4 | 2 | To change the template, you can follow these steps: ... | To determine how many information items in the information item comparison... | 12#13#14#15#16#17#18#19#20#21#22#23#24#25#26#27#28#29#30... | 108 | 4 | 8 | 3 | 8 |

| How many points are deducted each time for disciplinary violations? | 0.5 | 0.5 | 0.5 | 0.5 | 0.5 points | For each violation of discipline, 10% of the employee's base salary will be deducted. | 0.5 points | 0.5 | 0.5 | 0.5 |

| How many evaluation items are there for technical management in the key performance review indicators? | 9 | 15 | The item with the highest standard score in the basic performance evaluation indicators is ... | 7 | 16 | 10 | 4 | 0 | 10 | 0 |

| Has the fire safety approval process been completed for the area covered by this tender? | Already registered, provided | already applied for and provided | completed for the bidding area | The item with the highest standard score in the basic performance... | "" | The fire protection application procedure has been completed for the bidding area. | Yes | Construction reported and provided | The fire protection application has been completed. | Construction reported, provided |

| How many responsible departments are involved at the construction site? | 1 | 2 | To determine the employee with the longest tenure in the table, ... | 10 | 11 | 22 | 2 | 1 | 1 | 1 |

| What is the total financial expense for all months? | 1312 | 6500 | 5635559.66 | "" | 64800.0 | 4300000 | 5502 | 1412 | 1412 | 1412 |

| Who is responsible for sealing the reserved holes and sleeves of fire protection facilities and equipment? | winning bidder | winning bidder | "" | To answer the question "Who is responsible for sealing the reserved ... | "" | fire department | the manufacturer | winning bidder | The winning bidder is responsible. | winning bidder |

| Which two products had sales exceeding 3800 in June? | Potato chips, Soy milk | Potato chips, Soy milk | To find the sales volume of soy milk in June, we | SQL: SELECT `product name`, June FROM DF WHERE June > 3800;.... | Potato chips | In June, the sales of the two products were 3800 and 4200. | Potato chips, Soy milk | Potato chips, Soy milk | Potato chips, Soy milk | Potato chips, Soy milk |

| How many times is the sales volume of soy milk in May compared to the sales volume of potato chips in May? | 1.32 | 2.06667 | 5000 | 1.32 | 0.27778 | 1.046154 | 2.2 | 1.32 | 1.32 | 1.32 |

| How much did the sales volume of glucose increase in February compared to January? | 350 | 1150 | 5000 | 350 | 350 | 2300 | 100 | 350 | 350 | 350 |

| Which month has the highest sales volume of nutritious oat cereal? | June | May | June | June | June | March | June | June | June | June |

| What was the sales volume of soy milk in June? | 5000 | 5000 | 5000 | 5000 | 5000 | 1150 | 5000 | 5000 | 5000 | 5000 |

| How many items are there in the specific project? | 10 | 1 | The specific contents of determining the cost calculation object... | 1 | 10 | 19 | 10 | 10 | 12 | 10 |

| What is the description for the "Reported" status in the status code table? | Change coordination, review feasibility by the Change Advisory Board. | The Change Coordination Change Advisory Committee conducts a feasibility review. | To change the template, you can follow these steps: | The table provided does not contain any information about the "declared" status in the change status code table. | The Change Coordination Change Advisory Committee conducts a feasibility review. | The "declared" status is used to indicate that a change has been declared but not yet implemented. | The Change Coordination Advisory Committee conducts a feasibility review. | Change coordination Change Advisory Board conducts feasibility review. | Change coordination Change Advisory Board conducts feasibility review. | Change coordination Change Advisory Board conducts feasibility review. |

| What is the description related to information security requirements in the table of change reasons? | Information security related management is required | Information security related management needs | To change the template, you can refer to the "Change Template" row in the table. | "" | Information security related management needs | The change reason table includes information security needs, which are related to the change request. | Information security relates to the management needs | Information security related management requirements | Information security related management requirements | Information security related management requirements |

| What was the sales volume of glucose in March? | 1150 | 1150 | 5000 | 1150 | 1150 | 1800 | 1150 | 1150 | 1150 | 1150 |

| What is the number of new urban employment positions? | 12,790,000 people | 1279.0 | 1279 | 1279 | 1279 | 1000 people | 1279 | 1279 | 12,79 million people | 1279 |

| How many entries are there in the table of reasons for change? | 10 | 3 | To change the template, you can follow these steps: ... | To determine how many reasons in the change reason table involve business... | 3 | 10 | 4 | 1 | 10 | 1 |

| How many phases are there in the change phase code table? | 6 | 4 | To change the template, you can follow these steps ... | 55 | 5 | 17 | 4 | 6 | 6 | 6 |

| What is the description of the change closure phase in the change phase code table? | Change closed and archived | Change closure phase is the last phase of the change management process | To change the template, you can follow these steps: | The table provided does not contain any information about the "change closure phase" or its description. | Change closure and archiving | The change closure phase is a change phase that is used to indicate that the change has been completed | Change closure and archiving | Change closed and archived | Change closed and archived | Change closed and archived |

| How many more participants are enrolled in the basic old-age insurance for urban and rural residents than in the basic old-age insurance for urban employees at the end of the period? | 9745.25 million people | 53046.1618 | 1279 | 9745.2486 | 9745.2486 | 10000 | 200000 | 9745.2486 | 9745.2486 | 9745.2486 |

| What is the percentage of unemployment insurance fund expenditure out of its fund revenue? | 96.53% | 0.023256 | 1279 | 95.76% | 0.96911 | 55.56 | 33% | 96.53 | 96.53% | 96.53 |

| What is the total number of urban unemployed individuals who have found employment again and the number of individuals with employment difficulties who have found employment in employment and reemployment programs? | 668 | 254 | 1279 | 668 | 668 | 10000 | 584 | 668 | 668 | 66 |

Note: A "" cell in the table indicates that the baseline failed to generate an answer to that question.

For the full results, please refer to the file baseline_output.jsonl.

If you like this project, please cite our paper:

@article{tang2026straptor,

author = {Zirui Tang and Boyu Niu and Xuanhe Zhou and Boxiu Li and Wei Zhou and Jiannan Wang and Guoliang Li and Xinyi Zhang and Fan Wu},

title = {ST-Raptor: LLM-Powered Semi-Structured Table Question Answering},

journal = {Proc. {ACM} Manag. Data},

year = {2026}

}

ST-Raptor@Complex Semi-Structured Table Analysis Community (WeChat group)

This project is licensed under the MIT License - see the LICENSE file for details


Alternative AI tools for ST-Raptor

Similar Open Source Tools

ST-Raptor

ST-Raptor is a powerful open-source tool for analyzing and visualizing spatial-temporal data. It provides a user-friendly interface for exploring complex datasets and generating insightful visualizations. With ST-Raptor, users can easily identify patterns, trends, and anomalies in their spatial-temporal data, making it ideal for researchers, analysts, and data scientists working with geospatial and time-series data.

ROGRAG

ROGRAG is a powerful open-source tool designed for data analysis and visualization. It provides a user-friendly interface for exploring and manipulating datasets, making it ideal for researchers, data scientists, and analysts. With ROGRAG, users can easily import, clean, analyze, and visualize data to gain valuable insights and make informed decisions. The tool supports a wide range of data formats and offers a variety of statistical and visualization tools to help users uncover patterns, trends, and relationships in their data. Whether you are working on exploratory data analysis, statistical modeling, or data visualization, ROGRAG is a versatile tool that can streamline your workflow and enhance your data analysis capabilities.

context7

Context7 is a powerful tool for analyzing and visualizing data in various formats. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With advanced features such as data filtering, aggregation, and customization, Context7 is suitable for both beginners and experienced data analysts. The tool supports a wide range of data sources and formats, making it versatile for different use cases. Whether you are working on exploratory data analysis, data visualization, or data storytelling, Context7 can help you uncover valuable insights and communicate your findings effectively.

arconia

Arconia is a powerful open-source tool for managing and visualizing data in a user-friendly way. It provides a seamless experience for data analysts and scientists to explore, clean, and analyze datasets efficiently. With its intuitive interface and robust features, Arconia simplifies the process of data manipulation and visualization, making it an essential tool for anyone working with data.

axolotl

Axolotl is a lightweight and efficient tool for managing and analyzing large datasets. It provides a user-friendly interface for data manipulation, visualization, and statistical analysis. With Axolotl, users can easily import, clean, and explore data to gain valuable insights and make informed decisions. The tool supports various data formats and offers a wide range of functions for data processing and modeling. Whether you are a data scientist, researcher, or business analyst, Axolotl can help streamline your data workflows and enhance your data analysis capabilities.

datatune

Datatune is a data analysis tool designed to help users explore and analyze datasets efficiently. It provides a user-friendly interface for importing, cleaning, visualizing, and modeling data. With Datatune, users can easily perform tasks such as data preprocessing, feature engineering, model selection, and evaluation. The tool offers a variety of statistical and machine learning algorithms to support data analysis tasks. Whether you are a data scientist, analyst, or researcher, Datatune can streamline your data analysis workflow and help you derive valuable insights from your data.

simple-data-analysis

Simple data analysis (SDA) is an easy-to-use and high-performance TypeScript library for data analysis. It can be used with tabular and geospatial data. The library is maintained by Nael Shiab, a computational journalist and senior data producer for CBC News. SDA is based on DuckDB, a fast in-process analytical database, and it sends SQL queries to be executed by DuckDB. The library provides methods inspired by Pandas (Python) and the Tidyverse (R), and it also supports writing custom SQL queries and processing data with JavaScript. Additionally, SDA offers methods for leveraging large language models (LLMs) for data cleaning, extraction, categorization, and natural language interaction, as well as for embeddings and semantic search.

atlas

Atlas is a powerful data visualization tool that allows users to create interactive charts and graphs from their datasets. It provides a user-friendly interface for exploring and analyzing data, making it ideal for both beginners and experienced data analysts. With Atlas, users can easily customize the appearance of their visualizations, add filters and drill-down capabilities, and share their insights with others. The tool supports a wide range of data formats and offers various chart types to suit different data visualization needs. Whether you are looking to create simple bar charts or complex interactive dashboards, Atlas has you covered.

vizra-adk

Vizra-ADK is a data visualization tool that allows users to create interactive and customizable visualizations for their data. With a user-friendly interface and a wide range of customization options, Vizra-ADK makes it easy for users to explore and analyze their data in a visually appealing way. Whether you're a data scientist looking to create informative charts and graphs, or a business analyst wanting to present your findings in a compelling way, Vizra-ADK has you covered. The tool supports various data formats and provides features like filtering, sorting, and grouping to help users make sense of their data quickly and efficiently.

clewdr

Clewdr is a collaborative platform for data analysis and visualization. It allows users to upload datasets, perform various data analysis tasks, and create interactive visualizations. The platform supports multiple users working on the same project simultaneously, enabling real-time collaboration and sharing of insights. Clewdr is designed to streamline the data analysis process and facilitate communication among team members. With its user-friendly interface and powerful features, Clewdr is suitable for data scientists, analysts, researchers, and anyone working with data to gain valuable insights and make informed decisions.

turftopic

Turftopic is a Python library that provides tools for sentiment analysis and topic modeling of text data. It allows users to analyze large volumes of text data to extract insights on sentiment and topics. The library includes functions for preprocessing text data, performing sentiment analysis using machine learning models, and conducting topic modeling using algorithms such as Latent Dirichlet Allocation (LDA). Turftopic is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data analysts.

dranet

Dranet is a Python library for analyzing and visualizing data from neural networks. It provides tools for interpreting model predictions, understanding feature importance, and evaluating model performance. With Dranet, users can gain insights into how neural networks make decisions and improve model transparency and interpretability.

CrossIntelligence

CrossIntelligence is a powerful tool for data analysis and visualization. It allows users to easily connect and analyze data from multiple sources, providing valuable insights and trends. With a user-friendly interface and customizable features, CrossIntelligence is suitable for both beginners and advanced users in various industries such as marketing, finance, and research.

dyad

Dyad is a lightweight Python library for analyzing dyadic data, which involves pairs of individuals and their interactions. It provides functions for computing various network metrics, visualizing network structures, and conducting statistical analyses on dyadic data. Dyad is designed to be user-friendly and efficient, making it suitable for researchers and practitioners working with relational data in fields such as social network analysis, communication studies, and psychology.

xorq

Xorq (formerly LETSQL) is a data processing library built on top of Ibis and DataFusion to write multi-engine data workflows. It provides a flexible and powerful tool for processing and analyzing data from various sources, enabling users to create complex data pipelines and perform advanced data transformations.

youtu-graphrag

Youtu-GraphRAG is a vertically unified agentic paradigm that connects the entire framework based on graph schema, allowing seamless domain transfer with minimal intervention. It introduces key innovations like schema-guided hierarchical knowledge tree construction, dually-perceived community detection, agentic retrieval, advanced construction and reasoning capabilities, fair anonymous dataset 'AnonyRAG', and unified configuration management. The framework demonstrates robustness with lower token cost and higher accuracy compared to state-of-the-art methods, enabling enterprise-scale deployment with minimal manual intervention for new domains.

For similar tasks

Awesome-Segment-Anything

Awesome-Segment-Anything is a powerful tool for segmenting and extracting information from various types of data. It provides a user-friendly interface to easily define segmentation rules and apply them to text, images, and other data formats. The tool supports both supervised and unsupervised segmentation methods, allowing users to customize the segmentation process based on their specific needs. With its versatile functionality and intuitive design, Awesome-Segment-Anything is ideal for data analysts, researchers, content creators, and anyone looking to efficiently extract valuable insights from complex datasets.

Time-LLM

Time-LLM is a reprogramming framework that repurposes large language models (LLMs) for time series forecasting. It allows users to treat time series analysis as a 'language task' and effectively leverage pre-trained LLMs for forecasting. The framework involves reprogramming time series data into text representations and providing declarative prompts to guide the LLM reasoning process. Time-LLM supports various backbone models such as Llama-7B, GPT-2, and BERT, offering flexibility in model selection. The tool provides a general framework for repurposing language models for time series forecasting tasks.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

Transformers_And_LLM_Are_What_You_Dont_Need

Transformers_And_LLM_Are_What_You_Dont_Need is a repository that explores the limitations of transformers in time series forecasting. It contains a collection of papers, articles, and theses discussing the effectiveness of transformers and LLMs in this domain. The repository aims to provide insights into why transformers may not be the best choice for time series forecasting tasks.

pytorch-forecasting

PyTorch Forecasting is a PyTorch-based package for time series forecasting with state-of-the-art network architectures. It offers a high-level API for training networks on pandas data frames and utilizes PyTorch Lightning for scalable training on GPUs and CPUs. The package aims to simplify time series forecasting with neural networks by providing a flexible API for professionals and default settings for beginners. It includes a timeseries dataset class, base model class, multiple neural network architectures, multi-horizon timeseries metrics, and hyperparameter tuning with optuna. PyTorch Forecasting is built on pytorch-lightning for easy training on various hardware configurations.

spider

Spider is a high-performance web crawler and indexer designed to handle data curation workloads efficiently. It offers features such as concurrency, streaming, decentralization, headless Chrome rendering, HTTP proxies, cron jobs, subscriptions, smart mode, blacklisting, whitelisting, budgeting depth, dynamic AI prompt scripting, CSS scraping, and more. Users can easily get started with the Spider Cloud hosted service or set up local installations with spider-cli. The tool supports integration with Node.js and Python for additional flexibility. With a focus on speed and scalability, Spider is ideal for extracting and organizing data from the web.

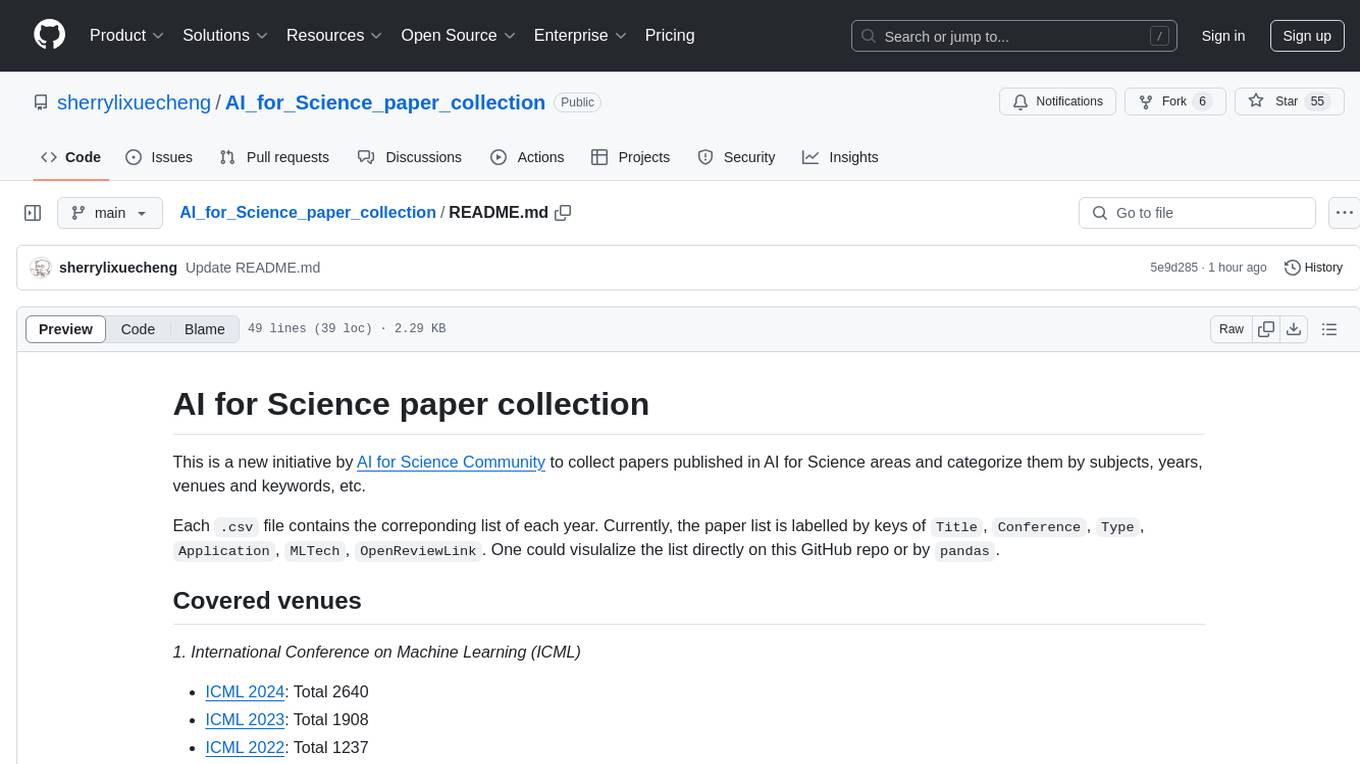

AI_for_Science_paper_collection

AI for Science paper collection is an initiative by AI for Science Community to collect and categorize papers in AI for Science areas by subjects, years, venues, and keywords. The repository contains `.csv` files with paper lists labeled by keys such as `Title`, `Conference`, `Type`, `Application`, `MLTech`, `OpenReviewLink`. It covers top conferences like ICML, NeurIPS, and ICLR. Volunteers can contribute by updating existing `.csv` files or adding new ones for uncovered conferences/years. The initiative aims to track the increasing trend of AI for Science papers and analyze trends in different applications.

pytorch-forecasting

PyTorch Forecasting is a PyTorch-based package designed for state-of-the-art timeseries forecasting using deep learning architectures. It offers a high-level API and leverages PyTorch Lightning for efficient training on GPU or CPU with automatic logging. The package aims to simplify timeseries forecasting tasks by providing a flexible API for professionals and user-friendly defaults for beginners. It includes features such as a timeseries dataset class for handling data transformations, missing values, and subsampling, various neural network architectures optimized for real-world deployment, multi-horizon timeseries metrics, and hyperparameter tuning with optuna. Built on pytorch-lightning, it supports training on CPUs, single GPUs, and multiple GPUs out-of-the-box.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.