Transformers_And_LLM_Are_What_You_Dont_Need

The best repository showing why transformers might not be the answer for time series forecasting, and showcasing the best state-of-the-art (SOTA) non-transformer models.

Stars: 644

Transformers_And_LLM_Are_What_You_Dont_Need is a repository that explores the limitations of transformers in time series forecasting. It contains a collection of papers, articles, and theses discussing the effectiveness of transformers and LLMs in this domain. The repository aims to provide insights into why transformers may not be the best choice for time series forecasting tasks.

README:

The best repository showing why transformers don’t work in time series forecasting

- Problems in the current research on forecasting with transformers, foundational models, etc. by Christoph Bergmeir

- Are Transformers Effective for Time Series Forecasting? by Ailing Zeng, Muxi Chen, Lei Zhang, Qiang Xu (The Chinese University of Hong Kong, International Digital Economy Academy (IDEA), 2022) code 🔥🔥🔥🔥🔥

- LLMs and foundational models for time series forecasting: They are not (yet) as good as you may hope by Christoph Bergmeir (2023) 🔥🔥🔥🔥🔥

- Transformers Are What You Do Not Need by Valeriy Manokhin (2023) 🔥🔥🔥🔥🔥

- Time Series Foundational Models: Their Role in Anomaly Detection and Prediction (2024) code

- Deep Learning is What You Do Not Need by Valeriy Manokhin (2022) 🔥🔥🔥🔥🔥

- Why do Transformers suck at Time Series Forecasting by Devansh (2023)

- Frequency-domain MLPs are More Effective Learners in Time Series Forecasting by Kun Yi, Qi Zhang, Wei Fan, Shoujin Wang, Pengyang Wang, Hui He, Defu Lian, Ning An, Longbing Cao, Zhendong Niu (Beijing Institute of Technology, Tongji University, University of Oxford, University of Technology Sydney, University of Macau, USTC, HeFei University of Technology, Macquarie University, 2023) code 🔥🔥🔥🔥🔥

- Forecasting CPI inflation under economic policy and geo-political uncertainties by Shovon Sengupta, Tanujit Chakraborty, Sunny Kumar Singh (Fidelity Investments, Sorbonne University, BITS Pilani Hyderabad, 2024) 🔥🔥🔥🔥🔥

- Revisiting Long-term Time Series Forecasting: An Investigation on Linear Mapping by Zhe Li, Shiyi Qi, Yiduo Li, Zenglin Xu (Harbin Institute of Technology, Shenzhen, 2023) code

- SCINet: Time Series Modeling and Forecasting with Sample Convolution and Interaction by Minhao Liu, Ailing Zeng, Muxi Chen, Zhijian Xu, Qiuxia Lai, Lingna Ma, Qiang Xu (The Chinese University of Hong Kong, 2022) code

- WinNet: Time Series Forecasting with a Window-Enhanced Period Extracting and Interacting by Wenjie Ou, Dongyue Guo, Zheng Zhang, Zhishuo Zhao, Yi Lin (Sichuan University, China, 2023)

- A Multi-Scale Decomposition MLP-Mixer for Time Series Analysis by Shuhan Zhong, Sizhe Song, Guanyao Li, Weipeng Zhuo, Yang Liu, S.-H. Gary Chan (The Hong Kong University of Science and Technology, Hong Kong, 2023) code 🔥🔥🔥🔥🔥

- TimesNet: Temporal 2D-Variation Modeling for General Time Series Analysis by Haixu Wu, Tengge Hu, Yong Liu, Hang Zhou, Jianmin Wang, Mingsheng Long (Tsinghua University, 2023) code 🔥🔥🔥🔥🔥

- MTS-Mixers: Multivariate Time Series Forecasting via Factorized Temporal and Channel Mixing code 🔥🔥🔥🔥🔥

- Reversible Instance Normalization for Accurate Time-Series Forecasting against Distribution Shift by Taesung Kim, Jinhee Kim, Yunwon Tae, Cheonbok Park, Jang-Ho Choi, Jaegul Choo (KAIST AI, VUNO, NAVER Corp., ETRI, ICLR 2022) code project page 🔥🔥🔥🔥🔥

- WINNet: Wavelet-inspired Invertible Network for Image Denoising by Wenjie Ou, Dongyue Guo, Zheng Zhang, Zhishuo Zhao, Yi Lin (College of Computer Science, Sichuan University, China) code 🔥🔥🔥🔥🔥

- MLinear: Rethink the Linear Model for Time-series Forecasting by Wei Li, Xiangxu Meng, Chuhao Chen, Jianing Chen (Harbin Engineering University, 2023) 🔥🔥🔥🔥🔥

- Minimalist Traffic Prediction: Linear Layer Is All You Need by Wenying Duan, Hong Rao, Wei Huang, Xiaoxi He (Nanchang University, University of Macau, 2023)

- An End-to-End Time Series Model for Simultaneous Imputation and Forecast by Trang H. Tran, Lam M. Nguyen, Kyongmin Yeo, Nam Nguyen, Dzung Phan, Roman Vaculin, Jayant Kalagnanam (School of Operations Research and Information Engineering, Cornell University; IBM Research, Thomas J. Watson Research Center, Yorktown Heights, NY, USA, 2023) 🔥🔥🔥🔥🔥

- Long-term Forecasting with TiDE: Time-series Dense Encoder by Abhimanyu Das, Weihao Kong, Andrew Leach, Shaan Mathur, Rajat Sen, Rose Yu (Google Cloud, University of California, San Diego, 2023)

- TSMixer: Lightweight MLP-Mixer Model for Multivariate Time Series Forecasting by Vijay Ekambaram, Arindam Jati, Nam Nguyen, Phanwadee Sinthong, Jayant Kalagnanam (IBM Research, 2023) code code

- Koopa: Learning Non-stationary Time Series Dynamics with Koopman Predictors by Yong Liu, Chenyu Li, Jianmin Wang, Mingsheng Long (Tsinghua University, 2023) code 🔥🔥🔥🔥🔥

- Attractor Memory for Long-Term Time Series Forecasting: A Chaos Perspective by Jiaxi Hu, Yuehong Hu, Wei Chen, Ming Jin, Shirui Pan, Qingsong Wen, Yuxuan Liang (2024) 🔥🔥🔥🔥🔥

- When and How: Learning Identifiable Latent States for Nonstationary Time Series Forecasting (2024) 🔥🔥🔥🔥🔥

- Deep Coupling Network For Multivariate Time Series Forecasting (2024)

- Linear Dynamics-embedded Neural Network for Long-Sequence Modeling by Tongyi Liang and Han-Xiong Li (City University of Hong Kong, 2024).

- PDETime: Rethinking Long-Term Multivariate Time Series Forecasting from the perspective of partial differential equations (2024)

- CATS: Enhancing Multivariate Time Series Forecasting by Constructing Auxiliary Time Series as Exogenous Variables (2024) 🔥🔥🔥🔥🔥

- Is Mamba Effective for Time Series Forecasting? code (2024) 🔥🔥🔥🔥🔥

- STG-Mamba: Spatial-Temporal Graph Learning via Selective State Space Model (2024)

- TimeMachine: A Time Series is Worth 4 Mambas for Long-term Forecasting code (2024) 🔥🔥🔥🔥🔥

- FITS: Modeling Time Series with 10k Parameters code (2023)

- TSLANet: Rethinking Transformers for Time Series Representation Learning code (2024) 🔥🔥🔥🔥🔥

- WFTNet: Exploiting Global and Local Periodicity in Long-term Time Series Forecasting code (2024) 🔥🔥🔥🔥🔥

- SiMBA: Simplified Mamba-based Architecture for Vision and Multivariate Time series code (2024) 🔥🔥🔥🔥🔥

- SOFTS: Efficient Multivariate Time Series Forecasting with Series-Core Fusion code (2024) 🔥🔥🔥🔥🔥

- Integrating Mamba and Transformer for Long-Short Range Time Series Forecasting code (2024) 🔥🔥🔥🔥🔥

- SparseTSF: Modeling Long-term Time Series Forecasting with 1k Parameters (2024) 🔥🔥🔥🔥🔥

- Boosting MLPs with a Coarsening Strategy for Long-Term Time Series Forecasting (2024) 🔥🔥🔥🔥🔥

- Multi-Scale Dilated Convolution Network for Long-Term Time Series Forecasting (2024)

- ModernTCN: A Modern Pure Convolution Structure for General Time Series Analysis code (ICLR 2024 Spotlight)

- Adaptive Extraction Network for Multivariate Long Sequence Time-Series Forecasting (2024) 🔥🔥🔥🔥🔥

- Interpretable Multivariate Time Series Forecasting Using Neural Fourier Transform (2024) 🔥🔥🔥🔥🔥

- Periodicity Decoupling Framework for Long-Term Series Forecasting code (2024) 🔥🔥🔥🔥🔥

- Chimera: Effectively Modeling Multivariate Time Series with 2-Dimensional State Space Models (2024) 🔥🔥🔥🔥🔥

- Time Evidence Fusion Network: Multi-source View in Long-Term Time Series Forecasting code (2024)

- ATFNet: Adaptive Time-Frequency Ensembled Network for Long-term Time Series Forecasting code (2024) 🔥🔥🔥🔥

- C-Mamba: Channel Correlation Enhanced State Space Models for Multivariate Time Series Forecasting (2024) 🔥🔥🔥🔥

- The Power of Minimalism in Long Sequence Time-series Forecasting

- WindowMixer: Intra-Window and Inter-Window Modeling for Time Series Forecasting

- xLSTMTime: Long-term Time Series Forecasting With xLSTM code (2024)

- Not All Frequencies Are Created Equal: Towards a Dynamic Fusion of Frequencies in Time-Series Forecasting (2024) 🔥🔥🔥🔥

- FMamba: Mamba based on Fast-attention for Multivariate Time-series Forecasting (2024)

- Long Input Sequence Network for Long Time Series Forecasting (2024)

- Time-series Forecasting with Tri-Decomposition Linear-based Modelling and Series-wise Metrics (2024) 🔥🔥🔥🔥

- An Evaluation of Standard Statistical Models and LLMs on Time Series Forecasting (2024) LLM 🔥🔥🔥🔥

- Macroeconomic Forecasting with Large Language Models (2024) LLM 🔥🔥🔥🔥

- Language Models Still Struggle to Zero-shot Reason about Time Series (2024) LLM 🔥🔥🔥🔥

- KAN4TSF: Are KAN and KAN-based models Effective for Time Series Forecasting? (2024)

- Simplified Mamba with Disentangled Dependency Encoding for Long-Term Time Series Forecasting (2024)

- Transformers are Expressive, But Are They Expressive Enough for Regression? (2024): a paper showing that transformers struggle to reliably approximate smooth functions

- MixLinear: Extreme Low Resource Multivariate Time Series Forecasting with 0.1K Parameters

- MMFNet: Multi-Scale Frequency Masking Neural Network for Multivariate Time Series Forecasting

- Neural Fourier Modelling: A Highly Compact Approach to Time-Series Analysis code

- CMMamba: channel mixing Mamba for time series forecasting

- EffiCANet: Efficient Time Series Forecasting with Convolutional Attention

- Curse of Attention: A Kernel-Based Perspective for Why Transformers Fail to Generalize on Time Series Forecasting and Beyond

- CycleNet: Enhancing Time Series Forecasting through Modeling Periodic Patterns code

- Are Language Models Actually Useful for Time Series Forecasting?

- FTLinear: MLP based on Fourier Transform for Multivariate Time-series Forecasting

- WPMixer: Efficient Multi-Resolution Mixing for Long-Term Time Series Forecasting code

- Zero Shot Time Series Forecasting Using Kolmogorov Arnold Networks

- BEAT: Balanced Frequency Adaptive Tuning for Long-Term Time-Series Forecasting (2025) 🔥🔥🔥🔥🔥

- A Multi-Task Learning Approach to Linear Multivariate Forecasting (2025)

- Benchmarking Time Series Forecasting Models: From Statistical Techniques to Foundation Models in Real-World Applications (2025)

- Day-ahead demand response potential prediction in residential buildings with HITSKAN: A fusion of Kolmogorov-Arnold networks and N-HiTS (2025)

- Do We Really Need Deep Learning Models for Time Series Forecasting? (2021)

- Two Steps Forward and One Behind: Rethinking Time Series Forecasting with Deep Learning (2023)

- Are Self-Attentions Effective for Time Series Forecasting? (2024)

- What Matters in Transformers? Not All Attention is Needed (2024)

- Performance of Zero-Shot Time Series Foundation Models on Cloud Data (2025) 🔥🔥🔥🔥🔥

- Position: There are no Champions in Long-Term Time Series Forecasting (2025)

- FinTSB: A Comprehensive and Practical Benchmark for Financial Time Series Forecasting

- Cherry-Picking in Time Series Forecasting: How to Select Datasets to Make Your Model Shine

- TFB: Towards Comprehensive and Fair Benchmarking of Time Series Forecasting Methods 🔥🔥🔥🔥🔥

- DUET: Dual Clustering Enhanced Multivariate Time Series Forecasting

- Can LLMs Understand Time Series Anomalies? code

- EMForecaster: A Deep Learning Framework for Time Series Forecasting in Wireless Networks with Distribution-Free Uncertainty Quantification

- Times2D: Multi-Period Decomposition and Derivative Mapping for General Time Series Forecasting code

- TimeGPT vs TiDE: Is Zero-Shot Inference the Future of Forecasting or Just Hype? by Luís Roque and Rafael Guedes (2024) 🔥🔥🔥🔥🔥

- TimeGPT-1, discussion on Hacker News (2023)

- TimeGPT: The first Generative Pretrained Transformer for Time-Series Forecasting
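
Several of the papers above, notably Zeng et al.'s "Are Transformers Effective for Time Series Forecasting?", argue that a plain linear map over the lookback window is a strong baseline. The following is a minimal pure-Python sketch of a DLinear-style forward pass, written under stated assumptions. It is not the authors' reference code. The series is split into a moving-average trend and a seasonal remainder, and each part goes through its own linear map from lookback length to forecast horizon, after which the two outputs are summed. The function names, the default kernel size of 25, and the hand-set weights in the usage lines are illustrative assumptions. In practice the weights are learned from data, for example by gradient descent.

```python
from typing import List, Sequence, Tuple


def moving_average(x: Sequence[float], kernel_size: int = 25) -> List[float]:
    """Centred moving average (the trend). Edges are padded by repeating the
    first/last value so the output has the same length as the input."""
    if not x:
        raise ValueError("input series is empty")
    if kernel_size % 2 == 0:
        raise ValueError("kernel_size must be odd")
    pad = (kernel_size - 1) // 2
    padded = [x[0]] * pad + list(x) + [x[-1]] * pad
    return [sum(padded[i:i + kernel_size]) / kernel_size for i in range(len(x))]


def decompose(x: Sequence[float], kernel_size: int = 25) -> Tuple[List[float], List[float]]:
    """Split x into (seasonal, trend) so that seasonal[i] + trend[i] == x[i]."""
    trend = moving_average(x, kernel_size)
    seasonal = [xi - ti for xi, ti in zip(x, trend)]
    return seasonal, trend


def linear_map(weights: Sequence[Sequence[float]], bias: Sequence[float],
               x: Sequence[float]) -> List[float]:
    """y[h] = sum_l weights[h][l] * x[l] + bias[h]; weights has shape (horizon, lookback)."""
    if any(len(row) != len(x) for row in weights) or len(bias) != len(weights):
        raise ValueError("weight/bias shapes do not match the lookback window")
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def dlinear_forward(x: Sequence[float],
                    w_seasonal, b_seasonal, w_trend, b_trend,
                    kernel_size: int = 25) -> List[float]:
    """Hypothetical DLinear-style forecast: one linear map per component, then sum."""
    seasonal, trend = decompose(x, kernel_size)
    y_seasonal = linear_map(w_seasonal, b_seasonal, seasonal)
    y_trend = linear_map(w_trend, b_trend, trend)
    return [a + b for a, b in zip(y_seasonal, y_trend)]


if __name__ == "__main__":
    # Hand-set (not learned) weights: lookback 4, horizon 2, each row copies the last input.
    w = [[0.0, 0.0, 0.0, 1.0], [0.0, 0.0, 0.0, 1.0]]
    b = [0.0, 0.0]
    print(dlinear_forward([1.0, 2.0, 3.0, 4.0], w, b, w, b, kernel_size=3))
```

With these last-value weights, each component forecasts its own final value. Because the seasonal and trend parts sum back to the input, the model reduces to a naive "repeat the last observation" forecast, which is a useful sanity check before training real weights.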

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Transformers_And_LLM_Are_What_You_Dont_Need

Similar Open Source Tools

Transformers_And_LLM_Are_What_You_Dont_Need

Transformers_And_LLM_Are_What_You_Dont_Need is a repository that explores the limitations of transformers in time series forecasting. It contains a collection of papers, articles, and theses discussing the effectiveness of transformers and LLMs in this domain. The repository aims to provide insights into why transformers may not be the best choice for time series forecasting tasks.

WeatherGFT

WeatherGFT is a physics-AI hybrid model designed to generalize weather forecasts to finer-grained temporal scales beyond the training dataset. It incorporates physical partial differential equations (PDEs) into neural networks to simulate fine-grained physical evolution and correct biases. The model achieves state-of-the-art performance in forecasting tasks at different time scales, from nowcasting to medium-range forecasts, by utilizing a lead time-aware training framework and a carefully designed PDE kernel. WeatherGFT bridges the gap between nowcast and medium-range forecast by extending forecasting abilities to predict accurately at a 30-minute time scale.

Medical_Image_Analysis

The Medical_Image_Analysis repository focuses on X-ray image-based medical report generation using large language models. It provides pre-trained models and benchmarks for CheXpert Plus dataset, context sample retrieval for X-ray report generation, and pre-training on high-definition X-ray images. The goal is to enhance diagnostic accuracy and reduce patient wait times by improving X-ray report generation through advanced AI techniques.

Awesome-LLM-Quantization

Awesome-LLM-Quantization is a curated list of resources related to quantization techniques for Large Language Models (LLMs). Quantization is a crucial step in deploying LLMs on resource-constrained devices, such as mobile phones or edge devices, by reducing the model's size and computational requirements.

awesome-generative-ai-guide

This repository serves as a comprehensive hub for updates on generative AI research, interview materials, notebooks, and more. It includes monthly best GenAI papers list, interview resources, free courses, and code repositories/notebooks for developing generative AI applications. The repository is regularly updated with the latest additions to keep users informed and engaged in the field of generative AI.

vector-search-class-notes

The 'vector-search-class-notes' repository contains class materials for a course on Long Term Memory in AI, focusing on vector search and databases. The course covers theoretical foundations and practical implementation of vector search applications, algorithms, and systems. It explores the intersection of Artificial Intelligence and Database Management Systems, with topics including text embeddings, image embeddings, low dimensional vector search, dimensionality reduction, approximate nearest neighbor search, clustering, quantization, and graph-based indexes. The repository also includes information on the course syllabus, project details, selected literature, and contributions from industry experts in the field.

miles-credit

CREDIT is an open software platform for training and deploying AI atmospheric prediction models. It offers fast models with flexible configuration options for input data and neural network architecture. The user-friendly interface enables quick setup and iteration. Developed by the MILES group and NSF National Center for Atmospheric Research, CREDIT combines advanced AI/ML with atmospheric science expertise. It provides a stable release with various models, training, and deployment options, with ongoing development. Detailed documentation is available for installation, training, deployment, config file interpretation, and API usage.

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

interpret

InterpretML is an open-source package that incorporates state-of-the-art machine learning interpretability techniques under one roof. With this package, you can train interpretable glassbox models and explain blackbox systems. InterpretML helps you understand your model's global behavior, or understand the reasons behind individual predictions. Interpretability is essential for: - Model debugging - Why did my model make this mistake? - Feature Engineering - How can I improve my model? - Detecting fairness issues - Does my model discriminate? - Human-AI cooperation - How can I understand and trust the model's decisions? - Regulatory compliance - Does my model satisfy legal requirements? - High-risk applications - Healthcare, finance, judicial, ...

Slow_Thinking_with_LLMs

STILL is an open-source project exploring slow-thinking reasoning systems, focusing on o1-like reasoning systems. The project has released technical reports on enhancing LLM reasoning with reward-guided tree search algorithms and implementing slow-thinking reasoning systems using an imitate, explore, and self-improve framework. The project aims to replicate the capabilities of industry-level reasoning systems by fine-tuning reasoning models with long-form thought data and iteratively refining training datasets.

xlstm-jax

The xLSTM-jax repository contains code for training and evaluating the xLSTM model on language modeling using JAX. xLSTM is a Recurrent Neural Network architecture that improves upon the original LSTM through Exponential Gating, normalization, stabilization techniques, and a Matrix Memory. It is optimized for large-scale distributed systems with performant triton kernels for faster training and inference.

AlphaFold3

AlphaFold3 is an implementation of the Alpha Fold 3 model in PyTorch for accurate structure prediction of biomolecular interactions. It includes modules for genetic diffusion and full model examples for forward pass computations. The tool allows users to generate random pair and single representations, operate on atomic coordinates, and perform structure predictions based on input tensors. The implementation also provides functionalities for training and evaluating the model.

llm-course

The LLM course is divided into three parts: 1. 🧩 **LLM Fundamentals** covers essential knowledge about mathematics, Python, and neural networks. 2. 🧑🔬 **The LLM Scientist** focuses on building the best possible LLMs using the latest techniques. 3. 👷 **The LLM Engineer** focuses on creating LLM-based applications and deploying them. For an interactive version of this course, I created two **LLM assistants** that will answer questions and test your knowledge in a personalized way: * 🤗 **HuggingChat Assistant**: Free version using Mixtral-8x7B. * 🤖 **ChatGPT Assistant**: Requires a premium account. ## 📝 Notebooks A list of notebooks and articles related to large language models. ### Tools | Notebook | Description | Notebook | |----------|-------------|----------| | 🧐 LLM AutoEval | Automatically evaluate your LLMs using RunPod |  | | 🥱 LazyMergekit | Easily merge models using MergeKit in one click. |  | | 🦎 LazyAxolotl | Fine-tune models in the cloud using Axolotl in one click. |  | | ⚡ AutoQuant | Quantize LLMs in GGUF, GPTQ, EXL2, AWQ, and HQQ formats in one click. |  | | 🌳 Model Family Tree | Visualize the family tree of merged models. |  | | 🚀 ZeroSpace | Automatically create a Gradio chat interface using a free ZeroGPU. |  |

awesome-ml-gen-ai-elixir

A curated list of Machine Learning (ML) and Generative AI (GenAI) packages and resources for the Elixir programming language. It includes core tools for data exploration, traditional machine learning algorithms, deep learning models, computer vision libraries, generative AI tools, livebooks for interactive notebooks, and various resources such as books, videos, and articles. The repository aims to provide a comprehensive overview for experienced Elixir developers and ML/AI practitioners exploring different ecosystems.

For similar tasks

Awesome-Segment-Anything

Awesome-Segment-Anything is a powerful tool for segmenting and extracting information from various types of data. It provides a user-friendly interface to easily define segmentation rules and apply them to text, images, and other data formats. The tool supports both supervised and unsupervised segmentation methods, allowing users to customize the segmentation process based on their specific needs. With its versatile functionality and intuitive design, Awesome-Segment-Anything is ideal for data analysts, researchers, content creators, and anyone looking to efficiently extract valuable insights from complex datasets.

Time-LLM

Time-LLM is a reprogramming framework that repurposes large language models (LLMs) for time series forecasting. It allows users to treat time series analysis as a 'language task' and effectively leverage pre-trained LLMs for forecasting. The framework involves reprogramming time series data into text representations and providing declarative prompts to guide the LLM reasoning process. Time-LLM supports various backbone models such as Llama-7B, GPT-2, and BERT, offering flexibility in model selection. The tool provides a general framework for repurposing language models for time series forecasting tasks.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

Transformers_And_LLM_Are_What_You_Dont_Need

Transformers_And_LLM_Are_What_You_Dont_Need is a repository that explores the limitations of transformers in time series forecasting. It contains a collection of papers, articles, and theses discussing the effectiveness of transformers and LLMs in this domain. The repository aims to provide insights into why transformers may not be the best choice for time series forecasting tasks.

pytorch-forecasting

PyTorch Forecasting is a PyTorch-based package for time series forecasting with state-of-the-art network architectures. It offers a high-level API for training networks on pandas data frames and utilizes PyTorch Lightning for scalable training on GPUs and CPUs. The package aims to simplify time series forecasting with neural networks by providing a flexible API for professionals and default settings for beginners. It includes a timeseries dataset class, base model class, multiple neural network architectures, multi-horizon timeseries metrics, and hyperparameter tuning with optuna. PyTorch Forecasting is built on pytorch-lightning for easy training on various hardware configurations.

spider

Spider is a high-performance web crawler and indexer designed to handle data curation workloads efficiently. It offers features such as concurrency, streaming, decentralization, headless Chrome rendering, HTTP proxies, cron jobs, subscriptions, smart mode, blacklisting, whitelisting, budgeting depth, dynamic AI prompt scripting, CSS scraping, and more. Users can easily get started with the Spider Cloud hosted service or set up local installations with spider-cli. The tool supports integration with Node.js and Python for additional flexibility. With a focus on speed and scalability, Spider is ideal for extracting and organizing data from the web.

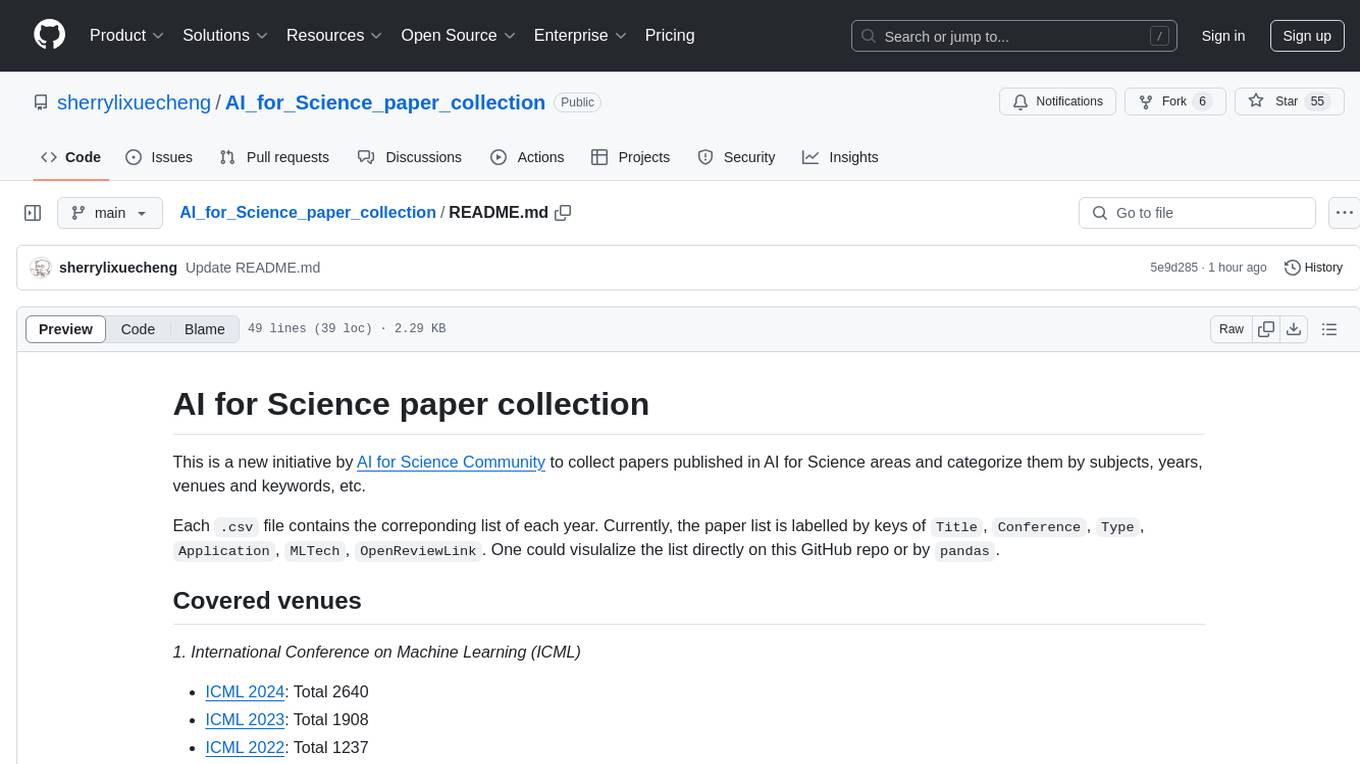

AI_for_Science_paper_collection

AI for Science paper collection is an initiative by AI for Science Community to collect and categorize papers in AI for Science areas by subjects, years, venues, and keywords. The repository contains `.csv` files with paper lists labeled by keys such as `Title`, `Conference`, `Type`, `Application`, `MLTech`, `OpenReviewLink`. It covers top conferences like ICML, NeurIPS, and ICLR. Volunteers can contribute by updating existing `.csv` files or adding new ones for uncovered conferences/years. The initiative aims to track the increasing trend of AI for Science papers and analyze trends in different applications.

pytorch-forecasting

PyTorch Forecasting is a PyTorch-based package designed for state-of-the-art timeseries forecasting using deep learning architectures. It offers a high-level API and leverages PyTorch Lightning for efficient training on GPU or CPU with automatic logging. The package aims to simplify timeseries forecasting tasks by providing a flexible API for professionals and user-friendly defaults for beginners. It includes features such as a timeseries dataset class for handling data transformations, missing values, and subsampling, various neural network architectures optimized for real-world deployment, multi-horizon timeseries metrics, and hyperparameter tuning with optuna. Built on pytorch-lightning, it supports training on CPUs, single GPUs, and multiple GPUs out-of-the-box.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.