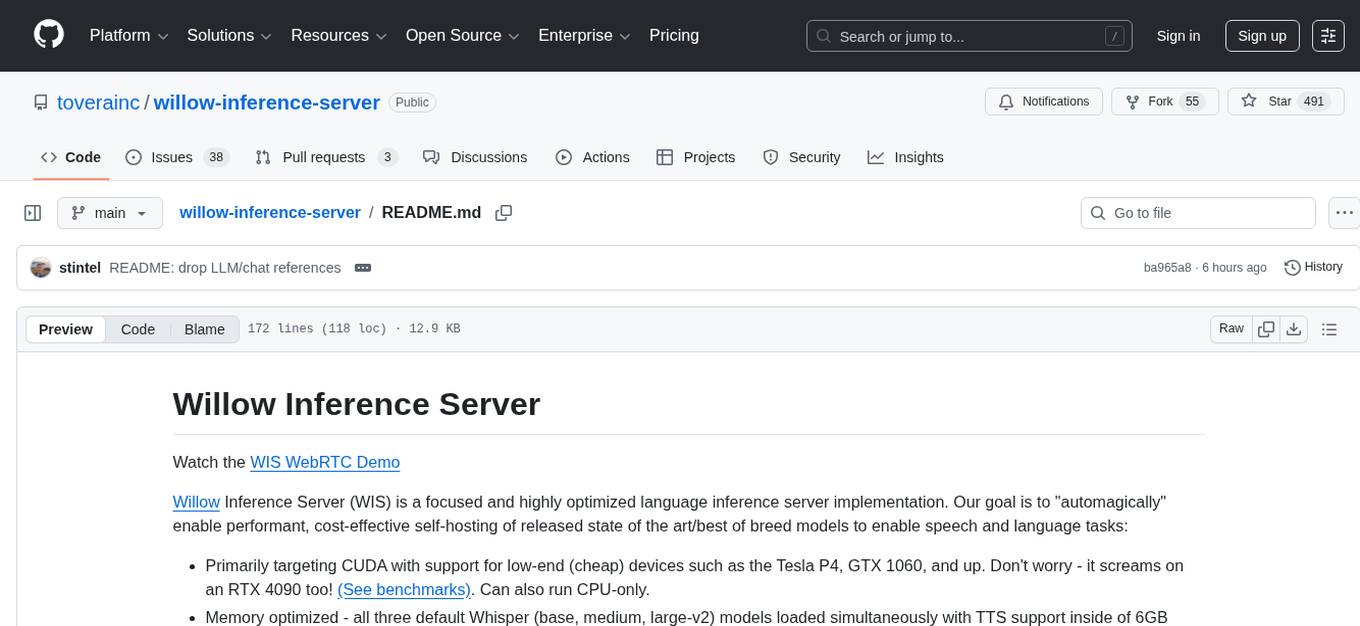

willow-inference-server

Open source, local, and self-hosted highly optimized language inference server supporting ASR/STT, TTS, and LLM across WebRTC, REST, and WS

Stars: 491

Willow Inference Server (WIS) is a highly optimized language inference server implementation focused on enabling performant, cost-effective self-hosting of state-of-the-art models for speech and language tasks. It supports ASR and TTS tasks, runs on CUDA with low-end device support, and offers various transport options like REST, WebRTC, and Web Sockets. WIS is memory optimized, leverages CTranslate2 for Whisper support, and enables custom TTS voices. The server automatically detects available CUDA VRAM and optimizes functionality accordingly. Users can programmatically select Whisper models and parameters for each request to balance speed and quality.

README:

Watch the WIS WebRTC Demo

Willow Inference Server (WIS) is a focused and highly optimized language inference server implementation. Our goal is to "automagically" enable performant, cost-effective self-hosting of released state of the art/best of breed models to enable speech and language tasks:

- Primarily targeting CUDA with support for low-end (cheap) devices such as the Tesla P4, GTX 1060, and up. Don't worry - it screams on an RTX 4090 too! (See benchmarks). Can also run CPU-only.

- Memory optimized - all three default Whisper (base, medium, large-v2) models loaded simultaneously with TTS support inside of 6GB VRAM.

- ASR. Heavy emphasis - Whisper optimized for very high quality as-close-to-real-time-as-possible speech recognition via a variety of means (Willow, WebRTC, POST a file, integration with devices and client applications, etc). Results in hundreds of milliseconds or less for most intended speech tasks.

- TTS. Primarily provided for assistant tasks (like Willow!) and visually impaired users.

- Support for a variety of transports. REST, WebRTC, Web Sockets.

- Performance and memory optimized. Leverages CTranslate2 for Whisper support.

- Willow support. WIS powers the Tovera hosted best-effort example server Willow users enjoy.

- Support for WebRTC - stream audio in real-time from browsers or WebRTC applications to optimize quality and response time. Heavily optimized for long-running sessions using WebRTC audio track management. Leave your session open for days at a time and have self-hosted ASR transcription within hundreds of milliseconds while conserving network bandwidth and CPU!

- Support for custom TTS voices. With relatively small audio recordings WIS can create and manage custom TTS voices. See API documentation for more information.

With the goal of enabling democratization of this functionality, WIS will detect available CUDA VRAM, compute platform support, etc. and optimize and/or disable functionality automatically (currently in order - ASR, TTS). With all supported Whisper models (large-v2, medium, and base) loaded simultaneously, the current minimum supported hardware is a GTX 1060 3GB (6GB for ASR and TTS). User applications across all supported transports are able to programmatically select and configure Whisper models and parameters (model size, beam, language detection/translation, etc.) and TTS voices on a per-request basis, depending on the needs of the application, to balance speed and quality.
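The priority order described above (ASR first, then TTS) can be pictured with a small sketch. This is not WIS's actual detection code: the name `plan_features` and the thresholds are assumptions, taken only from the 3GB (ASR) and 6GB (ASR and TTS) figures quoted for running all three Whisper models at once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeaturePlan:
    """Which features a (hypothetical) server would enable."""
    asr: bool
    tts: bool


# Assumed thresholds, based on the figures quoted for all three
# Whisper models loaded at once: 3 GB for ASR, 6 GB for ASR plus TTS.
ASR_MIN_VRAM_GB = 3.0
ASR_TTS_MIN_VRAM_GB = 6.0


def plan_features(vram_gb: float) -> FeaturePlan:
    """Enable features in priority order: ASR first, then TTS.

    A real server could also fall back to CPU or load fewer models;
    this sketch only models the enable/disable decision.
    """
    asr = vram_gb >= ASR_MIN_VRAM_GB
    tts = vram_gb >= ASR_TTS_MIN_VRAM_GB
    return FeaturePlan(asr=asr, tts=tts)


if __name__ == "__main__":
    for vram in (2.0, 3.0, 6.0, 24.0):
        print(f"{vram:>5} GB -> {plan_features(vram)}")
```

Because TTS is only enabled above the higher threshold, it is the first feature to be dropped as VRAM shrinks, which matches the stated order.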

Note that we are primarily targeting CUDA - the performance, cost, and power usage of cheap GPUs like the Tesla P4 and GTX 1060 is too good to ignore. We'll make our best effort to support CPU wherever possible for current and future functionality but our emphasis is on performant latency-sensitive tasks even with low-end GPUs like the GTX 1070/Tesla P4 (as of this writing roughly $100 USD on the used market - and plenty of stock!).

For CUDA support you will need to have the NVIDIA drivers for your supported hardware. We recommend NVIDIA driver version 530.

# Clone this repo:

git clone https://github.com/toverainc/willow-inference-server.git && cd willow-inference-server

# Ensure you have nvidia-container-toolkit and not nvidia-docker

# On Arch Linux:

yay -S libnvidia-container-tools libnvidia-container nvidia-container-toolkit docker-buildx

# Ubuntu:

./deps/ubuntu.sh

# Install

./utils.sh install

# Generate self-signed TLS cert (or place a "real" one at nginx/key.pem and nginx/cert.pem)

./utils.sh gen-cert [your hostname]

# Start WIS

./utils.sh run

Note that (like Willow) Willow Inference Server is very early and advancing rapidly! Users are encouraged to contribute (hence the build requirement). For the 1.0 release of WIS we will provide ready to deploy Docker containers.

Willow: Configure Willow to use https://[your host]:19000/api/willow then build and flash.

WebRTC demo client: https://[your host]:19000/rtc

API documentation for REST interface: https://[your host]:19000/api/docs
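As an illustration of selecting Whisper options per request over REST, here is a minimal sketch that builds (but does not send) a POST request. The `/api/asr` path and the `model`, `beam_size`, and `detect_language` parameter names are placeholders chosen for this example, not confirmed WIS API; the authoritative names are in the API documentation served at `/api/docs` on your own server.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_asr_request(host: str, audio: bytes, model: str = "medium",
                      beam_size: int = 1,
                      detect_language: bool = False) -> Request:
    """Build a POST request carrying per-request ASR options.

    NOTE: the "/api/asr" path and the query parameter names are
    hypothetical placeholders; check /api/docs for the real ones.
    """
    query = urlencode({
        "model": model,
        "beam_size": beam_size,
        "detect_language": str(detect_language).lower(),
    })
    url = f"https://{host}:19000/api/asr?{query}"
    return Request(url, data=audio, method="POST",
                   headers={"Content-Type": "audio/wav"})


if __name__ == "__main__":
    # Higher quality: large model with a wider beam.
    slow = build_asr_request("wis.example.local", b"...", model="large-v2", beam_size=5)
    # Lower latency: smaller model with beam size 1.
    fast = build_asr_request("wis.example.local", b"...", model="base")
    print(slow.get_method(), slow.full_url)
    print(fast.get_method(), fast.full_url)
```

The point of the sketch is the shape of the trade-off: the same client can ask for a large model with a wide beam when quality matters and a small model with beam size 1 when latency matters.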

System runtime can be configured by placing a .env file in the WIS root to override any variables set by utils.sh. You can also change more WIS specific parameters by copying settings.py to custom_settings.py.

WIS has been successfully tested on Windows with WSL (Windows Subsystem for Linux). With ASR and TTS together requiring only 4GB of VRAM, WIS can run concurrently with standard Windows desktop tasks on GPUs with 8GB VRAM.

| Device | Model | Beam Size | Speech Duration (ms) | Inference Time (ms) | Realtime Multiple |
|---|---|---|---|---|---|
| RTX 4090 | large-v2 | 5 | 3840 | 140 | 27x |
| RTX 3090 | large-v2 | 5 | 3840 | 219 | 17x |
| H100 | large-v2 | 5 | 3840 | 294 | 12x |
| H100 | large-v2 | 5 | 10688 | 519 | 20x |
| H100 | large-v2 | 5 | 29248 | 1223 | 23x |
| GTX 1060 | large-v2 | 5 | 3840 | 1114 | 3x |
| Tesla P4 | large-v2 | 5 | 3840 | 1099 | 3x |
| GTX 1070 | large-v2 | 5 | 3840 | 742 | 5x |
| RTX 4090 | medium | 1 | 3840 | 84 | 45x |
| RTX 3090 | medium | 1 | 3840 | 140 | 27x |
| GTX 1070 | medium | 1 | 3840 | 424 | 9x |
| GTX 1070 | medium | 1 | 10688 | 564 | 18x |
| GTX 1070 | medium | 1 | 29248 | 1118 | 26x |
| GTX 1060 | medium | 1 | 3840 | 588 | 6x |
| Tesla P4 | medium | 1 | 3840 | 586 | 6x |
| RTX 4090 | medium | 1 | 29248 | 377 | 77x |
| RTX 3090 | medium | 1 | 29248 | 520 | 56x |
| GTX 1060 | medium | 1 | 29248 | 1612 | 18x |
| Tesla P4 | medium | 1 | 29248 | 1730 | 16x |
| GTX 1070 | base | 1 | 3840 | 70 | 54x |
| GTX 1070 | base | 1 | 10688 | 92 | 115x |
| GTX 1070 | base | 1 | 29248 | 195 | 149x |
| RTX 4090 | base | 1 | 180000 | 277 | 648x (not a typo) |
| RTX 3090 | base | 1 | 180000 | 435 | 414x (not a typo) |
| RTX 3090 | tiny | 1 | 180000 | 366 | 491x (not a typo) |
| GTX 1070 | tiny | 1 | 3840 | 46 | 82x |
| GTX 1070 | tiny | 1 | 10688 | 64 | 168x |
| GTX 1070 | tiny | 1 | 29248 | 135 | 216x |
| Threadripper PRO 5955WX | tiny | 1 | 3840 | 140 | 27x |
| Threadripper PRO 5955WX | base | 1 | 3840 | 245 | 15x |
| Threadripper PRO 5955WX | small | 1 | 3840 | 641 | 5x |
| Threadripper PRO 5955WX | medium | 1 | 3840 | 1614 | 2x |
| Threadripper PRO 5955WX | large | 1 | 3840 | 3344 | 1x |

As you can see the realtime multiple increases dramatically with longer speech segments. Note that these numbers will also vary slightly depending on broader system configuration - CPU, RAM, etc.
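For readers who want to compare their own hardware, the realtime multiple is simply speech duration divided by inference time. The helper below is a minimal sketch (the function name is ours, not part of WIS) applied to three rows from the table; the published figures appear to be truncated to whole numbers in most rows, so the sketch prints one decimal place for comparison.

```python
def realtime_multiple(speech_ms: float, inference_ms: float) -> float:
    """Return how many times faster than realtime the inference ran."""
    if inference_ms <= 0:
        raise ValueError("inference_ms must be positive")
    return speech_ms / inference_ms


if __name__ == "__main__":
    # (device, model, speech duration in ms, inference time in ms)
    rows = [
        ("RTX 4090", "large-v2", 3840, 140),
        ("RTX 4090", "medium", 29248, 377),
        ("GTX 1070", "medium", 29248, 1118),
    ]
    for device, model, speech_ms, inference_ms in rows:
        multiple = realtime_multiple(speech_ms, inference_ms)
        print(f"{device} {model}: {multiple:.1f}x")
```

The fixed per-request overhead is spread over more audio in longer segments, which is why the same device and model score a higher multiple at 29248 ms than at 3840 ms.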

When using WebRTC or Willow, end-to-end latency in the browser/Willow and supported applications is the inference time above plus the network latency of the response. The advantage is that you can skip the "upload" portion because audio is streamed in realtime!

We are very interested in working with the community to optimize WIS for CPU. We haven't focused on it because we consider medium beam 1 to be the minimum configuration for intended tasks and CPUs cannot currently meet our latency targets.

Raspberry Pi benchmarks were run on a Raspberry Pi 4 (4GB) running Debian 11.7 aarch64 with faster-whisper version 0.5.1, using a CanaKit 3 amp USB-C power adapter and a fan. All models are int8 with OMP_NUM_THREADS=4 and the language set to en. The methodology is the same as for the timings above, with model load time excluded (WIS keeps models loaded). All inference times are rounded down. The maximum temperature reported by vcgencmd measure_temp was 57.9 C.

| Device | Model | Beam Size | Speech Duration (ms) | Inference Time (ms) | Realtime Multiple |
|---|---|---|---|---|---|
| Pi | tiny | 1 | 3840 | 3333 | 1.15x |
| Pi | base | 1 | 3840 | 6207 | 0.62x |
| Pi | medium | 1 | 3840 | 50807 | 0.08x |
| Pi | large-v2 | 1 | 3840 | 91036 | 0.04x |

More coming soon!

We understand the focus and emphasis on CUDA may be troubling or limiting for some users. We will provide additional CPU vs GPU benchmarks but spoiler alert: a $100 used GPU from eBay will beat the fastest CPUs on the market while consuming less power at SIGNIFICANTLY lower cost. GPUs are very fundamentally different architecturally and while there is admirable work being done with CPU optimized projects such as whisper.cpp and CTranslate2 we believe that GPUs will maintain drastic speed, cost, and power advantages for the foreseeable future. That said, we are interested in getting feedback (and PRs!) from WIS users to make full use of CTranslate2 to optimize for CPU.

Perusing eBay and other used marketplaces the GTX 1070 seems to be the best performance/price ratio for ASR/STT and TTS while leaving VRAM room for the future. The author ordered an EVGA GTX 1070 FTW ACX3.0 for $120 USD with shipping and tax on 5/19/2023.

The author has a long background with VoIP, WebRTC, etc. We deploy some fairly unique "tricks" to support long-running WebRTC sessions while conserving bandwidth and CPU. In between start/stop of audio record we pause (and then resume) the WebRTC audio track to bring bandwidth down to 5 kbps at 5 packets per second at idle while keeping response times low. This is done to keep ICE active and any NAT/firewall pinholes open while minimizing bandwidth and CPU usage. Did I mention it's optimized?
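To put the idle figures in perspective, here is a small back-of-the-envelope calculation. It assumes 1 kbps means 1000 bits per second and that the 5 kbps figure is the total on-the-wire rate; the source does not say whether packet headers are included, so treat the results as rough estimates.

```python
IDLE_BITRATE_BPS = 5_000        # 5 kbps, assuming 1 kbps = 1000 bits/s
IDLE_PACKETS_PER_SECOND = 5
SECONDS_PER_DAY = 86_400


def bytes_per_packet(bitrate_bps: float, packets_per_second: float) -> float:
    """Average packet size implied by a bitrate and a packet rate."""
    return bitrate_bps / packets_per_second / 8


def megabytes_per_day(bitrate_bps: float) -> float:
    """Data transferred in 24 hours at a constant bitrate (1 MB = 10^6 bytes)."""
    return bitrate_bps * SECONDS_PER_DAY / 8 / 1_000_000


if __name__ == "__main__":
    size = bytes_per_packet(IDLE_BITRATE_BPS, IDLE_PACKETS_PER_SECOND)
    daily = megabytes_per_day(IDLE_BITRATE_BPS)
    print(f"average idle packet size: {size:.0f} bytes")
    print(f"idle traffic per day:     {daily:.0f} MB")
```

Under these assumptions an idle session averages 125 bytes per packet and about 54 MB per day, which is what makes leaving a session open for days practical.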

Start/stop of sessions and return of results uses WebRTC data channels.

See the Willow TypeScript Client repo to integrate WIS WebRTC support into your own frontend.

- Integrate WebRTC with Home Assistant dashboard to support streaming audio directly from the HA dashboard on desktop or mobile.

- Desktop/mobile transcription apps (look out for a future announcement on this!).

- Desktop/mobile assistant apps - Willow everywhere!

We're looking for feedback from the community on preferred implementations, voices, etc. See the open issue.

Why do it again when you're saying the same thing? Support on-disk caching of TTS output for lightning fast TTS response times.
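One way such a cache could work is sketched below. This is an illustration of the idea, not WIS code: `cached_tts`, the cache layout, and the `synthesize` callable are all hypothetical, and a real implementation would also need to key on any other parameters that change the audio.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Callable


def cached_tts(text: str, voice: str, cache_dir: Path,
               synthesize: Callable[[str, str], bytes]) -> bytes:
    """Return audio for (text, voice), synthesizing only on a cache miss."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    # Key on both voice and text so different voices never collide.
    key = hashlib.sha256(f"{voice}\0{text}".encode("utf-8")).hexdigest()
    path = cache_dir / f"{key}.wav"
    if path.exists():
        return path.read_bytes()        # cache hit: no model call
    audio = synthesize(text, voice)     # cache miss: run TTS once
    path.write_bytes(audio)
    return audio


if __name__ == "__main__":
    calls = []

    def fake_synthesize(text: str, voice: str) -> bytes:
        # Stand-in for a real TTS model; records each invocation.
        calls.append((text, voice))
        return f"audio for {text!r} in {voice}".encode("utf-8")

    with tempfile.TemporaryDirectory() as tmp:
        first = cached_tts("The light is on.", "default", Path(tmp), fake_synthesize)
        second = cached_tts("The light is on.", "default", Path(tmp), fake_synthesize)
        print(first == second, len(calls))
```

Assistant responses tend to repeat ("The light is on."), so even a simple content-addressed cache like this can skip most synthesis calls.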

Meta released MMS on 5/22/2023, supporting over 1,000 languages across speech to text and text to speech!

WIS is very early and we will refactor, modularize, and improve documentation well before the 1.0 release.

We may support user-defined modules to chain together any number of supported tasks within one request, enabling such things as:

Speech in -> Arbitrary API -> Speech out

...and more, directly in WIS!
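Since this feature is only proposed, the following is purely a sketch of what chaining could look like: each step is a callable whose output feeds the next. `run_chain` and the three stand-in steps are hypothetical and do no real speech processing.

```python
from typing import Any, Callable, Sequence

Step = Callable[[Any], Any]


def run_chain(steps: Sequence[Step], payload: Any) -> Any:
    """Pass the payload through each step in order within one request."""
    for step in steps:
        payload = step(payload)
    return payload


# Stand-ins for real modules (hypothetical; no actual ASR/TTS happens here).
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_api(text: str) -> str:
    return text.upper()


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


if __name__ == "__main__":
    # Speech in -> Arbitrary API -> Speech out
    result = run_chain([fake_asr, fake_api, fake_tts], b"turn on the lights")
    print(result)
```

Keeping each module a plain callable means new tasks can be added to a chain without changing the runner.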

Alternative AI tools for willow-inference-server

Similar Open Source Tools

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

recommenders

Recommenders is a project under the Linux Foundation of AI and Data that assists researchers, developers, and enthusiasts in prototyping, experimenting with, and bringing to production a range of classic and state-of-the-art recommendation systems. The repository contains examples and best practices for building recommendation systems, provided as Jupyter notebooks. It covers tasks such as preparing data, building models using various recommendation algorithms, evaluating algorithms, tuning hyperparameters, and operationalizing models in a production environment on Azure. The project provides utilities to support common tasks like loading datasets, evaluating model outputs, and splitting training/test data. It includes implementations of state-of-the-art algorithms for self-study and customization in applications.

FFAIVideo

FFAIVideo is a lightweight node.js project that utilizes popular AI LLM to intelligently generate short videos. It supports multiple AI LLM models such as OpenAI, Moonshot, Azure, g4f, Google Gemini, etc. Users can input text to automatically synthesize exciting video content with subtitles, background music, and customizable settings. The project integrates Microsoft Edge's online text-to-speech service for voice options and uses Pexels website for video resources. Installation of FFmpeg is essential for smooth operation. Inspired by MoneyPrinterTurbo, MoneyPrinter, and MsEdgeTTS, FFAIVideo is designed for front-end developers with minimal dependencies and simple usage.

GenAIComps

GenAIComps is an initiative aimed at building enterprise-grade Generative AI applications using a microservice architecture. It simplifies the scaling and deployment process for production, abstracting away infrastructure complexities. GenAIComps provides a suite of containerized microservices that can be assembled into a mega-service tailored for real-world Enterprise AI applications. The modular approach of microservices allows for independent development, deployment, and scaling of individual components, promoting modularity, flexibility, and scalability. The mega-service orchestrates multiple microservices to deliver comprehensive solutions, encapsulating complex business logic and workflow orchestration. The gateway serves as the interface for users to access the mega-service, providing customized access based on user requirements.

LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

cambrian

Cambrian-1 is a fully open project focused on exploring multimodal Large Language Models (LLMs) with a vision-centric approach. It offers competitive performance across various benchmarks with models at different parameter levels. The project includes training configurations, model weights, instruction tuning data, and evaluation details. Users can interact with Cambrian-1 through a Gradio web interface for inference. The project is inspired by LLaVA and incorporates contributions from Vicuna, LLaMA, and Yi. Cambrian-1 is licensed under Apache 2.0 and utilizes datasets and checkpoints subject to their respective original licenses.

Awesome-LLM-Large-Language-Models-Notes

Awesome-LLM-Large-Language-Models-Notes is a repository that provides a comprehensive collection of information on various Large Language Models (LLMs) classified by year, size, and name. It includes details on known LLM models, their papers, implementations, and specific characteristics. The repository also covers LLM models classified by architecture, must-read papers, blog articles, tutorials, and implementations from scratch. It serves as a valuable resource for individuals interested in understanding and working with LLMs in the field of Natural Language Processing (NLP).

TinyLLM

TinyLLM is a project that helps build a small locally hosted language model with a web interface using consumer-grade hardware. It supports multiple language models, builds a local OpenAI API web service, and serves a Chatbot web interface with customizable prompts. The project requires specific hardware and software configurations for optimal performance. Users can run a local language model using inference servers like vLLM, llama-cpp-python, and Ollama. The Chatbot feature allows users to interact with the language model through a web-based interface, supporting features like summarizing websites, displaying news headlines, stock prices, weather conditions, and using vector databases for queries.

compose-for-agents

Compose for Agents is a tool that allows users to run demos using OpenAI models or locally with Docker Model Runner. The tool supports multi-agent and single-agent systems for various tasks such as fact-checking, summarizing GitHub issues, marketing strategy, SQL queries, travel planning, and more. Users can configure the demos by creating a `.mcp.env` file, supplying required tokens, and running `docker compose up --build`. Additionally, users can utilize OpenAI models by creating a `secret.openai-api-key` file and starting the project with the OpenAI configuration.

rubra

Rubra is a collection of open-weight large language models enhanced with tool-calling capability. It allows users to call user-defined external tools in a deterministic manner while reasoning and chatting, making it ideal for agentic use cases. The models are further post-trained to teach instruct-tuned models new skills and mitigate catastrophic forgetting. Rubra extends popular inferencing projects for easy use, enabling users to run the models easily.

llm-datasets

LLM Datasets is a repository containing high-quality datasets, tools, and concepts for LLM fine-tuning. It provides datasets with characteristics like accuracy, diversity, and complexity to train large language models for various tasks. The repository includes datasets for general-purpose, math & logic, code, conversation & role-play, and agent & function calling domains. It also offers guidance on creating high-quality datasets through data deduplication, data quality assessment, data exploration, and data generation techniques.

MathEval

MathEval is a benchmark designed for evaluating the mathematical capabilities of large models. It includes over 20 evaluation datasets covering various mathematical domains with more than 30,000 math problems. The goal is to assess the performance of large models across different difficulty levels and mathematical subfields. MathEval serves as a reliable reference for comparing mathematical abilities among large models and offers guidance on enhancing their mathematical capabilities in the future.

RustySEO

RustySEO is a free, modern SEO/GEO toolkit designed to help users crawl and analyze websites and server logs without crawl limits. It is an all-in-one, cross-platform marketing toolkit for comprehensive SEO & GEO analysis, providing actionable insights into marketing and SEO strategies. The tool offers features such as shallow & deep crawl, technical diagnostics, on-page SEO analysis, dashboards, reporting, topic and keyword generators, AI chatbot, crawl history, image conversion and optimization, and more. RustySEO aims to be a robust, free alternative to costly commercial SEO tools, with integrations like Google PageSpeed Insights, Google Gemini, and more.

dl_model_infer

This project is a c++ version of the AI reasoning library that supports the reasoning of tensorrt models. It provides accelerated deployment cases of deep learning CV popular models and supports dynamic-batch image processing, inference, decode, and NMS. The project has been updated with various models and provides tutorials for model exports. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend reasoning classes, post-processing, pre-processing, and target detection and tracking. Speed tests have been conducted on various models, and onnx downloads are available for different models.

Vision-Agents

Vision Agents is an open-source project by Stream that provides building blocks for creating intelligent, low-latency video experiences powered by custom models and infrastructure. It offers multi-modal AI agents that watch, listen, and understand video in real-time. The project includes SDKs for various platforms and integrates with popular AI services like Gemini and OpenAI. Vision Agents can be used for tasks such as sports coaching, security camera systems with package theft detection, and building invisible assistants for various applications. The project aims to simplify the development of real-time vision AI applications by providing a range of processors, integrations, and out-of-the-box features.

For similar tasks

metavoice-src

MetaVoice-1B is a 1.2B parameter base model trained on 100K hours of speech for TTS (text-to-speech). It has been built with the following priorities: * Emotional speech rhythm and tone in English. * Zero-shot cloning for American & British voices, with 30s reference audio. * Support for (cross-lingual) voice cloning with finetuning. * We have had success with as little as 1 minute training data for Indian speakers. * Synthesis of arbitrary length text

modelfusion

ModelFusion is an abstraction layer for integrating AI models into JavaScript and TypeScript applications, unifying the API for common operations such as text streaming, object generation, and tool usage. It provides features to support production environments, including observability hooks, logging, and automatic retries. You can use ModelFusion to build AI applications, chatbots, and agents. ModelFusion is a non-commercial open source project that is community-driven. You can use it with any supported provider. ModelFusion supports a wide range of models including text generation, image generation, vision, text-to-speech, speech-to-text, and embedding models. ModelFusion infers TypeScript types wherever possible and validates model responses. ModelFusion provides an observer framework and logging support. ModelFusion ensures seamless operation through automatic retries, throttling, and error handling mechanisms. ModelFusion is fully tree-shakeable, can be used in serverless environments, and only uses a minimal set of dependencies.

MeloTTS

MeloTTS is a high-quality multi-lingual text-to-speech library by MyShell.ai. It supports various languages including English (American, British, Indian, Australian), Spanish, French, Chinese, Japanese, and Korean. The Chinese speaker also supports mixed Chinese and English. The library is fast enough for CPU real-time inference and offers features like using without installation, local installation, and training on custom datasets. The Python API and model cards are available in the repository and on HuggingFace. The community can join the Discord channel for discussions and collaboration opportunities. Contributions are welcome, and the library is under the MIT License. MeloTTS is based on TTS, VITS, VITS2, and Bert-VITS2.

call-gpt

Call GPT is a voice application that utilizes Deepgram for Speech to Text, elevenlabs for Text to Speech, and OpenAI for GPT prompt completion. It allows users to chat with ChatGPT on the phone, providing better transcription, understanding, and speaking capabilities than traditional IVR systems. The app returns responses with low latency, allows user interruptions, maintains chat history, and enables GPT to call external tools. It coordinates data flow between Deepgram, OpenAI, ElevenLabs, and Twilio Media Streams, enhancing voice interactions.

openedai-speech

OpenedAI Speech is a free, private text-to-speech server compatible with the OpenAI audio/speech API. It offers custom voice cloning and supports various models like tts-1 and tts-1-hd. Users can map their own piper voices and create custom cloned voices. The server provides multilingual support with XTTS voices and allows fixing incorrect sounds with regex. Recent changes include bug fixes, improved error handling, and updates for multilingual support. Installation can be done via Docker or manual setup, with usage instructions provided. Custom voices can be created using Piper or Coqui XTTS v2, with guidelines for preparing audio files. The tool is suitable for tasks like generating speech from text, creating custom voices, and multilingual text-to-speech applications.

MARS5-TTS

MARS5 is a novel English speech model (TTS) developed by CAMB.AI, featuring a two-stage AR-NAR pipeline with a unique NAR component. The model can generate speech for various scenarios like sports commentary and anime with just 5 seconds of audio and a text snippet. It allows steering prosody using punctuation and capitalization in the transcript. Speaker identity is specified using an audio reference file, enabling 'deep clone' for improved quality. The model can be used via torch.hub or HuggingFace, supporting both shallow and deep cloning for inference. Checkpoints are provided for AR and NAR models, with hardware requirements of 750M+450M params on GPU. Contributions to improve model stability, performance, and reference audio selection are welcome.

cgft-llm

The cgft-llm repository is a collection of video tutorials and documentation for implementing large models. It provides guidance on topics such as fine-tuning llama3 with llama-factory, lightweight deployment and quantization using llama.cpp, speech generation with ChatTTS, introduction to Ollama for large model deployment, deployment tools for vllm and paged attention, and implementing RAG with llama-index. Users can find detailed code documentation and video tutorials for each project in the repository.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.