RustySEO

SEO/GEO toolkit to analyse, crawl, parse and optimise websites & logs (Nginx & Apache)

Stars: 151

RustySEO is a free, modern SEO/GEO toolkit designed to help users crawl and analyze websites and server logs without crawl limits. It is an all-in-one, cross-platform marketing toolkit for comprehensive SEO & GEO analysis, providing actionable insights into marketing and SEO strategies. The tool offers features such as shallow & deep crawl, technical diagnostics, on-page SEO analysis, dashboards, reporting, topic and keyword generators, AI chatbot, crawl history, image conversion and optimization, and more. RustySEO aims to be a robust, free alternative to costly commercial SEO tools, with integrations like Google PageSpeed Insights, Google Gemini, and more.

README:

Without crawl limits, start ranking better on search engines.

TUI mode is a standalone app that requires to be installed separately. You can find it here.

RustySEO is an all-in-one, cross-platform Marketing toolkit, designed for comprehensive SEO & GEO analysis. It enables users to crawl websites, analise server logs gaining actionable insights into their marketing and SEO strategies.

As an open-source project, RustySEO aims to enhance your SEO efforts. Please note that initial versions may have bugs and issues, and we welcome your contributions in the form of bug reports or fixes via our repository.

Our mission is to offer a robust, free alternative to the costly commercial SEO tools currently on the market.

Keep in mind that all the integrations are free to use (up to an extent). Its abuse will result in 404 or 503 by the providers.

As long as you don't spam (many requests per second) you should be ok to use RustySEO as your daily driver for SEO. :)

For a better experience you need to get a Google PageSpeed Insights API KEY.

Got to "Connectors" > "PageSpeed Insights" and add your key, for the time being you can try one I generated (It will expire over time):

AIzaSyAHsCM-Cs4cCqdGi798wh8af-bSnXbVUjwWe recommend using Google Gemini if you want the best AI features.

As of today, the smaller local LLMs are not working properly and are not recommended if you want to make the most of the AI features.

These LLMs might get deprecated due to their lack of uniform performance across different models.

Authentication currently takes place server side, as such it is slightly more cumbersome when integrating with Google OAuth 2.0. This is particularly true for Google Search Console (GSC) and Google Analytics 4 (GA4)

This authentication will be passed to the client-side in the future.

Use at your own risk, this software is new and under development.

- Shallow & deep crawl;

- Technical Diagnostics (core web vitals, Page Speed Insights);

- On-Page SEO Analysis (content analysis, Keyword density, etc...);

- Improvements / Suggestions on page crawl;

- Dashboards;

- Task Manager / Tracker

- Reporting (CSV, Excel, Google Sheets, PDF);

- Topic generator;

- Keyword generator;

- Local LLM (ollama);

- Free API LLM (Google Gemini);

- Built-in AI chatbot;

- Crawl history;

- Image conversion and optimization;

| # | Feature | Status |

|---|---|---|

| 1 | Deep crawl (multiple pages concurrently) | ✅ |

| 2 | More integrations | ✅ |

| 3 | Better reporting | ✅ |

| 4 | Password protection & encryption | |

| 5 | Client-side OAuth2 authentication | ✅ |

| 6 | Better local LLM support | ❌ |

| 7 | SEMrush Integration | |

| 8 | Topic / Content calendar view | ✅ |

| 9 | Regression Analysis of GA4 data | |

| 10 | Topic Modeling | |

| 11 | Chatbot Crawl Context | ✅ |

| 12 | Extraction of HTML / JS / CSS fragments | ☠️ |

| 13 | Schema Generator & Validator | ✅ |

| 14 | Keyword Clustering | ✅ |

| 15 | Machine Learning | ✅ |

| 16 | Collaboration | ⏳ |

| 17 | API / Streaming | ⏳ |

| 18 | Very Large website support (> 100K URLs) | ✅ |

- Google Search Console

- Google Cloud Platform

- GA4

- Google Gemini

- Ollama

- PageSpeed Insights

- Microsoft Clarity

| Keys | Result |

|---|---|

| CTRL + D | Deep Crawl |

| CTRL + S | Shalow Crawl |

| CTRL + H | Toggle Sidebar |

| CTRL + L | Toggle Side Task Manager |

| CTRL + T | Create TODO/Task |

| CTRL + Shift + C | Delete Logs From DB |

| CTRL + / | Clear cache |

| CTRL + Shift + / | Full app reset |

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for RustySEO

Similar Open Source Tools

RustySEO

RustySEO is a free, modern SEO/GEO toolkit designed to help users crawl and analyze websites and server logs without crawl limits. It is an all-in-one, cross-platform marketing toolkit for comprehensive SEO & GEO analysis, providing actionable insights into marketing and SEO strategies. The tool offers features such as shallow & deep crawl, technical diagnostics, on-page SEO analysis, dashboards, reporting, topic and keyword generators, AI chatbot, crawl history, image conversion and optimization, and more. RustySEO aims to be a robust, free alternative to costly commercial SEO tools, with integrations like Google PageSpeed Insights, Google Gemini, and more.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

MiniCPM-V-CookBook

MiniCPM-V & o Cookbook is a comprehensive repository for building multimodal AI applications effortlessly. It provides easy-to-use documentation, supports a wide range of users, and offers versatile deployment scenarios. The repository includes live demonstrations, inference recipes for vision and audio capabilities, fine-tuning recipes, serving recipes, quantization recipes, and a framework support matrix. Users can customize models, deploy them efficiently, and compress models to improve efficiency. The repository also showcases awesome works using MiniCPM-V & o and encourages community contributions.

Awesome-AITools

This repo collects AI-related utilities. ## All Categories * All Categories * ChatGPT and other closed-source LLMs * AI Search engine * Open Source LLMs * GPT/LLMs Applications * LLM training platform * Applications that integrate multiple LLMs * AI Agent * Writing * Programming Development * Translation * AI Conversation or AI Voice Conversation * Image Creation * Speech Recognition * Text To Speech * Voice Processing * AI generated music or sound effects * Speech translation * Video Creation * Video Content Summary * OCR(Optical Character Recognition)

openkore

OpenKore is a custom client and intelligent automated assistant for Ragnarok Online. It is a free, open source, and cross-platform program (Linux, Windows, and MacOS are supported). To run OpenKore, you need to download and extract it or clone the repository using Git. Configure OpenKore according to the documentation and run openkore.pl to start. The tool provides a FAQ section for troubleshooting, guidelines for reporting issues, and information about botting status on official servers. OpenKore is developed by a global team, and contributions are welcome through pull requests. Various community resources are available for support and communication. Users are advised to comply with the GNU General Public License when using and distributing the software.

FFAIVideo

FFAIVideo is a lightweight node.js project that utilizes popular AI LLM to intelligently generate short videos. It supports multiple AI LLM models such as OpenAI, Moonshot, Azure, g4f, Google Gemini, etc. Users can input text to automatically synthesize exciting video content with subtitles, background music, and customizable settings. The project integrates Microsoft Edge's online text-to-speech service for voice options and uses Pexels website for video resources. Installation of FFmpeg is essential for smooth operation. Inspired by MoneyPrinterTurbo, MoneyPrinter, and MsEdgeTTS, FFAIVideo is designed for front-end developers with minimal dependencies and simple usage.

generative-ai-with-javascript

The 'Generative AI with JavaScript' repository is a comprehensive resource hub for JavaScript developers interested in delving into the world of Generative AI. It provides code samples, tutorials, and resources from a video series, offering best practices and tips to enhance AI skills. The repository covers the basics of generative AI, guides on building AI applications using JavaScript, from local development to deployment on Azure, and scaling AI models. It is a living repository with continuous updates, making it a valuable resource for both beginners and experienced developers looking to explore AI with JavaScript.

chat-your-doc

Chat Your Doc is an experimental project exploring various applications based on LLM technology. It goes beyond being just a chatbot project, focusing on researching LLM applications using tools like LangChain and LlamaIndex. The project delves into UX, computer vision, and offers a range of examples in the 'Lab Apps' section. It includes links to different apps, descriptions, launch commands, and demos, aiming to showcase the versatility and potential of LLM applications.

squirrelscan

Squirrelscan is a website audit tool designed for SEO, performance, and security audits. It offers 230+ rules across 21 categories, AI-native design for Claude Code and AI workflows, smart incremental crawling, and multiple output formats. It provides E-E-A-T auditing, crawl history tracking, and is developer-friendly with a CLI. Users can run audits in the terminal, integrate with AI coding agents, or pipe output to AI assistants. The tool is available for macOS, Linux, Windows, npm, and npx installations, and is suitable for autonomous AI workflows.

together-cookbook

The Together Cookbook is a collection of code and guides designed to help developers build with open source models using Together AI. The recipes provide examples on how to chain multiple LLM calls, create agents that route tasks to specialized models, run multiple LLMs in parallel, break down tasks into parallel subtasks, build agents that iteratively improve responses, perform LoRA fine-tuning and inference, fine-tune LLMs for repetition, improve summarization capabilities, fine-tune LLMs on multi-step conversations, implement retrieval-augmented generation, conduct multimodal search and conditional image generation, visualize vector embeddings, improve search results with rerankers, implement vector search with embedding models, extract structured text from images, summarize and evaluate outputs with LLMs, generate podcasts from PDF content, and get LLMs to generate knowledge graphs.

boost

Laravel Boost accelerates AI-assisted development by providing essential context and structure for generating high-quality, Laravel-specific code. It includes an MCP server with specialized tools, AI guidelines, and a Documentation API. Boost is designed to streamline AI-assisted coding workflows by offering precise, context-aware results and extensive Laravel-specific information.

llm4ad

LLM4AD is an open-source Python-based platform leveraging Large Language Models (LLMs) for Automatic Algorithm Design (AD). It provides unified interfaces for methods, tasks, and LLMs, along with features like evaluation acceleration, secure evaluation, logs, GUI support, and more. The platform was originally developed for optimization tasks but is versatile enough to be used in other areas such as machine learning, science discovery, game theory, and engineering design. It offers various search methods and algorithm design tasks across different domains. LLM4AD supports remote LLM API, local HuggingFace LLM deployment, and custom LLM interfaces. The project is licensed under the MIT License and welcomes contributions, collaborations, and issue reports.

dataforce.studio

DataForce Studio is an open-source MLOps platform designed to help build, manage, and deploy AI/ML models with ease. It supports the entire model lifecycle, from creation to deployment and monitoring, within a user-friendly interface. The platform is in active early development, aiming to provide features like post-deployment monitoring, model deployment, data science agent, experiment snapshots, model cards, Python SDK, model registry, notebooks, in-browser runtime, and express tasks for prompt optimization and tabular data.

kodus-ai

Kodus AI is an open-source AI agent designed to review code like a real teammate, providing personalized, context-aware code reviews to help teams catch bugs, enforce best practices, and maintain a clean codebase. It seamlessly integrates with Git workflows, learns team coding patterns, and offers custom review policies. Kodus supports all programming languages with semantic and AST analysis, enhancing code review accuracy and providing actionable feedback. The tool is available in Cloud and Self-Hosted editions, offering features like self-hosting, unlimited users, custom integrations, and advanced compliance support.

dl_model_infer

This project is a c++ version of the AI reasoning library that supports the reasoning of tensorrt models. It provides accelerated deployment cases of deep learning CV popular models and supports dynamic-batch image processing, inference, decode, and NMS. The project has been updated with various models and provides tutorials for model exports. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend reasoning classes, post-processing, pre-processing, and target detection and tracking. Speed tests have been conducted on various models, and onnx downloads are available for different models.

For similar tasks

RustySEO

RustySEO is a free, modern SEO/GEO toolkit designed to help users crawl and analyze websites and server logs without crawl limits. It is an all-in-one, cross-platform marketing toolkit for comprehensive SEO & GEO analysis, providing actionable insights into marketing and SEO strategies. The tool offers features such as shallow & deep crawl, technical diagnostics, on-page SEO analysis, dashboards, reporting, topic and keyword generators, AI chatbot, crawl history, image conversion and optimization, and more. RustySEO aims to be a robust, free alternative to costly commercial SEO tools, with integrations like Google PageSpeed Insights, Google Gemini, and more.

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Furthermore, the implementation of both paradigms benefits from the integration: * Chat BI's Text2SQL gets augmented with context-retrieval from semantic models. * Headless BI's query interface gets extended with natural language API. SuperSonic provides a Chat BI interface that empowers users to query data using natural language and visualize the results with suitable charts. To enable such experience, the only thing necessary is to build logical semantic models (definition of metric/dimension/tag, along with their meaning and relationships) through a Headless BI interface. Meanwhile, SuperSonic is designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI. The integration of Chat BI and Headless BI has the potential to enhance the Text2SQL generation in two dimensions: 1. Incorporate data semantics (such as business terms, column values, etc.) into the prompt, enabling LLM to better understand the semantics and reduce hallucination. 2. Offload the generation of advanced SQL syntax (such as join, formula, etc.) from LLM to the semantic layer to reduce complexity. With these ideas in mind, we develop SuperSonic as a practical reference implementation and use it to power our real-world products. Additionally, to facilitate further development we decide to open source SuperSonic as an extensible framework.

DB-GPT

DB-GPT is an open source AI native data app development framework with AWEL(Agentic Workflow Expression Language) and agents. It aims to build infrastructure in the field of large models, through the development of multiple technical capabilities such as multi-model management (SMMF), Text2SQL effect optimization, RAG framework and optimization, Multi-Agents framework collaboration, AWEL (agent workflow orchestration), etc. Which makes large model applications with data simpler and more convenient.

Chat2DB

Chat2DB is an AI-driven data development and analysis platform that enables users to communicate with databases using natural language. It supports a wide range of databases, including MySQL, PostgreSQL, Oracle, SQLServer, SQLite, MariaDB, ClickHouse, DM, Presto, DB2, OceanBase, Hive, KingBase, MongoDB, Redis, and Snowflake. Chat2DB provides a user-friendly interface that allows users to query databases, generate reports, and explore data using natural language commands. It also offers a variety of features to help users improve their productivity, such as auto-completion, syntax highlighting, and error checking.

aide

AIDE (Advanced Intrusion Detection Environment) is a tool for monitoring file system changes. It can be used to detect unauthorized changes to monitored files and directories. AIDE was written to be a simple and free alternative to Tripwire. Features currently included in AIDE are as follows: o File attributes monitored: permissions, inode, user, group file size, mtime, atime, ctime, links and growing size. o Checksums and hashes supported: SHA1, MD5, RMD160, and TIGER. CRC32, HAVAL and GOST if Mhash support is compiled in. o Plain text configuration files and database for simplicity. o Rules, variables and macros that can be customized to local site or system policies. o Powerful regular expression support to selectively include or exclude files and directories to be monitored. o gzip database compression if zlib support is compiled in. o Free software licensed under the GNU General Public License v2.

OpsPilot

OpsPilot is an AI-powered operations navigator developed by the WeOps team. It leverages deep learning and LLM technologies to make operations plans interactive and generalize and reason about local operations knowledge. OpsPilot can be integrated with web applications in the form of a chatbot and primarily provides the following capabilities: 1. Operations capability precipitation: By depositing operations knowledge, operations skills, and troubleshooting actions, when solving problems, it acts as a navigator and guides users to solve operations problems through dialogue. 2. Local knowledge Q&A: By indexing local knowledge and Internet knowledge and combining the capabilities of LLM, it answers users' various operations questions. 3. LLM chat: When the problem is beyond the scope of OpsPilot's ability to handle, it uses LLM's capabilities to solve various long-tail problems.

aimeos-typo3

Aimeos is a professional, full-featured, and high-performance e-commerce extension for TYPO3. It can be installed in an existing TYPO3 website within 5 minutes and can be adapted, extended, overwritten, and customized to meet specific needs.

For similar jobs

MaxKB

MaxKB is a knowledge base Q&A system based on the LLM large language model. MaxKB = Max Knowledge Base, which aims to become the most powerful brain of the enterprise.

crewAI

crewAI is a cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It provides a flexible and structured approach to AI collaboration, enabling users to define agents with specific roles, goals, and tools, and assign them tasks within a customizable process. crewAI supports integration with various LLMs, including OpenAI, and offers features such as autonomous task delegation, flexible task management, and output parsing. It is open-source and welcomes contributions, with a focus on improving the library based on usage data collected through anonymous telemetry.

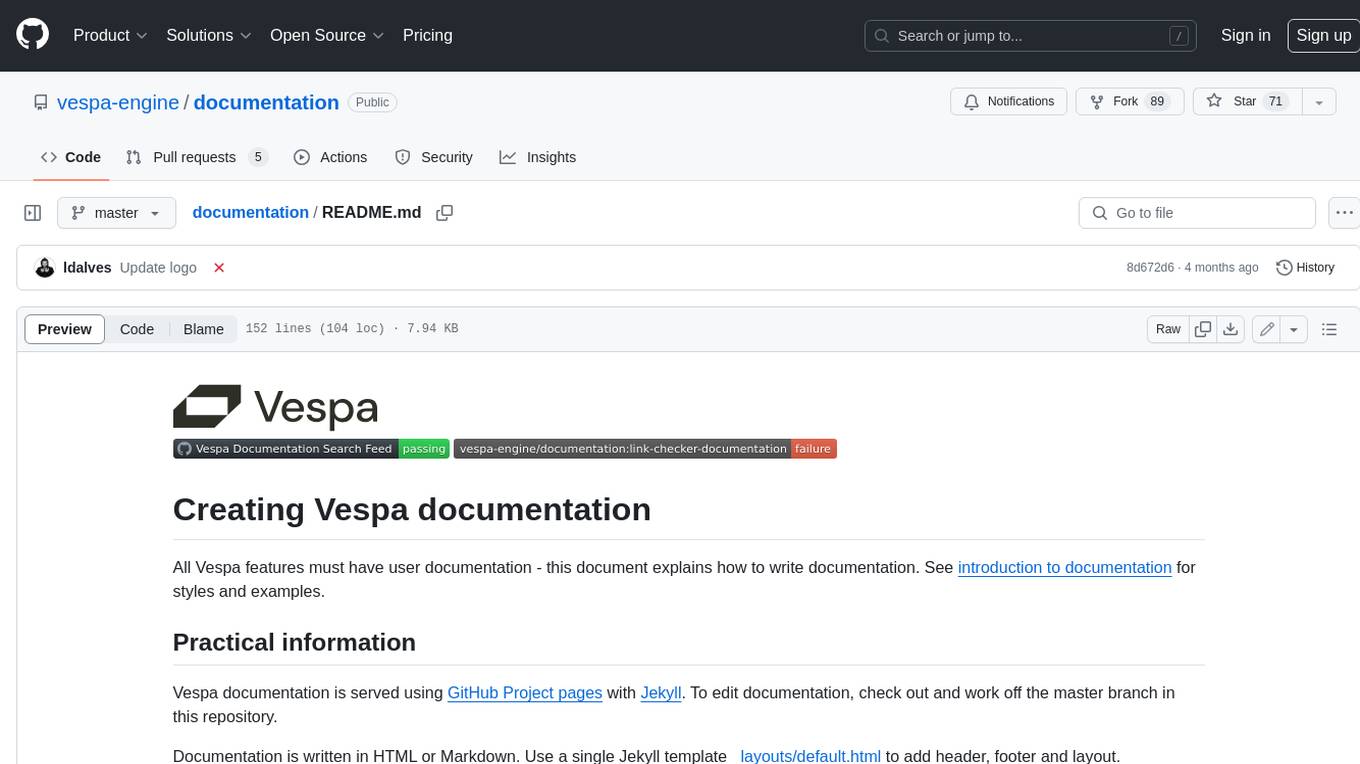

documentation

Vespa documentation is served using GitHub Project pages with Jekyll. To edit documentation, check out and work off the master branch in this repository. Documentation is written in HTML or Markdown. Use a single Jekyll template _layouts/default.html to add header, footer and layout. Install bundler, then $ bundle install $ bundle exec jekyll serve --incremental --drafts --trace to set up a local server at localhost:4000 to see the pages as they will look when served. If you get strange errors on bundle install try $ export PATH=“/usr/local/opt/[email protected]/bin:$PATH” $ export LDFLAGS=“-L/usr/local/opt/[email protected]/lib” $ export CPPFLAGS=“-I/usr/local/opt/[email protected]/include” $ export PKG_CONFIG_PATH=“/usr/local/opt/[email protected]/lib/pkgconfig” The output will highlight rendering/other problems when starting serving. Alternatively, use the docker image `jekyll/jekyll` to run the local server on Mac $ docker run -ti --rm --name doc \ --publish 4000:4000 -e JEKYLL_UID=$UID -v $(pwd):/srv/jekyll \ jekyll/jekyll jekyll serve or RHEL 8 $ podman run -it --rm --name doc -p 4000:4000 -e JEKYLL_ROOTLESS=true \ -v "$PWD":/srv/jekyll:Z docker.io/jekyll/jekyll jekyll serve The layout is written in denali.design, see _layouts/default.html for usage. Please do not add custom style sheets, as it is harder to maintain.

deep-seek

DeepSeek is a new experimental architecture for a large language model (LLM) powered internet-scale retrieval engine. Unlike current research agents designed as answer engines, DeepSeek aims to process a vast amount of sources to collect a comprehensive list of entities and enrich them with additional relevant data. The end result is a table with retrieved entities and enriched columns, providing a comprehensive overview of the topic. DeepSeek utilizes both standard keyword search and neural search to find relevant content, and employs an LLM to extract specific entities and their associated contents. It also includes a smaller answer agent to enrich the retrieved data, ensuring thoroughness. DeepSeek has the potential to revolutionize research and information gathering by providing a comprehensive and structured way to access information from the vastness of the internet.

basehub

JavaScript / TypeScript SDK for BaseHub, the first AI-native content hub. **Features:** * ✨ Infers types from your BaseHub repository... _meaning IDE autocompletion works great._ * 🏎️ No dependency on graphql... _meaning your bundle is more lightweight._ * 🌐 Works everywhere `fetch` is supported... _meaning you can use it anywhere._

discourse-chatbot

The discourse-chatbot is an original AI chatbot for Discourse forums that allows users to converse with the bot in posts or chat channels. Users can customize the character of the bot, enable RAG mode for expert answers, search Wikipedia, news, and Google, provide market data, perform accurate math calculations, and experiment with vision support. The bot uses cutting-edge Open AI API and supports Azure and proxy server connections. It includes a quota system for access management and can be used in RAG mode or basic bot mode. The setup involves creating embeddings to make the bot aware of forum content and setting up bot access permissions based on trust levels. Users must obtain an API token from Open AI and configure group quotas to interact with the bot. The plugin is extensible to support other cloud bots and content search beyond the provided set.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

KB-Builder

KB Builder is an open-source knowledge base generation system based on the LLM large language model. It utilizes the RAG (Retrieval-Augmented Generation) data generation enhancement method to provide users with the ability to enhance knowledge generation and quickly build knowledge bases based on RAG. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.