discourse-chatbot

An AI bot with RAG capability for Topics and Chat in Discourse, currently powered by OpenAI

Stars: 68

discourse-chatbot is the original AI chatbot plugin for Discourse forums. Users can converse with the bot in posts or chat channels, and admins can customise the bot's character. In RAG mode the bot can answer from forum content, search Wikipedia, news and Google, return market data, perform accurate maths calculations, and (experimentally) answer questions about images. It uses the OpenAI API and also supports Azure and proxy server connections. A quota system manages access, and the bot can run in RAG mode or basic bot mode. Setup involves creating embeddings so the bot is aware of forum content, and granting access through groups assigned to trust-level sets. You must obtain an API token from OpenAI and configure group quotas before anyone can interact with the bot. The plugin is extensible to support other cloud bots and content search beyond the provided set.

README:

- The original Discourse AI Chatbot!

- Converse with the bot in any Post or Chat Channel, one to one or with others!

- Customise the character of your bot to suit your forum!

  - Want it to sound like William Shakespeare, or Winston Churchill? Can do!

- The new "RAG mode" can now:

  - Search your whole* forum for answers so the bot can be an expert on the subject of your forum.

    - not just be aware of the information on the current Topic or Channel.

  - Search Wikipedia

  - Search current news*

  - Search Google*

  - Return current End Of Day market data for stocks.*

  - Do "complex" maths accurately (with no made up or "hallucinated" answers!)

- EXPERIMENTAL Vision support - the bot can see your pictures and answer questions on them! (turn `chatbot_support_vision` ON)

- Uses cutting edge OpenAI API and functions capability of their excellent, industry leading Large Language Models.

- Includes a special quota system to manage access to the bot: more trusted and/or paying members can have greater access to the bot!

- Also supports Azure and proxy server connections

- Use third party proxy processes to translate the calls to support alternative LLMs like Gemini e.g. this one

*sign-up for external (not affiliated) API services required. Links in settings.

There are two modes:

- RAG mode is very smart and knows facts posted on your forum.

- Basic bot mode can sometimes make mistakes, but is cheaper to run because it makes fewer calls to the Large Language Model.

This bot can be used in public spaces on your forum. To make the bot especially useful there is RAG mode (one setting per bot trust level). This is not set by default.

In RAG mode the bot is, by default, governed by the setting `chatbot embeddings strategy` (default `benchmark_user`) and is privy to all content a Trust Level 1 user would see. Thus, if interacted with in a public-facing Topic, there is a possibility the bot could "leak" information if you tend to gate content at the Trust Level 0 or 1 level via Category permissions. This level was chosen because, through experience, most sites usually do not gate sensitive content at low trust levels, but it depends on your specific needs.

For this mode, make sure you have at least one user with Trust Level 1 and no additional group membership beyond the automated groups (bear in mind the bot will then know everything a TL1 user would know and can share it). You can choose to lower `chatbot embeddings benchmark user trust level` if you have a Trust Level 0 user with no additional group membership beyond automated groups.

Alternatively:

- Switch `chatbot embeddings strategy` to `category` and populate `chatbot embeddings categories` with Categories you wish the bot to know about. (Be aware that if you add any private Categories, the bot will know about those, and anything the bot says in public, anywhere, might leak to less privileged users, so just be a bit careful about what you add.)

- only use the bot in `basic` mode (but the bot then won't see any posts)

- mitigate with moderation

You can see that this setup is a compromise. In order to make the bot useful it needs to be knowledgeable about the content on your site. Currently it is not possible for the bot to selectively read members-only content and share that only with members, which some admins might find limiting, but there is no easy way to solve that whilst the bot is able to talk in public. Contact me if you have special needs and would like to sponsor some work in this space. Bot permissioning with semantic search is a non-trivial problem. The system is currently optimised for speed. NB Private Messages are never read by the bot.

- Open AI API response can be slow at times on more advanced models due to high demand. However Chatbot supports GPT 3.5 too which is fast and responsive and perfectly capable.

- Is extensible and supporting other cloud bots is intended (hence the generic name for the plugin), but currently 'only' supports interaction with Open AI Large Language Models (LLM) such as GPT-4 natively. Please contact me if you wish to add additional bot types or want to support me to add more. PR welcome. Can already use proxy servers to access other services without code changes though!

- Is extensible to support the searching of other content beyond just the current set provided.

If you wish Chatbot to know about the content on your site, turn this setting ON:

chatbot_embeddings_enabled

Only necessary if you want to use the RAG type bot and ensure it is aware of the content on your forum, not just the current Topic.

Initially, we need to create the embeddings for all in-scope posts, so the bot can find forum information. This now happens in the background once this setting is enabled and you do not need to do anything.

This seeding job can take a period of days for very big sites.

This is determined by several settings:

- `chatbot_embeddings_strategy` which can be either "benchmark_user" or "category"

- `chatbot_embeddings_benchmark_user_trust_level` sets the relevant trust level for the former

- `chatbot_embeddings_categories` if `category` strategy is set, gives the bot access to consider all posts in specified Category.

If you change these settings, over time, the population of Embeddings will morph.
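The scope rules above can be pictured as a simple filter over posts. The following is only an illustrative sketch, not the plugin's code: `CandidatePost`, its `min_trust_level` field (a stand-in for Category permissions) and the `settings` hash are all hypothetical names invented for this example.

```ruby
# Hypothetical post record; min_trust_level stands in for Category permissions.
CandidatePost = Struct.new(:id, :category_id, :min_trust_level)

# Returns the ids of posts that would be in scope for embeddings under the
# given (hypothetical) settings hash.
def in_scope_post_ids(posts, settings)
  selected =
    case settings[:strategy]
    when "benchmark_user"
      # Everything a user at the benchmark trust level could see.
      posts.select { |post| post.min_trust_level <= settings[:benchmark_user_trust_level] }
    when "category"
      # Everything in the explicitly listed Categories.
      posts.select { |post| settings[:categories].include?(post.category_id) }
    else
      raise ArgumentError, "unknown strategy: #{settings[:strategy]}"
    end
  selected.map(&:id)
end

posts = [
  CandidatePost.new(1, 10, 0),
  CandidatePost.new(2, 11, 1),
  CandidatePost.new(3, 12, 2)
]

p in_scope_post_ids(posts, strategy: "benchmark_user", benchmark_user_trust_level: 1)
p in_scope_post_ids(posts, strategy: "category", categories: [12])
```

Switching strategy changes which posts qualify, which is why the set of stored embeddings shifts over time after a settings change.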

Enter the container:

./launcher enter app

and run the following rake command:

rake chatbot:refresh_embeddings[1]

which at present will run twice for an unknown reason (sorry! feel free to PR), but the [1] ensures the second run only adds missing embeddings (i.e. none immediately after the first run), so this is somewhat moot.

In the unlikely event you get rate limited by OpenAI (unlikely!) you can complete the embeddings by doing this:

rake chatbot:refresh_embeddings[1,1]

which will fill in the missing ones (so nothing lost from the error) but will continue more cautiously putting a 1 second delay between each call to Open AI.
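As a rough illustration of the "fill in the missing ones, but more cautiously" behaviour described above, here is a minimal sketch of a throttled loop. It is not the rake task's implementation: `each_with_delay` is a hypothetical helper, and the block stands in for the real embedding call.

```ruby
# Hypothetical helper: yields each id to the block, sleeping `delay_seconds`
# between calls (not before the first one). Ids whose block raises are
# collected and returned so a later run can retry only those.
def each_with_delay(ids, delay_seconds: 1)
  failed = []
  ids.each_with_index do |id, index|
    sleep(delay_seconds) if index.positive? && delay_seconds.positive?
    begin
      yield id
    rescue StandardError
      failed << id
    end
  end
  failed
end

# Stand-in for the real embedding call: pretend post 3 hits a rate limit.
# The delay is set to 0 here only so the example runs instantly.
failed = each_with_delay([1, 2, 3, 4], delay_seconds: 0) do |post_id|
  raise "rate limited" if post_id == 3
end
puts "still missing: #{failed.inspect}"
```

Because failures are recorded rather than aborting the run, a second pass only needs to revisit the ids that are still missing.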

Compared to bot interactions, embeddings are not expensive to create, but do watch your usage on your Open AI dashboard in any case.

NB Embeddings are only created for Posts and only those Posts for which a Trust Level One user would have access. This seemed like a reasonable compromise. It will not create embeddings for posts from Trust Level 2+ only accessible content.

@37Rb writes: "Here’s a SQL query I’m using with the Data Explorer plugin to monitor & verify embeddings… in case it helps anyone else."

SELECT e.id, e.post_id AS post, p.topic_id AS topic, p.post_number,

p.topic_id, e.created_at, e.updated_at, p.deleted_at AS post_deleted

FROM chatbot_post_embeddings e LEFT JOIN posts p ON e.post_id = p.id

You might get an error like this:

OpenAI HTTP Error (spotted in ruby-openai 6.3.1): {"error"=>{"message"=>"This model's maximum context length is 8192 tokens, however you requested 8528 tokens (8528 in your prompt; 0 for the completion). Please reduce your prompt; or completion length.", "type"=>"invalid_request_error", "param"=>nil, "code"=>nil}}

This is how you resolve it ...

As per your error message, the embedding model has a limit of:

8192 tokens

however you requested 8528

You need to drop the current value of this setting:

chatbot_open_ai_embeddings_char_limit:

by about 4 x the diff and see if it works (a token is roughly 4 characters).

So, in this example, 4 x (8528 - 8192) = 1344

So drop chatbot_open_ai_embeddings_char_limit current value by 1500 to be safe. However, the default value was set according to a lot of testing for English Posts, but for other languages it may need lowering.

This will then cut off more text, so fewer tokens are requested, and hopefully the embedding will go through. If not, you will need to confirm the difference and reduce it further accordingly. Eventually it will be low enough that you don’t need to look at it again.
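The arithmetic above is easy to wrap in a small helper if you hit this error repeatedly. This is a sketch only: `suggested_char_reduction` and the round-up-to-500 safety margin are assumptions made for illustration, and the 4 characters per token figure is the rough rule of thumb quoted above.

```ruby
CHARS_PER_TOKEN = 4 # rough rule of thumb quoted above

# Returns how many characters to take off the current char limit, rounded up
# to the next multiple of `round_to` as a safety margin. Returns 0 when the
# request was already within the model's limit.
def suggested_char_reduction(requested_tokens, max_tokens, round_to: 500)
  excess_tokens = requested_tokens - max_tokens
  return 0 unless excess_tokens.positive?

  excess_chars = excess_tokens * CHARS_PER_TOKEN
  (excess_chars.to_f / round_to).ceil * round_to
end

# 8528 - 8192 = 336 excess tokens, 336 * 4 = 1344 characters, rounded up to 1500.
puts suggested_char_reduction(8528, 8192)
```

You would then subtract the returned value from the current `chatbot_open_ai_embeddings_char_limit`.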

You don't need to do anything but change the setting: the background job will take care of things, if gradually.

If you really want to speed the process up, do:

- Change the setting `chatbot_open_ai_embeddings_model` to your new preferred model

- It's best to first delete all your current embeddings:

  - go into the container `./launcher enter app`

  - enter the rails console `rails c`

  - run `::DiscourseChatbot::PostEmbedding.delete_all`

  - `exit` (to return to root within container)

- run `rake chatbot:refresh_embeddings[1]`

- if for any Open AI side reason that fails part way through, run it again until you get to 100%

- the new model is known to be more accurate, so you might have to drop `chatbot_forum_search_function_similarity_threshold` or you might get no results :). I dropped my default value from `0.8` to `0.6`, but your mileage may vary.
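To see why a threshold that is too high can return nothing, here is a toy sketch of threshold filtering. It assumes the threshold is a minimum cosine similarity between the query embedding and each post embedding; the README does not state which metric the plugin uses, and the two-dimensional vectors and helper names below are purely illustrative.

```ruby
# Cosine similarity between two equal-length numeric vectors.
def cosine_similarity(a, b)
  dot = a.zip(b).sum { |x, y| x * y }
  norm_a = Math.sqrt(a.sum { |x| x * x })
  norm_b = Math.sqrt(b.sum { |x| x * x })
  dot / (norm_a * norm_b)
end

# Returns the ids of candidates whose similarity to the query meets the threshold.
def matches_above(query, candidates, threshold)
  candidates.select { |_id, vector| cosine_similarity(query, vector) >= threshold }.keys
end

query = [1.0, 0.0]
candidates = {
  101 => [1.0, 0.0], # same direction as the query, similarity 1.0
  102 => [0.7, 0.7], # 45 degrees away, similarity about 0.707
  103 => [0.0, 1.0]  # orthogonal, similarity 0.0
}

p matches_above(query, candidates, 0.8) # only post 101 passes
p matches_above(query, candidates, 0.6) # posts 101 and 102 pass
```

Different embedding models spread their similarity scores differently, so a threshold tuned for one model may need adjusting for another.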

Take a moment to read through the entire set of Plugin settings. The chatbot bot type setting is key, and there is one for each chatbot "Trust Level":

RAG mode is superior but will make more calls to the API, potentially increasing cost. That said, the reduction in its propensity to ultimately output 'hallucinations' may facilitate you being able to drop down from GPT-4 to GPT-3.5 and you may end up spending less despite the significant increase in usefulness and reliability of the output. GPT 3.5 is also a better fit for the Agent type based on response times. A potential win-win! Experiment!

For Chatbot to work in Chat you must have Chat enabled.

The bot's speed of response is governed mostly by a setting, `chatbot_reply_job_time_delay`, over which you have discretion.

The intention of having this setting is to:

- protect you from reaching rate limits of Open AI

- protect your site from users that would like to spam the bot and cost you money.

It is now default '1' second and can now be reduced to zero 🏎️ , but be aware of the above risks.

Set this to zero and the bot, even in 'agent' mode, becomes a lot more 'snappy'.

Obviously this can be a bit artificial and no real person would actually type that fast ... but set it to your taste and wallet size.

NB I cannot directly control the speed of response of Open AI's API, and the general rule is that the more sophisticated the model you set, the slower the response will usually be. So GPT 3.5 is much faster than GPT 4 ... although this may change with the newer GPT 4 Turbo model.

You must get a token from https://platform.openai.com/ in order to use the current bot. A default language model is set (one of the most sophisticated), but you can try a cheaper alternative, the list is here

There is an automated part of the setup: upon addition to a Discourse, the plugin currently sets up an AI bot user with the following attributes:

- Name: 'Chatbot'

- User Id: -4

- Bio: "Hi, I’m not a real person. I’m a bot that can discuss things with you. Don't take me too seriously. Sometimes, I'm even right about stuff!"

- Group Name: "ai_bot_group"

- Group Full Name: "AI Bots"

You can edit the name, avatar and bio (see locale string in admin -> customize -> text) as you wish but make it easy to mention.

Initially no-one will have access to the bot, not even staff.

Calling the Open AI API is not free after an initial free allocation has expired! So, I've implemented a quota system to keep this under control, keep costs down and prevent abuse. The cost is not crazy with these small interactions, but it may add up if it gets popular. You can read more about OpenAI pricing on their pricing page.

In order to interact with the bot you must belong to a group that has been added to one of the three trusted sets of groups: the low, medium and high trust group sets. You can modify the number of allowed interactions per week for each trusted group set in the corresponding settings.

You must populate the groups too. That configuration is entirely up to you. They start out blank so initially no-one will have access to the bot. There are corresponding quotas in three additional settings.

Note the user gets the quota based on the highest trusted group they are a member of.
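The "highest trusted group wins" rule can be sketched as follows. The group names, quota numbers and the `TRUST_SETS` structure are all hypothetical and exist only for this example; the real values live in the plugin settings.

```ruby
# Hypothetical configuration: group names per trust set and weekly quotas.
TRUST_SETS = {
  high:   { groups: ["patrons"],       weekly_quota: 100 },
  medium: { groups: ["trust_level_2"], weekly_quota: 30 },
  low:    { groups: ["trust_level_1"], weekly_quota: 5 }
}.freeze

# Returns the quota of the highest trust set the user belongs to, or 0 when
# the user is in none of the configured groups (no access to the bot).
def weekly_quota_for(user_groups)
  %i[high medium low].each do |level|
    set = TRUST_SETS.fetch(level)
    return set[:weekly_quota] if (set[:groups] & user_groups).any?
  end
  0
end

puts weekly_quota_for(["trust_level_1", "patrons"]) # 100
puts weekly_quota_for(["trust_level_1"])            # 5
puts weekly_quota_for(["staff"])                    # 0
```

Note the last case: with this rule even staff get no access until one of their groups is added to a trust set.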

There are several locale text "settings" that influence what the bot receives and how the bot responds.

The most important one you should consider changing is the bot's system prompt. This is sent every time you speak to the bot.

For the basic bot, you can try a system prompt like:

’You are an extreme Formula One fan, you love everything to do with motorsport and its high octane levels of excitement’ instead of the default.

(For the agent bot you must keep everything after "You are a helpful assistant." or you may break the agent behaviour. Reset it if you run into problems. Again experiment!)

Try one that is most appropriate for the subject matter of your forum. Be creative!

Changing these locale strings can make the bot behave very differently but cannot be amended on the fly. I would recommend changing only the system prompt as the others play an important role in agent behaviour or providing information on who said what to the bot.

NB In Topics, the first Post and Topic Title are sent in addition to the window of Posts (determined by the lookback setting) to give the bot more context.

You can edit these strings in Admin -> Customize -> Text under chatbot.prompt.

The bot supports Chat Messages and Topic Posts, including Private Messages (if configured).

You can prompt the bot to respond by replying to it, or @ mentioning it. You can set how far the bot looks behind to get context for a response. The bigger the value the more costly will be each call.
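As noted earlier, in Topics the Topic Title and first Post are sent along with the lookback window of recent Posts. A minimal sketch of that assembly is shown below. It is not the plugin's code: `TopicPost`, `build_context`, the line format, and the choice not to repeat the first post when it already falls inside the window are assumptions for illustration.

```ruby
# Hypothetical post record for the example.
TopicPost = Struct.new(:number, :author, :text)

# Builds context lines: the title, the first post, then the last `lookback`
# posts. The first post is not repeated if it is already inside the window.
def build_context(title, posts, lookback)
  return ["Topic: #{title}"] if posts.empty?

  window = posts.last(lookback)
  first = posts.first
  selected = window.include?(first) ? window : [first] + window
  ["Topic: #{title}"] + selected.map { |post| "#{post.author}: #{post.text}" }
end

posts = (1..6).map { |n| TopicPost.new(n, "user#{n}", "post #{n}") }
puts build_context("Tyre choices", posts, 3)
```

With six posts and a lookback of 3, the bot would receive the title, post 1 and posts 4 to 6. A larger lookback sends more lines per call, which is why it costs more.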

There's a floating quick chat button that connects you immediately to the bot. This can be disabled in settings. You can choose whether to load the bot into a 1 to 1 chat or a Personal Message.

Now you can choose your preferred icon (default 🤖 ) or if setting left blank, will pick up the bot user's avatar! 😎

And remember, you can also customise the text that appears when it is expanded by editing the locale text using Admin -> Customize -> Text chatbot.

The only step necessary to remove the plugin is to delete the clone statement from your app.yml.

I'm not responsible for what the bot responds with. Consider the plugin to be at Beta stage and things could go wrong. It will improve with feedback. But not necessarily the bot's response 🤣 Please understand the pros and cons of an LLM, what they are and aren't capable of, and their limitations. They are very good at creating convincing text but can often be factually wrong.

Whatever you write on your forum may get forwarded to OpenAI as part of the bot's scan of the last few posts once it is prompted to reply (obviously this is restricted to the current Topic or Chat Channel). Whilst it almost certainly won't be incorporated into their pre-trained models, they will use the data in their analytics and logging. Be sure to add this fact into your forum's TOS & privacy statements. Related links: https://openai.com/policies/terms-of-use, https://openai.com/policies/privacy-policy, https://platform.openai.com/docs/data-usage-policies

Open AI made a statement about Copyright here: https://help.openai.com/en/articles/5008634-will-openai-claim-copyright-over-what-outputs-i-generate-with-the-api

Alternative AI tools for discourse-chatbot

Similar Open Source Tools

aicodeguide

AI Code Guide is a comprehensive guide that covers everything you need to know about using AI to help you code or even code for you. It provides insights into the changing landscape of coding with AI, new tools, editors, and practices. The guide aims to consolidate information on AI coding and AI-assisted code generation in one accessible place. It caters to both experienced coders looking to leverage AI tools and beginners interested in 'vibe coding' to build software products. The guide covers various topics such as AI coding practices, different ways to use AI in coding, recommended resources, tools for AI coding, best practices for structuring prompts, and tips for using specific tools like Claude Code.

AnnA_Anki_neuronal_Appendix

AnnA is a Python script designed to create filtered decks in optimal review order for Anki flashcards. It uses Machine Learning / AI to ensure semantically linked cards are reviewed far apart. The script helps users manage their daily reviews by creating special filtered decks that prioritize reviewing cards that are most different from the rest. It also allows users to reduce the number of daily reviews while increasing retention and automatically identifies semantic neighbors for each note.

yet-another-applied-llm-benchmark

Yet Another Applied LLM Benchmark is a collection of diverse tests designed to evaluate the capabilities of language models in performing real-world tasks. The benchmark includes tests such as converting code, decompiling bytecode, explaining minified JavaScript, identifying encoding formats, writing parsers, and generating SQL queries. It features a dataflow domain-specific language for easily adding new tests and has nearly 100 tests based on actual scenarios encountered when working with language models. The benchmark aims to assess whether models can effectively handle tasks that users genuinely care about.

local-chat

LocalChat is a simple, easy-to-set-up, and open-source local AI chat tool that allows users to interact with generative language models on their own computers without transmitting data to a cloud server. It provides a chat-like interface for users to experience ChatGPT-like behavior locally, ensuring GDPR compliance and data privacy. Users can download LocalChat for macOS, Windows, or Linux to chat with open-weight generative language models.

LLocalSearch

LLocalSearch is a completely locally running search aggregator using LLM Agents. The user can ask a question and the system will use a chain of LLMs to find the answer. The user can see the progress of the agents and the final answer. No OpenAI or Google API keys are needed.

aiohomekit

aiohomekit is a Python library that implements the HomeKit protocol for controlling HomeKit accessories using asyncio. It is primarily used with Home Assistant, targeting the same versions of Python and following their code standards. The library is still under development and does not offer API guarantees yet. It aims to match the behavior of real HAP controllers, even when not strictly specified, and works around issues like JSON formatting, boolean encoding, header sensitivity, and TCP packet splitting. aiohomekit is primarily tested with Philips Hue and Eve Extend bridges via Home Assistant, but is known to work with many more devices. It does not support BLE accessories and is intended for client-side use only.

modelbench

ModelBench is a tool for running safety benchmarks against AI models and generating detailed reports. It is part of the MLCommons project and is designed as a proof of concept to aggregate measures, relate them to specific harms, create benchmarks, and produce reports. The tool requires LlamaGuard for evaluating responses and a TogetherAI account for running benchmarks. Users can install ModelBench from GitHub or PyPI, run tests using Poetry, and create benchmarks by providing necessary API keys. The tool generates static HTML pages displaying benchmark scores and allows users to dump raw scores and manage cache for faster runs. ModelBench is aimed at enabling users to test their own models and create tests and benchmarks.

dota2ai

The Dota2 AI Framework project aims to provide a framework for creating AI bots for Dota2, focusing on coordination and teamwork. It offers a LUA sandbox for scripting, allowing developers to code bots that can compete in standard matches. The project acts as a proxy between the game and a web service through JSON objects, enabling bots to perform actions like moving, attacking, casting spells, and buying items. It encourages contributions and aims to enhance the AI capabilities in Dota2 modding.

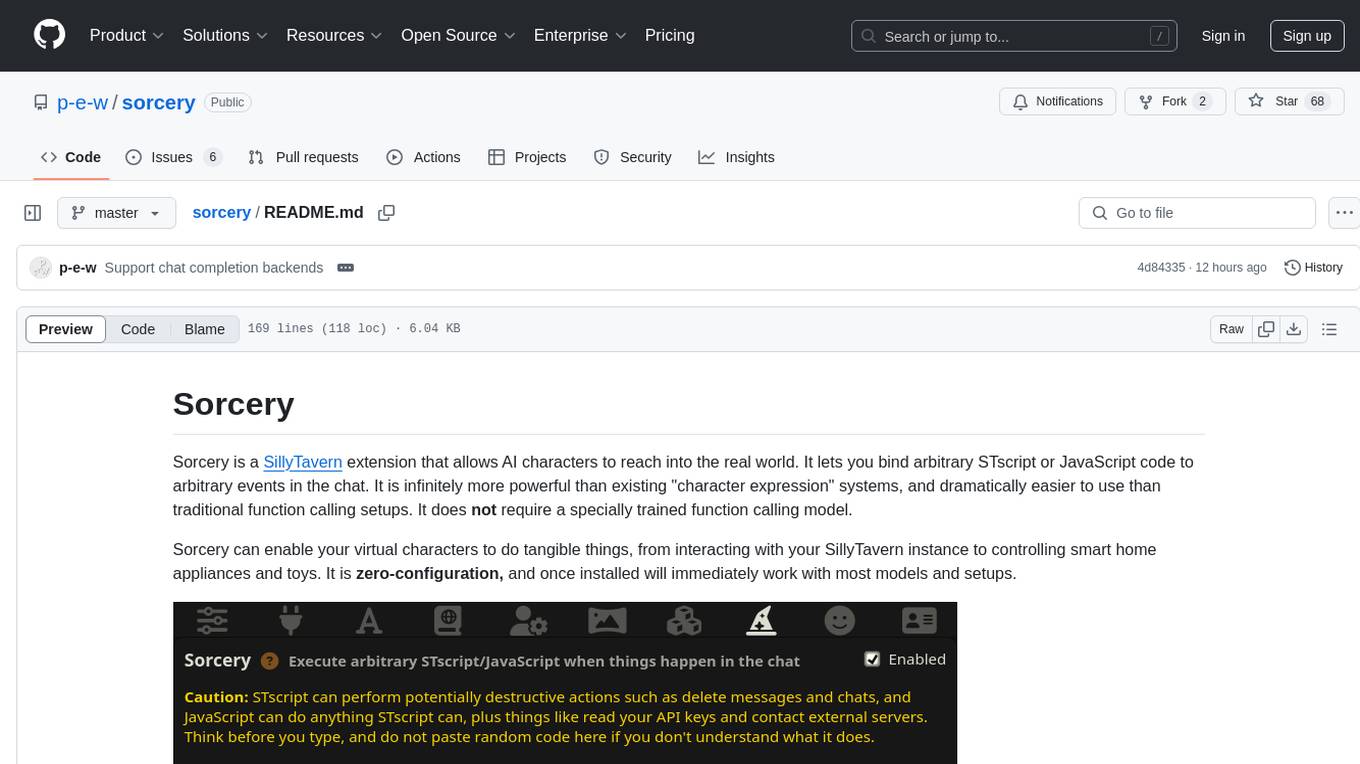

sorcery

Sorcery is a SillyTavern extension that allows AI characters to interact with the real world by executing user-defined scripts at specific events in the chat. It is easy to use and does not require a specially trained function calling model. Sorcery can be used to control smart home appliances, interact with virtual characters, and perform various tasks in the chat environment. It works by injecting instructions into the system prompt and intercepting markers to run associated scripts, providing a seamless user experience.

WilmerAI

WilmerAI is a middleware system designed to process prompts before sending them to Large Language Models (LLMs). It categorizes prompts, routes them to appropriate workflows, and generates manageable prompts for local models. It acts as an intermediary between the user interface and LLM APIs, supporting multiple backend LLMs simultaneously. WilmerAI provides API endpoints compatible with OpenAI API, supports prompt templates, and offers flexible connections to various LLM APIs. The project is under heavy development and may contain bugs or incomplete code.

ClipboardConqueror

Clipboard Conqueror is a multi-platform omnipresent copilot alternative. Currently requiring a kobold united or openAI compatible back end, this software brings powerful LLM based tools to any text field, the universal copilot you deserve. It simply works anywhere. No need to sign in, no required key. Provided you are using local AI, CC is a data secure alternative integration provided you trust whatever backend you use. *Special thank you to the creators of KoboldAi, KoboldCPP, llamma, openAi, and the communities that made all this possible to figure out.

ai-rag-chat-evaluator

This repository contains scripts and tools for evaluating a chat app that uses the RAG architecture. It provides parameters to assess the quality and style of answers generated by the chat app, including system prompt, search parameters, and GPT model parameters. The tools facilitate running evaluations, with examples of evaluations on a sample chat app. The repo also offers guidance on cost estimation, setting up the project, deploying a GPT-4 model, generating ground truth data, running evaluations, and measuring the app's ability to say 'I don't know'. Users can customize evaluations, view results, and compare runs using provided tools.

gpdb

Greenplum Database (GPDB) is an advanced, fully featured, open source data warehouse, based on PostgreSQL. It provides powerful and rapid analytics on petabyte scale data volumes. Uniquely geared toward big data analytics, Greenplum Database is powered by the world’s most advanced cost-based query optimizer delivering high analytical query performance on large data volumes.

reverse-engineering-assistant

ReVA (Reverse Engineering Assistant) is a project aimed at building a disassembler agnostic AI assistant for reverse engineering tasks. It utilizes a tool-driven approach, providing small tools to the user to empower them in completing complex tasks. The assistant is designed to accept various inputs, guide the user in correcting mistakes, and provide additional context to encourage exploration. Users can ask questions, perform tasks like decompilation, class diagram generation, variable renaming, and more. ReVA supports different language models for online and local inference, with easy configuration options. The workflow involves opening the RE tool and program, then starting a chat session to interact with the assistant. Installation includes setting up the Python component, running the chat tool, and configuring the Ghidra extension for seamless integration. ReVA aims to enhance the reverse engineering process by breaking down actions into small parts, including the user's thoughts in the output, and providing support for monitoring and adjusting prompts.

roam-extension-live-ai-assistant

Live AI is an AI Assistant tailor-made for Roam, providing access to the latest LLMs directly in Roam blocks. Users can interact with AI to extend their thinking, explore their graph, and chat with structured responses. The tool leverages Roam's features to write prompts, query graph parts, and chat with content. Users can dictate, translate, transform, and enrich content easily. Live AI supports various tasks like audio and video analysis, PDF reading, image generation, and web search. The tool offers features like Chat panel, Live AI context menu, and Ask Your Graph agent for versatile usage. Users can control privacy levels, compare AI models, create custom prompts, and apply styles for response formatting. Security concerns are addressed by allowing users to control data sent to LLMs.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

- An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).

- A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.

- Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.

- Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

- **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).

- **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).

- **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.