compose-for-agents

Build and run AI agents using Docker Compose. A collection of ready-to-use examples for orchestrating open-source LLMs, tools, and agent runtimes.

Stars: 522

Compose for Agents is a tool that allows users to run demos using OpenAI models or locally with Docker Model Runner. The tool supports multi-agent and single-agent systems for various tasks such as fact-checking, summarizing GitHub issues, marketing strategy, SQL queries, travel planning, and more. Users can configure the demos by creating a `.mcp.env` file, supplying required tokens, and running `docker compose up --build`. Additionally, users can utilize OpenAI models by creating a `secret.openai-api-key` file and starting the project with the OpenAI configuration.

README:

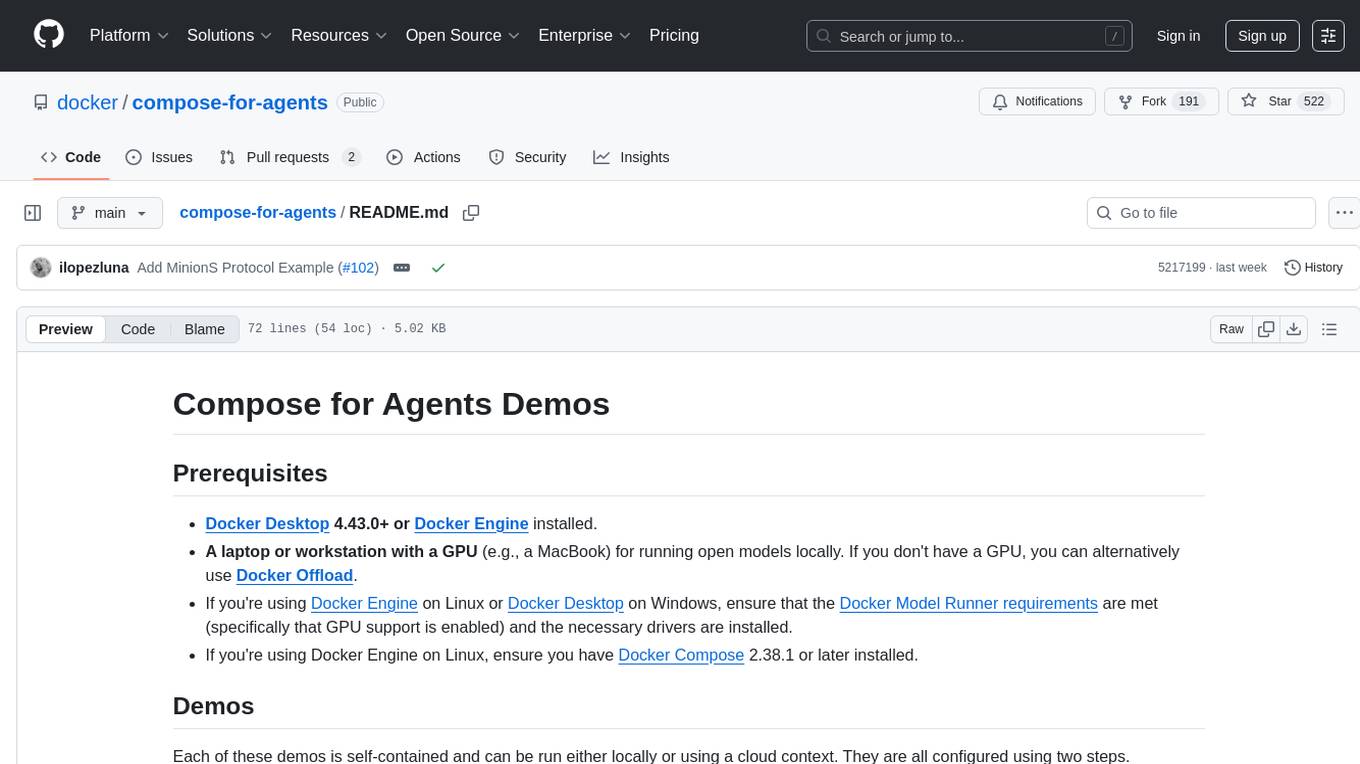

- Docker Desktop 4.43.0+ or Docker Engine installed.

- A laptop or workstation with a GPU (e.g., a MacBook) for running open models locally. If you don't have a GPU, you can alternatively use Docker Offload.

- If you're using Docker Engine on Linux or Docker Desktop on Windows, ensure that the Docker Model Runner requirements are met (specifically that GPU support is enabled) and the necessary drivers are installed.

- If you're using Docker Engine on Linux, ensure you have Docker Compose 2.38.1 or later installed.

Each of these demos is self-contained and can be run either locally or using a cloud context. They are all configured using two steps.

- change directory to the root of the demo project

- create a

.mcp.envfile from themcp.env.examplefile (if it exists, otherwise the demo doesn't need any secrets) and supply the required MCP tokens - run

docker compose up --build

The demos support using OpenAI models instead of running models locally with Docker Model Runner. To use OpenAI:

-

Create a

secret.openai-api-keyfile with your OpenAI API key:sk-... -

Start the project with the OpenAI configuration:

docker compose -f compose.yaml -f compose.openai.yaml up

| Demo | Agent System | Models | MCPs | project | compose |

|---|---|---|---|---|---|

| A2A Multi-Agent Fact Checker | Multi-Agent | OpenAI | duckduckgo | ./a2a | compose.yaml |

| Agno agent that summarizes GitHub issues | Multi-Agent | qwen3(local) | github-official | ./agno | compose.yaml |

| Vercel AI-SDK Chat-UI for mixing MCPs and Model | Single Agent | llama3.2(local), qwen3(local) | wikipedia-mcp, brave, resend(email) | ./vercel | compose.yaml |

| CrewAI Marketing Strategy Agent | Multi-Agent | qwen3(local) | duckduckgo | ./crew-ai | compose.yaml |

| ADK Multi-Agent Fact Checker | Multi-Agent | gemma3-qat(local) | duckduckgo | ./adk | compose.yaml |

| ADK & Cerebras Golang Experts | Multi-Agent | unsloth/qwen3-gguf:4B-UD-Q4_K_XL & ai/qwen2.5:latest (DMR local), llama-4-scout-17b-16e-instruct (Cerebras remote) | ./adk-cerebras | compose.yml | |

| LangGraph SQL Agent | Single Agent | qwen3(local) | postgres | ./langgraph | compose.yaml |

| Embabel Travel Agent | Multi-Agent | qwen3, Claude3.7, llama3.2, jimclark106/all-minilm:23M-F16 | brave, github-official, wikipedia-mcp, weather, google-maps, airbnb | ./embabel | compose.yaml and compose.dmr.yaml |

| Spring AI Brave Search | Single Agent | none | duckduckgo | ./spring-ai | compose.yaml |

| ADK Sock Store Agent | Multi-Agent | qwen3 | MongoDb, Brave, Curl, | ./adk-sock-shop | compose.yaml |

| Langchaingo DuckDuckGo Search | Single Agent | gemma3 | duckduckgo | ./langchaingo | compose.yaml |

| MinionS Cost-Efficient Local-Remote Collaboration | Local-Remote Protocol | qwen3(local), gpt-4o(remote) | ./minions | docker-compose.minions.yml |

This repository is dual-licensed under the Apache License 2.0 or the MIT License. You may choose either license to govern your use of the contributions made by Docker in this repository.

ℹ️ Note: Each example under may have its own

LICENSEfile. These are provided to reflect any third-party licensing requirements that apply to that specific example, and they must be respected accordingly.

SPDX-License-Identifier: Apache-2.0 OR MIT

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for compose-for-agents

Similar Open Source Tools

compose-for-agents

Compose for Agents is a tool that allows users to run demos using OpenAI models or locally with Docker Model Runner. The tool supports multi-agent and single-agent systems for various tasks such as fact-checking, summarizing GitHub issues, marketing strategy, SQL queries, travel planning, and more. Users can configure the demos by creating a `.mcp.env` file, supplying required tokens, and running `docker compose up --build`. Additionally, users can utilize OpenAI models by creating a `secret.openai-api-key` file and starting the project with the OpenAI configuration.

FFAIVideo

FFAIVideo is a lightweight node.js project that utilizes popular AI LLM to intelligently generate short videos. It supports multiple AI LLM models such as OpenAI, Moonshot, Azure, g4f, Google Gemini, etc. Users can input text to automatically synthesize exciting video content with subtitles, background music, and customizable settings. The project integrates Microsoft Edge's online text-to-speech service for voice options and uses Pexels website for video resources. Installation of FFmpeg is essential for smooth operation. Inspired by MoneyPrinterTurbo, MoneyPrinter, and MsEdgeTTS, FFAIVideo is designed for front-end developers with minimal dependencies and simple usage.

GenAIComps

GenAIComps is an initiative aimed at building enterprise-grade Generative AI applications using a microservice architecture. It simplifies the scaling and deployment process for production, abstracting away infrastructure complexities. GenAIComps provides a suite of containerized microservices that can be assembled into a mega-service tailored for real-world Enterprise AI applications. The modular approach of microservices allows for independent development, deployment, and scaling of individual components, promoting modularity, flexibility, and scalability. The mega-service orchestrates multiple microservices to deliver comprehensive solutions, encapsulating complex business logic and workflow orchestration. The gateway serves as the interface for users to access the mega-service, providing customized access based on user requirements.

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

rubra

Rubra is a collection of open-weight large language models enhanced with tool-calling capability. It allows users to call user-defined external tools in a deterministic manner while reasoning and chatting, making it ideal for agentic use cases. The models are further post-trained to teach instruct-tuned models new skills and mitigate catastrophic forgetting. Rubra extends popular inferencing projects for easy use, enabling users to run the models easily.

TinyLLM

TinyLLM is a project that helps build a small locally hosted language model with a web interface using consumer-grade hardware. It supports multiple language models, builds a local OpenAI API web service, and serves a Chatbot web interface with customizable prompts. The project requires specific hardware and software configurations for optimal performance. Users can run a local language model using inference servers like vLLM, llama-cpp-python, and Ollama. The Chatbot feature allows users to interact with the language model through a web-based interface, supporting features like summarizing websites, displaying news headlines, stock prices, weather conditions, and using vector databases for queries.

vscode-unify-chat-provider

The 'vscode-unify-chat-provider' repository is a tool that integrates multiple LLM API providers into VS Code's GitHub Copilot Chat using the Language Model API. It offers free tier access to mainstream models, perfect compatibility with major LLM API formats, deep adaptation to API features, best performance with built-in parameters, out-of-the-box configuration, import/export support, great UX, and one-click use of various models. The tool simplifies model setup, migration, and configuration for users, providing a seamless experience within VS Code for utilizing different language models.

dl_model_infer

This project is a c++ version of the AI reasoning library that supports the reasoning of tensorrt models. It provides accelerated deployment cases of deep learning CV popular models and supports dynamic-batch image processing, inference, decode, and NMS. The project has been updated with various models and provides tutorials for model exports. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend reasoning classes, post-processing, pre-processing, and target detection and tracking. Speed tests have been conducted on various models, and onnx downloads are available for different models.

ai-agents-for-beginners

AI Agents for Beginners is a course that covers the fundamentals of building AI Agents. It consists of 10 lessons with code examples using Azure AI Foundry and GitHub Model Catalogs. The course utilizes AI Agent frameworks and services from Microsoft, such as Azure AI Agent Service, Semantic Kernel, and AutoGen. Learners can access written lessons, Python code samples, and additional learning resources for each lesson. The course encourages contributions and suggestions from the community and provides multi-language support for learners worldwide.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

awesome-generative-ai-data-scientist

A curated list of 50+ resources to help you become a Generative AI Data Scientist. This repository includes resources on building GenAI applications with Large Language Models (LLMs), and deploying LLMs and GenAI with Cloud-based solutions.

RustySEO

RustySEO is a free, modern SEO/GEO toolkit designed to help users crawl and analyze websites and server logs without crawl limits. It is an all-in-one, cross-platform marketing toolkit for comprehensive SEO & GEO analysis, providing actionable insights into marketing and SEO strategies. The tool offers features such as shallow & deep crawl, technical diagnostics, on-page SEO analysis, dashboards, reporting, topic and keyword generators, AI chatbot, crawl history, image conversion and optimization, and more. RustySEO aims to be a robust, free alternative to costly commercial SEO tools, with integrations like Google PageSpeed Insights, Google Gemini, and more.

opik

Comet Opik is a repository containing two main services: a frontend and a backend. It provides a Python SDK for easy installation. Users can run the full application locally with minikube, following specific installation prerequisites. The repository structure includes directories for applications like Opik backend, with detailed instructions available in the README files. Users can manage the installation using simple k8s commands and interact with the application via URLs for checking the running application and API documentation. The repository aims to facilitate local development and testing of Opik using Kubernetes technology.

LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

willow-inference-server

Willow Inference Server (WIS) is a highly optimized language inference server implementation focused on enabling performant, cost-effective self-hosting of state-of-the-art models for speech and language tasks. It supports ASR and TTS tasks, runs on CUDA with low-end device support, and offers various transport options like REST, WebRTC, and Web Sockets. WIS is memory optimized, leverages CTranslate2 for Whisper support, and enables custom TTS voices. The server automatically detects available CUDA VRAM and optimizes functionality accordingly. Users can programmatically select Whisper models and parameters for each request to balance speed and quality.

llm-datasets

LLM Datasets is a repository containing high-quality datasets, tools, and concepts for LLM fine-tuning. It provides datasets with characteristics like accuracy, diversity, and complexity to train large language models for various tasks. The repository includes datasets for general-purpose, math & logic, code, conversation & role-play, and agent & function calling domains. It also offers guidance on creating high-quality datasets through data deduplication, data quality assessment, data exploration, and data generation techniques.

For similar tasks

compose-for-agents

Compose for Agents is a tool that allows users to run demos using OpenAI models or locally with Docker Model Runner. The tool supports multi-agent and single-agent systems for various tasks such as fact-checking, summarizing GitHub issues, marketing strategy, SQL queries, travel planning, and more. Users can configure the demos by creating a `.mcp.env` file, supplying required tokens, and running `docker compose up --build`. Additionally, users can utilize OpenAI models by creating a `secret.openai-api-key` file and starting the project with the OpenAI configuration.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.