ProphetFuzz

[CCS'24] An LLM-based, fully automated fuzzing tool for option combination testing.


ProphetFuzz is a fully automated fuzzing tool based on Large Language Models (LLMs) that tests high-risk option combinations using only documentation. It predicts and fuzzes high-risk option combinations without manual intervention. The tool consists of components for parsing documentation, extracting constraints, predicting combinations, assembling commands, generating files, and conducting fuzzing. ProphetFuzz has uncovered numerous vulnerabilities in various programs, and confirmed vulnerabilities have been assigned CVE numbers. The project credits Dawei Wang and Geng Zhou for their contributions.

README:

This repository contains the implementation of the paper titled "ProphetFuzz: Fully Automated Prediction and Fuzzing of High-Risk Option Combinations with Only Documentation via Large Language Model".

ProphetFuzz is an LLM-based, fully automated fuzzing tool for option combination testing. ProphetFuzz can predict and conduct fuzzing on high-risk option combinations with only documentation, and the entire process operates without manual intervention.

For more details, please refer to our paper from ACM CCS'24.

Due to page limitations, the Appendix of the paper could not be included within the main text. Please refer to Appendix.

    .
    ├── Dockerfile
    ├── README.md
    ├── assets
    │   ├── dataset
    │   │   ├── groundtruth_for_20_programs.json
    │   │   └── precision.json
    │   └── images
    ├── fuzzing_handler
    │   ├── cmd_fixer.py
    │   ├── code_checker.py
    │   ├── config.json
    │   ├── run_cmin.py
    │   ├── run_fuzzing.sh
    │   └── utils
    │       ├── analysis_util.py
    │       ├── code_utils.py
    │       └── execution_util.py
    ├── llm_interface
    │   ├── assemble.py
    │   ├── config
    │   │   └── .env
    │   ├── constraint.py
    │   ├── few-shot
    │   │   ├── manpage_htmldoc.json
    │   │   ├── manpage_jbig2.json
    │   │   ├── manpage_jhead.json
    │   │   ├── manpage_makeswf.json
    │   │   ├── manpage_mp4box.json
    │   │   ├── manpage_opj_compress.json
    │   │   ├── manpage_pdf2swf.json
    │   │   └── manpage_yasm.json
    │   ├── few-shot_generate.py
    │   ├── input
    │   ├── output
    │   ├── predict.py
    │   ├── restruct_manpage.py
    │   └── utils
    │       ├── gpt_utils.py
    │       └── opt_utils.py
    ├── manpage_parser
    │   ├── input
    │   ├── output
    │   ├── parser.py
    │   └── utils
    │       └── groff_utils.py
    └── run_all_in_one.sh

- manpage_parser: Scripts for parsing documentation

- llm_interface: Scripts for extracting constraints, predicting high-risk option combinations, and assembling commands.

- fuzzing_handler: Scripts for preparing and conducting fuzzing.

- assets/dataset: Dataset for evaluating the constraint extraction module.

- run_all_in_one.sh: Script that runs the entire process in one step.

- Dockerfile: Building our experiment environment (Tested on Ubuntu 20.04)

The implementations of the various ProphetFuzz components can be found in the following functions:

| Section | Component | File | Function |
|---|---|---|---|
| 3.2 | Constraint Extraction | llm_interface/constraint.py | extractRelationships |
| 3.2 | Self Check | llm_interface/constraint.py | checkRelationships |
| 3.3 | AutoCoT | llm_interface/few-shot_generate.py | generatePrompt |
| 3.3 | High-Risk Combination Prediction | llm_interface/predict.py | predictCombinations |
| 3.4 | Command Assembly | llm_interface/assembly.py | generateCommands |
| 3.5 | File Generation | fuzzing_handler/generate_combination.py | main |
| 3.5 | Corpus Minimization | fuzzing_handler/run_cmin.py | runCMinCommands |
| 3.5 | Fuzzing | fuzzing_handler/run_fuzzing.sh | runFuzzing |
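To give a rough sense of why constraint extraction comes before combination prediction and command assembly, the sketch below filters option combinations against a set of constraints. It is only an illustration: it assumes constraints take the form of conflicts and dependencies between options, and the option names, data structures, and helper functions (`is_valid`, `valid_combinations`) are hypothetical rather than taken from the ProphetFuzz code or its output format.

```python
from itertools import combinations

# Hypothetical constraints for illustration only (not ProphetFuzz output).
# A conflict is a pair of options that must not appear together.
CONFLICTS = {frozenset({"-a", "-b"})}
# A dependency maps an option to the options it requires ("-c" requires "-a").
DEPENDENCIES = {"-c": {"-a"}}


def is_valid(combo, conflicts, dependencies):
    """Return True if the combination violates no conflict or dependency."""
    chosen = set(combo)
    # Reject the combination if it contains every option of a conflicting set.
    if any(pair <= chosen for pair in conflicts):
        return False
    # Reject the combination if a chosen option is missing a required option.
    for option, required in dependencies.items():
        if option in chosen and not required <= chosen:
            return False
    return True


def valid_combinations(options, size, conflicts, dependencies):
    """Enumerate combinations of the given size that satisfy all constraints."""
    return [
        combo
        for combo in combinations(sorted(options), size)
        if is_valid(combo, conflicts, dependencies)
    ]


if __name__ == "__main__":
    print(valid_combinations({"-a", "-b", "-c"}, 2, CONFLICTS, DEPENDENCIES))
```

Only combinations that pass such a check are worth assembling into commands, since an invalid combination would typically be rejected by the program before reaching deeper code.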

- Using Docker to Configure the Running Environment

  - If you only want to complete the part that interacts with the LLM, you can directly use our pre-installed image (4GB):

        docker run -it 4ugustus/prophetfuzz_base bash

  - If you want to complete the entire process, including seed generation, command repair, and fuzzing, please build the full image based on the pre-installed image:

        docker build -t prophetfuzz:latest .
        docker run -it --privileged=true prophetfuzz bash # 'privileged' is used for setting up the fuzzing environment

- Set Up Your API Key: Set your OpenAI API key in the `llm_interface/config/.env` file:

      OPENAI_API_KEY="[Input Your API Key Here]"

- Run the Script: Execute the script to start the automated fuzzing process:

      bash run_all_in_one.sh bison

  Note: If you are not within our Docker environment, you might need to manually install dependencies and adjust the `fuzzing_handler/config.json` file to specify the path to the program under test.

  If you prefer to start fuzzing manually, use the following command:

      fuzzer/afl-fuzz -i fuzzing_handler/input/bison -o fuzzing_handler/output/bison_prophet_1 -m none -K fuzzing_handler/argvs/argvs_bison.txt -- path/to/bison/bin/bison @@
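If you launch the manual command for several programs, a small helper can build the argument list for you. The sketch below simply reproduces the bison command shown above and assumes the same directory layout (`input/<program>`, `output/<program>_prophet_<n>`, `argvs/argvs_<program>.txt`) holds for other programs. That layout, the function name `build_afl_command`, and its parameters are assumptions made for illustration, not part of ProphetFuzz.

```python
import shlex


def build_afl_command(program, target_binary, run_id=1,
                      afl_fuzz="fuzzer/afl-fuzz", base_dir="fuzzing_handler"):
    """Build the afl-fuzz argument list, mirroring the manual bison command.

    Assumes other programs follow the same directory naming as the bison
    example; adjust the paths if your setup differs.
    """
    return [
        afl_fuzz,
        "-i", f"{base_dir}/input/{program}",                     # seed directory
        "-o", f"{base_dir}/output/{program}_prophet_{run_id}",   # output directory
        "-m", "none",                                            # no memory limit
        "-K", f"{base_dir}/argvs/argvs_{program}.txt",           # flag copied from the command above
        "--", target_binary, "@@",                               # @@ is replaced by the input file
    ]


if __name__ == "__main__":
    # Print the command as a shell-quoted string for inspection.
    print(shlex.join(build_afl_command("bison", "path/to/bison/bin/bison")))
```

Returning a list rather than a string means the result can be passed directly to `subprocess.run` without shell quoting concerns.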

We employ ProphetFuzz to perform persistent fuzzing on the latest versions of the programs in our dataset. To date, ProphetFuzz has uncovered 140 zero-day or half-day vulnerabilities, 93 of which have been confirmed by the developers, earning 22 CVE numbers.

| CVE | Program | Type |
|---|---|---|
| CVE-2024-3248 | xpdf | stack-buffer-overflow |
| CVE-2024-4853 | editcap | heap-buffer-overflow |
| CVE-2024-4855 | editcap | bad free |
| CVE-2024-31743 | ffmpeg | segmentation violation |
| CVE-2024-31744 | jasper | assertion failure |
| CVE-2024-31745 | dwarfdump | use-after-free |
| CVE-2024-31746 | objdump | heap-buffer-overflow |
| CVE-2024-32154 | ffmpeg | segmentation violation |
| CVE-2024-32157 | mupdf | segmentation violation |
| CVE-2024-32158 | mupdf | negative-size-param |
| CVE-2024-34960 | ffmpeg | floating point exception |
| CVE-2024-34961 | pspp | segmentation violation |
| CVE-2024-34962 | pspp | segmentation violation |
| CVE-2024-34963 | pspp | assertion failure |
| CVE-2024-34965 | pspp | assertion failure |
| CVE-2024-34966 | pspp | assertion failure |
| CVE-2024-34967 | pspp | assertion failure |
| CVE-2024-34968 | pspp | assertion failure |
| CVE-2024-34969 | pspp | segmentation violation |
| CVE-2024-34971 | pspp | segmentation violation |
| CVE-2024-34972 | pspp | assertion failure |
| CVE-2024-35316 | ffmpeg | segmentation violation |

Thanks to Dawei Wang (@4ugustus) and Geng Zhou (@Arbusz) for their valuable contributions to this project.

If you would like to cite ProphetFuzz, you may use the following BibTeX entry:

    @inproceedings{wang2024prophet,
      title = {ProphetFuzz: Fully Automated Prediction and Fuzzing of High-Risk Option Combinations with Only Documentation via Large Language Model},
      author = {Wang, Dawei and Zhou, Geng and Chen, Li and Li, Dan and Miao, Yukai},
      booktitle = {Proceedings of the 2024 ACM SIGSAC Conference on Computer and Communications Security},
      publisher = {Association for Computing Machinery},
      address = {Salt Lake City, UT, USA},
      pages = {735--749},
      year = {2024}
    }


Similar Open Source Tools

ProphetFuzz

ProphetFuzz is a fully automated fuzzing tool based on Large Language Models (LLM) for testing high-risk option combinations with only documentation. It can predict and conduct fuzzing on high-risk option combinations without manual intervention. The tool consists of components for parsing documentation, extracting constraints, predicting combinations, assembling commands, generating files, and conducting fuzzing. ProphetFuzz has been used to uncover numerous vulnerabilities in various programs, earning CVE numbers for confirmed vulnerabilities. The tool has been credited to Dawei Wang and Geng Zhou.

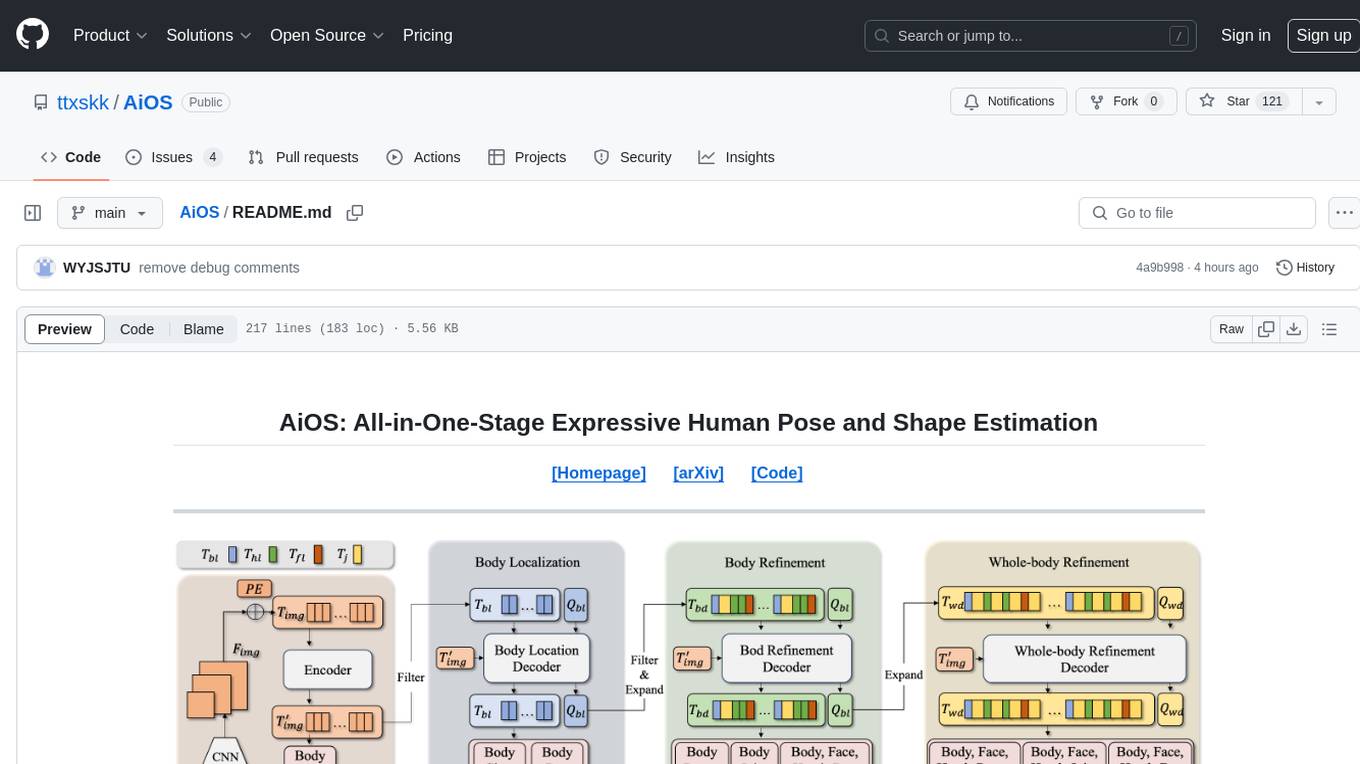

AiOS

AiOS is a tool for human pose and shape estimation, performing human localization and SMPL-X estimation in a progressive manner. It consists of body localization, body refinement, and whole-body refinement stages. Users can download datasets for evaluation, SMPL-X body models, and AiOS checkpoint. Installation involves creating a conda virtual environment, installing PyTorch, torchvision, Pytorch3D, MMCV, and other dependencies. Inference requires placing the video for inference and pretrained models in specific directories. Test results are provided for NMVE, NMJE, MVE, and MPJPE on datasets like BEDLAM and AGORA. Users can run scripts for AGORA validation, AGORA test leaderboard, and BEDLAM leaderboard. The tool acknowledges codes from MMHuman3D, ED-Pose, and SMPLer-X.

incidentfox

IncidentFox is an open-source AI SRE tool designed to assist in incident response by automatically investigating incidents, finding root causes, and suggesting fixes. It integrates with observability stack, infrastructure, and collaboration tools, forming hypotheses, collecting data, and reasoning through to find root causes. The tool is built for production on-call scenarios, handling log sampling, alert correlation, anomaly detection, and dependency mapping. IncidentFox is highly customizable, Slack-first, and works on various platforms like web UI, GitHub, PagerDuty, and API. It aims to reduce incident resolution time, alert noise, and improve knowledge retention for engineering teams.

claude-flow

Claude-Flow is a workflow automation tool designed to streamline and optimize business processes. It provides a user-friendly interface for creating and managing workflows, allowing users to automate repetitive tasks and improve efficiency. With features such as drag-and-drop workflow builder, customizable templates, and integration with popular business tools, Claude-Flow empowers users to automate their workflows without the need for extensive coding knowledge. Whether you are a small business owner looking to streamline your operations or a project manager seeking to automate task assignments, Claude-Flow offers a flexible and scalable solution to meet your workflow automation needs.

cactus

Cactus is an energy-efficient and fast AI inference framework designed for phones, wearables, and resource-constrained arm-based devices. It provides a bottom-up approach with no dependencies, optimizing for budget and mid-range phones. The framework includes Cactus FFI for integration, Cactus Engine for high-level transformer inference, Cactus Graph for unified computation graph, and Cactus Kernels for low-level ARM-specific operations. It is suitable for implementing custom models and scientific computing on mobile devices.

rho

Rho is an AI agent that runs on macOS, Linux, and Android, staying active, remembering past interactions, and checking in autonomously. It operates without cloud storage, allowing users to retain ownership of their data. Users can bring their own LLM provider and have full control over the agent's functionalities. Rho is built on the pi coding agent framework, offering features like persistent memory, scheduled tasks, and real email capabilities. The agent can be customized through checklists, scheduled triggers, and personalized voice and identity settings. Skills and extensions enhance the agent's capabilities, providing tools for notifications, clipboard management, text-to-speech, and more. Users can interact with Rho through commands and scripts, enabling tasks like checking status, triggering actions, and managing preferences.

claude-craft

Claude Craft is a comprehensive framework for AI-assisted development with Claude Code, providing standardized rules, agents, and commands across multiple technology stacks. It includes autonomous sprint capabilities, documentation accuracy improvements, CI hardening, and test coverage enhancements. With support for 10 technology stacks, 5 languages, 40 AI agents, 157 slash commands, and various project management features like BMAD v6 framework, Ralph Wiggum loop execution, skills, templates, checklists, and hooks system, Claude Craft offers a robust solution for project development and management. The tool also supports workflow methodology, development tracks, document generation, BMAD v6 project management, quality gates, batch processing, backlog migration, and Claude Code hooks integration.

starknet-agentic

Open-source stack for giving AI agents wallets, identity, reputation, and execution rails on Starknet. `starknet-agentic` is a monorepo with Cairo smart contracts for agent wallets, identity, reputation, and validation, TypeScript packages for MCP tools, A2A integration, and payment signing, reusable skills for common Starknet agent capabilities, and examples and docs for integration. It provides contract primitives + runtime tooling in one place for integrating agents. The repo includes various layers such as Agent Frameworks / Apps, Integration + Runtime Layer, Packages / Tooling Layer, Cairo Contract Layer, and Starknet L2. It aims for portability of agent integrations without giving up Starknet strengths, with a cross-chain interop strategy and skills marketplace. The repository layout consists of directories for contracts, packages, skills, examples, docs, and website.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features such as a Virtual API System with caching and API simulators, a new set of solvable queries determined by LLMs, and a Stable Evaluation System using GPT-4. The Virtual API Server can be set up either by building from source or using a prebuilt Docker image. Users can test the server using provided scripts and evaluate models with Solvable Pass Rate and Solvable Win Rate metrics. The tool also includes model experiments results comparing different models' performance.

EasyEdit

EasyEdit is a Python package for edit Large Language Models (LLM) like `GPT-J`, `Llama`, `GPT-NEO`, `GPT2`, `T5`(support models from **1B** to **65B**), the objective of which is to alter the behavior of LLMs efficiently within a specific domain without negatively impacting performance across other inputs. It is designed to be easy to use and easy to extend.

EVE

EVE is an official PyTorch implementation of Unveiling Encoder-Free Vision-Language Models. The project aims to explore the removal of vision encoders from Vision-Language Models (VLMs) and transfer LLMs to encoder-free VLMs efficiently. It also focuses on bridging the performance gap between encoder-free and encoder-based VLMs. EVE offers a superior capability with arbitrary image aspect ratio, data efficiency by utilizing publicly available data for pre-training, and training efficiency with a transparent and practical strategy for developing a pure decoder-only architecture across modalities.

runanywhere-sdks

RunAnywhere is an on-device AI tool for mobile apps that allows users to run LLMs, speech-to-text, text-to-speech, and voice assistant features locally, ensuring privacy, offline functionality, and fast performance. The tool provides a range of AI capabilities without relying on cloud services, reducing latency and ensuring that no data leaves the device. RunAnywhere offers SDKs for Swift (iOS/macOS), Kotlin (Android), React Native, and Flutter, making it easy for developers to integrate AI features into their mobile applications. The tool supports various models for LLM, speech-to-text, and text-to-speech, with detailed documentation and installation instructions available for each platform.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features like Virtual API System, Solvable Queries, and Stable Evaluation System. The benchmark ensures consistency through a caching system and API simulators, filters queries based on solvability using LLMs, and evaluates model performance using GPT-4 with metrics like Solvable Pass Rate and Solvable Win Rate.

AIGC_text_detector

AIGC_text_detector is a repository containing the official codes for the paper 'Multiscale Positive-Unlabeled Detection of AI-Generated Texts'. It includes detector models for both English and Chinese texts, along with stronger detectors developed with enhanced training strategies. The repository provides links to download the detector models, datasets, and necessary preprocessing tools. Users can train RoBERTa and BERT models on the HC3-English dataset using the provided scripts.

azure-agentic-infraops

Agentic InfraOps is a multi-agent orchestration system for Azure infrastructure development that transforms how you build Azure infrastructure with AI agents. It provides a structured 7-step workflow that coordinates specialized AI agents through a complete infrastructure development cycle: Requirements → Architecture → Design → Plan → Code → Deploy → Documentation. The system enforces Azure Well-Architected Framework (WAF) alignment and Azure Verified Modules (AVM) at every phase, combining the speed of AI coding with best practices in cloud engineering.
