mcp-debugger

LLM-driven debugger server – give your AI agents step-through debugging superpowers

Stars: 70

mcp-debugger is a Model Context Protocol (MCP) server that provides debugging tools as structured API calls. It enables AI agents to perform step-through debugging of multiple programming languages using the Debug Adapter Protocol (DAP). The tool supports multi-language debugging with clean adapter patterns, including Python debugging via debugpy, JavaScript (Node.js) debugging via js-debug, and Rust debugging via CodeLLDB. It offers features like mock adapter for testing, STDIO and SSE transport modes, zero-runtime dependencies, Docker and npm packages for deployment, structured JSON responses for easy parsing, path validation to prevent crashes, and AI-aware line context for intelligent breakpoint placement with code context.

README:

MCP server for multi-language debugging – give your AI agents debugging superpowers 🚀

mcp-debugger is a Model Context Protocol (MCP) server that provides debugging tools as structured API calls. It enables AI agents to perform step-through debugging of multiple programming languages using the Debug Adapter Protocol (DAP).

🆕 Version 0.17.0: Rust debugging support (Alpha)! Debug Rust programs with CodeLLDB, including Cargo projects, async code, and full variable inspection—plus step commands now return the active source context so agents keep their place automatically.

🔥 Version 0.16.0: JavaScript/Node.js debugging support (Alpha)! Full debugging capabilities with bundled js-debug, TypeScript support, and zero-runtime dependencies via improved npx distribution.

🎬 Demo Video: See the debugger in action!

Recording in progress - This will show an AI agent discovering and fixing the variable swap bug in real-time

- 🌐 Multi-language support – Clean adapter pattern for any language

- 🐍 Python debugging via debugpy – Full DAP protocol support

- 🟨 JavaScript (Node.js) debugging via js-debug – VSCode's proven debugger (Alpha)

- 🦀 Rust debugging via CodeLLDB – Debug Rust & Cargo projects (Alpha)

WARNING: On Windows, use the GNU toolchain for full variable inspection. Run mcp-debugger check-rust-binary <path-to-exe> to verify your build, and see Rust Debugging on Windows for detailed guidance.

NOTE: The published npm bundle ships the Linux x64 CodeLLDB runtime to stay under registry size limits. On macOS or Windows, point the CODELLDB_PATH environment variable at an existing CodeLLDB installation (for example from the VSCode extension) or clone the repo and run pnpm --filter @debugmcp/adapter-rust run build:adapter to vendor your platform binaries locally.

If you're on Windows and want the quickest path to a working GNU toolchain + dlltool configuration, run:

pwsh scripts/setup/windows-rust-debug.ps1

The script installs the stable-gnu toolchain (via rustup), exposes dlltool.exe from rustup's self-contained directory, builds the bundled Rust examples, and (optionally) runs the Rust smoke tests. Add -UpdateUserPath if you want the dlltool path persisted to your user PATH/DLLTOOL variables.

The script will also attempt to provision an MSYS2-based MinGW-w64 toolchain (via winget + pacman) so cargo +stable-gnu has a fully functional dlltool/ld/as stack. If MSYS2 is already installed, it simply reuses it; otherwise it guides you through installing it (or warns so you can install manually).

- 🧪 Mock adapter for testing – Test without external dependencies

- 🔌 STDIO and SSE transport modes – Works with any MCP client

- 📦 Zero-runtime dependencies – Self-contained bundles via tsup (~3 MB)

- ⚡ npx ready – Run directly with npx @debugmcp/mcp-debugger, no installation needed

- 📊 1019 tests passing – battle-tested end-to-end

- 🐳 Docker and npm packages – Deploy anywhere

- 🤖 Built for AI agents – Structured JSON responses for easy parsing

- 🛡️ Path validation – Prevents crashes from non-existent files

- 📝 AI-aware line context – Intelligent breakpoint placement with code context

Add to your MCP settings configuration:

{
  "mcpServers": {
    "mcp-debugger": {
      "command": "node",
      "args": ["C:/path/to/mcp-debugger/dist/index.js", "--log-level", "debug", "--log-file", "C:/path/to/logs/debug-mcp-server.log"],
      "disabled": false,
      "autoApprove": ["create_debug_session", "set_breakpoint", "get_variables"]
    }
  }
}

For Claude Code users, we provide an automated installation script:

# Clone the repository

git clone https://github.com/debugmcp/mcp-debugger.git

cd mcp-debugger

# Run the installation script

./scripts/install-claude-mcp.sh

# Verify the connection

/home/ubuntu/.claude/local/claude mcp list

Important: The stdio argument is required to prevent console output from corrupting the JSON-RPC protocol. See CLAUDE.md for detailed setup and docs/MCP_CLAUDE_CODE_INTEGRATION.md for troubleshooting.

docker run -v $(pwd):/workspace debugmcp/mcp-debugger:latest

⚠️ The Docker image intentionally ships only the Python and JavaScript adapters. Rust debugging requires the local, SSE, or packed deployments where the adapter runs next to your toolchain.

npm install -g @debugmcp/mcp-debugger
mcp-debugger --help

Or use without installation via npx:

npx @debugmcp/mcp-debugger --help

📸 Screenshot: MCP Integration in Action

This screenshot will show real-time MCP protocol communication with tool calls and JSON responses flowing between the AI agent and debugger.

mcp-debugger exposes debugging operations as MCP tools that can be called with structured JSON parameters:

// Tool: create_debug_session
// Request:
{
  "language": "python", // or "javascript", "rust", or "mock" for testing
  "name": "My Debug Session"
}

// Response:
{
  "success": true,
  "sessionId": "a4d1acc8-84a8-44fe-a13e-28628c5b33c7",
  "message": "Created python debug session: My Debug Session"
}

📸 Screenshot: Active Debugging Session

This screenshot will show the debugger paused at a breakpoint with the stack trace visible in the left panel, local variables in the right panel, and source code with line highlighting in the center.
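MCP clients normally build the transport envelope for you, but it can help to see what a tool call looks like on the wire. The sketch below wraps the create_debug_session request shown earlier in a JSON-RPC 2.0 `tools/call` message, which is how MCP carries tool invocations. The helper name `build_tool_call` and the id counter are illustrative assumptions, not part of mcp-debugger.

```python
import json
from itertools import count

# JSON-RPC request ids only need to be unique per connection (assumed scheme).
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Wrap a tool name and its arguments in a JSON-RPC 2.0 `tools/call` request."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


if __name__ == "__main__":
    # Same arguments as the create_debug_session request shown earlier.
    print(build_tool_call(
        "create_debug_session",
        {"language": "python", "name": "My Debug Session"},
    ))
```

Over the STDIO transport, a client would write one such message per line to the server's standard input; most agents never construct it by hand.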

| Tool | Description | Status |
|---|---|---|
| create_debug_session | Create a new debugging session | ✅ Implemented |
| list_debug_sessions | List all active sessions | ✅ Implemented |
| set_breakpoint | Set a breakpoint in a file | ✅ Implemented |
| start_debugging | Start debugging a script | ✅ Implemented |
| get_stack_trace | Get the current stack trace | ✅ Implemented |
| get_scopes | Get variable scopes for a frame | ✅ Implemented |
| get_variables | Get variables in a scope | ✅ Implemented |
| step_over | Step over the current line | ✅ Implemented |
| step_into | Step into a function | ✅ Implemented |
| step_out | Step out of a function | ✅ Implemented |
| continue_execution | Continue running | ✅ Implemented |
| close_debug_session | Close a session | ✅ Implemented |
| pause_execution | Pause running execution | ❌ Not Implemented |
| evaluate_expression | Evaluate expressions | ❌ Not Implemented |
| get_source_context | Get source code context | ✅ Implemented |
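Because two of the listed tools are not implemented yet, an agent wrapper may want to fail fast before sending a call. The following is a minimal sketch of such a guard; the `TOOL_STATUS` mapping simply mirrors the table above, and the function names are hypothetical rather than anything mcp-debugger exports.

```python
# Status values copied from the tool table; True means "Implemented".
TOOL_STATUS = {
    "create_debug_session": True,
    "list_debug_sessions": True,
    "set_breakpoint": True,
    "start_debugging": True,
    "get_stack_trace": True,
    "get_scopes": True,
    "get_variables": True,
    "step_over": True,
    "step_into": True,
    "step_out": True,
    "continue_execution": True,
    "close_debug_session": True,
    "pause_execution": False,
    "evaluate_expression": False,
    "get_source_context": True,
}


def implemented_tools() -> list:
    """Return the names of all tools the table marks as implemented."""
    return [name for name, implemented in TOOL_STATUS.items() if implemented]


def ensure_implemented(tool_name: str) -> None:
    """Raise before a call is sent if the tool is unknown or not implemented."""
    if tool_name not in TOOL_STATUS:
        raise KeyError(f"Unknown tool: {tool_name}")
    if not TOOL_STATUS[tool_name]:
        raise NotImplementedError(f"{tool_name} is listed as not implemented")


if __name__ == "__main__":
    ensure_implemented("step_over")  # passes silently
    print(len(implemented_tools()), "tools available")
```

A hard-coded mapping like this goes stale as the server evolves, so in practice it is safer to ask the server which tools it offers when the client connects.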

📸 Screenshot: Multi-Session Debugging

This screenshot will show the debugger managing multiple concurrent debug sessions, demonstrating how AI agents can debug different scripts simultaneously with isolated session management.

Version 0.10.0 introduces a clean adapter pattern that separates language-agnostic core functionality from language-specific implementations:

┌─────────────┐     ┌──────────────┐     ┌─────────────────┐
│ MCP Client  │────▶│SessionManager│────▶│ AdapterRegistry │
└─────────────┘     └──────────────┘     └─────────────────┘
                           │                      │
                           ▼                      ▼
                    ┌──────────────┐     ┌─────────────────┐
                    │ ProxyManager │◀────│ Language Adapter│
                    └──────────────┘     └─────────────────┘
                                                  │
                              ┌───────────────────┼───────────────────┐
                              │                   │                   │
                        ┌─────▼──────┐     ┌──────▼──────┐      ┌─────▼──────┐
                        │Python      │     │JavaScript   │      │Mock        │
                        │Adapter     │     │Adapter      │      │Adapter     │
                        └────────────┘     └─────────────┘      └────────────┘

Want to add debugging support for your favorite language? Check out the Adapter Development Guide!
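To make the adapter pattern concrete, here is a small sketch of a registry that maps a language name to an adapter factory, so the core never needs language-specific branches. Every class and method name below is a hypothetical illustration in Python; the real project is written in TypeScript and its adapter contract is defined in the Adapter API Reference. The debugpy command line is a standard way to start debugpy, not necessarily how mcp-debugger launches it, and the mock command is invented.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Protocol


class LanguageAdapter(Protocol):
    """Minimal contract assumed for this sketch."""
    language: str

    def launch_command(self, script_path: str) -> List[str]: ...


@dataclass
class PythonAdapter:
    language: str = "python"

    def launch_command(self, script_path: str) -> List[str]:
        # Illustrative debugpy invocation; port 5678 is an arbitrary choice.
        return ["python", "-m", "debugpy", "--listen", "5678",
                "--wait-for-client", script_path]


@dataclass
class MockAdapter:
    language: str = "mock"

    def launch_command(self, script_path: str) -> List[str]:
        # Placeholder command: a mock adapter needs no external debugger.
        return ["mock-debug-adapter", script_path]


class AdapterRegistry:
    """Maps language names to factories; adapters are created on demand."""

    def __init__(self) -> None:
        self._factories: Dict[str, Callable[[], LanguageAdapter]] = {}

    def register(self, language: str, factory: Callable[[], LanguageAdapter]) -> None:
        self._factories[language] = factory

    def create(self, language: str) -> LanguageAdapter:
        try:
            factory = self._factories[language]
        except KeyError:
            raise ValueError(f"No adapter registered for language: {language}") from None
        return factory()

    def supported_languages(self) -> List[str]:
        return sorted(self._factories)


if __name__ == "__main__":
    registry = AdapterRegistry()
    registry.register("python", PythonAdapter)
    registry.register("mock", MockAdapter)
    print(registry.supported_languages())
    print(registry.create("python").launch_command("buggy_swap.py"))
```

Adding a language then means writing one adapter class and one `register` call, which is the separation the diagram above describes.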

Here's a complete debugging session example:

# buggy_swap.py
def swap_variables(a, b):
    a = b  # Bug: loses original value of 'a'
    b = a  # Bug: 'b' gets the new value of 'a'
    return a, b

// Tool: create_debug_session
// Request:
{
  "language": "python",
  "name": "Swap Bug Investigation"
}

// Response:
{
  "success": true,
  "sessionId": "a4d1acc8-84a8-44fe-a13e-28628c5b33c7",
  "message": "Created python debug session: Swap Bug Investigation"
}

// Tool: set_breakpoint

// Request:
{
  "sessionId": "a4d1acc8-84a8-44fe-a13e-28628c5b33c7",
  "file": "buggy_swap.py",
  "line": 2
}

// Response:
{
  "success": true,
  "breakpointId": "28e06119-619e-43c0-b029-339cec2615df",
  "file": "C:\\path\\to\\buggy_swap.py",
  "line": 2,
  "verified": false,
  "message": "Breakpoint set at C:\\path\\to\\buggy_swap.py:2"
}

// Tool: start_debugging

// Request:
{
  "sessionId": "a4d1acc8-84a8-44fe-a13e-28628c5b33c7",
  "scriptPath": "buggy_swap.py"
}

// Response:
{
  "success": true,
  "state": "paused",
  "message": "Debugging started for buggy_swap.py. Current state: paused",
  "data": {
    "message": "Debugging started for buggy_swap.py. Current state: paused",
    "reason": "breakpoint"
  }
}

First, get the scopes:

// Tool: get_scopes
// Request:
{
  "sessionId": "a4d1acc8-84a8-44fe-a13e-28628c5b33c7",
  "frameId": 3
}

// Response:
{
  "success": true,
  "scopes": [
    {
      "name": "Locals",
      "variablesReference": 5,
      "expensive": false,
      "presentationHint": "locals",
      "source": {}
    },
    {
      "name": "Globals",
      "variablesReference": 6,
      "expensive": false,
      "source": {}
    }
  ]
}

Then get the local variables:

// Tool: get_variables
// Request:
{
  "sessionId": "a4d1acc8-84a8-44fe-a13e-28628c5b33c7",
  "scope": 5
}

// Response:
{
  "success": true,
  "variables": [
    {"name": "a", "value": "10", "type": "int", "variablesReference": 0, "expandable": false},
    {"name": "b", "value": "20", "type": "int", "variablesReference": 0, "expandable": false}
  ],
  "count": 2,
  "variablesReference": 5
}

📸 Screenshot: Variable Inspection Reveals the Bug

This screenshot will show the TUI visualizer after stepping over line 4, where both variables incorrectly show value 20, clearly demonstrating the variable swap bug. The left panel shows the execution state, the center shows the highlighted code, and the right panel displays the incorrect variable values.
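One detail of the walkthrough is easy to miss: the scope value passed to get_variables (5) is the variablesReference of the Locals entry returned by get_scopes. The sketch below shows that hand-off using the response shape from the example above; the helper name `variables_request_for` is an assumption made for illustration.

```python
from typing import Optional

# Response shape copied from the get_scopes example in the walkthrough.
scopes_response = {
    "success": True,
    "scopes": [
        {"name": "Locals", "variablesReference": 5, "expensive": False,
         "presentationHint": "locals", "source": {}},
        {"name": "Globals", "variablesReference": 6, "expensive": False,
         "source": {}},
    ],
}


def variables_request_for(session_id: str, scopes: dict,
                          scope_name: str = "Locals") -> Optional[dict]:
    """Build get_variables arguments from a get_scopes response.

    Returns None when no scope with the requested name is present.
    """
    for scope in scopes.get("scopes", []):
        if scope["name"] == scope_name:
            return {"sessionId": session_id,
                    "scope": scope["variablesReference"]}
    return None


if __name__ == "__main__":
    session = "a4d1acc8-84a8-44fe-a13e-28628c5b33c7"
    print(variables_request_for(session, scopes_response))
```

References like these are generally only meaningful while the program is paused, so it is safest to call get_scopes again after each step rather than reuse an old value.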

- 📘 Tool Reference – Complete API documentation

- 🚦 Getting Started Guide – First-time setup

- 🏗️ Architecture Overview – Multi-language design

- 🔧 Adapter Development – Add new languages

- 🔌 Dynamic Loading Architecture – Runtime discovery, lazy loading, caching

- 🧩 Adapter API Reference – Adapter, factory, loader, and registry contracts

- 🔄 Migration Guide – Upgrading to v0.15.0 (dynamic loading)

- 🐍 Python Debugging Guide – Python-specific features

- 🟨 JavaScript Debugging Guide – JavaScript/TypeScript features

- 🐹 Go Debugging Guide – Go debugging with Delve

- 🦀 Rust Debugging on Windows – Toolchain requirements and troubleshooting

- 🤖 AI Integration Guide – Leverage AI-friendly features

- 🔧 Troubleshooting – Common issues & solutions

We welcome contributions! See CONTRIBUTING.md for guidelines.

# Development setup

git clone https://github.com/debugmcp/mcp-debugger.git

cd mcp-debugger

# Install dependencies and vendor debug adapters

pnpm install

# All debug adapters (JavaScript js-debug, Rust CodeLLDB) are automatically downloaded

# Build the project

pnpm build

# Run tests

pnpm test

# Check adapter vendoring status

pnpm vendor:status

# Force re-vendor all adapters (if needed)

pnpm vendor:force

The project automatically vendors debug adapters during pnpm install:

- JavaScript: Downloads Microsoft's js-debug from GitHub releases

- Rust: Downloads CodeLLDB binaries for the current platform

- CI Environment: Set SKIP_ADAPTER_VENDOR=true to skip vendoring

To manually manage adapters:

# Check current vendoring status

pnpm vendor:status

# Re-vendor all adapters

pnpm vendor

# Clean and re-vendor (force)

pnpm vendor:force

# Clean vendor directories only

pnpm clean:vendor

We use Act to run GitHub Actions workflows locally:

# Build the Docker image first

docker build -t mcp-debugger:local .

# Run tests with Act (use WSL2 on Windows)

act -j build-and-test --matrix os:ubuntu-latest

See tests/README.md for detailed testing instructions.

- ✅ Production Ready: v0.17.0 with Rust adapter (Alpha), richer stepping responses, and polished JavaScript distribution

- ✅ 1019 tests passing end-to-end

- ✅ Clean architecture with adapter pattern

- 🟨 JavaScript/Node.js: Alpha support with full debugging loop

- ✅ Go: Full debugging support via Delve DAP

- 🚧 Coming Soon: Ruby, C/C++, and more language adapters

- 📈 Active Development: Regular updates and improvements

See Roadmap.md for planned features.

MIT License - see LICENSE for details.

Built with:

- Model Context Protocol by Anthropic

- Debug Adapter Protocol by Microsoft

- debugpy for Python debugging

Give your AI the power to debug like a developer – in any language! 🎯

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for mcp-debugger

Similar Open Source Tools

mcp-debugger

mcp-debugger is a Model Context Protocol (MCP) server that provides debugging tools as structured API calls. It enables AI agents to perform step-through debugging of multiple programming languages using the Debug Adapter Protocol (DAP). The tool supports multi-language debugging with clean adapter patterns, including Python debugging via debugpy, JavaScript (Node.js) debugging via js-debug, and Rust debugging via CodeLLDB. It offers features like mock adapter for testing, STDIO and SSE transport modes, zero-runtime dependencies, Docker and npm packages for deployment, structured JSON responses for easy parsing, path validation to prevent crashes, and AI-aware line context for intelligent breakpoint placement with code context.

one

ONE is a modern web and AI agent development toolkit that empowers developers to build AI-powered applications with high performance, beautiful UI, AI integration, responsive design, type safety, and great developer experience. It is perfect for building modern web applications, from simple landing pages to complex AI-powered platforms.

WebAI-to-API

This project implements a web API that offers a unified interface to Google Gemini and Claude 3. It provides a self-hosted, lightweight, and scalable solution for accessing these AI models through a streaming API. The API supports both Claude and Gemini models, allowing users to interact with them in real-time. The project includes a user-friendly web UI for configuration and documentation, making it easy to get started and explore the capabilities of the API.

multi-agent-shogun

multi-agent-shogun is a system that runs multiple AI coding CLI instances simultaneously, orchestrating them like a feudal Japanese army. It supports Claude Code, OpenAI Codex, GitHub Copilot, and Kimi Code. The system allows you to command your AI army with zero coordination cost, enabling parallel execution, non-blocking workflow, cross-session memory, event-driven communication, and full transparency. It also features skills discovery, phone notifications, pane border task display, shout mode, and multi-CLI support.

Archon

Archon is an AI meta-agent designed to autonomously build, refine, and optimize other AI agents. It serves as a practical tool for developers and an educational framework showcasing the evolution of agentic systems. Through iterative development, Archon demonstrates the power of planning, feedback loops, and domain-specific knowledge in creating robust AI agents.

lihil

Lihil is a performant, productive, and professional web framework designed to make Python the mainstream programming language for web development. It is 100% test covered and strictly typed, offering fast performance, ergonomic API, and built-in solutions for common problems. Lihil is suitable for enterprise web development, delivering robust and scalable solutions with best practices in microservice architecture and related patterns. It features dependency injection, OpenAPI docs generation, error response generation, data validation, message system, testability, and strong support for AI features. Lihil is ASGI compatible and uses starlette as its ASGI toolkit, ensuring compatibility with starlette classes and middlewares. The framework follows semantic versioning and has a roadmap for future enhancements and features.

kiss_ai

KISS AI is a lightweight and powerful multi-agent evolutionary framework that simplifies building AI agents. It uses native function calling for efficiency and accuracy, making building AI agents as straightforward as possible. The framework includes features like multi-agent orchestration, agent evolution and optimization, relentless coding agent for long-running tasks, output formatting, trajectory saving and visualization, GEPA for prompt optimization, KISSEvolve for algorithm discovery, self-evolving multi-agent, Docker integration, multiprocessing support, and support for various models from OpenAI, Anthropic, Gemini, Together AI, and OpenRouter.

vibe-remote

Vibe Remote is a tool that allows developers to code using AI agents through Slack or Discord, eliminating the need for a laptop or IDE. It provides a seamless experience for coding tasks, enabling users to interact with AI agents in real-time, delegate tasks, and monitor progress. The tool supports multiple coding agents, offers a setup wizard for easy installation, and ensures security by running locally on the user's machine. Vibe Remote enhances productivity by reducing context-switching and enabling parallel task execution within isolated workspaces.

OpenSpec

OpenSpec is a tool for spec-driven development, aligning humans and AI coding assistants to agree on what to build before any code is written. It adds a lightweight specification workflow that ensures deterministic, reviewable outputs without the need for API keys. With OpenSpec, stakeholders can draft change proposals, review and align with AI assistants, implement tasks based on agreed specs, and archive completed changes for merging back into the source-of-truth specs. It works seamlessly with existing AI tools, offering shared visibility into proposed, active, or archived work.

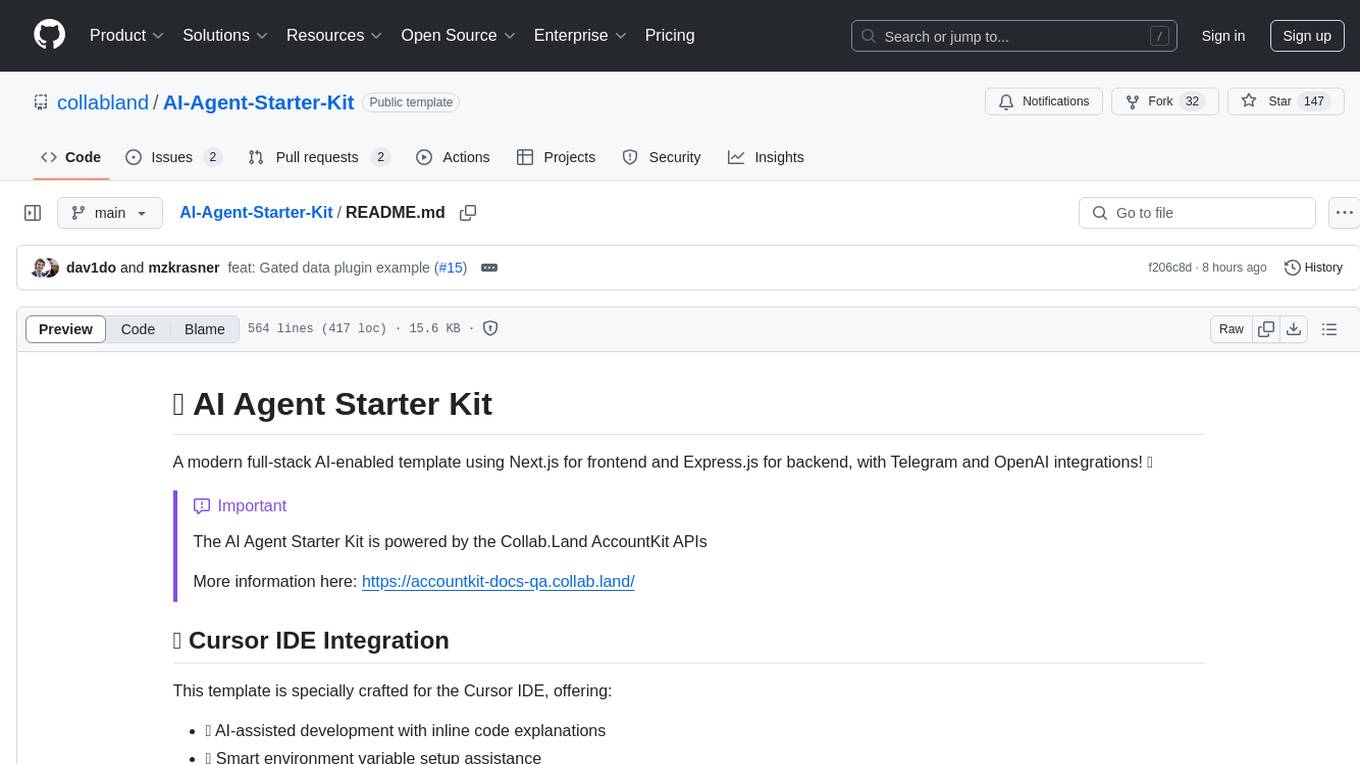

AI-Agent-Starter-Kit

AI Agent Starter Kit is a modern full-stack AI-enabled template using Next.js for frontend and Express.js for backend, with Telegram and OpenAI integrations. It offers AI-assisted development, smart environment variable setup assistance, intelligent error resolution, context-aware code completion, and built-in debugging helpers. The kit provides a structured environment for developers to interact with AI tools seamlessly, enhancing the development process and productivity.

sandbox

AIO Sandbox is an all-in-one agent sandbox environment that combines Browser, Shell, File, MCP operations, and VSCode Server in a single Docker container. It provides a unified, secure execution environment for AI agents and developers, with features like unified file system, multiple interfaces, secure execution, zero configuration, and agent-ready MCP-compatible APIs. The tool allows users to run shell commands, perform file operations, automate browser tasks, and integrate with various development tools and services.

mcp-prompts

mcp-prompts is a Python library that provides a collection of prompts for generating creative writing ideas. It includes a variety of prompts such as story starters, character development, plot twists, and more. The library is designed to inspire writers and help them overcome writer's block by offering unique and engaging prompts to spark creativity. With mcp-prompts, users can access a wide range of writing prompts to kickstart their imagination and enhance their storytelling skills.

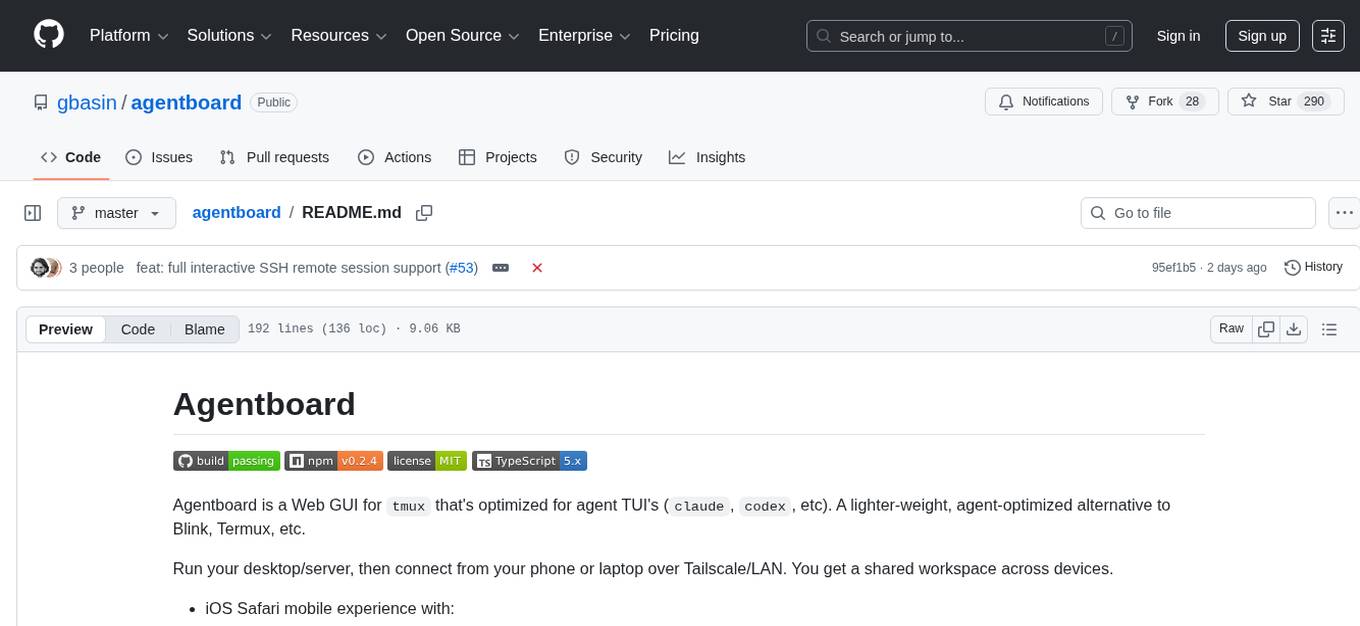

agentboard

Agentboard is a Web GUI for tmux optimized for agent TUI's like claude and codex. It provides a shared workspace across devices with features such as paste support, touch scrolling, virtual arrow keys, log tracking, and session pinning. Users can interact with tmux sessions from any device through a live terminal stream. The tool allows session discovery, status inference, and terminal I/O streaming for efficient agent management.

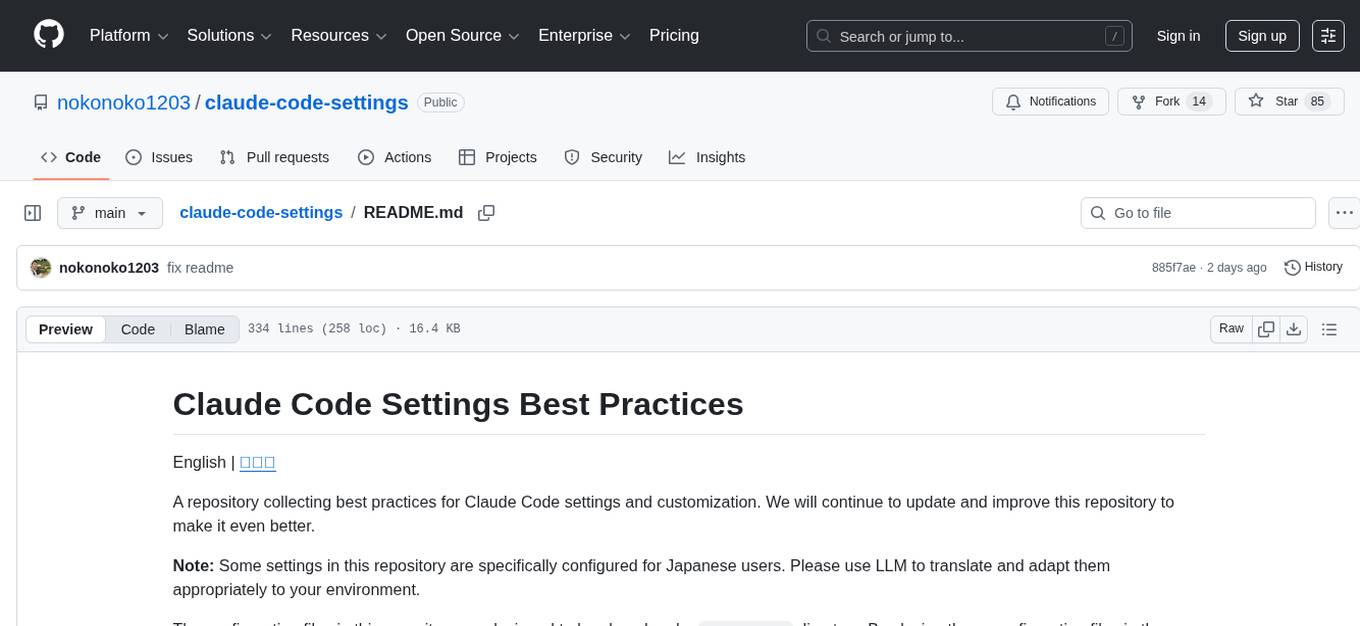

claude-code-settings

A repository collecting best practices for Claude Code settings and customization. It provides configuration files for customizing Claude Code's behavior and building an efficient development environment. The repository includes custom agents and skills for specific domains, interactive development workflow features, efficient development rules, and team workflow with Codex MCP. Users can leverage the provided configuration files and tools to enhance their development process and improve code quality.

DeepTutor

DeepTutor is an AI-powered personalized learning assistant that offers a suite of modules for massive document knowledge Q&A, interactive learning visualization, knowledge reinforcement with practice exercise generation, deep research, and idea generation. The tool supports multi-agent collaboration, dynamic topic queues, and structured outputs for various tasks. It provides a unified system entry for activity tracking, knowledge base management, and system status monitoring. DeepTutor is designed to streamline learning and research processes by leveraging AI technologies and interactive features.

For similar tasks

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

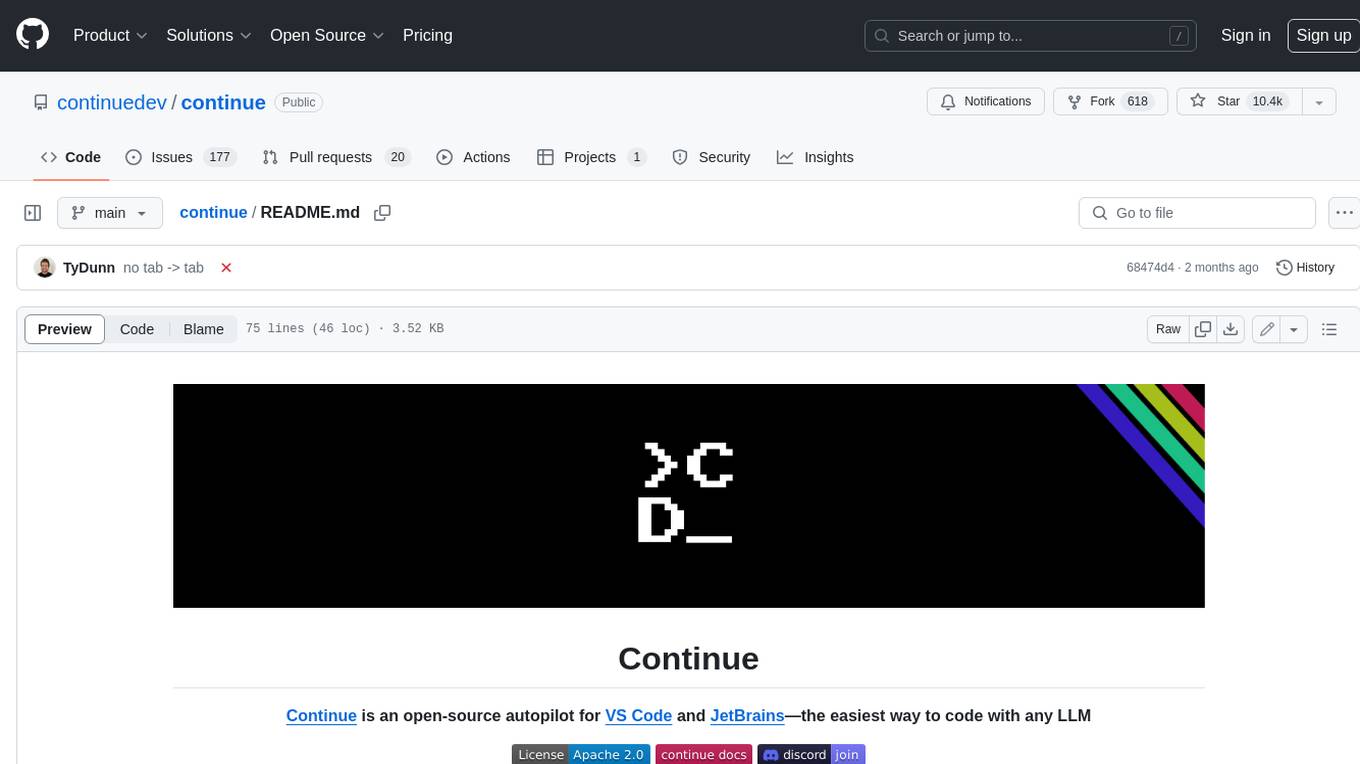

continue

Continue is an open-source autopilot for VS Code and JetBrains that allows you to code with any LLM. With Continue, you can ask coding questions, edit code in natural language, generate files from scratch, and more. Continue is easy to use and can help you save time and improve your coding skills.

anterion

Anterion is an open-source AI software engineer that extends the capabilities of `SWE-agent` to plan and execute open-ended engineering tasks, with a frontend inspired by `OpenDevin`. It is designed to help users fix bugs and prototype ideas with ease. Anterion is equipped with easy deployment and a user-friendly interface, making it accessible to users of all skill levels.

sglang

SGLang is a structured generation language designed for large language models (LLMs). It makes your interaction with LLMs faster and more controllable by co-designing the frontend language and the runtime system. The core features of SGLang include: - **A Flexible Front-End Language**: This allows for easy programming of LLM applications with multiple chained generation calls, advanced prompting techniques, control flow, multiple modalities, parallelism, and external interaction. - **A High-Performance Runtime with RadixAttention**: This feature significantly accelerates the execution of complex LLM programs by automatic KV cache reuse across multiple calls. It also supports other common techniques like continuous batching and tensor parallelism.

ChatDBG

ChatDBG is an AI-based debugging assistant for C/C++/Python/Rust code that integrates large language models into a standard debugger (`pdb`, `lldb`, `gdb`, and `windbg`) to help debug your code. With ChatDBG, you can engage in a dialog with your debugger, asking open-ended questions about your program, like `why is x null?`. ChatDBG will _take the wheel_ and steer the debugger to answer your queries. ChatDBG can provide error diagnoses and suggest fixes. As far as we are aware, ChatDBG is the _first_ debugger to automatically perform root cause analysis and to provide suggested fixes.

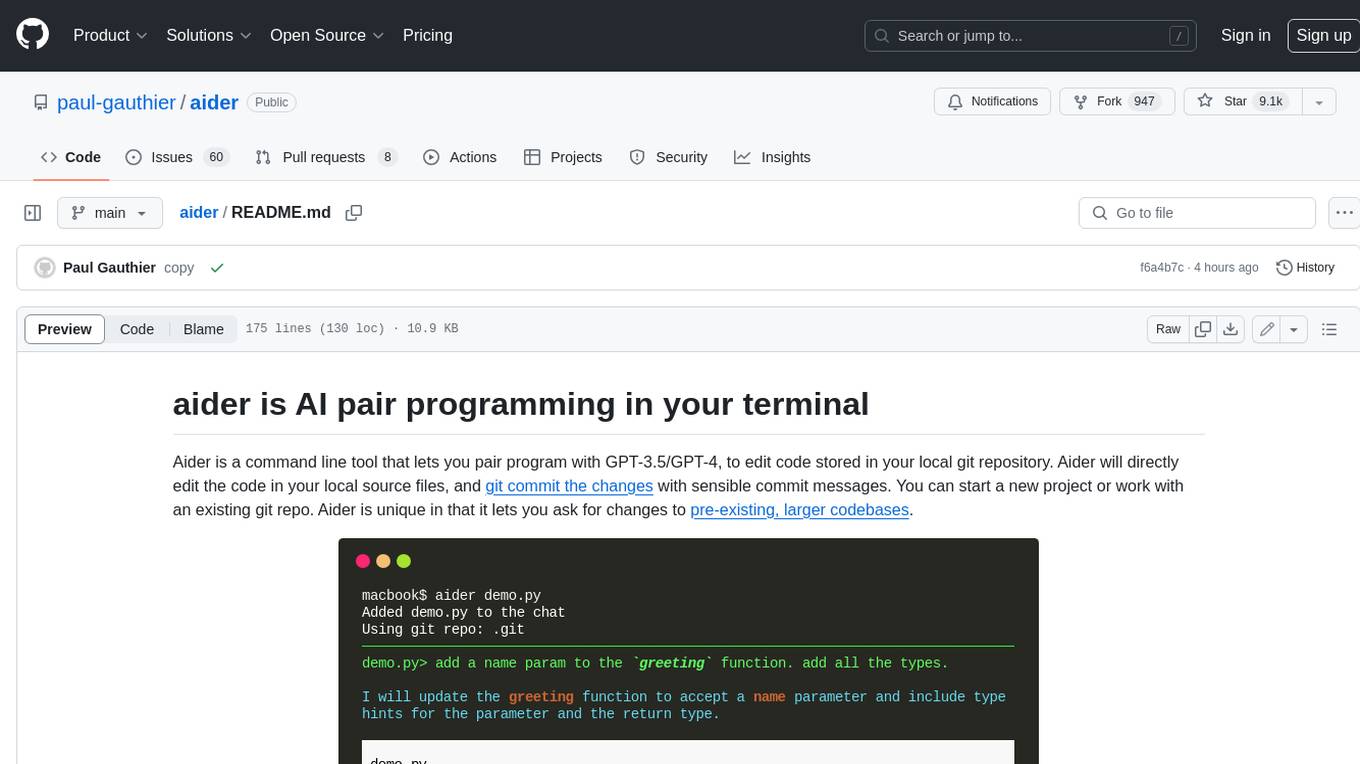

aider

Aider is a command-line tool that lets you pair program with GPT-3.5/GPT-4 to edit code stored in your local git repository. Aider will directly edit the code in your local source files and git commit the changes with sensible commit messages. You can start a new project or work with an existing git repo. Aider is unique in that it lets you ask for changes to pre-existing, larger codebases.

chatgpt-web

ChatGPT Web is a web application that provides access to the ChatGPT API. It offers two non-official methods to interact with ChatGPT: through the ChatGPTAPI (using the `gpt-3.5-turbo-0301` model) or through the ChatGPTUnofficialProxyAPI (using a web access token). The ChatGPTAPI method is more reliable but requires an OpenAI API key, while the ChatGPTUnofficialProxyAPI method is free but less reliable. The application includes features such as user registration and login, synchronization of conversation history, customization of API keys and sensitive words, and management of users and keys. It also provides a user interface for interacting with ChatGPT and supports multiple languages and themes.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.