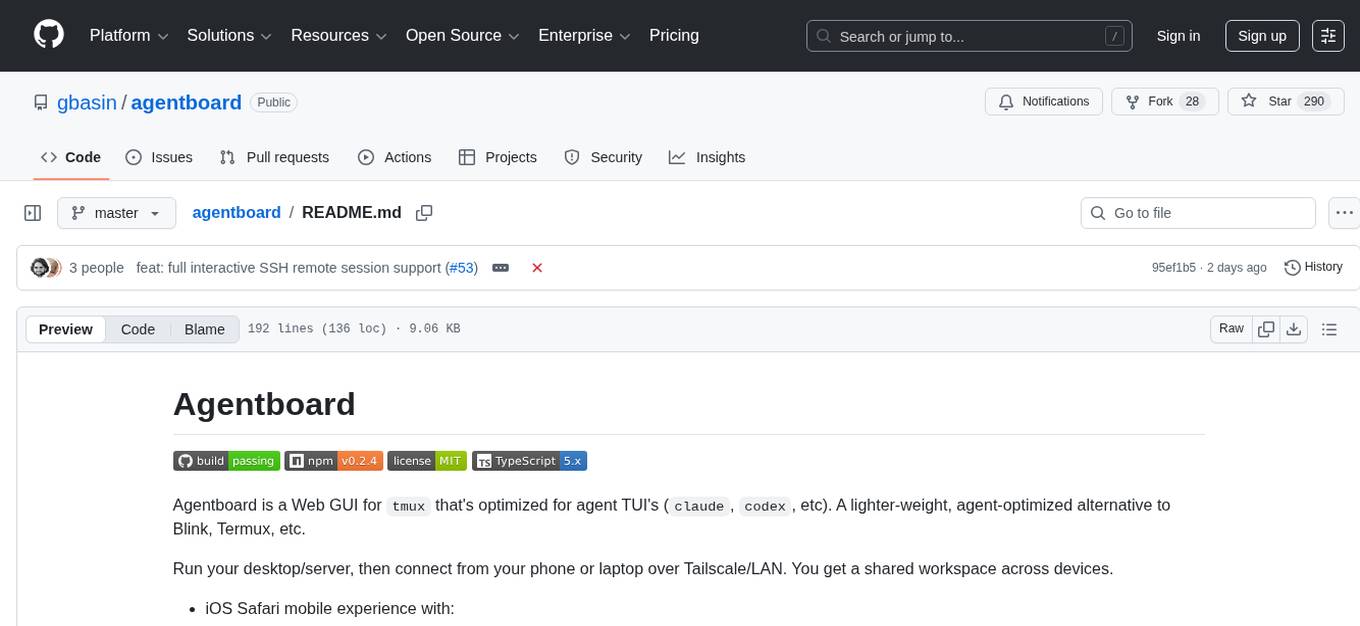

agentboard

Web GUI for tmux optimized for AI agent TUIs, with support for iOS Safari and for Mac with keyboard shortcuts

Stars: 290

Agentboard is a Web GUI for tmux optimized for agent TUIs such as Claude and Codex. It provides a shared workspace across devices, with paste support, touch scrolling, virtual arrow keys, log tracking, and session pinning. Users can interact with tmux sessions from any device through a live terminal stream. The tool handles session discovery, status inference, and terminal I/O streaming.

README:

Agentboard is a Web GUI for tmux that's optimized for agent TUIs (Claude, Codex, etc.). It is a lighter-weight, agent-optimized alternative to Blink, Termux, etc.

Run it on your desktop or server, then connect from your phone or laptop over Tailscale or your LAN. You get a shared workspace across devices.

- iOS Safari mobile experience with:
  - Paste support (including images)
  - Touch scrolling
  - Virtual arrow keys / d-pad
  - Quick keys toolbar (ctrl, esc, etc.)

- Out-of-the-box log tracking and matching for Claude, Codex, and Pi — auto-matches sessions to active tmux windows, with one-click restore for inactive sessions.

- Shows the last user prompt for each session, so you can remember what each agent is working on

- Pin agent TUI sessions to auto-resume them when the server restarts (useful if your machine reboots or tmux dies)

    ┌─────────────┐    SSH     ┌─────────────┐
    │ Remote Host ├───────────►│             │   WebSocket   ┌────────────┐
    │   (tmux)    │            │  Agentboard ├──────────────►│  Browser   │
    └─────────────┘    tmux    │   Server    │               │ (React +   │
    ┌─────────────┐    CLI     │             │               │  xterm.js) │
    │ Local tmux  ├───────────►│ - discover  │               └────────────┘
    │  sessions   │            │   sessions  │
    └─────────────┘            │ - parse     │
    ┌─────────────┐    read    │   agent     │
    │ Agent logs  ├───────────►│   logs      │
    │ ~/.claude/  │            └─────────────┘
    └─────────────┘

- Session discovery — polls local tmux windows and (optionally) remote hosts over SSH

- Status inference — reads pane content and Claude/Codex JSONL logs to determine if each agent is working, waiting for input, or asking for permission

- Live terminal — streams I/O through the server so you can interact with any session from any device
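The discovery step above can be pictured as listing tmux windows and parsing the result. The following is a minimal sketch, not Agentboard's actual implementation: the `|`-delimited format string, the `TmuxWindow` type, and the `parseWindows` function are assumptions made for illustration. Only the tmux format variables (`#{session_name}`, `#{window_index}`, `#{window_name}`) are standard.

```typescript
// Hypothetical shape for one discovered tmux window (not Agentboard's real type).
interface TmuxWindow {
  session: string;
  index: number;
  name: string;
}

// Parse lines of the assumed form "session|index|name", e.g. as produced by:
//   tmux list-windows -a -F '#{session_name}|#{window_index}|#{window_name}'
function parseWindows(output: string): TmuxWindow[] {
  const windows: TmuxWindow[] = [];
  for (const line of output.split("\n")) {
    if (line.trim() === "") continue;
    const first = line.indexOf("|");
    const second = line.indexOf("|", first + 1);
    if (first < 0 || second < 0) continue; // skip malformed lines
    const rawIndex = line.slice(first + 1, second);
    if (!/^\d+$/.test(rawIndex)) continue; // window index must be numeric
    windows.push({
      session: line.slice(0, first),
      index: Number(rawIndex),
      // Everything after the second delimiter is the name, so names may contain "|".
      name: line.slice(second + 1),
    });
  }
  return windows;
}
```

A server would run the tmux command on a timer (see `REFRESH_INTERVAL_MS`) and diff the parsed result against the previous poll. The sketch assumes session names do not themselves contain `|`.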

[Screenshots: Terminal, Sessions, Pinning]

[Screenshots: Terminal, Controls]

- tmux (`brew install tmux` / `apt install tmux`)

- Tailscale (recommended) or any network path to your machine

    npm install -g @gbasin/agentboard
    agentboard

Or run directly:

    npx @gbasin/agentboard

Then open `http://localhost:4040` (or `http://<your-machine>:4040` from another device).

For persistent deployment on Linux, see systemd/README.md.

Requires Bun 1.3.6+ (see Troubleshooting).

    bun install
    bun run dev

Open `http://<your-machine>:5173` (Vite dev server). In production, the UI is served from the backend port (default 4040).

Production:

    bun run build
    bun run start

For persistent deployment on Linux, see systemd/README.md.

| Action | Mac | Windows/Linux |
|---|---|---|
| Previous session | Ctrl+Option+[ | Ctrl+Shift+[ |
| Next session | Ctrl+Option+] | Ctrl+Shift+] |
| New session | Ctrl+Option+N | Ctrl+Shift+N |
| Kill session | Ctrl+Option+X | Ctrl+Shift+X |

    PORT=4040
    HOSTNAME=0.0.0.0
    TMUX_SESSION=agentboard
    REFRESH_INTERVAL_MS=5000
    DISCOVER_PREFIXES=work,external
    PRUNE_WS_SESSIONS=true
    TERMINAL_MODE=pty
    TERMINAL_MONITOR_TARGETS=true
    VITE_ALLOWED_HOSTS=nuc,myserver
    AGENTBOARD_DB_PATH=~/.agentboard/agentboard.db
    AGENTBOARD_INACTIVE_MAX_AGE_HOURS=24
    AGENTBOARD_EXCLUDE_PROJECTS=<empty>,/workspace
    AGENTBOARD_HOST=blade
    AGENTBOARD_REMOTE_HOSTS=mba,carbon,worm
    AGENTBOARD_REMOTE_POLL_MS=2000
    AGENTBOARD_REMOTE_TIMEOUT_MS=4000
    AGENTBOARD_REMOTE_STALE_MS=45000
    AGENTBOARD_REMOTE_SSH_OPTS=-o BatchMode=yes -o ConnectTimeout=3
    AGENTBOARD_REMOTE_ALLOW_ATTACH=false
    AGENTBOARD_REMOTE_ALLOW_CONTROL=false

HOSTNAME controls which interfaces the server binds to (default 0.0.0.0 for network access; use 127.0.0.1 for local-only).

DISCOVER_PREFIXES lets you discover and control windows from other tmux sessions. If unset, all sessions except the managed one are discovered.
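As a rough illustration of the rule just described, the check could look like the sketch below. It assumes that `DISCOVER_PREFIXES` entries are matched as session-name prefixes and that the managed session is always skipped; `shouldDiscover` is a hypothetical helper, not a function from the Agentboard codebase.

```typescript
// Decide whether a tmux session should be picked up by discovery.
// Assumption: DISCOVER_PREFIXES entries are matched as session-name prefixes.
function shouldDiscover(
  sessionName: string,
  managedSession: string,
  discoverPrefixes: string | undefined,
): boolean {
  // The managed session (TMUX_SESSION) is handled directly, not discovered.
  if (sessionName === managedSession) return false;

  const prefixes = (discoverPrefixes ?? "")
    .split(",")
    .map((p) => p.trim())
    .filter((p) => p !== "");

  // Unset (or empty) means: discover every other session.
  if (prefixes.length === 0) return true;

  return prefixes.some((p) => sessionName.startsWith(p));
}
```

With `DISCOVER_PREFIXES=work,external`, a session named `work-api` would be discovered and one named `personal` would not.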

PRUNE_WS_SESSIONS removes orphaned agentboard-ws-* tmux sessions on startup (set to false to disable).

TERMINAL_MODE selects terminal I/O strategy: pty (default, grouped session) or pipe-pane (PTY-less, works in daemon/systemd/docker without -t).

TERMINAL_MONITOR_TARGETS (pipe-pane only) polls tmux to detect closed targets (set to false to disable).

VITE_ALLOWED_HOSTS allows access to the Vite dev server from other hostnames. Useful with Tailscale MagicDNS - add your machine name (e.g., nuc) to access the dev server at http://nuc:5173 from other devices on your tailnet.

All persistent data is stored in ~/.agentboard/: session database (agentboard.db) and logs (agentboard.log). Override paths with AGENTBOARD_DB_PATH and LOG_FILE.

AGENTBOARD_INACTIVE_MAX_AGE_HOURS limits inactive sessions shown in the UI to those with recent activity (default: 24 hours). Older sessions remain in the database but are not displayed or processed for orphan rematch.

AGENTBOARD_EXCLUDE_PROJECTS filters out sessions from specific project directories (comma-separated). Use <empty> to exclude sessions with no project path. Useful for hiding automated/spam sessions.
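One way to read this setting is sketched below. It assumes a session is excluded only when its project path equals a listed directory exactly; the real matching rule (for example, whether subdirectories also match) is not stated here. `isExcludedProject` is a hypothetical helper.

```typescript
// Token from the README that stands for "session has no project path".
const EMPTY_TOKEN = "<empty>";

// Assumption: a session is excluded when its project path equals a listed
// directory exactly; the real matching rule may differ.
function isExcludedProject(
  projectPath: string | null,
  excludeProjects: string | undefined,
): boolean {
  const entries = (excludeProjects ?? "")
    .split(",")
    .map((e) => e.trim())
    .filter((e) => e !== "");

  if (projectPath === null || projectPath === "") {
    return entries.includes(EMPTY_TOKEN);
  }
  return entries.some((e) => e !== EMPTY_TOKEN && e === projectPath);
}
```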

AGENTBOARD_SKIP_MATCHING_PATTERNS controls which orphan sessions skip expensive window matching (comma-separated). Defaults: <codex-exec> (headless Codex exec sessions), /private/tmp/*, /private/var/folders/*, /var/folders/*, /tmp/*. Patterns support trailing * for prefix matching. Set to empty string to disable skip matching entirely.
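The trailing-`*` prefix rule can be sketched as follows. The default list is copied from the description above; everything else is an assumption for illustration: patterns without `*` are compared for exact equality, the `<codex-exec>` token is treated as a plain string rather than given special handling, and the function names are hypothetical.

```typescript
// Defaults as listed in the README.
const DEFAULT_SKIP_PATTERNS: string[] = [
  "<codex-exec>",
  "/private/tmp/*",
  "/private/var/folders/*",
  "/var/folders/*",
  "/tmp/*",
];

// Unset -> defaults; empty string -> no patterns (skip matching disabled).
function parseSkipPatterns(envValue: string | undefined): string[] {
  if (envValue === undefined) return DEFAULT_SKIP_PATTERNS;
  return envValue
    .split(",")
    .map((p) => p.trim())
    .filter((p) => p !== "");
}

// A trailing "*" means prefix match; otherwise compare exactly (assumption).
function matchesPattern(value: string, pattern: string): boolean {
  if (pattern.endsWith("*")) {
    return value.startsWith(pattern.slice(0, -1));
  }
  return value === pattern;
}

function shouldSkipMatching(projectPath: string, patterns: string[]): boolean {
  return patterns.some((p) => matchesPattern(projectPath, p));
}
```

Note that under this reading `/tmp/*` matches `/tmp/build-123` but not the bare directory `/tmp`, because the prefix kept after stripping the `*` is `/tmp/`.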

AGENTBOARD_HOST sets the host label for local sessions (default: hostname).

AGENTBOARD_REMOTE_HOSTS enables remote tmux polling over SSH. Provide a comma-separated list of hosts (e.g., mba,carbon,worm). Remote sessions show live status (working/waiting/permission) via pane content capture over SSH.

AGENTBOARD_REMOTE_POLL_MS, AGENTBOARD_REMOTE_TIMEOUT_MS, and AGENTBOARD_REMOTE_STALE_MS control remote poll cadence, SSH timeout, and stale host cutoff.
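To make the three timing knobs concrete, here is a small sketch of how they might be read and how a stale-host check could work. The fallback numbers are the example values from the listing above, used here as assumed fallbacks; `parseRemotePollConfig`, `readMs`, and `isHostStale` are hypothetical names, and the strict "older than" comparison is also an assumption.

```typescript
interface RemotePollConfig {
  pollMs: number; // how often each remote host is polled
  timeoutMs: number; // how long a single SSH call may take
  staleMs: number; // age after which a host's data is considered stale
}

// Read a positive integer setting, falling back when it is missing or invalid.
function readMs(raw: string | undefined, fallback: number): number {
  const value = Number.parseInt(raw ?? "", 10);
  return Number.isFinite(value) && value > 0 ? value : fallback;
}

function parseRemotePollConfig(env: Record<string, string | undefined>): RemotePollConfig {
  return {
    pollMs: readMs(env["AGENTBOARD_REMOTE_POLL_MS"], 2000),
    timeoutMs: readMs(env["AGENTBOARD_REMOTE_TIMEOUT_MS"], 4000),
    staleMs: readMs(env["AGENTBOARD_REMOTE_STALE_MS"], 45000),
  };
}

// A host counts as stale once its last successful poll is older than staleMs.
function isHostStale(lastSuccessAt: number, now: number, staleMs: number): boolean {
  return now - lastSuccessAt > staleMs;
}
```

In a Bun or Node process, `parseRemotePollConfig(process.env)` would supply the real environment.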

AGENTBOARD_REMOTE_SSH_OPTS appends extra SSH options (space-separated).

AGENTBOARD_REMOTE_ALLOW_ATTACH enables interactive terminal attach to remote sessions (input, resize, copy-mode). When false (default), remote sessions are view-only.

AGENTBOARD_REMOTE_ALLOW_CONTROL enables destructive remote session management (create, kill, rename) via the UI. Setting this to true implies AGENTBOARD_REMOTE_ALLOW_ATTACH=true. Kill and rename are restricted to agentboard-managed sessions: externally-discovered remote sessions cannot be killed or renamed even with control enabled.
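The interaction between the two remote permission flags can be summarized in a short sketch. It assumes the flags are enabled only by the literal string `true`, and it takes "agentboard-managed" as a boolean supplied by the caller, since how the server determines that is not described here. All names are hypothetical.

```typescript
interface RemotePermissions {
  attach: boolean; // input, resize, copy-mode on remote sessions
  control: boolean; // create, kill, rename on remote sessions
}

// Control implies attach; both default to false (view-only).
function resolveRemotePermissions(env: Record<string, string | undefined>): RemotePermissions {
  const control = env["AGENTBOARD_REMOTE_ALLOW_CONTROL"] === "true";
  const attach = control || env["AGENTBOARD_REMOTE_ALLOW_ATTACH"] === "true";
  return { attach, control };
}

// Kill and rename additionally require that the remote session is agentboard-managed.
function canKillOrRenameRemote(perms: RemotePermissions, managedByAgentboard: boolean): boolean {
  return perms.control && managedByAgentboard;
}
```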

SSH multiplexing (recommended): Each poll cycle opens SSH connections to every remote host. Enable SSH connection multiplexing to reuse connections and reduce overhead from ~200-500ms to ~5ms per poll. Add to your ~/.ssh/config:

    Host *
      ControlMaster auto
      ControlPath ~/.ssh/sockets/%r@%h-%p
      ControlPersist 600

Then create the sockets directory: `mkdir -p ~/.ssh/sockets && chmod 700 ~/.ssh/sockets`

    LOG_LEVEL=info                          # debug | info | warn | error (default: info)
    LOG_FILE=~/.agentboard/agentboard.log   # default; set empty to disable file logging

Console output is pretty-printed in development, JSON in production (NODE_ENV=production). File output is always JSON. Set LOG_FILE= (empty) to disable file logging.
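The logging rules above can be condensed into a small resolver. This is a sketch under stated assumptions, not Agentboard's code: `resolveLogging` and its return shape are hypothetical, and falling back to `info` for an unrecognized `LOG_LEVEL` is an assumption.

```typescript
const LOG_LEVELS = ["debug", "info", "warn", "error"] as const;
type LogLevel = (typeof LOG_LEVELS)[number];

interface LoggingConfig {
  level: LogLevel;
  file: string | null; // null means file logging is disabled
  consoleFormat: "pretty" | "json";
}

function isLogLevel(value: string): value is LogLevel {
  return (LOG_LEVELS as readonly string[]).includes(value);
}

function resolveLogging(env: Record<string, string | undefined>): LoggingConfig {
  const rawLevel = env["LOG_LEVEL"] ?? "info";
  const rawFile = env["LOG_FILE"];
  return {
    // Unknown levels fall back to the default (assumption).
    level: isLogLevel(rawLevel) ? rawLevel : "info",
    // Unset -> default path; empty string -> disabled.
    file: rawFile === undefined ? "~/.agentboard/agentboard.log" : rawFile === "" ? null : rawFile,
    // JSON on the console only in production; file output is always JSON.
    consoleFormat: env["NODE_ENV"] === "production" ? "json" : "pretty",
  };
}
```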

If you see infinite `open terminal failed: not a terminal` errors, you need to upgrade Bun:

    bun upgrade

Root cause: Bun versions prior to 1.3.6 had a bug where the `terminal` option in `Bun.spawn()` incorrectly set stdin to /dev/null instead of the PTY. Since `tmux attach` requires stdin to be a terminal, it fails immediately. This was fixed in Bun 1.3.6.
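If you want to check the version requirement described above in a script, a comparison along these lines would do. It is a minimal sketch: `isBunVersionSupported` and `parseVersion` are hypothetical helpers, and pre-release suffixes are ignored. In a Bun process the running version string is available as `Bun.version`.

```typescript
// Extract the leading "major.minor.patch" triple; returns null if absent.
function parseVersion(version: string): [number, number, number] | null {
  const m = /^(\d+)\.(\d+)\.(\d+)/.exec(version.trim());
  if (m === null) return null;
  return [Number(m[1]), Number(m[2]), Number(m[3])];
}

// True when `version` is at least `minimum` (default: the 1.3.6 fix release).
function isBunVersionSupported(version: string, minimum: string = "1.3.6"): boolean {
  const have = parseVersion(version);
  const need = parseVersion(minimum);
  if (have === null || need === null) return false;
  for (let i = 0; i < 3; i++) {
    // The first differing component decides the comparison.
    if (have[i] !== need[i]) return have[i] > need[i];
  }
  return true; // all three components are equal
}
```

Comparing numeric components rather than raw strings matters here: as strings, `"1.10.0"` would sort before `"1.3.6"`.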

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for agentboard

Similar Open Source Tools

agentboard

Agentboard is a Web GUI for tmux optimized for agent TUI's like claude and codex. It provides a shared workspace across devices with features such as paste support, touch scrolling, virtual arrow keys, log tracking, and session pinning. Users can interact with tmux sessions from any device through a live terminal stream. The tool allows session discovery, status inference, and terminal I/O streaming for efficient agent management.

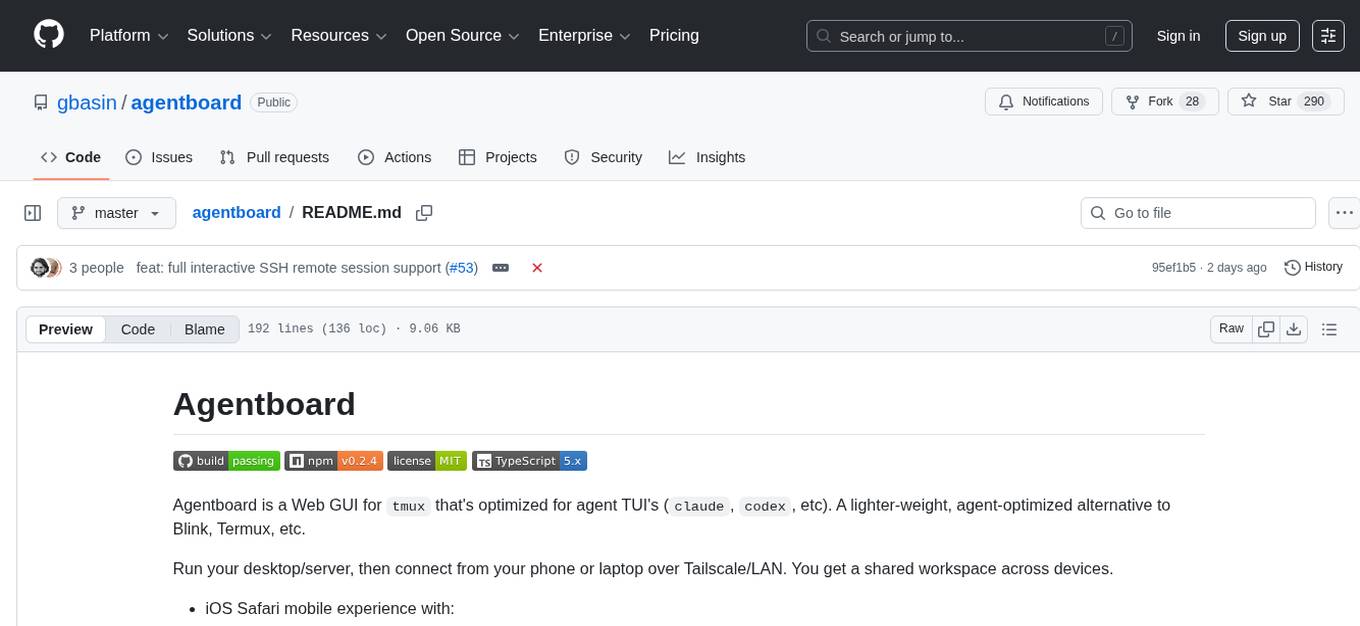

multi-agent-shogun

multi-agent-shogun is a system that runs multiple AI coding CLI instances simultaneously, orchestrating them like a feudal Japanese army. It supports Claude Code, OpenAI Codex, GitHub Copilot, and Kimi Code. The system allows you to command your AI army with zero coordination cost, enabling parallel execution, non-blocking workflow, cross-session memory, event-driven communication, and full transparency. It also features skills discovery, phone notifications, pane border task display, shout mode, and multi-CLI support.

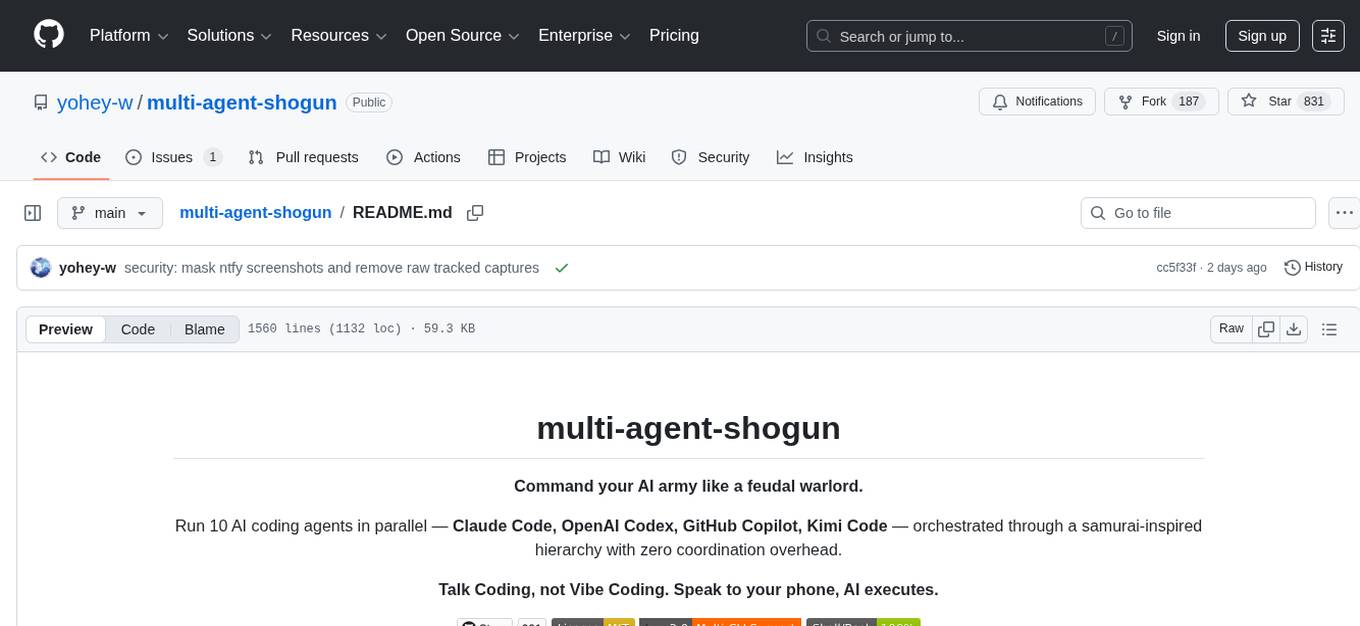

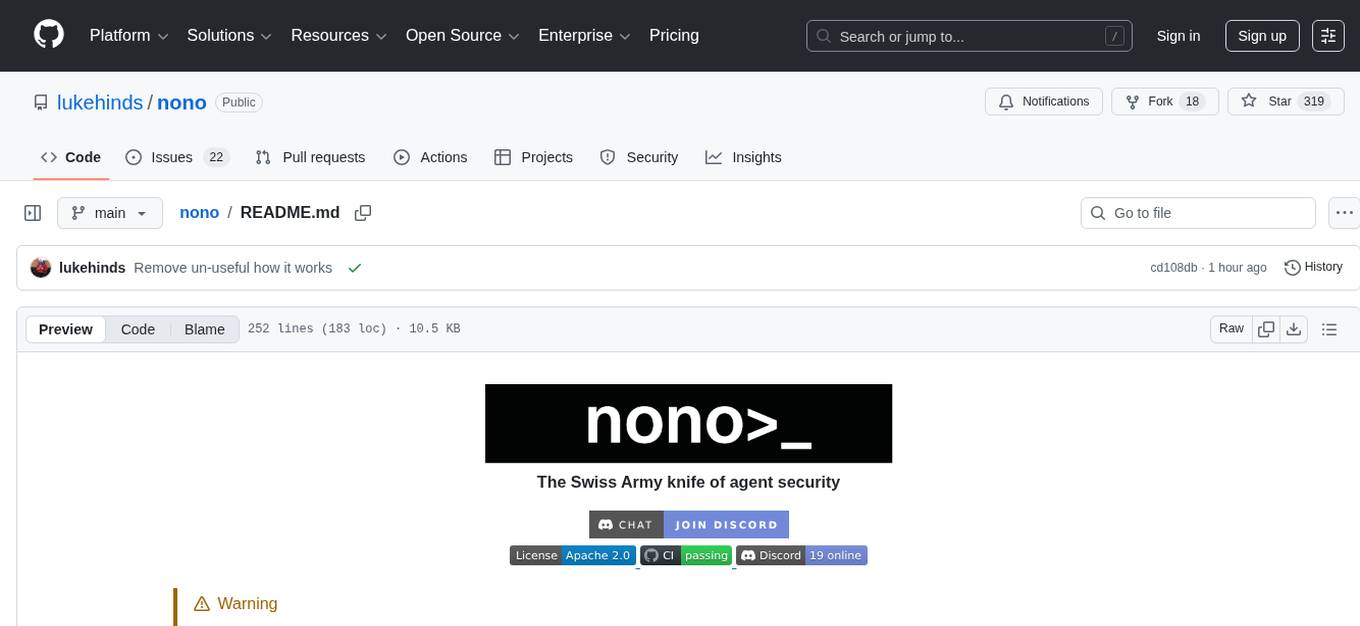

nono

nono is a secure, kernel-enforced capability shell for running AI agents and any POSIX style process. It leverages OS security primitives to create an environment where unauthorized operations are structurally impossible. It provides protections against destructive commands and securely stores API keys, tokens, and secrets. The tool is agent-agnostic, works with any AI agent or process, and blocks dangerous commands by default. It follows a capability-based security model with defense-in-depth, ensuring secure execution of commands and protecting sensitive data.

httpjail

httpjail is a cross-platform tool designed for monitoring and restricting HTTP/HTTPS requests from processes using network isolation and transparent proxy interception. It provides process-level network isolation, HTTP/HTTPS interception with TLS certificate injection, script-based and JavaScript evaluation for custom request logic, request logging, default deny behavior, and zero-configuration setup. The tool operates on Linux and macOS, creating an isolated network environment for target processes and intercepting all HTTP/HTTPS traffic through a transparent proxy enforcing user-defined rules.

Archon

Archon is an AI meta-agent designed to autonomously build, refine, and optimize other AI agents. It serves as a practical tool for developers and an educational framework showcasing the evolution of agentic systems. Through iterative development, Archon demonstrates the power of planning, feedback loops, and domain-specific knowledge in creating robust AI agents.

VT.ai

VT.ai is a multimodal AI platform that offers dynamic conversation routing with SemanticRouter, multi-modal interactions (text/image/audio), an assistant framework with code interpretation, real-time response streaming, cross-provider model switching, and local model support with Ollama integration. It supports various AI providers such as OpenAI, Anthropic, Google Gemini, Groq, Cohere, and OpenRouter, providing a wide range of core capabilities for AI orchestration.

vibe-remote

Vibe Remote is a tool that allows developers to code using AI agents through Slack or Discord, eliminating the need for a laptop or IDE. It provides a seamless experience for coding tasks, enabling users to interact with AI agents in real-time, delegate tasks, and monitor progress. The tool supports multiple coding agents, offers a setup wizard for easy installation, and ensures security by running locally on the user's machine. Vibe Remote enhances productivity by reducing context-switching and enabling parallel task execution within isolated workspaces.

aiconfigurator

The `aiconfigurator` tool assists in finding a strong starting configuration for disaggregated serving in AI deployments. It helps optimize throughput at a given latency by evaluating thousands of configurations based on model, GPU count, and GPU type. The tool models LLM inference using collected data for a target machine and framework, running via CLI and web app. It generates configuration files for deployment with Dynamo, offering features like customized configuration, all-in-one automation, and tuning with advanced features. The tool estimates performance by breaking down LLM inference into operations, collecting operation execution times, and searching for strong configurations. Supported features include models like GPT and operations like attention, KV cache, GEMM, AllReduce, embedding, P2P, element-wise, MoE, MLA BMM, TRTLLM versions, and parallel modes like tensor-parallel and pipeline-parallel.

lihil

Lihil is a performant, productive, and professional web framework designed to make Python the mainstream programming language for web development. It is 100% test covered and strictly typed, offering fast performance, ergonomic API, and built-in solutions for common problems. Lihil is suitable for enterprise web development, delivering robust and scalable solutions with best practices in microservice architecture and related patterns. It features dependency injection, OpenAPI docs generation, error response generation, data validation, message system, testability, and strong support for AI features. Lihil is ASGI compatible and uses starlette as its ASGI toolkit, ensuring compatibility with starlette classes and middlewares. The framework follows semantic versioning and has a roadmap for future enhancements and features.

OpenSpec

OpenSpec is a tool for spec-driven development, aligning humans and AI coding assistants to agree on what to build before any code is written. It adds a lightweight specification workflow that ensures deterministic, reviewable outputs without the need for API keys. With OpenSpec, stakeholders can draft change proposals, review and align with AI assistants, implement tasks based on agreed specs, and archive completed changes for merging back into the source-of-truth specs. It works seamlessly with existing AI tools, offering shared visibility into proposed, active, or archived work.

mcp-debugger

mcp-debugger is a Model Context Protocol (MCP) server that provides debugging tools as structured API calls. It enables AI agents to perform step-through debugging of multiple programming languages using the Debug Adapter Protocol (DAP). The tool supports multi-language debugging with clean adapter patterns, including Python debugging via debugpy, JavaScript (Node.js) debugging via js-debug, and Rust debugging via CodeLLDB. It offers features like mock adapter for testing, STDIO and SSE transport modes, zero-runtime dependencies, Docker and npm packages for deployment, structured JSON responses for easy parsing, path validation to prevent crashes, and AI-aware line context for intelligent breakpoint placement with code context.

LangGraph-Expense-Tracker

LangGraph Expense tracker is a small project that explores the possibilities of LangGraph. It allows users to send pictures of invoices, which are then structured and categorized into expenses and stored in a database. The project includes functionalities for invoice extraction, database setup, and API configuration. It consists of various modules for categorizing expenses, creating database tables, and running the API. The database schema includes tables for categories, payment methods, and expenses, each with specific columns to track transaction details. The API documentation is available for reference, and the project utilizes LangChain for processing expense data.

sandbox

AIO Sandbox is an all-in-one agent sandbox environment that combines Browser, Shell, File, MCP operations, and VSCode Server in a single Docker container. It provides a unified, secure execution environment for AI agents and developers, with features like unified file system, multiple interfaces, secure execution, zero configuration, and agent-ready MCP-compatible APIs. The tool allows users to run shell commands, perform file operations, automate browser tasks, and integrate with various development tools and services.

skillshare

One source of truth for AI CLI skills. Sync everywhere with one command — from personal to organization-wide. Stop managing skills tool-by-tool. `skillshare` gives you one shared skill source and pushes it everywhere your AI agents work. Safe by default with non-destructive merge mode. True bidirectional flow with `collect`. Cross-machine ready with Git-native `push`/`pull`. Team + project friendly with global skills for personal workflows and repo-scoped collaboration. Visual control panel with `skillshare ui` for browsing, install, target management, and sync status in one place.

DeepTutor

DeepTutor is an AI-powered personalized learning assistant that offers a suite of modules for massive document knowledge Q&A, interactive learning visualization, knowledge reinforcement with practice exercise generation, deep research, and idea generation. The tool supports multi-agent collaboration, dynamic topic queues, and structured outputs for various tasks. It provides a unified system entry for activity tracking, knowledge base management, and system status monitoring. DeepTutor is designed to streamline learning and research processes by leveraging AI technologies and interactive features.

spatz-2

Spatz-2 is a complete, fullstack template for Svelte, utilizing technologies such as Sveltekit, Pocketbase, OpenAI, Vercel AI SDK, TailwindCSS, svelte-animations, and Zod. It offers features like user authentication, admin dashboard, dark/light mode themes, AI chatbot, guestbook, and forms with client/server validation. The project structure includes components, stores, routes, APIs, and icons. Spatz-2 aims to provide a futuristic web framework for building fast web apps with advanced functionalities and easy customization.

For similar tasks

agentboard

Agentboard is a Web GUI for tmux optimized for agent TUI's like claude and codex. It provides a shared workspace across devices with features such as paste support, touch scrolling, virtual arrow keys, log tracking, and session pinning. Users can interact with tmux sessions from any device through a live terminal stream. The tool allows session discovery, status inference, and terminal I/O streaming for efficient agent management.

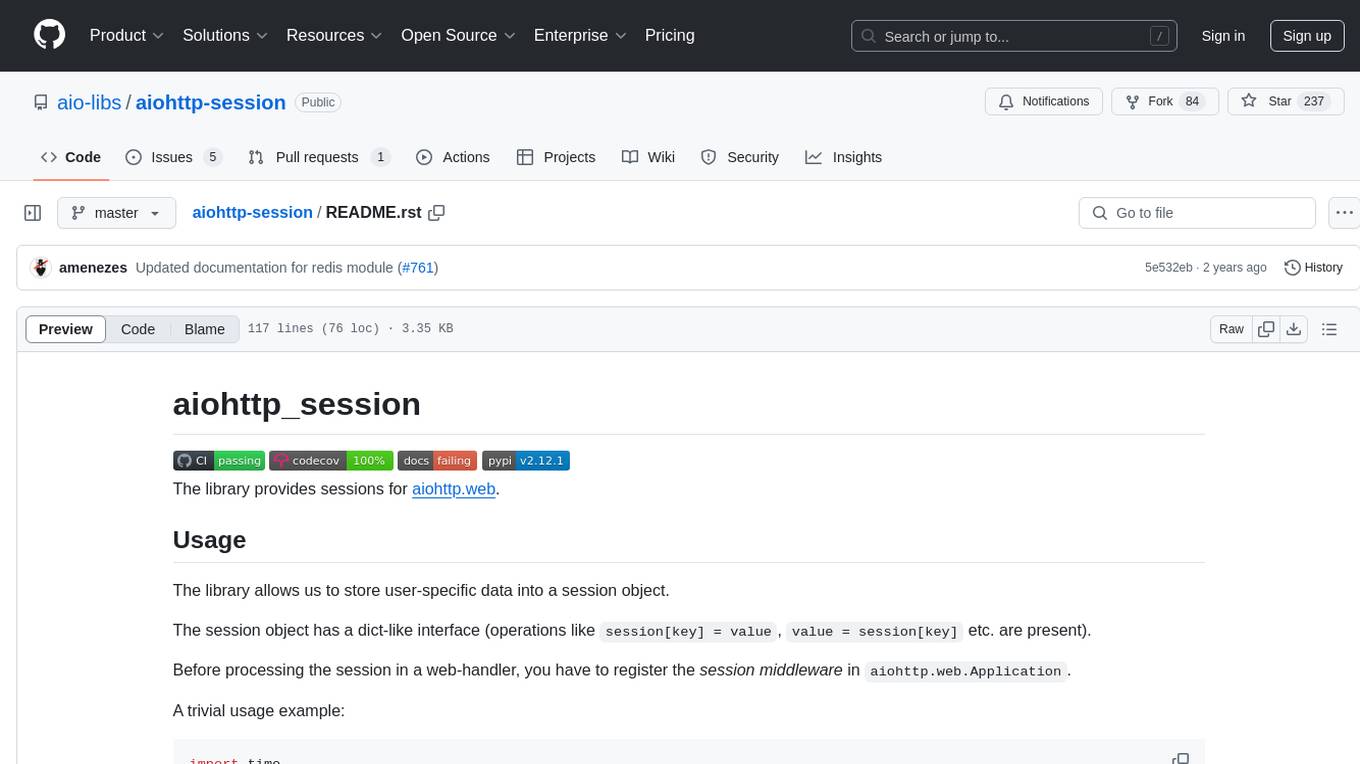

aiohttp-session

aiohttp_session is a Python library that provides session management for aiohttp.web applications. It allows storing user-specific data in session objects with a dict-like interface. The library offers different session storage options, including SimpleCookieStorage for testing, EncryptedCookieStorage for secure data storage, and RedisStorage for storing data in Redis. Users can easily integrate session management into their aiohttp.web applications by registering the session middleware. The library is designed to simplify session handling and enhance the security of web applications.

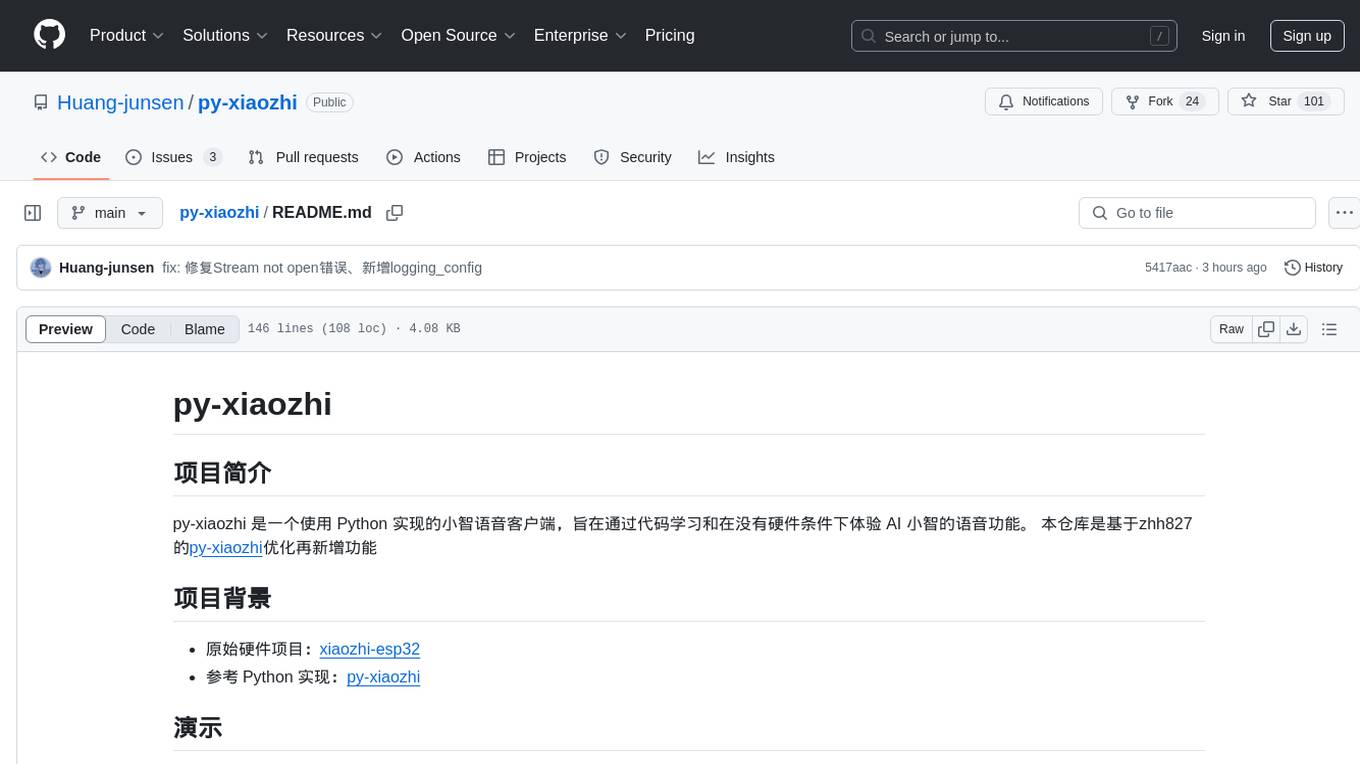

py-xiaozhi

py-xiaozhi is a Python-based XiaoZhi voice client designed for learning code and experiencing AI XiaoZhi's voice functions without hardware conditions. It features voice interaction, graphical interface, volume control, session management, encrypted audio transmission, CLI mode, and automatic copying of verification codes and opening browsers for first-time users. The project aims to optimize and add new features to zhh827's py-xiaozhi based on the original hardware project xiaozhi-esp32 and the Python implementation py-xiaozhi.

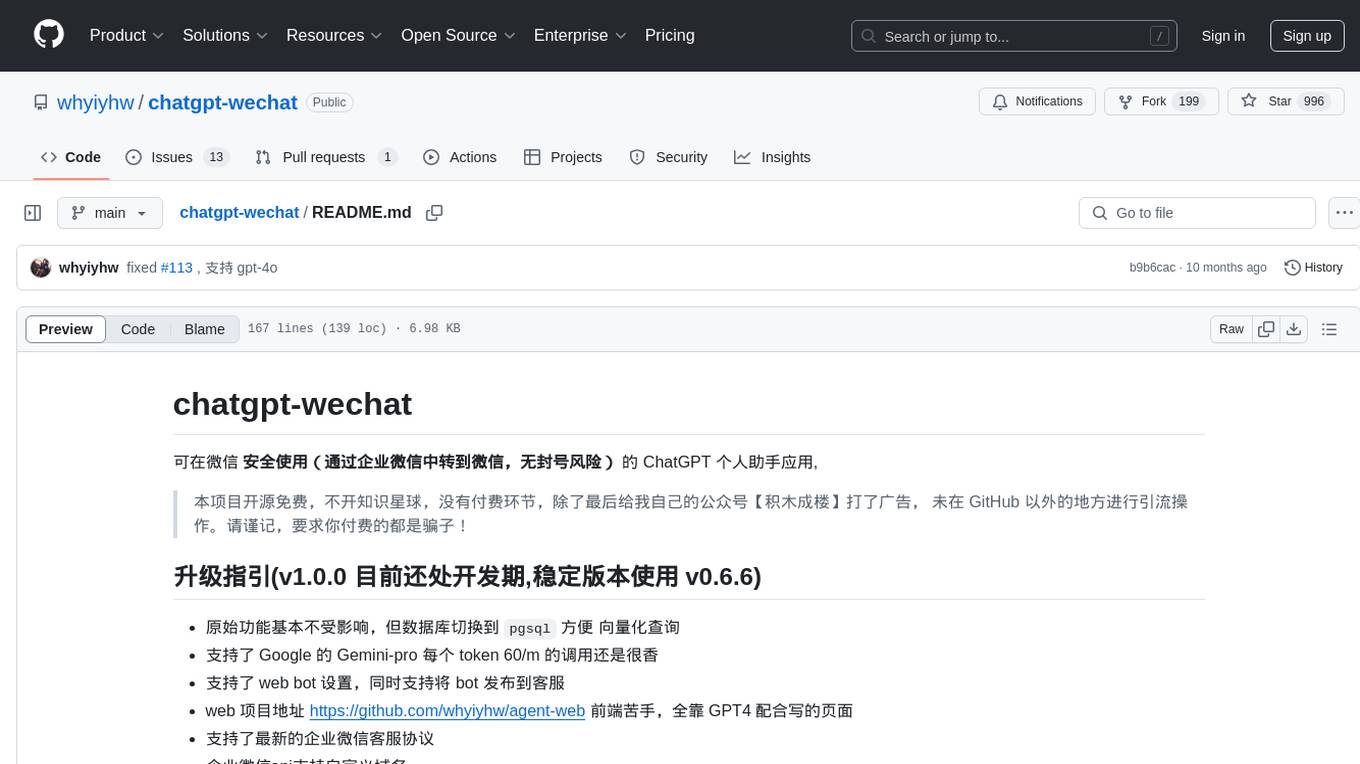

chatgpt-wechat

ChatGPT-WeChat is a personal assistant application that can be safely used on WeChat through enterprise WeChat without the risk of being banned. The project is open source and free, with no paid sections or external traffic operations except for advertising on the author's public account '积木成楼'. It supports various features such as secure usage on WeChat, multi-channel customer service message integration, proxy support, session management, rapid message response, voice and image messaging, drawing capabilities, private data storage, plugin support, and more. Users can also develop their own capabilities following the rules provided. The project is currently in development with stable versions available for use.

crush

Crush is a versatile tool designed to enhance coding workflows in your terminal. It offers support for multiple LLMs, allows for flexible switching between models, and enables session-based work management. Crush is extensible through MCPs and works across various operating systems. It can be installed using package managers like Homebrew and NPM, or downloaded directly. Crush supports various APIs like Anthropic, OpenAI, Groq, and Google Gemini, and allows for customization through environment variables. The tool can be configured locally or globally, and supports LSPs for additional context. Crush also provides options for ignoring files, allowing tools, and configuring local models. It respects `.gitignore` files and offers logging capabilities for troubleshooting and debugging.

SWE-ReX

SWE-ReX is a runtime interface for interacting with sandboxed shell environments, allowing AI agents to run any command on any environment. It enables agents to interact with running shell sessions, use interactive command line tools, and manage multiple shell sessions in parallel. SWE-ReX simplifies agent development and evaluation by abstracting infrastructure concerns, supporting fast parallel runs on various platforms, and disentangling agent logic from infrastructure.

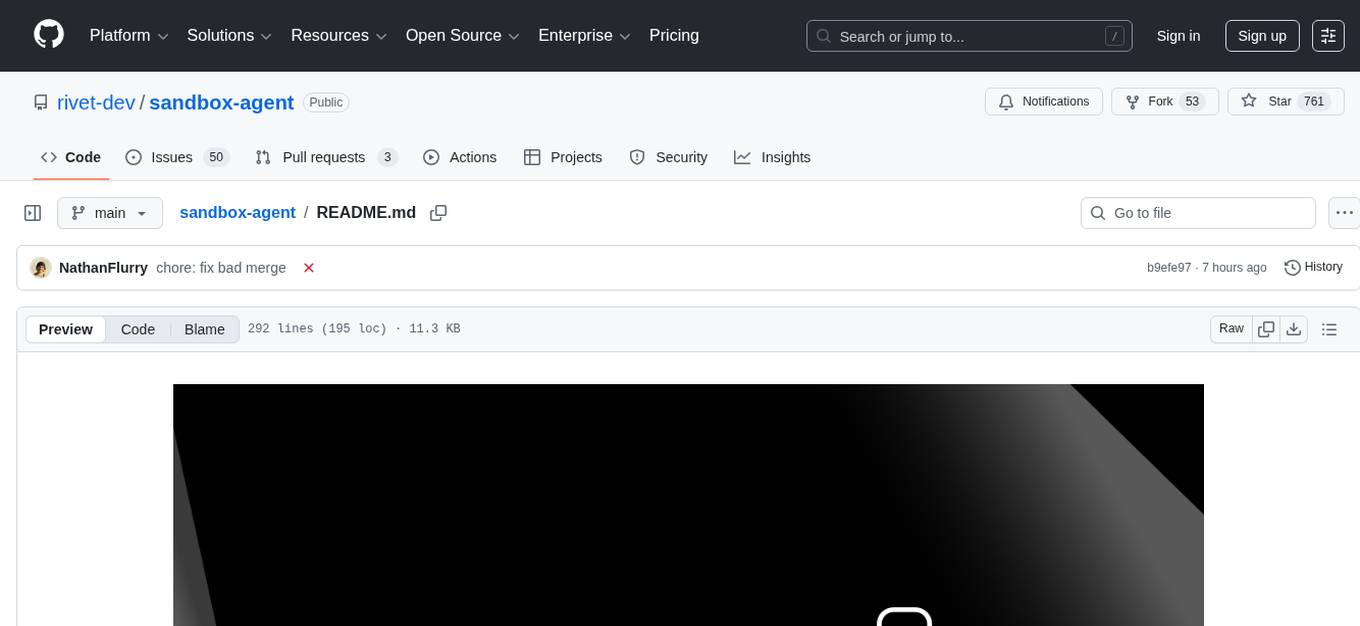

sandbox-agent

Sandbox Agent is a server that runs inside sandboxes to control coding agents remotely over HTTP. It provides a universal API to interact with coding agents like Claude Code, Codex, OpenCode, and Amp, allowing users to manage sessions, handle permissions, and stream events. The tool solves the challenges of executing coding agents remotely, standardizes APIs for different agents, and persists session data for later replay and auditing. It offers features like streaming events, human-in-the-loop interactions, automatic agent installation, and runs inside any sandbox environment.

Lim-Code

LimCode is a powerful VS Code AI programming assistant that supports multiple AI models, intelligent tool invocation, and modular architecture. It features support for various AI channels, a smart tool system for code manipulation, MCP protocol support for external tool extension, intelligent context management, session management, and more. Users can install LimCode from the plugin store or via VSIX, or build it from the source code. The tool offers a rich set of features for AI programming and code manipulation within the VS Code environment.

For similar jobs

AirGo

AirGo is a front and rear end separation, multi user, multi protocol proxy service management system, simple and easy to use. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API. * **Highly performant** : web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O * **Ease of use** : user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing * **Dynamic batching** : aggregate requests from different users for batched inference and distribute results back * **Pipelined stages** : spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads * **Cloud friendly** : designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems * **Do one thing well** : focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.