mcp-graphql

Model Context Protocol server for GraphQL

Stars: 62

mcp-graphql is a Model Context Protocol server that enables Large Language Models (LLMs) to interact with GraphQL APIs. It provides schema introspection and query execution capabilities, allowing models to dynamically discover and use GraphQL APIs. The server offers tools for retrieving the GraphQL schema and executing queries against the endpoint. Mutations are disabled by default for security reasons. Users can install mcp-graphql via Smithery or manually to Claude Desktop. It is recommended to carefully consider enabling mutations in production environments to prevent unauthorized data modifications.

README:

A Model Context Protocol server that enables LLMs to interact with GraphQL APIs. This implementation provides schema introspection and query execution capabilities, allowing models to discover and use GraphQL APIs dynamically.

Run mcp-graphql with the correct endpoint, it will automatically try to introspect your queries.

| Argument | Description | Default |

|---|---|---|

--endpoint |

GraphQL endpoint URL | http://localhost:4000/graphql |

--headers |

JSON string containing headers for requests | {} |

--enable-mutations |

Enable mutation operations (disabled by default) | false |

--name |

Name of the MCP server | mcp-graphql |

--schema |

Path to a local GraphQL schema file (optional) | - |

# Basic usage with a local GraphQL server

npx mcp-graphql --endpoint http://localhost:3000/graphql

# Using with custom headers

npx mcp-graphql --endpoint https://api.example.com/graphql --headers '{"Authorization":"Bearer token123"}'

# Enable mutation operations

npx mcp-graphql --endpoint http://localhost:3000/graphql --enable-mutations

# Using a local schema file instead of introspection

npx mcp-graphql --endpoint http://localhost:3000/graphql --schema ./schema.graphqlThe server provides two main tools:

-

introspect-schema: This tool retrieves the GraphQL schema. Use this first if you don't have access to the schema as a resource. This uses either the local schema file or an introspection query.

-

query-graphql: Execute GraphQL queries against the endpoint. By default, mutations are disabled unless

--enable-mutationsis specified.

- graphql-schema: The server exposes the GraphQL schema as a resource that clients can access. This is either the local schema file or based on an introspection query.

To install GraphQL MCP Server for Claude Desktop automatically via Smithery:

npx -y @smithery/cli install mcp-graphql --client claudeIt can be manually installed to Claude:

{

"mcpServers": {

"mcp-graphql": {

"command": "npx",

"args": ["mcp-graphql", "--endpoint", "http://localhost:3000/graphql"]

}

}

}Mutations are disabled by default as a security measure to prevent an LLM from modifying your database or service data. Consider carefully before enabling mutations in production environments.

This is a very generic implementation where it allows for complete introspection and for your users to do whatever (including mutations). If you need a more specific implementation I'd suggest to just create your own MCP and lock down tool calling for clients to only input specific query fields and/or variables. You can use this as a reference.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for mcp-graphql

Similar Open Source Tools

mcp-graphql

mcp-graphql is a Model Context Protocol server that enables Large Language Models (LLMs) to interact with GraphQL APIs. It provides schema introspection and query execution capabilities, allowing models to dynamically discover and use GraphQL APIs. The server offers tools for retrieving the GraphQL schema and executing queries against the endpoint. Mutations are disabled by default for security reasons. Users can install mcp-graphql via Smithery or manually to Claude Desktop. It is recommended to carefully consider enabling mutations in production environments to prevent unauthorized data modifications.

mcp-server-qdrant

The mcp-server-qdrant repository is an official Model Context Protocol (MCP) server designed for keeping and retrieving memories in the Qdrant vector search engine. It acts as a semantic memory layer on top of the Qdrant database. The server provides tools like 'qdrant-store' for storing information in the database and 'qdrant-find' for retrieving relevant information. Configuration is done using environment variables, and the server supports different transport protocols. It can be installed using 'uvx' or Docker, and can also be installed via Smithery for Claude Desktop. The server can be used with Cursor/Windsurf as a code search tool by customizing tool descriptions. It can store code snippets and help developers find specific implementations or usage patterns. The repository is licensed under the Apache License 2.0.

mcp-server

The UI5 Model Context Protocol server offers tools to improve the developer experience when working with agentic AI tools. It helps with creating new UI5 projects, detecting and fixing UI5-specific errors, and providing additional UI5-specific information for agentic AI tools. The server supports various tools such as scaffolding new UI5 applications, fetching UI5 API documentation, providing UI5 development best practices, extracting metadata and configuration from UI5 projects, retrieving version information for the UI5 framework, analyzing and reporting issues in UI5 code, offering guidelines for converting UI5 applications to TypeScript, providing UI Integration Cards development best practices, scaffolding new UI Integration Cards, and validating the manifest against the UI5 Manifest schema. The server requires Node.js and npm versions specified, along with an MCP client like VS Code or Cline. Configuration options are available for customizing the server's behavior, and specific setup instructions are provided for MCP clients like VS Code and Cline.

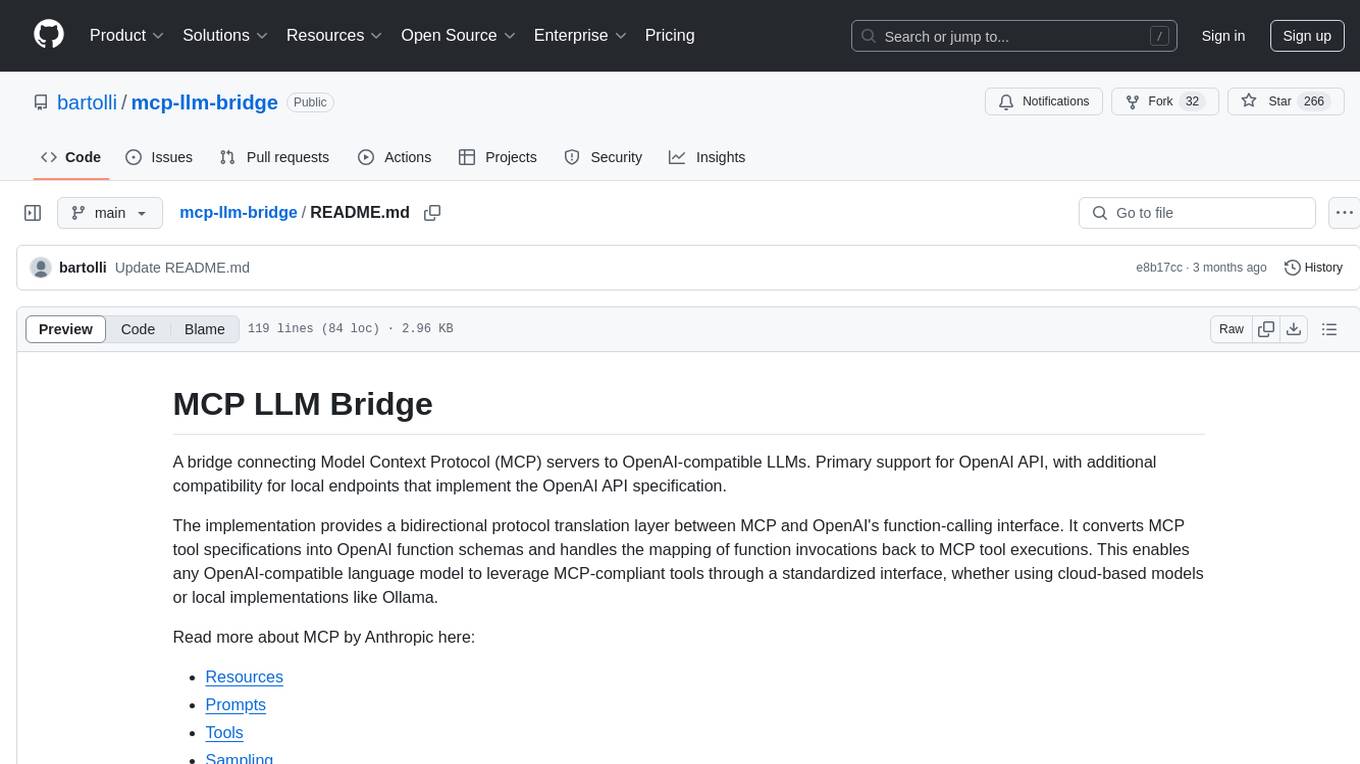

mcp-llm-bridge

The MCP LLM Bridge is a tool that acts as a bridge connecting Model Context Protocol (MCP) servers to OpenAI-compatible LLMs. It provides a bidirectional protocol translation layer between MCP and OpenAI's function-calling interface, enabling any OpenAI-compatible language model to leverage MCP-compliant tools through a standardized interface. The tool supports primary integration with the OpenAI API and offers additional compatibility for local endpoints that implement the OpenAI API specification. Users can configure the tool for different endpoints and models, facilitating the execution of complex queries and tasks using cloud-based or local models like Ollama and LM Studio.

supabase-mcp

Supabase MCP Server standardizes how Large Language Models (LLMs) interact with Supabase, enabling AI assistants to manage tables, fetch config, and query data. It provides tools for project management, database operations, project configuration, branching (experimental), and development tools. The server is pre-1.0, so expect some breaking changes between versions.

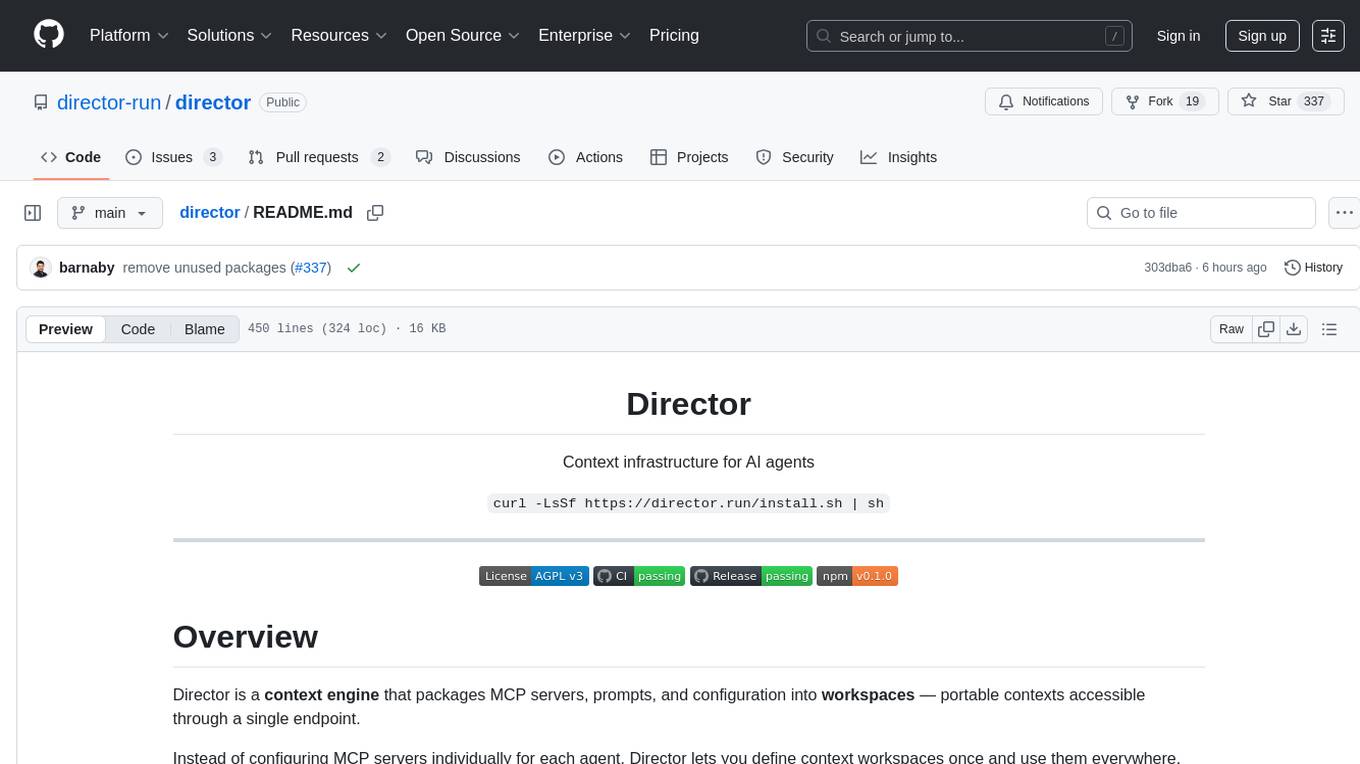

director

Director is a context infrastructure tool for AI agents that simplifies managing MCP servers, prompts, and configurations by packaging them into portable workspaces accessible through a single endpoint. It allows users to define context workspaces once and share them across different AI clients, enabling seamless collaboration, instant context switching, and secure isolation of untrusted servers without cloud dependencies or API keys. Director offers features like workspaces, universal portability, local-first architecture, sandboxing, smart filtering, unified OAuth, observability, multiple interfaces, and compatibility with all MCP clients and servers.

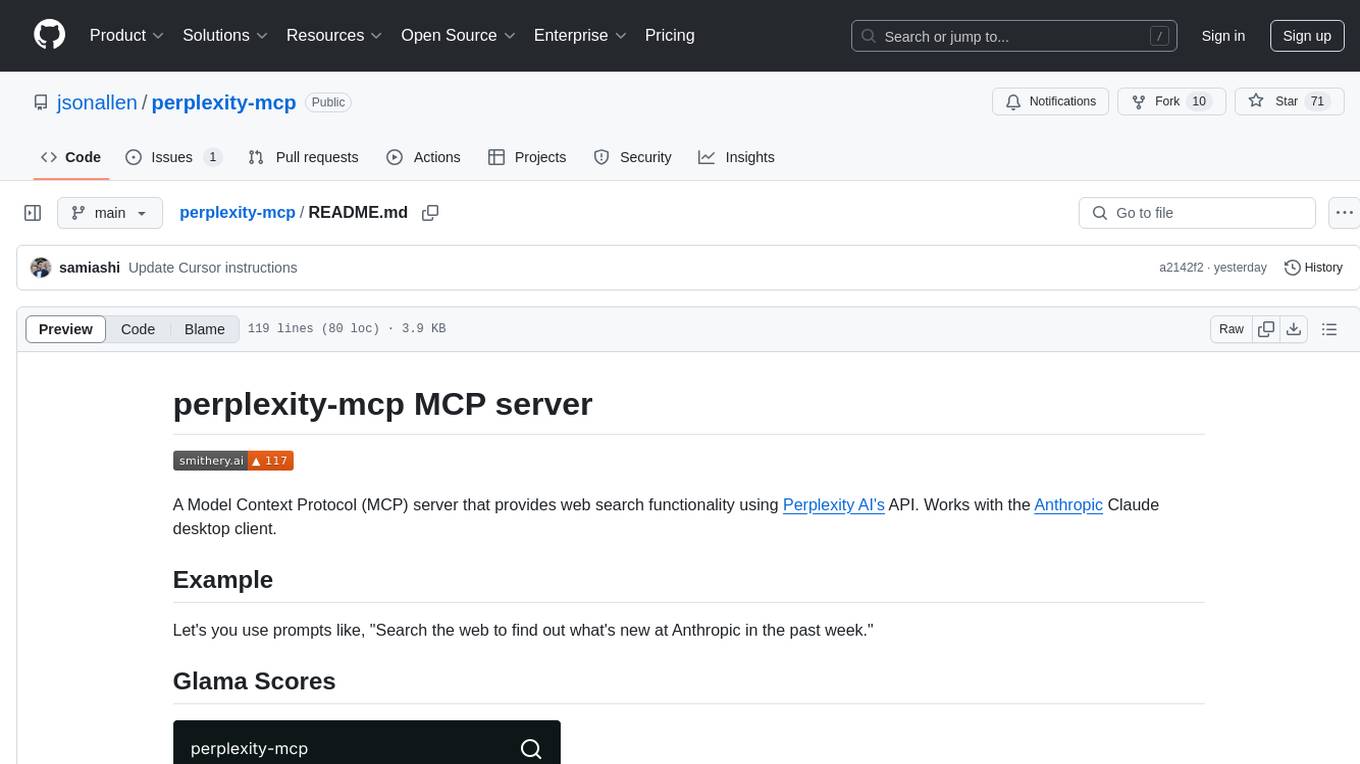

perplexity-mcp

Perplexity-mcp is a Model Context Protocol (MCP) server that provides web search functionality using Perplexity AI's API. It works with the Anthropic Claude desktop client. The server allows users to search the web with specific queries and filter results by recency. It implements the perplexity_search_web tool, which takes a query as a required argument and can filter results by day, week, month, or year. Users need to set up environment variables, including the PERPLEXITY_API_KEY, to use the server. The tool can be installed via Smithery and requires UV for installation. It offers various models for different contexts and can be added as an MCP server in Cursor or Claude Desktop configurations.

cursor-tools

cursor-tools is a CLI tool designed to enhance AI agents with advanced skills, such as web search, repository context, documentation generation, GitHub integration, Xcode tools, and browser automation. It provides features like Perplexity for web search, Gemini 2.0 for codebase context, and Stagehand for browser operations. The tool requires API keys for Perplexity AI and Google Gemini, and supports global installation for system-wide access. It offers various commands for different tasks and integrates with Cursor Composer for AI agent usage.

raglite

RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) with PostgreSQL or SQLite. It offers configurable options for choosing LLM providers, database types, and rerankers. The toolkit is fast and permissive, utilizing lightweight dependencies and hardware acceleration. RAGLite provides features like PDF to Markdown conversion, multi-vector chunk embedding, optimal semantic chunking, hybrid search capabilities, adaptive retrieval, and improved output quality. It is extensible with a built-in Model Context Protocol server, customizable ChatGPT-like frontend, document conversion to Markdown, and evaluation tools. Users can configure RAGLite for various tasks like configuring, inserting documents, running RAG pipelines, computing query adapters, evaluating performance, running MCP servers, and serving frontends.

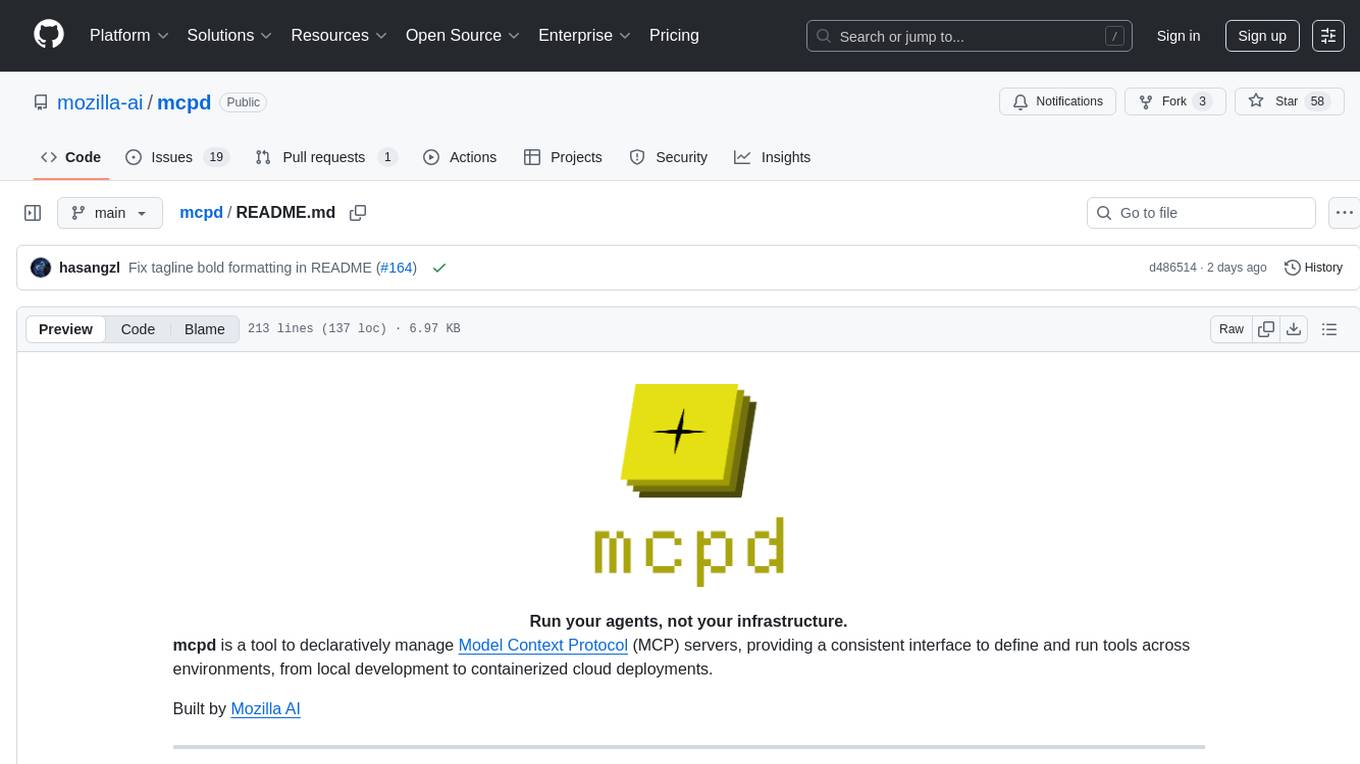

mcpd

mcpd is a tool developed by Mozilla AI to declaratively manage Model Context Protocol (MCP) servers, enabling consistent interface for defining and running tools across different environments. It bridges the gap between local development and enterprise deployment by providing secure secrets management, declarative configuration, and seamless environment promotion. mcpd simplifies the developer experience by offering zero-config tool setup, language-agnostic tooling, version-controlled configuration files, enterprise-ready secrets management, and smooth transition from local to production environments.

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

mysql_mcp_server

A Model Context Protocol (MCP) server that enables secure interaction with MySQL databases. This server allows AI assistants to list tables, read data, and execute SQL queries through a controlled interface, making database exploration and analysis safer and more structured. It provides features such as listing available MySQL tables as resources, reading table contents, executing SQL queries with proper error handling, secure database access through environment variables, and comprehensive logging. The tool ensures security best practices by never committing environment variables or credentials, using a database user with minimal required permissions, implementing query whitelisting for production use, and monitoring and logging all database operations.

sparql-llm

This project provides tools to enhance the capabilities of Large Language Models (LLMs) in generating SPARQL queries for specific endpoints. It includes reusable components, a chat web service, and an experimental MCP server. The system integrates Retrieval-Augmented Generation (RAG) and SPARQL query validation through endpoint schemas to ensure accurate query generation on large-scale knowledge graphs. Components can work independently or as part of a chat-based system requiring endpoint metadata. Features include metadata extraction, SPARQL query validation, deployable chat system, and live example chat system at chat.expasy.org.

basic-memory

Basic Memory is a tool that enables users to build persistent knowledge through natural conversations with Large Language Models (LLMs) like Claude. It uses the Model Context Protocol (MCP) to allow compatible LLMs to read and write to a local knowledge base stored in simple Markdown files on the user's computer. The tool facilitates creating structured notes during conversations, maintaining a semantic knowledge graph, and keeping all data local and under user control. Basic Memory aims to address the limitations of ephemeral LLM interactions by providing a structured, bi-directional, and locally stored knowledge management solution.

MCPJungle

MCPJungle is a self-hosted MCP Gateway for private AI agents, serving as a registry for Model Context Protocol Servers. Developers use it to manage servers and tools centrally, while clients discover and consume tools from a single 'Gateway' MCP Server. Suitable for developers using MCP Clients like Claude & Cursor, building production-grade AI Agents, and organizations managing client-server interactions. The tool allows quick start, installation, usage, server and client setup, connection to Claude and Cursor, enabling/disabling tools, managing tool groups, authentication, enterprise features like access control and OpenTelemetry metrics. Limitations include lack of long-running connections to servers and no support for OAuth flow. Contributions are welcome.

sosumi.ai

sosumi.ai provides Apple Developer documentation in an AI-readable format by converting JavaScript-rendered pages into Markdown. It offers an HTTP API to access Apple docs, supports external Swift-DocC sites, integrates with MCP server, and provides tools like searchAppleDocumentation and fetchAppleDocumentation. The project can be self-hosted and is currently hosted on Cloudflare Workers. It is built with Hono and supports various runtimes. The application is designed for accessibility-first, on-demand rendering of Apple Developer pages to Markdown.

For similar tasks

mcp-graphql

mcp-graphql is a Model Context Protocol server that enables Large Language Models (LLMs) to interact with GraphQL APIs. It provides schema introspection and query execution capabilities, allowing models to dynamically discover and use GraphQL APIs. The server offers tools for retrieving the GraphQL schema and executing queries against the endpoint. Mutations are disabled by default for security reasons. Users can install mcp-graphql via Smithery or manually to Claude Desktop. It is recommended to carefully consider enabling mutations in production environments to prevent unauthorized data modifications.

dataline

DataLine is an AI-driven data analysis and visualization tool designed for technical and non-technical users to explore data quickly. It offers privacy-focused data storage on the user's device, supports various data sources, generates charts, executes queries, and facilitates report building. The tool aims to speed up data analysis tasks for businesses and individuals by providing a user-friendly interface and natural language querying capabilities.

aiosql

aiosql is a Python module that allows you to organize SQL statements in .sql files and load them into your Python application as methods to call. It supports various database drivers like SQLite, PostgreSQL, MySQL, MariaDB, and DuckDB. The project is an implementation of Kris Jenkins' yesql library to the Python ecosystem, allowing users to easily reuse SQL code in SQL GUIs or CLI tools. With aiosql, you can write, version control, comment, and run SQL code using files without losing the ability to use them as you would any other SQL file. It provides support for PEP 249 and asyncio based drivers, enabling users to execute parametric SQL queries from Python methods.

dbhub

DBHub is a universal database gateway that implements the Model Context Protocol (MCP) server interface. It allows MCP-compatible clients to connect to and explore different databases. The gateway supports various database resources and tools, providing capabilities such as executing queries, listing connectors, generating SQL, and explaining database elements. Users can easily configure their database connections and choose between different transport modes like stdio and sse. DBHub also offers a demo mode with a sample employee database for testing purposes.

mcp-llm-bridge

The MCP LLM Bridge is a tool that acts as a bridge connecting Model Context Protocol (MCP) servers to OpenAI-compatible LLMs. It provides a bidirectional protocol translation layer between MCP and OpenAI's function-calling interface, enabling any OpenAI-compatible language model to leverage MCP-compliant tools through a standardized interface. The tool supports primary integration with the OpenAI API and offers additional compatibility for local endpoints that implement the OpenAI API specification. Users can configure the tool for different endpoints and models, facilitating the execution of complex queries and tasks using cloud-based or local models like Ollama and LM Studio.

mysql_mcp_server

A Model Context Protocol (MCP) server that enables secure interaction with MySQL databases. This server allows AI assistants to list tables, read data, and execute SQL queries through a controlled interface, making database exploration and analysis safer and more structured. It provides features such as listing available MySQL tables as resources, reading table contents, executing SQL queries with proper error handling, secure database access through environment variables, and comprehensive logging. The tool ensures security best practices by never committing environment variables or credentials, using a database user with minimal required permissions, implementing query whitelisting for production use, and monitoring and logging all database operations.

boost

Laravel Boost accelerates AI-assisted development by providing essential context and structure for generating high-quality, Laravel-specific code. It includes an MCP server with specialized tools, AI guidelines, and a Documentation API. Boost is designed to streamline AI-assisted coding workflows by offering precise, context-aware results and extensive Laravel-specific information.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.