remix-antd-admin

🎰 Remix Antd Admin is a full-stack website solution built on React Router, Antd, and Prisma. (WIP)

Stars: 72

Remix Antd Admin is a full-stack management system built on Remix and Antd/TailwindCSS, featuring RBAC permission management and remix-i18n integration. It aims to provide a modern, simple, fast, and scalable full-stack website. The project is currently in development, transitioning from a UI-only project to a full-stack one, with a focus on a stable architecture. The frontend uses Remix data flow with server-side rendering, while the backend API uses Redux Toolkit Query, Prisma, RxJS, Zod, and Remix actions and loaders for server API services. Authorization for external API services beyond the web application is handled with JWTs via jose. Key technologies used are Remix, Express, Prisma, and PostgreSQL (pgsql).

README:

A modern full-stack web solution based on React Router, React, Antd, TailwindCSS, Prisma, and RBAC (WIP).

Remix Antd Admin is under development. If you have any suggestions or questions, feel free to open an Issue or Pull Request.

- 🚀 Routing with React Router

- 🎉 Styling with TailwindCSS

- 🔒 TypeScript by default

- 📖 Prisma as the ORM

- 🔄 Multiple data-fetching approaches: redux/loader

- 🔐 RBAC permission management

- 🌐 Internationalization with remix-i18n

- 📖 remix-antd-admin documentation
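The RBAC item in the list above can be illustrated with a minimal sketch. This is not the project's actual implementation: the `Permission` strings, the `Role` and `User` shapes, and the `hasPermission` / `requirePermission` helpers are all hypothetical names chosen for illustration. The idea is only that a user is granted a permission when any of their roles carries it.

```typescript
// Hypothetical permission names, chosen only for illustration.
type Permission = "user:read" | "user:write" | "role:manage";

// Hypothetical shapes; the real project stores these through Prisma.
interface Role {
  name: string;
  permissions: ReadonlySet<Permission>;
}

interface User {
  id: number;
  roles: Role[];
}

// A user holds a permission if any of their roles grants it.
function hasPermission(user: User, required: Permission): boolean {
  return user.roles.some((role) => role.permissions.has(required));
}

// Guard that could be called at the top of a loader or action; throws on failure.
function requirePermission(user: User, required: Permission): void {
  if (!hasPermission(user, required)) {
    throw new Error(`Forbidden: missing permission "${required}"`);
  }
}

const viewer: Role = {
  name: "viewer",
  permissions: new Set<Permission>(["user:read"]),
};
const admin: Role = {
  name: "admin",
  permissions: new Set<Permission>(["user:read", "user:write", "role:manage"]),
};

const alice: User = { id: 1, roles: [viewer] };
const bob: User = { id: 2, roles: [viewer, admin] };

console.log(hasPermission(alice, "user:write")); // false
console.log(hasPermission(bob, "user:write")); // true
```

Keeping the check in one small guard function means a route handler only needs a single call before touching data, and the role-to-permission mapping can change without editing the handlers.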

# git

git clone https://github.com/yyong008/remix-antd-admin.git

# or Gitee

git clone https://gitee.com/yyong008/remix-antd-admin.git

cd remix-antd-admin

# development

bun run dev # then open the port in your browser

# production

bun run build

├── package.json
├── Dockerfile
├── pnpm-lock.yaml
├── build/
│   ├── client/    # static assets
│   └── server/    # server-side code
└── public/        # static assets
└── server/
    └── index.js   # server startup entry file

bun run docker:build

You can use TailwindCSS, Antd, and other CSS or CSS-in-JS solutions to control styling.

If this project has helped you, please consider buying me a coffee: 💌 buy-me-a-coffee 💌

Copyright (c) 2023-present Yong-

Built with ❤️ using React Router and other open-source technologies.

Alternative AI tools for remix-antd-admin

Similar Open Source Tools

spatz

Spatz is a complete, fullstack template for Svelte that includes features such as Sveltekit for building fast web apps, Pocketbase for User Auth and Database, OpenAI for chatbots, Vercel AI SDK for AI/ML models, TailwindCSS for UI development, DaisyUI for components, and Zod for schema declaration and validation. The template provides a structured project setup with components, stores, routes, and APIs. It also offers theming and styling options with pre-loaded themes from DaisyUI. Contributions are welcomed through feature requests or pull requests.

ccprompts

ccprompts is a collection of ~70 Claude Code commands for software development workflows with agent generation capabilities. It includes safety validation and can be used directly with Claude Code or adapted for specific needs. The agent template system provides a wizard for creating specialized sub-agents (e.g., security auditors, systems architects) with standardized formatting and proper tool access. The repository is under active development, so caution is advised when using it in production environments.

buster

Buster is a modern analytics platform designed with AI in mind, focusing on self-serve experiences powered by Large Language Models. It addresses pain points in existing tools by advocating for AI-centric app development, cost-effective data warehousing, improved CI/CD processes, and empowering data teams to create powerful, user-friendly data experiences. The platform aims to revolutionize AI analytics by enabling data teams to build deep integrations and own their entire analytics stack.

spatz-2

Spatz-2 is a complete, fullstack template for Svelte, utilizing technologies such as Sveltekit, Pocketbase, OpenAI, Vercel AI SDK, TailwindCSS, svelte-animations, and Zod. It offers features like user authentication, admin dashboard, dark/light mode themes, AI chatbot, guestbook, and forms with client/server validation. The project structure includes components, stores, routes, APIs, and icons. Spatz-2 aims to provide a futuristic web framework for building fast web apps with advanced functionalities and easy customization.

conduit

Conduit is an open-source, cross-platform mobile application for Open-WebUI, providing a native mobile experience for interacting with your self-hosted AI infrastructure. It supports real-time chat, model selection, conversation management, markdown rendering, theme support, voice input, file uploads, multi-modal support, secure storage, folder management, and tools invocation. Conduit offers multiple authentication flows and follows a clean architecture pattern with Riverpod for state management, Dio for HTTP networking, WebSocket for real-time streaming, and Flutter Secure Storage for credential management.

azooKey-Desktop

azooKey-Desktop is an open-source Japanese input system for macOS that incorporates the high-precision neural kana-kanji conversion engine 'Zenzai'. It offers features such as neural kana-kanji conversion, profile prompt, history learning, user dictionary, integration with personal optimization system 'Tuner', 'nice feeling conversion' with LLM, live conversion, and native support for AZIK. The tool is currently in alpha version, and its operation is not guaranteed. Users can install it via `.pkg` file or Homebrew. Development contributions are welcome, and the project has received support from the Information-technology Promotion Agency, Japan (IPA) for the 2024 fiscal year's untapped IT human resources discovery and nurturing project.

y-gui

y-gui is a web-based graphical interface for AI chat interactions with support for multiple AI models and powerful MCP integrations. It provides interactive chat capabilities with AI models, supports multiple bot configurations, and integrates with Gmail, Google Calendar, and image generation services. The tool offers a comprehensive MCP integration system, secure authentication with Auth0 and Google login, dark/light theme support, real-time updates, and responsive design for all devices. The architecture consists of a frontend React application and a backend Cloudflare Workers with D1 storage. It allows users to manage emails, create calendar events, and generate images directly within chat conversations.

astrsk

astrsk is a tool that pushes the boundaries of AI storytelling by offering advanced AI agents, customizable response formatting, and flexible prompt editing for immersive roleplaying experiences. It provides complete AI agent control, a visual flow editor for conversation flows, and ensures 100% local-first data storage. The tool is true cross-platform with support for various AI providers and modern technologies like React, TypeScript, and Tailwind CSS. Coming soon features include cross-device sync, enhanced session customization, and community features.

ChatMate-GPT

ChatMate-GPT is a chat application based on OpenAI GPT-3, developed using React Native for Android and iOS. It allows users to chat with GPT in a conversational format and supports multiple languages and interface themes. It includes the ChatGPT-Shortcut prompt library, offers highly customizable API settings, and supports message reply features such as Markdown rendering and CSV export. It also provides iCloud synchronization for chat sessions, multiple API server settings, URL Scheme support, message sorting, font size customization, real-time message token and cost display, and more.

pegainfer

PegaInfer is a machine learning tool designed for predictive analytics and pattern recognition. It provides a user-friendly interface for training and deploying machine learning models without the need for extensive coding knowledge. With PegaInfer, users can easily analyze large datasets, make predictions, and uncover hidden patterns in their data. The tool supports various machine learning algorithms and allows for customization to suit specific use cases. Whether you are a data scientist, business analyst, or researcher, PegaInfer can help streamline your data analysis process and enhance decision-making capabilities.

Archon

Archon is an AI meta-agent designed to autonomously build, refine, and optimize other AI agents. It serves as a practical tool for developers and an educational framework showcasing the evolution of agentic systems. Through iterative development, Archon demonstrates the power of planning, feedback loops, and domain-specific knowledge in creating robust AI agents.

openkf

OpenKF (Open Knowledge Flow) is an online intelligent customer service system. It is an open-source customer service system based on OpenIM, supporting LLM (Local Knowledgebase) customer service and multi-channel customer service. It is easy to integrate with third-party systems, deploy, and perform secondary development. The system provides features like login page, config page, dashboard page, platform page, and session page. Users can quickly get started with OpenKF by following the installation and run instructions. The architecture follows MVC design with a standardized directory structure. The community encourages involvement through community meetings, contributions, and development. OpenKF is licensed under the Apache 2.0 license.

OpenOutreach

OpenOutreach is a self-hosted, open-source LinkedIn automation tool designed for B2B lead generation. It automates the entire outreach process in a stealthy, human-like way by discovering and enriching target profiles, ranking profiles using ML for smart prioritization, sending personalized connection requests, following up with custom messages after acceptance, and tracking everything in a built-in CRM with web UI. It offers features like undetectable behavior, fully customizable Python-based campaigns, local execution with CRM, easy deployment with Docker, and AI-ready templating for hyper-personalized messages.

typewhisper-mac

TypeWhisper for Mac is a speech-to-text and AI text processing tool designed for macOS. It allows users to transcribe audio using on-device AI models or cloud APIs like Groq and OpenAI, and process the results with custom LLM prompts. The tool offers features such as multiple transcription engines, on-device or cloud processing, streaming preview, file transcription, subtitle export, system-wide dictation with hotkeys, AI processing with custom prompts and translation, personalization through profiles, dictionary, snippets, and history, integration and extensibility via plugins, HTTP API, and CLI tool. The tool is designed for macOS 15.0 and later, supports Apple Silicon, and offers a multilingual UI with English and German languages.

codexia

Codexia is a powerful GUI and Toolkit for Codex CLI, offering features like fork chat, file-tree integration, notepad, git diff, built-in pdf/csv/xlsx viewer, and more. It provides multi-file format support, flexible configuration with multiple AI providers, professional UX with responsive UI, security features like sandbox execution modes, and prioritizes privacy. The tool supports interactive chat, code generation/editing, file operations with sandbox, command execution with approval, multiple AI providers, project-aware assistance, streaming responses, and built-in web search. The roadmap includes plans for MCP tool call, more file format support, better UI customization, plugin system, real-time collaboration, performance optimizations, and token count.

For similar tasks

mlcraft

Synmetrix (prev. MLCraft) is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube (Cube.js) for flexible data models that consolidate metrics from various sources, enabling downstream distribution via a SQL API for integration into BI tools, reporting, dashboards, and data science. Use cases include data democratization, business intelligence, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

synmetrix

Synmetrix is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube.js to consolidate metrics from various sources and distribute them downstream via a SQL API. Use cases include data democratization, business intelligence and reporting, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

gin-vue-admin

Gin-vue-admin is a full-stack development platform based on Vue and Gin, integrating features like JWT authentication, dynamic routing, dynamic menus, Casbin authorization, form generator, code generator, etc. It provides various example files to help users focus more on business development. The project offers detailed documentation, video tutorials for setup and deployment, and a community for support and contributions. Users need a certain level of knowledge in Golang and Vue to work with this project. It is recommended to follow the Apache2.0 license if using the project for commercial purposes.

cool-admin-java

Cool-admin-java is an open-source backend permission management system with features like AI coding, flow arrangement, modularity, and plugin support. It is used to quickly build backend applications. The system offers a modern development experience by providing functionalities such as one-click generation from API interfaces to frontend pages, drag-and-drop flow arrangement, modularized code for easy maintenance, and extensibility through plugin installation for features like payments, SMS, and emails.

iceburgcrm

Iceburg CRM is a metadata-driven CRM with AI abilities that allows users to quickly prototype any CRM. It offers features like metadata creation, import/export in multiple formats, field validation, themes, role permissions, calendar, audit logs, API, workflow, field-level relationships, module-level relationships, and more. It is built with Vue 3 on the frontend, Laravel 10 on the backend, Tailwind CSS with the DaisyUI plugin, and Inertia for routing. Users can install default, admin panel, core, custom, or AI versions. The tool supports AI Assist for module data suggestions and provides API endpoints for CRM modules, search, specific module data, record updates, and deletions. Iceburg CRM also includes custom field types, datalets, and custom seeding options.

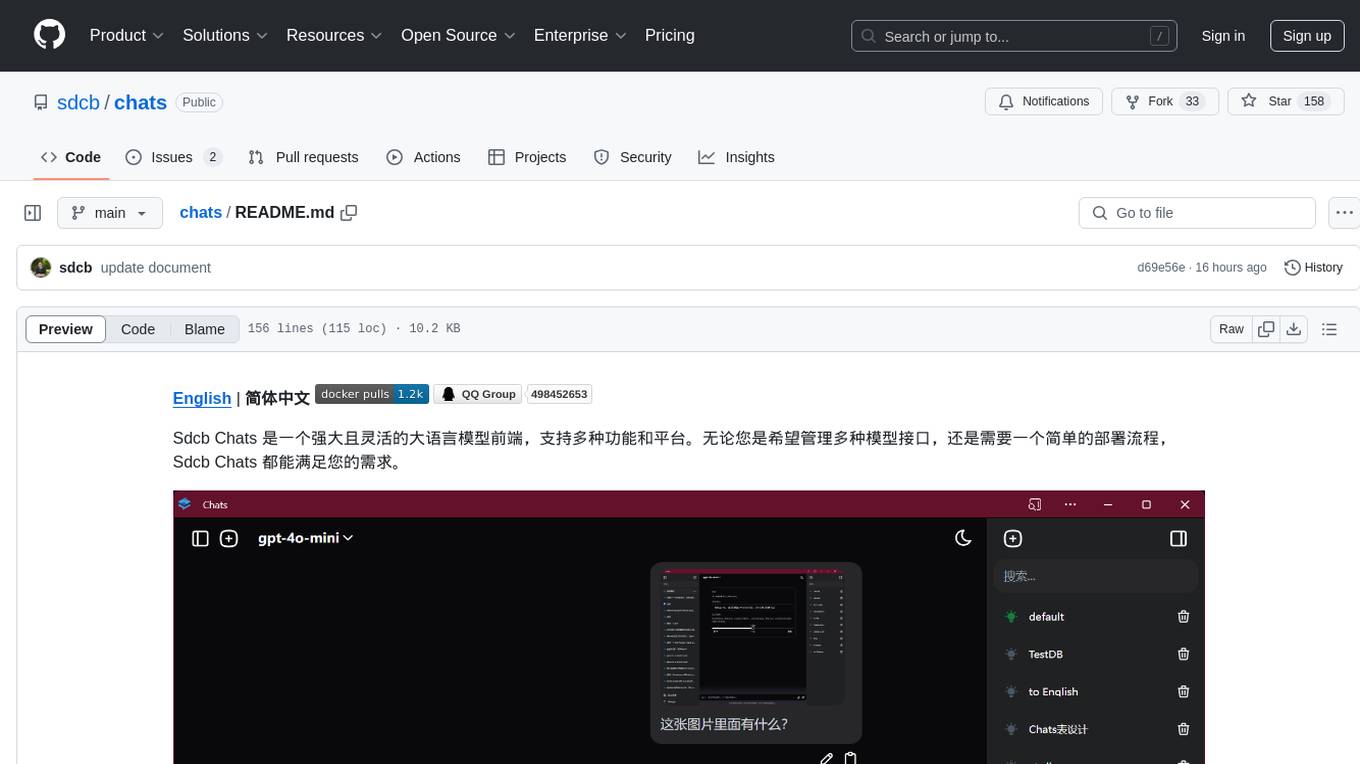

chats

Sdcb Chats is a powerful and flexible frontend for large language models, supporting multiple functions and platforms. Whether you want to manage multiple model interfaces or need a simple deployment process, Sdcb Chats can meet your needs. It supports dynamic management of multiple large language model interfaces, integrates visual models to enhance the user interaction experience, provides fine-grained user permission settings for security, and tracks and manages user account balances in real time. Models are easy to add, delete, and configure, and user chat requests are forwarded transparently based on the OpenAI protocol. It supports multiple databases, including SQLite, SQL Server, and PostgreSQL; is compatible with various file services such as local files, AWS S3, Minio, Aliyun OSS, and Azure Blob Storage; and supports multiple login methods, including Keycloak SSO and phone SMS verification.

Slurm-web

Slurm-web is an open source web dashboard designed for Slurm based HPC clusters. It provides a graphical user interface to track jobs, insights, and visualizations for monitoring HPC supercomputers. The tool offers features like interactive charts, job filtering, live status updates, node visualization, RBAC permissions, LDAP authentication, and integration with Prometheus for metrics collection.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can:

- Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action.

- Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed.

- Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment.

- Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules.

- Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks.

- GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers build cloud and AI applications more conveniently, resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like Python and TypeScript, directly defining and utilizing the cloud resources necessary for the application within their code base, such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through static program analysis and proceeds to create these resources on the specified cloud platform, simplifying the resources creation and application deployment process.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.