mlir-aie

An MLIR-based toolchain for AMD AI Engine-enabled devices.

Stars: 483

This repository contains an MLIR-based toolchain for AI Engine-enabled devices, such as AMD Ryzen™ AI and Versal™. This repository can be used to generate low-level configurations for the AI Engine portion of these devices. AI Engines are organized as a spatial array of tiles, where each tile contains AI Engine cores and/or memories. The spatial array is connected by stream switches that can be configured to route data between AI Engine tiles scheduled by their programmable Data Movement Accelerators (DMAs). This repository contains MLIR representations, with multiple levels of abstraction, to target AI Engine devices. This enables compilers and developers to program AI Engine cores, as well as describe data movements and array connectivity. A Python API is made available as a convenient interface for generating MLIR design descriptions. Backend code generation is also included, targeting the aie-rt library. This toolchain uses the AI Engine compiler tool which is part of the AMD Vitis™ software installation: these tools require a free license for use from the Product Licensing Site.

README:

This project emphasizes fast, open-source toolchains for NPU devices including LLVM-based code generation. IRON contains a close-to-metal toolkit that empowers performance engineers to create fast and efficient designs for Ryzen™ AI NPUs powered by AI Engines. It provides Python APIs that enable developers to harness the unique architectural capabilities of AMD’s NPUs. However, this project is not intended to represent an end-to-end compilation flow for all application designs; it is designed to complement, not replace, mainstream NPU tooling for inference like the AMD Ryzen™ AI Software Platform. Targeting researchers and enthusiasts, IRON is designed to unlock the full potential of NPUs for a wide range of workloads, from machine learning to digital signal processing and beyond. This repository includes programming guides and examples demonstrating the APIs. Additionally, the Peano component extends the LLVM framework by adding support for the AI Engine processor as a target architecture, enabling integration with popular compiler frontends such as clang. Developers can leverage the AIE API header library to implement efficient vectorized AIE core code in C++ that can be compiled by Peano.


The IRON Python API for Ryzen™ AI NPUs is described in the following paper:

E. Hunhoff, J. Melber, K. Denolf, A. Bisca, S. Bayliss, S. Neuendorffer, J. Fifield, J. Lo, P. Vasireddy, P. James-Roxby, E. Keller. "Efficiency, Expressivity, and Extensibility in a Close-to-Metal NPU Programming Interface". In 33rd IEEE International Symposium On Field-Programmable Custom Computing Machines, May 2025.

These instructions will guide you through everything required for building and executing a program on the Ryzen™ AI NPU, starting from a fresh bare-bones Ubuntu 24.04 or Ubuntu 24.10 install.

Be sure you have the latest BIOS on your laptop or mini-PC that enables the NPU. See here.

If starting from Ubuntu 24.04 you may need to update the Linux kernel to 6.11+ by installing the Hardware Enablement (HWE) stack:

sudo apt update

sudo apt install --install-recommends linux-generic-hwe-24.04

sudo reboot

Turn off SecureBoot (allows unsigned drivers to be installed):

BIOS → Security → Secure boot → Disable
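Before installing the HWE stack, it can help to confirm whether the running kernel already meets the 6.11 minimum mentioned above. The following is a minimal sketch, not part of the repository: the function name `kernel_at_least` is hypothetical, and it assumes GNU `sort -V` is available (as it is on Ubuntu).

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of mlir-aie): succeeds if the kernel
# release in $2 is at least the version in $1.
kernel_at_least() {
  local required="$1"
  # Drop any distro suffix such as "-26-generic" before comparing.
  local current="${2%%-*}"
  # sort -V orders version strings numerically; the current kernel is new
  # enough when the required version sorts first (or both are equal).
  [ "$(printf '%s\n%s\n' "$required" "$current" | sort -V | head -n 1)" = "$required" ]
}

if kernel_at_least 6.11 "$(uname -r)"; then
  echo "Kernel $(uname -r) is 6.11 or newer"
else
  echo "Kernel $(uname -r) is older than 6.11; the HWE stack may be needed"
fi
```

The comparison is done on version strings rather than on individual numbers so that releases such as 6.8 and 6.11 are ordered correctly.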

- Execute the scripted build process:

This script will install package dependencies, build the xdna-driver and xrt packages, and install them. These steps require sudo access.

bash ./utils/build_drivers.sh

- Reboot as directed after the script exits.

sudo reboot

- Check that the NPU is working by confirming that the device appears with xrt-smi:

source /opt/xilinx/xrt/setup.sh
xrt-smi examine

At the bottom of the output you should see:

Devices present
BDF             :  Name
------------------------------------
[0000:66:00.1]  :  NPU Strix
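The check above can also be scripted. This is a minimal sketch under stated assumptions: the helper name `has_npu_device` is hypothetical, and it assumes the device line looks like the sample output shown above (a bracketed BDF, a colon, then a name beginning with "NPU"). Other XRT versions or devices may print a different name.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of XRT or mlir-aie): reads the output of
# `xrt-smi examine` on stdin and succeeds if a device line of the form
# "[BDF] : NPU ..." is present.
has_npu_device() {
  grep -Eq '^[[:space:]]*\[[0-9a-fA-F:.]+\][[:space:]]*:[[:space:]]*NPU'
}

# Usage (assumes XRT has been set up as described above):
#   xrt-smi examine | has_npu_device && echo "NPU detected"
```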

- Install the following packages needed for MLIR-AIE:

# Python versions 3.10, 3.12 and 3.13 are currently supported by our wheels
sudo apt install \
  build-essential clang clang-14 lld lld-14 cmake ninja-build python3-venv python3-pip

- (Optional) Install opencv, which is needed for vision programming examples:

sudo apt install libopencv-dev python3-opencv
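Because the wheels are only stated to support Python 3.10, 3.12 and 3.13, it may be worth checking the interpreter version before creating a virtual environment. The sketch below is illustrative only: `python_supported_by_wheels` is a hypothetical name, and the version list is copied from the comment in the package-install step, so it would need updating if the supported set changes.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of mlir-aie): succeeds if the given
# "major.minor" Python version is one of the versions the install
# instructions list as supported by the wheels.
python_supported_by_wheels() {
  case "$1" in
    3.10|3.12|3.13) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage: query the interpreter that will create the virtual environment.
#   ver="$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')"
#   python_supported_by_wheels "$ver" || echo "No wheel listed for Python $ver"
```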

- Clone the mlir-aie repository:

git clone https://github.com/Xilinx/mlir-aie.git
cd mlir-aie

- Set up a virtual environment:

python3 -m venv ironenv
source ironenv/bin/activate
python3 -m pip install --upgrade pip

- Install the IRON library, the mlir-aie and llvm-aie compilers, and their dependencies from wheels:

For release v1.0:

# Install IRON library and mlir-aie from a wheel
python3 -m pip install mlir_aie -f https://github.com/Xilinx/mlir-aie/releases/expanded_assets/v1.0

# Install Peano from a llvm-aie wheel
python3 -m pip install https://github.com/Xilinx/llvm-aie/releases/download/nightly/llvm_aie-19.0.0.2025041501+b2a279c1-py3-none-manylinux_2_27_x86_64.manylinux_2_28_x86_64.whl

# Install basic Python requirements (still needed for release v1.0, but is no longer needed for latest wheels)
python3 -m pip install -r python/requirements.txt

# Install MLIR Python Extras
HOST_MLIR_PYTHON_PACKAGE_PREFIX=aie python3 -m pip install -r python/requirements_extras.txt

For daily latest:

# Install IRON library and mlir-aie from a wheel
python3 -m pip install mlir_aie -f https://github.com/Xilinx/mlir-aie/releases/expanded_assets/latest-wheels-2

# Install Peano from llvm-aie wheel
python3 -m pip install llvm-aie -f https://github.com/Xilinx/llvm-aie/releases/expanded_assets/nightly

# Install MLIR Python Extras
HOST_MLIR_PYTHON_PACKAGE_PREFIX=aie python3 -m pip install -r python/requirements_extras.txt

- (Optional) Install Python packages required for development and testing:

# Install Python requirements for development and testing
python3 -m pip install -r python/requirements_dev.txt

# This installs the pre-commit hooks defined in .pre-commit-config.yaml
pre-commit install

- Set up the environment:

source utils/env_setup.sh

- (Optional) Install ML Python packages for ML programming examples:

# Install Torch for ML examples
python3 -m pip install -r python/requirements_ml.txt

- (Optional) Install Jupyter Notebook Python packages:

# Install Jupyter Notebook
python3 -m pip install -r python/requirements_notebook.txt

# This creates an ipykernel (for use in notebooks) using the ironenv venv
python3 -m ipykernel install --user --name ironenv

# Only for release v1.0 and non-wheel-based installs:
# The install location is generally added to $PYTHONPATH by the `env_setup.sh` script.
# However, Jupyter notebooks don't always get access to PYTHONPATH (e.g., if they are run with
# VS Code), so we save ${MLIR_AIE_INSTALL}/python in a .pth file in the site-packages dir of the
# ironenv venv; this allows the ironenv ipykernel to find the install dir regardless of whether
# PYTHONPATH is available.
MLIR_AIE_INSTALL="$(pip show mlir_aie | grep ^Location: | awk '{print $2}')/mlir_aie"
venv_site_packages="$(python3 -c 'import sysconfig; print(sysconfig.get_paths()["purelib"])')"
echo "${MLIR_AIE_INSTALL}/python" > "${venv_site_packages}/mlir-aie.pth"
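The `grep` and `awk` pipeline above takes the second whitespace-separated field of the `Location:` line, which would truncate an install path containing spaces. A possible alternative is sketched below; `pip_show_location` is a hypothetical helper name, and the sketch assumes `pip show` prints a line beginning with `Location: ` as it does in the command above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of mlir-aie): reads `pip show <package>`
# output on stdin and prints the value of its "Location:" field,
# preserving any spaces in the path.
pip_show_location() {
  sed -n 's/^Location: //p'
}

# Usage:
#   MLIR_AIE_INSTALL="$(python3 -m pip show mlir_aie | pip_show_location)/mlir_aie"
```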

For your design of interest, for instance from programming_examples, 2 steps are needed: (i) build the AIE design and then (ii) build the host code.

- Go to the design of interest and run:

make

- Build host code and execute the design:

make run

- Continue to the IRON AIE Application Programming Guide

- Additional MLIR-AIE documentation is available on the website

- AIE API header library documentation for single-core AIE programming in C++ is available here

- If you are a university researcher or student and interested in trying these tools on our Ryzen™ AI AUP Cloud systems, please contact the AMD University Program

You may skip the Vitis™ installation step if you intend to only target AMD XDNA™/AIE-ML (AIE2) and AMD XDNA™ 2 (AIE2P) using our open-source single-core compiler Peano. Compiling with xchesscc is not supported without installing AMD Vitis™ AIE Essentials.

- Install Vitis™ AIE Essentials from Ryzen AI Software 1.3 Early Access. We will assume you use the installation directory /tools/ryzen_ai-1.3.0/vitis_aie_essentials.

This is an early access lounge; you must register and be granted access at this time.

  - Download VAIML Installer for Linux based compilation: ryzen_ai-1.3.0ea1.tgz

  - Extract the required tools:

tar -xzvf ryzen_ai-1.3.0ea1.tgz
cd ryzen_ai-1.3.0
mkdir vitis_aie_essentials
mv vitis_aie_essentials*.whl vitis_aie_essentials
cd vitis_aie_essentials
unzip vitis_aie_essentials*.whl

- Set up an AI Engine license.

  - Get a local license for AI Engine tools from https://www.xilinx.com/getlicense.

  - Copy your license file (Xilinx.lic) to your preferred location, e.g. /opt/Xilinx.lic.

- Set up your environment using the following script for Vitis™ for AIETools:

#!/bin/bash
#################################################################################
# Setup Vitis AIE Essentials
#################################################################################
export AIETOOLS_ROOT=/tools/ryzen_ai-1.3.0/vitis_aie_essentials
export PATH=$PATH:${AIETOOLS_ROOT}/bin
export LM_LICENSE_FILE=/opt/Xilinx.lic
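After sourcing the script above, a quick sanity check can catch a wrong install directory or a missing license file before a build fails. The following is a sketch only: `check_aietools_env` is a hypothetical helper, and it assumes `LM_LICENSE_FILE` holds a single file path as in the script above, not a list of paths or a license server address.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Vitis or mlir-aie): checks the two
# locations exported by the setup script.
#   $1 = AIETOOLS_ROOT directory, $2 = license file path
check_aietools_env() {
  local root="$1" license="$2" status=0
  # The setup script appends ${AIETOOLS_ROOT}/bin to PATH, so it should exist.
  if [ ! -d "$root/bin" ]; then
    echo "missing directory: $root/bin" >&2
    status=1
  fi
  if [ ! -f "$license" ]; then
    echo "missing license file: $license" >&2
    status=1
  fi
  return "$status"
}

# Usage, after sourcing the setup script:
#   check_aietools_env "$AIETOOLS_ROOT" "$LM_LICENSE_FILE" && echo "environment looks complete"
```

Both problems are reported in one pass so that a single run shows everything that needs fixing.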

Be sure you have the latest BIOS for your laptop or mini PC; this ensures the NPU (sometimes referred to as IPU) is enabled in the system. You may need to manually enable the NPU:

Advanced → CPU Configuration → IPU

NOTE: Some manufacturers only provide Windows executables to update the BIOS. If that applies to your machine, update the BIOS before installing Ubuntu.

IRON AIE Application Programming Guide

Building mlir-aie tools from source

MLIR Dialect and Compiler Documentation

Interested in contributing to MLIR-AIE? Information for developers

Copyright© 2019-2024 Advanced Micro Devices, Inc


Similar Open Source Tools

mlir-aie

This repository contains an MLIR-based toolchain for AI Engine-enabled devices, such as AMD Ryzen™ AI and Versal™. This repository can be used to generate low-level configurations for the AI Engine portion of these devices. AI Engines are organized as a spatial array of tiles, where each tile contains AI Engine cores and/or memories. The spatial array is connected by stream switches that can be configured to route data between AI Engine tiles scheduled by their programmable Data Movement Accelerators (DMAs). This repository contains MLIR representations, with multiple levels of abstraction, to target AI Engine devices. This enables compilers and developers to program AI Engine cores, as well as describe data movements and array connectivity. A Python API is made available as a convenient interface for generating MLIR design descriptions. Backend code generation is also included, targeting the aie-rt library. This toolchain uses the AI Engine compiler tool which is part of the AMD Vitis™ software installation: these tools require a free license for use from the Product Licensing Site.

modelence

Modelence is an all-in-one TypeScript framework for startups shipping production apps, aiming to eliminate boilerplate for standard web app features. It provides authentication, database setup, cron jobs, AI observability, and email functionalities. Modelence requires Node.js 20.20 or higher. Developers can create projects, install dependencies, and start the development server quickly. For local development, contributors can clone the repository, install dependencies, build the package, and test changes in a real application. Modelence offers examples for further guidance.

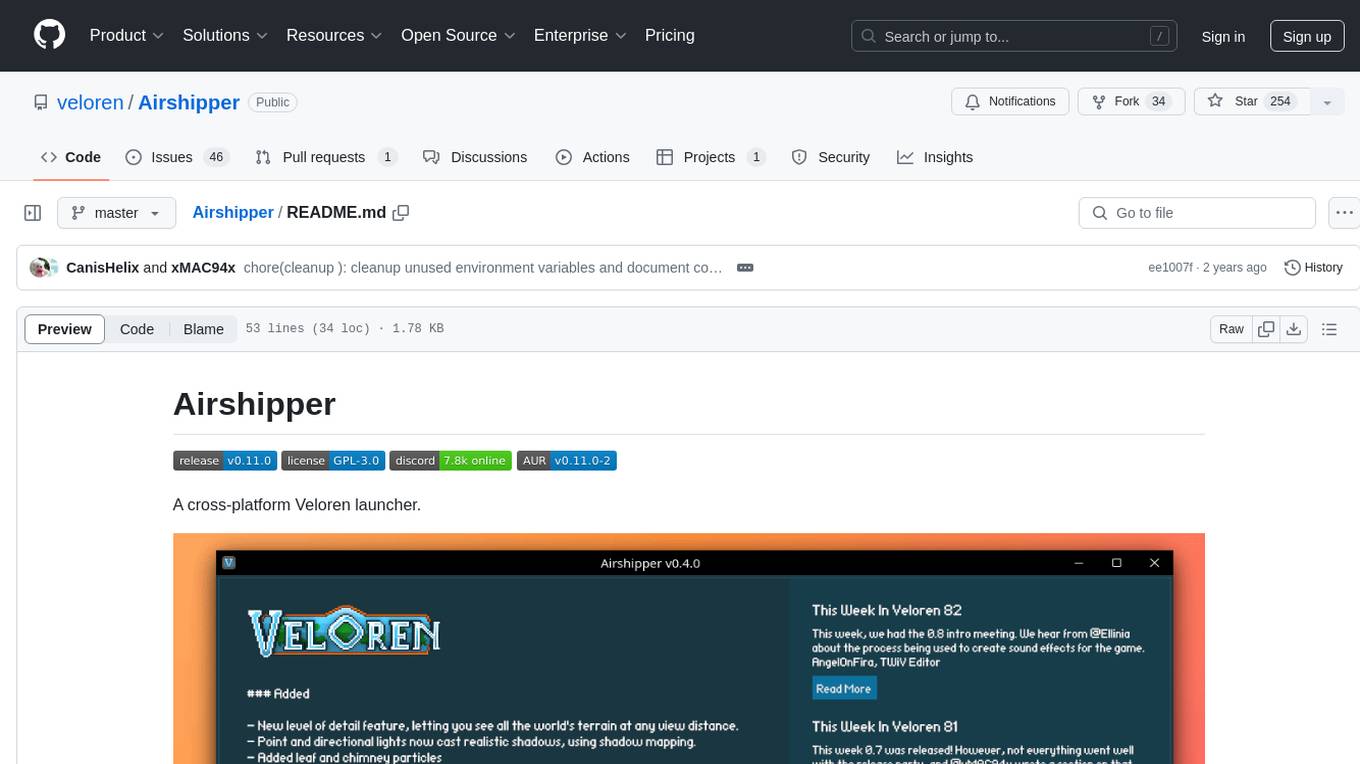

Airshipper

Airshipper is a cross-platform Veloren launcher that allows users to update/download and start nightly builds of the game. It features a fancy UI with self-updating capabilities on Windows. Users can compile it from source and also have the option to install Airshipper-Server for advanced configurations. Note that Airshipper is still in development and may not be stable for all users.

backend.ai-webui

Backend.AI Web UI is a user-friendly web and app interface designed to make AI accessible for end-users, DevOps, and SysAdmins. It provides features for session management, inference service management, pipeline management, storage management, node management, statistics, configurations, license checking, plugins, help & manuals, kernel management, user management, keypair management, manager settings, proxy mode support, service information, and integration with the Backend.AI Web Server. The tool supports various devices, offers a built-in websocket proxy feature, and allows for versatile usage across different platforms. Users can easily manage resources, run environment-supported apps, access a web-based terminal, use Visual Studio Code editor, manage experiments, set up autoscaling, manage pipelines, handle storage, monitor nodes, view statistics, configure settings, and more.

swarmauri-sdk

Swarmauri SDK is a repository containing core interfaces, standard ABCs, and standard concrete references of the SwarmaURI Framework. It provides a set of tools and functionalities for developers to work with the SwarmaURI ecosystem. The SDK aims to streamline the development process and enhance the interoperability of applications within the framework. Developers can easily integrate SwarmaURI features into their projects by leveraging the resources available in this repository.

RoboMatrix

RoboMatrix is a skill-centric hierarchical framework for scalable robot task planning and execution in an open-world environment. It provides a structured approach to robot task execution using a combination of hardware components, environment configuration, installation procedures, and data collection methods. The framework is developed using the ROS2 framework on Ubuntu and supports robots from DJI's RoboMaster series. Users can follow the provided installation guidance to set up RoboMatrix and utilize it for various tasks such as data collection, task execution, and dataset construction. The framework also includes a supervised fine-tuning dataset and aims to optimize communication and release additional components in the future.

comp

Comp AI is an open-source compliance automation platform designed to assist companies in achieving compliance with standards like SOC 2, ISO 27001, and GDPR. It transforms compliance into an engineering problem solved through code, automating evidence collection, policy management, and control implementation while maintaining data and infrastructure control.

xlang

XLang™ is a cutting-edge language designed for AI and IoT applications, offering exceptional dynamic and high-performance capabilities. It excels in distributed computing and seamless integration with popular languages like C++, Python, and JavaScript. Notably efficient, running 3 to 5 times faster than Python in AI and deep learning contexts. Features optimized tensor computing architecture for constructing neural networks through tensor expressions. Automates tensor data flow graph generation and compilation for specific targets, enhancing GPU performance by 6 to 10 times in CUDA environments.

AMD-AI

AMD-AI is a repository containing detailed instructions for installing, setting up, and configuring ROCm on Ubuntu systems with AMD GPUs. The repository includes information on installing various tools like Stable Diffusion, ComfyUI, and Oobabooga for tasks like text generation and performance tuning. It provides guidance on adding AMD GPU package sources, installing ROCm-related packages, updating system packages, and finding graphics devices. The instructions are aimed at users with AMD hardware looking to set up their Linux systems for AI-related tasks.

prime

Prime is a framework for efficient, globally distributed training of AI models over the internet. It includes features such as fault-tolerant training with ElasticDeviceMesh, asynchronous distributed checkpointing, live checkpoint recovery, custom Int8 All-Reduce Kernel, maximizing bandwidth utilization, PyTorch FSDP2/DTensor ZeRO-3 implementation, and CPU off-loading. The framework aims to optimize communication, checkpointing, and bandwidth utilization for large-scale AI model training.

AirCasting

AirCasting is a platform for gathering, visualizing, and sharing environmental data. It aims to provide a central hub for environmental data, making it easier for people to access and use this information to make informed decisions about their environment.

nosia

Nosia is a platform that allows users to run an AI model on their own data. It is designed to be easy to install and use. Users can follow the provided guides for quickstart, API usage, upgrading, starting, stopping, and troubleshooting. The platform supports custom installations with options for remote Ollama instances, custom completion models, and custom embeddings models. Advanced installation instructions are also available for macOS with a Debian or Ubuntu VM setup. Users can access the platform at 'https://nosia.localhost' and troubleshoot any issues by checking logs and job statuses.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

E2B

E2B Sandbox is a secure sandboxed cloud environment made for AI agents and AI apps. Sandboxes allow AI agents and apps to have long running cloud secure environments. In these environments, large language models can use the same tools as humans do. For example: * Cloud browsers * GitHub repositories and CLIs * Coding tools like linters, autocomplete, "go-to defintion" * Running LLM generated code * Audio & video editing The E2B sandbox can be connected to any LLM and any AI agent or app.

SREGym

SREGym is an AI-native platform for designing, developing, and evaluating AI agents for Site Reliability Engineering (SRE). It provides a comprehensive benchmark suite with 86 different SRE problems, including OS-level faults, metastable failures, and concurrent failures. Users can run agents in isolated Docker containers, specify models from multiple providers, and access detailed documentation on the platform's website.

fiftyone

FiftyOne is an open-source tool designed for building high-quality datasets and computer vision models. It supercharges machine learning workflows by enabling users to visualize datasets, interpret models faster, and improve efficiency. With FiftyOne, users can explore scenarios, identify failure modes, visualize complex labels, evaluate models, find annotation mistakes, and much more. The tool aims to streamline the process of improving machine learning models by providing a comprehensive set of features for data analysis and model interpretation.

