Best AI tools for Compiler Developer

20 - AI tool Sites

BugFree.ai

BugFree.ai is an AI-powered platform designed to help users practice system design and behavioral interviews, similar to LeetCode. The platform offers a range of features to assist users in preparing for technical interviews, including mock interviews, real-time feedback, and personalized study plans. With BugFree.ai, users can improve their problem-solving skills and gain confidence in tackling complex interview questions.

ThinkRoot

ThinkRoot is an AI Compiler that empowers users to transform their ideas into fully functional applications within minutes. By leveraging advanced artificial intelligence algorithms, ThinkRoot streamlines the app development process, eliminating the need for extensive coding knowledge. With a user-friendly interface and intuitive design, ThinkRoot caters to both novice and experienced developers, offering a seamless experience from concept to deployment. Whether you're a startup looking to prototype quickly or an individual with a creative vision, ThinkRoot provides the tools and resources to bring your ideas to life effortlessly.

Replit

Replit is a software creation platform that provides an integrated development environment (IDE), artificial intelligence (AI) assistance, and deployment services. It allows users to build, test, and deploy software projects directly from their browser, without the need for local setup or configuration. Replit offers real-time collaboration, code generation, debugging, and autocompletion features powered by AI. It supports multiple programming languages and frameworks, making it suitable for a wide range of development projects.

Coddy

Coddy is an AI-powered coding assistant that helps developers write better code faster. It provides real-time feedback, code completion, and error detection, making it the perfect tool for both beginners and experienced developers. Coddy also integrates with popular development tools like Visual Studio Code and GitHub, making it easy to use in your existing workflow.

PseudoEditor

PseudoEditor is a free online pseudocode editor and compiler designed to simplify the process of writing pseudocode. It offers dynamic syntax highlighting, code saving, error highlighting, and a fast compiler to help users write and test pseudocode efficiently. The platform aims to provide a smooth writing experience, allowing users to write pseudocode up to 5 times faster than traditional methods. PseudoEditor is the first and only browser-based pseudocode editor, offering a range of features to enhance the coding experience.

GetSelected.ai

GetSelected.ai is a personal AI-powered interviewer platform that helps users enhance their interview skills. The platform offers features such as mock interviews, personalized feedback, job position customization, AI-driven quizzes, resume optimization, and a code compiler for IT roles. Users can practice interview scenarios, improve communication skills, and prepare for recruitment processes with the help of AI tools. GetSelected.ai aims to provide a comprehensive and customizable experience to meet unique career goals and stand out in the competitive job market.

Anycores

Anycores is an AI tool designed to optimize the performance of deep neural networks and reduce the cost of running AI models in the cloud. It offers automated solutions for tuning and inference consultation, a zoo of optimized networks, and a platform for reducing AI model cost. Anycores focuses on faster execution, reducing inference time by more than 10x, and on footprint reduction during model deployment. It is device agnostic, supporting Nvidia and AMD GPUs; Intel, ARM, and AMD CPUs; servers; and edge devices. The tool aims to provide highly optimized, low-footprint networks tailored to specific deployment scenarios.

PLEASEDONTCODE

PLEASEDONTCODE is an AI Code Generator designed for Arduino and ESP32 embedded systems. It simplifies coding processes, automates code generation, and helps users overcome common obstacles in software development for electronic boards. The platform offers a guided interface with six steps to create error-free, syntactically correct, and logically sound code, enabling users to focus on their projects rather than troubleshooting.

Papnox ERP

Papnox ERP is an AI-powered ERP software designed specifically for paper distributors. It offers cloud-based accounting, invoicing, and inventory management solutions. The platform includes modules for CRM, payroll management, website building, and report generation. Papnox ERP is known for its early adoption of disruptive technologies and provides unique insights into the paper distribution industry. The software aims to streamline business operations and enhance efficiency for paper distributors.

Visual Studio

Visual Studio is an integrated development environment (IDE) and code editor designed for software developers and teams. It offers a comprehensive set of tools and features to enhance every stage of software development, including code editing, debugging, building, and publishing applications. Visual Studio also includes compilers, code completion tools, graphical designers, and AI-powered coding assistance through GitHub Copilot integration.

Floneum

Floneum is a versatile AI-powered tool designed for language tasks. It offers a user-friendly interface to build workflows using large language models. With Floneum, users can securely extend functionality by writing plugins in various languages compiled to WebAssembly. The tool provides a sandboxed environment for plugins, ensuring limited resource access. With 41 built-in plugins, Floneum simplifies tasks such as text generation, search engine operations, file handling, Python execution, browser automation, and data manipulation.

ONNX

ONNX is an open standard for machine learning interoperability, providing a common format to represent machine learning models. It defines a set of operators and a file format for AI developers to use models across various frameworks, tools, runtimes, and compilers. ONNX promotes interoperability, hardware access, and community engagement.

SoraPrompt

SoraPrompt is an AI model that can create realistic and imaginative scenes from text instructions. It is the latest text-to-video technology from the OpenAI development team. Users can compile text prompts to generate video query summaries for efficient content analysis. SoraPrompt also allows users to share their interests and ideas with others.

Narada

Narada is an AI application designed for busy professionals to streamline their work processes. It leverages cutting-edge AI technology to automate tasks, connect favorite apps, and enhance productivity through intelligent automation. Narada's LLM Compiler routes text and voice commands to the right tools in real time, offering seamless app integration and time-saving features.

Rargus

Rargus is a generative AI tool that specializes in turning customer feedback into actionable insights for businesses. By collecting feedback from various channels and utilizing custom AI analysis, Rargus helps businesses understand customer needs and improve product development. The tool enables users to compile and analyze feedback efficiently, leading to data-driven decision-making and successful product launches. Rargus also offers solutions for consumer insights, product management, and product marketing, helping businesses enhance customer satisfaction and drive growth.

Replexica

Replexica is an AI-powered i18n compiler for React that is JSON-free and LLM-backed. It is designed for shipping multi-language frontends fast.

illbeback.ai

illbeback.ai is the #1 site for AI jobs around the world. It provides a platform for both job seekers and employers to connect in the field of Artificial Intelligence. The website features a wide range of AI job listings from top companies, offering opportunities for professionals in the AI industry to advance their careers. With a user-friendly interface, illbeback.ai simplifies the job search process for AI enthusiasts and provides valuable resources for companies looking to hire AI talent.

Roadmapped.ai

Roadmapped.ai is an AI-powered platform designed to help users learn various topics efficiently and quickly. By providing a structured roadmap generated in seconds, the platform eliminates the need to navigate through scattered online resources aimlessly. Users can input a topic they want to learn, and the AI will generate a personalized roadmap with curated resources. The platform also offers features like AI-powered YouTube search, saving roadmaps, priority support, and access to a private Discord community.

Twig AI

Twig AI is an AI tool designed for Customer Experience, offering an AI assistant that resolves customer issues instantly and supports both users and support agents 24/7. It provides features like converting user requests into API calls, instant responses to user questions, and factual answers cited with trustworthy sources. Twig simplifies data retrieval from external sources, offers personalization options, and includes a built-in knowledge base. The tool aims to drive agent productivity, provide insights to monitor customer experience, and offer various application interfaces for different user roles.

AI Document Creator

AI Document Creator is an innovative tool that leverages artificial intelligence to assist users in generating various types of documents efficiently. The application utilizes advanced algorithms to analyze input data and create well-structured documents tailored to the user's needs. With AI Document Creator, users can save time and effort in document creation, ensuring accuracy and consistency in their outputs. The tool is user-friendly and accessible, making it suitable for individuals and businesses seeking to streamline their document creation process.

1 - Open Source Tools

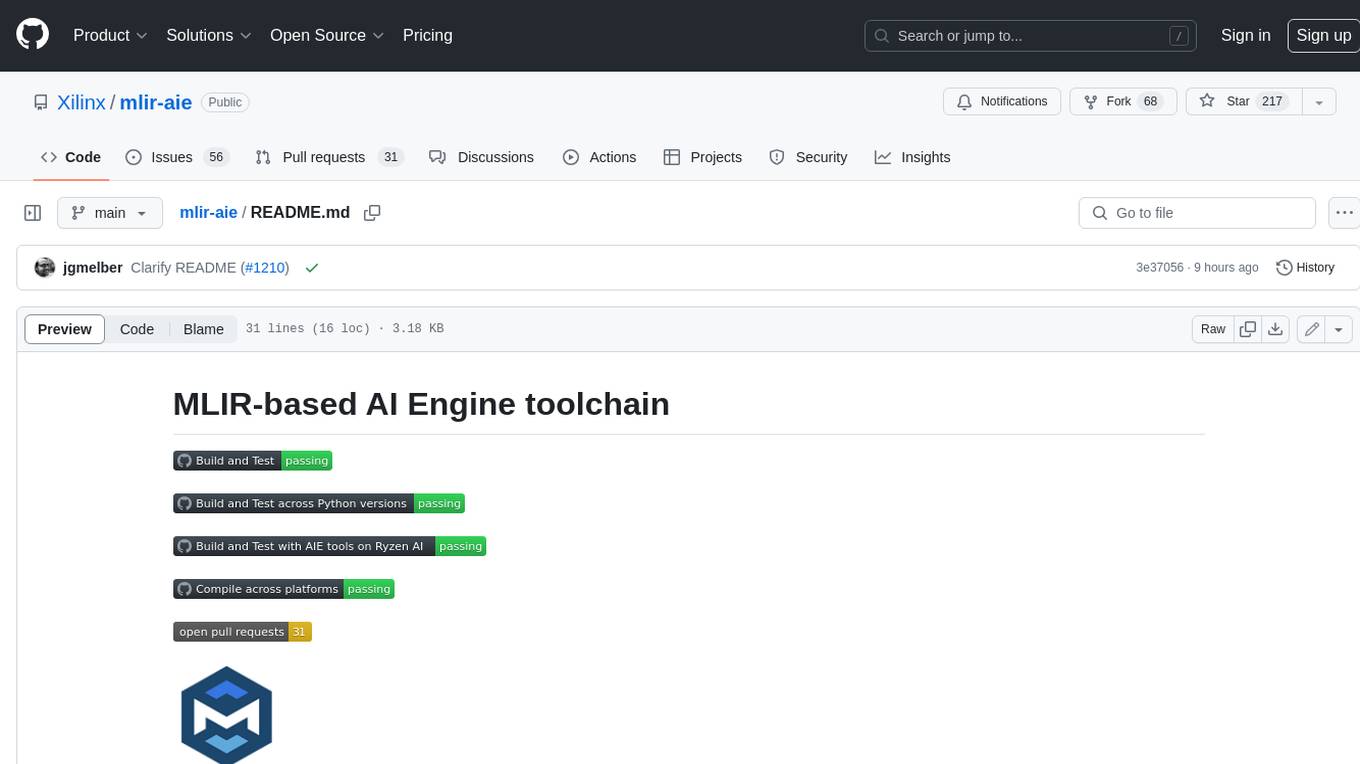

mlir-aie

This repository contains an MLIR-based toolchain for AI Engine-enabled devices, such as AMD Ryzen™ AI and Versal™. This repository can be used to generate low-level configurations for the AI Engine portion of these devices. AI Engines are organized as a spatial array of tiles, where each tile contains AI Engine cores and/or memories. The spatial array is connected by stream switches that can be configured to route data between AI Engine tiles scheduled by their programmable Data Movement Accelerators (DMAs). This repository contains MLIR representations, with multiple levels of abstraction, to target AI Engine devices. This enables compilers and developers to program AI Engine cores, as well as describe data movements and array connectivity. A Python API is made available as a convenient interface for generating MLIR design descriptions. Backend code generation is also included, targeting the aie-rt library. This toolchain uses the AI Engine compiler tool which is part of the AMD Vitis™ software installation: these tools require a free license for use from the Product Licensing Site.
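The idea of a spatial tile array whose stream switches are configured to route data can be illustrated with a small toy model. This is only a conceptual sketch in plain Python: the names `Tile`, `configure_flow`, and `trace_flow` are hypothetical and are not part of mlir-aie, its Python API, or aie-rt, and the column-first path selection is an assumption made for illustration rather than a description of how the real toolchain picks routes.

```python
from dataclasses import dataclass, field

# Toy model only: none of these names come from mlir-aie or aie-rt.

@dataclass
class Tile:
    col: int
    row: int
    # Stream-switch configuration: maps a flow id to the direction to forward it.
    routes: dict = field(default_factory=dict)

# Coordinate change for each forwarding direction.
STEP = {"east": (1, 0), "west": (-1, 0), "north": (0, 1), "south": (0, -1)}

def make_array(cols, rows):
    """Build a cols x rows grid of tiles keyed by (col, row)."""
    return {(c, r): Tile(c, r) for c in range(cols) for r in range(rows)}

def configure_flow(array, flow_id, src, dst):
    """Program each switch on a column-first path from src to dst."""
    col, row = src
    while (col, row) != dst:
        if col != dst[0]:
            direction = "east" if dst[0] > col else "west"
        else:
            direction = "north" if dst[1] > row else "south"
        array[(col, row)].routes[flow_id] = direction
        dc, dr = STEP[direction]
        col, row = col + dc, row + dr
    # At the destination, hand the data to the tile itself.
    array[dst].routes[flow_id] = "local"

def trace_flow(array, flow_id, src):
    """Follow the configured switches and return the tiles visited."""
    path = [src]
    pos = src
    while array[pos].routes[flow_id] != "local":
        dc, dr = STEP[array[pos].routes[flow_id]]
        pos = (pos[0] + dc, pos[1] + dr)
        path.append(pos)
    return path

array = make_array(cols=4, rows=3)
configure_flow(array, flow_id=0, src=(0, 0), dst=(2, 1))
print(trace_flow(array, 0, (0, 0)))
```

The point of the sketch is the separation the description draws: routing is static configuration written into each switch ahead of time, and data then simply follows whatever the switches were programmed to do.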

20 - OpenAI GPTs

Melange Mentor

I'm a tutor for JavaScript and Melange, a compiler for OCaml that targets JavaScript.

FlutterCraft

FlutterCraft is an AI-powered assistant that streamlines Flutter app development. It interprets user-provided descriptions to generate and compile Flutter app code, providing ready-to-install APK and iOS files. Ideal for rapid prototyping, FlutterCraft makes app development accessible and efficient.

Linux Kernel Expert

Formal and professional Linux Kernel Expert, adept in technical jargon.

ReScript

Write ReScript code. Trained with versions 10 & 11. Documentation: github.com/guillempuche/gpt-rescript

Gandi IDE Shader Helper

Helps you code a shader in GLSL for a Gandi IDE project. https://getgandi.com/extensions/glsl-in-gandi-ide

Lead Scout

I compile and enrich precise company and professional profiles. Simply provide any name, email address, or company and I'll generate a complete profile.

BioinformaticsManual

Compiles instructions from the web and GitHub for bioinformatics applications. Receive line-by-line instructions and commands to get started.

A Remedy for Everything

Natural remedies for over 220 ailments, compiled from 5 years of extensive research.

Coloring Book Generator

Crafts full coloring books with a cover and compiled into a downloadable document.

Daily Horoscope

Get your daily horoscope summary, categorized and compiled from various online sources. For entertainment purposes only.

Interview Pro

By combining the expertise of top career coaches with advanced AI, our GPT helps you excel in interviews across various job functions and levels. We've also compiled the most practical tips for you. We value your experience; please contact [email protected] if you need support ❤️!