gin-vue-admin

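🚀 An AI-assisted base development platform built on Vite + Vue3 + Gin: an enterprise-grade AI+ development solution that supports mixing TS and JS. It integrates essential development features such as JWT authentication, permission management, dynamic routing, components with controllable visibility, pagination wrappers, multi-point login interception, resource permissions, upload and download, a code generator, a form generator, and configurable import and export.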

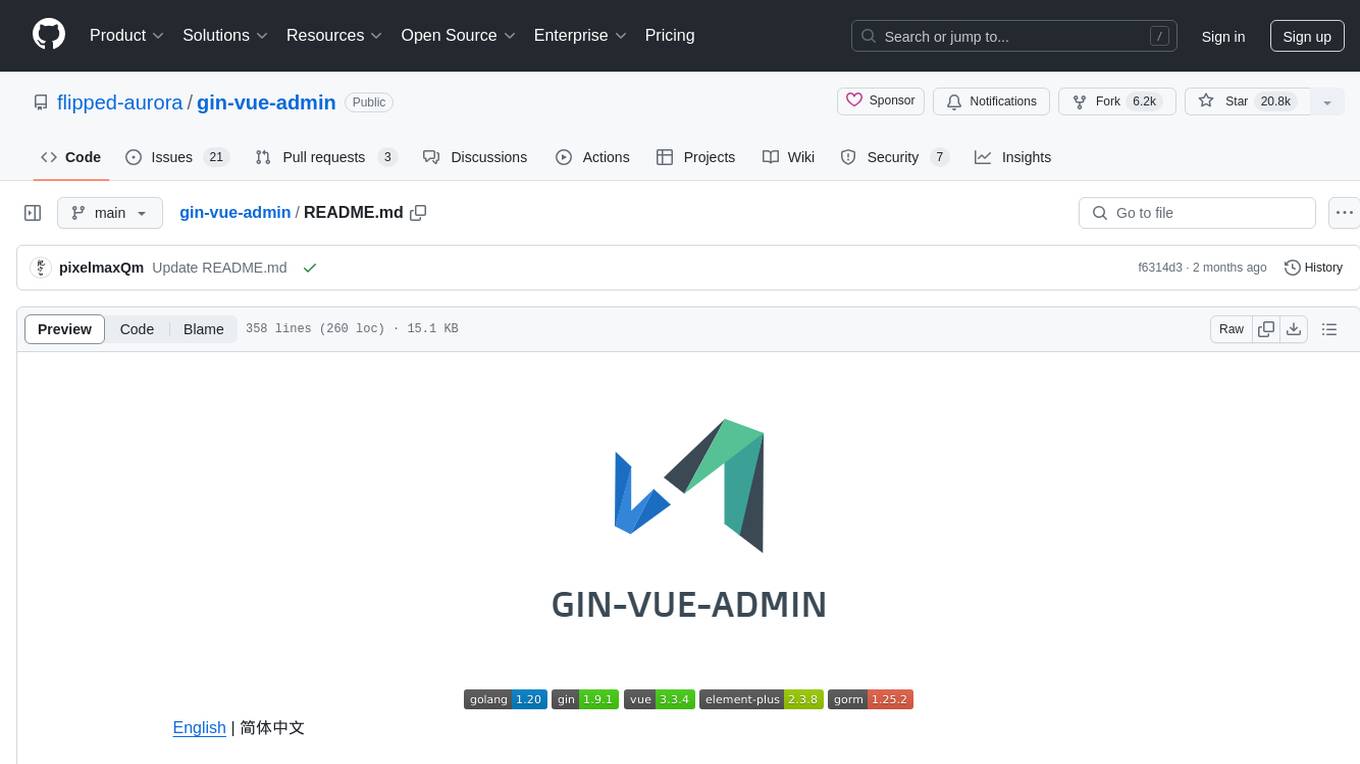

Stars: 23477

Gin-vue-admin is a full-stack development platform based on Vue and Gin, integrating features like JWT authentication, dynamic routing, dynamic menus, Casbin authorization, form generator, code generator, etc. It provides various example files to help users focus more on business development. The project offers detailed documentation, video tutorials for setup and deployment, and a community for support and contributions. Users need a certain level of knowledge in Golang and Vue to work with this project. It is recommended to follow the Apache2.0 license if using the project for commercial purposes.

README:


| 📄 Create base template | 🤖 AI-generated structure | ⏰ Generate code | 🏷️ Assign permissions | 🎉 Basic CRUD generation complete |

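Online documentation: https://www.gin-vue-admin.com

Development tutorial (contributors: LLemonGreen and Fann)

1. This project has documentation and detailed video tutorials covering everything from getting started through development to deployment.

2. This project requires some background in Golang and Vue.

3. You can complete every step using our tutorials and documentation, so we no longer provide free technical support; if you need support, please purchase paid support.

4. If you use this project commercially, please comply with the Apache 2.0 license and retain the author's technical support notice. You must keep the copyright notice below, along with the copyright notices contained in the logs and code. The retained information is purely textual and does not affect any business functionality. If you decide to use the project commercially (any commercial activity that generates revenue counts as commercial use) or must remove the notices, please purchase a license.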

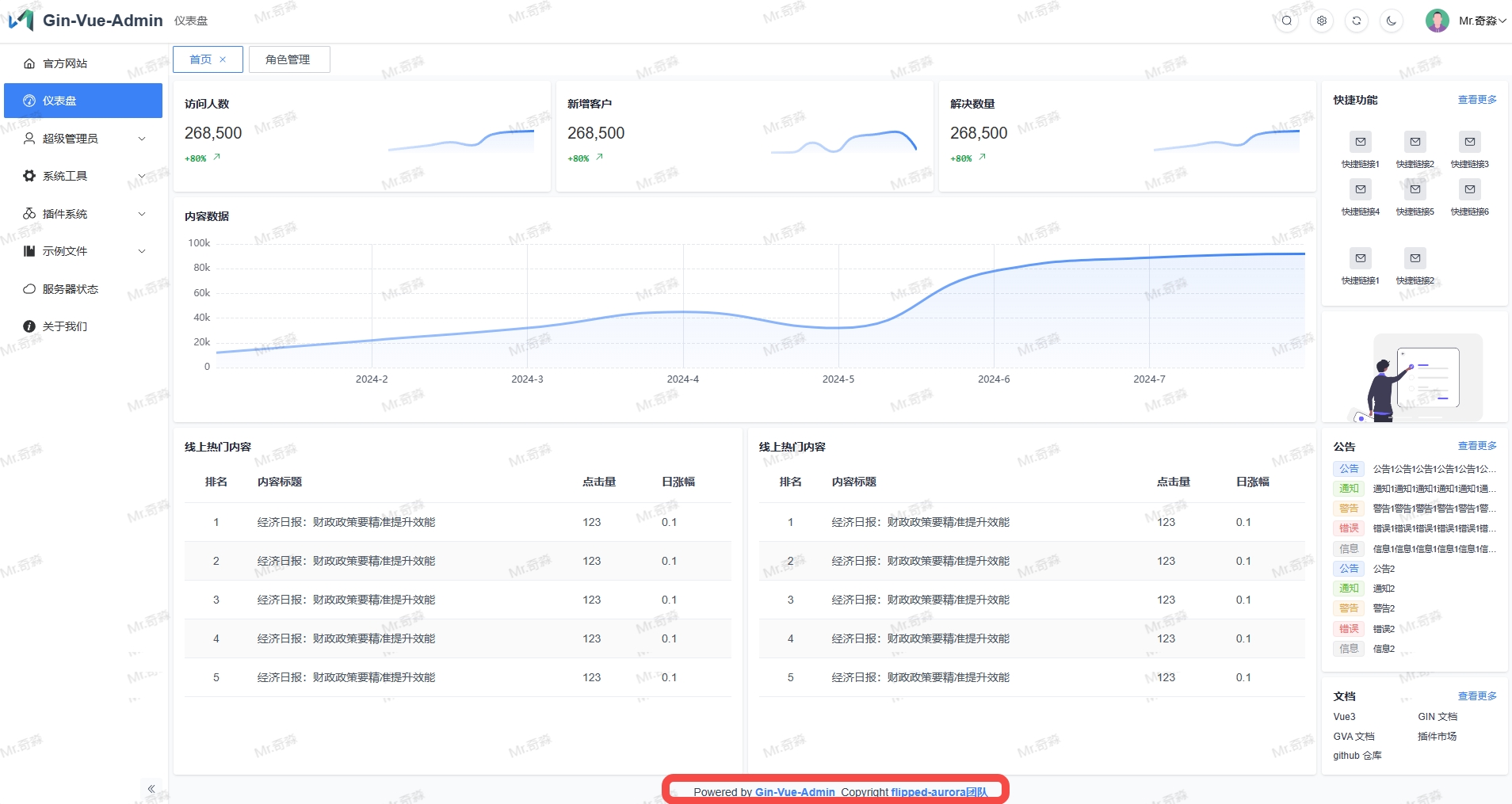

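Gin-vue-admin is a full-stack development platform with a separated front end and back end, built on Vue and Gin. It integrates JWT authentication, dynamic routing, dynamic menus, Casbin authorization, a form generator, a code generator, and more, and provides a variety of example files so that you can spend more time on business development.

Online demo: http://demo.gin-vue-admin.com

Test username: admin

Test password: 123456

Hi! Thank you for using gin-vue-admin.

Gin-vue-admin is an open-source framework with a separated front-end and back-end architecture, built for rapid development and aimed at quickly scaffolding small and medium-sized projects.

Gin-vue-admin's growth depends on everyone's support. If you would like to contribute code or suggestions to gin-vue-admin, please read the following.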

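- Issues are only for reporting bugs, requesting features, or discussing design-related topics; anything else may be closed directly.
- Before submitting an issue, please search to check whether it has already been raised.
- Please fork the repository to your own account first; do not create branches directly in the main repository.
- Write commit messages in the form `[file name]: description`, for example `README.md: fix xxx bug`.
- If you are fixing a bug, please describe it in the PR.
- Merging requires two maintainers: one reviews and approves, and the other reviews again; once both reviews pass, the code can be merged.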
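
The commit message convention above can be checked mechanically. The following is a minimal sketch, not a tool shipped with the project: the exact pattern (a file name without spaces or colons, a colon, one space, then a description) is an assumption inferred from the single example `README.md: fix xxx bug`, and `validCommitSubject` is a hypothetical name.

```go
package main

import (
	"fmt"
	"regexp"
)

// commitPattern is an assumed reading of "[file name]: description":
// a file name with no whitespace or colon, a colon, one space,
// and a description that starts with a non-space character.
var commitPattern = regexp.MustCompile(`^[^\s:]+: \S.*$`)

// validCommitSubject reports whether a single-line commit subject
// matches the assumed convention.
func validCommitSubject(subject string) bool {
	return commitPattern.MatchString(subject)
}

func main() {
	for _, subject := range []string{
		"README.md: fix xxx bug", // follows the convention
		"fix xxx bug",            // no file name prefix
	} {
		fmt.Printf("%q valid=%v\n", subject, validCommitSubject(subject))
	}
}
```

A check like this could run in a local commit hook; whether the maintainers enforce the format automatically is not stated in the README.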

- Node version > v18.16.0
- Golang version >= v1.22
- Recommended IDE: GoLand

When using GoLand or a similar editor, open the server directory; do not open the gin-vue-admin root directory.
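
The Go requirement above (>= v1.22) can be verified from code before building. This is a small sketch under the assumption that version strings look like `go1.22.3`, the usual shape returned by `runtime.Version()` for release builds; `goAtLeast` and `leadingInt` are hypothetical helper names.

```go
package main

import (
	"fmt"
	"runtime"
	"strconv"
	"strings"
)

// leadingInt parses the decimal digits at the start of s, so that a
// component such as "23rc1" yields 23.
func leadingInt(s string) (int, bool) {
	end := 0
	for end < len(s) && s[end] >= '0' && s[end] <= '9' {
		end++
	}
	if end == 0 {
		return 0, false
	}
	n, err := strconv.Atoi(s[:end])
	return n, err == nil
}

// goAtLeast reports whether a version string such as "go1.22.3" is at
// least major.minor. Strings that cannot be parsed return false.
func goAtLeast(version string, major, minor int) bool {
	parts := strings.SplitN(strings.TrimPrefix(version, "go"), ".", 3)
	if len(parts) < 2 {
		return false
	}
	maj, ok1 := leadingInt(parts[0])
	mnr, ok2 := leadingInt(parts[1])
	if !ok1 || !ok2 {
		return false
	}
	return maj > major || (maj == major && mnr >= minor)
}

func main() {
	v := runtime.Version()
	fmt.Println(v, "meets the 1.22 requirement:", goAtLeast(v, 1, 22))
}
```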

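    # Clone the project
    git clone https://github.com/flipped-aurora/gin-vue-admin.git

    # Enter the server folder
    cd server

    # Use go mod and install the Go dependencies
    go generate

    # Run
    go run .

    # Enter the web folder
    cd web

    # Install dependencies
    npm install

    # Start the web project
    npm run serve

    go install github.com/swaggo/swag/cmd/swag@latest

    cd server
    swag init

After running the commands above, the three files docs.go, swagger.json, and swagger.yaml in the docs folder under the server directory are updated. After starting the Go service, open http://localhost:8888/swagger/index.html in a browser to view the Swagger documentation.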

使用VSCode打开根目录下的工作区文件gin-vue-admin.code-workspace,在边栏可以看到三个虚拟目录:backend、frontend、root。

在运行和调试中也可以看到三个task:Backend、Frontend、Both (Backend & Frontend)。运行Both (Backend & Frontend)可以同时启动前后端项目。

在工作区配置文件中有go.toolsEnvVars字段,是用于VSCode自身的go工具环境变量。此外在多go版本的系统中,可以通过gopath、go.goroot指定运行版本。

"go.gopath": null,

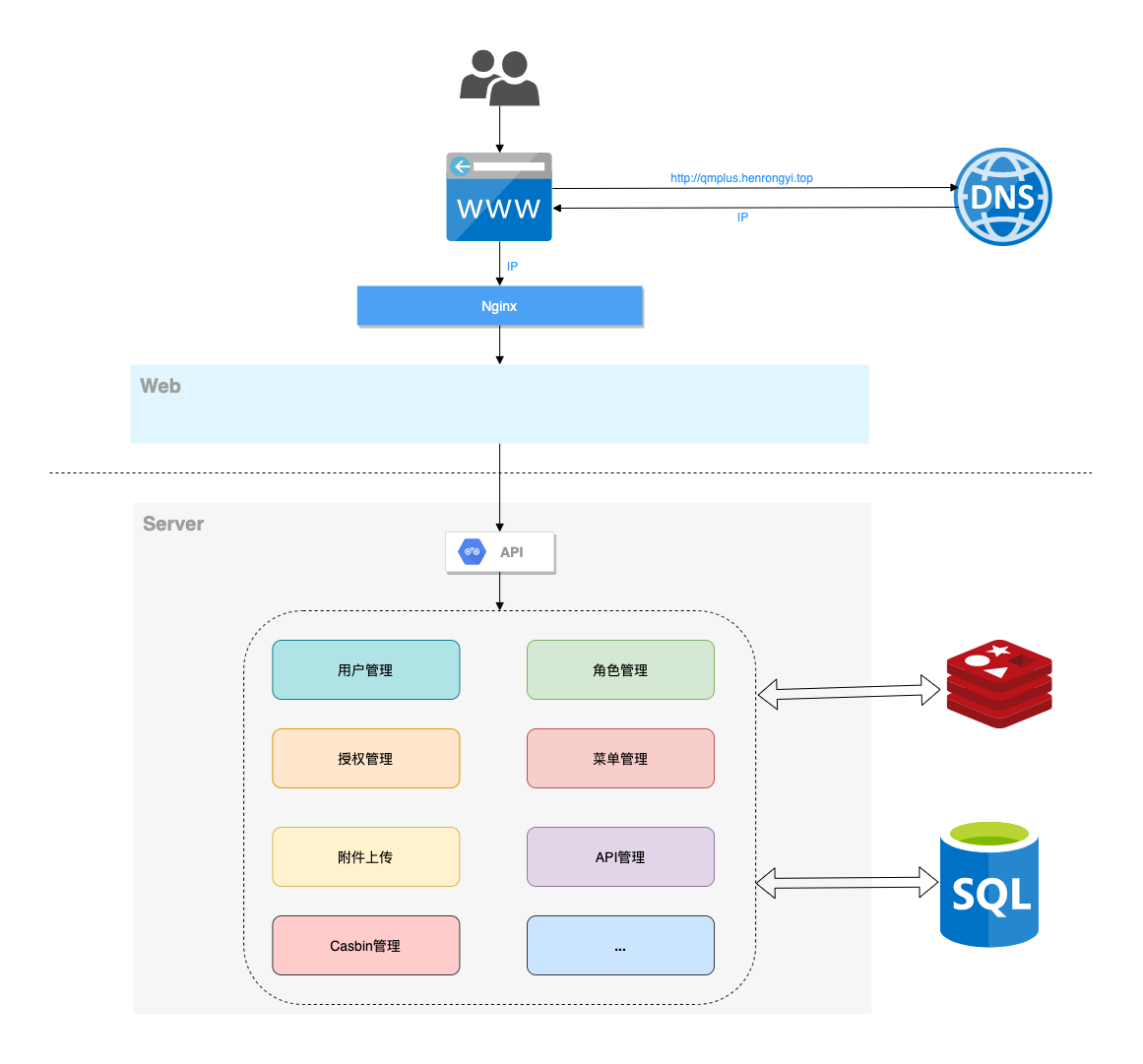

"go.goroot": null,- 前端:用基于 Vue 的 Element 构建基础页面。

- 后端:用 Gin 快速搭建基础restful风格API,Gin 是一个go语言编写的Web框架。

- 数据库:采用

MySql> (5.7) 版本 数据库引擎 InnoDB,使用 gorm 实现对数据库的基本操作。 - 缓存:使用

Redis实现记录当前活跃用户的jwt令牌并实现多点登录限制。 - API文档:使用

Swagger构建自动化文档。 - 配置文件:使用 fsnotify 和 viper 实现

yaml格式的配置文件。 - 日志:使用 zap 实现日志记录。
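
The cache entry above describes storing each active user's JWT so that multi-point login can be restricted. The sketch below illustrates one way such a restriction can work, assuming that a new login simply replaces the user's previous token. An in-memory map stands in for Redis, and `tokenStore`, `login`, and `allowed` are hypothetical names, not the project's actual API.

```go
package main

import (
	"fmt"
	"sync"
)

// tokenStore maps a user name to that user's single active token.
// It is an in-memory stand-in for the Redis cache.
type tokenStore struct {
	mu     sync.Mutex
	active map[string]string
}

func newTokenStore() *tokenStore {
	return &tokenStore{active: make(map[string]string)}
}

// login records token as the user's only active token,
// replacing any token from an earlier login.
func (s *tokenStore) login(user, token string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.active[user] = token
}

// allowed reports whether token is still the active token for user.
func (s *tokenStore) allowed(user, token string) bool {
	s.mu.Lock()
	defer s.mu.Unlock()
	current, ok := s.active[user]
	return ok && current == token
}

func main() {
	store := newTokenStore()
	store.login("admin", "token-A") // first device logs in
	store.login("admin", "token-B") // second device logs in later

	fmt.Println("token-A allowed:", store.allowed("admin", "token-A"))
	fmt.Println("token-B allowed:", store.allowed("admin", "token-B"))
}
```

In a real deployment the lookup would run in request middleware, and the stored entry would typically expire together with the JWT.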

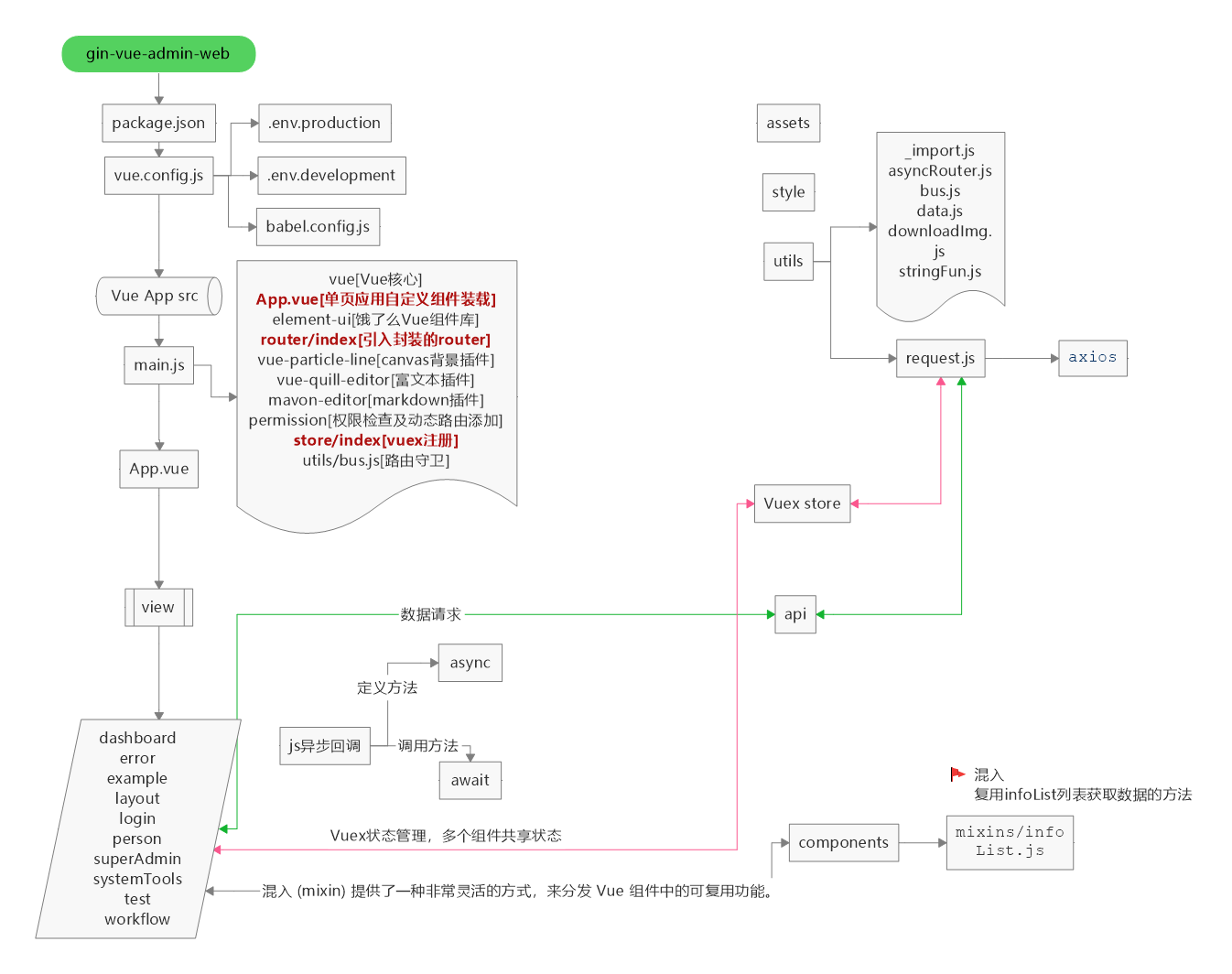

4.2 Detailed front-end design diagram (provided by: baobeisuper)

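    ├── server
        ├── api             (API layer)
        │   └── v1          (v1 API version)
        ├── config          (configuration package)
        ├── core            (core files)
        ├── docs            (Swagger documentation directory)
        ├── global          (global objects)
        ├── initialize      (initialization)
        │   └── internal    (internal initialization functions)
        ├── middleware      (middleware layer)
        ├── model           (model layer)
        │   ├── request     (request parameter structs)
        │   └── response    (response structs)
        ├── packfile        (static file packaging)
        ├── resource        (static resource folder)
        │   ├── excel       (default path for Excel import and export)
        │   ├── page        (form generator)
        │   └── template    (templates)
        ├── router          (router layer)
        ├── service         (service layer)
        ├── source          (source layer)
        └── utils           (utility package)
            ├── timer       (timer interface wrapper)
            └── upload      (OSS interface wrapper)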
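
The server tree above separates router, api, and service layers. As an illustration of how such layers typically relate, here is a minimal sketch that uses only the Go standard library instead of Gin. Every name in it (`userService`, `userAPI`, `newRouter`, the `/api/v1/greet` path) is hypothetical and does not come from the project's code.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// Service layer: business logic with no HTTP types.
type userService struct{}

func (userService) Greeting(name string) string { return "hello, " + name }

// API layer: translates HTTP requests into service calls.
type userAPI struct{ svc userService }

func (a userAPI) greet(w http.ResponseWriter, r *http.Request) {
	name := r.URL.Query().Get("name")
	w.Header().Set("Content-Type", "application/json")
	_ = json.NewEncoder(w).Encode(map[string]string{"msg": a.svc.Greeting(name)})
}

// Router layer: binds paths to API handlers.
func newRouter() http.Handler {
	mux := http.NewServeMux()
	mux.HandleFunc("/api/v1/greet", userAPI{}.greet)
	return mux
}

func main() {
	// Exercise the router in-process instead of opening a port.
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodGet, "/api/v1/greet?name=gva", nil)
	newRouter().ServeHTTP(rec, req)
	fmt.Print(rec.Body.String())
}
```

Keeping HTTP details out of the service layer is what lets the same logic be reused by generated code and tested without a running server.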

    web
    ├── babel.config.js
    ├── Dockerfile
    ├── favicon.ico
    ├── index.html -- main page
    ├── limit.js -- helper code
    ├── package.json -- package manager file
    ├── src -- source code
    │   ├── api -- API group
    │   ├── App.vue -- main page
    │   ├── assets -- static assets
    │   ├── components -- global components
    │   ├── core -- gva component package
    │   │   ├── config.js -- gva site configuration file
    │   │   ├── gin-vue-admin.js -- welcome registration file
    │   │   └── global.js -- unified import file
    │   ├── directive -- v-auth registration file
    │   ├── main.js -- main file
    │   ├── permission.js -- route middleware
    │   ├── pinia -- pinia state manager, replacing vuex
    │   │   ├── index.js -- entry file
    │   │   └── modules -- modules
    │   │       ├── dictionary.js
    │   │       ├── router.js
    │   │       └── user.js
    │   ├── router -- route declaration file
    │   │   └── index.js
    │   ├── style -- global styles
    │   │   ├── base.scss
    │   │   ├── basics.scss
    │   │   ├── element_visiable.scss -- element-plus styles can be overridden globally here
    │   │   ├── iconfont.css -- styles for the few icons at the top
    │   │   ├── main.scss
    │   │   ├── mobile.scss
    │   │   └── newLogin.scss
    │   ├── utils -- utility library
    │   │   ├── asyncRouter.js -- dynamic routing
    │   │   ├── btnAuth.js -- dynamic button permissions
    │   │   ├── bus.js -- global mitt declaration file
    │   │   ├── date.js -- date helpers
    │   │   ├── dictionary.js -- dictionary lookup method
    │   │   ├── downloadImg.js -- image download method
    │   │   ├── format.js -- formatting helpers
    │   │   ├── image.js -- image helpers
    │   │   ├── page.js -- sets the page title
    │   │   ├── request.js -- requests
    │   │   └── stringFun.js -- string helpers
    │   ├── view -- main view code
    │   │   ├── about -- about us
    │   │   ├── dashboard -- dashboard
    │   │   ├── error -- error
    │   │   ├── example -- upload examples
    │   │   ├── iconList -- icon list
    │   │   ├── init -- data initialization
    │   │   │   ├── index -- new version
    │   │   │   ├── init -- old version
    │   │   ├── layout -- layout constraint page
    │   │   │   ├── aside
    │   │   │   ├── bottomInfo -- bottomInfo
    │   │   │   ├── screenfull -- full-screen setting
    │   │   │   ├── setting -- system settings
    │   │   │   └── index.vue -- base constraint
    │   │   ├── login -- login
    │   │   ├── person -- personal center
    │   │   ├── superAdmin -- super administrator operations
    │   │   ├── system -- system monitoring page
    │   │   ├── systemTools -- system configuration pages
    │   │   └── routerHolder.vue -- page entry page
    ├── vite.config.js -- vite configuration file
    └── yarn.lock

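- Permission management: permission management based on JWT and Casbin.
- File upload and download: file uploads to Qiniu Cloud, Alibaba Cloud, and Tencent Cloud (developers must apply for the corresponding token or key on each platform themselves).
- Pagination wrapper: the front end wraps pagination in mixins; pagination methods only need to call the mixins.
- User management: the system administrator assigns user roles and role permissions.
- Role management: creates the main objects of permission control; each role can be assigned different API permissions and menu permissions.
- Menu management: dynamic per-user menu configuration, so different roles see different menus.
- API management: different users have different permissions on the API endpoints they can call.
- Configuration management: the configuration file can be edited from the front end (this feature is disabled on the online demo site).
- Conditional search: adds a conditional search example.
- RESTful example: see the example API in the user management module.
- Front-end reference file: web/src/view/superAdmin/api/api.vue
- Back-end reference file: server/router/sys_api.go
- Multi-point login restriction: set use-multipoint to true under system in config.yaml (you must configure Redis yourself, including the Redis parameters in the config; this feature is in testing, so please report bugs promptly).
- Chunked upload: provides examples of chunked file upload and chunked large-file upload.
- Form generator: the form generator relies on @Variant Form.
- Code generator: a code generator for basic back-end logic and simple CRUD.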
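
The role and API management features above come down to checking a (role, path, method) triple before a request is served. The sketch below illustrates that check with a plain map. It is not Casbin's API and not the project's implementation; the `policy` type, the role names, and the paths are assumptions chosen for illustration.

```go
package main

import "fmt"

// rule identifies one API endpoint by path and HTTP method.
type rule struct{ path, method string }

// policy maps a role to the set of endpoints it may call.
type policy map[string]map[rule]bool

// grant allows role to call method on path.
func (p policy) grant(role, path, method string) {
	if p[role] == nil {
		p[role] = make(map[rule]bool)
	}
	p[role][rule{path, method}] = true
}

// enforce reports whether role may call method on path.
// Unknown roles and ungranted endpoints are denied.
func (p policy) enforce(role, path, method string) bool {
	return p[role][rule{path, method}]
}

func main() {
	p := policy{}
	p.grant("admin", "/api/create", "POST")
	p.grant("admin", "/user/list", "GET")
	p.grant("viewer", "/user/list", "GET")

	fmt.Println("admin POST /api/create:", p.enforce("admin", "/api/create", "POST"))
	fmt.Println("viewer POST /api/create:", p.enforce("viewer", "/api/create", "POST"))
}
```

Denying by default keeps newly added endpoints closed until a role is explicitly granted access, which matches the idea of assigning API permissions per role.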

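https://www.yuque.com/flipped-aurora

It contains tutorial videos on the front-end framework. If you find the project helpful, you can add my personal WeChat: shouzi_1994; your feature requests are welcome.

(1) Step-by-step tutorial videos

(2) Introduction to the adjusted back-end directory structure and how to use it

(3) Golang basics tutorial videos

bilibili: https://space.bilibili.com/322210472/channel/detail?cid=108884

(4) Gin framework basics tutorial

bilibili: https://space.bilibili.com/322210472/channel/detail?cid=126418&ctype=0

(5) gin-vue-admin version update introduction videos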

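| WeChat |
|---|

To keep advertisers out of the group, when adding the WeChat account, enter the output of running the following code (do not convert it to a string):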

str := "5Yqg5YWlR1ZB5Lqk5rWB576k"

decodeBytes, err := base64.StdEncoding.DecodeString(str)

fmt.Println(decodeBytes, err)
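
The snippet above omits the package clause and imports, so it does not compile on its own. A self-contained version might look like the following, assuming it is run as a standalone program; `decodeInvite` is a hypothetical helper name introduced only to make the decoding step reusable.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// decodeInvite decodes a standard base64 string into raw bytes.
func decodeInvite(str string) ([]byte, error) {
	return base64.StdEncoding.DecodeString(str)
}

func main() {
	str := "5Yqg5YWlR1ZB5Lqk5rWB576k"
	decodeBytes, err := decodeInvite(str)
	// Println on a []byte prints the numeric byte values, not text,
	// which is the form the README asks for.
	fmt.Println(decodeBytes, err)
}
```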

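Thank you for your contributions to gin-vue-admin!

If you find this project helpful, you can buy the author a drink 🍹 Click here

Please strictly comply with the Apache 2.0 license and retain the attribution notice; to remove the copyright information, you must obtain authorization.

Removing the copyright information without authorization will be pursued under the law.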

Alternative AI tools for gin-vue-admin

Similar Open Source Tools

JeecgBoot

JeecgBoot is a Java AI Low Code Platform for Enterprise web applications, based on BPM and code generator. It features a SpringBoot2.x/3.x backend, SpringCloud, Ant Design Vue3, Mybatis-plus, Shiro, JWT, supporting microservices, multi-tenancy, and AI capabilities like DeepSeek and ChatGPT. The powerful code generator allows for one-click generation of frontend and backend code without writing any code. JeecgBoot leads the way in AI low-code development mode, helping to solve 80% of repetitive work in Java projects and allowing developers to focus more on business logic.

z.ai2api_python

Z.AI2API Python is a lightweight OpenAI API proxy service that integrates seamlessly with existing applications. It supports the full functionality of GLM-4.5 series models and features high-performance streaming responses, enhanced tool invocation, support for thinking mode, integration with search models, Docker deployment, session isolation for privacy protection, flexible configuration via environment variables, and intelligent upstream model routing.

private-llm-qa-bot

This is a production-grade knowledge Q&A chatbot implementation based on AWS services and the LangChain framework, with optimizations at various stages. It supports flexible configuration and plugging of vector models and large language models. The front and back ends are separated, making it easy to integrate with IM tools (such as Feishu).

py-xiaozhi

py-xiaozhi is a Python-based XiaoZhi voice client designed for learning code and experiencing AI XiaoZhi's voice functions without hardware conditions. It features voice interaction, graphical interface, volume control, session management, encrypted audio transmission, CLI mode, and automatic copying of verification codes and opening browsers for first-time users. The project aims to optimize and add new features to zhh827's py-xiaozhi based on the original hardware project xiaozhi-esp32 and the Python implementation py-xiaozhi.

AIxVuln

AIxVuln is an automated vulnerability discovery and verification system based on large models (LLM) + function calling + Docker sandbox. The system manages 'projects' through a web UI/desktop client, automatically organizing multiple 'digital humans' for environment setup, code auditing, vulnerability verification, and report generation. It utilizes an isolated Docker environment for dependency installation, service startup, PoC verification, and evidence collection, ultimately producing downloadable vulnerability reports. The system has already discovered dozens of vulnerabilities in real open-source projects.

banana-slides

Banana-slides is a native AI-powered PPT generation application based on the nano banana pro model. It supports generating complete PPT presentations from ideas, outlines, and page descriptions. The app automatically extracts attachment charts, uploads any materials, and allows verbal modifications, aiming to truly 'Vibe PPT'. It lowers the threshold for creating PPTs, enabling everyone to quickly create visually appealing and professional presentations.

PaiAgent

PaiAgent is an enterprise-level AI workflow visualization orchestration platform that simplifies the combination and scheduling of AI capabilities. It allows developers and business users to quickly build complex AI processing flows through an intuitive drag-and-drop interface, without the need to write code, enabling collaboration of various large models.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence technology with cloud-native practices to significantly improve the efficiency and level of operations work. The platform offers features such as AIOps for monitoring data analysis and alerts, multi-dimensional permission management, visual CMDB for resource management, efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

vibium

Vibium is a browser automation infrastructure designed for AI agents, providing a single binary that manages browser lifecycle, WebDriver BiDi protocol, and an MCP server. It offers zero configuration, AI-native capabilities, and is lightweight with no runtime dependencies. It is suitable for AI agents, test automation, and any tasks requiring browser interaction.

memsearch

Memsearch is a tool that allows users to give their AI agents persistent memory in a few lines of code. It enables users to write memories as markdown and search them semantically. Inspired by OpenClaw's markdown-first memory architecture, Memsearch is pluggable into any agent framework. The tool offers features like smart deduplication, live sync, and a ready-made Claude Code plugin for building agent memory.

WenShape

WenShape is a context engineering system for creating long novels. It addresses the challenge of narrative consistency over thousands of words by using an orchestrated writing process, dynamic fact tracking, and precise token budget management. All project data is stored in YAML/Markdown/JSONL text format, naturally supporting Git version control.

topsha

LocalTopSH is an AI Agent Framework designed for companies and developers who require 100% on-premise AI agents with data privacy. It supports various OpenAI-compatible LLM backends and offers production-ready security features. The framework allows simple deployment using Docker compose and ensures that data stays within the user's network, providing full control and compliance. With cost-effective scaling options and compatibility in regions with restrictions, LocalTopSH is a versatile solution for deploying AI agents on self-hosted infrastructure.

Autopilot-Notes

Autopilot Notes is an open-source knowledge base for systematically learning autonomous driving technology. It covers basic theory, hardware, algorithms, tools, and practical engineering practices across 10+ chapters. The repository provides daily updates on industry trends, in-depth analysis of mainstream solutions like Tesla, Baidu Apollo, and Openpilot, and hands-on content including simulation, deployment, and optimization. Contributors are welcome to submit pull requests to improve the documentation.

boxlite

BoxLite is an embedded, lightweight micro-VM runtime designed for AI agents running OCI containers with hardware-level isolation. It is built for high concurrency with no daemon required, offering features like lightweight VMs, high concurrency, hardware isolation, embeddability, and OCI compatibility. Users can spin up 'Boxes' to run containers for AI agent sandboxes and multi-tenant code execution scenarios where Docker alone is insufficient and full VM infrastructure is too heavy. BoxLite supports Python, Node.js, and Rust with quick start guides for each, along with features like CPU/memory limits, storage options, networking capabilities, security layers, and image registry configuration. The tool provides SDKs for Python and Node.js, with Go support coming soon. It offers detailed documentation, examples, and architecture insights for users to understand how BoxLite works under the hood.

AiToEarn

AiToEarn is a one-click publishing tool for multiple self-media platforms such as Douyin, Xiaohongshu, Video Number, and Kuaishou. It allows users to publish videos with ease, observe popular content across the web, and view rankings of explosive articles on Xiaohongshu. The tool is also capable of providing daily and weekly rankings of popular content on Xiaohongshu, Douyin, Video Number, and Kuaishou. In progress features include expanding publishing parameters to support short video e-commerce, adding an AI tool ranking list, enabling AI automatic comments, and AI comment search.

For similar tasks

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. It supports inference on a variety of devices, quantization, and easy application integration through an OpenAI-API-compatible HTTP server and Python bindings.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

jetson-generative-ai-playground

This repo hosts tutorial documentation for running generative AI models on NVIDIA Jetson devices. The documentation is auto-generated and hosted on GitHub Pages using their CI/CD feature to automatically generate/update the HTML documentation site upon new commits.

chat-ui

A chat interface using open-source models, e.g. OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

MetaGPT

MetaGPT is a multi-agent framework that enables GPT to work in a software company, collaborating to tackle more complex tasks. It assigns different roles to GPTs to form a collaborative entity for complex tasks. MetaGPT takes a one-line requirement as input and outputs user stories, competitive analysis, requirements, data structures, APIs, documents, etc. Internally, MetaGPT includes product managers, architects, project managers, and engineers. It provides the entire process of a software company along with carefully orchestrated SOPs. MetaGPT's core philosophy is "Code = SOP(Team)", materializing SOP and applying it to teams composed of LLMs.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can:

* Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action.
* Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed.
* Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment.
* Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules.
* Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks.
* GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently**, resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript**, **directly defining and utilizing the cloud resources necessary for the application within their code base**, such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.