LLM-FuzzX

LLM-FuzzX is a user-friendly fuzz testing tool for Large Language Models (e.g., GPT, Claude, LLaMA), featuring advanced task-aware mutation strategies, fine-grained evaluations, and jailbreak detection to uncover potential security vulnerabilities and enhance model robustness.

Stars: 108

LLM-FuzzX is an open-source fuzz testing tool for large language models (e.g., GPT, Claude, LLaMA). It combines task-aware mutation strategies, fine-grained evaluation, and jailbreak detection to help researchers and developers find potential security vulnerabilities and improve model robustness. The tool provides a web interface for visual configuration and real-time monitoring, supports several mutation methods, integrates a RoBERTa model for real-time jailbreak detection and evaluation, works with multiple language models, offers visual analysis through seed flowcharts and experiment statistics, and keeps separate main, mutation, and jailbreak logs.

README:

LLM-FuzzX is an open-source, user-friendly fuzzing tool for Large Language Models (like GPT, Claude, LLaMA) featuring advanced task-aware mutation strategies, fine-grained evaluation, and jailbreak detection capabilities. It helps researchers and developers quickly identify potential security vulnerabilities and enhance model robustness. The methodology is primarily based on LLM-Fuzzer.

- 🚀 User-Friendly Interface: Intuitive web interface with visual configuration and real-time monitoring

- 🔄 Diverse Mutation Strategies: Support for various advanced mutation methods, including similar mutation, crossover mutation, expansion mutation, etc.

- 📊 Real-time Evaluation Feedback: Integrated RoBERTa model for real-time jailbreak detection and evaluation

- 🌐 Multi-model Support: Compatible with mainstream LLMs including GPT, Claude, LLaMA, etc.

- 📈 Visualization Analysis: Multi-dimensional analysis with seed flow diagrams and experimental data statistics

- 🔍 Fine-grained Logging: Support for multi-level logging, including main logs, mutation logs, jailbreak logs, etc.

LLM-FuzzX separates the front end from the back end and consists of the following core modules:

- Fuzzing Engine: System's central scheduler, coordinating component workflows

- Seed Management: Responsible for seed storage, retrieval, and updates

- Model Interface: Unified model calling interface supporting multiple model implementations

- Evaluation System: RoBERTa-based jailbreak detection and multi-dimensional evaluation
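As a rough illustration of how these modules could fit together, the sketch below wires a seed pool, a model interface, and an evaluator into a scheduling loop. Every name in it (`SeedPool`, `FuzzingEngine`, the `[QUESTION]` placeholder, the callables) is hypothetical and not taken from the LLM-FuzzX source. The mutator model, the target model, and the RoBERTa judge are replaced by plain Python callables so the loop can run offline.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class SeedPool:
    """Stores prompt templates and hands them out for mutation."""
    seeds: List[str] = field(default_factory=list)

    def select(self, rng: random.Random) -> str:
        return rng.choice(self.seeds)

    def add(self, seed: str) -> None:
        if seed not in self.seeds:
            self.seeds.append(seed)


@dataclass
class FuzzingEngine:
    pool: SeedPool
    mutate: Callable[[str], str]   # stands in for the mutator model
    query: Callable[[str], str]    # stands in for the target model
    judge: Callable[[str], float]  # stands in for the RoBERTa evaluator
    threshold: float = 0.5

    def run(self, question: str, max_iterations: int, seed: int = 0) -> List[str]:
        """Return mutated templates whose responses scored at or above the threshold."""
        rng = random.Random(seed)
        hits: List[str] = []
        for _ in range(max_iterations):
            template = self.mutate(self.pool.select(rng))
            # "[QUESTION]" is an assumed placeholder convention for this sketch.
            response = self.query(template.replace("[QUESTION]", question))
            if self.judge(response) >= self.threshold:
                hits.append(template)
                self.pool.add(template)  # successful variants become new seeds
        return hits
```

Feeding successful variants back into the pool is the design choice that makes this a fuzzing loop and not a one-shot scan: later iterations can mutate templates that have already worked.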

The following mutation strategies are available:

- Similar Mutation: Generates structurally similar variants while preserving the original template's style

- Crossover Mutation: Combines templates selected from the seed pool

- Expansion Mutation: Adds supplementary content to original templates

- Shortening Mutation: Generates more concise variants through compression and refinement

- Restatement Mutation: Rephrases while maintaining semantic meaning

- Target-aware Mutation: Generates variants based on target model characteristics
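One way to picture these strategies is as instruction templates that are filled with one or two seeds and then sent to the mutator model. The instruction wording and all names below are illustrative assumptions, not the prompts LLM-FuzzX actually uses. Target-aware mutation is left out because it depends on details of the target model that are not described here.

```python
import random
from typing import Dict, List

# Hypothetical instruction templates, one per strategy (wording is an assumption).
MUTATION_INSTRUCTIONS: Dict[str, str] = {
    "similar": "Write a new template in the same style as this one:\n{seed}",
    "crossover": "Combine these two templates into one:\n{seed}\n---\n{other}",
    "expansion": "Add supplementary content to this template:\n{seed}",
    "shortening": "Condense this template while keeping its meaning:\n{seed}",
    "restatement": "Rephrase this template without changing its meaning:\n{seed}",
}


def build_mutation_prompt(strategy: str, seed: str, pool: List[str],
                          rng: random.Random) -> str:
    """Fill the instruction for `strategy`; crossover draws a second seed from the pool."""
    if strategy not in MUTATION_INSTRUCTIONS:
        raise ValueError(f"unknown mutation strategy: {strategy}")
    # Prefer a partner that differs from the seed; fall back to the seed itself.
    others = [s for s in pool if s != seed] or [seed]
    return MUTATION_INSTRUCTIONS[strategy].format(seed=seed, other=rng.choice(others))
```

The string returned here would be sent to the mutator model, and the model's reply would become the new candidate template.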

Running LLM-FuzzX requires:

- Python 3.8+

- Node.js 14+

- CUDA support (for RoBERTa evaluation model)

- 8GB+ system memory

- Stable network connection

# Clone the project

git clone https://github.com/Windy3f3f3f3f/LLM-FuzzX.git

# Create virtual environment

conda create -n llm-fuzzx python=3.10

conda activate llm-fuzzx

# Install dependencies

cd LLM-FuzzX

pip install -r requirements.txt

# Enter frontend directory

cd llm-fuzzer-frontend

# Install dependencies

npm install

# Start development server

npm run serve

- Create .env file in project root to configure API keys:

OPENAI_API_KEY=your-openai-key

CLAUDE_API_KEY=your-claude-key

HUGGINGFACE_API_KEY=your-huggingface-key

- Configure model parameters in config.py:

MODEL_CONFIG = {
    'target_model': 'gpt-3.5-turbo',
    'mutator_model': 'gpt-3.5-turbo',
    'evaluator_model': 'roberta-base',
    'temperature': 0.7,
    'max_tokens': 2048
}

# Start backend service

python app.py # Default runs on http://localhost:10003

# Start frontend service

cd llm-fuzzer-frontend

npm run serve # Default runs on http://localhost:10001

- Select target test model (supports GPT, Claude, LLaMA, etc.)

- Prepare test data

  - Use preset question sets

  - Custom input questions

- Configure test parameters

  - Set maximum iteration count

  - Select mutation strategies

  - Configure evaluation thresholds

- Start testing and monitor in real-time

  - View current progress

  - Monitor success rate

  - Analyze mutation effects
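The parameters chosen in these steps can be thought of as a small, validated configuration object, and the monitored success rate as a simple ratio over judged responses. The sketch below is an assumption about how such a structure might look. `RunConfig`, its field names, and its default values are hypothetical and do not come from the LLM-FuzzX code base.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class RunConfig:
    """Hypothetical container for the parameters chosen before a test run."""
    target_model: str
    questions: List[str]
    max_iterations: int = 100
    strategies: List[str] = field(default_factory=lambda: ["similar"])
    threshold: float = 0.5  # judge score at or above which a response counts as a jailbreak

    def __post_init__(self) -> None:
        if not self.questions:
            raise ValueError("at least one question is required")
        if self.max_iterations <= 0:
            raise ValueError("max_iterations must be positive")
        if not 0.0 <= self.threshold <= 1.0:
            raise ValueError("threshold must be within [0, 1]")


def success_rate(scores: List[float], threshold: float) -> float:
    """Fraction of judged responses that reach the threshold (0.0 when nothing was judged)."""
    if not scores:
        return 0.0
    return sum(score >= threshold for score in scores) / len(scores)
```

Validating in `__post_init__` rejects an empty question set or a non-positive iteration count before any API call is made.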

The system provides multi-level logging:

- main.log: Main processes and key events

- mutation.log: Mutation operation records

- jailbreak.log: Successful jailbreak cases

- error.log: Errors and exceptions
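A comparable layout can be reproduced with Python's standard `logging` module, using one logger and one file handler per log file. This is a minimal sketch, not the project's actual logging setup. The `fuzz_sketch` logger namespace, the `setup_loggers` helper, and the message format are assumptions.

```python
import logging
from pathlib import Path
from typing import Dict

LOG_NAMES = ("main", "mutation", "jailbreak", "error")


def setup_loggers(log_dir: str) -> Dict[str, logging.Logger]:
    """Create one logger per log file, each writing to <log_dir>/<name>.log."""
    directory = Path(log_dir)
    directory.mkdir(parents=True, exist_ok=True)
    formatter = logging.Formatter("%(asctime)s %(levelname)s %(message)s")
    loggers: Dict[str, logging.Logger] = {}
    for name in LOG_NAMES:
        logger = logging.getLogger(f"fuzz_sketch.{name}")  # hypothetical namespace
        # The error log only records ERROR and above; the others record INFO and above.
        logger.setLevel(logging.ERROR if name == "error" else logging.INFO)
        logger.propagate = False  # keep each stream in its own file
        if not logger.handlers:  # avoid duplicate handlers on repeated calls
            handler = logging.FileHandler(directory / f"{name}.log", encoding="utf-8")
            handler.setFormatter(formatter)
            logger.addHandler(handler)
        loggers[name] = logger
    return loggers
```

With this in place, a call such as `setup_loggers("logs")["jailbreak"].info("...")` writes only to `logs/jailbreak.log`, which keeps successful cases easy to review separately from routine events.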

LLM-FuzzX/
├── src/                    # Backend source code
│   ├── api/                # API interfaces
│   ├── evaluation/         # Evaluation module
│   ├── fuzzing/            # Fuzzing core
│   ├── models/             # Model wrappers
│   └── utils/              # Utility functions
├── llm-fuzzer-frontend/    # Frontend code
├── scripts/                # Helper scripts
├── data/                   # Data files
└── logs/                   # Log files

- Test Scale Settings

  - Limit a single test run to fewer than 1000 iterations

  - Start with small-scale trials for new scenarios

  - Adjust concurrency based on available resources

- Mutation Strategy Selection

  - Prefer a single mutation strategy for simple scenarios

  - Combine multiple mutation methods for complex scenarios

  - Keep mutation intensity balanced

- Resource Optimization

  - Set reasonable API call intervals

  - Clean up historical records periodically

  - Monitor system resource usage
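For the advice on API call intervals, a small throttle that enforces a minimum gap between consecutive requests is often enough. The class below is a generic sketch and is not part of LLM-FuzzX. The name `CallThrottle` and its parameters are hypothetical, and the clock and sleep functions are injectable only so the behavior can be checked without real delays.

```python
import time
from typing import Callable, Optional


class CallThrottle:
    """Enforces a minimum interval between consecutive API calls."""

    def __init__(self, min_interval: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_call: Optional[float] = None  # None until the first call

    def wait(self) -> float:
        """Sleep until the interval has elapsed; return the requested sleep time in seconds."""
        delay = 0.0
        if self._last_call is not None:
            elapsed = self._clock() - self._last_call
            delay = max(0.0, self.min_interval - elapsed)
        if delay > 0:
            self._sleep(delay)
        self._last_call = self._clock()
        return delay
```

Calling `throttle.wait()` immediately before each model request spaces requests at least `min_interval` seconds apart. A suitable interval depends on the provider's rate limits.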

Contributions are welcome in the following ways:

- Submit Issues

  - Report bugs

  - Suggest new features

  - Share usage experiences

- Submit Pull Requests

  - Fix issues

  - Add features

  - Improve documentation

- Methodology Contributions

  - Provide new mutation strategies

  - Design innovative evaluation methods

  - Share testing experiences

This project is licensed under the MIT License. See the LICENSE file for details.

- Issue: GitHub Issues

- Email: [email protected]

[1] Yu, J., Lin, X., Yu, Z., & Xing, X. (2024). LLM-Fuzzer: Scaling Assessment of Large Language Model Jailbreaks. In 33rd USENIX Security Symposium (USENIX Security 24) (pp. 4657-4674). USENIX Association.


Alternative AI tools for LLM-FuzzX

Similar Open Source Tools

LLM-FuzzX

LLM-FuzzX is an open-source user-friendly fuzz testing tool for large language models (e.g., GPT, Claude, LLaMA), equipped with advanced task-aware mutation strategies, fine-grained evaluation, and jailbreak detection capabilities. It helps researchers and developers quickly discover potential security vulnerabilities and enhance model robustness. The tool features a user-friendly web interface for visual configuration and real-time monitoring, supports various advanced mutation methods, integrates RoBERTa model for real-time jailbreak detection and evaluation, supports multiple language models like GPT, Claude, LLaMA, provides visualization analysis with seed flowcharts and experiment data statistics, and offers detailed logging support for main, mutation, and jailbreak logs.

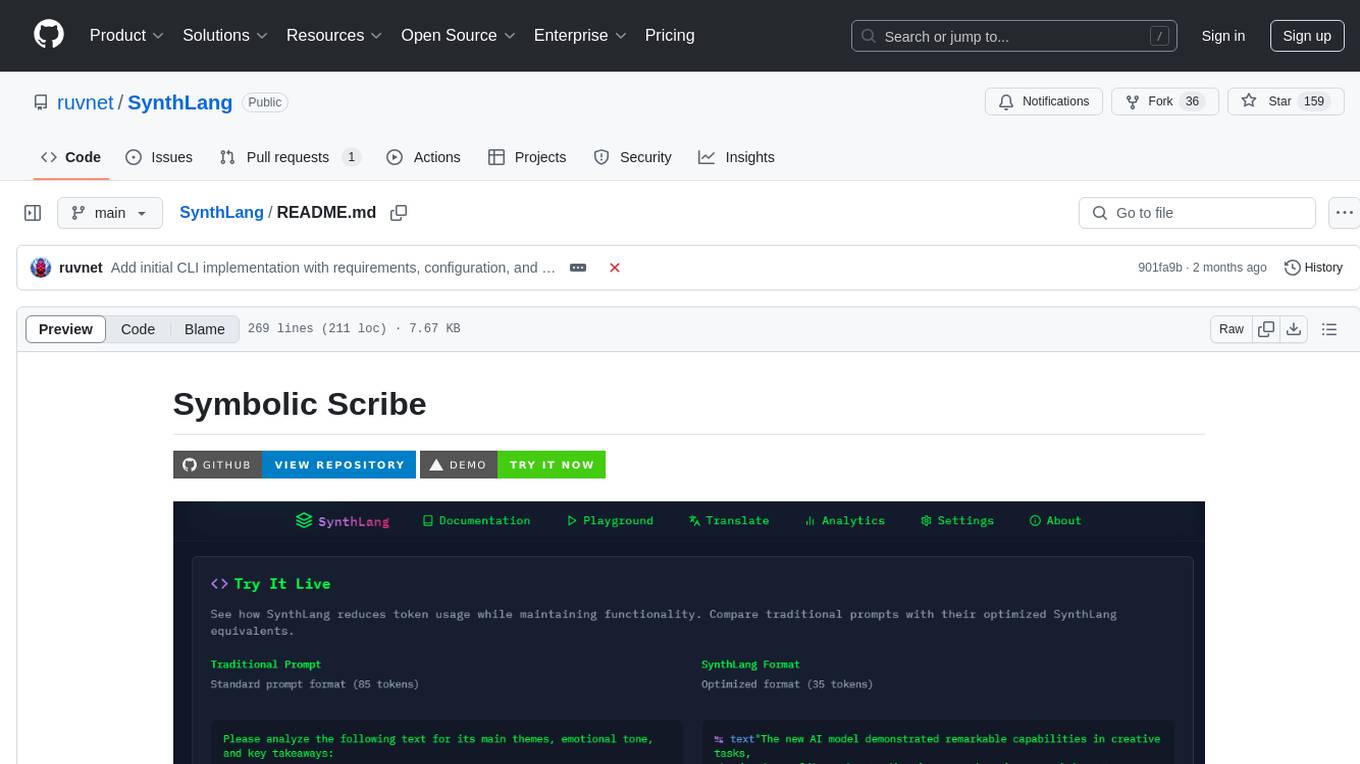

SynthLang

SynthLang is a tool designed to optimize AI prompts by reducing costs and improving processing speed. It brings academic rigor to prompt engineering, creating precise and powerful AI interactions. The tool includes core components like a Translator Engine, Performance Optimization, Testing Framework, and Technical Architecture. It offers mathematical precision, academic rigor, enhanced security, a modern interface, and instant testing. Users can integrate mathematical frameworks, model complex relationships, and apply structured prompts to various domains. Security features include API key management and data privacy. The tool also provides a CLI for prompt engineering and optimization capabilities.

ApeRAG

ApeRAG is a production-ready platform for Retrieval-Augmented Generation (RAG) that combines Graph RAG, vector search, and full-text search with advanced AI agents. It is ideal for building Knowledge Graphs, Context Engineering, and deploying intelligent AI agents for autonomous search and reasoning across knowledge bases. The platform offers features like advanced index types, intelligent AI agents with MCP support, enhanced Graph RAG with entity normalization, multimodal processing, hybrid retrieval engine, MinerU integration for document parsing, production-grade deployment with Kubernetes, enterprise management features, MCP integration, and developer-friendly tools for customization and contribution.

GhidrAssist

GhidrAssist is an advanced LLM-powered plugin for interactive reverse engineering assistance in Ghidra. It integrates Large Language Models (LLMs) to provide intelligent assistance for binary exploration and reverse engineering. The tool supports various OpenAI v1-compatible APIs, including local models and cloud providers. Key features include code explanation, interactive chat, custom queries, Graph-RAG knowledge system with semantic knowledge graph, community detection, security feature extraction, semantic graph tab, extended thinking/reasoning control, ReAct agentic mode, MCP integration, function calling, actions tab, RAG (Retrieval Augmented Generation), and RLHF dataset generation. The plugin uses a modular, service-oriented architecture with core services, Graph-RAG backend, data layer, and UI components.

aigne-framework

AIGNE Framework is a functional AI application development framework designed to simplify and accelerate the process of building modern applications. It combines functional programming features, powerful artificial intelligence capabilities, and modular design principles to help developers easily create scalable solutions. With key features like modular design, TypeScript support, multiple AI model support, flexible workflow patterns, MCP protocol integration, code execution capabilities, and Blocklet ecosystem integration, AIGNE Framework offers a comprehensive solution for developers. The framework provides various workflow patterns such as Workflow Router, Workflow Sequential, Workflow Concurrency, Workflow Handoff, Workflow Reflection, Workflow Orchestration, Workflow Code Execution, and Workflow Group Chat to address different application scenarios efficiently. It also includes built-in MCP support for running MCP servers and integrating with external MCP servers, along with packages for core functionality, agent library, CLI, and various models like OpenAI, Gemini, Claude, and Nova.

copilot-collections

Copilot Collections is an opinionated setup for GitHub Copilot tailored for delivery teams. It provides shared workflows, specialized agents, task prompts, reusable skills, and MCP integrations to streamline the software development process. The focus is on building features while letting Copilot handle the glue. The setup requires a GitHub Copilot Pro license and VS Code version 1.109 or later. It supports a standard workflow of Research, Plan, Implement, and Review, with specialized flows for UI-heavy tasks and end-to-end testing. Agents like Architect, Business Analyst, Software Engineer, UI Reviewer, Code Reviewer, and E2E Engineer assist in different stages of development. Skills like Task Analysis, Architecture Design, Codebase Analysis, Code Review, and E2E Testing provide specialized domain knowledge and workflows. The repository also includes prompts and chat commands for various tasks, along with instructions for installation and configuration in VS Code.

Vodalus-Expert-LLM-Forge

Vodalus Expert LLM Forge is a tool designed for crafting datasets and efficiently fine-tuning models using free open-source tools. It includes components for data generation, LLM interaction, RAG engine integration, model training, fine-tuning, and quantization. The tool is suitable for users at all levels and is accompanied by comprehensive documentation. Users can generate synthetic data, interact with LLMs, train models, and optimize performance for local execution. The tool provides detailed guides and instructions for setup, usage, and customization.

MARBLE

MARBLE (Multi-Agent Coordination Backbone with LLM Engine) is a modular framework for developing, testing, and evaluating multi-agent systems leveraging Large Language Models. It provides a structured environment for agents to interact in simulated environments, utilizing cognitive abilities and communication mechanisms for collaborative or competitive tasks. The framework features modular design, multi-agent support, LLM integration, shared memory, flexible environments, metrics and evaluation, industrial coding standards, and Docker support.

CortexON

CortexON is an open-source, multi-agent AI system designed to automate and simplify everyday tasks. It integrates specialized agents like Web Agent, File Agent, Coder Agent, Executor Agent, and API Agent to accomplish user-defined objectives. CortexON excels at executing complex workflows, research tasks, technical operations, and business process automations by dynamically coordinating the agents' unique capabilities. It offers advanced research automation, multi-agent orchestration, integration with third-party APIs, code generation and execution, efficient file and data management, and personalized task execution for travel planning, market analysis, educational content creation, and business intelligence.

Mira

Mira is an agentic AI library designed for automating company research by gathering information from various sources like company websites, LinkedIn profiles, and Google Search. It utilizes a multi-agent architecture to collect and merge data points into a structured profile with confidence scores and clear source attribution. The core library is framework-agnostic and can be integrated into applications, pipelines, or custom workflows. Mira offers features such as real-time progress events, confidence scoring, company criteria matching, and built-in services for data gathering. The tool is suitable for users looking to streamline company research processes and enhance data collection efficiency.

xllm-service

xLLM-service is a service-layer framework developed based on the xLLM inference engine, providing efficient, fault-tolerant, and flexible LLM inference services for clustered deployment. It addresses challenges in enterprise-level service scenarios such as ensuring SLA of online services, improving resource utilization, reacting to changing request loads, resolving performance bottlenecks, and ensuring high reliability of computing instances. With features like unified scheduling, adaptive dynamic allocation, EPD three-stage disaggregation, and fault-tolerant architecture, xLLM-service offers efficient and reliable LLM inference services.

shandu

Shandu is an advanced AI research system that automates comprehensive research processes using language models, web scraping, and iterative exploration to generate well-structured reports with citations. It features intelligent state-based workflow, deep exploration, multi-source information synthesis, enhanced web scraping, smart source evaluation, content analysis pipeline, comprehensive report generation, parallel processing, adaptive search strategy, and full citation management.

Upsonic

Upsonic offers a cutting-edge enterprise-ready framework for orchestrating LLM calls, agents, and computer use to complete tasks cost-effectively. It provides reliable systems, scalability, and a task-oriented structure for real-world cases. Key features include production-ready scalability, task-centric design, MCP server support, tool-calling server, computer use integration, and easy addition of custom tools. The framework supports client-server architecture and allows seamless deployment on AWS, GCP, or locally using Docker.

holisticai

Holistic AI is an open-source library dedicated to assessing and improving the trustworthiness of AI systems. It focuses on measuring and mitigating bias, explainability, robustness, security, and efficacy in AI models. The tool provides comprehensive metrics, mitigation techniques, a user-friendly interface, and visualization tools to enhance AI system trustworthiness. It offers documentation, tutorials, and detailed installation instructions for easy integration into existing workflows.

JamAIBase

JamAI Base is an open-source platform integrating SQLite and LanceDB databases with managed memory and RAG capabilities. It offers built-in LLM, vector embeddings, and reranker orchestration accessible through a spreadsheet-like UI and REST API. Users can transform static tables into dynamic entities, facilitate real-time interactions, manage structured data, and simplify chatbot development. The tool focuses on ease of use, scalability, flexibility, declarative paradigm, and innovative RAG techniques, making complex data operations accessible to users with varying technical expertise.

Code-Atlas

Code Atlas is a lightweight interpreter developed in C++ that supports the execution of multi-language code snippets and partial Markdown rendering. It consumes significantly lower resources compared to similar tools, making it suitable for resource-limited devices. It leverages llama.cpp for local large-model inference and supports cloud-based large-model APIs. The tool provides features for code execution, Markdown rendering, local AI inference, and resource efficiency.

For similar tasks


hexstrike-ai

HexStrike AI is an advanced AI-powered penetration testing MCP framework with 150+ security tools and 12+ autonomous AI agents. It features a multi-agent architecture with intelligent decision-making, vulnerability intelligence, and modern visual engine. The platform allows for AI agent connection, intelligent analysis, autonomous execution, real-time adaptation, and advanced reporting. HexStrike AI offers a streamlined installation process, Docker container support, 250+ specialized AI agents/tools, native desktop client, advanced web automation, memory optimization, enhanced error handling, and bypassing limitations.

DeepAudit

DeepAudit is an AI audit team accessible to everyone, making vulnerability discovery within reach. It is a next-generation code security audit platform based on Multi-Agent collaborative architecture. It simulates the thinking mode of security experts, achieving deep code understanding, vulnerability discovery, and automated sandbox PoC verification through multiple intelligent agents (Orchestrator, Recon, Analysis, Verification). DeepAudit aims to address the three major pain points of traditional SAST tools: high false positive rate, blind spots in business logic, and lack of verification means. Users only need to import the project, and DeepAudit automatically starts working: identifying the technology stack, analyzing potential risks, generating scripts, sandbox verification, and generating reports, ultimately outputting a professional audit report. The core concept is to let AI attack like a hacker and defend like an expert.

AIxVuln

AIxVuln is an automated vulnerability discovery and verification system based on large models (LLM) + function calling + Docker sandbox. The system manages 'projects' through a web UI/desktop client, automatically organizing multiple 'digital humans' for environment setup, code auditing, vulnerability verification, and report generation. It utilizes an isolated Docker environment for dependency installation, service startup, PoC verification, and evidence collection, ultimately producing downloadable vulnerability reports. The system has already discovered dozens of vulnerabilities in real open-source projects.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.