Slurm-web

Open source web interface for Slurm HPC clusters

Stars: 386

Slurm-web is an open source web dashboard designed for Slurm based HPC clusters. It provides a graphical user interface to track jobs, insights, and visualizations for monitoring HPC supercomputers. The tool offers features like interactive charts, job filtering, live status updates, node visualization, RBAC permissions, LDAP authentication, and integration with Prometheus for metrics collection.

README:

Slurm-web is an open source web dashboard for Slurm based HPC clusters.

Slurm is the world leading workload manager for HPC clusters with all most advanced features to manage jobs and resources efficiently with a powerful command-line interface (CLI).

Slurm-web provides a clear graphical user interface with views to track your jobs, intuitive insights and advanced visualizations on top of Slurm to monitor status of HPC supercomputers in your organization, in a web browser on all your devices.

Many features in a reactive & responsive web UI:

- Dashboard with interactive charts of resources and jobs status

- Instant jobs filtering and sorting

- Live jobs status update

- Colored badges to visualize job status at a glance

- Advanced visualization of node status with racking topology

- Intuitive visualization of QOS and advanced reservations

- Multi-clusters support

- LDAP authentication (including Active Directory support)

- Advanced RBAC permissions management

- Transparent caching

- Integration with Prometheus to collect and chart timeseries metrics of Slurm

Get more details in Slurm-web advanced features overview.

To install and start using Slurm-web in a few steps, follow the quickstart guide! Packages are available for most Linux distributions for easy installation and upgrade.

The full documentation of Slurm-web is available online with software architecture details, installation guide, configuration references, troubleshooting guide, etc.

Slurm-web is considered stable and ready for production.

Slurm-web is developed and maintained by Rackslab, software editor of open source solutions designed to help HPC supercomputers administration, operations and management.

Need help to deploy or setup Slurm-web? There are multiple ways to get technical support.

Multiple community channels are available to ask questions:

- Matrix Chat #slurm-web:talk.rackslab.io: instant messaging for quick feedback and help.

[!NOTE] A Matrix account is required to access the chat room. It can be created in few steps on any Matrix network public provider such as matrix.org or gitter.im.

- GitHub Discussions Q&A: post your questions with more context and details for more in-depth answers.

This support is provided by the community with best-effort participation of Rackslab team. Please mind that people might not be available to answer your questions.

Rackslab offers commercial support to help organizations secure their deployment of Slurm-web with service level agreement (SLA) and minimal response time.

Our professional team has a unique expertise to offer a wide range of services from assistance to setup the installation to the most advanced bug resolution. Contact us for more information.

Want to contribute? Documentation to deploy a development environment is available in development README.md

Slurm-web is distributed under the terms of the GNU General Public License v3.0 or later (GPLv3+).

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Slurm-web

Similar Open Source Tools

Slurm-web

Slurm-web is an open source web dashboard designed for Slurm based HPC clusters. It provides a graphical user interface to track jobs, insights, and visualizations for monitoring HPC supercomputers. The tool offers features like interactive charts, job filtering, live status updates, node visualization, RBAC permissions, LDAP authentication, and integration with Prometheus for metrics collection.

dreamfactory

DreamFactory is a self-hosted platform that provides governed API access to any data source for enterprise apps and local LLMs. It is a secure enterprise data access platform built on top of the Laravel framework, offering role-based access control, identity passthrough, and customization of API behavior using PHP, Python, and NodeJS scripting languages. DreamFactory allows users to generate powerful APIs for SQL and NoSQL databases, files, email, and push notifications in seconds, while ensuring security with features like user management, SSO authentication, OAuth, and Active Directory integration.

fast-wiki

FastWiki is an enterprise-level artificial intelligence customer service management system. It is a high-performance knowledge base system designed for large-scale information retrieval and intelligent search. Leveraging Microsoft's Semantic Kernel for deep learning and natural language processing, combined with .NET 8 and React framework, it provides an efficient, user-friendly, and scalable intelligent vector search platform. The system aims to offer an intelligent search solution that can understand and process complex queries, assisting users in quickly and accurately obtaining the needed information.

refly

Refly.AI is an open-source AI-native creation engine that empowers users to transform ideas into production-ready content. It features a free-form canvas interface with multi-threaded conversations, knowledge base integration, contextual memory, intelligent search, WYSIWYG AI editor, and more. Users can leverage AI-powered capabilities, context memory, knowledge base integration, quotes, and AI document editing to enhance their content creation process. Refly offers both cloud and self-hosting options, making it suitable for individuals, enterprises, and organizations. The tool is designed to facilitate human-AI collaboration and streamline content creation workflows.

taipy

Taipy is an open-source Python library for easy, end-to-end application development, featuring what-if analyses, smart pipeline execution, built-in scheduling, and deployment tools.

ClicShopping

ClicShopping AI™ is an open-source Ecommerce platform powered by Generative AI, designed for B2B, B2C, and B2B-B2C businesses. It offers seamless shopping experiences, advanced AI integration, modular architecture for customization, and responsive design across devices. With features like GPT API integration, RAG-powered Business Intelligence Agent, multi-model AI support, and security compliance, ClicShopping AI™ is a comprehensive solution for online businesses. It also provides internationalization support, performance analytics, server performance optimization, content management, API connections, shipping and payment options, and a marketplace for additional modules and apps.

Geoweaver

Geoweaver is an in-browser software that enables users to easily compose and execute full-stack data processing workflows using online spatial data facilities, high-performance computation platforms, and open-source deep learning libraries. It provides server management, code repository, workflow orchestration software, and history recording capabilities. Users can run it from both local and remote machines. Geoweaver aims to make data processing workflows manageable for non-coder scientists and preserve model run history. It offers features like progress storage, organization, SSH connection to external servers, and a web UI with Python support.

ClicShopping_V3

ClicShoppingAI is a powerful open-source Ecommerce solution that supports B2B, B2C, and B2B-B2C. Integrated with cutting-edge generative artificial intelligence systems like Gpt and Ollama, it helps merchants increase turnover and competitiveness for free. With AI capabilities, it optimizes inventory, offers personalized recommendations, and provides top-notch customer service. The solution is modular, lightweight, and user-friendly, with a seamless, responsive design for all devices. Installation is easy, empowering ongoing development through community support. Features include GPT API integration, generative AI functionalities, real-time safety stock predictive, WYSIWYG product description creation, image editor management, full SEO optimization, payment and shipping modules, extension system, GDPR compliance, multi-language support, and more.

DocsGPT

DocsGPT is an open-source documentation assistant powered by GPT models. It simplifies the process of searching for information in project documentation by allowing developers to ask questions and receive accurate answers. With DocsGPT, users can say goodbye to manual searches and quickly find the information they need. The tool aims to revolutionize project documentation experiences and offers features like live previews, Discord community, guides, and contribution opportunities. It consists of a Flask app, Chrome extension, similarity search index creation script, and a frontend built with Vite and React. Users can quickly get started with DocsGPT by following the provided setup instructions and can contribute to its development by following the guidelines in the CONTRIBUTING.md file. The project follows a Code of Conduct to ensure a harassment-free community environment for all participants. DocsGPT is licensed under MIT and is built with LangChain.

CSGHub

CSGHub is an open source, trustworthy large model asset management platform that can assist users in governing the assets involved in the lifecycle of LLM and LLM applications (datasets, model files, codes, etc). With CSGHub, users can perform operations on LLM assets, including uploading, downloading, storing, verifying, and distributing, through Web interface, Git command line, or natural language Chatbot. Meanwhile, the platform provides microservice submodules and standardized OpenAPIs, which could be easily integrated with users' own systems. CSGHub is committed to bringing users an asset management platform that is natively designed for large models and can be deployed On-Premise for fully offline operation. CSGHub offers functionalities similar to a privatized Huggingface(on-premise Huggingface), managing LLM assets in a manner akin to how OpenStack Glance manages virtual machine images, Harbor manages container images, and Sonatype Nexus manages artifacts.

bedrock-engineer

Bedrock Engineer is an AI assistant for software development tasks powered by Amazon Bedrock. It combines large language models with file system operations and web search functionality to support development processes. The autonomous AI agent provides interactive chat, file system operations, web search, project structure management, code analysis, code generation, data analysis, agent and tool customization, chat history management, and multi-language support. Users can select agents, customize them, select tools, and customize tools. The tool also includes a website generator for React.js, Vue.js, Svelte.js, and Vanilla.js, with support for inline styling, Tailwind.css, and Material UI. Users can connect to design system data sources and generate AWS Step Functions ASL definitions.

llmariner

LLMariner is an extensible open source platform built on Kubernetes to simplify the management of generative AI workloads. It enables efficient handling of training and inference data within clusters, with OpenAI-compatible APIs for seamless integration with a wide range of AI-driven applications.

neuro-san-studio

Neuro SAN Studio is an open-source library for building agent networks across various industries. It simplifies the development of collaborative AI systems by enabling users to create sophisticated multi-agent applications using declarative configuration files. The tool offers features like data-driven configuration, adaptive communication protocols, safe data handling, dynamic agent network designer, flexible tool integration, robust traceability, and cloud-agnostic deployment. It has been used in various use-cases such as automated generation of multi-agent configurations, airline policy assistance, banking operations, market analysis in consumer packaged goods, insurance claims processing, intranet knowledge management, retail operations, telco network support, therapy vignette supervision, and more.

countly-server

Countly is a privacy-first, AI-ready analytics and customer engagement platform built for organizations that require full data ownership and deployment flexibility. It can be deployed on-premises or in a private cloud, giving complete control over data, infrastructure, compliance, and security. Teams use Countly to understand user behavior across mobile, web, desktop, and connected devices, optimize product and customer experiences in real time, and automate and personalize customer engagement across channels. With flexible data tracking, customizable dashboards, and a modular plugin-based architecture, Countly scales with the product while ensuring long-term autonomy and zero vendor lock-in. Built for privacy, designed for flexibility, and ready for AI-driven innovation.

LabelLLM

LabelLLM is an open-source data annotation platform designed to optimize the data annotation process for LLM development. It offers flexible configuration, multimodal data support, comprehensive task management, and AI-assisted annotation. Users can access a suite of annotation tools, enjoy a user-friendly experience, and enhance efficiency. The platform allows real-time monitoring of annotation progress and quality control, ensuring data integrity and timeliness.

langchain4j

LangChain for Java simplifies integrating Large Language Models (LLMs) into Java applications by offering unified APIs for various LLM providers and embedding stores. It provides a comprehensive toolbox with tools for prompt templating, chat memory management, function calling, and high-level patterns like Agents and RAG. The library supports 15+ popular LLM providers and 15+ embedding stores, offering numerous examples to help users quickly start building LLM-powered applications. LangChain4j is a fusion of ideas from various projects and actively incorporates new techniques and integrations to keep users up-to-date. The project is under active development, with core functionality already in place for users to start building LLM-powered apps.

For similar tasks

Slurm-web

Slurm-web is an open source web dashboard designed for Slurm based HPC clusters. It provides a graphical user interface to track jobs, insights, and visualizations for monitoring HPC supercomputers. The tool offers features like interactive charts, job filtering, live status updates, node visualization, RBAC permissions, LDAP authentication, and integration with Prometheus for metrics collection.

mlcraft

Synmetrix (prev. MLCraft) is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube (Cube.js) for flexible data models that consolidate metrics from various sources, enabling downstream distribution via a SQL API for integration into BI tools, reporting, dashboards, and data science. Use cases include data democratization, business intelligence, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

synmetrix

Synmetrix is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube.js to consolidate metrics from various sources and distribute them downstream via a SQL API. Use cases include data democratization, business intelligence and reporting, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

gin-vue-admin

Gin-vue-admin is a full-stack development platform based on Vue and Gin, integrating features like JWT authentication, dynamic routing, dynamic menus, Casbin authorization, form generator, code generator, etc. It provides various example files to help users focus more on business development. The project offers detailed documentation, video tutorials for setup and deployment, and a community for support and contributions. Users need a certain level of knowledge in Golang and Vue to work with this project. It is recommended to follow the Apache2.0 license if using the project for commercial purposes.

remix-antd-admin

Remix Antd Admin is a full-stack management system built on Remix and Antd/TailwindCSS, featuring RBAC permission management and remix-i18n integration. It aims to provide a modern, simple, fast, and scalable full-stack website. The project is currently in development, transitioning from UI to full-stack, with a focus on stable architecture. The frontend utilizes remix data flow with server-side rendering, while the backend API uses redux-toolkit/query, prisma, rxjs, zod, and remix action/loader for server API services. The project includes authorization management with jose jwt for external API services beyond web applications. Key libraries used are Remix, express, Prisma, and pgsql.

cool-admin-java

Cool-admin-java is an open-source backend permission management system with features like Ai coding, flow arrangement, modularity, and plugin support. It is used to quickly build backend applications. The system offers a modern development experience by providing functionalities such as one-click generation of API interfaces to frontend pages, drag-and-drop flow arrangement, modularized code for easy maintenance, and extensibility through plugin installation for features like payments, SMS, and emails.

iceburgcrm

Iceburg CRM is a metadata driven CRM with AI abilities that allows users to quickly prototype any CRM. It offers features like metadata creations, import/export in multiple formats, field validation, themes, role permissions, calendar, audit logs, API, workflow, field level relationships, module level relationships, and more. Created with Vue 3 for the frontend, Laravel 10 for the backend, Tailwinds with DaisyUI plugin, and Inertia for routing. Users can install default, admin panel, core, custom, or AI versions. The tool supports AI Assist for module data suggestions and provides API endpoints for CRM modules, search, specific module data, record updates, and deletions. Iceburg CRM also includes themes, custom field types, calendar, datalets, workflow, roles and permissions, import/export functionality, and custom seeding options.

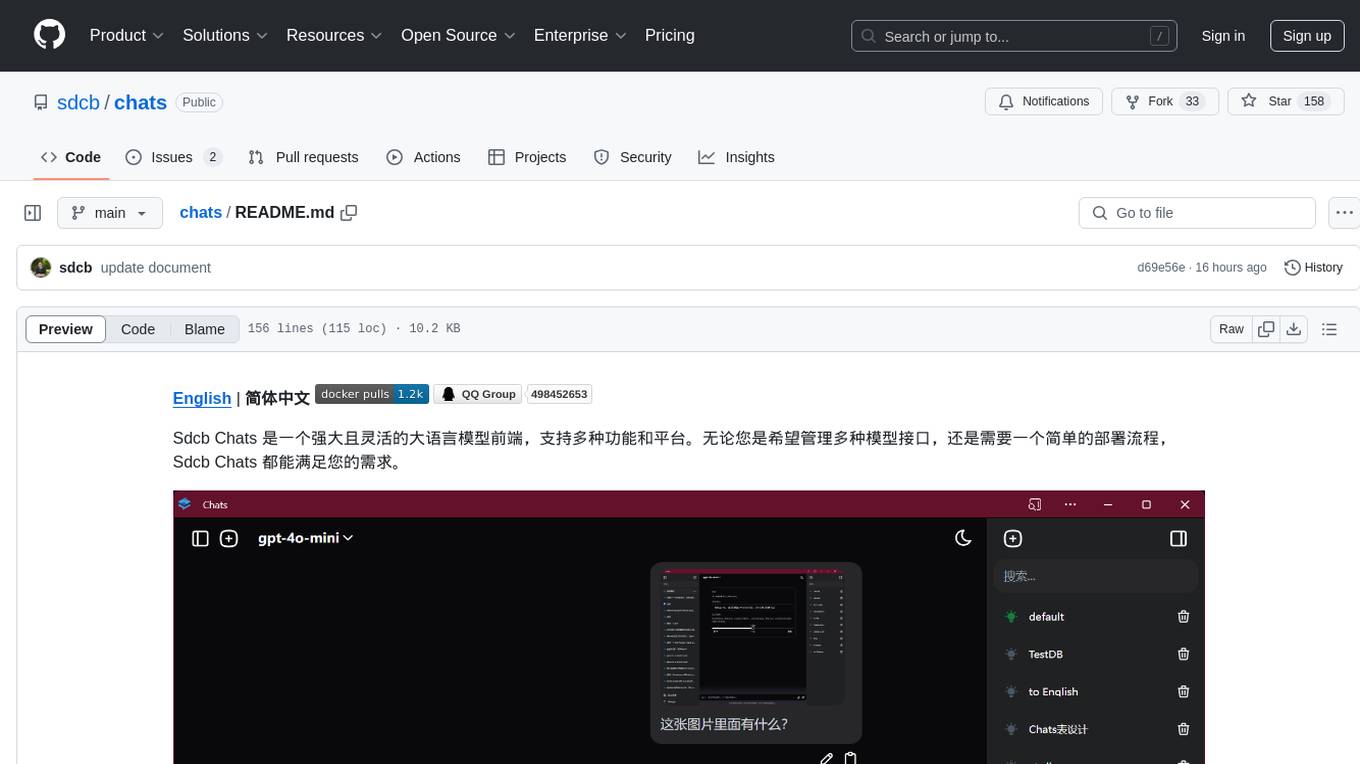

chats

Sdcb Chats is a powerful and flexible frontend for large language models, supporting multiple functions and platforms. Whether you want to manage multiple model interfaces or need a simple deployment process, Sdcb Chats can meet your needs. It supports dynamic management of multiple large language model interfaces, integrates visual models to enhance user interaction experience, provides fine-grained user permission settings for security, real-time tracking and management of user account balances, easy addition, deletion, and configuration of models, transparently forwards user chat requests based on the OpenAI protocol, supports multiple databases including SQLite, SQL Server, and PostgreSQL, compatible with various file services such as local files, AWS S3, Minio, Aliyun OSS, Azure Blob Storage, and supports multiple login methods including Keycloak SSO and phone SMS verification.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.